
sashavor committed ece8ba5 (1 parent: 9c168fe)

added bias draft

Files changed:

- app.py +31 -3

- images/bias/Cluster2.png +0 -0

- images/bias/Cluster4.png +0 -0

- images/bias/ceo_dir.png +0 -0

- images/bias/social.png +0 -0

app.py

CHANGED

|

@@ -20,14 +20,42 @@ with gr.Blocks() as demo:

|

|

| 20 |

|

| 21 |

with gr.Accordion("Identity group results (ethnicity and gender)", open=False):

|

| 22 |

gr.HTML('''

|

| 23 |

-

<p style="margin-bottom: 14px; font-size: 100%"> One of the approaches that we adopted in our work is hierarchical clustering of the images generated by the text-to-image systems in response to prompts that include identity terms with regards to ethnicity and gender. <br> We computed 3 different numbers of clusters (12, 24 and 48) and created an <a href='https://huggingface.co/spaces/society-ethics/DiffusionFaceClustering' style='text-decoration: underline;' target='_blank'> Identity Representation Demo </a> that allows for the exploration of the different clusters and their contents.

|

| 24 |

''')

|

| 25 |

with gr.Row():

|

| 26 |

impath = "images/identities"

|

| 27 |

identity_gallery = gr.Gallery([os.path.join(impath,im) for im in os.listdir(impath)],

|

| 28 |

-

label="Identity cluster images

|

| 29 |

).style(grid=3, height="auto")

|

|

|

|

|

|

|

|

|

|

| 30 |

|

| 31 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 32 |

|

| 33 |

demo.launch(debug=True)

|

|

|

|

| 20 |

|

| 21 |

with gr.Accordion("Identity group results (ethnicity and gender)", open=False):

|

| 22 |

gr.HTML('''

|

| 23 |

+

<p style="margin-bottom: 14px; font-size: 100%"> One of the approaches that we adopted in our work is hierarchical clustering of the images generated by the text-to-image systems in response to prompts that include identity terms with regards to ethnicity and gender. <br> We computed 3 different numbers of clusters (12, 24 and 48) and created an <a href='https://huggingface.co/spaces/society-ethics/DiffusionFaceClustering' style='text-decoration: underline;' target='_blank'> Identity Representation Demo </a> that allows for the exploration of the different clusters and their contents. </p>

|

| 24 |

''')

|

| 25 |

with gr.Row():

|

| 26 |

impath = "images/identities"

|

| 27 |

identity_gallery = gr.Gallery([os.path.join(impath,im) for im in os.listdir(impath)],

|

| 28 |

+

label="Identity cluster images", show_label=False, elem_id="gallery"

|

| 29 |

).style(grid=3, height="auto")

|

| 30 |

+

gr.HTML('''

|

| 31 |

+

<p style="margin-bottom: 14px; font-size: 100%"> TO DO: talk about what we see above. <br> Continue exploring the demo on your own to uncover other patterns! </p>

|

| 32 |

+

''')

|

| 33 |

|

| 34 |

+

with gr.Accordion("Bias Exploration", open=False):

|

| 35 |

+

gr.HTML('''

|

| 36 |

+

<p style="margin-bottom: 14px; font-size: 100%"> We queried our 3 systems with prompts that included names of professions, and one of our goals was to explore the social biases of these models. <br> Since artificial depictions of fictive

|

| 37 |

+

humans have no inherent gender or ethnicity nor do they belong to socially-constructed groups, we pursued our analysis <b> without </b> ascribing gender and ethnicity categories to the images generated. <b> We do this by calculating the correlations between the professions and the different identity clusters that we identified. <br> Using both the <a href='https://huggingface.co/spaces/society-ethics/DiffusionClustering' style='text-decoration: underline;' target='_blank'> Diffusion Cluster Explorer </a> and the <a href='https://huggingface.co/spaces/society-ethics/DiffusionFaceClustering' style='text-decoration: underline;' target='_blank'> Identity Representation Demo </a>, we can see which clusters are most correlated with each profession and what identities are in these clusters.</p>

|

| 38 |

+

''')

|

| 39 |

+

with gr.Row():

|

| 40 |

+

gr.HTML('''

|

| 41 |

+

<p style="margin-bottom: 14px; font-size: 100%"> Using the <a href='https://huggingface.co/spaces/society-ethics/DiffusionClustering' style='text-decoration: underline;' target='_blank'> Diffusion Cluster Explorer </a>, we can see that the top cluster for the CEO and director professions is Cluster 4: </p> ''')

|

| 42 |

+

ceo_img = gr.Image(Image.open("images/bias/ceo_dir.png"), label = "CEO Image", show_label=False)

|

| 43 |

+

|

| 44 |

+

with gr.Row():

|

| 45 |

+

gr.HTML('''

|

| 46 |

+

<p style="margin-bottom: 14px; font-size: 100%"> Going back to the <a href='https://huggingface.co/spaces/society-ethics/DiffusionFaceClustering' style='text-decoration: underline;' target='_blank'> Identity Representation Demo </a>, we can see that the most represented gender term is man (56% of the cluster) and White (29% of the cluster). </p> ''')

|

| 47 |

+

cluster4 = gr.Image(Image.open("images/bias/Cluster4.png"), label = "Cluster 4 Image", show_label=False)

|

| 48 |

+

with gr.Row():

|

| 49 |

+

gr.HTML('''

|

| 50 |

+

<p style="margin-bottom: 14px; font-size: 100%"> If we look at the cluster representation of professions such as social assistant and social worker, we can observe that the former is best represented by Cluster 2, whereas the latter has a more uniform representation across multiple clusters: </p> ''')

|

| 51 |

+

social_img = gr.Image(Image.open("images/bias/social.png"), label = "social image", show_label=False)

|

| 52 |

+

|

| 53 |

+

with gr.Row():

|

| 54 |

+

gr.HTML('''

|

| 55 |

+

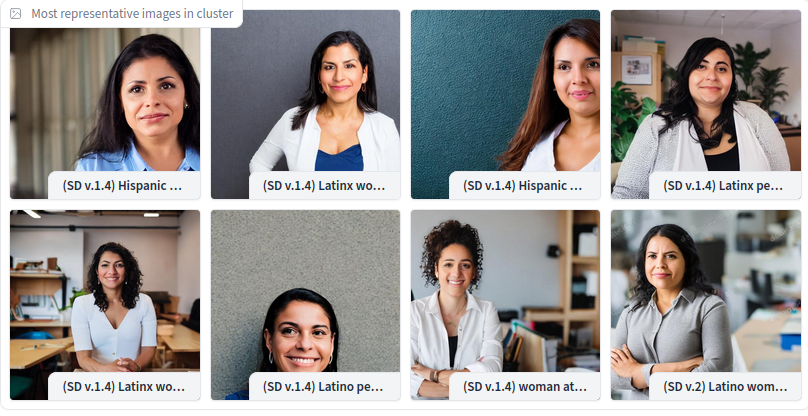

<p style="margin-bottom: 14px; font-size: 100%"> Cluster 2 is best represented by the gender term is woman (81%) as well as Latinx (19%). </p> ''')

|

| 56 |

+

cluster4 = gr.Image(Image.open("images/bias/Cluster2.png"), label = "Cluster 2 Image", show_label=False)

|

| 57 |

+

with gr.Row():

|

| 58 |

+

gr.HTML('''

|

| 59 |

+

<p style="margin-bottom: 14px; font-size: 100%"> TO DO: talk about what we see above. <br> Continue exploring the demo on your own to uncover other patterns! </p>''')

|

| 60 |

|

| 61 |

demo.launch(debug=True)
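The identity-results text in this diff describes hierarchical clustering of the generated images at three granularities (12, 24 and 48 clusters). The commit does not say which image features, distance, or linkage were used, so what follows is only a minimal, dependency-free sketch that assumes each image is already a numeric feature vector and uses Euclidean distance with average linkage. The function name `agglomerative` and the toy points are illustrative and do not come from the project.

```python
import math
from itertools import combinations


def euclidean(a, b):
    """Euclidean distance between two equal-length numeric vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def agglomerative(vectors, n_clusters):
    """Average-linkage agglomerative clustering, stopped at n_clusters.

    Returns one integer label per input vector. Labels are numbered by the
    lowest-index vector in each cluster. Naive O(n^3): toy inputs only.
    """
    if not 1 <= n_clusters <= len(vectors):
        raise ValueError("n_clusters must be between 1 and len(vectors)")
    # Start with every vector in its own cluster (clusters hold indices).
    clusters = [[i] for i in range(len(vectors))]
    # Precompute all pairwise point distances once.
    dist = {(i, j): euclidean(vectors[i], vectors[j])
            for i, j in combinations(range(len(vectors)), 2)}

    def d(i, j):
        return dist[(i, j)] if i < j else dist[(j, i)]

    def average_linkage(c1, c2):
        return sum(d(i, j) for i in c1 for j in c2) / (len(c1) * len(c2))

    while len(clusters) > n_clusters:
        # Merge the pair of clusters with the smallest average distance.
        a, b = min(combinations(range(len(clusters)), 2),
                   key=lambda p: average_linkage(clusters[p[0]], clusters[p[1]]))
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]  # safe: a < b, so index a is unaffected

    labels = [0] * len(vectors)
    for label, members in enumerate(clusters):
        for i in members:
            labels[i] = label
    return labels


# Toy 2-D "embeddings"; real runs would cut at 12, 24 and 48 clusters.
points = [(0, 0), (0, 1), (10, 10), (10, 11), (20, 0), (21, 0)]
for k in (3, 2):
    print(k, agglomerative(points, k))
# 3 [0, 0, 1, 1, 2, 2]
# 2 [0, 0, 0, 0, 1, 1]
```

Re-running the merge loop for each k is wasteful. With SciPy one would typically build the tree once with `scipy.cluster.hierarchy.linkage` and cut it at each size with `fcluster(Z, k, criterion="maxclust")`.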

Binary files added:

- images/bias/Cluster2.png
- images/bias/Cluster4.png
- images/bias/ceo_dir.png
- images/bias/social.png
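The "Bias Exploration" text reports that the top cluster for CEO and director is Cluster 4, and describes a cluster by the share of its images coming from each gender or ethnicity prompt term (for example, man at 56% of Cluster 4). It speaks of "correlations" without defining the statistic, so the sketch below assumes each generated image is recorded as a `(label, cluster_id)` pair and uses two simple stand-ins: per-label cluster shares with lift, P(cluster | profession) / P(cluster), and per-cluster composition by prompt term. All function names and data here are hypothetical.

```python
from collections import Counter, defaultdict


def cluster_shares(records):
    """Map each label to {cluster_id: fraction of that label's images in it}."""
    counts = defaultdict(Counter)
    for label, cluster in records:
        counts[label][cluster] += 1
    return {
        label: {c: n / sum(ctr.values()) for c, n in ctr.items()}
        for label, ctr in counts.items()
    }


def top_cluster(records, label):
    """Cluster holding the largest share of `label`'s images (ties: lowest id)."""
    shares = cluster_shares(records)[label]
    return min(shares, key=lambda c: (-shares[c], c))


def lift(records, label, cluster):
    """P(cluster | label) / P(cluster); values above 1 mean over-representation."""
    records = list(records)
    overall = sum(1 for _, c in records if c == cluster) / len(records)
    if overall == 0:
        raise ValueError(f"cluster {cluster} never occurs")
    return cluster_shares(records)[label].get(cluster, 0.0) / overall


def composition(records, cluster):
    """Share of one cluster's images contributed by each prompt term."""
    terms = Counter(term for term, c in records if c == cluster)
    total = sum(terms.values())
    return {term: n / total for term, n in terms.items()}


# Made-up toy data: (profession, cluster) and (prompt gender term, cluster).
professions = [("ceo", 4), ("ceo", 4), ("ceo", 1), ("director", 4),
               ("director", 2), ("social assistant", 2),
               ("social assistant", 2), ("social assistant", 1)]
terms = [("man", 4), ("man", 4), ("woman", 4),
         ("woman", 2), ("woman", 2), ("man", 1)]

print(top_cluster(professions, "ceo"))          # 4
print(round(lift(professions, "ceo", 4), 2))    # 1.78
print(composition(terms, 4))
```

Lift is used here only because it is easy to read (above 1 means the cluster is over-represented for that profession); a chi-square test or pointwise mutual information would be equally plausible choices.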

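The same text contrasts social assistant, "best represented by Cluster 2", with social worker, which "has a more uniform representation across multiple clusters". The contrast is stated qualitatively. One common way to put a number on it, assumed here rather than taken from the project, is the Shannon entropy of a profession's cluster distribution divided by log K, where K is the total number of clusters: 0 means every image lands in a single cluster, 1 means images are spread evenly over all K clusters. The cluster assignments below are hypothetical.

```python
import math
from collections import Counter


def normalized_entropy(cluster_ids, n_clusters):
    """Entropy of the cluster-id distribution, scaled to [0, 1] by log(n_clusters)."""
    if n_clusters < 2:
        raise ValueError("n_clusters must be at least 2")
    counts = Counter(cluster_ids)
    total = sum(counts.values())
    # H = sum_i p_i * log(1 / p_i); empty clusters are absent and add nothing.
    h = sum((n / total) * math.log(total / n) for n in counts.values())
    return h / math.log(n_clusters)


# Hypothetical cluster assignments for two professions, with K = 6 clusters.
social_assistant = [2, 2, 2, 2, 2, 1]
social_worker = [0, 1, 2, 3, 4, 5]
print(normalized_entropy(social_assistant, 6))  # about 0.25
print(normalized_entropy(social_worker, 6))     # close to 1.0
```

Normalizing by log K matters when comparing results across the 12-, 24- and 48-cluster runs, since raw entropy grows with the number of available clusters.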