Commit 8483373
1 Parent(s): 4d14b3a

Upload 200 files

This view is limited to 50 files because it contains too many changes.

- .gitattributes +6 -0

- LICENSE +27 -0

- README.md +61 -12

- assets/teaser.jpg +3 -0

- docs/images/compose_all.jpeg +0 -0

- docs/images/continuous.jpeg +0 -0

- docs/images/edits/0_0_4.jpg +0 -0

- docs/images/edits/0_1_0.jpg +0 -0

- docs/images/edits/0_1_1.jpg +0 -0

- docs/images/edits/0_1_2.jpg +0 -0

- docs/images/edits/0_1_3.jpg +0 -0

- docs/images/edits/0_1_4.jpg +0 -0

- docs/images/edits/0_2_4.jpg +0 -0

- docs/images/edits/0_3_0.jpg +0 -0

- docs/images/edits/0_3_1.jpg +0 -0

- docs/images/edits/0_3_2.jpg +0 -0

- docs/images/edits/0_3_3.jpg +0 -0

- docs/images/edits/0_3_4.jpg +0 -0

- docs/images/edits/0_4_0.jpg +0 -0

- docs/images/edits/0_4_1.jpg +0 -0

- docs/images/edits/0_4_2.jpg +0 -0

- docs/images/edits/0_4_3.jpg +0 -0

- docs/images/edits/1_0_0.jpg +0 -0

- docs/images/edits/1_0_1.jpg +0 -0

- docs/images/edits/1_0_2.jpg +0 -0

- docs/images/edits/1_0_3.jpg +0 -0

- docs/images/edits/1_1_0.jpg +0 -0

- docs/images/edits/1_1_1.jpg +0 -0

- docs/images/edits/1_1_2.jpg +0 -0

- docs/images/edits/1_1_3.jpg +0 -0

- docs/images/edits/1_1_4.jpg +0 -0

- docs/images/edits/1_2_0.jpg +0 -0

- docs/images/edits/1_2_2.jpg +0 -0

- docs/images/edits/1_2_3.jpg +0 -0

- docs/images/edits/1_3_0.jpg +0 -0

- docs/images/edits/1_3_1.jpg +0 -0

- docs/images/edits/1_3_2.jpg +0 -0

- docs/images/edits/1_3_3.jpg +0 -0

- docs/images/edits/1_3_4.jpg +0 -0

- docs/images/edits/1_4_4.jpg +0 -0

- docs/images/edits/2_0_0.jpg +0 -0

- docs/images/edits/2_0_1.jpg +0 -0

- docs/images/edits/2_0_2.jpg +0 -0

- docs/images/edits/2_0_3.jpg +0 -0

- docs/images/edits/2_0_4.jpg +0 -0

- docs/images/edits/2_1_0.jpg +0 -0

- docs/images/edits/2_1_1.jpg +0 -0

- docs/images/edits/2_1_2.jpg +0 -0

- docs/images/edits/2_1_3.jpg +0 -0

- docs/images/edits/2_2_0.jpg +0 -0

.gitattributes CHANGED

@@ -33,3 +33,9 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+assets/teaser.jpg filter=lfs diff=lfs merge=lfs -text
+docs/images/inversion/inversion_animation.gif filter=lfs diff=lfs merge=lfs -text
+docs/images/inversion/inversion.gif filter=lfs diff=lfs merge=lfs -text
+docs/images/sampling_web.jpeg filter=lfs diff=lfs merge=lfs -text
+docs/images/teaser_anim_final.m4v filter=lfs diff=lfs merge=lfs -text
+docs/images/w2w_vs_GAN.jpg filter=lfs diff=lfs merge=lfs -text
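
Each line added to `.gitattributes` pairs a path pattern with the attributes `filter=lfs diff=lfs merge=lfs -text`, which routes matching files through Git LFS. As a rough illustration, the sketch below lists the LFS-tracked patterns found in a `.gitattributes` text. It splits on whitespace only and does not handle quoted patterns or attribute macros. The helper name `lfs_patterns` and the `*.py text` sample line are assumptions made for this example.

```python
def lfs_patterns(text):
    """Return the patterns whose attribute list includes filter=lfs."""
    patterns = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        pattern, *attrs = line.split()
        if "filter=lfs" in attrs:
            patterns.append(pattern)
    return patterns


# Two rules taken from the diff, plus one hypothetical non-LFS rule.
sample = """\
*.zip filter=lfs diff=lfs merge=lfs -text
assets/teaser.jpg filter=lfs diff=lfs merge=lfs -text
*.py text
"""
print(lfs_patterns(sample))
```

Only the first two patterns are reported, because the third rule carries no `filter=lfs` attribute.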

LICENSE ADDED

@@ -0,0 +1,27 @@
+Copyright (c) 2024 Snap Inc. All rights reserved.
+Copyright (c) 2024 University of California, Berkeley. All rights reserved.
+Copyright (c) 2024 Stanford University. All rights reserved.
+
+This sample code is made available for non-commercial, academic purposes only.
+
+Non-commercial means not primarily intended for or directed towards commercial advantage or monetary compensation. Academic purposes mean solely for study, instruction, or non-commercial research, testing, or validation.
+
+No commercial license, whether implied or otherwise, is granted in or to this code, unless you have entered into a separate agreement with the above-listed rights holders for such rights.
+
+This sample code is provided as-is, without warranty of any kind, express or implied, including any warranties of merchantability, title, fitness for a particular purpose, non-infringement, or that the code is free of defects, errors or viruses. In no event will the above-listed rights holders be liable for any damages or losses of any kind arising from this sample code or your use thereof.
+
+Any redistribution of this sample code, including in binary form, must retain or reproduce the above copyright notice, conditions, and disclaimer.
+
+The following sets forth attribution notices for third-party software that may be included in portions of this sample code:
+
+--------------------------- License for Stable Diffusion --------------------------------
+
+Copyright (c) 2024 Stability AI.
+
+MIT License
+
+Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:
+
+The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.
+
+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

README.md

CHANGED

|

@@ -1,12 +1,61 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Interpreting the Weight Space of Customized Diffusion Models

|

| 2 |

+

[[paper](https://arxiv.org/abs/2306.09346)] [[project page](https://snap-research.github.io/weights2weights/)]

|

| 3 |

+

|

| 4 |

+

Official implementation of the paper "Interpreting the Weight Space of Customized Diffusion Models."

|

| 5 |

+

|

| 6 |

+

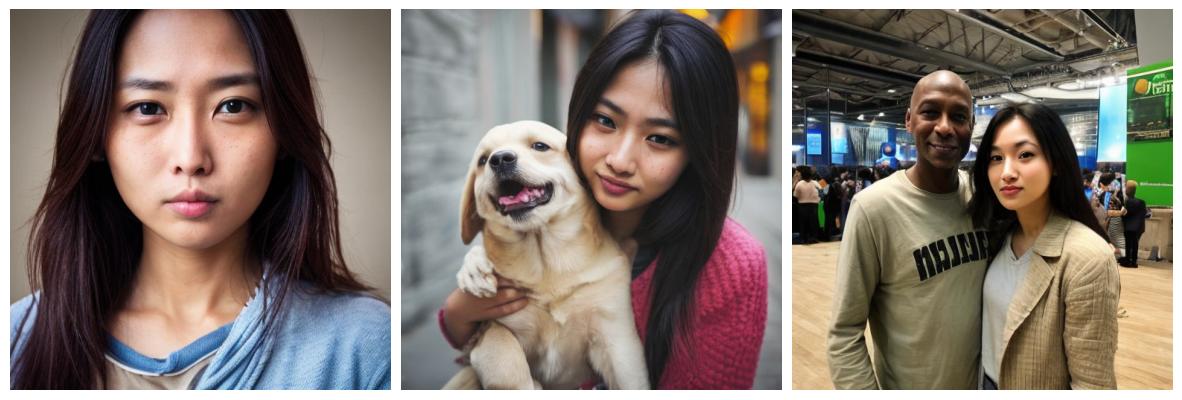

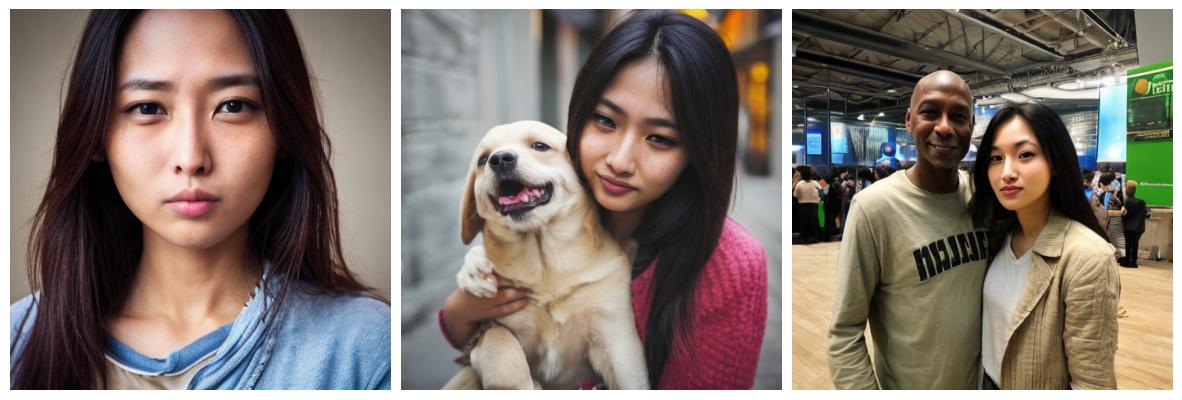

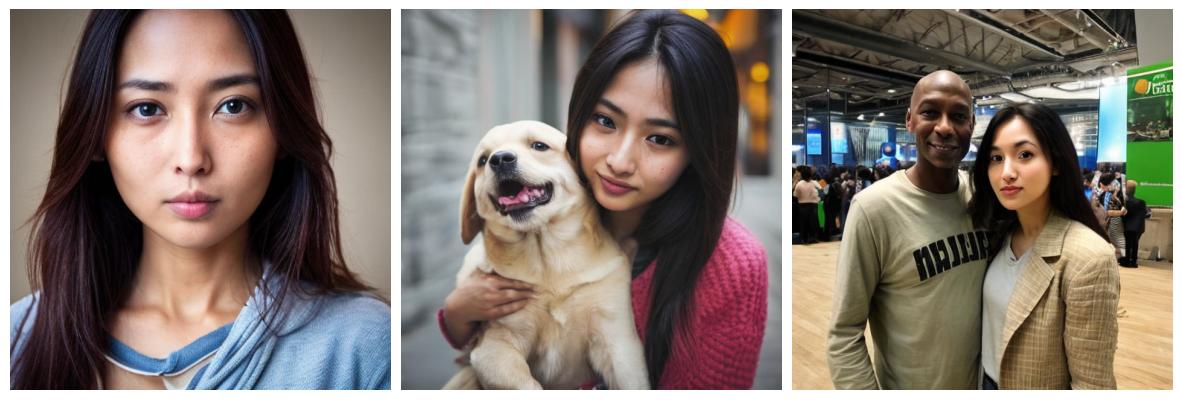

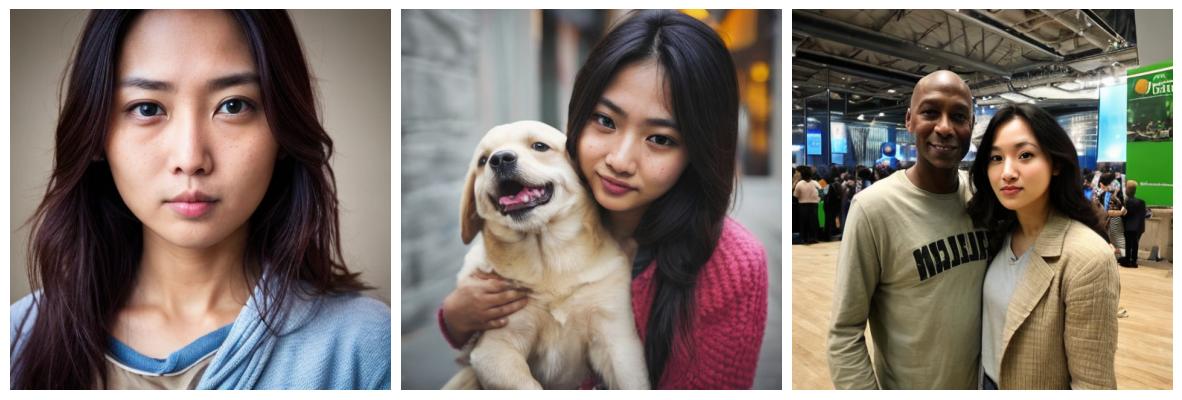

<img src="./assets/teaser.jpg" alt="teaser" width="800"/>

|

| 7 |

+

|

| 8 |

+

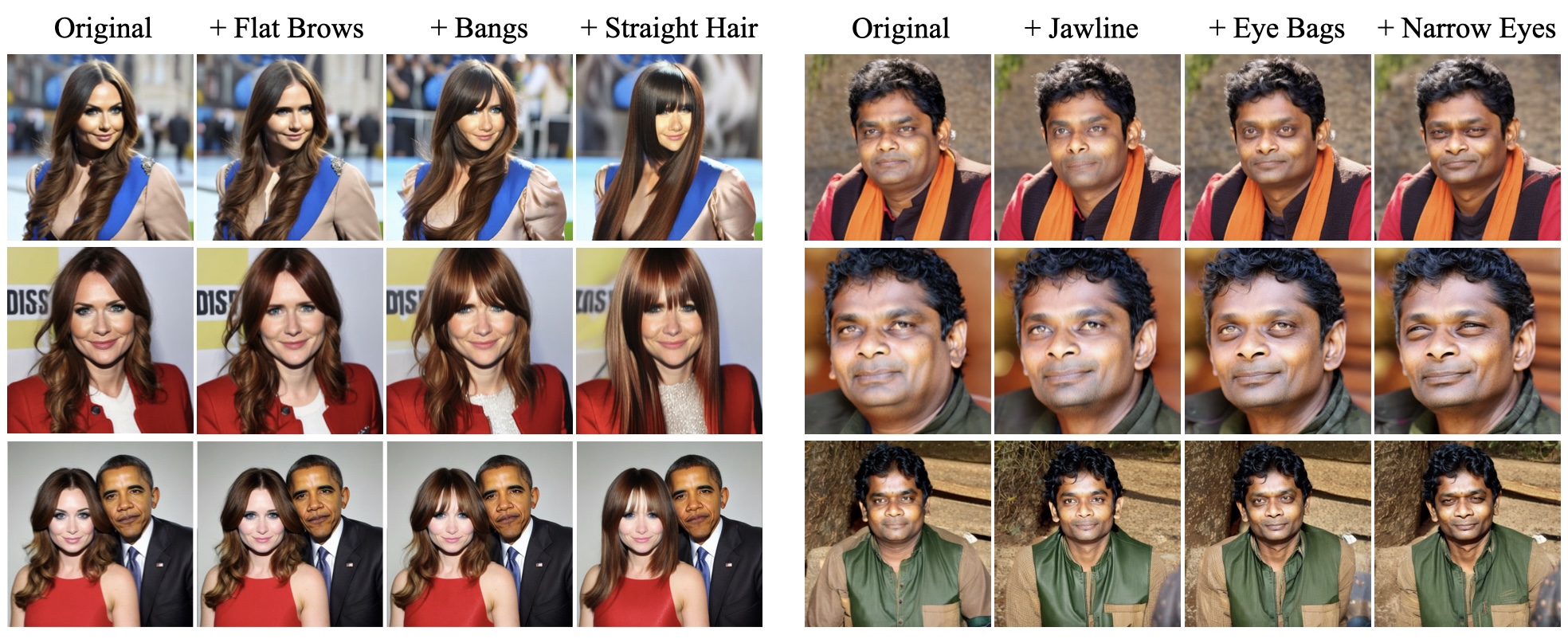

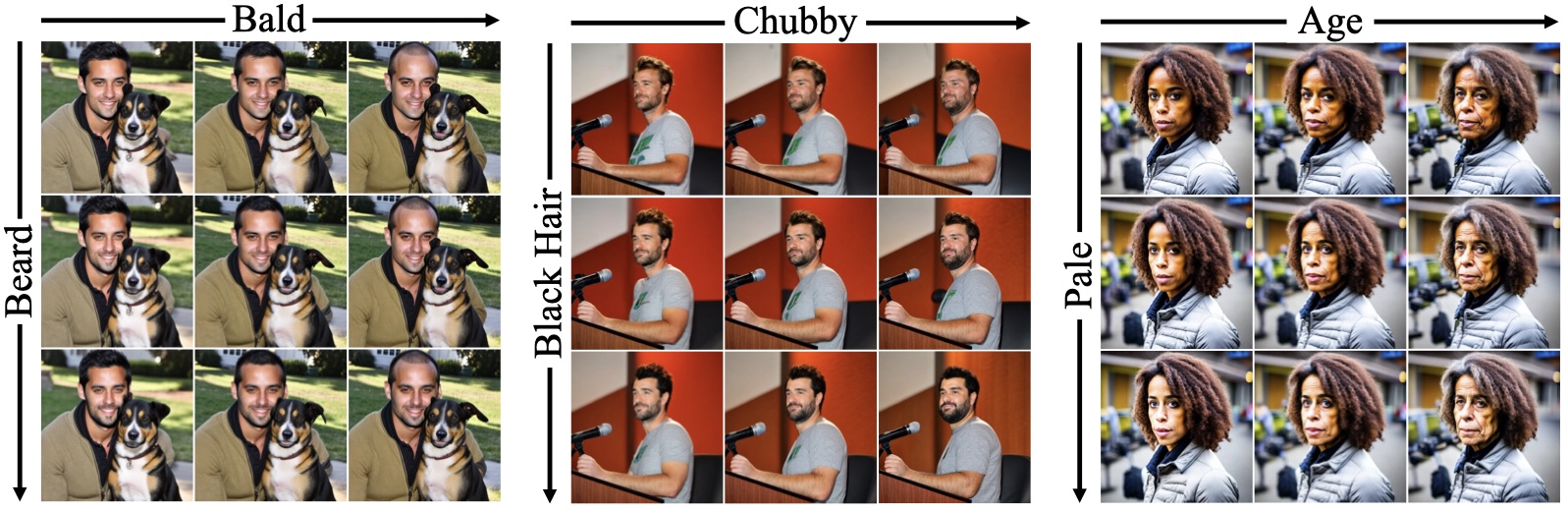

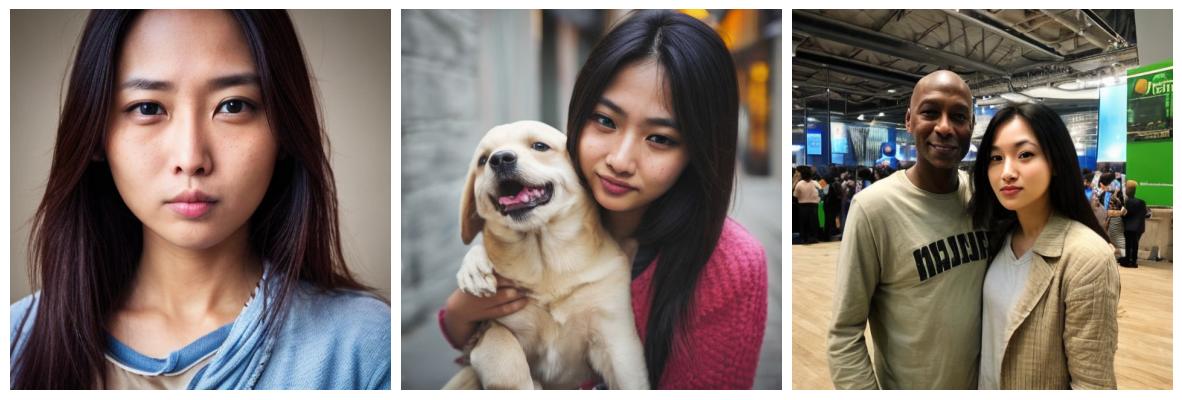

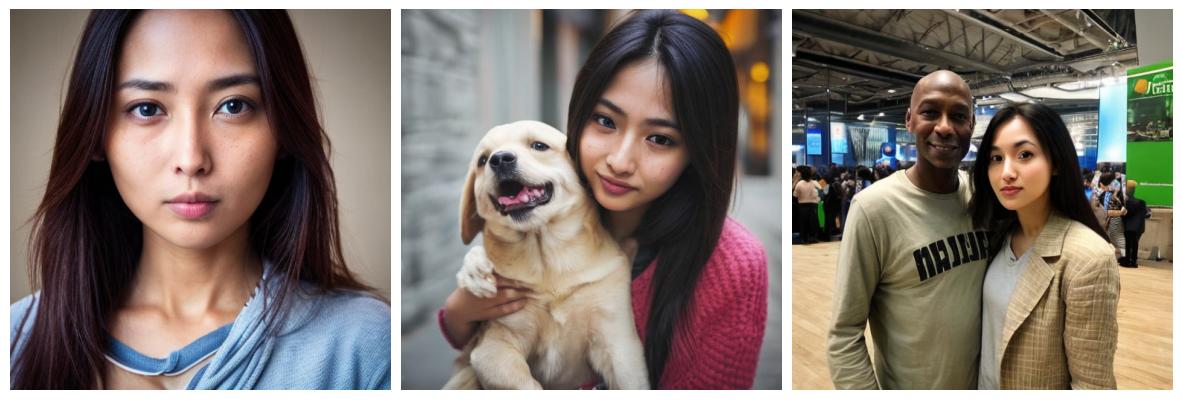

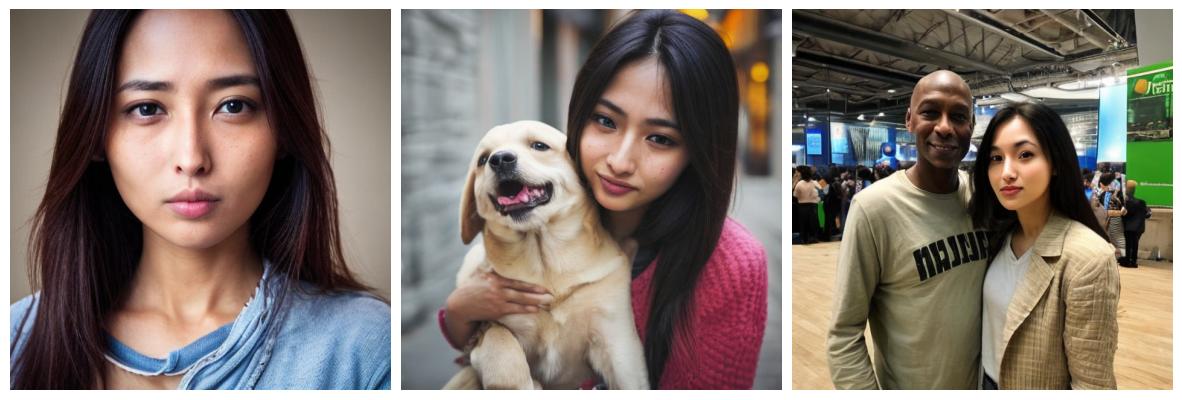

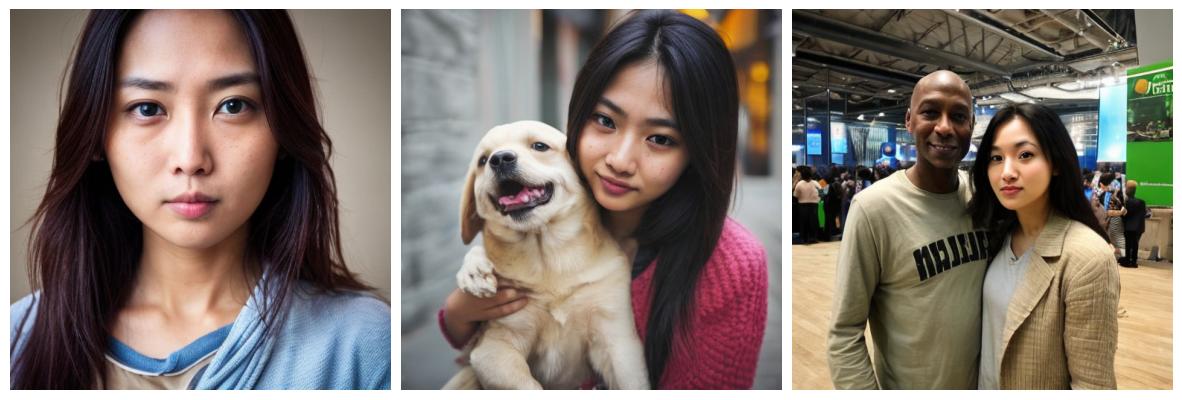

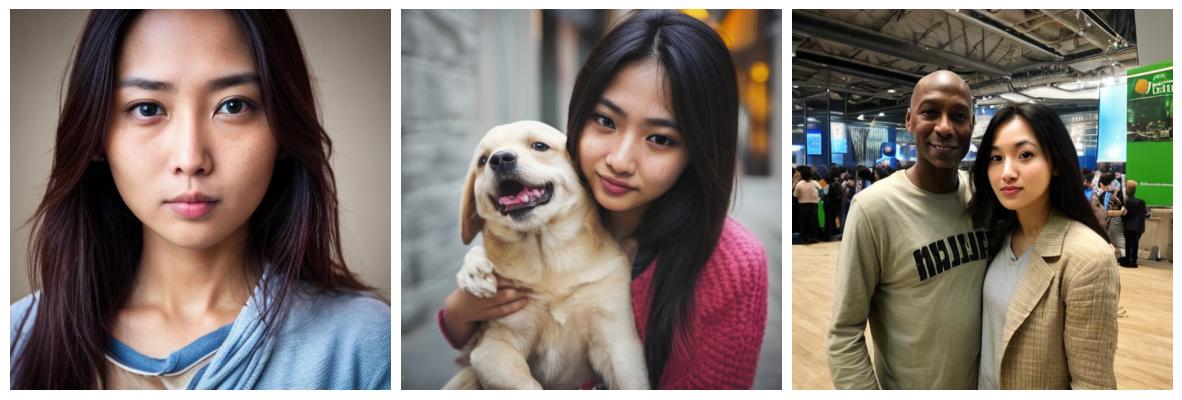

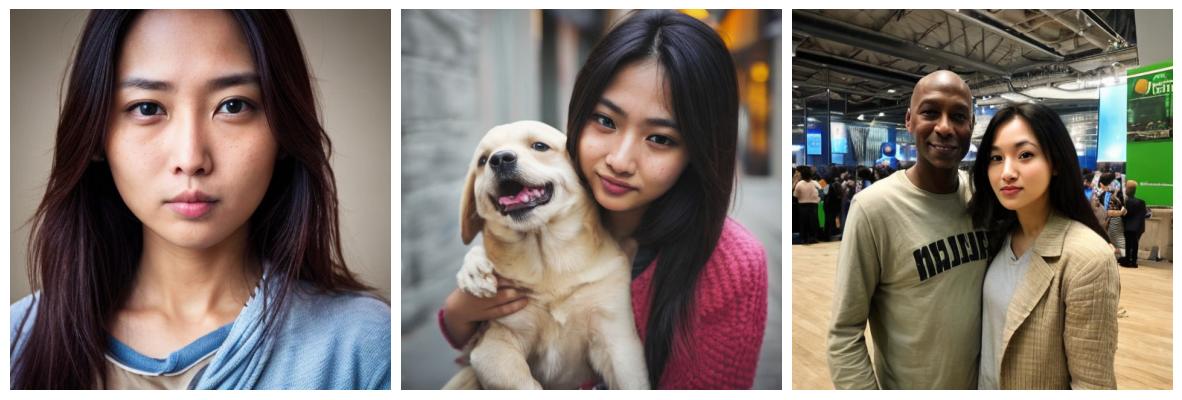

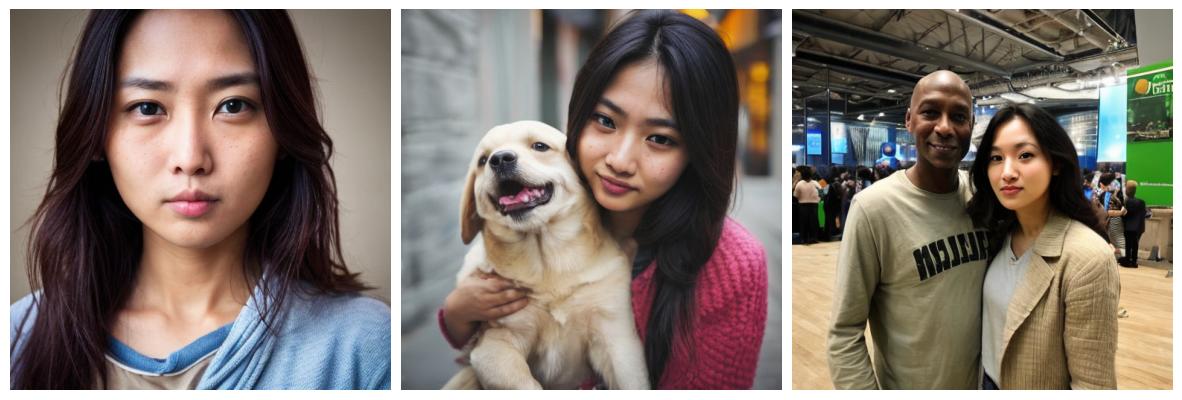

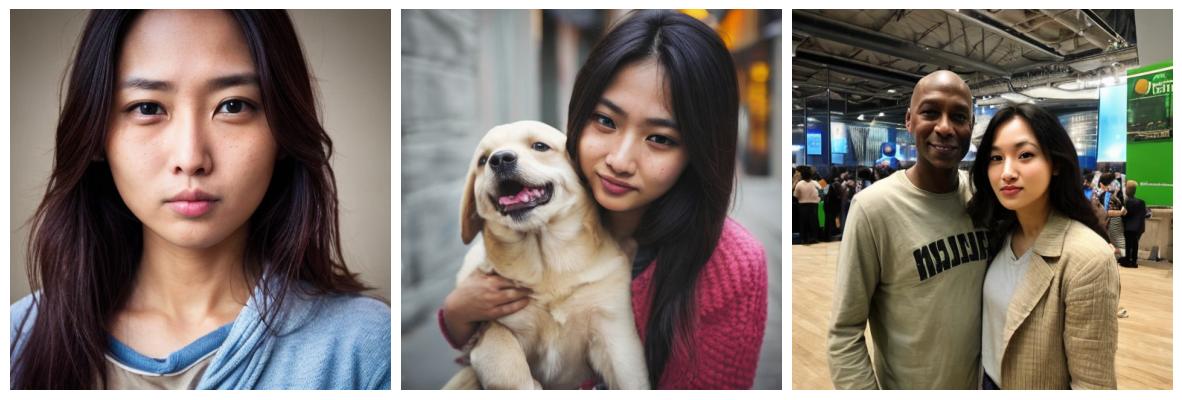

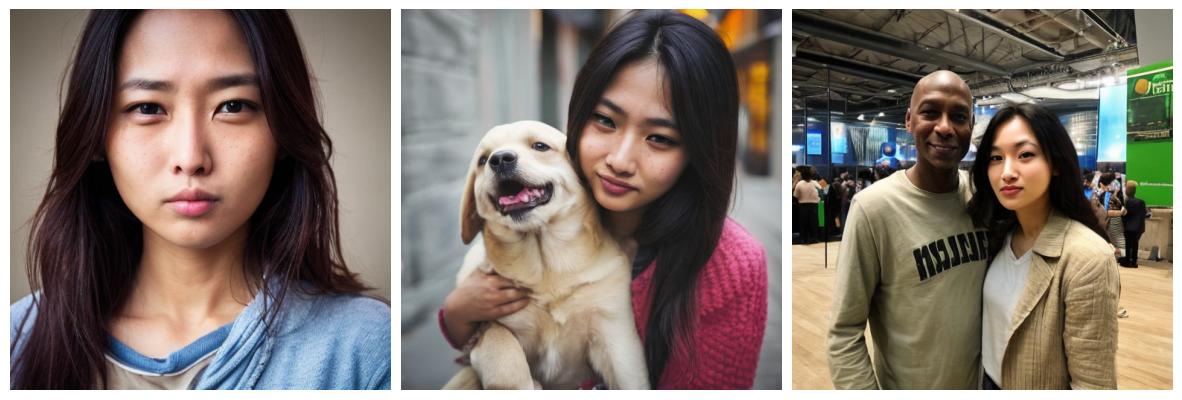

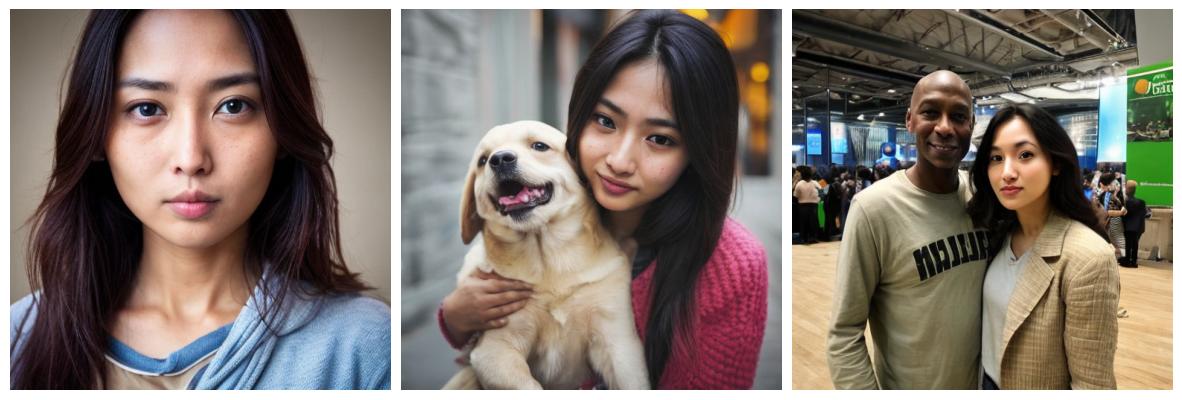

>We investigate the space of weights spanned by a large collection of customized diffusion models. We populate this space by creating a dataset of over 60,000 models, each of which is fine-tuned to insert a different person’s visual identity. Next, we model the underlying manifold of these weights as a subspace, which we term <em>weights2weights</em>. We demonstrate three immediate applications of this space -- sampling, editing, and inversion. First, as each point in the space corresponds to an identity, sampling a set of weights from it results in a model encoding a novel identity. Next, we find linear directions in this space corresponding to semantic edits of the identity (e.g., adding a beard). These edits persist in appearance across generated samples. Finally, we show that inverting a single image into this space reconstructs a realistic identity, even if the input image is out of distribution (e.g., a painting). Our results indicate that the weight space of fine-tuned diffusion models behaves as an interpretable latent space of identities.
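
The subspace idea in the abstract can be pictured with a toy sketch. It assumes each fine-tuned model is flattened into one row of a matrix, fits a PCA subspace with NumPy, and samples new coefficients from an independent Gaussian per component. The toy dimensions, the Gaussian sampling rule, and the function names `fit_subspace` and `sample_weights` are illustrative assumptions, not the repository's implementation.

```python
import numpy as np


def fit_subspace(weights: np.ndarray, k: int):
    """Fit a k-dimensional PCA subspace to rows of flattened model weights."""
    mean = weights.mean(axis=0)
    centered = weights - mean
    # Rows of vt are orthonormal principal directions, sorted by variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    basis = vt[:k]                      # shape (k, d)
    coeffs = centered @ basis.T         # shape (n, k)
    return mean, basis, coeffs


def sample_weights(mean, basis, coeffs, rng):
    """Draw coefficients from independent Gaussians fit to each component."""
    mu = coeffs.mean(axis=0)
    sigma = coeffs.std(axis=0)
    new_coeffs = rng.normal(mu, sigma)  # shape (k,)
    return mean + new_coeffs @ basis    # back to full weight space, shape (d,)


rng = np.random.default_rng(0)
# Toy stand-in for a dataset of fine-tuned models: 200 "models", 50 weights each.
toy_weights = rng.normal(size=(200, 50))
mean, basis, coeffs = fit_subspace(toy_weights, k=8)
new_model = sample_weights(mean, basis, coeffs, rng)
```

Because the sample is built as the mean plus a combination of basis rows, it lies in the fitted subspace by construction, which is the property the abstract relies on when it says each point corresponds to an identity.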

+
+## Setup
+### Environment
+Our code is developed in `PyTorch 2.3.0` with `CUDA 12.1`, `torchvision=0.18.0`, and `python=3.12.3`.
+
+To replicate our environment, install [Anaconda](https://docs.anaconda.com/free/anaconda/install/index.html), and run the following commands.
+```
+$ conda env create -f w2w.yml
+$ conda activate w2w
+```
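
After activating the environment, a short script can compare the installed package versions against the ones listed above. This is a minimal sketch using only the standard library. The `EXPECTED` table and the helper name `check_versions` are assumptions for illustration, and the distribution names `torch` and `torchvision` are the usual PyPI names rather than something the README specifies.

```python
import sys
from importlib import metadata

# Versions quoted in the README; distribution names are assumed to be the PyPI ones.
EXPECTED = {"torch": "2.3.0", "torchvision": "0.18.0"}
EXPECTED_PYTHON = (3, 12)


def check_versions(expected):
    """Return {package: (installed_or_None, expected, matches)}."""
    report = {}
    for name, want in expected.items():
        try:
            have = metadata.version(name)
        except metadata.PackageNotFoundError:
            have = None
        # Strip a local build suffix such as "+cu121" before comparing.
        ok = have is not None and have.split("+")[0] == want
        report[name] = (have, want, ok)
    return report


report = check_versions(EXPECTED)
for name, (have, want, ok) in report.items():
    status = "ok" if ok else "mismatch or missing"
    print(f"{name}: installed={have} expected={want} -> {status}")
print("python matches:", sys.version_info[:2] == EXPECTED_PYTHON)
```

A mismatch is not necessarily a problem; the check only reports how far the local setup is from the versions the code was developed with.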

+
+Alternatively, you can follow the setup from [PEFT](https://huggingface.co/docs/peft/main/en/task_guides/dreambooth_lora).
+### Files
+The files needed to create the *w2w* space, load models, train classifiers, etc. can be downloaded at this [link](https://drive.google.com/file/d/1W1_klpdeCZr5b0Kdp7SaS7veDV2ZzfbB/view?usp=sharing). Keep the folder structure and place the download into the `weights2weights` folder that contains the code.
+
+The dataset of full model weights (i.e. the full Dreambooth LoRA parameters) will be released within the next week (by June 21).
+
+## Sampling
+We provide an interactive notebook for sampling new identity-encoding models from *w2w* space in `sampling/sampling.ipynb`. Instructions are provided in the notebook. Once a model is sampled, you can run inference with various text prompts and generation seeds, as with any personalized model.
+
+## Inversion
+We provide an interactive notebook for inverting a single image into a model in *w2w* space in `inversion/inversion_real.ipynb`. Instructions are provided in the notebook. We provide another notebook with an example of inverting an out-of-distribution identity in `inversion/inversion_ood.ipynb`. Assets for these notebooks are provided in `inversion/images/`, and you can place your own assets there.
+
+Additionally, we provide an example script `run_inversion.sh` for running the inversion in `invert.py`. You can run the command:
+```
+$ bash inversion/run_inversion.sh
+```
+The details on the various arguments are provided in `invert.py`.
+
+## Editing
+We provide an interactive notebook for editing the identity encoded in a model in `editing/identity_editing.ipynb`. Instructions are provided in the notebook. Another notebook, `editing/multiple_edits.ipynb`, shows how to compose multiple attribute edits together.
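
The abstract describes edits as linear directions in weight space, so composing several attribute edits can be pictured as adding scaled direction vectors to the weights. A minimal sketch under that assumption follows. The function `apply_edits`, the direction names, the unit-length normalization, and the strengths are all hypothetical, and the notebooks may compose edits differently.

```python
import numpy as np


def apply_edits(weights, directions, strengths):
    """Return weights shifted along each named direction by its strength."""
    edited = weights.copy()
    for name, alpha in strengths.items():
        direction = directions[name]
        # Assumption: normalize so alpha has the same scale for every direction.
        unit = direction / np.linalg.norm(direction)
        edited = edited + alpha * unit
    return edited


rng = np.random.default_rng(0)
weights = rng.normal(size=16)          # toy flattened model weights
directions = {                         # hypothetical attribute directions
    "beard": rng.normal(size=16),
    "glasses": rng.normal(size=16),
}
edited = apply_edits(weights, directions, {"beard": 2.0, "glasses": -1.0})
```

Since each edit is a plain vector addition, the order in which the edits are applied does not change the result in this sketch.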

+
+## Loading and Saving Models
+Various notebooks provide examples of how to save models either as low-dimensional *w2w* models (represented by principal component coefficients) or as models compatible with standard LoRA, such as with Diffusers [pipelines](https://huggingface.co/docs/diffusers/en/api/pipelines/overview). We provide a notebook in `other/loading.ipynb` that demonstrates how these weights can be loaded into either format.
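
The two storage formats can be related by a projection onto, and a reconstruction from, the principal components. The sketch below assumes an orthonormal basis and a mean vector are available as NumPy arrays. `to_coefficients` and `to_full_weights` are hypothetical helper names, and the array shapes are toy values, not the format used in `other/loading.ipynb`.

```python
import numpy as np


def to_coefficients(weights, mean, basis):
    """Project flattened weights (d,) onto k principal components -> (k,)."""
    return (weights - mean) @ basis.T


def to_full_weights(coeffs, mean, basis):
    """Map coefficients (k,) back to flattened weights (d,)."""
    return mean + coeffs @ basis


rng = np.random.default_rng(0)
d, k = 32, 4
# Build a toy orthonormal basis via QR; its rows stand in for principal components.
q, _ = np.linalg.qr(rng.normal(size=(d, k)))
basis = q.T                                   # shape (k, d)
mean = rng.normal(size=d)

coeffs = rng.normal(size=k)
full = to_full_weights(coeffs, mean, basis)   # toy stand-in for flat LoRA weights
recovered = to_coefficients(full, mean, basis)
```

The round trip is exact here only because `full` already lies in the subspace. Projecting arbitrary weights discards whatever component is orthogonal to the basis.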

+
+## Acknowledgments
+Our code is based on implementations from the following repos:
+
+>* [PEFT](https://github.com/huggingface/peft)
+>* [Concept Sliders](https://github.com/rohitgandikota/sliders)
+>* [Diffusers](https://github.com/huggingface/diffusers)
+
+
+## Citation
+If you found this repository useful, please consider starring ⭐ and citing:
+```
+@misc{dravid2024interpreting,
+title={Interpreting the Weight Space of Customized Diffusion Models},
+author={Amil Dravid and Yossi Gandelsman and Kuan-Chieh Wang and Rameen Abdal and Gordon Wetzstein and Alexei A. Efros and Kfir Aberman},
+year={2024},
+eprint={2406.09413}
+}
+```

assets/teaser.jpg ADDED (Git LFS)
docs/images/compose_all.jpeg ADDED
docs/images/continuous.jpeg ADDED
docs/images/edits/0_0_4.jpg ADDED
docs/images/edits/0_1_0.jpg ADDED
docs/images/edits/0_1_1.jpg ADDED
docs/images/edits/0_1_2.jpg ADDED
docs/images/edits/0_1_3.jpg ADDED
docs/images/edits/0_1_4.jpg ADDED
docs/images/edits/0_2_4.jpg ADDED
docs/images/edits/0_3_0.jpg ADDED
docs/images/edits/0_3_1.jpg ADDED
docs/images/edits/0_3_2.jpg ADDED
docs/images/edits/0_3_3.jpg ADDED
docs/images/edits/0_3_4.jpg ADDED
docs/images/edits/0_4_0.jpg ADDED
docs/images/edits/0_4_1.jpg ADDED
docs/images/edits/0_4_2.jpg ADDED
docs/images/edits/0_4_3.jpg ADDED
docs/images/edits/1_0_0.jpg ADDED
docs/images/edits/1_0_1.jpg ADDED
docs/images/edits/1_0_2.jpg ADDED
docs/images/edits/1_0_3.jpg ADDED
docs/images/edits/1_1_0.jpg ADDED
docs/images/edits/1_1_1.jpg ADDED
docs/images/edits/1_1_2.jpg ADDED
docs/images/edits/1_1_3.jpg ADDED
docs/images/edits/1_1_4.jpg ADDED
docs/images/edits/1_2_0.jpg ADDED
docs/images/edits/1_2_2.jpg ADDED
docs/images/edits/1_2_3.jpg ADDED
docs/images/edits/1_3_0.jpg ADDED
docs/images/edits/1_3_1.jpg ADDED
docs/images/edits/1_3_2.jpg ADDED
docs/images/edits/1_3_3.jpg ADDED
docs/images/edits/1_3_4.jpg ADDED
docs/images/edits/1_4_4.jpg ADDED
docs/images/edits/2_0_0.jpg ADDED
docs/images/edits/2_0_1.jpg ADDED
docs/images/edits/2_0_2.jpg ADDED
docs/images/edits/2_0_3.jpg ADDED
docs/images/edits/2_0_4.jpg ADDED
docs/images/edits/2_1_0.jpg ADDED
docs/images/edits/2_1_1.jpg ADDED
docs/images/edits/2_1_2.jpg ADDED
docs/images/edits/2_1_3.jpg ADDED
docs/images/edits/2_2_0.jpg ADDED