
This demo showcases a lightweight model for speech-driven talking-face synthesis, a 28× Compressed Wav2Lip. The key features of our approach are:

- A compact generator, built by removing the residual blocks from Wav2Lip and reducing its channel width.

- Knowledge distillation to effectively train the small-capacity generator without adversarial learning.

- Selective quantization to accelerate inference on edge GPUs without noticeable performance degradation.
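Here is a minimal sketch of how distillation without adversarial learning can work. It assumes two terms: an L1 reconstruction loss against the ground-truth frame, and an L1 output-distillation loss against the frozen Wav2Lip teacher. The loss terms, the weight `lambda_kd`, and the function names are hypothetical and are not taken from this work. Frames are flattened to plain lists of pixel values so the example has no dependencies.

```python
from typing import Sequence


def l1_loss(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute error between two flattened frames of equal length."""
    if len(a) != len(b) or not a:
        raise ValueError("frames must be non-empty and the same length")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def distillation_objective(
    student_frame: Sequence[float],
    teacher_frame: Sequence[float],
    target_frame: Sequence[float],
    lambda_kd: float = 1.0,  # hypothetical weight on the distillation term
) -> float:
    """Hypothetical student objective: reconstruction + output distillation.

    There is deliberately no adversarial (discriminator) term; the frozen
    teacher's output supplies the extra training signal instead.
    """
    reconstruction = l1_loss(student_frame, target_frame)
    distillation = l1_loss(student_frame, teacher_frame)
    return reconstruction + lambda_kd * distillation


# Toy 3-pixel "frames" for illustration only.
student = [0.2, 0.4, 0.6]
teacher = [0.25, 0.35, 0.6]
target = [0.3, 0.4, 0.5]
loss = distillation_objective(student, teacher, target, lambda_kd=0.5)
```

Setting `lambda_kd` to 0 reduces this to plain supervised training. A real training setup would compute these terms on image tensors in a deep-learning framework and might also distill intermediate features.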

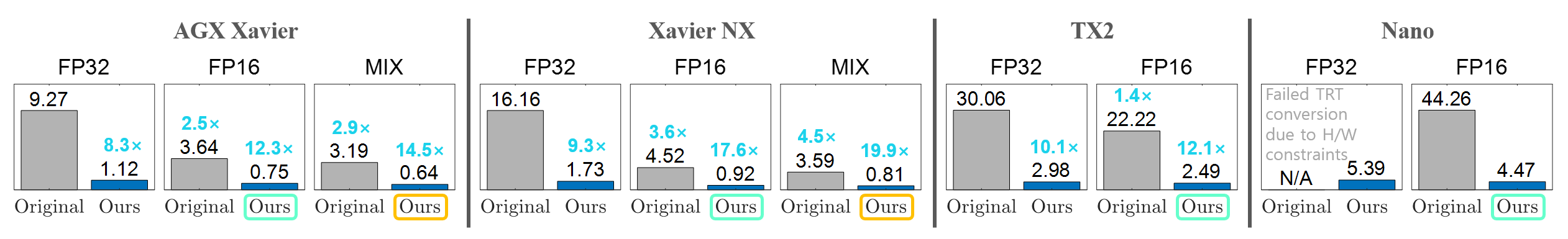

The figure below compares latency at different precisions on NVIDIA Jetson edge GPUs. It shows an 8× to 17× speedup at FP16 and a 19× speedup on Xavier NX at mixed precision.
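One reading of selective quantization is to lower the precision only of layers that tolerate it and keep sensitive layers at full precision, which yields a mixed-precision model. The sketch below makes these assumptions:

- A per-layer sensitivity score has already been measured, for example the quality drop when only that layer is run in FP16.
- The layer names, scores, threshold, and latencies are hypothetical, not measurements from this work.

It also includes the speedup ratio quoted above, which is baseline latency divided by optimized latency.

```python
from typing import Dict


def select_layer_precisions(
    sensitivity: Dict[str, float],
    threshold: float,
    low: str = "fp16",
    high: str = "fp32",
) -> Dict[str, str]:
    """Assign a precision to each layer from its measured sensitivity.

    Layers whose quality drop is at or below `threshold` are lowered to
    `low`; the rest stay at `high`, giving a mixed-precision plan.
    """
    return {
        name: (low if drop <= threshold else high)
        for name, drop in sensitivity.items()
    }


def speedup(baseline_ms: float, optimized_ms: float) -> float:
    """Speedup factor; 17.0 means the optimized model is 17x faster."""
    if optimized_ms <= 0:
        raise ValueError("optimized latency must be positive")
    return baseline_ms / optimized_ms


# Hypothetical per-layer quality drops (higher = more sensitive).
scores = {"audio_encoder.conv0": 0.01, "face_encoder.conv0": 0.02, "decoder.out": 0.30}
plan = select_layer_precisions(scores, threshold=0.05)
```

The threshold trades speed for quality. Raising it lowers more layers to reduced precision, and a real deployment would apply the resulting plan through the inference engine's per-layer precision settings.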

Generation speed in this demo may vary with network traffic. Nevertheless, our compressed Wav2Lip consistently runs inference faster than the original model while maintaining similar visual quality. Unlike the paper, this demo measures total processing time and FPS over the whole pipeline. That pipeline covers loading the preprocessed video and audio, generating frames with the model, and merging the lip-synced facial images back into the original video.
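The end-to-end measurement described above can be sketched with `time.perf_counter`. The sketch times each stage, then divides the number of frames by the total wall-clock time. The three stage callables (`load`, `generate`, `merge`) are hypothetical placeholders for the demo's actual loading, inference, and merging code.

```python
import time
from typing import Callable, Dict, List, Sequence, Tuple


def run_timed_pipeline(
    frames: Sequence[object],
    load: Callable[[Sequence[object]], object],
    generate: Callable[[object], List[object]],
    merge: Callable[[Sequence[object], List[object]], List[object]],
) -> Tuple[List[object], Dict[str, float], float]:
    """Run load -> generate -> merge, returning (output, per-stage seconds, FPS)."""
    t0 = time.perf_counter()
    inputs = load(frames)  # e.g. read preprocessed video frames and audio features
    t1 = time.perf_counter()
    faces = generate(inputs)  # model inference: lip-synced face crops
    t2 = time.perf_counter()
    output = merge(frames, faces)  # paste generated faces back into the video
    t3 = time.perf_counter()

    timings = {"load": t1 - t0, "generate": t2 - t1, "merge": t3 - t2, "total": t3 - t0}
    # FPS over the whole pipeline, not just the model forward pass.
    fps = len(frames) / timings["total"] if timings["total"] > 0 else float("inf")
    return output, timings, fps


# Toy stages standing in for the real ones.
video = list(range(25))
out, stage_times, fps = run_timed_pipeline(
    video,
    load=lambda fs: list(fs),
    generate=lambda xs: [x * 2 for x in xs],
    merge=lambda fs, ys: [(f, y) for f, y in zip(fs, ys)],
)
```

This measurement includes loading and merging time, so its FPS is lower than a model-only benchmark on the same hardware. That is one reason the demo's numbers are not directly comparable to the paper's.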

Notice

- This work was accepted to [Demo] ICCV 2023 Demo Track; [Paper] On-Device Intelligence Workshop (ODIW) @ MLSys 2023; [Poster] NVIDIA GPU Technology Conference (GTC) as Poster Spotlight.

- We thank the NVIDIA Applied Research Accelerator Program for supporting this research and the authors of Wav2Lip for their pioneering work.