Upload folder using huggingface_hub

- .gitattributes +11 -0

- .gitignore +162 -0

- README.md +46 -12

- app_ctrlx.py +412 -0

- assets/images/bear_avocado__spatext.jpg +0 -0

- assets/images/bedroom__sketch.jpg +0 -0

- assets/images/cat__mesh.jpg +0 -0

- assets/images/cat__point_cloud.jpg +0 -0

- assets/images/dog__sketch.jpg +0 -0

- assets/images/fruit_bowl.jpg +0 -0

- assets/images/grapes.jpg +0 -0

- assets/images/horse.jpg +0 -0

- assets/images/horse__point_cloud.jpg +0 -0

- assets/images/knight__humanoid.jpg +0 -0

- assets/images/library__mesh.jpg +0 -0

- assets/images/living_room__seg.jpg +0 -0

- assets/images/living_room_modern.jpg +0 -0

- assets/images/man_park.jpg +0 -0

- assets/images/person__mesh.jpg +0 -0

- assets/images/running__pose.jpg +0 -0

- assets/images/squirrel.jpg +0 -0

- assets/images/tiger.jpg +0 -0

- assets/images/van_gogh.jpg +0 -0

- ctrl_x/__init__.py +0 -0

- ctrl_x/pipelines/__init__.py +0 -0

- ctrl_x/pipelines/pipeline_sdxl.py +665 -0

- ctrl_x/utils/__init__.py +3 -0

- ctrl_x/utils/feature.py +79 -0

- ctrl_x/utils/media.py +21 -0

- ctrl_x/utils/sdxl.py +274 -0

- ctrl_x/utils/utils.py +88 -0

- docs/assets/bootstrap.min.css +0 -0

- docs/assets/cross_image_attention.jpg +3 -0

- docs/assets/ctrl-x.jpg +3 -0

- docs/assets/font.css +37 -0

- docs/assets/freecontrol.jpg +3 -0

- docs/assets/genforce.png +0 -0

- docs/assets/pipeline.jpg +3 -0

- docs/assets/results_animatediff.mp4 +3 -0

- docs/assets/results_multi_subject.jpg +3 -0

- docs/assets/results_struct+app.jpg +3 -0

- docs/assets/results_struct+app_2.jpg +3 -0

- docs/assets/results_struct+prompt.jpg +3 -0

- docs/assets/style.css +139 -0

- docs/assets/teaser_github.jpg +3 -0

- docs/assets/teaser_small.jpg +3 -0

- docs/index.html +186 -0

- environment.yaml +125 -0

.gitattributes

CHANGED

@@ -33,3 +33,14 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+docs/assets/cross_image_attention.jpg filter=lfs diff=lfs merge=lfs -text
+docs/assets/ctrl-x.jpg filter=lfs diff=lfs merge=lfs -text
+docs/assets/freecontrol.jpg filter=lfs diff=lfs merge=lfs -text
+docs/assets/pipeline.jpg filter=lfs diff=lfs merge=lfs -text
+docs/assets/results_animatediff.mp4 filter=lfs diff=lfs merge=lfs -text
+docs/assets/results_multi_subject.jpg filter=lfs diff=lfs merge=lfs -text
+docs/assets/results_struct+app.jpg filter=lfs diff=lfs merge=lfs -text
+docs/assets/results_struct+app_2.jpg filter=lfs diff=lfs merge=lfs -text
+docs/assets/results_struct+prompt.jpg filter=lfs diff=lfs merge=lfs -text
+docs/assets/teaser_github.jpg filter=lfs diff=lfs merge=lfs -text
+docs/assets/teaser_small.jpg filter=lfs diff=lfs merge=lfs -text
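Each rule added in this hunk routes one large asset under `docs/assets/` through Git LFS, which matches the file list: exactly those files show `+3 -0` (the three-line LFS pointer), while the other images show `+0 -0`. As a rough illustration only, the Python sketch below checks which paths the patterns visible in this hunk would send to LFS. `is_lfs_tracked` is a hypothetical helper, the pattern list omits the rules before line 33 that this page does not show, and `fnmatch` only approximates Git's matcher; `git check-attr filter -- <path>` remains the authoritative check.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Only the LFS patterns visible in the hunk above (hypothetical subset);
# the real .gitattributes also has rules before line 33 not shown here.
LFS_PATTERNS = (
    "*.zip",
    "*.zst",
    "*tfevents*",
    "docs/assets/cross_image_attention.jpg",
    "docs/assets/ctrl-x.jpg",
    "docs/assets/freecontrol.jpg",
    "docs/assets/pipeline.jpg",
    "docs/assets/results_animatediff.mp4",
    "docs/assets/results_multi_subject.jpg",
    "docs/assets/results_struct+app.jpg",
    "docs/assets/results_struct+app_2.jpg",
    "docs/assets/results_struct+prompt.jpg",
    "docs/assets/teaser_github.jpg",
    "docs/assets/teaser_small.jpg",
)


def is_lfs_tracked(path: str, patterns: tuple[str, ...] = LFS_PATTERNS) -> bool:
    """Approximate Git attribute matching for the simple patterns above.

    A pattern without "/" is compared with the file name only, so it applies
    in any directory; a pattern with "/" is compared with the whole
    repo-relative path. Unlike Git, fnmatch lets "*" match across "/", and
    "**" or anchored patterns get no special handling.
    """
    p = PurePosixPath(path)
    for pattern in patterns:
        target = str(p) if "/" in pattern else p.name
        if fnmatchcase(target, pattern):
            return True
    return False


if __name__ == "__main__":
    for f in ("docs/assets/ctrl-x.jpg", "assets/images/horse.jpg", "runs/events.out.tfevents.1"):
        print(f, is_lfs_tracked(f))
```

Comparing slash-free patterns with the file name alone mirrors how Git applies such patterns at any directory depth, which is why `*tfevents*` catches event files wherever they are written.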

.gitignore

ADDED

|

@@ -0,0 +1,162 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

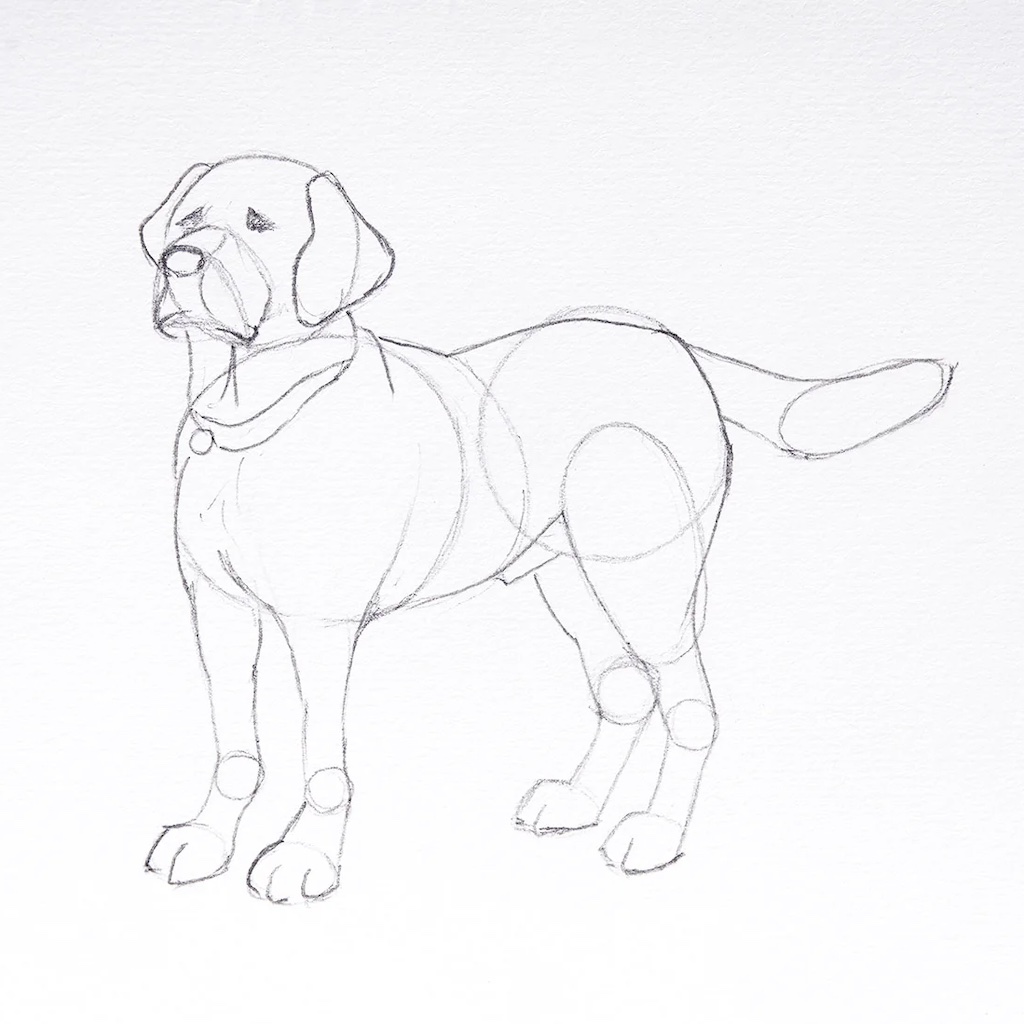

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

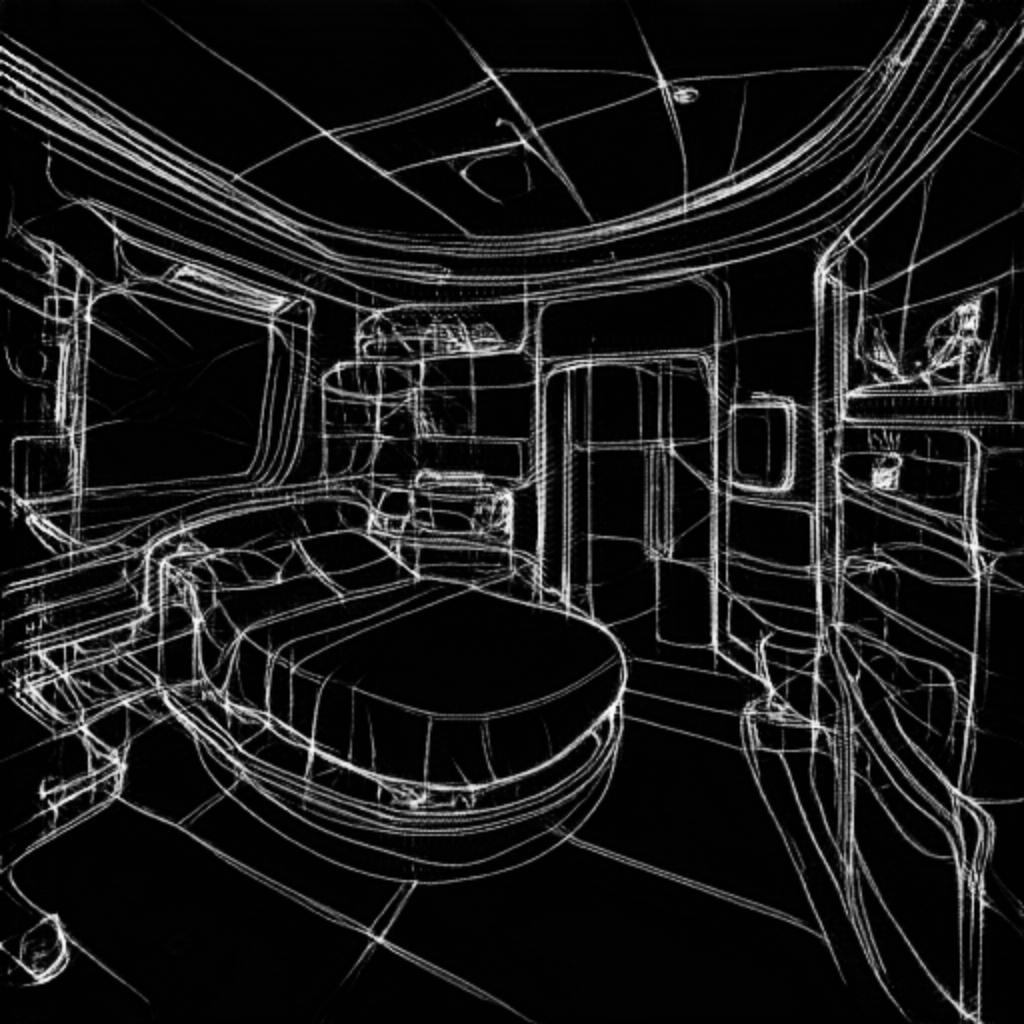

|

|

|

|

|

|

|

|

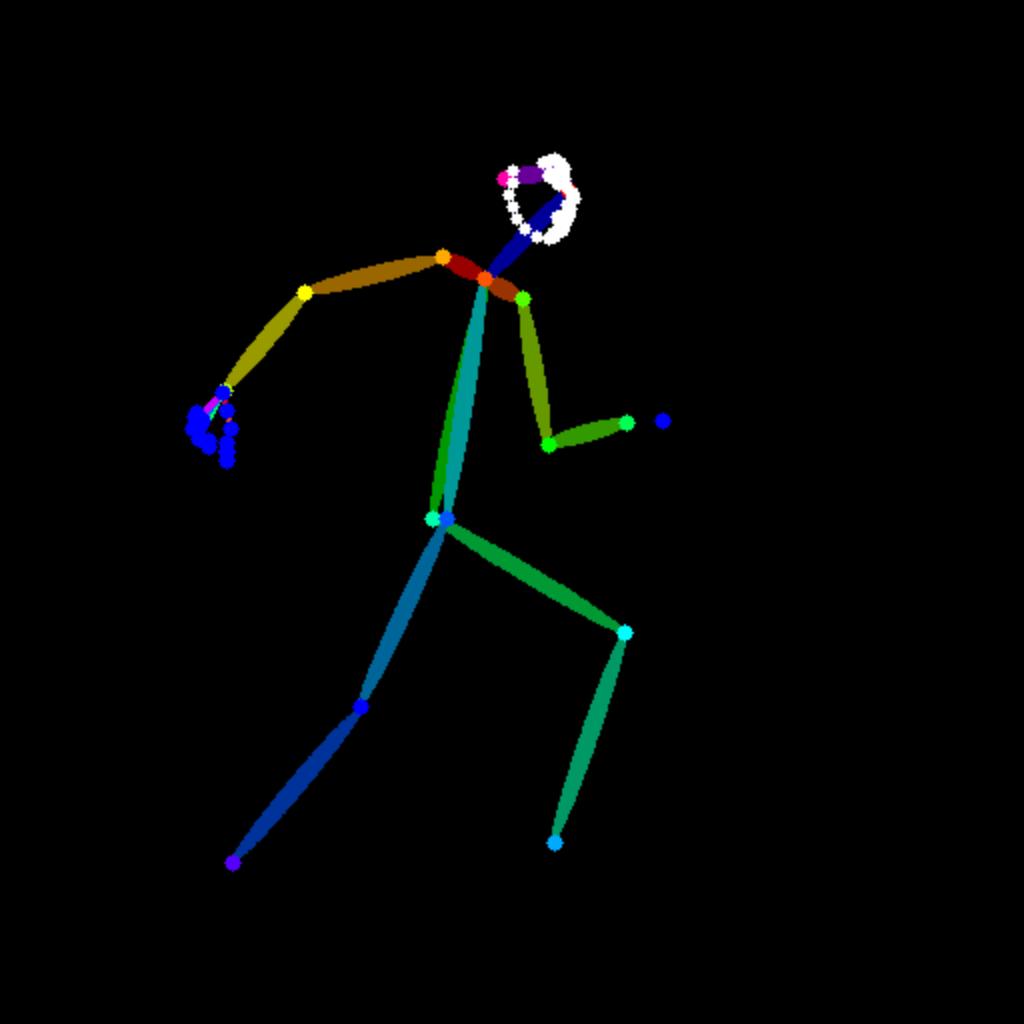

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

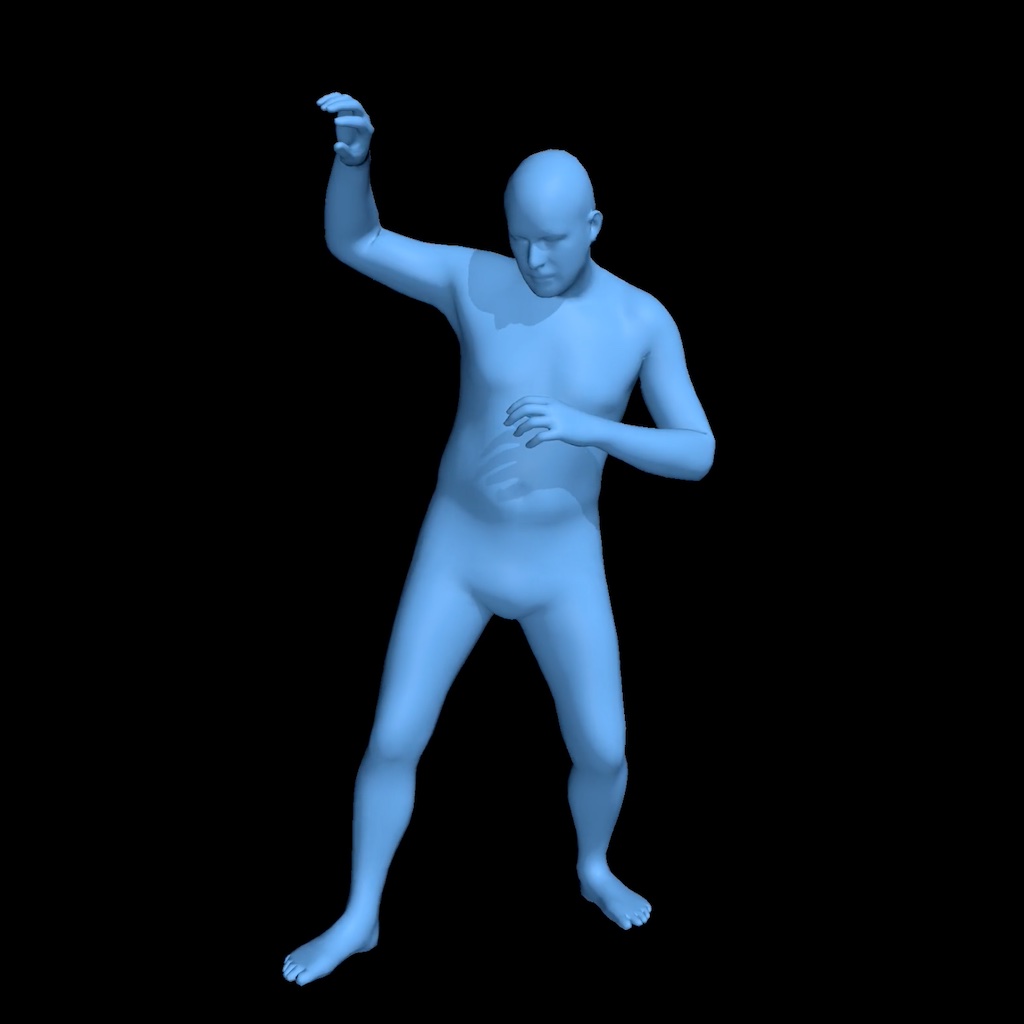

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Byte-compiled / optimized / DLL files

|

| 2 |

+

__pycache__/

|

| 3 |

+

*.py[cod]

|

| 4 |

+

*$py.class

|

| 5 |

+

|

| 6 |

+

# C extensions

|

| 7 |

+

*.so

|

| 8 |

+

|

| 9 |

+

# Distribution / packaging

|

| 10 |

+

.Python

|

| 11 |

+

build/

|

| 12 |

+

develop-eggs/

|

| 13 |

+

dist/

|

| 14 |

+

downloads/

|

| 15 |

+

eggs/

|

| 16 |

+

.eggs/

|

| 17 |

+

lib/

|

| 18 |

+

lib64/

|

| 19 |

+

parts/

|

| 20 |

+

sdist/

|

| 21 |

+

var/

|

| 22 |

+

wheels/

|

| 23 |

+

share/python-wheels/

|

| 24 |

+

*.egg-info/

|

| 25 |

+

.installed.cfg

|

| 26 |

+

*.egg

|

| 27 |

+

MANIFEST

|

| 28 |

+

|

| 29 |

+

# PyInstaller

|

| 30 |

+

# Usually these files are written by a python script from a template

|

| 31 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 32 |

+

*.manifest

|

| 33 |

+

*.spec

|

| 34 |

+

|

| 35 |

+

# Installer logs

|

| 36 |

+

pip-log.txt

|

| 37 |

+

pip-delete-this-directory.txt

|

| 38 |

+

|

| 39 |

+

# Unit test / coverage reports

|

| 40 |

+

htmlcov/

|

| 41 |

+

.tox/

|

| 42 |

+

.nox/

|

| 43 |

+

.coverage

|

| 44 |

+

.coverage.*

|

| 45 |

+

.cache

|

| 46 |

+

nosetests.xml

|

| 47 |

+

coverage.xml

|

| 48 |

+

*.cover

|

| 49 |

+

*.py,cover

|

| 50 |

+

.hypothesis/

|

| 51 |

+

.pytest_cache/

|

| 52 |

+

cover/

|

| 53 |

+

|

| 54 |

+

# Translations

|

| 55 |

+

*.mo

|

| 56 |

+

*.pot

|

| 57 |

+

|

| 58 |

+

# Django stuff:

|

| 59 |

+

*.log

|

| 60 |

+

local_settings.py

|

| 61 |

+

db.sqlite3

|

| 62 |

+

db.sqlite3-journal

|

| 63 |

+

|

| 64 |

+

# Flask stuff:

|

| 65 |

+

instance/

|

| 66 |

+

.webassets-cache

|

| 67 |

+

|

| 68 |

+

# Scrapy stuff:

|

| 69 |

+

.scrapy

|

| 70 |

+

|

| 71 |

+

# Sphinx documentation

|

| 72 |

+

docs/_build/

|

| 73 |

+

|

| 74 |

+

# PyBuilder

|

| 75 |

+

.pybuilder/

|

| 76 |

+

target/

|

| 77 |

+

|

| 78 |

+

# Jupyter Notebook

|

| 79 |

+

.ipynb_checkpoints

|

| 80 |

+

|

| 81 |

+

# IPython

|

| 82 |

+

profile_default/

|

| 83 |

+

ipython_config.py

|

| 84 |

+

|

| 85 |

+

# pyenv

|

| 86 |

+

# For a library or package, you might want to ignore these files since the code is

|

| 87 |

+

# intended to run in multiple environments; otherwise, check them in:

|

| 88 |

+

# .python-version

|

| 89 |

+

|

| 90 |

+

# pipenv

|

| 91 |

+

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 92 |

+

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 93 |

+

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 94 |

+

# install all needed dependencies.

|

| 95 |

+

#Pipfile.lock

|

| 96 |

+

|

| 97 |

+

# poetry

|

| 98 |

+

# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

|

| 99 |

+

# This is especially recommended for binary packages to ensure reproducibility, and is more

|

| 100 |

+

# commonly ignored for libraries.

|

| 101 |

+

# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

|

| 102 |

+

#poetry.lock

|

| 103 |

+

|

| 104 |

+

# pdm

|

| 105 |

+

# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

|

| 106 |

+

#pdm.lock

|

| 107 |

+

# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

|

| 108 |

+

# in version control.

|

| 109 |

+

# https://pdm.fming.dev/latest/usage/project/#working-with-version-control

|

| 110 |

+

.pdm.toml

|

| 111 |

+

.pdm-python

|

| 112 |

+

.pdm-build/

|

| 113 |

+

|

| 114 |

+

# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

|

| 115 |

+

__pypackages__/

|

| 116 |

+

|

| 117 |

+

# Celery stuff

|

| 118 |

+

celerybeat-schedule

|

| 119 |

+

celerybeat.pid

|

| 120 |

+

|

| 121 |

+

# SageMath parsed files

|

| 122 |

+

*.sage.py

|

| 123 |

+

|

| 124 |

+

# Environments

|

| 125 |

+

.env

|

| 126 |

+

.venv

|

| 127 |

+

env/

|

| 128 |

+

venv/

|

| 129 |

+

ENV/

|

| 130 |

+

env.bak/

|

| 131 |

+

venv.bak/

|

| 132 |

+

|

| 133 |

+

# Spyder project settings

|

| 134 |

+

.spyderproject

|

| 135 |

+

.spyproject

|

| 136 |

+

|

| 137 |

+

# Rope project settings

|

| 138 |

+

.ropeproject

|

| 139 |

+

|

| 140 |

+

# mkdocs documentation

|

| 141 |

+

/site

|

| 142 |

+

|

| 143 |

+

# mypy

|

| 144 |

+

.mypy_cache/

|

| 145 |

+

.dmypy.json

|

| 146 |

+

dmypy.json

|

| 147 |

+

|

| 148 |

+

# Pyre type checker

|

| 149 |

+

.pyre/

|

| 150 |

+

|

| 151 |

+

# pytype static type analyzer

|

| 152 |

+

.pytype/

|

| 153 |

+

|

| 154 |

+

# Cython debug symbols

|

| 155 |

+

cython_debug/

|

| 156 |

+

|

| 157 |

+

# PyCharm

|

| 158 |

+

# JetBrains specific template is maintained in a separate JetBrains.gitignore that can

|

| 159 |

+

# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

|

| 160 |

+

# and can be added to the global gitignore or merged into this file. For a more nuclear

|

| 161 |

+

# option (not recommended) you can uncomment the following to ignore the entire idea folder.

|

| 162 |

+

#.idea/

|

README.md

CHANGED

@@ -1,12 +1,46 @@
-
-
-
-
-
-
-
-
-
-
-
-
+# Ctrl-X: Controlling Structure and Appearance for Text-To-Image Generation Without Guidance (NeurIPS 2024)
+
+<a href="https://arxiv.org/abs/2406.07540"><img src="https://img.shields.io/badge/arXiv-Paper-red"></a>
+<a href="https://genforce.github.io/ctrl-x"><img src="https://img.shields.io/badge/Project-Page-yellow"></a>
+[](https://github.com/genforce/ctrl-x)
+
+[Kuan Heng Lin](https://kuanhenglin.github.io)<sup>1*</sup>, [Sicheng Mo](https://sichengmo.github.io/)<sup>1*</sup>, [Ben Klingher](https://bklingher.github.io)<sup>1</sup>, [Fangzhou Mu](https://pages.cs.wisc.edu/~fmu/)<sup>2</sup>, [Bolei Zhou](https://boleizhou.github.io/)<sup>1</sup> <br>
+<sup>1</sup>UCLA <sup>2</sup>NVIDIA <br>
+<sup>*</sup>Equal contribution <br>
+
+
+
+## Getting started
+
+### Environment setup
+
+Our code is built on top of [`diffusers v0.28.0`](https://github.com/huggingface/diffusers). To set up the environment, please run the following.
+```
+conda env create -f environment.yaml
+conda activate ctrlx
+```
+
+### Gradio demo
+
+We provide a user interface for testing our method. Running the following command starts the demo.
+```
+python3 app_ctrlx.py
+```
+Have fun playing around! :D
+
+## Contact
+
+For any questions, thoughts, discussions, and any other things you want to reach out for, please contact [Kuan Heng (Jordan) Lin](https://kuanhenglin.github.io) (kuanhenglin@ucla.edu).
+
+## Reference
+
+If you use our code in your research, please cite the following work.
+
+```bibtex
+@inproceedings{lin2024ctrlx,
+    author = {Lin, {Kuan Heng} and Mo, Sicheng and Klingher, Ben and Mu, Fangzhou and Zhou, Bolei},
+    booktitle = {Advances in Neural Information Processing Systems},
+    title = {Ctrl-X: Controlling Structure and Appearance for Text-To-Image Generation Without Guidance},
+    year = {2024}
+}
+```

app_ctrlx.py

ADDED

@@ -0,0 +1,412 @@
+from argparse import ArgumentParser
+
+from diffusers import DDIMScheduler, StableDiffusionXLImg2ImgPipeline
+import gradio as gr
+import torch
+import yaml
+
+from ctrl_x.pipelines.pipeline_sdxl import CtrlXStableDiffusionXLPipeline
+from ctrl_x.utils import *
+from ctrl_x.utils.sdxl import *
+
+
+parser = ArgumentParser()
+parser.add_argument("-m", "--model", type=str, default=None) # Optionally, load model checkpoint from single file
+args = parser.parse_args()
+
+torch.backends.cudnn.enabled = False # Sometimes necessary to suppress CUDNN_STATUS_NOT_SUPPORTED
+
+torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32
+
+model_id_or_path = "stabilityai/stable-diffusion-xl-base-1.0"
+refiner_id_or_path = "stabilityai/stable-diffusion-xl-refiner-1.0"
+device = "cuda" if torch.cuda.is_available() else "cpu"
+variant = "fp16" if device == "cuda" else "fp32"
+
+scheduler = DDIMScheduler.from_config(model_id_or_path, subfolder="scheduler") # TODO: Support other schedulers
+if args.model is None:
+    pipe = CtrlXStableDiffusionXLPipeline.from_pretrained(
+        model_id_or_path, scheduler=scheduler, torch_dtype=torch_dtype, variant=variant, use_safetensors=True
+    )
+else:
+    print(f"Using weights {args.model} for SDXL base model.")
+    pipe = CtrlXStableDiffusionXLPipeline.from_single_file(args.model, scheduler=scheduler, torch_dtype=torch_dtype)
+refiner = StableDiffusionXLImg2ImgPipeline.from_pretrained(
+    refiner_id_or_path, scheduler=scheduler, text_encoder_2=pipe.text_encoder_2, vae=pipe.vae,
+    torch_dtype=torch_dtype, variant=variant, use_safetensors=True,
+)
+
+if torch.cuda.is_available():
+    pipe = pipe.to("cuda")
+    refiner = refiner.to("cuda")
+
+
+def get_control_config(structure_schedule, appearance_schedule):
+    s = structure_schedule
+    a = appearance_schedule
+
+    control_config =\
+f"""control_schedule:
+    # structure_conv structure_attn appearance_attn conv/attn
+    encoder: # (num layers)
+        0: [[ ], [ ], [ ]] # 2/0
+        1: [[ ], [ ], [{a}, {a} ]] # 2/2
+        2: [[ ], [ ], [{a}, {a} ]] # 2/2
+    middle: [[ ], [ ], [ ]] # 2/1
+    decoder:
+        0: [[{s} ], [{s}, {s}, {s}], [0.0, {a}, {a}]] # 3/3
+        1: [[ ], [ ], [{a}, {a} ]] # 3/3
+        2: [[ ], [ ], [ ]] # 3/0
+
+control_target:
+    - [output_tensor] # structure_conv choices: {{hidden_states, output_tensor}}
+    - [query, key] # structure_attn choices: {{query, key, value}}
+    - [before] # appearance_attn choices: {{before, value, after}}
+
+self_recurrence_schedule:
+    - [0.1, 0.5, 2] # format: [start, end, num_recurrence]"""
+
+    return control_config
+
+
+css = """
+.config textarea {font-family: monospace; font-size: 80%; white-space: pre}
+.mono {font-family: monospace}
+"""
+
+title = """
+<div style="display: flex; align-items: center; justify-content: center;margin-bottom: -15px">
+<h1 style="margin-left: 12px;text-align: center;display: inline-block">
+Ctrl-X: Controlling Structure and Appearance for Text-To-Image Generation Without Guidance
+</h1>
+<h3 style="display: inline-block; margin-left: 10px; margin-top: 7.5px; font-weight: 500">
+SDXL v1.0
+</h3>
+</div>
+<div style="display: flex; align-items: center; justify-content: center;margin-bottom: 25px">
+<h3 style="text-align: center">
+[<a href="https://genforce.github.io/ctrl-x/">Page</a>]
+
+[<a href="https://arxiv.org/abs/2406.07540">Paper</a>]
+
+[<a href="https://github.com/genforce/ctrl-x">Code</a>]
+</h3>
+</div>
+<div>
+<p>
+<b>Ctrl-X</b> is a simple training-free and guidance-free framework for text-to-image (T2I) generation with
+structure and appearance control. Given structure and appearance images, Ctrl-X designs feedforward structure
+control to enable structure alignment with the arbitrary structure image and semantic-aware appearance transfer
+to facilitate the appearance transfer from the appearance image.
+</p>
+<p>
+Here are some notes and tips for this demo:
+</p>
+<ul>
+<li> On input images:
+<ul>
+<li>
+If both the structure and appearance images are provided, then Ctrl-X does <i>structure and
+appearance</i> control.
+</li>
+<li>
+If only the structure image is provided, then Ctrl-X does <i>structure-only</i> control and the
+appearance image is jointly generated with the output image.
+</li>
+<li>
+Similarly, if only the appearance image is provided, then Ctrl-X does <i>appearance-only</i>
+control.
+</li>
+</ul>
+</li>
+<li> On prompts:
+<ul>
+<li>
+Though the output prompt can affect the output image to a noticeable extent, the "accuracy" of the
+structure and appearance prompts are not impactful to the final image.
+</li>
+<li>
+If the structure or appearance prompt is left blank, then it uses the (non-optional) output prompt
+by default.
+</li>
+</ul>
+</li>
+<li> On control schedules:
+<ul>
+<li>
+When "Use advanced config" is <b>OFF</b>, the demo uses the structure guidance
+(<span class="mono">structure_conv</span> and <span class="mono">structure_attn</span>
+in the advanced config) and appearance guidance (<span class="mono">appearance_attn</span> in the
+advanced config) sliders to change the control schedules.
+</li>
+<li>
+Otherwise, the demo uses "Advanced control config," which allows per-layer structure and
+appearance schedule control, along with self-recurrence control. <i>This should be used
+carefully</i>, and we recommend switching "Use advanced config" <b>OFF</b> in most cases. (For the
+examples provided at the bottom of the demo, the advanced config uses the default schedules that
+may not be the best settings for these examples.)
+</li>
+</ul>
+</li>
+</ul>
+<p>
+Have fun! :D
+</p>
+</div>
+"""
+
+
+def inference(
+    structure_image, appearance_image,
+    prompt, structure_prompt, appearance_prompt,
+    positive_prompt, negative_prompt,
+    guidance_scale, structure_guidance_scale, appearance_guidance_scale,
+    num_inference_steps, eta, seed,
+    width, height,
+    structure_schedule, appearance_schedule, use_advanced_config,
+    control_config,
+):
+    torch.manual_seed(seed)
+
+    pipe.scheduler.set_timesteps(num_inference_steps, device=device)
+    timesteps = pipe.scheduler.timesteps
+
+    print(f"\nUsing the following control config (use_advanced_config={use_advanced_config}):")
+    if not use_advanced_config:
+        control_config = get_control_config(structure_schedule, appearance_schedule)
+    print(control_config, end="\n\n")
+
+    config = yaml.safe_load(control_config)
+    register_control(
+        model = pipe,
+        timesteps = timesteps,
+        control_schedule = config["control_schedule"],
+        control_target = config["control_target"],
+    )
+
+    pipe.safety_checker = None
+    pipe.requires_safety_checker = False
+
+    self_recurrence_schedule = get_self_recurrence_schedule(config["self_recurrence_schedule"], num_inference_steps)
+
+    pipe.set_progress_bar_config(desc="Ctrl-X inference")
+    refiner.set_progress_bar_config(desc="Refiner")
+
+    result, structure, appearance = pipe(
+        prompt = prompt,
+        structure_prompt = structure_prompt,
+        appearance_prompt = appearance_prompt,
+        structure_image = structure_image,
+        appearance_image = appearance_image,
+        num_inference_steps = num_inference_steps,
+        negative_prompt = negative_prompt,
+        positive_prompt = positive_prompt,
+        height = height,
+        width = width,
+        guidance_scale = guidance_scale,
+        structure_guidance_scale = structure_guidance_scale,
+        appearance_guidance_scale = appearance_guidance_scale,
+        eta = eta,
+        output_type = "pil",
+        return_dict = False,
+        control_schedule = config["control_schedule"],
+        self_recurrence_schedule = self_recurrence_schedule,
+    )
+
+    result_refiner = refiner(
+        image = pipe.refiner_args["latents"],
+        prompt = pipe.refiner_args["prompt"],
+        negative_prompt = pipe.refiner_args["negative_prompt"],
+        height = height,
+        width = width,
+        num_inference_steps = num_inference_steps,
+        guidance_scale = guidance_scale,
+        guidance_rescale = 0.7,
+        num_images_per_prompt = 1,
+        eta = eta,
+        output_type = "pil",
+    ).images
+    del pipe.refiner_args
+
+    return [result[0], result_refiner[0], structure[0], appearance[0]]
+
+
+with gr.Blocks(theme=gr.themes.Default(), css=css, title="Ctrl-X (SDXL v1.0)") as app:
+    gr.HTML(title)
+
+    with gr.Row():
+
+        with gr.Column(scale=55):
+            with gr.Group():
+                kwargs = {} # {"width": 400, "height": 400}
+                with gr.Row():
+                    result = gr.Image(label="Output image", format="jpg", **kwargs)
+                    result_refiner = gr.Image(label="Output image w/ refiner", format="jpg", **kwargs)
+                with gr.Row():
+                    structure_recon = gr.Image(label="Structure image", format="jpg", **kwargs)
+                    appearance_recon = gr.Image(label="Style image", format="jpg", **kwargs)
+                with gr.Row():
+                    structure_image = gr.Image(label="Upload structure image (optional)", type="pil", **kwargs)
+                    appearance_image = gr.Image(label="Upload appearance image (optional)", type="pil", **kwargs)
+
+        with gr.Column(scale=45):
+            with gr.Group():
+                with gr.Row():
+                    structure_prompt = gr.Textbox(label="Structure prompt (optional)", placeholder="Prompt which describes the structure image")
+                    appearance_prompt = gr.Textbox(label="Appearance prompt (optional)", placeholder="Prompt which describes the style image")
+                with gr.Row():
+                    prompt = gr.Textbox(label="Output prompt", placeholder="Prompt which describes the output image")
+                with gr.Row():
+                    positive_prompt = gr.Textbox(label="Positive prompt", value="high quality", placeholder="")
+                    negative_prompt = gr.Textbox(label="Negative prompt", value="ugly, blurry, dark, low res, unrealistic", placeholder="")
+                with gr.Row():
+                    guidance_scale = gr.Slider(label="Target guidance scale", value=5.0, minimum=1, maximum=10)
+                    structure_guidance_scale = gr.Slider(label="Structure guidance scale", value=5.0, minimum=1, maximum=10)
+                    appearance_guidance_scale = gr.Slider(label="Appearance guidance scale", value=5.0, minimum=1, maximum=10)
+                with gr.Row():
+                    num_inference_steps = gr.Slider(label="# inference steps", value=50, minimum=1, maximum=200, step=1)
+                    eta = gr.Slider(label="Eta (noise)", value=1.0, minimum=0, maximum=1.0, step=0.01)
+                    seed = gr.Slider(0, 2147483647, label="Seed", value=90095, step=1)
+                with gr.Row():
+                    width = gr.Slider(label="Width", value=1024, minimum=256, maximum=2048, step=pipe.vae_scale_factor)
+                    height = gr.Slider(label="Height", value=1024, minimum=256, maximum=2048, step=pipe.vae_scale_factor)
+                with gr.Row():
+                    structure_schedule = gr.Slider(label="Structure schedule", value=0.6, minimum=0.0, maximum=1.0, step=0.01, scale=2)
+                    appearance_schedule = gr.Slider(label="Appearance schedule", value=0.6, minimum=0.0, maximum=1.0, step=0.01, scale=2)
+                    use_advanced_config = gr.Checkbox(label="Use advanced config", value=False, scale=1)
+                with gr.Row():
+                    control_config = gr.Textbox(
+                        label="Advanced control config", lines=20, value=get_control_config(0.6, 0.6), elem_classes=["config"], visible=False,
+                    )
+                use_advanced_config.change(
+                    fn=lambda value: gr.update(visible=value), inputs=use_advanced_config, outputs=control_config,
+                )
+                with gr.Row():
+                    generate = gr.Button(value="Run")
+
+    inputs = [
+        structure_image, appearance_image,
+        prompt, structure_prompt, appearance_prompt,
+        positive_prompt, negative_prompt,
+        guidance_scale, structure_guidance_scale, appearance_guidance_scale,
+        num_inference_steps, eta, seed,
+        width, height,
+        structure_schedule, appearance_schedule, use_advanced_config,
+        control_config,
+    ]
+    outputs = [result, result_refiner, structure_recon, appearance_recon]
+
+    generate.click(inference, inputs=inputs, outputs=outputs)
+
+    examples = gr.Examples(
+        [
+            [
+                "assets/images/horse__point_cloud.jpg",
+                "assets/images/horse.jpg",
+                "a 3D point cloud of a horse",
+                "",
+                "a photo of a horse standing on grass",
+                0.6, 0.6,
+            ],
+            [
+                "assets/images/cat__mesh.jpg",
+                "assets/images/tiger.jpg",
+                "a 3D mesh of a cat",
+                "",
+                "a photo of a tiger standing on snow",
+                0.6, 0.6,
+            ],
+            [
+                "assets/images/dog__sketch.jpg",
+                "assets/images/squirrel.jpg",
+                "a sketch of a dog",
+                "",
+                "a photo of a squirrel",
+                0.6, 0.6,
+            ],
+            [
+                "assets/images/living_room__seg.jpg",
+                "assets/images/van_gogh.jpg",
+                "a segmentation map of a living room",
+                "",
+                "a Van Gogh painting of a living room",
+                0.6, 0.6,
+            ],
+            [
+                "assets/images/bedroom__sketch.jpg",
+                "assets/images/living_room_modern.jpg",
+                "a sketch of a bedroom",

|

| 339 |

+

"",

|

| 340 |

+

"a photo of a modern bedroom during sunset",

|

| 341 |

+

0.6, 0.6,

|

| 342 |

+

],

|

| 343 |

+

[

|

| 344 |

+

"assets/images/running__pose.jpg",

|

| 345 |

+

"assets/images/man_park.jpg",

|

| 346 |

+

"a pose image of a person running",

|

| 347 |

+

"",

|

| 348 |

+

"a photo of a man running in a park",

|

| 349 |

+

0.4, 0.6,

|

| 350 |

+

],

|

| 351 |

+

[

|

| 352 |

+

"assets/images/fruit_bowl.jpg",

|

| 353 |

+

"assets/images/grapes.jpg",

|

| 354 |

+

"a photo of a bowl of fruits",

|

| 355 |

+

"",

|

| 356 |

+

"a photo of a bowl of grapes in the trees",

|

| 357 |

+

0.6, 0.6,

|

| 358 |

+

],

|

| 359 |

+

[

|

| 360 |

+

"assets/images/bear_avocado__spatext.jpg",

|

| 361 |

+

None,

|

| 362 |

+

"a segmentation map of a bear and an avocado",

|

| 363 |

+

"",

|

| 364 |

+

"a realistic photo of a bear and an avocado in a forest",

|

| 365 |

+

0.6, 0.6,

|

| 366 |

+

],

|

| 367 |

+

[

|

| 368 |

+

"assets/images/cat__point_cloud.jpg",

|

| 369 |

+

None,

|

| 370 |

+

"a 3D point cloud of a cat",

|

| 371 |

+

"",

|

| 372 |

+

"an embroidery of a white cat sitting on a rock under the night sky",

|

| 373 |

+

0.6, 0.6,

|

| 374 |

+

],

|

| 375 |

+

[

|

| 376 |

+

"assets/images/library__mesh.jpg",

|

| 377 |

+

None,

|

| 378 |

+

"a 3D mesh of a library",

|

| 379 |

+

"",

|

| 380 |

+

"a Polaroid photo of an old library, sunlight streaming in",

|

| 381 |

+

0.6, 0.6,

|

| 382 |

+

],

|

| 383 |

+

[

|

| 384 |

+

"assets/images/knight__humanoid.jpg",

|

| 385 |

+

None,

|

| 386 |

+

"a 3D model of a person holding a sword and shield",

|

| 387 |

+

"",

|

| 388 |

+

"a photo of a medieval soldier standing on a barren field, raining",

|

| 389 |

+

0.6, 0.6,

|

| 390 |

+

],

|

| 391 |

+

[

|

| 392 |

+

"assets/images/person__mesh.jpg",

|

| 393 |

+

None,

|

| 394 |

+

"a 3D mesh of a person",

|

| 395 |

+

"",

|

| 396 |

+

"a photo of a Karate man performing in a cyberpunk city at night",

|

| 397 |

+

0.5, 0.6,

|

| 398 |

+

],

|

| 399 |

+

],

|

| 400 |

+

[

|

| 401 |

+

structure_image,

|

| 402 |

+

appearance_image,

|

| 403 |

+

structure_prompt,

|

| 404 |

+

appearance_prompt,

|

| 405 |

+

prompt,

|

| 406 |

+

structure_schedule,

|

| 407 |

+

appearance_schedule,

|

| 408 |

+

],

|

| 409 |

+

examples_per_page=50,

|

| 410 |

+

)

|

| 411 |

+

|

| 412 |

+

app.launch(debug=False, share=False)
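How the Examples table is wired: `gr.Examples` assigns each row's values to its input components by position. Every row must therefore supply exactly seven values in this order: structure image, appearance image, structure prompt, appearance prompt, prompt, structure schedule, appearance schedule. This order differs from the `inputs` list passed to `generate.click`, where `prompt` comes before the structure and appearance prompts.

The plain-Python sketch below illustrates the positional mapping without requiring Gradio. It uses two rows copied from the table. `EXAMPLE_COLUMNS` and `row_to_fields` are hypothetical helpers and are not part of the app. Rows that pass `None` as the appearance image presumably take their appearance from the text prompt alone; that reading is an assumption.

```python
# Hypothetical, Gradio-free illustration of how gr.Examples maps row values
# onto its input components by position (names mirror the components above).
EXAMPLE_COLUMNS = [
    "structure_image",
    "appearance_image",
    "structure_prompt",
    "appearance_prompt",
    "prompt",
    "structure_schedule",
    "appearance_schedule",
]

# Two rows copied from the demo's Examples table.
EXAMPLE_ROWS = [
    ["assets/images/horse__point_cloud.jpg", "assets/images/horse.jpg",
     "a 3D point cloud of a horse", "", "a photo of a horse standing on grass", 0.6, 0.6],
    ["assets/images/bear_avocado__spatext.jpg", None,
     "a segmentation map of a bear and an avocado", "",
     "a realistic photo of a bear and an avocado in a forest", 0.6, 0.6],
]


def row_to_fields(row):
    """Pair each value in an example row with its component name by position."""
    if len(row) != len(EXAMPLE_COLUMNS):
        raise ValueError(f"expected {len(EXAMPLE_COLUMNS)} values, got {len(row)}")
    fields = dict(zip(EXAMPLE_COLUMNS, row))
    # The schedule sliders only accept values in [0, 1].
    for key in ("structure_schedule", "appearance_schedule"):
        if not 0.0 <= fields[key] <= 1.0:
            raise ValueError(f"{key} must lie in [0, 1] to match its slider range")
    return fields


if __name__ == "__main__":
    for row in EXAMPLE_ROWS:
        fields = row_to_fields(row)
        has_appearance = fields["appearance_image"] is not None
        mode = "structure + appearance image" if has_appearance else "structure image + prompt only"
        print(f"{fields['prompt']!r}: {mode}")
```

A length check like this catches a row that is missing a value. Without it, the remaining values would silently shift into the wrong components.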

assets/images/bear_avocado__spatext.jpg
ADDED

assets/images/bedroom__sketch.jpg
ADDED

assets/images/cat__mesh.jpg
ADDED

assets/images/cat__point_cloud.jpg
ADDED

assets/images/dog__sketch.jpg
ADDED

assets/images/fruit_bowl.jpg
ADDED

assets/images/grapes.jpg
ADDED

assets/images/horse.jpg
ADDED

assets/images/horse__point_cloud.jpg
ADDED

assets/images/knight__humanoid.jpg
ADDED

assets/images/library__mesh.jpg
ADDED

assets/images/living_room__seg.jpg
ADDED

assets/images/living_room_modern.jpg
ADDED

assets/images/man_park.jpg
ADDED

assets/images/person__mesh.jpg
ADDED

assets/images/running__pose.jpg
ADDED

assets/images/squirrel.jpg
ADDED

assets/images/tiger.jpg
ADDED

assets/images/van_gogh.jpg
ADDED

ctrl_x/__init__.py
ADDED
File without changes

ctrl_x/pipelines/__init__.py
ADDED
File without changes

ctrl_x/pipelines/pipeline_sdxl.py
ADDED
@@ -0,0 +1,665 @@