Spaces: Runtime error

Commit 3380ee9

Parent(s): eddc0a2

Initial commit

Browse files- .gitattributes +1 -0

- .gitignore +2 -0

- README.md +6 -4

- app.py +280 -0

- assets/images/cannibalization.png +0 -0

- assets/images/dynamic_demand.gif +3 -0

- assets/images/flywheel_1.png +0 -0

- assets/images/flywheel_2.png +0 -0

- assets/images/flywheel_3.png +0 -0

- assets/images/gaussian_process.gif +3 -0

- assets/images/ideal_case_demand.png +0 -0

- assets/images/ideal_case_demand_fitted.png +0 -0

- assets/images/ideal_case_optimal_profit.png +0 -0

- assets/images/ideal_case_profit_curve.png +0 -0

- assets/images/posterior_demand.png +0 -0

- assets/images/posterior_demand_2.png +0 -0

- assets/images/posterior_demand_sample.png +0 -0

- assets/images/posterior_demand_sample_2.png +0 -0

- assets/images/posterior_profit.png +0 -0

- assets/images/posterior_profit_2.png +0 -0

- assets/images/posterior_profit_sample.png +0 -0

- assets/images/posterior_profit_sample_2.png +0 -0

- assets/images/realistic_demand.png +0 -0

- assets/images/realistic_demand_latent_curve.png +0 -0

- assets/images/updated_prices_demand.png +0 -0

- assets/precalc_results/posterior_0.05.pkl +3 -0

- assets/precalc_results/posterior_0.1.pkl +3 -0

- assets/precalc_results/posterior_0.15.pkl +3 -0

- assets/precalc_results/posterior_0.2.pkl +3 -0

- assets/precalc_results/posterior_0.25.pkl +3 -0

- assets/precalc_results/posterior_0.3.pkl +3 -0

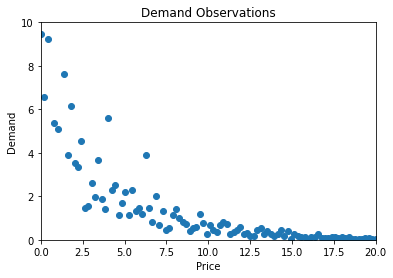

- assets/precalc_results/posterior_0.35.pkl +3 -0

- assets/precalc_results/posterior_0.4.pkl +3 -0

- assets/precalc_results/posterior_0.45.pkl +3 -0

- assets/precalc_results/posterior_0.5.pkl +3 -0

- assets/precalc_results/posterior_0.55.pkl +3 -0

- assets/precalc_results/posterior_0.6.pkl +3 -0

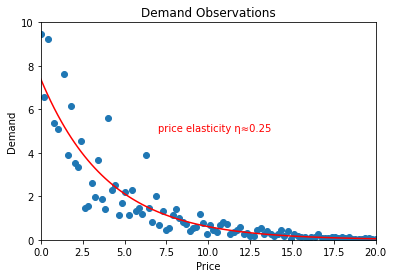

- assets/precalc_results/posterior_0.65.pkl +3 -0

- assets/precalc_results/posterior_0.7.pkl +3 -0

- assets/precalc_results/posterior_0.75.pkl +3 -0

- assets/precalc_results/posterior_0.8.pkl +3 -0

- assets/precalc_results/posterior_0.85.pkl +3 -0

- assets/precalc_results/posterior_0.9.pkl +3 -0

- assets/precalc_results/posterior_0.95.pkl +3 -0

- config.py +8 -0

- helpers/thompson_sampling.py +125 -0

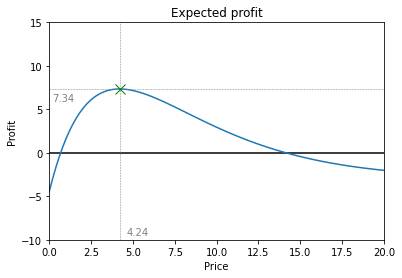

- requirements.txt +3 -0

- scripts/generate_posterior.py +33 -0

- scripts/posterior.py +19 -0

.gitattributes

CHANGED

|

@@ -29,3 +29,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 29 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 30 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 31 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

| 29 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 30 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 31 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 32 |

+

*.gif filter=lfs diff=lfs merge=lfs -text

|

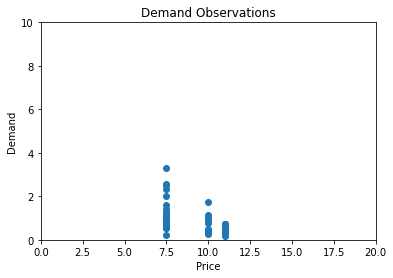

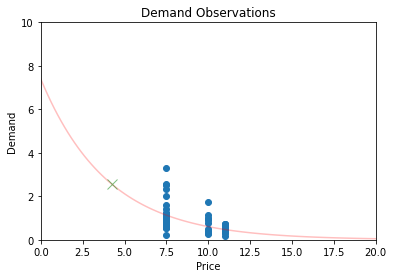

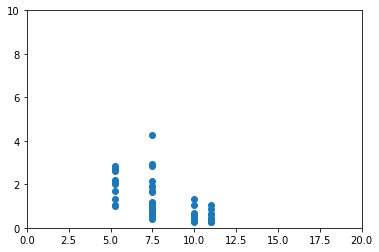

.gitignore

ADDED

|

@@ -0,0 +1,2 @@

|

|

|

|

|

|

|

|

|

|

| 1 |

+

venv/

|

| 2 |

+

__pycache__/

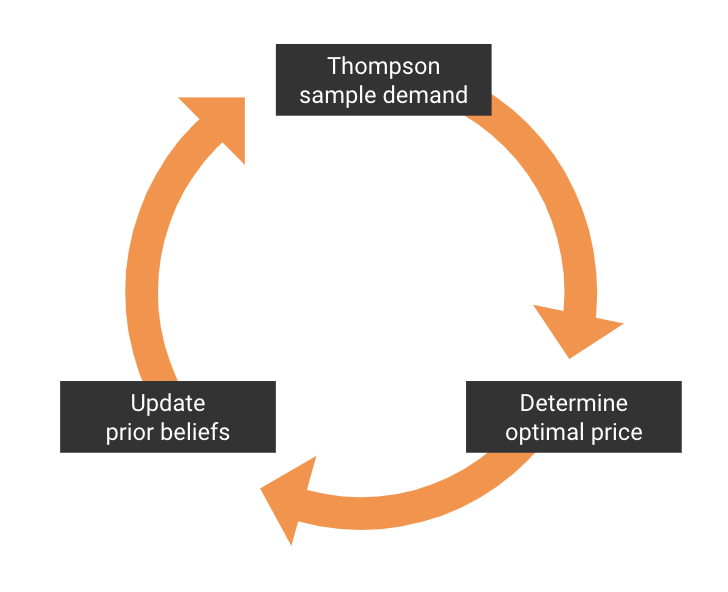

|

README.md

CHANGED

|

@@ -1,12 +1,14 @@

|

|

| 1 |

---

|

| 2 |

-

title: Dynamic Pricing

|

| 3 |

-

emoji:

|

| 4 |

colorFrom: green

|

| 5 |

colorTo: gray

|

| 6 |

sdk: streamlit

|

| 7 |

-

sdk_version: 1.

|

| 8 |

app_file: app.py

|

| 9 |

pinned: false

|

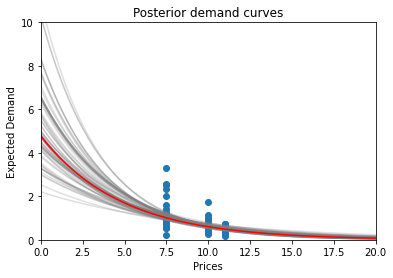

| 10 |

---

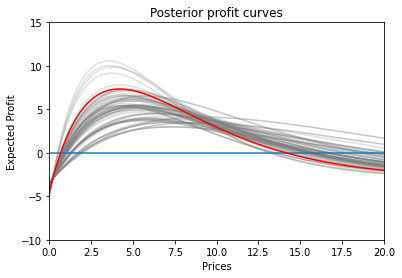

|

| 11 |

|

| 12 |

-

|

|

|

|

|

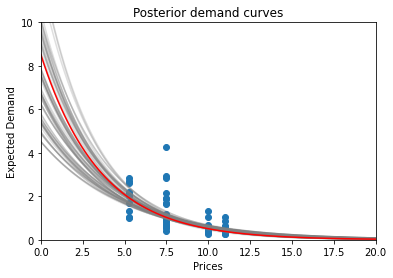

|

|

|

|

| 1 |

---

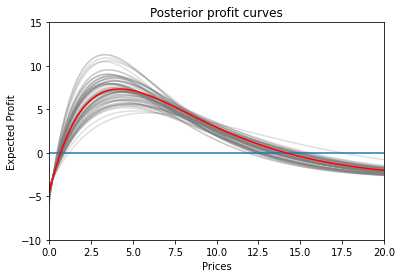

|

| 2 |

+

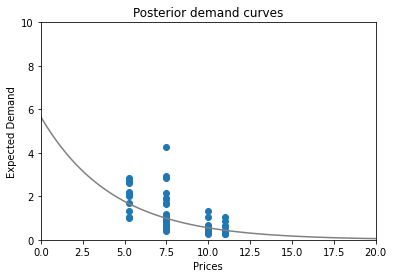

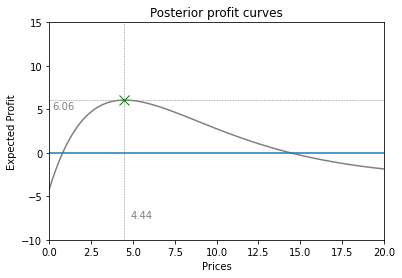

title: 💸 Dynamic Pricing 💸

|

| 3 |

+

emoji: 💸

|

| 4 |

colorFrom: green

|

| 5 |

colorTo: gray

|

| 6 |

sdk: streamlit

|

| 7 |

+

sdk_version: 1.13.0

|

| 8 |

app_file: app.py

|

| 9 |

pinned: false

|

| 10 |

---

|

| 11 |

|

| 12 |

+

This demo will explore the current state-of-the-art in dynamic pricing methods.

|

| 13 |

+

|

| 14 |

+

We will cover the motivation behind dynamic pricing and discuss how we can leverage Bayesian statistics, Thompson sampling and Gaussian processes to derive an optimal price-setting strategy.

|

app.py

ADDED

@@ -0,0 +1,280 @@

```python
"""Streamlit entrypoint"""

import base64
import time

import numpy as np
import streamlit as st
import sympy

from helpers.thompson_sampling import ThompsonSampler

eta, a, p, D, profit, var_cost, fixed_cost = sympy.symbols("eta a p D Profit varcost fixedcost")
np.random.seed(42)

st.set_page_config(
    page_title="💸 Dynamic Pricing 💸",
    page_icon="💸",
    layout="centered",
    initial_sidebar_state="auto",
    menu_items={
        'Get help': None,
        'Report a bug': None,
        'About': "https://www.ml6.eu/",
    }
)

st.title("💸 Dynamic Pricing 💸")
st.subheader("Setting optimal prices with Bayesian stats 📈")

# (1) Intro
st.header("Let's start with the basics 🏁")

st.markdown("The beginning is usually a good place to start so we'll kick things off there.")
st.markdown("""The one crucial piece of information we need to find the optimal price is
**how demand behaves over different price points**. \nIf we can make a decent guess of what we
can expect demand to be for a wide range of prices, we can figure out which price optimizes our target
(i.e., revenue, profit, ...).""")
st.markdown("""For the keen economists amongst you, this is beginning to sound a lot like a
**demand curve**.""")

st.markdown("""Estimating a demand curve sounds easy enough, right? \nLet's assume we have
demand with constant price elasticity; so a certain percent change in price will cause a
constant percent change in demand, independent of the price level. This is often seen as a
reasonable proxy for demand curves in the wild.""")
st.markdown("So our data will look something like this:")
st.image("assets/images/ideal_case_demand.png")
st.markdown("""Alright, now we can get out our trusted regression toolbox and fit a nice curve
through the data because we know that our constant-elasticity demand function looks something
like this:""")
st.latex(sympy.latex(sympy.Eq(sympy.Function(D)(p), a*p**(-eta), evaluate=False)))
st.write("with shape parameter a and price elasticity η")
st.image("assets/images/ideal_case_demand_fitted.png")
st.markdown("""Now that we have a reasonable estimate of our demand, we can derive our expected
profit at different price points because we know the following holds:""")
st.latex(f"{profit} = {p}*{sympy.Function(D)(p)} - [{var_cost}*{sympy.Function(D)(p)} + {fixed_cost}]")
st.image("assets/images/ideal_case_profit_curve.png")
st.markdown("""Finally, we can dust off our good old-fashioned high-school math and find the
price that we expect to maximize profit, which is ultimately the goal of all this.""")
st.image("assets/images/ideal_case_optimal_profit.png")
st.markdown("""Voilà, there you have it: we should price this product at €4.24 and we can expect
a bottom-line profit of 7.34.""")
st.markdown("So can we kick back & relax now? \nWell, there are a few issues with what we just did.")
```
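The intro fits the constant-elasticity curve D(p) = a·p^(-η) with "our trusted regression toolbox" and then picks the price that maximizes profit; the app itself only shows pre-rendered images for these steps. A minimal sketch of one common way to do both, assuming noise-free illustrative data: since log D = log a - η·log p is linear in log p, a least-squares line in log-log space recovers a and η, and profit is then evaluated on a bounded price grid. The helper names and numbers below are hypothetical and not taken from this commit.

```python
import numpy as np


def fit_constant_elasticity(prices, demand):
    """Fit D(p) = a * p**(-eta) via least squares on log D = log a - eta * log p."""
    slope, intercept = np.polyfit(np.log(prices), np.log(demand), deg=1)
    return float(np.exp(intercept)), float(-slope)  # (a, eta)


def expected_profit(price, a, eta, var_cost, fixed_cost):
    """Profit = p * D(p) - (var_cost * D(p) + fixed_cost), as in the app's formula."""
    demand = a * price ** (-eta)
    return price * demand - (var_cost * demand + fixed_cost)


def best_price_on_grid(a, eta, var_cost, fixed_cost, low, high, n=10_000):
    """Return the price in the bounded grid [low, high] with the highest expected profit."""
    grid = np.linspace(low, high, n)
    return float(grid[np.argmax(expected_profit(grid, a, eta, var_cost, fixed_cost))])


# Hypothetical, noise-free observations from a curve with a = 50 and eta = 1.5.
prices = np.array([2.0, 4.0, 6.0, 8.0, 10.0])
demand = 50 * prices ** (-1.5)

a_hat, eta_hat = fit_constant_elasticity(prices, demand)
p_star = best_price_on_grid(a_hat, eta_hat, var_cost=0.2, fixed_cost=3, low=0.1, high=20)
print(a_hat, eta_hat, p_star)
```

The example uses η = 1.5 on purpose: with this profit formula and a positive variable cost, an interior maximum exists only when η > 1, where it sits at p* = var_cost·η/(η - 1) (0.6 here). For a > 0 and 0 < η ≤ 1, profit keeps rising with price, so the bounded grid returns its upper end.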

```python
# (2) Dynamic demand curves
st.header("The demands they are a-changin' 🎸")
st.markdown("""We've got a first bit of bad news: unfortunately, you can't just estimate a demand
curve once and be done with it. \nWhy? Because demand is influenced by many factors (e.g., market
trends, competitor actions, human behavior, etc.) that tend to change a lot over time.""")
st.write("Below you can see an (exaggerated) example of what we're talking about:")

with open("assets/images/dynamic_demand.gif", "rb") as file_:
    contents = file_.read()
data_url = base64.b64encode(contents).decode("utf-8")

st.markdown(
    f'<img src="data:image/gif;base64,{data_url}" alt="dynamic demand">',
    unsafe_allow_html=True,
)
st.markdown("""Now, you may think we can solve this issue by periodically re-estimating the demand
curve. \nAnd you would be very right! But also very wrong, which leads us nicely to the
next issue.""")

# (3) Constrained data
st.header("Where are we getting this data anyways? 🤔")
st.markdown("""So far, we have assumed that we get (and keep getting) data on demand levels at
different price points. \n
Not only is this assumption **unrealistic**, it is also very **undesirable**.""")
st.markdown("""Why? Because getting demand data on a wide spectrum of price points implies that
we are spending a significant amount of time setting prices at levels that are either too high or
too low! \n
Which is ironically exactly the opposite of what we set out to achieve.""")
st.markdown("In practice, our data will look more like this:")
st.image("assets/images/realistic_demand.png")
st.markdown("""As we can see, we have tried three price points in the past (€7.5, €10 and €11) for
which we have collected demand data.""")
st.markdown("""On a side note: keep in mind that we still assume the same latent demand curve and
optimal price point of €4.24. \n
So (for the sake of the example) we have been massively overpricing our product in the past.""")
st.image("assets/images/realistic_demand_latent_curve.png")
st.markdown("""This constrained data does bring a major challenge in estimating the demand curve,
though. \n
Intuitively, it makes sense that we can make a reasonable estimate of expected demand at €8 or €9,
given the observed demand at €7.5 and €10. \nBut can we extrapolate further to €2 or €20 with the
same reasonable confidence?""")
st.markdown("""This is a nice example of a very well-known problem in statistics called the
**\"exploration-exploitation\" trade-off** \n
👉 **Exploration**: We want to explore the demand for a diverse enough range of price points
so that we can accurately estimate our demand curve. \n
👉 **Exploitation**: We want to exploit all the knowledge we have gained through exploring and
actually do what we set out to do: set our price at an optimal level.""")

# (4) Thompson sampling explanation
st.header("Enter: Thompson Sampling 📊")
st.markdown("""As we mentioned, this is a well-known problem in statistics. So luckily for us,
there is a pretty neat solution in the form of **Thompson sampling**!""")
st.markdown("""Basically, instead of estimating one demand function based on the data available to
us, we will estimate a probability distribution of demand functions. Simply put, for every
possible demand function that fits our functional form (i.e., constant elasticity),
we will estimate the probability that it is the correct one, given our data.""")
st.markdown("""Or mathematically speaking, we will place a prior distribution on the parameters
that define our demand function and update these priors to posterior distributions via Bayes' rule,
thus obtaining a posterior distribution for our demand function.""")
st.markdown("""Thompson sampling then entails just sampling a demand function out of this
distribution, calculating the optimal price given this demand function, observing demand for this
new price point and using this information to refine our demand function estimates.""")
st.image("assets/images/flywheel_1.png")
st.markdown("""So: \n
👉 When we are **less certain** of our estimates, we will sample more diverse demand functions,
which means that we will also explore more diverse price points. Thus, we will **explore**. \n
👉 When we are **more certain** of our estimates, we will sample a demand function close to
the real one & set a price close to the optimal price more often. Thus, we will **exploit**.""")

st.markdown("""With that said, we'll take another look at our constrained data and see whether
Thompson sampling gets us any closer to the optimal price of €4.24.""")
st.image("assets/images/realistic_demand_latent_curve.png")
st.markdown("""Let's start working our mathemagic: \n
We'll start off by placing semi-informed priors on the parameters that make up our
demand function.""")

# Raw strings keep LaTeX backslashes (\sim, \lambda) from being read as Python escape sequences
st.latex(rf"{sympy.latex(a)} \sim N(μ=0,σ=2)")
st.latex(rf"{sympy.latex(eta)} \sim N(μ=0.5,σ=0.5)")
st.latex(r"sd \sim Exp(\lambda=1)")
st.latex(rf"{sympy.latex(D)}|P=p \sim N(μ={sympy.latex(a*p**(-eta))},σ=sd)")

st.markdown("""These priors are semi-informed because we have the prior knowledge that
price elasticity is most likely between 0 and 1. As for the other parameters, we have little
knowledge about them so we can place a pretty uninformative prior.""")
st.markdown("If that made sense to you, great. If it didn't, don't worry about it.")

st.markdown("""Now that our priors are taken care of, we can update these beliefs by incorporating
the data at the €7.5, €10 and €11 price levels we have available to us.""")
st.markdown("The resulting demand & profit curve distributions look a little something like this:")
st.image(["assets/images/posterior_demand.png", "assets/images/posterior_profit.png"])

st.markdown("""It's time to sample one demand curve out of this posterior distribution. \n
The lucky curve is:""")
st.image("assets/images/posterior_demand_sample.png")
st.markdown("This results in the following expected profit curve:")
st.image("assets/images/posterior_profit_sample.png")
st.markdown("""And eventually we arrive at a new price: €5.25, which is indeed considerably closer
to the actual optimal price of €4.24.""")
st.markdown("""Now that we have our first updated price point, why stop there? Let's simulate 10
demand data points at this price point from our latent demand curve and check whether Thompson
sampling will edge us even closer to that optimal €4.24 point.""")
st.image("assets/images/updated_prices_demand.png")
st.markdown("""We know the drill by now. \n
Let's recalculate our posteriors with this extra information.""")
st.image(["assets/images/posterior_demand_2.png", "assets/images/posterior_profit_2.png"])
st.markdown("""We immediately notice that the demand (and profit) posteriors are much less spread
out this time around, which implies that we are more confident in our predictions.""")
st.markdown("Now, we can sample just one curve from the distribution:")
st.image(["assets/images/posterior_demand_sample_2.png", "assets/images/posterior_profit_sample_2.png"])
st.markdown("""And finally we arrive at a price point of €4.44, which is eerily close to
the actual optimum of €4.24.""")
```
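Section (4) describes one Thompson-sampling round: update the priors with the observed demand, draw a single demand curve, and price against it. The app's own implementation lives in helpers/thompson_sampling.py, which is not shown in this excerpt, so the sketch below is not that code. It is a minimal, self-contained approximation that reuses the priors printed in the app (a ~ N(0, 2), η ~ N(0.5, 0.5), sd ~ Exp(1)) and the likelihood D | P=p ~ N(a·p^(-η), sd), but swaps in sampling-importance-resampling (weight prior draws by their likelihood, then resample one draw) for whatever posterior sampler the app uses. All function names and the synthetic history are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def sample_prior(n):
    """Draw n parameter sets from the priors shown in the app."""
    a = rng.normal(0.0, 2.0, n)      # a ~ N(0, 2)
    eta = rng.normal(0.5, 0.5, n)    # eta ~ N(0.5, 0.5)
    sd = rng.exponential(1.0, n)     # sd ~ Exp(lambda=1); numpy takes scale = 1 / lambda
    return a, eta, sd


def log_likelihood(a, eta, sd, prices, demand):
    """Gaussian log-likelihood of D | P=p ~ N(a * p**(-eta), sd), up to an additive constant."""
    mu = a[:, None] * prices[None, :] ** (-eta[:, None])
    z = (demand[None, :] - mu) / sd[:, None]
    return np.sum(-0.5 * z**2 - np.log(sd[:, None]), axis=1)


def thompson_step(prices, demand, price_grid, var_cost, fixed_cost, n_draws=50_000):
    """Draw ONE demand curve from an approximate posterior and return its profit-maximizing price."""
    a, eta, sd = sample_prior(n_draws)
    logw = log_likelihood(a, eta, sd, prices, demand)
    w = np.exp(logw - logw.max())            # numerically stabilized importance weights
    i = rng.choice(n_draws, p=w / w.sum())   # resample a single posterior draw
    d = a[i] * price_grid ** (-eta[i])
    profit = price_grid * d - (var_cost * d + fixed_cost)
    return float(price_grid[np.argmax(profit)]), (float(a[i]), float(eta[i]), float(sd[i]))


# Hypothetical history: 10 noisy observations at each of the three past prices.
past_prices = np.repeat([7.5, 10.0, 11.0], 10)
past_demand = 2 * past_prices ** (-0.25) + rng.normal(0, 0.25, past_prices.size)
grid = np.linspace(1.0, 20.0, 500)

next_price, sampled_params = thompson_step(past_prices, past_demand, grid, var_cost=0.2, fixed_cost=3)
print(next_price, sampled_params)
```

Because only one posterior draw is used, repeated calls return different prices: a wide posterior spreads them out (exploration), a narrow one concentrates them (exploitation). For a sampled a > 0 and 0 < η ≤ 1, this profit formula rises with price, so such draws land on the grid's upper bound; the explicit price range is what keeps them in check.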

```python
# (5) Thompson sampling demo
st.header("Demo time 🎮")
st.markdown("Now that we have covered the theory, you can go ahead and try it out for yourself!")

thompson_sampler = ThompsonSampler()
demo_button = st.checkbox(
    label='Ready for the Demo? 🤯',
    help="Starts interactive Thompson sampling demo"
)
elasticity = st.slider(
    "Adjust latent elasticity",
    key="latent_elasticity",
    min_value=0.05,
    max_value=0.95,
    value=0.15,
    step=0.05,
)
while demo_button:
    thompson_sampler.run()
    time.sleep(1)

# (6) Extra topics
st.header("Some final remarks")

st.markdown("""Because we have purposefully kept the example above quite simple, you may still be
wondering what happens when added complexities show up. \n
Let's discuss some of those concerns FAQ-style:""")

st.subheader("👉 Isn't this constant-elasticity model a bit too simple to work in practice?")
st.markdown("Brief answer: usually, yes it is.")
st.markdown("""Luckily, more flexible methods exist. \n
We recommend using Gaussian processes. We won't go into how these work here, but the main idea
is that they don't impose a restrictive functional form onto the demand function but rather let
the data speak for itself.""")

with open("assets/images/gaussian_process.gif", "rb") as file_:
    contents = file_.read()
data_url = base64.b64encode(contents).decode("utf-8")

st.markdown(
    f'<img src="data:image/gif;base64,{data_url}" alt="gaussian process">',
    unsafe_allow_html=True,
)
st.markdown("""If you do want to learn more, we recommend these links:
[1](https://distill.pub/2019/visual-exploration-gaussian-processes/),
[2](https://thegradient.pub/gaussian-process-not-quite-for-dummies/),
[3](https://sidravi1.github.io/blog/2018/05/15/latent-gp-and-binomial-likelihood)""")

st.subheader("""👉 Price optimization is much more complex than just finding the point that maximizes a simple profit function. What about inventory constraints, complex cost structures, ...?""")
st.markdown("""It sure is, but the nice thing about our setup is that it consists of three
components that you can change pretty much independently from each other. \n
This means that you can make the price optimization pillar arbitrarily custom/complex, as long as
it takes in a demand function and spits out a price.""")
st.image("assets/images/flywheel_2.png")
st.markdown("You can tune the other two steps as much as you like too.")
st.image("assets/images/flywheel_3.png")

st.subheader("👉 Changing prices has a huge impact. How can I mitigate this during experimentation?")
st.markdown("There are a few things we can do to minimize risk:")
st.markdown("""👉 **A/B testing**: You can do a gradual roll-out of the new pricing system where a
small (but increasing) percentage of your transactions are based on this new system. This allows you
to start small & track/grow the impact over time.""")
st.markdown("""👉 **Limit products**: Similarly to A/B testing, you can also segment at the
product level. For instance, you can start gradually rolling out dynamic pricing for one product
type and extend this over time.""")
st.markdown("""👉 **Bound price range**: Theoretically, Thompson sampling in its purest form can
lead to any arbitrary price point (albeit with an increasingly low probability). In order to limit
the risk here, you can simply place an upper/lower bound on the price range you are comfortable
experimenting in.""")
st.markdown("""On top of all this, Bayesian methods (by design) explicitly quantify uncertainty.
This gives you a very concrete view on the variance of your demand estimates.""")

st.subheader("👉 What if I have multiple products that can cannibalize each other?")
st.markdown("Here, it really depends.")
st.markdown("""👉 **If you have a handful of products**, we can simply reformulate our objective while
keeping our methods analogous. \n
Instead of tuning one price to optimize profit for the demand function of one product, we tune N
prices to optimize profit for the joint demand function of N products. This joint demand function
can then account for correlations in demand between products.""")
st.markdown("""👉 **If you have hundreds, thousands or more products**, we're sure you can imagine that
the procedure described above becomes increasingly infeasible. \n
A practical alternative is to group substitutable products into "baskets" and define the "price of
the basket" as the average price of all products in the basket. \n
If we assume that the products within a basket are substitutable but the products in different baskets are
not, we can optimize basket prices independently from one another. \n
Finally, if we also assume that cannibalization remains constant if the ratio of prices remains
constant, we can calculate individual product prices as a fixed ratio of their basket price. \n""")
st.markdown("""For example, if a "burger basket" consists of a hamburger (€1) and a cheeseburger
(€3), then the "burger price" is ((€1 + €3) / 2 =) €2. So a hamburger costs 50% of the burger price
and a cheeseburger costs 150% of the burger price. \n
If we change the burger price to €3, a hamburger will cost (50% * €3 =) €1.5 and a cheeseburger
will cost (150% * €3 =) €4.5 because we assume that the cannibalization effect between hamburgers &
cheeseburgers is the same when hamburgers cost €1 & cheeseburgers cost €3 and when hamburgers cost
€1.5 & cheeseburgers cost €4.5.""")
st.image("assets/images/cannibalization.png")

st.subheader("👉 Is dynamic pricing even relevant for slow-selling products?")
st.markdown("""The boring answer is that it depends. It depends on how dynamic the market is, the
quality of the prior information, ...""")
st.markdown("""But obviously this isn't very helpful. \nIn general, we notice that you can already
get quite far with limited data, especially if you have an accurate prior belief on how the demand
likely behaves.""")
st.markdown("""For reference, in our simple example where we showed a Thompson sampling update, we
were already able to gain a lot of confidence in our estimates with just 10 extra demand
observations.""")
```
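The burger-basket arithmetic in the cannibalization answer can be checked in a few lines. A minimal sketch, assuming (as the app text does) that the basket price is the plain mean of the product prices and that each product keeps a fixed ratio to it; the function names are hypothetical.

```python
def basket_ratios(prices):
    """Return the basket price (mean of product prices) and each product's ratio to it."""
    basket_price = sum(prices.values()) / len(prices)
    return basket_price, {name: price / basket_price for name, price in prices.items()}


def reprice_basket(ratios, new_basket_price):
    """Keep every product's ratio fixed and scale it to a new basket price."""
    return {name: ratio * new_basket_price for name, ratio in ratios.items()}


basket_price, ratios = basket_ratios({"hamburger": 1.0, "cheeseburger": 3.0})
print(basket_price, ratios)         # 2.0 {'hamburger': 0.5, 'cheeseburger': 1.5}
print(reprice_basket(ratios, 3.0))  # {'hamburger': 1.5, 'cheeseburger': 4.5}
```

Because the ratios average to 1, the repriced products still average to the new basket price (€3 here), so optimizing one number per basket is enough under these assumptions.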

assets/images/cannibalization.png

ADDED

assets/images/dynamic_demand.gif

ADDED

assets/images/flywheel_1.png

ADDED

assets/images/flywheel_2.png

ADDED

assets/images/flywheel_3.png

ADDED

assets/images/gaussian_process.gif

ADDED

assets/images/ideal_case_demand.png

ADDED

assets/images/ideal_case_demand_fitted.png

ADDED

assets/images/ideal_case_optimal_profit.png

ADDED

assets/images/ideal_case_profit_curve.png

ADDED

assets/images/posterior_demand.png

ADDED

assets/images/posterior_demand_2.png

ADDED

assets/images/posterior_demand_sample.png

ADDED

assets/images/posterior_demand_sample_2.png

ADDED

assets/images/posterior_profit.png

ADDED

assets/images/posterior_profit_2.png

ADDED

assets/images/posterior_profit_sample.png

ADDED

assets/images/posterior_profit_sample_2.png

ADDED

assets/images/realistic_demand.png

ADDED

assets/images/realistic_demand_latent_curve.png

ADDED

assets/images/updated_prices_demand.png

ADDED

assets/precalc_results/posterior_0.05.pkl

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:f67a5aad9591a4c65856ba0a9577e17defdb076631685d2e8dabb186a9d0c46e
size 4284392
```

assets/precalc_results/posterior_0.1.pkl

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:6dcf55382b9dfc8fe527ba19d4a825eb2611e1dbe65689c8b673abb1aae9ffc2
size 4284392
```

assets/precalc_results/posterior_0.15.pkl

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:972b1d1d4c4a4765172b16acab7de44daee92b86c455f62b80d499992b0323cf
size 4284392
```

assets/precalc_results/posterior_0.2.pkl

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:dc48fe2c018588887488477ab37fcb3524d2bfda584119bd2c7d28b4393bc523
size 4284392
```

assets/precalc_results/posterior_0.25.pkl

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:9be07516c741b95ef5b9ecfeeabf8daa09691843ada1aa5da4e99d865285be8c
size 4284392
```

assets/precalc_results/posterior_0.3.pkl

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:859c2422cc36d19441bc176820a3f686a4c44ceaa99466ac3a1daa39704127ff
size 4284392
```

assets/precalc_results/posterior_0.35.pkl

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:bfde23737ffcc92c6623d2a2ea107bdf1e126730573da40a2a72f9fe0c4559fa
size 4284392
```

assets/precalc_results/posterior_0.4.pkl

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:a6d12e42761d4b92c39f6568b1fe473d3ed9a7b590c32c23375ed06b7cbf7ef7
size 4284392
```

assets/precalc_results/posterior_0.45.pkl

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:a258c4695a392a24b090927444c0a3c25aa9508b36c8558b42844552f2e6a980
size 4284392
```

assets/precalc_results/posterior_0.5.pkl

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:f67fd5ca0f744f1f17e725b3efeb2e30b41117e8ed2f8f62ffdc531bff1c669a
size 4284392
```

assets/precalc_results/posterior_0.55.pkl

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:be1fe091349573f37b4b8b8062805e78d860d8e3c3f2899223bad65c1abdb0ba
size 4284392
```

assets/precalc_results/posterior_0.6.pkl

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:58bd0daad50052e0b6b706f642a4acf35ba065437d1181dcf361019e90688adb
size 4284392
```

assets/precalc_results/posterior_0.65.pkl

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:c2f44e9b821e0a302b8a9a869aa649d391a2a64335264425210e6a8a736c3670
size 4284392
```

assets/precalc_results/posterior_0.7.pkl

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:3fead4ab62f4cd4d6f1bff729ee033deca2e8ed7d6b0f9aa68b2371d61d412e1
size 4284392
```

assets/precalc_results/posterior_0.75.pkl

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:aff1524ebc87a8077a2ff2bf999b3561183500009e782c612c7cfda719b8e8c2
size 4284392
```

assets/precalc_results/posterior_0.8.pkl

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:1fd7c5cbe4db7d02635ed89b6a25c61f03d4a8727e399b6a506055bdd3571e8f
size 4284392
```

assets/precalc_results/posterior_0.85.pkl

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:bf6a6249e9946df213688e1d89325a1e69d9f74372c2b759269b6552ad8a15df
size 4284392
```

assets/precalc_results/posterior_0.9.pkl

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:370a91ed077fd4e89b0fe576b9427731181d07d00d861a57b032ad4e317d0754
size 4284392
```

assets/precalc_results/posterior_0.95.pkl

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:bc69a5b466f2a5d6a1797617ced35cf6544285d44930d84c9a534af070ba60e6
size 4284392
```
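Each `posterior_*.pkl` entry above is committed as a Git LFS pointer: three lines giving the spec `version`, the object `oid` and the object `size` in bytes (4284392 here). If a checkout contains only the pointer and not the LFS object, `pickle.load` in `ThompsonSampler.update_posteriors` receives this text instead of pickle data and fails, typically with an `invalid load key, 'v'` error. The sketch below shows one way to detect that case and raise a clearer error. It uses a hypothetical `parse_lfs_pointer` helper that is not part of this commit. It assumes the pointer layout shown above and a 1024-byte size cutoff, which matches how small LFS pointer files are expected to be.

```python
from pathlib import Path
from typing import Optional

LFS_VERSION = "https://git-lfs.github.com/spec/v1"


def parse_lfs_pointer(data: bytes) -> Optional[dict]:
    """Return pointer fields if `data` looks like a Git LFS pointer, else None."""
    # Pointers are tiny UTF-8 text files; real pickles here are ~4 MB of binary.
    if len(data) > 1024:
        return None
    try:
        text = data.decode("utf-8")
    except UnicodeDecodeError:
        return None
    fields = {}
    for line in text.splitlines():
        if not line:
            continue
        key, sep, value = line.partition(" ")
        if not sep:  # every pointer line is "key value"
            return None
        fields[key] = value
    if fields.get("version") != LFS_VERSION or "oid" not in fields or "size" not in fields:
        return None
    algo, _, digest = fields["oid"].partition(":")
    return {"oid_algo": algo, "oid": digest, "size": int(fields["size"])}


def check_pickle_file(path: str) -> None:
    """Hypothetical guard: raise a readable error if `path` is an un-fetched pointer."""
    pointer = parse_lfs_pointer(Path(path).read_bytes())
    if pointer is not None:
        raise RuntimeError(
            f"{path} is a Git LFS pointer ({pointer['size']} bytes expected); "
            "fetch the LFS objects before unpickling it."
        )
```

Calling `check_pickle_file(...)` just before `pickle.load` turns an opaque unpickling error into a message that names the missing LFS object.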

config.py

ADDED

@@ -0,0 +1,8 @@

```python
"""Configuration variables"""

FIXED_COST = 3
VARIABLE_COST = 0.2

LATENT_ELASTICITY = 0.25
LATENT_SHAPE = 2
LATENT_STDEV = 0.25
```
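These constants imply a closed-form profit-maximising price for the noise-free latent demand curve used by `calc_latent_demand` and `calc_optimal_price`. The derivation below is an illustrative check, not part of the commit. It treats price as continuous. Write $\varepsilon$ = `LATENT_ELASTICITY`, $s$ = `LATENT_SHAPE`, $v$ = `VARIABLE_COST` and $F$ = `FIXED_COST`:

$$
\begin{aligned}
d(p) &= \exp(-\varepsilon p + s), \qquad d'(p) = -\varepsilon\, d(p) \\
\pi(p) &= p\, d(p) - \bigl(v\, d(p) + F\bigr) = (p - v)\, d(p) - F \\
\pi'(p) &= d(p) + (p - v)\, d'(p) = d(p)\,\bigl[1 - \varepsilon (p - v)\bigr] = 0
\;\Longrightarrow\; p^{*} = v + \frac{1}{\varepsilon} \\
\pi''(p^{*}) &= -\varepsilon\, d(p^{*}) < 0 \quad (\text{so } p^{*} \text{ is a maximum}) \\
p^{*} &= 0.2 + \frac{1}{0.25} = 4.2
\end{aligned}
$$

The fixed cost drops out of $p^{*}$. The app takes an argmax over `np.linspace(0, 20, 100)` instead, whose spacing is $20/99 \approx 0.202$, so the red `latent_price` line should sit within one grid step of 4.2.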

helpers/thompson_sampling.py

ADDED

@@ -0,0 +1,125 @@

```python
"""Helper file for Thompson sampling"""

import pickle
import random

import matplotlib.pyplot as plt
import numpy as np
import streamlit as st

import config as cfg

random.seed(42)

class ThompsonSampler:
    def __init__(self):
        self.placeholder = st.empty()

        self.latent_elasticity = cfg.LATENT_ELASTICITY
        self.price_observations = np.concatenate(
            [np.repeat(10,10), np.repeat(7.5,25), np.repeat(11,15)]
        )
        self.update_demand_observations()

        self.possible_prices = np.linspace(0, 20, 100)
        self.price_samples = []
        self.latent_demand = self.calc_latent_demand()
        self.latent_price = self.calc_optimal_price(self.latent_demand, sample=False)
        self.update_posteriors()

    def update_demand_observations(self):
        self.demand_observations = np.exp(
            np.random.normal(
                loc=-self.latent_elasticity*self.price_observations+cfg.LATENT_SHAPE,
                scale=cfg.LATENT_STDEV,
            )
        )

    def update_elasticity(self):
        self.latent_elasticity = st.session_state.latent_elasticity
        self.price_samples = []
        self.latent_demand = self.calc_latent_demand()
        self.update_demand_observations()
        self.latent_price = self.calc_optimal_price(self.latent_demand, sample=False)
        self.update_posteriors(samples=75)
        self.create_plots()

    def create_plots(self, highlighted_sample=None):
        with self.placeholder.container():
            posterior_plot, price_plot = st.columns(2)
            with posterior_plot:
                st.markdown("## Demands")
                fig = self.create_posteriors_plot(highlighted_sample)
                st.write(fig)
                plt.close(fig)
            with price_plot:
                st.markdown("## Prices")
                fig = self.create_price_plot()
                st.write(fig)
                plt.close(fig)

    def create_price_plot(self):
        fig = plt.figure()
        plt.xlabel("Price")
        plt.yticks(color='w')

        price_distr = [self.calc_optimal_price(post_demand, sample=False)
                       for post_demand in self.posterior]
        plt.violinplot(price_distr, vert=False, showextrema=False)

        for price in self.price_samples:
            plt.plot(price, 1, marker='o', markersize = 5, color='grey')

        plt.axhline(1, color='black')
        plt.axvline(self.latent_price, 0, color='red')

        return fig

    def create_posteriors_plot(self, highlighted_sample=None):
        fig = plt.figure()
        plt.xlabel("Price")
        plt.ylabel("Demand")
        plt.xlim(0,20)
        plt.ylim(0,10)

        plt.scatter(self.price_observations, self.demand_observations)
        plt.plot(self.possible_prices, self.latent_demand, color="red")

        for posterior_sample in self.posterior_samples:
            plt.plot(self.possible_prices, posterior_sample, color="grey", alpha=0.15)
        if highlighted_sample is not None:
            plt.plot(self.possible_prices, highlighted_sample, color="black")
        return fig

    def calc_latent_demand(self):
        return np.exp(
            -self.latent_elasticity*self.possible_prices + cfg.LATENT_SHAPE
        )

    @staticmethod
    @np.vectorize
    def _cost(demand):
        return cfg.VARIABLE_COST*demand + cfg.FIXED_COST

    def calc_optimal_price(self, sampled_demand, sample=False):
        revenue = self.possible_prices * sampled_demand
        profit = revenue - self._cost(sampled_demand)
        optimal_price = self.possible_prices[np.argmax(profit)]
        if sample:
            self.price_samples.append(optimal_price)
        return optimal_price

    def update_posteriors(self, samples=75):
        with open(f"assets/precalc_results/posterior_{self.latent_elasticity}.pkl", "rb") as post:
            self.posterior = pickle.load(post)
        self.posterior_samples = random.sample(self.posterior, samples)

    def pick_posterior(self):
        posterior_sample = random.choice(self.posterior_samples)
        self.calc_optimal_price(posterior_sample, sample=True)
        self.create_plots(highlighted_sample=posterior_sample)

    def run(self):
        if st.session_state.latent_elasticity != self.latent_elasticity:
            self.update_elasticity()
        self.pick_posterior()
```
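`ThompsonSampler` is tied to Streamlit state and to the pickled posteriors. The sketch below isolates the step that `pick_posterior` and `calc_optimal_price` perform together: draw one posterior demand curve at random, then act greedily on it by taking the grid price that maximises profit for that curve. It stands in for the pickled posterior with synthetic curves whose elasticity and shape are drawn from normal distributions. That is an assumption for illustration, since the real curves come from the PyMC3 trace. The helper names `optimal_price`, `fake_posterior` and `thompson_prices` are hypothetical, and the constants are copied from `config.py`.

```python
import random

import numpy as np

# Constants copied from config.py in this commit.
FIXED_COST = 3
VARIABLE_COST = 0.2
LATENT_ELASTICITY = 0.25
LATENT_SHAPE = 2

possible_prices = np.linspace(0, 20, 100)


def optimal_price(demand_curve):
    """Grid argmax of profit = price * demand - (variable cost * demand + fixed cost)."""
    # The app wraps _cost in np.vectorize; plain array arithmetic gives the same
    # values here because the cost is elementwise linear in demand.
    profit = possible_prices * demand_curve - (VARIABLE_COST * demand_curve + FIXED_COST)
    return possible_prices[np.argmax(profit)]


def fake_posterior(n_curves=500, seed=0):
    """Hypothetical stand-in for a pickled posterior: a list of demand curves."""
    rng = np.random.default_rng(seed)
    elas = rng.normal(-LATENT_ELASTICITY, 0.03, size=n_curves)
    shape = rng.normal(LATENT_SHAPE, 0.2, size=n_curves)
    return [np.exp(e * possible_prices + s) for e, s in zip(elas, shape)]


def thompson_prices(posterior, rounds=200, seed=42):
    """One Thompson-sampling price per round: sample a curve, then act greedily on it."""
    rnd = random.Random(seed)
    return [optimal_price(rnd.choice(posterior)) for _ in range(rounds)]


latent_demand = np.exp(-LATENT_ELASTICITY * possible_prices + LATENT_SHAPE)
latent_price = optimal_price(latent_demand)
chosen = thompson_prices(fake_posterior())
print(f"latent optimum: {latent_price:.2f}, mean sampled price: {np.mean(chosen):.2f}")
```

The sampled prices spread around the latent optimum roughly as widely as the posterior is uncertain about elasticity. This spread is what the app's violin plot visualises. Note that, as in the app, the posterior is never updated with the newly chosen prices.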

requirements.txt

ADDED

@@ -0,0 +1,3 @@

```
pymc3==3.11.5
streamlit==1.13.0
sympy==1.10.1
```

scripts/generate_posterior.py

ADDED

@@ -0,0 +1,33 @@

```python
"""Script that generates, pickles and stores posterior data"""

import pickle

import numpy as np

import config as cfg
from scripts.posterior import PosteriorGenerator

np.random.seed(42)

if __name__ == '__main__':
    demo_prices = np.concatenate([np.repeat(10,10), np.repeat(7.5,25), np.repeat(11,15)])
    possible_prices = np.linspace(0,20,100)

    for el in [x/100 for x in range(5,100,5)]:
        demo_demands = np.exp(
            np.random.normal(
                loc=-el*demo_prices+cfg.LATENT_SHAPE,
                scale=cfg.LATENT_STDEV,
            )
        )
        ts = PosteriorGenerator(prices=demo_prices, demands=demo_demands)
        posterior = ts.calc_posterior(samples=5000)
        post_demand_samples = []
        for idx in range(len(posterior)):
            elas = posterior.get_values("elas")[idx]
            shape = posterior.get_values("shape")[idx]
            post_demand_sample = np.exp(elas*possible_prices + shape)
            post_demand_samples.append(post_demand_sample)

        with open(f"assets/precalc_results/posterior_{el}.pkl", 'wb') as f:
            pickle.dump(post_demand_samples, f)
```
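The inner loop above calls `posterior.get_values(...)` once per draw and iterates over `range(len(posterior))`. In PyMC3, `len()` of a `MultiTrace` counts draws per chain, while `get_values` concatenates all chains by default. The loop therefore appears to keep only one chain's worth of draws; this is worth verifying against the installed PyMC3 version. The sketch below is a vectorised alternative. It assumes `elas` and `shape` are 1-D NumPy arrays such as those `get_values` returns, and it builds every curve in one broadcast expression. The helper name `demand_curves` and the stand-in draws are hypothetical.

```python
import numpy as np


def demand_curves(elas, shape, prices):
    """Return an (n_draws, n_prices) array with exp(elas * price + shape).

    Row i equals np.exp(elas[i] * prices + shape[i]), the same curve the
    per-draw loop in generate_posterior.py builds.
    """
    elas = np.asarray(elas, dtype=float)
    shape = np.asarray(shape, dtype=float)
    # np.outer gives elas[i] * prices[j]; shape[:, None] broadcasts across prices.
    return np.exp(np.outer(elas, prices) + shape[:, None])


possible_prices = np.linspace(0, 20, 100)
# Stand-in draws; in the script these would be posterior.get_values("elas") etc.
rng = np.random.default_rng(42)
elas_draws = rng.normal(-0.25, 0.02, size=1000)
shape_draws = rng.normal(2.0, 0.1, size=1000)
curves = demand_curves(elas_draws, shape_draws, possible_prices)
post_demand_samples = list(curves)  # list of 1-D arrays, like the pickled object
```

The result is converted back to a list on purpose. `ThompsonSampler.update_posteriors` calls `random.sample` on the unpickled object, and a plain NumPy array is not a `collections.abc.Sequence`, so `random.sample` would reject it.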

scripts/posterior.py

ADDED

@@ -0,0 +1,19 @@

```python
"""File for updating prior into posterior"""

import pymc3 as pm

class PosteriorGenerator:
    def __init__(self, prices, demands):
        self.price_observations = prices
        self.demand_observations = demands

    def calc_posterior(self, samples=1000):
        with pm.Model():
            elas = pm.Normal("elas",mu=-0.5, sd=0.5)
            shape = pm.Normal("shape",mu=0, sd=2)
            stdev = pm.Exponential("stdev",lam=1)
            y_hat = pm.math.dot(elas, self.price_observations) + shape
            log_observations = pm.math.log(self.demand_observations)
            _ = pm.Normal("demand", mu=y_hat, observed=log_observations, sigma=stdev)
            trace = pm.sample(samples)
        return trace
```
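`calc_posterior` fits a log-linear model, log(demand) ~ Normal(elas * price + shape, stdev), by MCMC with `pm.sample`. For intuition, or for a quick check without PyMC3, the sketch below swaps in a different technique: conjugate Bayesian linear regression with the noise standard deviation fixed at `LATENT_STDEV = 0.25`, instead of the commit's `Exponential(1)` prior on `stdev`. With that simplification, the same Normal priors on `elas` (mean -0.5, sd 0.5) and `shape` (mean 0, sd 2) give a Gaussian posterior in closed form. The function name `conjugate_posterior` is hypothetical.

```python
import numpy as np


def conjugate_posterior(prices, demands, noise_sd=0.25,
                        prior_mean=(-0.5, 0.0), prior_sd=(0.5, 2.0)):
    """Gaussian posterior over (elas, shape) for log(demand) = elas*price + shape + noise.

    Assumes a known noise_sd (the PyMC3 model instead learns it with an
    Exponential(1) prior), so the posterior is exact and needs no sampling.
    """
    prices = np.asarray(prices, dtype=float)
    y = np.log(np.asarray(demands, dtype=float))
    X = np.column_stack([prices, np.ones_like(prices)])  # columns: elas, shape
    prior_prec = np.diag(1.0 / np.square(prior_sd))
    post_cov = np.linalg.inv(prior_prec + X.T @ X / noise_sd**2)
    post_mean = post_cov @ (prior_prec @ np.asarray(prior_mean) + X.T @ y / noise_sd**2)
    return post_mean, post_cov


# Synthetic data generated the same way as in generate_posterior.py (elasticity 0.25).
rng = np.random.default_rng(42)
demo_prices = np.concatenate([np.repeat(10, 10), np.repeat(7.5, 25), np.repeat(11, 15)])
demo_demands = np.exp(rng.normal(loc=-0.25 * demo_prices + 2, scale=0.25))
mean, cov = conjugate_posterior(demo_prices, demo_demands)
draws = rng.multivariate_normal(mean, cov, size=5000)  # columns: elas, shape
```

Passing `draws[:, 0]` and `draws[:, 1]` through `np.exp(elas * possible_prices + shape)` yields demand curves of the same form the script pickles. Because the noise level is treated as known, these curves may be slightly less spread out than PyMC3's.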