
---
title: GPT From Scratch
emoji: ⚡
colorFrom: indigo
colorTo: pink
sdk: gradio
sdk_version: 4.4.0
app_file: app.py
pinned: false
license: mit
---

# GPT from scratch

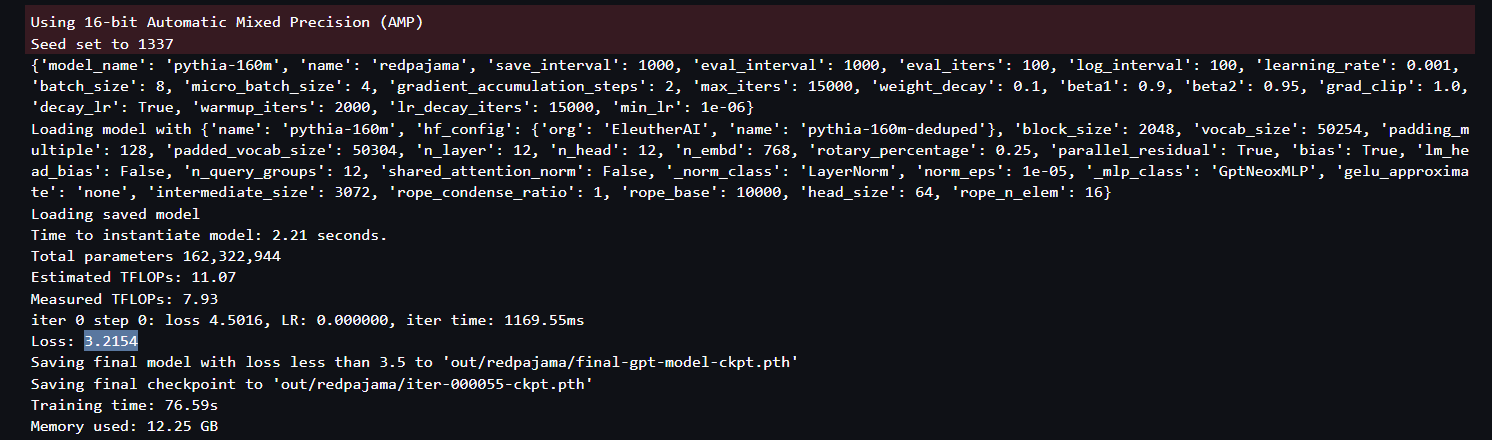

This repo contains code to train a GPT from scratch. The training data comes from the [RedPajama-Data-1T sample](https://huggingface.co/datasets/togethercomputer/RedPajama-Data-1T-Sample); only a subset of its samples is used for training. The transformer implementation closely follows [LitGPT](https://github.com/Lightning-AI/lit-gpt).
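The core operation of a GPT-style decoder is causal self-attention: each token attends only to itself and to earlier tokens. The following is a minimal single-head sketch in plain Python, not the code in this repo. It omits batching, multiple heads, and rotary position embeddings, all of which LitGPT's PyTorch implementation includes. The function names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python product of an (n x k) matrix and a (k x m) matrix."""
    return [
        [sum(a[i][p] * b[p][j] for p in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the row max for numerical stability; masked -inf entries become 0.
    mx = max(row)
    exps = [math.exp(v - mx) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Matrix:
    """Hypothetical single-head causal attention over a sequence of embeddings x."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_head = len(w_q[0])
    scores = matmul(q, transpose(k))  # scores[t][j] = q_t . k_j
    weights = []
    for t, row in enumerate(scores):
        # Scale by 1/sqrt(d_head) and mask future positions (j > t) with -inf.
        masked = [s / math.sqrt(d_head) if j <= t else -math.inf for j, s in enumerate(row)]
        weights.append(softmax(masked))
    return matmul(weights, v)  # each output row is a weighted mix of past values


if __name__ == "__main__":
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token embeddings, d_model = 2
    eye = [[1.0, 0.0], [0.0, 1.0]]            # identity projections for readability
    for row in causal_self_attention(x, eye, eye, eye):
        print([round(val, 3) for val in row])
```

Because of the mask, the first token's output is simply its own value vector. Changing a later token never affects the outputs at earlier positions, which is what allows next-token training on every position of a sequence at once.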

The trained model has about 160M parameters and reached a final training loss of 3.2154.
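One way to sanity-check the "about 160M" figure is to count parameters from a model configuration. This sketch assumes a Pythia-160M-style GPT-NeoX configuration, which is one of LitGPT's presets: vocabulary padded to 50,304, 768-dimensional embeddings, 12 layers, a 3,072-dimensional MLP, biased linear layers, LayerNorm, and an untied output head. These values are assumptions, because the README does not state the exact config. Rotary position embeddings add no learned parameters, so none are counted.

```python
def gpt_neox_param_count(vocab_size: int, n_embd: int, n_layer: int,
                         intermediate_size: int, tied_lm_head: bool = False) -> int:
    """Count parameters of a GPT-NeoX-style decoder (assumed architecture)."""
    d = n_embd
    embedding = vocab_size * d                          # token embedding table
    layer_norm = 2 * d                                  # LayerNorm weight + bias
    attn_qkv = d * 3 * d + 3 * d                        # fused Q/K/V projection with bias
    attn_proj = d * d + d                               # attention output projection
    mlp_fc = d * intermediate_size + intermediate_size  # MLP up-projection
    mlp_proj = intermediate_size * d + d                # MLP down-projection
    per_layer = 2 * layer_norm + attn_qkv + attn_proj + mlp_fc + mlp_proj
    final_norm = layer_norm
    lm_head = 0 if tied_lm_head else vocab_size * d     # output head, assumed bias-free
    return embedding + n_layer * per_layer + final_norm + lm_head


# Assumed Pythia-160M-style values, not confirmed for this repo.
total = gpt_neox_param_count(vocab_size=50304, n_embd=768, n_layer=12, intermediate_size=3072)
print(f"{total:,}")  # 162,322,944
```

With these assumptions the count comes to roughly 162M, which matches "about 160M". About 77M of that is in the two vocabulary-sized matrices. Tying the embedding and output head would bring the total to roughly 124M.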


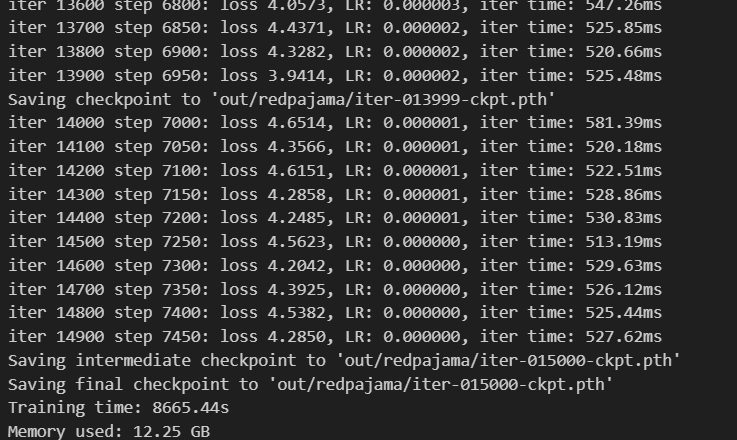

Training details are in the attached notebooks. The initial training run was stopped when the loss reached about 4.


Training was then resumed from the saved checkpoint and stopped once the loss fell below 3.5.
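The loss values above are easier to interpret as perplexities. This sketch assumes the reported numbers are mean per-token cross-entropy in nats, which is what PyTorch's cross-entropy loss computes by default. Under that assumption, perplexity is `exp(loss)`: roughly the number of equally likely next tokens the model is choosing between.

```python
import math


def perplexity(mean_ce_loss_nats: float) -> float:
    """Perplexity from mean per-token cross-entropy, assuming natural-log (nats) loss."""
    return math.exp(mean_ce_loss_nats)


# Loss values mentioned in this README: first stop, resume threshold, final loss.
for loss in (4.0, 3.5, 3.2154):
    print(f"loss {loss:.4f} -> perplexity {perplexity(loss):.1f}")
# loss 4.0000 -> perplexity 54.6
# loss 3.5000 -> perplexity 33.1
# loss 3.2154 -> perplexity 24.9
```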

GitHub link: https://github.com/mkthoma/gpt_from_scratch