Jacob Gershon committed 59a9ccf (0 parents): new b

This view is limited to 50 files because it contains too many changes. See the raw diff for the complete change set.

- .gitattributes +34 -0
- LICENSE +21 -0
- README.md +17 -0
- app.py +404 -0
- examples/aa_weights.json +22 -0
- examples/binder_design.sh +16 -0
- examples/loop_design.sh +15 -0
- examples/motif_scaffolding.sh +14 -0
- examples/out/design_000000.pdb +0 -0
- examples/out/design_000000.trb +0 -0
- examples/partial_diffusion.sh +15 -0
- examples/pdbs/G12D_manual_mut.pdb +0 -0
- examples/pdbs/cd86.pdb +0 -0
- examples/pdbs/rsv5_5tpn.pdb +0 -0
- examples/secondary_structure.sh +21 -0
- examples/secondary_structure_bias.sh +15 -0
- examples/secondary_structure_from_pdb.sh +21 -0
- examples/symmetric_design.sh +16 -0
- examples/weighted_sequence.sh +15 -0
- examples/weighted_sequence_json.sh +16 -0
- model/.ipynb_checkpoints/RoseTTAFoldModel-checkpoint.py +140 -0
- model/Attention_module.py +411 -0
- model/AuxiliaryPredictor.py +92 -0
- model/Embeddings.py +307 -0
- model/RoseTTAFoldModel.py +140 -0
- model/SE3_network.py +83 -0
- model/Track_module.py +476 -0
- model/__pycache__/Attention_module.cpython-310.pyc +0 -0
- model/__pycache__/AuxiliaryPredictor.cpython-310.pyc +0 -0
- model/__pycache__/Embeddings.cpython-310.pyc +0 -0
- model/__pycache__/RoseTTAFoldModel.cpython-310.pyc +0 -0
- model/__pycache__/SE3_network.cpython-310.pyc +0 -0
- model/__pycache__/Track_module.cpython-310.pyc +0 -0
- model/__pycache__/ab_tools.cpython-310.pyc +0 -0
- model/__pycache__/apply_masks.cpython-310.pyc +0 -0
- model/__pycache__/arguments.cpython-310.pyc +0 -0
- model/__pycache__/chemical.cpython-310.pyc +0 -0
- model/__pycache__/data_loader.cpython-310.pyc +0 -0
- model/__pycache__/diffusion.cpython-310.pyc +0 -0
- model/__pycache__/kinematics.cpython-310.pyc +0 -0
- model/__pycache__/loss.cpython-310.pyc +0 -0
- model/__pycache__/mask_generator.cpython-310.pyc +0 -0
- model/__pycache__/parsers.cpython-310.pyc +0 -0
- model/__pycache__/scheduler.cpython-310.pyc +0 -0
- model/__pycache__/scoring.cpython-310.pyc +0 -0
- model/__pycache__/train_multi_deep.cpython-310.pyc +0 -0
- model/__pycache__/train_multi_deep_selfcond_nostruc.cpython-310.pyc +0 -0
- model/__pycache__/util.cpython-310.pyc +0 -0
- model/__pycache__/util_module.cpython-310.pyc +0 -0
- model/apply_masks.py +196 -0

.gitattributes

ADDED

|

@@ -0,0 +1,34 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

*.7z filter=lfs diff=lfs merge=lfs -text

|

| 2 |

+

*.arrow filter=lfs diff=lfs merge=lfs -text

|

| 3 |

+

*.bin filter=lfs diff=lfs merge=lfs -text

|

| 4 |

+

*.bz2 filter=lfs diff=lfs merge=lfs -text

|

| 5 |

+

*.ckpt filter=lfs diff=lfs merge=lfs -text

|

| 6 |

+

*.ftz filter=lfs diff=lfs merge=lfs -text

|

| 7 |

+

*.gz filter=lfs diff=lfs merge=lfs -text

|

| 8 |

+

*.h5 filter=lfs diff=lfs merge=lfs -text

|

| 9 |

+

*.joblib filter=lfs diff=lfs merge=lfs -text

|

| 10 |

+

*.lfs.* filter=lfs diff=lfs merge=lfs -text

|

| 11 |

+

*.mlmodel filter=lfs diff=lfs merge=lfs -text

|

| 12 |

+

*.model filter=lfs diff=lfs merge=lfs -text

|

| 13 |

+

*.msgpack filter=lfs diff=lfs merge=lfs -text

|

| 14 |

+

*.npy filter=lfs diff=lfs merge=lfs -text

|

| 15 |

+

*.npz filter=lfs diff=lfs merge=lfs -text

|

| 16 |

+

*.onnx filter=lfs diff=lfs merge=lfs -text

|

| 17 |

+

*.ot filter=lfs diff=lfs merge=lfs -text

|

| 18 |

+

*.parquet filter=lfs diff=lfs merge=lfs -text

|

| 19 |

+

*.pb filter=lfs diff=lfs merge=lfs -text

|

| 20 |

+

*.pickle filter=lfs diff=lfs merge=lfs -text

|

| 21 |

+

*.pkl filter=lfs diff=lfs merge=lfs -text

|

| 22 |

+

*.pt filter=lfs diff=lfs merge=lfs -text

|

| 23 |

+

*.pth filter=lfs diff=lfs merge=lfs -text

|

| 24 |

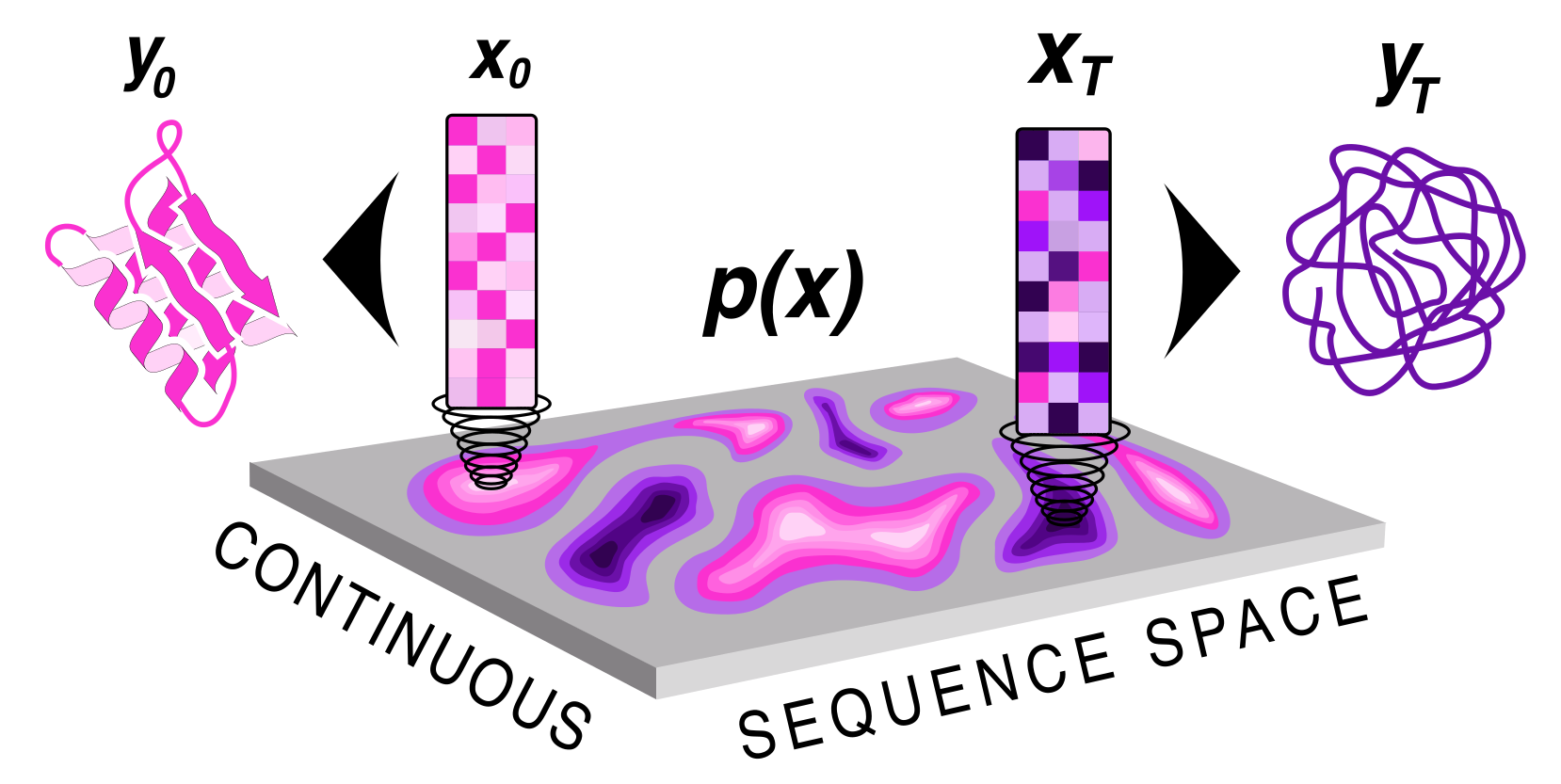

+

*.rar filter=lfs diff=lfs merge=lfs -text

|

| 25 |

+

*.safetensors filter=lfs diff=lfs merge=lfs -text

|

| 26 |

+

saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

| 27 |

+

*.tar.* filter=lfs diff=lfs merge=lfs -text

|

| 28 |

+

*.tflite filter=lfs diff=lfs merge=lfs -text

|

| 29 |

+

*.tgz filter=lfs diff=lfs merge=lfs -text

|

| 30 |

+

*.wasm filter=lfs diff=lfs merge=lfs -text

|

| 31 |

+

*.xz filter=lfs diff=lfs merge=lfs -text

|

| 32 |

+

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 33 |

+

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 34 |

+

*tfevents* filter=lfs diff=lfs merge=lfs -text

|
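To see which repository paths these rules would route through Git LFS, the following Python sketch approximates the matching. It is an illustration only: it uses `fnmatch.fnmatchcase`, whose `*` also matches `/` (Git's does not), so treat the result as a rough check; `git check-attr filter -- <path>` gives Git's authoritative answer. The names `LFS_PATTERNS` and `is_lfs_tracked` are hypothetical.

```python
from fnmatch import fnmatchcase

# The 34 patterns from the .gitattributes file above (all use filter=lfs).
LFS_PATTERNS = [
    "*.7z", "*.arrow", "*.bin", "*.bz2", "*.ckpt", "*.ftz", "*.gz", "*.h5",
    "*.joblib", "*.lfs.*", "*.mlmodel", "*.model", "*.msgpack", "*.npy",
    "*.npz", "*.onnx", "*.ot", "*.parquet", "*.pb", "*.pickle", "*.pkl",
    "*.pt", "*.pth", "*.rar", "*.safetensors", "saved_model/**/*", "*.tar.*",
    "*.tflite", "*.tgz", "*.wasm", "*.xz", "*.zip", "*.zst", "*tfevents*",
]


def is_lfs_tracked(path: str) -> bool:
    """Approximate check. Patterns without a slash are matched against the
    basename (Git applies them at any directory level); patterns containing
    a slash are matched against the full path, which only approximates
    Git's `**` semantics."""
    basename = path.rsplit("/", 1)[-1]
    for pattern in LFS_PATTERNS:
        target = path if "/" in pattern else basename
        if fnmatchcase(target, pattern):
            return True
    return False


if __name__ == "__main__":
    for p in ["SEQDIFF_221219_equalTASKS_nostrSELFCOND_mod30.pt",
              "examples/pdbs/cd86.pdb"]:
        print(p, is_lfs_tracked(p))
```

With these rules, a PyTorch checkpoint such as the `.pt` files that `app.py` downloads would be stored via LFS if committed, while the example `.pdb` files would not.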

LICENSE

ADDED

@@ -0,0 +1,21 @@

```text
MIT License

Copyright (c) 2023 RosettaCommons

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.
```

README.md

ADDED

@@ -0,0 +1,17 @@

```markdown
---
title: PROTEIN GENERATOR
emoji: 🧪
thumbnail: http://files.ipd.uw.edu/pub/sequence_diffusion/figs/diffusion_landscape.png
colorFrom: blue
colorTo: purple
sdk: gradio
sdk_version: 3.24.1
app_file: app.py
pinned: false
---



## Code Accessibility

To download the code and for more details, please visit the [GitHub repository](https://github.com/RosettaCommons/protein_generator)!
```

app.py

ADDED

@@ -0,0 +1,404 @@

```python
import os,sys

# install environment goods
#os.system("pip -q install dgl -f https://data.dgl.ai/wheels/cu113/repo.html")
os.system('pip install dgl==1.0.2+cu116 -f https://data.dgl.ai/wheels/cu116/repo.html')
#os.system('pip install gradio')
os.environ["DGLBACKEND"] = "pytorch"
#os.system(f'pip install -r ./PROTEIN_GENERATOR/requirements.txt')
print('Modules installed')

os.system('pip install --force gradio==3.28.3')

os.environ["DGLBACKEND"] = "pytorch"

if not os.path.exists('./SEQDIFF_230205_dssp_hotspots_25mask_EQtasks_mod30.pt'):
    print('Downloading model weights 1')
    os.system('wget http://files.ipd.uw.edu/pub/sequence_diffusion/checkpoints/SEQDIFF_230205_dssp_hotspots_25mask_EQtasks_mod30.pt')
    print('Successfully Downloaded')

if not os.path.exists('./SEQDIFF_221219_equalTASKS_nostrSELFCOND_mod30.pt'):
    print('Downloading model weights 2')
    os.system('wget http://files.ipd.uw.edu/pub/sequence_diffusion/checkpoints/SEQDIFF_221219_equalTASKS_nostrSELFCOND_mod30.pt')
    print('Successfully Downloaded')

import numpy as np
import gradio as gr
import py3Dmol
from io import StringIO
import json
import secrets
import copy
import matplotlib.pyplot as plt
from utils.sampler import HuggingFace_sampler

plt.rcParams.update({'font.size': 13})

with open('./tmp/args.json','r') as f:
    args = json.load(f)

# manually set checkpoint to load
args['checkpoint'] = None
args['dump_trb'] = False
args['dump_args'] = True
args['save_best_plddt'] = True
args['T'] = 25
args['strand_bias'] = 0.0
args['loop_bias'] = 0.0
args['helix_bias'] = 0.0



def protein_diffusion_model(sequence, seq_len, helix_bias, strand_bias, loop_bias,
                            secondary_structure, aa_bias, aa_bias_potential,
                            #target_charge, target_ph, charge_potential,
                            num_steps, noise, hydrophobic_target_score, hydrophobic_potential):

    dssp_checkpoint = './SEQDIFF_230205_dssp_hotspots_25mask_EQtasks_mod30.pt'
    og_checkpoint = './SEQDIFF_221219_equalTASKS_nostrSELFCOND_mod30.pt'

    model_args = copy.deepcopy(args)

    # make sampler
    S = HuggingFace_sampler(args=model_args)

    # get random prefix
    S.out_prefix = './tmp/'+secrets.token_hex(nbytes=10).upper()

    # set args
    S.args['checkpoint'] = None
    S.args['dump_trb'] = False
    S.args['dump_args'] = True
    S.args['save_best_plddt'] = True
    S.args['T'] = 20
    S.args['strand_bias'] = 0.0
    S.args['loop_bias'] = 0.0
    S.args['helix_bias'] = 0.0
    S.args['potentials'] = None
    S.args['potential_scale'] = None
    S.args['aa_composition'] = None


    # get sequence if entered and make sure all chars are valid
    alt_aa_dict = {'B':['D','N'],'J':['I','L'],'U':['C'],'Z':['E','Q'],'O':['K']}
    if sequence not in ['',None]:
        L = len(sequence)
        aa_seq = []
        for aa in sequence.upper():
            if aa in alt_aa_dict.keys():
                aa_seq.append(np.random.choice(alt_aa_dict[aa]))
            else:
                aa_seq.append(aa)

        S.args['sequence'] = aa_seq
    else:
        S.args['contigs'] = [f'{seq_len}']
        L = int(seq_len)

    if secondary_structure in ['',None]:
        secondary_structure = None
    else:
        secondary_structure = ''.join(['E' if x == 'S' else x for x in secondary_structure])
        if L < len(secondary_structure):
            secondary_structure = secondary_structure[:L]  # truncate to L; `sequence` is empty in protein-length mode
        elif L == len(secondary_structure):
            pass
        else:
            dseq = L - len(secondary_structure)
            secondary_structure += secondary_structure[-1]*dseq


    # potentials
    potential_list = []
    potential_bias_list = []

    if aa_bias not in ['',None]:
        potential_list.append('aa_bias')
        S.args['aa_composition'] = aa_bias
        if aa_bias_potential in ['',None]:
            aa_bias_potential = 3
        potential_bias_list.append(str(aa_bias_potential))
    '''
    if target_charge not in ['',None]:
        potential_list.append('charge')
        if charge_potential in ['',None]:
            charge_potential = 1
        potential_bias_list.append(str(charge_potential))
        S.args['target_charge'] = float(target_charge)
        if target_ph in ['',None]:
            target_ph = 7.4
        S.args['target_pH'] = float(target_ph)
    '''

    if hydrophobic_target_score not in ['',None]:
        potential_list.append('hydrophobic')
        S.args['hydrophobic_score'] = float(hydrophobic_target_score)
        if hydrophobic_potential in ['',None]:
            hydrophobic_potential = 3
        potential_bias_list.append(str(hydrophobic_potential))


    if len(potential_list) > 0:
        S.args['potentials'] = ','.join(potential_list)
        S.args['potential_scale'] = ','.join(potential_bias_list)


    # normalise secondary_structure bias from range 0-0.3
    S.args['secondary_structure'] = secondary_structure
    S.args['helix_bias'] = helix_bias
    S.args['strand_bias'] = strand_bias
    S.args['loop_bias'] = loop_bias

    # set T
    if num_steps in ['',None]:
        S.args['T'] = 20
    else:
        S.args['T'] = int(num_steps)

    # noise
    if 'normal' in noise:
        S.args['sample_distribution'] = noise
        S.args['sample_distribution_gmm_means'] = [0]
        S.args['sample_distribution_gmm_variances'] = [1]
    elif 'gmm2' in noise:
        S.args['sample_distribution'] = noise
        S.args['sample_distribution_gmm_means'] = [-1,1]
        S.args['sample_distribution_gmm_variances'] = [1,1]
    elif 'gmm3' in noise:
        S.args['sample_distribution'] = noise
        S.args['sample_distribution_gmm_means'] = [-1,0,1]
        S.args['sample_distribution_gmm_variances'] = [1,1,1]



    if secondary_structure not in ['',None] or helix_bias+strand_bias+loop_bias > 0:
        S.args['checkpoint'] = dssp_checkpoint
        S.args['d_t1d'] = 29
        print('using dssp checkpoint')
    else:
        S.args['checkpoint'] = og_checkpoint
        S.args['d_t1d'] = 24
        print('using og checkpoint')


    for k,v in S.args.items():
        print(f"{k} --> {v}")

    # init S
    S.model_init()
    S.diffuser_init()
    S.setup()

    # sampling loop
    plddt_data = []
    for j in range(S.max_t):
        output_seq, output_pdb, plddt = S.take_step_get_outputs(j)
        plddt_data.append(plddt)
        yield output_seq, output_pdb, display_pdb(output_pdb), get_plddt_plot(plddt_data, S.max_t)

    output_seq, output_pdb, plddt = S.get_outputs()

    yield output_seq, output_pdb, display_pdb(output_pdb), get_plddt_plot(plddt_data, S.max_t)

def get_plddt_plot(plddt_data, max_t):
    x = [i+1 for i in range(len(plddt_data))]
    fig, ax = plt.subplots(figsize=(15,6))
    ax.plot(x,plddt_data,color='#661dbf', linewidth=3,marker='o')
    ax.set_xticks([i+1 for i in range(max_t)])
    ax.set_yticks([(i+1)/10 for i in range(10)])
    ax.set_ylim([0,1])
    ax.set_ylabel('model confidence (plddt)')
    ax.set_xlabel('diffusion steps (t)')
    return fig

def display_pdb(path_to_pdb):
    '''
    #function to display pdb in py3dmol
    '''
    pdb = open(path_to_pdb, "r").read()

    view = py3Dmol.view(width=500, height=500)
    view.addModel(pdb, "pdb")
    view.setStyle({'model': -1}, {"cartoon": {'colorscheme':{'prop':'b','gradient':'roygb','min':0,'max':1}}})#'linear', 'min': 0, 'max': 1, 'colors': ["#ff9ef0","#a903fc",]}}})
    view.zoomTo()
    output = view._make_html().replace("'", '"')
    print(view._make_html())
    x = f"""<!DOCTYPE html><html><center> {output} </center></html>""" # do not use ' in this input

    return f"""<iframe height="500px" width="100%" name="result" allow="midi; geolocation; microphone; camera;
                            display-capture; encrypted-media;" sandbox="allow-modals allow-forms
                            allow-scripts allow-same-origin allow-popups
                            allow-top-navigation-by-user-activation allow-downloads" allowfullscreen=""
                            allowpaymentrequest="" frameborder="0" srcdoc='{x}'></iframe>"""

    '''

    return f"""<iframe style="width: 100%; height:700px" name="result" allow="midi; geolocation; microphone; camera;
                            display-capture; encrypted-media;" sandbox="allow-modals allow-forms
                            allow-scripts allow-same-origin allow-popups
                            allow-top-navigation-by-user-activation allow-downloads" allowfullscreen=""
                            allowpaymentrequest="" frameborder="0" srcdoc='{x}'></iframe>"""
    '''

def toggle_seq_input(choice):
    if choice == "protein length":
        return gr.update(visible=True, value=None), gr.update(visible=False, value=None)
    elif choice == "custom sequence":
        return gr.update(visible=False, value=None), gr.update(visible=True, value=None)

def toggle_secondary_structure(choice):
    if choice == "sliders":
        return gr.update(visible=True, value=None),gr.update(visible=True, value=None),gr.update(visible=True, value=None),gr.update(visible=False, value=None)
    elif choice == "explicit":
        return gr.update(visible=False, value=None),gr.update(visible=False, value=None),gr.update(visible=False, value=None),gr.update(visible=True, value=None)

# Define the Gradio interface
with gr.Blocks(theme='ParityError/Interstellar') as demo:

    gr.Markdown(f"""# Protein Generation via Diffusion in Sequence Space""")

    with gr.Row():
        with gr.Column(min_width=500):
            gr.Markdown(f"""
            ## How does it work?\n
            --- [PREPRINT](https://biorxiv.org/content/10.1101/2023.05.08.539766v1) ---

            Protein sequence and structure co-generation is a long-standing problem in the field of protein design. By implementing [DDPM](https://arxiv.org/abs/2006.11239)-style diffusion over protein sequence space, we generate protein sequence and structure pairs. Starting with [RoseTTAFold](https://www.science.org/doi/10.1126/science.abj8754), a protein structure prediction network, we fine-tuned it to predict sequence and structure given a partially noised sequence. By applying losses to both the predicted sequence and structure, the model is forced to generate meaningful pairs. Diffusing in sequence space makes it easy to implement potentials to guide the diffusive process toward particular amino acid composition, net charge, and more! Furthermore, you can sample proteins from a family of sequences or even train a small sequence-to-function classifier to guide generation toward desired sequences.


            ## How to use it?\n
            A user can either design a custom input sequence to diffuse from or specify a length below. To scaffold a sequence, use the following format, where X represents residues to diffuse: XXXXXXXXSCIENCESCIENCEXXXXXXXXXXXXXXXXXXX. You can even design a protein with your name XXXXXXXXXXXXNAMEHEREXXXXXXXXXXXXX!

            ### Acknowledgements\n
            Thank you to Simon Dürr and the Hugging Face team for setting us up with a community GPU grant!
            """)

            gr.Markdown("""
            ## Model in Action

            """)

    with gr.Row().style(equal_height=False):
        with gr.Column():
            gr.Markdown("""## INPUTS""")
            gr.Markdown("""#### Start Sequence
            Specify the protein length for completely unconditional generation, or scaffold a motif (or your name) using the custom sequence input""")
            seq_opt = gr.Radio(["protein length","custom sequence"], label="How would you like to specify the starting sequence?", value='protein length')

            sequence = gr.Textbox(label="custom sequence", lines=1, placeholder='AMINO ACIDS: A,C,D,E,F,G,H,I,K,L,M,N,P,Q,R,S,T,V,W,Y\n MASK TOKEN: X', visible=False)
            seq_len = gr.Slider(minimum=5.0, maximum=250.0, label="protein length", value=100, visible=True)

            seq_opt.change(fn=toggle_seq_input,
                           inputs=[seq_opt],
                           outputs=[seq_len, sequence],
                           queue=False)

            gr.Markdown("""### Optional Parameters""")
            with gr.Accordion(label='Secondary Structure',open=True):
                gr.Markdown("""Try changing the sliders or inputting explicit secondary structure conditioning for each residue""")
                sec_str_opt = gr.Radio(["sliders","explicit"], label="How would you like to specify secondary structure?", value='sliders')

                secondary_structure = gr.Textbox(label="secondary structure", lines=1, placeholder='HELIX = H STRAND = S LOOP = L MASK = X (must be the same length as input sequence)', visible=False)

                with gr.Column():
                    helix_bias = gr.Slider(minimum=0.0, maximum=0.05, label="helix bias", visible=True)
                    strand_bias = gr.Slider(minimum=0.0, maximum=0.05, label="strand bias", visible=True)
                    loop_bias = gr.Slider(minimum=0.0, maximum=0.20, label="loop bias", visible=True)

                sec_str_opt.change(fn=toggle_secondary_structure,
                                   inputs=[sec_str_opt],
                                   outputs=[helix_bias,strand_bias,loop_bias,secondary_structure],
                                   queue=False)

            with gr.Accordion(label='Amino Acid Compositional Bias',open=False):
                gr.Markdown("""Bias sequence composition for particular amino acids by specifying the one-letter code followed by the fraction to bias. This can be input as a list, for example: W0.2,E0.1""")
                with gr.Row():
                    aa_bias = gr.Textbox(label="aa bias", lines=1, placeholder='specify one letter AA and fraction to bias, for example W0.1 or M0.1,K0.1' )
                    aa_bias_potential = gr.Textbox(label="aa bias scale", lines=1, placeholder='AA Bias potential scale (recommended range 1.0-5.0)')

            '''
            with gr.Accordion(label='Charge Bias',open=False):
                gr.Markdown("""Bias for a specified net charge at a particular pH using the boxes below""")
                with gr.Row():
                    target_charge = gr.Textbox(label="net charge", lines=1, placeholder='net charge to target')
                    target_ph = gr.Textbox(label="pH", lines=1, placeholder='pH at which net charge is desired')
                    charge_potential = gr.Textbox(label="charge potential scale", lines=1, placeholder='charge potential scale (recommended range 1.0-5.0)')
            '''

            with gr.Accordion(label='Hydrophobic Bias',open=False):
                gr.Markdown("""Bias for or against hydrophobic composition; to get more soluble proteins, bias away with a negative target score (e.g. -5)""")
                with gr.Row():
                    hydrophobic_target_score = gr.Textbox(label="hydrophobic score", lines=1, placeholder='hydrophobic score to target (negative score is good for solubility)')
                    hydrophobic_potential = gr.Textbox(label="hydrophobic potential scale", lines=1, placeholder='hydrophobic potential scale (recommended range 1.0-2.0)')

            with gr.Accordion(label='Diffusion Params',open=False):
                gr.Markdown("""Increasing T to more steps can help with harder design challenges; sampling from different distributions can change the sequence and structural composition""")
                with gr.Row():
                    num_steps = gr.Textbox(label="T", lines=1, placeholder='number of diffusion steps (25 or less will speed things up)')
                    noise = gr.Dropdown(['normal','gmm2 [-1,1]','gmm3 [-1,0,1]'], label='noise type', value='normal')

            btn = gr.Button("GENERATE")

        #with gr.Row():
        with gr.Column():
            gr.Markdown("""## OUTPUTS""")
            gr.Markdown("""#### Confidence score for generated structure at each timestep""")
            plddt_plot = gr.Plot(label='plddt at step t')
            gr.Markdown("""#### Output protein sequence""")
            output_seq = gr.Textbox(label="sequence")
            gr.Markdown("""#### Download PDB file""")
            output_pdb = gr.File(label="PDB file")
            gr.Markdown("""#### Structure viewer""")
            output_viewer = gr.HTML()

    gr.Markdown("""### Don't know where to get started? Click on an example below to try it out!""")
    gr.Examples(
        [["","125",0.0,0.0,0.2,"","","","20","normal",'',''],
         ["","100",0.0,0.0,0.0,"","W0.2","2","20","normal",'',''],
         ["","100",0.0,0.0,0.0,"XXHHHHHHHHHXXXXXXXHHHHHHHHHXXXXXXXHHHHHHHHXXXXSSSSSSSSSSSXXXXXXXXSSSSSSSSSSSSXXXXXXXSSSSSSSSSXXXXXXX","","","25","normal",'',''],
         ["XXXXXXXXXXXXXXXXXXXXXXXXXIPDXXXXXXXXXXXXXXXXXXXXXXPEPSEQXXXXXXXXXXXXXXXXXXXXXXXXXXIPDXXXXXXXXXXXXXXXXXXX","",0.0,0.0,0.0,"","","","25","normal",'','']],
        inputs=[sequence,
                seq_len,
                helix_bias,
                strand_bias,
                loop_bias,
                secondary_structure,
                aa_bias,
                aa_bias_potential,
                #target_charge,
                #target_ph,
                #charge_potential,
                num_steps,
                noise,
                hydrophobic_target_score,
                hydrophobic_potential],
        outputs=[output_seq,
                 output_pdb,
                 output_viewer,
                 plddt_plot],
        fn=protein_diffusion_model,
    )
    btn.click(protein_diffusion_model,
              [sequence,
               seq_len,
               helix_bias,
               strand_bias,
               loop_bias,
               secondary_structure,
               aa_bias,
               aa_bias_potential,
               #target_charge,
               #target_ph,
               #charge_potential,
               num_steps,
               noise,
               hydrophobic_target_score,
               hydrophobic_potential],
              [output_seq,
               output_pdb,
               output_viewer,
               plddt_plot])

demo.queue()
demo.launch(debug=True)
```

| 404 |

+

|
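Once the three commented-out charge entries are skipped, the `inputs` list has twelve active components, and Gradio fills them positionally from each example row, so every row must carry exactly twelve values in the same order. The sketch below is a standalone sanity check, not part of app.py; `INPUT_NAMES`, `check_example_rows` and `as_kwargs` are hypothetical names, and only the first two example rows are copied in for brevity.

```python
# Hypothetical standalone check (not part of app.py): each gr.Examples row
# must supply one value per active input component, in the same order.
INPUT_NAMES = [
    "sequence", "seq_len", "helix_bias", "strand_bias", "loop_bias",
    "secondary_structure", "aa_bias", "aa_bias_potential",
    "num_steps", "noise", "hydrophobic_target_score", "hydrophobic_potential",
]

# First two example rows copied from app.py.
EXAMPLES = [
    ["", "125", 0.0, 0.0, 0.2, "", "", "", "20", "normal", "", ""],
    ["", "100", 0.0, 0.0, 0.0, "", "W0.2", "2", "20", "normal", "", ""],
]


def check_example_rows(rows, input_names):
    """Return the indices of rows whose length differs from the number of inputs."""
    return [i for i, row in enumerate(rows) if len(row) != len(input_names)]


def as_kwargs(row, input_names):
    """Map one example row onto input names, which makes mismatches easy to spot."""
    return dict(zip(input_names, row))


print(check_example_rows(EXAMPLES, INPUT_NAMES))  # → []
print(as_kwargs(EXAMPLES[1], INPUT_NAMES)["aa_bias"])  # → W0.2
```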

examples/aa_weights.json

ADDED

@@ -0,0 +1,22 @@

{
  "A": 0,
  "R": 0,
  "N": 0,
  "D": 0,
  "C": 0,
  "Q": 0,
  "E": 0,
  "G": 0,
  "H": 0,
  "I": 0,
  "L": 0,
  "K": 0,
  "M": 0,
  "F": 0,
  "P": 0,
  "S": 0,
  "T": 0,
  "W": 0,
  "Y": 0,
  "V": 0
}
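examples/aa_weights.json is a neutral template: all 20 canonical amino acids, each with weight 0. This diff does not show how inference.py applies these weights (their sign and scale, or how `--add_weight_every_n` interacts with them), so the sketch below only loads and validates a file of this shape. `load_aa_weights` is a hypothetical helper, not part of the repository.

```python
import json

# The 20 canonical one-letter codes, in the same order as examples/aa_weights.json.
CANONICAL_AAS = "ARNDCQEGHILKMFPSTWYV"


def load_aa_weights(text):
    """Parse a JSON object of per-residue weights (hypothetical helper).

    Raises ValueError unless the keys are exactly the 20 canonical amino acids.
    """
    weights = json.loads(text)
    missing = set(CANONICAL_AAS) - set(weights)
    extra = set(weights) - set(CANONICAL_AAS)
    if missing or extra:
        raise ValueError(f"missing={sorted(missing)} extra={sorted(extra)}")
    # Coerce to float so integer weights such as 0 and float weights compare alike.
    return {aa: float(weights[aa]) for aa in CANONICAL_AAS}


# Start from the all-zero template and up-weight tryptophan as an illustration.
template = {aa: 0 for aa in CANONICAL_AAS}
template["W"] = 1
weights = load_aa_weights(json.dumps(template))
print(weights["W"], sum(weights.values()))  # → 1.0 1.0
```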

examples/binder_design.sh

ADDED

@@ -0,0 +1,16 @@

#!/bin/bash
#SBATCH -J seq_diff
#SBATCH -p gpu
#SBATCH --mem=8g
#SBATCH --gres=gpu:a6000:1
#SBATCH -o ./out/slurm/slurm_%j.out

source activate /software/conda/envs/SE3nv

srun python ../inference.py \
    --num_designs 10 \
    --out out/binder_design \
    --pdb pdbs/cd86.pdb \
    --T 25 --save_best_plddt \
    --contigs B1-110,0 25-75 \
    --hotspots B40,B32,B87,B96,B30
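The `--contigs` strings in these scripts appear to follow a small grammar: space-separated tokens, comma-separated segments, chain-prefixed ranges such as `B1-110` kept from the input PDB, bare lengths or ranges such as `25-75` for designed residues, and `0` as a chain break. That reading is inferred from the binder, loop, motif and symmetric examples, not from the parser in inference.py, so treat the sketch below as an assumption. `parse_contigs` and `length_bounds` are hypothetical helpers that tokenise a contig string and report the minimum and maximum total length.

```python
import re

# ASSUMED grammar, inferred from the example scripts (not from inference.py):
#   "B1-110" -> residues 1..110 of chain B kept from the input PDB
#   "25-75"  -> designed segment, length sampled from 25..75
#   "25"     -> designed segment of exactly 25 residues
#   "0"      -> chain break
FIXED = re.compile(r"^([A-Za-z])(\d+)-(\d+)$")
DESIGNED = re.compile(r"^(\d+)(?:-(\d+))?$")


def parse_contigs(contigs):
    """Split a contig string into ('fixed', chain, start, end),
    ('designed', lo, hi) or ('break',) tuples."""
    segments = []
    for token in contigs.split():
        for seg in token.split(","):
            if seg == "0":
                segments.append(("break",))
                continue
            fixed = FIXED.match(seg)
            if fixed:
                chain, start, end = fixed.group(1), int(fixed.group(2)), int(fixed.group(3))
                segments.append(("fixed", chain, start, end))
                continue
            designed = DESIGNED.match(seg)
            if designed:
                lo = int(designed.group(1))
                hi = int(designed.group(2)) if designed.group(2) else lo
                segments.append(("designed", lo, hi))
                continue
            raise ValueError(f"unrecognised contig segment: {seg!r}")
    return segments


def length_bounds(segments):
    """Return the (min, max) total residue count implied by the parsed segments."""
    lo = hi = 0
    for seg in segments:
        if seg[0] == "fixed":
            n = seg[3] - seg[2] + 1  # inclusive residue range
            lo += n
            hi += n
        elif seg[0] == "designed":
            lo += seg[1]
            hi += seg[2]
    return lo, hi


print(length_bounds(parse_contigs("B1-110,0 25-75")))  # → (135, 185)
```

Under this reading, the binder example yields 135 to 185 residues in total: the 110 fixed target residues plus a binder of 25 to 75 residues.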

examples/loop_design.sh

ADDED

@@ -0,0 +1,15 @@

#!/bin/bash
#SBATCH -J seq_diff
#SBATCH -p gpu
#SBATCH --mem=8g
#SBATCH --gres=gpu:a6000:1
#SBATCH -o ./out/slurm/slurm_%j.out

source activate /software/conda/envs/SE3nv

srun python ../inference.py \
    --num_designs 10 \
    --pdb pdbs/G12D_manual_mut.pdb \
    --out out/ab_loop \
    --contigs A2-176,0 C7-16,0 H2-95,12-15,H111-116,0 L1-45,10-12,L56-107 \
    --T 25 --save_best_plddt --loop_design

examples/motif_scaffolding.sh

ADDED

@@ -0,0 +1,14 @@

#!/bin/bash
#SBATCH -J seq_diff
#SBATCH -p gpu
#SBATCH --mem=8g
#SBATCH --gres=gpu:a6000:1
#SBATCH -o ./out/slurm/slurm_%j.out

source activate /software/conda/envs/SE3nv

srun python ../inference.py \
    --num_designs 10 \
    --out out/design \
    --pdb pdbs/rsv5_5tpn.pdb \
    --contigs 0-25,A163-181,25-30 --T 25 --save_best_plddt

examples/out/design_000000.pdb

ADDED

The diff for this file is too large to render.

examples/out/design_000000.trb

ADDED

Binary file (3.51 kB).

examples/partial_diffusion.sh

ADDED

@@ -0,0 +1,15 @@

#!/bin/bash
#SBATCH -J seq_diff
#SBATCH -p gpu
#SBATCH --mem=8g
#SBATCH --gres=gpu:a6000:1
#SBATCH -o ./out/slurm/slurm_%j.out

source activate /software/conda/envs/SE3nv

srun python ../inference.py \
    --num_designs 10 \
    --pdb out/design_000.pdb \
    --trb out/design_000.trb \
    --out out/partial_diffusion_design \
    --contigs 0 --sampling_temp 0.3 --T 50 --save_best_plddt

examples/pdbs/G12D_manual_mut.pdb

ADDED

The diff for this file is too large to render.

examples/pdbs/cd86.pdb

ADDED

The diff for this file is too large to render.

examples/pdbs/rsv5_5tpn.pdb

ADDED

The diff for this file is too large to render.

examples/secondary_structure.sh

ADDED

@@ -0,0 +1,21 @@

#!/bin/bash
#SBATCH -J seq_diff
#SBATCH -p gpu
#SBATCH --mem=8g
#SBATCH --gres=gpu:a6000:1
#SBATCH -o ./out/slurm/slurm_%j.out

source activate /software/conda/envs/SE3nv

srun python ../inference.py \
    --num_designs 10 \
    --out out/design \
    --contigs 100 \
    --T 25 --save_best_plddt \
    --secondary_structure XXXXXHHHHXXXLLLXXXXXXXXXXHHHHXXXLLLXXXXXXXXXXHHHHXXXLLLXXXXXXXXXXHHHHXXXLLLXXXXXXXXXXHHHHXXXLLLXXXXX

# FOR SECONDARY STRUCTURE:
# X - mask
# H - helix
# E - strand
# L - loop
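The `--secondary_structure` string has one character per residue, so its length presumably has to match the `--contigs 100` length; the string in this script is a 20-character unit repeated five times. The sketch below builds and checks such a string, assuming only the four codes listed in the comment block. Note that the app.py example row uses `S` rather than `E` for strands, so the accepted alphabet may differ between entry points. `build_ss` and `validate_ss` are hypothetical helpers.

```python
# Codes from the comment block in examples/secondary_structure.sh:
# X = mask, H = helix, E = strand, L = loop. Other entry points may accept more.
SS_CODES = frozenset("XHEL")


def build_ss(unit: str, repeats: int) -> str:
    """Tile a secondary-structure unit into a full per-residue string."""
    return unit * repeats


def validate_ss(ss: str, length: int) -> str:
    """Raise ValueError if `ss` has the wrong length or unknown codes.

    The length rule (string length == designed length) is an assumption.
    """
    if len(ss) != length:
        raise ValueError(f"expected {length} characters, got {len(ss)}")
    unknown = set(ss) - SS_CODES
    if unknown:
        raise ValueError(f"unknown secondary-structure codes: {sorted(unknown)}")
    return ss


# Same layout as the script: 5 x (5 masked, 4 helix, 3 masked, 3 loop, 5 masked).
ss = validate_ss(build_ss("XXXXXHHHHXXXLLLXXXXX", 5), 100)
print(len(ss), ss.count("H"), ss.count("L"))  # → 100 20 15
```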

examples/secondary_structure_bias.sh

ADDED

@@ -0,0 +1,15 @@

#!/bin/bash
#SBATCH -J seq_diff
#SBATCH -p gpu
#SBATCH --mem=8g
#SBATCH --gres=gpu:a6000:1
#SBATCH -o ./out/slurm/slurm_%j.out

source activate /software/conda/envs/SE3nv

srun python ../inference.py \
    --num_designs 10 \
    --out out/design \
    --contigs 100 \
    --T 25 --save_best_plddt \
    --helix_bias 0.01 --strand_bias 0.01 --loop_bias 0.0

examples/secondary_structure_from_pdb.sh

ADDED

@@ -0,0 +1,21 @@

#!/bin/bash
#SBATCH -J seq_diff
#SBATCH -p gpu
#SBATCH --mem=8g
#SBATCH --gres=gpu:a6000:1
#SBATCH -o ./out/slurm/slurm_%j.out

source activate /software/conda/envs/SE3nv

srun python ../inference.py \
    --num_designs 10 \
    --out out/design \
    --contigs 110 \
    --T 25 --save_best_plddt \
    --dssp_pdb ./pdbs/cd86.pdb

# FOR SECONDARY STRUCTURE:
# X - mask
# H - helix
# E - strand
# L - loop

examples/symmetric_design.sh

ADDED

@@ -0,0 +1,16 @@

#!/bin/bash
#SBATCH -J seq_diff
#SBATCH -p gpu
#SBATCH --mem=8g
#SBATCH --gres=gpu:a6000:1
#SBATCH -o ./out/slurm/slurm_%j.out

source activate /software/conda/envs/SE3nv

srun python ../inference.py \
    --num_designs 10 \
    --out out/symmetric_design \
    --contigs 25,0 25,0 25,0 \
    --T 50 \
    --save_best_plddt \
    --symmetry 3

examples/weighted_sequence.sh

ADDED

@@ -0,0 +1,15 @@

#!/bin/bash
#SBATCH -J seq_diff
#SBATCH -p gpu
#SBATCH --mem=8g
#SBATCH --gres=gpu:a6000:1
#SBATCH -o ./out/slurm/slurm_%j.out

source activate /software/conda/envs/SE3nv

srun python ../inference.py \
    --num_designs 10 \
    --out out/design \
    --contigs 100 \
    --T 25 --save_best_plddt \
    --aa_composition W0.2 --potential_scale 1.75
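`--aa_composition W0.2` reads as a one-letter residue code followed by a target fraction, here 20% tryptophan, with `--potential_scale 1.75` presumably setting how strongly the guidance potential pushes toward that target. The parser below assumes that format and additionally assumes several targets may be comma-separated; neither assumption is confirmed by inference.py. `parse_aa_composition` is a hypothetical helper.

```python
import re

# ASSUMED format: <one-letter code><fraction>, optionally comma-separated,
# e.g. "W0.2" or "W0.2,K0.1". Inferred from the example, not from inference.py.
PAIR = re.compile(r"^([ACDEFGHIKLMNPQRSTVWY])(\d*\.?\d+)$")


def parse_aa_composition(spec):
    """Return {residue: target_fraction}; raise ValueError on malformed input."""
    targets = {}
    for item in spec.split(","):
        match = PAIR.match(item.strip())
        if not match:
            raise ValueError(f"bad composition entry: {item!r}")
        aa, frac = match.group(1), float(match.group(2))
        if not 0.0 <= frac <= 1.0:
            raise ValueError(f"fraction out of range for {aa}: {frac}")
        targets[aa] = frac
    # Fractions of the whole sequence cannot sum past 1.
    if sum(targets.values()) > 1.0:
        raise ValueError("target fractions sum to more than 1")
    return targets


print(parse_aa_composition("W0.2"))  # → {'W': 0.2}
```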

examples/weighted_sequence_json.sh

ADDED

@@ -0,0 +1,16 @@

#!/bin/bash
#SBATCH -J seq_diff
#SBATCH -p gpu
#SBATCH --mem=8g
#SBATCH --gres=gpu:a6000:1
#SBATCH -o ./out/slurm/slurm_%j.out

source activate /software/conda/envs/SE3nv

srun python ../inference.py \
    --num_designs 10 \
    --out out/design \
    --contigs 75 \
    --aa_weights_json aa_weights.json \
    --add_weight_every_n 5 \
    --T 25 --save_best_plddt

model/.ipynb_checkpoints/RoseTTAFoldModel-checkpoint.py

ADDED

@@ -0,0 +1,140 @@

import torch
import torch.nn as nn
from Embeddings import MSA_emb, Extra_emb, Templ_emb, Recycling
from Track_module import IterativeSimulator
from AuxiliaryPredictor import DistanceNetwork, MaskedTokenNetwork, ExpResolvedNetwork, LDDTNetwork
from util import INIT_CRDS
from opt_einsum import contract as einsum
from icecream import ic

class RoseTTAFoldModule(nn.Module):
    def __init__(self, n_extra_block=4, n_main_block=8, n_ref_block=4,\
                 d_msa=256, d_msa_full=64, d_pair=128, d_templ=64,
                 n_head_msa=8, n_head_pair=4, n_head_templ=4,
                 d_hidden=32, d_hidden_templ=64,
                 p_drop=0.15, d_t1d=24, d_t2d=44,
                 SE3_param_full={'l0_in_features':32, 'l0_out_features':16, 'num_edge_features':32},
                 SE3_param_topk={'l0_in_features':32, 'l0_out_features':16, 'num_edge_features':32},
                 ):
        super(RoseTTAFoldModule, self).__init__()
        #
        # Input Embeddings
        d_state = SE3_param_topk['l0_out_features']
        self.latent_emb = MSA_emb(d_msa=d_msa, d_pair=d_pair, d_state=d_state, p_drop=p_drop)
        self.full_emb = Extra_emb(d_msa=d_msa_full, d_init=25, p_drop=p_drop)
        self.templ_emb = Templ_emb(d_pair=d_pair, d_templ=d_templ, d_state=d_state,
                                   n_head=n_head_templ,
                                   d_hidden=d_hidden_templ, p_drop=0.25, d_t1d=d_t1d, d_t2d=d_t2d)
        # Update inputs with outputs from previous round
        self.recycle = Recycling(d_msa=d_msa, d_pair=d_pair, d_state=d_state)
        #
        self.simulator = IterativeSimulator(n_extra_block=n_extra_block,
                                            n_main_block=n_main_block,
                                            n_ref_block=n_ref_block,
                                            d_msa=d_msa, d_msa_full=d_msa_full,
                                            d_pair=d_pair, d_hidden=d_hidden,
                                            n_head_msa=n_head_msa,
                                            n_head_pair=n_head_pair,
                                            SE3_param_full=SE3_param_full,
                                            SE3_param_topk=SE3_param_topk,
                                            p_drop=p_drop)
        ##
        self.c6d_pred = DistanceNetwork(d_pair, p_drop=p_drop)
        self.aa_pred = MaskedTokenNetwork(d_msa, p_drop=p_drop)
        self.lddt_pred = LDDTNetwork(d_state)

        self.exp_pred = ExpResolvedNetwork(d_msa, d_state)

    def forward(self, msa_latent, msa_full, seq, xyz, idx,
                seq1hot=None, t1d=None, t2d=None, xyz_t=None, alpha_t=None,
                msa_prev=None, pair_prev=None, state_prev=None,
                return_raw=False, return_full=False,
                use_checkpoint=False, return_infer=False):
        B, N, L = msa_latent.shape[:3]
        # Get embeddings
        #ic(seq.shape)
        #ic(msa_latent.shape)
        #ic(seq1hot.shape)
        #ic(idx.shape)
        #ic(xyz.shape)
        #ic(seq1hot.shape)
        #ic(t1d.shape)
        #ic(t2d.shape)

        idx = idx.long()
        msa_latent, pair, state = self.latent_emb(msa_latent, seq, idx, seq1hot=seq1hot)

        msa_full = self.full_emb(msa_full, seq, idx, seq1hot=seq1hot)
        #
        # Do recycling

        if msa_prev is None:
            msa_prev = torch.zeros_like(msa_latent[:,0])
        if pair_prev is None:
            pair_prev = torch.zeros_like(pair)
        if state_prev is None:
            state_prev = torch.zeros_like(state)


        #ic(seq.shape)
        #ic(msa_prev.shape)
        #ic(pair_prev.shape)
        #ic(xyz.shape)
        #ic(state_prev.shape)


        msa_recycle, pair_recycle, state_recycle = self.recycle(seq, msa_prev, pair_prev, xyz, state_prev)
        msa_latent[:,0] = msa_latent[:,0] + msa_recycle.reshape(B,L,-1)
        pair = pair + pair_recycle
        state = state + state_recycle
        #
        #ic(t1d.dtype)
        #ic(t2d.dtype)
        #ic(alpha_t.dtype)
        #ic(xyz_t.dtype)
        #ic(pair.dtype)
        #ic(state.dtype)


        #import pdb; pdb.set_trace()

        # add template embedding