
MakeItTalk: Speaker-Aware Talking-Head Animation

This is the code repository implementing the paper:

MakeItTalk: Speaker-Aware Talking-Head Animation

Yang Zhou, Xintong Han, Eli Shechtman, Jose Echevarria, Evangelos Kalogerakis, Dingzeyu Li

SIGGRAPH Asia 2020

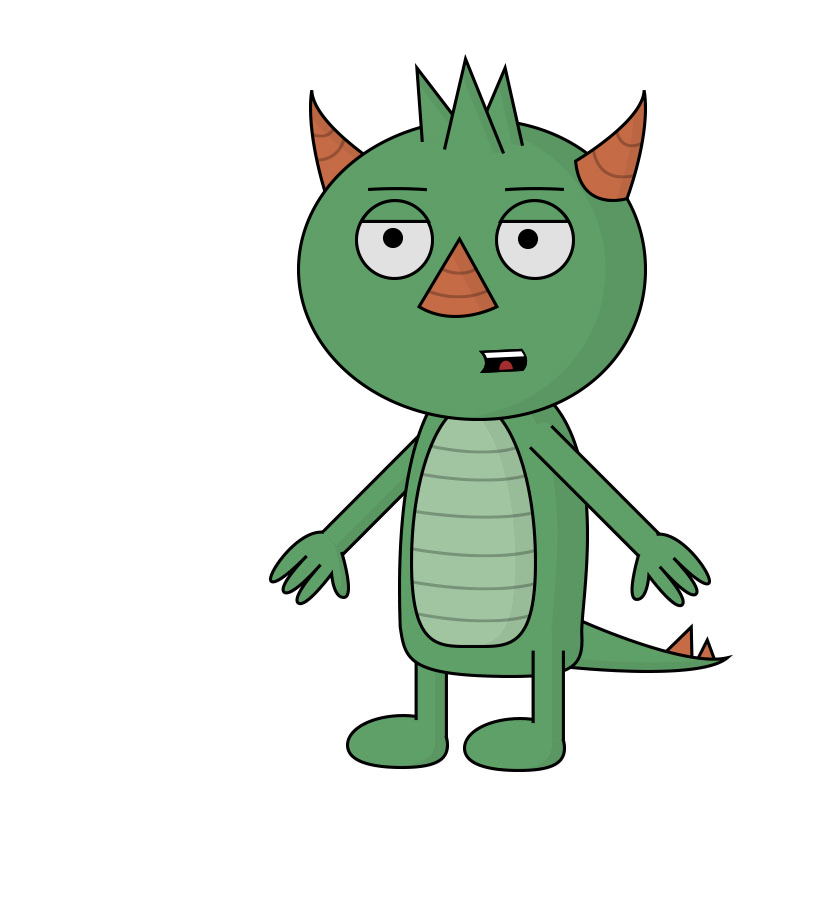

Abstract: We present a method that generates expressive talking-head videos from a single facial image with audio as the only input. In contrast to previous attempts to learn direct mappings from audio to raw pixels for creating talking faces, our method first disentangles the content and speaker information in the input audio signal. The audio content robustly controls the motion of lips and nearby facial regions, while the speaker information determines the specifics of facial expressions and the rest of the talking-head dynamics. Another key component of our method is the prediction of facial landmarks reflecting the speaker-aware dynamics. Based on this intermediate representation, our method works with many portrait images in a single unified framework, including artistic paintings, sketches, 2D cartoon characters, Japanese manga, and stylized caricatures. In addition, our method generalizes well to faces and characters that were not observed during training. We present extensive quantitative and qualitative evaluation of our method, in addition to user studies, demonstrating generated talking-heads of significantly higher quality compared to prior state-of-the-art methods.

[Project page] [Paper] [Video] [arXiv] [Colab Demo] [Colab Demo TL;DR]

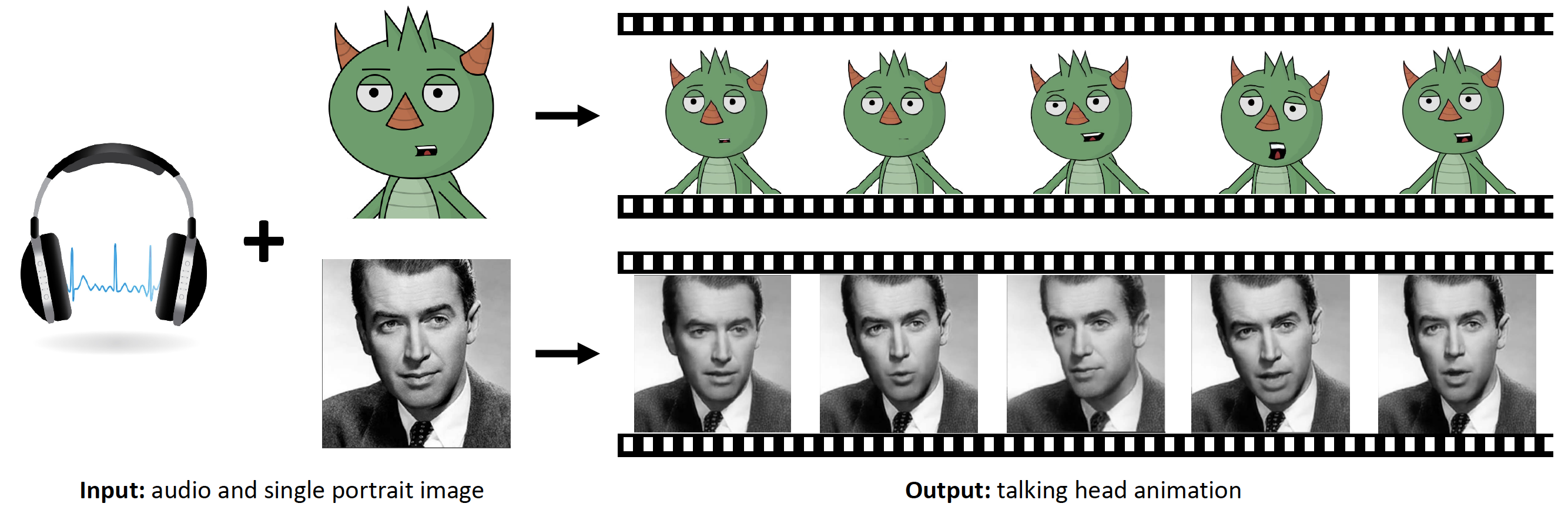

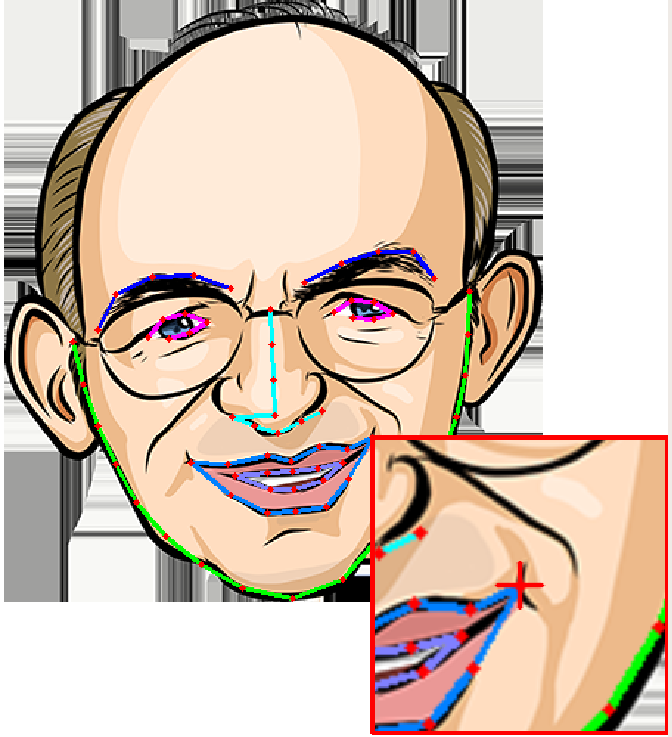

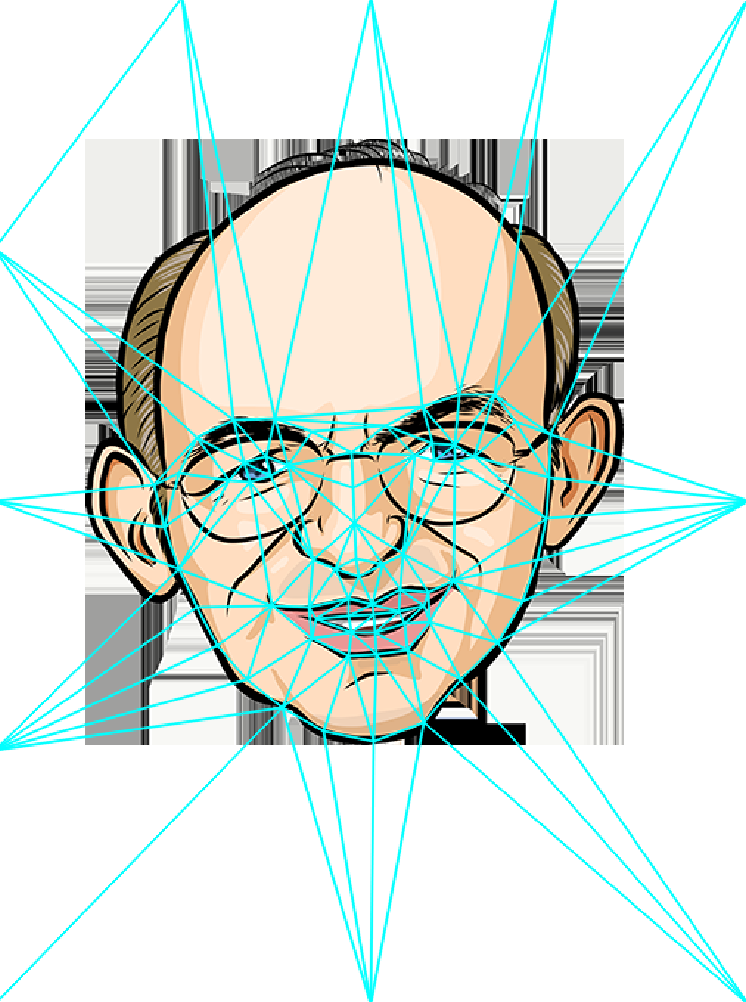

Figure. Given an audio speech signal and a single portrait image as input (left), our model generates speaker-aware talking-head animations (right). Neither the speech signal nor the input face image was observed during training. Our method creates both non-photorealistic cartoon animations (top) and natural human face videos (bottom).

Updates

- Generate new puppet! (tested on Ubuntu)

- Pre-trained models

- Google Colab quick demo for natural faces [detail] [TL;DR]

- Training code for each module

Requirements

- Python 3.6 environment

conda create -n makeittalk_env python=3.6

conda activate makeittalk_env

- ffmpeg (https://ffmpeg.org/download.html)

sudo apt-get install ffmpeg

- Python packages

pip install -r requirements.txt

- winehq-stable for cartoon face warping in Ubuntu (https://wiki.winehq.org/Ubuntu). Tested on Ubuntu 16.04, wine==5.0.3.

sudo dpkg --add-architecture i386

wget -nc https://dl.winehq.org/wine-builds/winehq.key

sudo apt-key add winehq.key

sudo apt-add-repository 'deb https://dl.winehq.org/wine-builds/ubuntu/ xenial main'

sudo apt update

sudo apt install --install-recommends winehq-stable
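Before going further, it can help to confirm that the prerequisites above are in place. The following Python sketch is our own illustration, not part of the repository: it compares the interpreter version with the 3.6 target and looks for ffmpeg and wine on PATH using shutil.which. It assumes the executables are named ffmpeg and wine; the function name check_prerequisites is hypothetical.

```python
import shutil
import sys


def check_prerequisites(which=shutil.which, version=None):
    """Return a list of problems found; an empty list means every check passed.

    `which` and `version` can be overridden for testing; by default they
    inspect the current PATH and interpreter.
    """
    if version is None:
        version = sys.version_info[:2]
    major, minor = version[0], version[1]
    problems = []
    # The README targets a Python 3.6 conda environment (makeittalk_env).
    if (major, minor) != (3, 6):
        problems.append(f"expected Python 3.6, found {major}.{minor}")
    # ffmpeg is installed system-wide via apt in the README.
    if which("ffmpeg") is None:
        problems.append("ffmpeg not found on PATH")
    # wine is only needed for cartoon face warping on Ubuntu.
    if which("wine") is None:
        problems.append("wine not found on PATH (only needed for cartoon faces)")
    return problems


if __name__ == "__main__":
    for problem in check_prerequisites():
        print("warning:", problem)
```

Run it inside the activated makeittalk_env; a missing wine only matters if you plan to animate cartoon faces.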

Pre-trained Models

Download the following pre-trained models to the MakeItTalk/examples/ckpt folder to test your own animations.

| Model | Link to the model |
|---|---|
| Voice Conversion | Link |
| Speech Content Module | Link |
| Speaker-aware Module | Link |
| Image2Image Translation Module | Link |
| Non-photorealistic Warping (.exe) | Link |

Animate Your Portraits!

- Download the pre-trained embedding [here] and save it to the MakeItTalk/examples/dump folder.
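A quick way to confirm that the checkpoints and the embedding landed in the right places is to check that both folders exist and are non-empty. This is a hypothetical helper (check_asset_folders is not part of the repository), meant to be run from the root of the MakeItTalk checkout; it does not verify individual file names, since the README does not list them.

```python
from pathlib import Path


def check_asset_folders(repo_root="."):
    """Map each expected asset folder to "ok", "empty", or "missing"."""
    root = Path(repo_root)
    report = {}
    # ckpt holds the pre-trained models; dump holds the pre-trained embedding.
    for sub in ("examples/ckpt", "examples/dump"):
        folder = root / sub
        if not folder.is_dir():
            report[sub] = "missing"
        elif not any(folder.iterdir()):
            report[sub] = "empty"
        else:
            report[sub] = "ok"
    return report


if __name__ == "__main__":
    for folder, status in check_asset_folders().items():
        print(f"{folder}: {status}")
```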

Natural Human Faces / Paintings

- Crop your portrait image to 256x256 and put it under the examples folder in .jpg format. Make sure the head is roughly in the middle (check the existing examples for reference).

- Put test audio files under the examples folder as well, in .wav format.

- Animate!

python main_end2end.py --jpg <portrait_file>

- Use additional args --amp_lip_x <x> --amp_lip_y <y> --amp_pos <pos> to amplify lip motion (in the x/y-axis directions) and head motion displacements. Default values are <x>=2., <y>=2., <pos>=.5.
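The input preparation for natural faces can be scripted. The sketch below is an illustration under stated assumptions, not the repository's preprocessing: it takes the largest centered square, resizes it to 256x256 with Pillow, and saves it as JPEG, which only gives a usable crop if the head is already near the middle of the photo. It also reads basic parameters of a .wav file with Python's standard wave module; the README does not state a required sample rate or channel count, so the helper only reports them. The names centered_square_box, prepare_portrait, and describe_wav are hypothetical.

```python
import wave


def centered_square_box(width, height):
    """Largest centered square inside a width x height image, as (left, upper, right, lower)."""
    side = min(width, height)
    left = (width - side) // 2
    upper = (height - side) // 2
    return (left, upper, left + side, upper + side)


def prepare_portrait(src_path, dst_path, size=256):
    """Center-crop to a square, resize to size x size, and save as JPEG.

    Only appropriate when the head is already near the middle of the photo.
    """
    from PIL import Image  # lazy import: the box math above has no Pillow dependency

    with Image.open(src_path) as src:
        img = src.convert("RGB")  # JPEG has no alpha channel
    img = img.crop(centered_square_box(*img.size))
    img = img.resize((size, size), Image.LANCZOS)
    img.save(dst_path, "JPEG", quality=95)


def describe_wav(path):
    """Return (channels, sample_rate_hz, duration_s) of an uncompressed PCM .wav file."""
    with wave.open(path, "rb") as wav:
        rate = wav.getframerate()
        return wav.getnchannels(), rate, wav.getnframes() / rate
```

For example, prepare_portrait("my_photo.png", "examples/my_photo.jpg") produces the 256x256 input, where my_photo is a placeholder name; passing the bare file name my_photo.jpg to --jpg assumes the script looks the file up inside the examples folder. If the face is off-center, crop by hand instead.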

Cartoon Faces

- Put test audio files under the examples folder as well, in .wav format.

- Animate one of the existing puppets:

python main_end2end_cartoon.py --jpg <cartoon_puppet_name_with_extension> --jpg_bg <puppet_background_with_extension>

--jpg_bg takes a same-size image as the background of the animation, such as the puppet's body or an overall fixed background image. If you want to use a background, make sure the puppet face image (i.e., the --jpg image) is in .png format and is transparent outside the face area. If you don't need a background, still create a same-size image (e.g., a pure white image) to fill the argument.

- Use additional args --amp_lip_x <x> --amp_lip_y <y> --amp_pos <pos> to amplify lip motion (in the x/y-axis directions) and head motion displacements. Default values are <x>=2., <y>=2., <pos>=.5.

Generate Your New Puppet

- Put the cartoon image under examples_cartoon.

- Install the conda environment foa_env_py2 (tested on Python 2) for Face-of-art (https://github.com/papulke/face-of-art). Download the pre-trained weights [here] and put them under MakeItTalk/examples/ckpt. Activate the environment:

source activate foa_env_py2

- Create the files needed to animate your cartoon image, i.e., <your_puppet>_open_mouth.txt, <your_puppet>_close_mouth.txt, <your_puppet>_open_mouth_norm.txt, <your_puppet>_scale_shift.txt, <your_puppet>_delauney.txt:

python main_gen_new_puppet.py <your_puppet_with_file_extension>

- In detail, it takes 3 steps:

  - Face-of-art automatic cartoon landmark detection. If the landmarks are wrong or inaccurate, you can use our tool to drag and refine them.

  - Estimate the closed-mouth landmarks to serve as network input.

  - Delaunay-triangulate the image with the landmarks.

- Check the puppet smiling_person_example.png for an example.

Train

Train Voice Conversion Module

Todo...

Train Content Branch

- Create the dataset root directory <root_dir>.

- Dataset: Download the preprocessed dataset [here] and put it under <root_dir>/dump.

- Train script: Run the script below. Models will be saved in <root_dir>/ckpt/<train_instance_name>.

python main_train_content.py --train --write --root_dir <root_dir> --name <train_instance_name>
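The steps for the content branch can be wrapped in a small helper. This sketch (content_training_setup is a hypothetical name) creates <root_dir>/dump if needed, warns when it is still empty, and builds the training command with the same flags the README uses. It only computes <root_dir>/ckpt/<train_instance_name> rather than creating it, on the assumption that main_train_content.py creates that folder itself.

```python
import sys
from pathlib import Path


def content_training_setup(root_dir, train_instance_name):
    """Prepare <root_dir>/dump and build the main_train_content.py command line.

    Returns (argv, expected_ckpt_dir); the checkpoint folder is not created here.
    """
    root = Path(root_dir)
    dump_dir = root / "dump"
    dump_dir.mkdir(parents=True, exist_ok=True)
    if not any(dump_dir.iterdir()):
        print(f"warning: {dump_dir} is empty; put the preprocessed dataset there first")
    argv = [
        sys.executable, "main_train_content.py",
        "--train", "--write",
        "--root_dir", str(root),
        "--name", train_instance_name,
    ]
    return argv, root / "ckpt" / train_instance_name
```

Run the returned command from the repository root, e.g. subprocess.run(argv, check=True). Using sys.executable instead of a bare python keeps the command on the interpreter of the active conda environment.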

Train Speaker-Aware Branch

Todo...

Train Image-to-Image Translation

Todo...

License

Acknowledgement

We would like to thank Timothy Langlois for the narration, and Kaizhi Qian for the help with the voice conversion module. We thank Jakub Fiser for implementing the real-time GPU version of the triangle morphing algorithm. We thank Daichi Ito for sharing the caricature image and Dave Werner for Wilk, the gruff but ultimately lovable puppet.

This research is partially funded by NSF (EAGER-1942069) and a gift from Adobe. Our experiments were performed in the UMass GPU cluster obtained under the Collaborative Fund managed by the MassTech Collaborative.