Commit 2bf74f8

Parent(s): 18971d1

Upload 45 files

- .gitattributes +8 -0

- data/README.md +87 -0

- data/flux/.github/workflows/ci.yaml +20 -0

- data/flux/.gitignore +230 -0

- data/flux/LICENSE +201 -0

- data/flux/README.md +87 -0

- data/flux/assets/cup.png +3 -0

- data/flux/assets/cup_mask.png +0 -0

- data/flux/assets/dev_grid.jpg +3 -0

- data/flux/assets/docs/canny.png +3 -0

- data/flux/assets/docs/depth.png +3 -0

- data/flux/assets/docs/inpainting.png +3 -0

- data/flux/assets/docs/outpainting.png +3 -0

- data/flux/assets/docs/redux.png +0 -0

- data/flux/assets/grid.jpg +3 -0

- data/flux/assets/robot.webp +0 -0

- data/flux/assets/schnell_grid.jpg +3 -0

- data/flux/demo_gr.py +247 -0

- data/flux/demo_st.py +293 -0

- data/flux/demo_st_fill.py +487 -0

- data/flux/docs/fill.md +44 -0

- data/flux/docs/image-variation.md +33 -0

- data/flux/docs/structural-conditioning.md +40 -0

- data/flux/docs/text-to-image.md +93 -0

- data/flux/model_cards/FLUX.1-dev.md +46 -0

- data/flux/model_cards/FLUX.1-schnell.md +41 -0

- data/flux/model_licenses/LICENSE-FLUX1-dev +42 -0

- data/flux/model_licenses/LICENSE-FLUX1-schnell +54 -0

- data/flux/pyproject.toml +99 -0

- data/flux/setup.py +3 -0

- data/flux/src/flux/__init__.py +13 -0

- data/flux/src/flux/__main__.py +4 -0

- data/flux/src/flux/api.py +225 -0

- data/flux/src/flux/cli.py +238 -0

- data/flux/src/flux/cli_control.py +347 -0

- data/flux/src/flux/cli_fill.py +334 -0

- data/flux/src/flux/cli_redux.py +279 -0

- data/flux/src/flux/math.py +30 -0

- data/flux/src/flux/model.py +143 -0

- data/flux/src/flux/modules/autoencoder.py +312 -0

- data/flux/src/flux/modules/conditioner.py +37 -0

- data/flux/src/flux/modules/image_embedders.py +103 -0

- data/flux/src/flux/modules/layers.py +253 -0

- data/flux/src/flux/modules/lora.py +94 -0

- data/flux/src/flux/sampling.py +282 -0

- data/flux/src/flux/util.py +447 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,11 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text


 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+data/flux/assets/cup.png filter=lfs diff=lfs merge=lfs -text
+data/flux/assets/dev_grid.jpg filter=lfs diff=lfs merge=lfs -text
+data/flux/assets/docs/canny.png filter=lfs diff=lfs merge=lfs -text
+data/flux/assets/docs/depth.png filter=lfs diff=lfs merge=lfs -text
+data/flux/assets/docs/inpainting.png filter=lfs diff=lfs merge=lfs -text
+data/flux/assets/docs/outpainting.png filter=lfs diff=lfs merge=lfs -text
+data/flux/assets/grid.jpg filter=lfs diff=lfs merge=lfs -text
+data/flux/assets/schnell_grid.jpg filter=lfs diff=lfs merge=lfs -text
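The `.gitattributes` hunk above routes eight image assets through Git LFS. As a rough illustration that is not part of the commit, the sketch below lists which patterns in `.gitattributes`-style text carry `filter=lfs`. The helper name `lfs_patterns` is hypothetical, the `*.md` sample line is made up for contrast, and the parsing assumes patterns contain no spaces.

```python
def lfs_patterns(gitattributes_text: str) -> list:
    """Return the path patterns whose attribute list includes filter=lfs."""
    patterns = []
    for line in gitattributes_text.splitlines():
        line = line.strip()
        # Skip blank lines and comments.
        if not line or line.startswith("#"):
            continue
        # First token is the pattern, the rest are attributes
        # (assumption: the pattern itself contains no whitespace).
        pattern, *attrs = line.split()
        if "filter=lfs" in attrs:
            patterns.append(pattern)
    return patterns


sample = """\
*.zip filter=lfs diff=lfs merge=lfs -text
data/flux/assets/cup.png filter=lfs diff=lfs merge=lfs -text
*.md text
"""
# The last sample line is a hypothetical non-LFS entry, added only for contrast.
print(lfs_patterns(sample))
```

Running it on the repository's real `.gitattributes` would give a quick check that each large asset in the file list is covered by an LFS rule.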

data/README.md

ADDED

|

@@ -0,0 +1,87 @@


+# FLUX
+by Black Forest Labs: https://blackforestlabs.ai. Documentation for our API can be found here: [docs.bfl.ml](https://docs.bfl.ml/).
+
+
+
+This repo contains minimal inference code to run image generation & editing with our Flux models.
+
+## Local installation
+
+```bash
+cd $HOME && git clone https://github.com/black-forest-labs/flux
+cd $HOME/flux
+python3.10 -m venv .venv
+source .venv/bin/activate
+pip install -e ".[all]"
+```
+
+### Models
+
+We are offering an extensive suite of models. For more information about the individual models, please refer to the link under **Usage**.
+
+| Name | Usage | HuggingFace repo | License |
+| --- | --- | --- | --- |
+| `FLUX.1 [schnell]` | [Text to Image](docs/text-to-image.md) | https://huggingface.co/black-forest-labs/FLUX.1-schnell | [apache-2.0](model_licenses/LICENSE-FLUX1-schnell) |
+| `FLUX.1 [dev]` | [Text to Image](docs/text-to-image.md) | https://huggingface.co/black-forest-labs/FLUX.1-dev | [FLUX.1-dev Non-Commercial License](model_licenses/LICENSE-FLUX1-dev) |
+| `FLUX.1 Fill [dev]` | [In/Out-painting](docs/fill.md) | https://huggingface.co/black-forest-labs/FLUX.1-Fill-dev | [FLUX.1-dev Non-Commercial License](model_licenses/LICENSE-FLUX1-dev) |
+| `FLUX.1 Canny [dev]` | [Structural Conditioning](docs/structural-conditioning.md) | https://huggingface.co/black-forest-labs/FLUX.1-Canny-dev | [FLUX.1-dev Non-Commercial License](model_licenses/LICENSE-FLUX1-dev) |
+| `FLUX.1 Depth [dev]` | [Structural Conditioning](docs/structural-conditioning.md) | https://huggingface.co/black-forest-labs/FLUX.1-Depth-dev | [FLUX.1-dev Non-Commercial License](model_licenses/LICENSE-FLUX1-dev) |
+| `FLUX.1 Canny [dev] LoRA` | [Structural Conditioning](docs/structural-conditioning.md) | https://huggingface.co/black-forest-labs/FLUX.1-Canny-dev-lora | [FLUX.1-dev Non-Commercial License](model_licenses/LICENSE-FLUX1-dev) |
+| `FLUX.1 Depth [dev] LoRA` | [Structural Conditioning](docs/structural-conditioning.md) | https://huggingface.co/black-forest-labs/FLUX.1-Depth-dev-lora | [FLUX.1-dev Non-Commercial License](model_licenses/LICENSE-FLUX1-dev) |
+| `FLUX.1 Redux [dev]` | [Image variation](docs/image-variation.md) | https://huggingface.co/black-forest-labs/FLUX.1-Redux-dev | [FLUX.1-dev Non-Commercial License](model_licenses/LICENSE-FLUX1-dev) |
+| `FLUX.1 [pro]` | [Text to Image](docs/text-to-image.md) | [Available in our API.](https://docs.bfl.ml/) | |
+| `FLUX1.1 [pro]` | [Text to Image](docs/text-to-image.md) | [Available in our API.](https://docs.bfl.ml/) | |
+| `FLUX1.1 [pro] Ultra/raw` | [Text to Image](docs/text-to-image.md) | [Available in our API.](https://docs.bfl.ml/) | |
+| `FLUX.1 Fill [pro]` | [In/Out-painting](docs/fill.md) | [Available in our API.](https://docs.bfl.ml/) | |
+| `FLUX.1 Canny [pro]` | [Structural Conditioning](docs/structural-conditioning.md) | [Available in our API.](https://docs.bfl.ml/) | |
+| `FLUX.1 Depth [pro]` | [Structural Conditioning](docs/structural-conditioning.md) | [Available in our API.](https://docs.bfl.ml/) | |
+| `FLUX1.1 Redux [pro]` | [Image variation](docs/image-variation.md) | [Available in our API.](https://docs.bfl.ml/) | |
+| `FLUX1.1 Redux [pro] Ultra` | [Image variation](docs/image-variation.md) | [Available in our API.](https://docs.bfl.ml/) | |
+
+The weights of the autoencoder are also released under [apache-2.0](https://huggingface.co/datasets/choosealicense/licenses/blob/main/markdown/apache-2.0.md) and can be found in the HuggingFace repos above.
+
+## API usage
+
+Our API offers access to our models. It is documented here:
+[docs.bfl.ml](https://docs.bfl.ml/).
+
+In this repository we also offer an easy Python interface. To use this, you
+first need to register with the API on [api.bfl.ml](https://api.bfl.ml/), and
+create a new API key.
+
+To use the API key either run `export BFL_API_KEY=<your_key_here>` or provide
+it via the `api_key=<your_key_here>` parameter. It is also expected that you
+have installed the package as above.
+
+Usage from Python:
+
+```python
+from flux.api import ImageRequest
+
+# this will create an api request directly but not block until the generation is finished
+request = ImageRequest("A beautiful beach", name="flux.1.1-pro")
+# or: request = ImageRequest("A beautiful beach", name="flux.1.1-pro", api_key="your_key_here")
+
+# any of the following will block until the generation is finished
+request.url
+# -> https:<...>/sample.jpg
+request.bytes
+# -> b"..." bytes for the generated image
+request.save("outputs/api.jpg")
+# saves the sample to local storage
+request.image
+# -> a PIL image
+```
+
+Usage from the command line:
+
+```bash
+$ python -m flux.api --prompt="A beautiful beach" url
+https:<...>/sample.jpg
+
+# generate and save the result
+$ python -m flux.api --prompt="A beautiful beach" save outputs/api
+
+# open the image directly
+$ python -m flux.api --prompt="A beautiful beach" image show
+```
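The README above names two ways to supply the API key: the `BFL_API_KEY` environment variable or an explicit `api_key` argument. The following is a minimal sketch of that precedence in caller code. The helper `resolve_api_key` is hypothetical and says nothing about how `flux.api` resolves the key internally.

```python
import os
from typing import Optional


def resolve_api_key(api_key: Optional[str] = None) -> str:
    """Hypothetical helper: prefer an explicit key, else fall back to BFL_API_KEY."""
    key = api_key if api_key is not None else os.environ.get("BFL_API_KEY")
    if not key:
        # Fail early with a readable message instead of sending an unauthenticated request.
        raise ValueError("No API key: pass api_key=... or export BFL_API_KEY")
    return key
```

The resolved value could then be passed on through the `api_key=` parameter shown in the README, for example `ImageRequest("A beautiful beach", name="flux.1.1-pro", api_key=resolve_api_key())`.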

data/flux/.github/workflows/ci.yaml

ADDED

|

@@ -0,0 +1,20 @@


+name: CI
+on: push
+jobs:
+  lint:
+    runs-on: ubuntu-latest
+    steps:
+      - uses: actions/checkout@v2
+      - uses: actions/setup-python@v2
+        with:
+          python-version: "3.10"
+      - name: Install dependencies
+        run: |
+          python -m pip install --upgrade pip
+          pip install ruff==0.6.8
+      - name: Run Ruff
+        run: ruff check --output-format=github .
+      - name: Check imports
+        run: ruff check --select I --output-format=github .
+      - name: Check formatting
+        run: ruff format --check .
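The workflow above runs three Ruff commands on every push. To run the same checks before pushing, one option is a small local wrapper such as the sketch below. It is not part of the commit: the names `RUFF_STEPS` and `run_lint_steps` are hypothetical, and it assumes `ruff` is already installed and on `PATH`.

```python
import subprocess

# The three commands are copied from the workflow's "run" steps.
RUFF_STEPS = [
    ("Run Ruff", ["ruff", "check", "--output-format=github", "."]),
    ("Check imports", ["ruff", "check", "--select", "I", "--output-format=github", "."]),
    ("Check formatting", ["ruff", "format", "--check", "."]),
]


def run_lint_steps(runner=subprocess.run) -> bool:
    """Run every step in order; return True only if all commands exit with 0."""
    ok = True
    for name, cmd in RUFF_STEPS:
        result = runner(cmd)
        print(f"{name}: exit code {result.returncode}")
        ok = ok and result.returncode == 0
    return ok
```

Calling `run_lint_steps()` from the repository root mirrors the CI job. The `runner` parameter exists only so the function can be exercised without invoking Ruff.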

data/flux/.gitignore

ADDED

|

@@ -0,0 +1,230 @@


+# Created by https://www.toptal.com/developers/gitignore/api/linux,windows,macos,visualstudiocode,python
+# Edit at https://www.toptal.com/developers/gitignore?templates=linux,windows,macos,visualstudiocode,python
+
+### Linux ###
+*~
+
+# temporary files which can be created if a process still has a handle open of a deleted file
+.fuse_hidden*
+
+# KDE directory preferences
+.directory
+
+# Linux trash folder which might appear on any partition or disk
+.Trash-*
+
+# .nfs files are created when an open file is removed but is still being accessed
+.nfs*
+
+### macOS ###
+# General
+.DS_Store
+.AppleDouble
+.LSOverride
+
+# Icon must end with two \r
+Icon
+
+
+# Thumbnails
+._*
+
+# Files that might appear in the root of a volume
+.DocumentRevisions-V100
+.fseventsd
+.Spotlight-V100
+.TemporaryItems
+.Trashes
+.VolumeIcon.icns
+.com.apple.timemachine.donotpresent
+
+# Directories potentially created on remote AFP share
+.AppleDB
+.AppleDesktop
+Network Trash Folder
+Temporary Items
+.apdisk
+
+### Python ###
+# Byte-compiled / optimized / DLL files
+__pycache__/
+*.py[cod]
+*$py.class
+
+# C extensions
+*.so
+
+# Distribution / packaging
+.Python
+build/
+develop-eggs/
+dist/
+downloads/
+eggs/
+.eggs/
+lib/
+lib64/
+parts/
+sdist/
+var/
+wheels/
+share/python-wheels/
+*.egg-info/
+.installed.cfg
+*.egg
+MANIFEST
+
+# PyInstaller
+# Usually these files are written by a python script from a template
+# before PyInstaller builds the exe, so as to inject date/other infos into it.
+*.manifest
+*.spec
+
+# Installer logs
+pip-log.txt
+pip-delete-this-directory.txt
+
+# Unit test / coverage reports
+htmlcov/
+.tox/
+.nox/
+.coverage
+.coverage.*
+.cache
+nosetests.xml
+coverage.xml
+*.cover
+*.py,cover
+.hypothesis/
+.pytest_cache/
+cover/
+
+# Translations
+*.mo
+*.pot
+
+# Django stuff:
+*.log
+local_settings.py
+db.sqlite3
+db.sqlite3-journal
+
+# Flask stuff:
+instance/
+.webassets-cache
+
+# Scrapy stuff:
+.scrapy
+
+# Sphinx documentation
+docs/_build/
+
+# PyBuilder
+.pybuilder/
+target/
+
+# Jupyter Notebook
+.ipynb_checkpoints
+
+# IPython
+profile_default/
+ipython_config.py
+
+# pyenv
+# For a library or package, you might want to ignore these files since the code is
+# intended to run in multiple environments; otherwise, check them in:
+# .python-version
+
+# pipenv
+# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
+# However, in case of collaboration, if having platform-specific dependencies or dependencies
+# having no cross-platform support, pipenv may install dependencies that don't work, or not
+# install all needed dependencies.
+#Pipfile.lock
+
+# PEP 582; used by e.g. github.com/David-OConnor/pyflow
+__pypackages__/
+
+# Celery stuff
+celerybeat-schedule
+celerybeat.pid
+
+# SageMath parsed files
+*.sage.py
+
+# Environments
+.env
+.venv
+env/
+venv/
+ENV/
+env.bak/
+venv.bak/
+
+# Spyder project settings
+.spyderproject
+.spyproject
+
+# Rope project settings
+.ropeproject
+
+# mkdocs documentation
+/site
+
+# mypy
+.mypy_cache/
+.dmypy.json
+dmypy.json
+
+# Pyre type checker
+.pyre/
+
+# pytype static type analyzer
+.pytype/
+
+# Cython debug symbols
+cython_debug/
+
+### VisualStudioCode ###
+.vscode/*
+!.vscode/settings.json
+!.vscode/tasks.json
+!.vscode/launch.json
+!.vscode/extensions.json
+*.code-workspace
+
+# Local History for Visual Studio Code
+.history/
+
+### VisualStudioCode Patch ###
+# Ignore all local history of files
+.history
+.ionide
+
+### Windows ###
+# Windows thumbnail cache files
+Thumbs.db
+Thumbs.db:encryptable
+ehthumbs.db
+ehthumbs_vista.db
+
+# Dump file
+*.stackdump
+
+# Folder config file
+[Dd]esktop.ini
+
+# Recycle Bin used on file shares
+$RECYCLE.BIN/
+
+# Windows Installer files
+*.cab
+*.msi
+*.msix
+*.msm
+*.msp
+
+# Windows shortcuts
+*.lnk
+
+# End of https://www.toptal.com/developers/gitignore/api/linux,windows,macos,visualstudiocode,python
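Several entries in the `.gitignore` above use shell-style character classes, such as `*.py[cod]` and `[Dd]esktop.ini`. As a rough illustration of how those simple globs behave, the sketch below checks a few file names with Python's `fnmatch` module. This is only an approximation: Git's own matching adds rules for trailing slashes, leading slashes, `!` negation, and `**` that are not modelled here, and the helper name `matches` is hypothetical.

```python
from fnmatch import fnmatchcase


def matches(pattern: str, filename: str) -> bool:
    """Case-sensitive glob match for simple, slash-free ignore patterns."""
    return fnmatchcase(filename, pattern)


# (pattern, file name) pairs; the patterns are taken from the .gitignore above.
examples = [
    ("*.py[cod]", "module.pyc"),
    ("*.py[cod]", "module.py"),
    ("[Dd]esktop.ini", "Desktop.ini"),
    ("*.egg", "flux.egg"),
]
for pattern, filename in examples:
    print(f"{pattern!r} vs {filename!r}: {matches(pattern, filename)}")
```

Note that `*.py[cod]` needs exactly one of `c`, `o`, or `d` after `.py`, so plain `.py` sources are not ignored.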

data/flux/LICENSE

ADDED

|

@@ -0,0 +1,201 @@


+Apache License
+Version 2.0, January 2004
+http://www.apache.org/licenses/
+
+TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION
+
+1. Definitions.
+
+"License" shall mean the terms and conditions for use, reproduction,
+and distribution as defined by Sections 1 through 9 of this document.
+
+"Licensor" shall mean the copyright owner or entity authorized by
+the copyright owner that is granting the License.
+
+"Legal Entity" shall mean the union of the acting entity and all
+other entities that control, are controlled by, or are under common
+control with that entity. For the purposes of this definition,
+"control" means (i) the power, direct or indirect, to cause the
+direction or management of such entity, whether by contract or
+otherwise, or (ii) ownership of fifty percent (50%) or more of the
+outstanding shares, or (iii) beneficial ownership of such entity.
+
+"You" (or "Your") shall mean an individual or Legal Entity
+exercising permissions granted by this License.
+
+"Source" form shall mean the preferred form for making modifications,
+including but not limited to software source code, documentation
+source, and configuration files.
+
+"Object" form shall mean any form resulting from mechanical
+transformation or translation of a Source form, including but
+not limited to compiled object code, generated documentation,
+and conversions to other media types.
+
+"Work" shall mean the work of authorship, whether in Source or
+Object form, made available under the License, as indicated by a
+copyright notice that is included in or attached to the work
+(an example is provided in the Appendix below).
+
+"Derivative Works" shall mean any work, whether in Source or Object
+form, that is based on (or derived from) the Work and for which the
+editorial revisions, annotations, elaborations, or other modifications
+represent, as a whole, an original work of authorship. For the purposes
+of this License, Derivative Works shall not include works that remain
+separable from, or merely link (or bind by name) to the interfaces of,
+the Work and Derivative Works thereof.
+
+"Contribution" shall mean any work of authorship, including
+the original version of the Work and any modifications or additions
+to that Work or Derivative Works thereof, that is intentionally
+submitted to Licensor for inclusion in the Work by the copyright owner
+or by an individual or Legal Entity authorized to submit on behalf of
+the copyright owner. For the purposes of this definition, "submitted"
+means any form of electronic, verbal, or written communication sent
+to the Licensor or its representatives, including but not limited to
+communication on electronic mailing lists, source code control systems,
+and issue tracking systems that are managed by, or on behalf of, the
+Licensor for the purpose of discussing and improving the Work, but
+excluding communication that is conspicuously marked or otherwise
+designated in writing by the copyright owner as "Not a Contribution."
+
+"Contributor" shall mean Licensor and any individual or Legal Entity
+on behalf of whom a Contribution has been received by Licensor and
+subsequently incorporated within the Work.
+
+2. Grant of Copyright License. Subject to the terms and conditions of
+this License, each Contributor hereby grants to You a perpetual,
+worldwide, non-exclusive, no-charge, royalty-free, irrevocable
+copyright license to reproduce, prepare Derivative Works of,
+publicly display, publicly perform, sublicense, and distribute the
+Work and such Derivative Works in Source or Object form.
+
+3. Grant of Patent License. Subject to the terms and conditions of
+this License, each Contributor hereby grants to You a perpetual,
+worldwide, non-exclusive, no-charge, royalty-free, irrevocable
+(except as stated in this section) patent license to make, have made,
+use, offer to sell, sell, import, and otherwise transfer the Work,
+where such license applies only to those patent claims licensable
+by such Contributor that are necessarily infringed by their
+Contribution(s) alone or by combination of their Contribution(s)
+with the Work to which such Contribution(s) was submitted. If You
+institute patent litigation against any entity (including a
+cross-claim or counterclaim in a lawsuit) alleging that the Work
+or a Contribution incorporated within the Work constitutes direct
+or contributory patent infringement, then any patent licenses
+granted to You under this License for that Work shall terminate
+as of the date such litigation is filed.
+
+4. Redistribution. You may reproduce and distribute copies of the
+Work or Derivative Works thereof in any medium, with or without
+modifications, and in Source or Object form, provided that You
+meet the following conditions:
+
+(a) You must give any other recipients of the Work or
+Derivative Works a copy of this License; and
+
+(b) You must cause any modified files to carry prominent notices
+stating that You changed the files; and
+
+(c) You must retain, in the Source form of any Derivative Works
+that You distribute, all copyright, patent, trademark, and
+attribution notices from the Source form of the Work,
+excluding those notices that do not pertain to any part of
+the Derivative Works; and
+
+(d) If the Work includes a "NOTICE" text file as part of its
+distribution, then any Derivative Works that You distribute must
+include a readable copy of the attribution notices contained
+within such NOTICE file, excluding those notices that do not
+pertain to any part of the Derivative Works, in at least one
+of the following places: within a NOTICE text file distributed
+as part of the Derivative Works; within the Source form or
+documentation, if provided along with the Derivative Works; or,
+within a display generated by the Derivative Works, if and
+wherever such third-party notices normally appear. The contents
+of the NOTICE file are for informational purposes only and
+do not modify the License. You may add Your own attribution
+notices within Derivative Works that You distribute, alongside
+or as an addendum to the NOTICE text from the Work, provided
+that such additional attribution notices cannot be construed
+as modifying the License.
+
+You may add Your own copyright statement to Your modifications and
+may provide additional or different license terms and conditions
+for use, reproduction, or distribution of Your modifications, or
+for any such Derivative Works as a whole, provided Your use,
+reproduction, and distribution of the Work otherwise complies with
+the conditions stated in this License.
+
+5. Submission of Contributions. Unless You explicitly state otherwise,
+any Contribution intentionally submitted for inclusion in the Work
+by You to the Licensor shall be under the terms and conditions of
+this License, without any additional terms or conditions.
+Notwithstanding the above, nothing herein shall supersede or modify
+the terms of any separate license agreement you may have executed
+with Licensor regarding such Contributions.
+
+6. Trademarks. This License does not grant permission to use the trade
+names, trademarks, service marks, or product names of the Licensor,
+except as required for reasonable and customary use in describing the
+origin of the Work and reproducing the content of the NOTICE file.
+
+7. Disclaimer of Warranty. Unless required by applicable law or
+agreed to in writing, Licensor provides the Work (and each
+Contributor provides its Contributions) on an "AS IS" BASIS,
+WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
+implied, including, without limitation, any warranties or conditions
+of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
+PARTICULAR PURPOSE. You are solely responsible for determining the
+appropriateness of using or redistributing the Work and assume any
+risks associated with Your exercise of permissions under this License.
+
+8. Limitation of Liability. In no event and under no legal theory,
+whether in tort (including negligence), contract, or otherwise,
+unless required by applicable law (such as deliberate and grossly
+negligent acts) or agreed to in writing, shall any Contributor be
+liable to You for damages, including any direct, indirect, special,
+incidental, or consequential damages of any character arising as a
+result of this License or out of the use or inability to use the
+Work (including but not limited to damages for loss of goodwill,
+work stoppage, computer failure or malfunction, or any and all
+other commercial damages or losses), even if such Contributor
+has been advised of the possibility of such damages.
+
+9. Accepting Warranty or Additional Liability. While redistributing
+the Work or Derivative Works thereof, You may choose to offer,
+and charge a fee for, acceptance of support, warranty, indemnity,
+or other liability obligations and/or rights consistent with this
+License. However, in accepting such obligations, You may act only
+on Your own behalf and on Your sole responsibility, not on behalf
+of any other Contributor, and only if You agree to indemnify,
+defend, and hold each Contributor harmless for any liability
+incurred by, or claims asserted against, such Contributor by reason
+of your accepting any such warranty or additional liability.

|

| 175 |

+

|

| 176 |

+

END OF TERMS AND CONDITIONS

|

| 177 |

+

|

| 178 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 179 |

+

|

| 180 |

+

To apply the Apache License to your work, attach the following

|

| 181 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 182 |

+

replaced with your own identifying information. (Don't include

|

| 183 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 184 |

+

comment syntax for the file format. We also recommend that a

|

| 185 |

+

file or class name and description of purpose be included on the

|

| 186 |

+

same "printed page" as the copyright notice for easier

|

| 187 |

+

identification within third-party archives.

|

| 188 |

+

|

| 189 |

+

Copyright [yyyy] [name of copyright owner]

|

| 190 |

+

|

| 191 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 192 |

+

you may not use this file except in compliance with the License.

|

| 193 |

+

You may obtain a copy of the License at

|

| 194 |

+

|

| 195 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 196 |

+

|

| 197 |

+

Unless required by applicable law or agreed to in writing, software

|

| 198 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 199 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 200 |

+

See the License for the specific language governing permissions and

|

| 201 |

+

limitations under the License.

|

data/flux/README.md

ADDED

|

@@ -0,0 +1,87 @@

|


| 1 |

+

# FLUX

|

| 2 |

+

by Black Forest Labs: https://blackforestlabs.ai. Documentation for our API can be found here: [docs.bfl.ml](https://docs.bfl.ml/).

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

This repo contains minimal inference code to run image generation & editing with our Flux models.

|

| 7 |

+

|

| 8 |

+

## Local installation

|

| 9 |

+

|

| 10 |

+

```bash

|

| 11 |

+

cd $HOME && git clone https://github.com/black-forest-labs/flux

|

| 12 |

+

cd $HOME/flux

|

| 13 |

+

python3.10 -m venv .venv

|

| 14 |

+

source .venv/bin/activate

|

| 15 |

+

pip install -e ".[all]"

|

| 16 |

+

```

|

| 17 |

+

|

| 18 |

+

### Models

|

| 19 |

+

|

| 20 |

+

We offer an extensive suite of models. For more information about the individual models, please refer to the links under **Usage**.

|

| 21 |

+

|

| 22 |

+

| Name | Usage | HuggingFace repo | License |

|

| 23 |

+

| --------------------------- | ---------------------------------------------------------- | ------------------------------------------------------------- | --------------------------------------------------------------------- |

|

| 24 |

+

| `FLUX.1 [schnell]` | [Text to Image](docs/text-to-image.md) | https://huggingface.co/black-forest-labs/FLUX.1-schnell | [apache-2.0](model_licenses/LICENSE-FLUX1-schnell) |

|

| 25 |

+

| `FLUX.1 [dev]` | [Text to Image](docs/text-to-image.md) | https://huggingface.co/black-forest-labs/FLUX.1-dev | [FLUX.1-dev Non-Commercial License](model_licenses/LICENSE-FLUX1-dev) |

|

| 26 |

+

| `FLUX.1 Fill [dev]` | [In/Out-painting](docs/fill.md) | https://huggingface.co/black-forest-labs/FLUX.1-Fill-dev | [FLUX.1-dev Non-Commercial License](model_licenses/LICENSE-FLUX1-dev) |

|

| 27 |

+

| `FLUX.1 Canny [dev]` | [Structural Conditioning](docs/structural-conditioning.md) | https://huggingface.co/black-forest-labs/FLUX.1-Canny-dev | [FLUX.1-dev Non-Commercial License](model_licenses/LICENSE-FLUX1-dev) |

|

| 28 |

+

| `FLUX.1 Depth [dev]` | [Structural Conditioning](docs/structural-conditioning.md) | https://huggingface.co/black-forest-labs/FLUX.1-Depth-dev | [FLUX.1-dev Non-Commercial License](model_licenses/LICENSE-FLUX1-dev) |

|

| 29 |

+

| `FLUX.1 Canny [dev] LoRA` | [Structural Conditioning](docs/structural-conditioning.md) | https://huggingface.co/black-forest-labs/FLUX.1-Canny-dev-lora | [FLUX.1-dev Non-Commercial License](model_licenses/LICENSE-FLUX1-dev) |

|

| 30 |

+

| `FLUX.1 Depth [dev] LoRA` | [Structural Conditioning](docs/structural-conditioning.md) | https://huggingface.co/black-forest-labs/FLUX.1-Depth-dev-lora | [FLUX.1-dev Non-Commercial License](model_licenses/LICENSE-FLUX1-dev) |

|

| 31 |

+

| `FLUX.1 Redux [dev]` | [Image variation](docs/image-variation.md) | https://huggingface.co/black-forest-labs/FLUX.1-Redux-dev | [FLUX.1-dev Non-Commercial License](model_licenses/LICENSE-FLUX1-dev) |

|

| 32 |

+

| `FLUX.1 [pro]` | [Text to Image](docs/text-to-image.md) | [Available in our API.](https://docs.bfl.ml/) |

|

| 33 |

+

| `FLUX1.1 [pro]` | [Text to Image](docs/text-to-image.md) | [Available in our API.](https://docs.bfl.ml/) |

|

| 34 |

+

| `FLUX1.1 [pro] Ultra/raw` | [Text to Image](docs/text-to-image.md) | [Available in our API.](https://docs.bfl.ml/) |

|

| 35 |

+

| `FLUX.1 Fill [pro]` | [In/Out-painting](docs/fill.md) | [Available in our API.](https://docs.bfl.ml/) |

|

| 36 |

+

| `FLUX.1 Canny [pro]` | [Structural Conditioning](docs/structural-conditioning.md) | [Available in our API.](https://docs.bfl.ml/) |

|

| 37 |

+

| `FLUX.1 Depth [pro]` | [Structural Conditioning](docs/structural-conditioning.md) | [Available in our API.](https://docs.bfl.ml/) |

|

| 38 |

+

| `FLUX1.1 Redux [pro]` | [Image variation](docs/image-variation.md) | [Available in our API.](https://docs.bfl.ml/) |

|

| 39 |

+

| `FLUX1.1 Redux [pro] Ultra` | [Image variation](docs/image-variation.md) | [Available in our API.](https://docs.bfl.ml/) |

|

| 40 |

+

|

| 41 |

+

The weights of the autoencoder are also released under [apache-2.0](https://huggingface.co/datasets/choosealicense/licenses/blob/main/markdown/apache-2.0.md) and can be found in the HuggingFace repos above.

|

| 42 |

+

|

| 43 |

+

## API usage

|

| 44 |

+

|

| 45 |

+

Our API offers access to our models. It is documented here:

|

| 46 |

+

[docs.bfl.ml](https://docs.bfl.ml/).

|

| 47 |

+

|

| 48 |

+

In this repository we also offer an easy Python interface. To use this, you

|

| 49 |

+

first need to register with the API on [api.bfl.ml](https://api.bfl.ml/), and

|

| 50 |

+

create a new API key.

|

| 51 |

+

|

| 52 |

+

To use the API key either run `export BFL_API_KEY=<your_key_here>` or provide

|

| 53 |

+

it via the `api_key=<your_key_here>` parameter. It is also expected that you

|

| 54 |

+

have installed the package as above.

|
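As a rough illustration of the two options just described, the sketch below resolves a key by preferring an explicit argument and falling back to the `BFL_API_KEY` environment variable. The helper `resolve_api_key` is hypothetical and is not part of `flux.api`; how the package itself performs this lookup is an assumption here.

```python
import os
from typing import Optional


def resolve_api_key(api_key: Optional[str] = None) -> str:
    """Hypothetical helper (not part of flux.api): choose which API key to use.

    An explicitly passed key wins; otherwise fall back to BFL_API_KEY.
    """
    if api_key is not None:
        return api_key
    env_key = os.environ.get("BFL_API_KEY")
    if env_key is None:
        # Neither source provided a key, so fail early with a clear message.
        raise ValueError("Pass api_key=... or export BFL_API_KEY first.")
    return env_key
```

Giving the explicit argument priority is a design choice of this sketch: it lets a single call override a key that was exported for the whole shell session.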

| 55 |

+

|

| 56 |

+

Usage from Python:

|

| 57 |

+

|

| 58 |

+

```python

|

| 59 |

+

from flux.api import ImageRequest

|

| 60 |

+

|

| 61 |

+

# this will create an api request directly but not block until the generation is finished

|

| 62 |

+

request = ImageRequest("A beautiful beach", name="flux.1.1-pro")

|

| 63 |

+

# or: request = ImageRequest("A beautiful beach", name="flux.1.1-pro", api_key="your_key_here")

|

| 64 |

+

|

| 65 |

+

# any of the following will block until the generation is finished

|

| 66 |

+

request.url

|

| 67 |

+

# -> https:<...>/sample.jpg

|

| 68 |

+

request.bytes

|

| 69 |

+

# -> b"..." bytes for the generated image

|

| 70 |

+

request.save("outputs/api.jpg")

|

| 71 |

+

# saves the sample to local storage

|

| 72 |

+

request.image

|

| 73 |

+

# -> a PIL image

|

| 74 |

+

```

|
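The comments in the Python block above describe a request that is submitted immediately but only blocks when a result is read. A minimal sketch of that general pattern, using a worker thread from the standard library, might look like the following. `DeferredResult` and `slow_job` are made-up names for illustration; this is not a description of how `ImageRequest` is implemented.

```python
import time
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable


class DeferredResult:
    """Hypothetical illustration: start work now, block only on access."""

    def __init__(self, job: Callable[[], str]) -> None:
        # submit() returns immediately; the job runs in a worker thread.
        self._executor = ThreadPoolExecutor(max_workers=1)
        self._future: Future = self._executor.submit(job)

    @property
    def value(self) -> str:
        # Reading the property blocks until the job has finished.
        return self._future.result()


def slow_job() -> str:
    time.sleep(0.1)  # stand-in for a remote generation call
    return "done"


deferred = DeferredResult(slow_job)  # returns right away
print(deferred.value)                # blocks briefly, then prints "done"
```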

| 75 |

+

|

| 76 |

+

Usage from the command line:

|

| 77 |

+

|

| 78 |

+

```bash

|

| 79 |

+

$ python -m flux.api --prompt="A beautiful beach" url

|

| 80 |

+

https:<...>/sample.jpg

|

| 81 |

+

|

| 82 |

+

# generate and save the result

|

| 83 |

+

$ python -m flux.api --prompt="A beautiful beach" save outputs/api

|

| 84 |

+

|

| 85 |

+

# open the image directly

|

| 86 |

+

$ python -m flux.api --prompt="A beautiful beach" image show

|

| 87 |

+

```

|

data/flux/assets/cup.png

ADDED

|


data/flux/assets/cup_mask.png

ADDED

|

data/flux/assets/dev_grid.jpg

ADDED

|


data/flux/assets/docs/canny.png

ADDED

|


data/flux/assets/docs/depth.png

ADDED

|


data/flux/assets/docs/inpainting.png

ADDED

|


data/flux/assets/docs/outpainting.png

ADDED

|

Git LFS Details

|

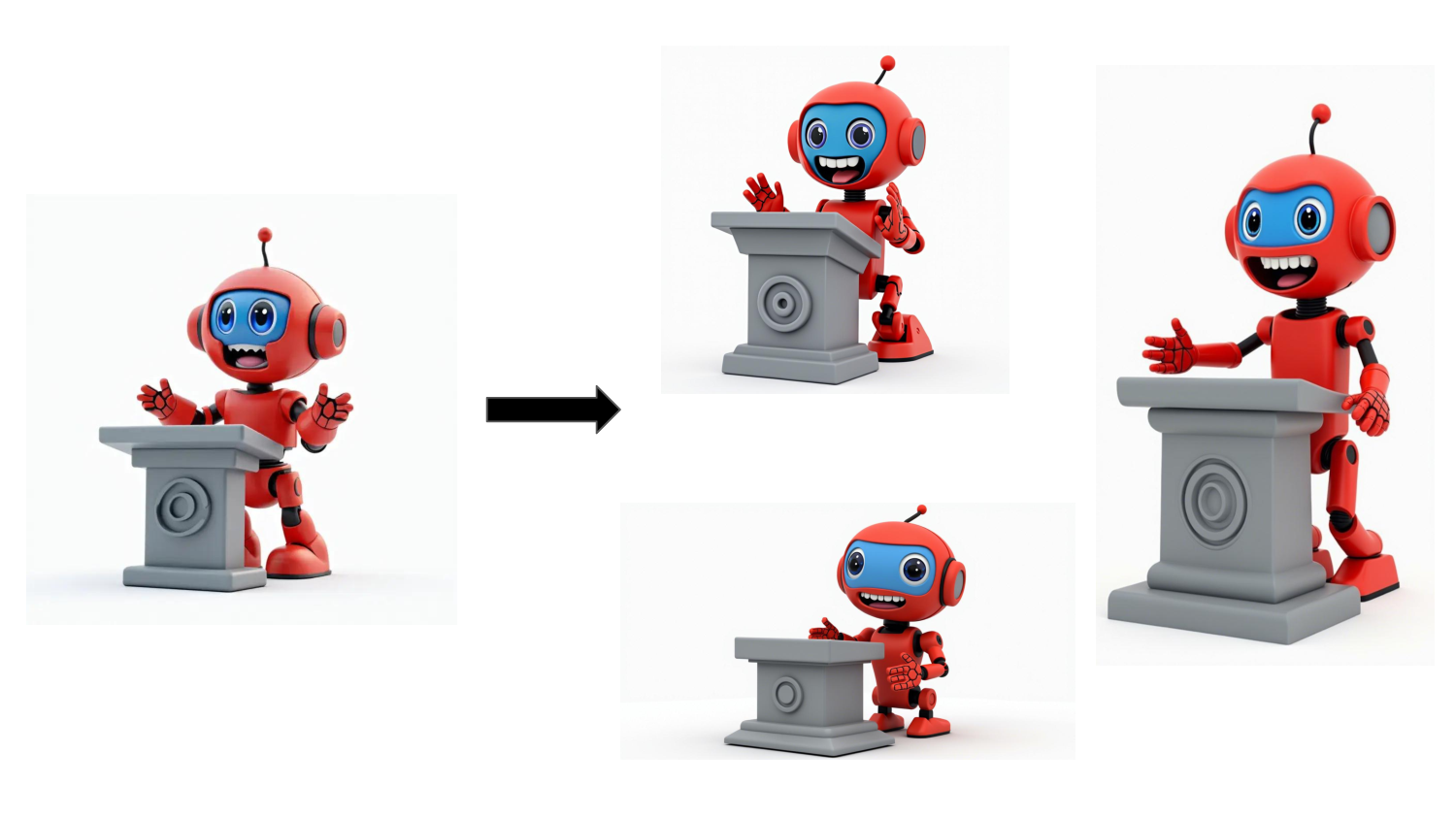

data/flux/assets/docs/redux.png

ADDED

|

data/flux/assets/grid.jpg

ADDED

|


data/flux/assets/robot.webp

ADDED

|

data/flux/assets/schnell_grid.jpg

ADDED

|


data/flux/demo_gr.py

ADDED

|

@@ -0,0 +1,247 @@

|


| 1 |

+

import os

|

| 2 |

+

import time

|

| 3 |

+

import uuid

|

| 4 |

+

|

| 5 |

+

import gradio as gr

|

| 6 |

+

import numpy as np

|

| 7 |

+

import torch

|

| 8 |

+

from einops import rearrange

|

| 9 |

+

from PIL import ExifTags, Image

|

| 10 |

+

from transformers import pipeline

|

| 11 |

+

|

| 12 |

+

from flux.cli import SamplingOptions

|

| 13 |

+

from flux.sampling import denoise, get_noise, get_schedule, prepare, unpack

|

| 14 |

+

from flux.util import configs, embed_watermark, load_ae, load_clip, load_flow_model, load_t5

|

| 15 |

+

|

| 16 |

+

NSFW_THRESHOLD = 0.85

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

def get_models(name: str, device: torch.device, offload: bool, is_schnell: bool):

|

| 20 |

+

    t5 = load_t5(device, max_length=256 if is_schnell else 512)

|

| 21 |

+

    clip = load_clip(device)

|

| 22 |

+

    model = load_flow_model(name, device="cpu" if offload else device)

|

| 23 |

+

    ae = load_ae(name, device="cpu" if offload else device)

|

| 24 |

+

    nsfw_classifier = pipeline("image-classification", model="Falconsai/nsfw_image_detection", device=device)

|

| 25 |

+

    return model, ae, t5, clip, nsfw_classifier

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

class FluxGenerator:

|

| 29 |

+

    def __init__(self, model_name: str, device: str, offload: bool):

|

| 30 |

+

        self.device = torch.device(device)

|

| 31 |

+

        self.offload = offload

|

| 32 |

+

        self.model_name = model_name

|

| 33 |

+

        self.is_schnell = model_name == "flux-schnell"

|

| 34 |

+

        self.model, self.ae, self.t5, self.clip, self.nsfw_classifier = get_models(

|

| 35 |

+

            model_name,

|

| 36 |

+

            device=self.device,

|

| 37 |

+

            offload=self.offload,

|

| 38 |

+

            is_schnell=self.is_schnell,

|

| 39 |

+

        )

|

| 40 |

+

|

| 41 |

+

    @torch.inference_mode()

|

| 42 |

+

    def generate_image(

|

| 43 |

+

        self,

|

| 44 |

+

        width,

|

| 45 |

+

        height,

|

| 46 |

+

        num_steps,

|

| 47 |

+

        guidance,

|

| 48 |

+

        seed,

|

| 49 |

+

        prompt,

|

| 50 |

+

        init_image=None,

|

| 51 |

+

        image2image_strength=0.0,

|

| 52 |

+

        add_sampling_metadata=True,

|

| 53 |

+

    ):

|

| 54 |

+

        seed = int(seed)

|

| 55 |

+

        if seed == -1:

|

| 56 |

+

            seed = None

|

| 57 |

+

|

| 58 |

+

        opts = SamplingOptions(

|

| 59 |

+

            prompt=prompt,

|

| 60 |

+

            width=width,

|

| 61 |

+

            height=height,

|

| 62 |

+

            num_steps=num_steps,

|

| 63 |

+

            guidance=guidance,

|

| 64 |

+

            seed=seed,

|

| 65 |

+

        )

|

| 66 |

+

|

| 67 |