seokju cho committed

Commit f8f62f3

1 Parent(s): 7722584

initial commit

This view is limited to 50 files because it contains too many changes.

- INSTALL.md +20 -0

- R-101.pkl +3 -0

- README.md +48 -12

- app.py +130 -0

- assets/fig1.png +0 -0

- cat_seg/__init__.py +19 -0

- cat_seg/__pycache__/__init__.cpython-38.pyc +0 -0

- cat_seg/__pycache__/cat_sam_model.cpython-38.pyc +0 -0

- cat_seg/__pycache__/cat_seg_model.cpython-38.pyc +0 -0

- cat_seg/__pycache__/cat_seg_panoptic.cpython-38.pyc +0 -0

- cat_seg/__pycache__/config.cpython-38.pyc +0 -0

- cat_seg/__pycache__/pancat_model.cpython-38.pyc +0 -0

- cat_seg/__pycache__/test_time_augmentation.cpython-38.pyc +0 -0

- cat_seg/cat_seg_model.py +386 -0

- cat_seg/config.py +93 -0

- cat_seg/data/__init__.py +2 -0

- cat_seg/data/__pycache__/__init__.cpython-38.pyc +0 -0

- cat_seg/data/dataset_mappers/__init__.py +1 -0

- cat_seg/data/dataset_mappers/__pycache__/__init__.cpython-38.pyc +0 -0

- cat_seg/data/dataset_mappers/__pycache__/detr_panoptic_dataset_mapper.cpython-38.pyc +0 -0

- cat_seg/data/dataset_mappers/__pycache__/mask_former_panoptic_dataset_mapper.cpython-38.pyc +0 -0

- cat_seg/data/dataset_mappers/__pycache__/mask_former_semantic_dataset_mapper.cpython-38.pyc +0 -0

- cat_seg/data/dataset_mappers/detr_panoptic_dataset_mapper.py +180 -0

- cat_seg/data/dataset_mappers/mask_former_panoptic_dataset_mapper.py +165 -0

- cat_seg/data/dataset_mappers/mask_former_semantic_dataset_mapper.py +186 -0

- cat_seg/data/datasets/__init__.py +8 -0

- cat_seg/data/datasets/__pycache__/__init__.cpython-38.pyc +0 -0

- cat_seg/data/datasets/__pycache__/register_ade20k_150.cpython-38.pyc +0 -0

- cat_seg/data/datasets/__pycache__/register_ade20k_847.cpython-38.pyc +0 -0

- cat_seg/data/datasets/__pycache__/register_ade_panoptic.cpython-38.pyc +0 -0

- cat_seg/data/datasets/__pycache__/register_coco_panoptic.cpython-38.pyc +0 -0

- cat_seg/data/datasets/__pycache__/register_coco_stuff.cpython-38.pyc +0 -0

- cat_seg/data/datasets/__pycache__/register_pascal_20.cpython-38.pyc +0 -0

- cat_seg/data/datasets/__pycache__/register_pascal_59.cpython-38.pyc +0 -0

- cat_seg/data/datasets/__pycache__/register_pascal_context.cpython-38.pyc +0 -0

- cat_seg/data/datasets/register_ade20k_150.py +28 -0

- cat_seg/data/datasets/register_ade20k_847.py +0 -0

- cat_seg/data/datasets/register_coco_stuff.py +216 -0

- cat_seg/data/datasets/register_pascal_20.py +53 -0

- cat_seg/data/datasets/register_pascal_59.py +81 -0

- cat_seg/modeling/__init__.py +3 -0

- cat_seg/modeling/__pycache__/__init__.cpython-38.pyc +0 -0

- cat_seg/modeling/__pycache__/criterion.cpython-38.pyc +0 -0

- cat_seg/modeling/__pycache__/matcher.cpython-38.pyc +0 -0

- cat_seg/modeling/backbone/__init__.py +1 -0

- cat_seg/modeling/backbone/__pycache__/__init__.cpython-38.pyc +0 -0

- cat_seg/modeling/backbone/__pycache__/image_encoder.cpython-38.pyc +0 -0

- cat_seg/modeling/backbone/__pycache__/swin.cpython-38.pyc +0 -0

- cat_seg/modeling/backbone/swin.py +768 -0

- cat_seg/modeling/heads/__init__.py +1 -0

INSTALL.md

ADDED

@@ -0,0 +1,20 @@
+## Installation
+
+### Requirements
+- Linux or macOS with Python ≥ 3.6
+- PyTorch ≥ 1.7 and [torchvision](https://github.com/pytorch/vision/) that matches the PyTorch installation.
+  Install them together at [pytorch.org](https://pytorch.org) to make sure of this. Note, please check
+  PyTorch version matches that is required by Detectron2.
+- Detectron2: follow [Detectron2 installation instructions](https://detectron2.readthedocs.io/tutorials/install.html).
+- OpenCV is optional but needed by demo and visualization
+- `pip install -r requirements.txt`
+
+An example of installation is shown below:
+
+```
+git clone https://github.com/~~~/CAT-Seg.git
+cd CAT-Seg
+conda create -n catseg python=3.8
+conda activate catseg
+pip install -r requirements.txt
+```
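Review note: the requirements listed in INSTALL.md can be sanity-checked before launching the demo. The sketch below is only an illustration, assuming the usual import names (`torch`, `torchvision`, `detectron2`, and `cv2` for OpenCV); the helper `missing_packages` is hypothetical and not part of this commit.

```python
import importlib.util

# Import names assumed from the requirements list; cv2 is the OpenCV module.
REQUIRED = ["torch", "torchvision", "detectron2"]
OPTIONAL = ["cv2"]


def missing_packages(names):
    """Return the subset of `names` that cannot be found on the import path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("missing required:", missing_packages(REQUIRED))
    print("missing optional:", missing_packages(OPTIONAL))
```

This only checks that the packages are importable; it does not verify that the PyTorch version matches what Detectron2 expects.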

R-101.pkl

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:1156c77bff95ecb027060b5c83391b45bf159acd7f5bf7eacb656be0c1f0ab55
+size 178666803

README.md

CHANGED

|

@@ -1,12 +1,48 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

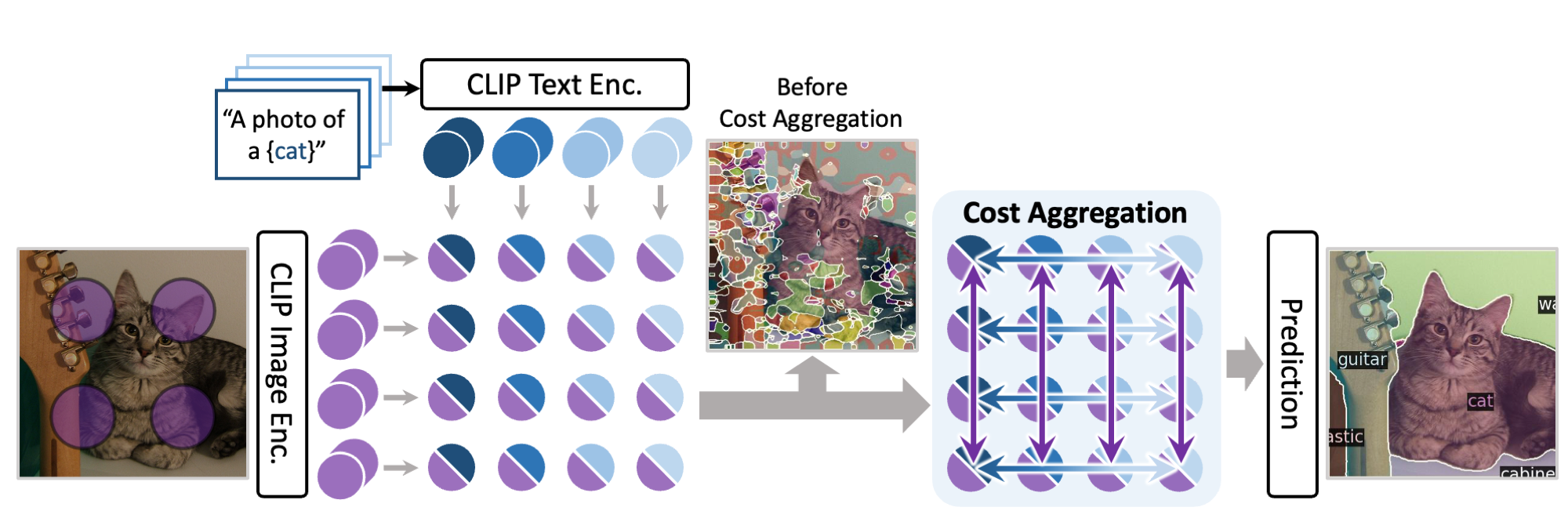

# CAT-Seg🐱: Cost Aggregation for Open-Vocabulary Semantic Segmentation

|

| 2 |

+

|

| 3 |

+

This is our official implementation of CAT-Seg🐱!

|

| 4 |

+

|

| 5 |

+

[[arXiv](#)] [[Project](#)]<br>

|

| 6 |

+

by [Seokju Cho](https://seokju-cho.github.io/)\*, [Heeseong Shin](https://github.com/hsshin98)\*, [Sunghwan Hong](https://sunghwanhong.github.io), Seungjun An, Seungjun Lee, [Anurag Arnab](https://anuragarnab.github.io), [Paul Hongsuck Seo](https://phseo.github.io), [Seungryong Kim](https://cvlab.korea.ac.kr)

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

## Introduction

|

| 10 |

+

|

| 11 |

+

We introduce cost aggregation to open-vocabulary semantic segmentation, which jointly aggregates both image and text modalities within the matching cost.

|

| 12 |

+

|

| 13 |

+

## Installation

|

| 14 |

+

Install required packages.

|

| 15 |

+

|

| 16 |

+

```bash

|

| 17 |

+

conda create --name catseg python=3.8

|

| 18 |

+

conda activate catseg

|

| 19 |

+

conda install pytorch==1.10.1 torchvision==0.11.2 torchaudio==0.10.1 cudatoolkit=11.3 -c pytorch -c conda-forge

|

| 20 |

+

pip install -r requirements.txt

|

| 21 |

+

```

|

| 22 |

+

|

| 23 |

+

## Data Preparation

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

## Training

|

| 27 |

+

### Preparation

|

| 28 |

+

you have to blah

|

| 29 |

+

### Training script

|

| 30 |

+

```bash

|

| 31 |

+

python train.py --config configs/eval/{a847 | pc459 | a150 | pc59 | pas20 | pas20b}.yaml

|

| 32 |

+

```

|

| 33 |

+

|

| 34 |

+

## Evaluation

|

| 35 |

+

```bash

|

| 36 |

+

python eval.py --config configs/eval/{a847 | pc459 | a150 | pc59 | pas20 | pas20b}.yaml

|

| 37 |

+

```

|

| 38 |

+

|

| 39 |

+

## Citing CAT-Seg🐱 :pray:

|

| 40 |

+

|

| 41 |

+

```BibTeX

|

| 42 |

+

@article{liang2022open,

|

| 43 |

+

title={Open-Vocabulary Semantic Segmentation with Mask-adapted CLIP},

|

| 44 |

+

author={Liang, Feng and Wu, Bichen and Dai, Xiaoliang and Li, Kunpeng and Zhao, Yinan and Zhang, Hang and Zhang, Peizhao and Vajda, Peter and Marculescu, Diana},

|

| 45 |

+

journal={arXiv preprint arXiv:2210.04150},

|

| 46 |

+

year={2022}

|

| 47 |

+

}

|

| 48 |

+

```

|
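Review note: the README introduction mentions a "matching cost" between image and text without showing what that object looks like. As a rough sketch, assuming the cost is the cosine similarity between dense image embeddings and per-class text embeddings (the README does not spell this out), the cost volume can be formed as below. The function name, shapes, and random inputs are illustrative assumptions, and the aggregation step itself is not shown.

```python
import numpy as np


def cost_volume(image_feats, text_feats):
    """Cosine similarity between every pixel embedding and every class embedding.

    image_feats: (H, W, D) dense image embeddings (hypothetical input).
    text_feats:  (K, D) one embedding per class name (hypothetical input).
    Returns an (H, W, K) array of matching costs in [-1, 1].
    """
    img = image_feats / np.linalg.norm(image_feats, axis=-1, keepdims=True)
    txt = text_feats / np.linalg.norm(text_feats, axis=-1, keepdims=True)
    return img @ txt.T


rng = np.random.default_rng(0)
cost = cost_volume(rng.normal(size=(4, 4, 8)), rng.normal(size=(3, 8)))
labels = cost.argmax(axis=-1)  # per-pixel class taken from the raw cost alone
```

Taking the argmax of the raw cost is the simplest possible readout; the point of aggregation, as the README describes it, is to refine this volume before the final prediction.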

app.py

ADDED

@@ -0,0 +1,130 @@
+# Copyright (c) Facebook, Inc. and its affiliates.
+# Modified by Bowen Cheng from: https://github.com/facebookresearch/detectron2/blob/master/demo/demo.py
+import argparse
+import glob
+import multiprocessing as mp
+import os
+#os.environ["CUDA_VISIBLE_DEVICES"] = ""
+try:
+    import detectron2
+except ModuleNotFoundError:
+    os.system('pip install git+https://github.com/facebookresearch/detectron2.git')
+
+try:
+    import segment_anything
+except ModuleNotFoundError:
+    os.system('pip install git+https://github.com/facebookresearch/segment-anything.git')
+
+# fmt: off
+import sys
+sys.path.insert(1, os.path.join(sys.path[0], '..'))
+# fmt: on
+
+import tempfile
+import time
+import warnings
+
+import cv2
+import numpy as np
+import tqdm
+
+from detectron2.config import get_cfg
+from detectron2.data.detection_utils import read_image
+from detectron2.projects.deeplab import add_deeplab_config
+from detectron2.utils.logger import setup_logger
+
+from cat_seg import add_cat_seg_config
+from demo.predictor import VisualizationDemo
+import gradio as gr
+import torch
+from matplotlib.backends.backend_agg import FigureCanvasAgg as fc
+
+# constants
+WINDOW_NAME = "MaskFormer demo"
+
+
+def setup_cfg(args):
+    # load config from file and command-line arguments
+    cfg = get_cfg()
+    add_deeplab_config(cfg)
+    add_cat_seg_config(cfg)
+    cfg.merge_from_file(args.config_file)
+    cfg.merge_from_list(args.opts)
+    if torch.cuda.is_available():
+        cfg.MODEL.DEVICE = "cuda"
+    cfg.freeze()
+    return cfg
+
+
+def get_parser():
+    parser = argparse.ArgumentParser(description="Detectron2 demo for builtin configs")
+    parser.add_argument(
+        "--config-file",
+        default="configs/vitl_swinb_384.yaml",
+        metavar="FILE",
+        help="path to config file",
+    )
+    parser.add_argument(
+        "--input",
+        nargs="+",
+        help="A list of space separated input images; "
+        "or a single glob pattern such as 'directory/*.jpg'",
+    )
+    parser.add_argument(
+        "--opts",
+        help="Modify config options using the command-line 'KEY VALUE' pairs",
+        default=(
+            [
+                "MODEL.WEIGHTS", "model_final_cls.pth",
+                "MODEL.SEM_SEG_HEAD.TRAIN_CLASS_JSON", "datasets/voc20.json",
+                "MODEL.SEM_SEG_HEAD.TEST_CLASS_JSON", "datasets/voc20.json",
+                "TEST.SLIDING_WINDOW", "True",
+                "MODEL.SEM_SEG_HEAD.POOLING_SIZES", "[1,1]",
+                "MODEL.PROMPT_ENSEMBLE_TYPE", "single",
+                "MODEL.DEVICE", "cpu",
+            ]),
+        nargs=argparse.REMAINDER,
+    )
+    return parser
+
+def save_masks(preds, text):
+    preds = preds['sem_seg'].argmax(dim=0).cpu().numpy() # C H W
+    for i, t in enumerate(text):
+        dir = f"mask_{t}.png"
+        mask = preds == i
+        cv2.imwrite(dir, mask * 255)
+
+def predict(image, text, model_type):
+    #import pdb; pdb.set_trace()
+    #use_sam = True #
+    use_sam = model_type != "CAT-Seg"
+
+    predictions, visualized_output = demo.run_on_image(image, text, use_sam)
+    #save_masks(predictions, text.split(','))
+    canvas = fc(visualized_output.fig)
+    canvas.draw()
+    out = np.frombuffer(canvas.tostring_rgb(), dtype='uint8').reshape(canvas.get_width_height()[::-1] + (3,))
+
+    return out[..., ::-1]
+
+if __name__ == "__main__":
+    args = get_parser().parse_args()
+    cfg = setup_cfg(args)
+    global demo
+    demo = VisualizationDemo(cfg)
+
+    iface = gr.Interface(
+        fn=predict,
+        inputs=[gr.Image(), gr.Textbox(placeholder='background, cat, person'), ], #gr.Radio(["CAT-Seg", "Segment Anycat"], value="CAT-Seg")],
+        outputs="image",
+        description="""## Segment Anything with CAT-Seg!
+Welcome to the Segment Anything with CAT-Seg!
+
+In this demo, we combine state-of-the-art open-vocabulary semantic segmentation model, CAT-Seg with SAM(Segment Anything) for semantically labelling mask predictions from SAM.
+
+Please note that this is an optimized version of the full model, and as such, its performance may be limited compared to the full model.
+
+Also, the demo might run on a CPU depending on the demand, so it may take a little time to process your image.
+
+To get started, simply upload an image and a comma-separated list of categories, and let the model work its magic!""")
+    iface.launch()
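Review note: `save_masks` in app.py turns per-class scores into one binary image per category by taking the argmax over the class axis and comparing it against each class index. The sketch below reproduces that step with NumPy only, so it runs without the model. The function name `masks_from_logits` and the toy scores are assumptions for illustration, and the explicit cast to `uint8` is an added choice, since image writers generally expect 8-bit data.

```python
import numpy as np


def masks_from_logits(sem_seg, class_names):
    """Split (C, H, W) class scores into one uint8 mask per class name."""
    winner = sem_seg.argmax(axis=0)  # (H, W) index of the best class per pixel
    return {
        name.strip(): (winner == i).astype(np.uint8) * 255
        for i, name in enumerate(class_names)
    }


scores = np.array([
    [[0.9, 0.1], [0.2, 0.3]],   # class 0: "background"
    [[0.1, 0.8], [0.7, 0.6]],   # class 1: "cat"
])
masks = masks_from_logits(scores, "background, cat".split(","))
```

Stripping whitespace from each name matters for the comma-separated text the demo accepts, because `"background, cat".split(",")` yields `" cat"` with a leading space.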

assets/fig1.png

ADDED


cat_seg/__init__.py

ADDED

@@ -0,0 +1,19 @@
+# Copyright (c) Facebook, Inc. and its affiliates.
+from . import data  # register all new datasets
+from . import modeling
+
+# config
+from .config import add_cat_seg_config
+
+# dataset loading
+from .data.dataset_mappers.detr_panoptic_dataset_mapper import DETRPanopticDatasetMapper
+from .data.dataset_mappers.mask_former_panoptic_dataset_mapper import (
+    MaskFormerPanopticDatasetMapper,
+)
+from .data.dataset_mappers.mask_former_semantic_dataset_mapper import (
+    MaskFormerSemanticDatasetMapper,
+)
+
+# models
+from .cat_seg_model import CATSeg
+from .test_time_augmentation import SemanticSegmentorWithTTA
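Review note: several imports in `cat_seg/__init__.py` exist for their side effects (the comment says "register all new datasets", and `cat_seg_model.py` decorates `CATSeg` with `@META_ARCH_REGISTRY.register()`). The sketch below shows the general decorator-registry pattern with a tiny stand-in class. `Registry`, `META_ARCH`, and `ToyModel` are hypothetical names for illustration and are not detectron2's implementation.

```python
class Registry:
    """Tiny name -> class registry; a stand-in for illustration only."""

    def __init__(self):
        self._items = {}

    def register(self):
        # Returns a decorator that records the class under its own name.
        def decorator(cls):
            self._items[cls.__name__] = cls
            return cls
        return decorator

    def get(self, name):
        return self._items[name]


META_ARCH = Registry()  # hypothetical stand-in, not detectron2's registry


@META_ARCH.register()
class ToyModel:
    pass
```

Because registration happens when the class body is executed, the module defining the class has to be imported somewhere before a config can refer to it by name, which is why the package `__init__` pulls these modules in.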

cat_seg/__pycache__/__init__.cpython-38.pyc

ADDED

Binary file (693 Bytes)

cat_seg/__pycache__/cat_sam_model.cpython-38.pyc

ADDED

Binary file (13.7 kB)

cat_seg/__pycache__/cat_seg_model.cpython-38.pyc

ADDED

Binary file (12.6 kB)

cat_seg/__pycache__/cat_seg_panoptic.cpython-38.pyc

ADDED

Binary file (10 kB)

cat_seg/__pycache__/config.cpython-38.pyc

ADDED

Binary file (2.39 kB)

cat_seg/__pycache__/pancat_model.cpython-38.pyc

ADDED

Binary file (11.4 kB)

cat_seg/__pycache__/test_time_augmentation.cpython-38.pyc

ADDED

Binary file (4.41 kB)

cat_seg/cat_seg_model.py

ADDED

@@ -0,0 +1,386 @@

+# Copyright (c) Facebook, Inc. and its affiliates.
+from typing import Tuple
+
+import torch
+from torch import nn
+from torch.nn import functional as F
+
+from detectron2.config import configurable
+from detectron2.data import MetadataCatalog
+from detectron2.modeling import META_ARCH_REGISTRY, build_backbone, build_sem_seg_head
+from detectron2.modeling.backbone import Backbone
+from detectron2.modeling.postprocessing import sem_seg_postprocess
+from detectron2.structures import ImageList
+from detectron2.utils.memory import _ignore_torch_cuda_oom
+
+import numpy as np
+from einops import rearrange
+from segment_anything import SamPredictor, sam_model_registry, SamAutomaticMaskGenerator
+
+@META_ARCH_REGISTRY.register()
+class CATSeg(nn.Module):
+    @configurable
+    def __init__(
+        self,
+        *,
+        backbone: Backbone,
+        sem_seg_head: nn.Module,
+        size_divisibility: int,
+        pixel_mean: Tuple[float],
+        pixel_std: Tuple[float],
+        clip_pixel_mean: Tuple[float],
+        clip_pixel_std: Tuple[float],
+        train_class_json: str,
+        test_class_json: str,
+        sliding_window: bool,
+        clip_finetune: str,
+        backbone_multiplier: float,
+        clip_pretrained: str,
+    ):
+        """
+        Args:
+            backbone: a backbone module, must follow detectron2's backbone interface
+            sem_seg_head: a module that predicts semantic segmentation from backbone features
+        """
+        super().__init__()
+        self.backbone = backbone
+        self.sem_seg_head = sem_seg_head
+        if size_divisibility < 0:
+            size_divisibility = self.backbone.size_divisibility
+        self.size_divisibility = size_divisibility
+
+        self.register_buffer("pixel_mean", torch.Tensor(pixel_mean).view(-1, 1, 1), False)
+        self.register_buffer("pixel_std", torch.Tensor(pixel_std).view(-1, 1, 1), False)
+        self.register_buffer("clip_pixel_mean", torch.Tensor(clip_pixel_mean).view(-1, 1, 1), False)
+        self.register_buffer("clip_pixel_std", torch.Tensor(clip_pixel_std).view(-1, 1, 1), False)
+
+        self.train_class_json = train_class_json
+        self.test_class_json = test_class_json
+
+        self.clip_finetune = clip_finetune
+        for name, params in self.sem_seg_head.predictor.clip_model.named_parameters():
+            if "visual" in name:
+                if clip_finetune == "prompt":
+                    params.requires_grad = True if "prompt" in name else False
+                elif clip_finetune == "attention":
+                    params.requires_grad = True if "attn" in name or "position" in name else False
+                elif clip_finetune == "full":
+                    params.requires_grad = True
+                else:
+                    params.requires_grad = False
+            else:
+                params.requires_grad = False
+
+        finetune_backbone = backbone_multiplier > 0.
+        for name, params in self.backbone.named_parameters():
+            if "norm0" in name:
+                params.requires_grad = False
+            else:
+                params.requires_grad = finetune_backbone
+
+        self.sliding_window = sliding_window
+        self.clip_resolution = (384, 384) if clip_pretrained == "ViT-B/16" else (336, 336)
+        self.sequential = False
+
+        self.use_sam = False
+        self.sam = sam_model_registry["vit_h"](checkpoint="sam_vit_h_4b8939.pth").to(self.device)
+
+        amg_kwargs = {
+            "points_per_side": 32,
+            "points_per_batch": None,
+            #"pred_iou_thresh": 0.0,
+            #"stability_score_thresh": 0.0,
+            "stability_score_offset": None,
+            "box_nms_thresh": None,
+            "crop_n_layers": None,
+            "crop_nms_thresh": None,
+            "crop_overlap_ratio": None,
+            "crop_n_points_downscale_factor": None,
+            "min_mask_region_area": None,
+        }
+        amg_kwargs = {k: v for k, v in amg_kwargs.items() if v is not None}
+        self.mask = SamAutomaticMaskGenerator(self.sam, output_mode="binary_mask", **amg_kwargs)
+        self.overlap_threshold = 0.8
+        self.panoptic_on = False
+

+    @classmethod
+    def from_config(cls, cfg):
+        backbone = build_backbone(cfg)
+        sem_seg_head = build_sem_seg_head(cfg, backbone.output_shape())
+
+        return {
+            "backbone": backbone,
+            "sem_seg_head": sem_seg_head,
+            "size_divisibility": cfg.MODEL.MASK_FORMER.SIZE_DIVISIBILITY,
+            "pixel_mean": cfg.MODEL.PIXEL_MEAN,
+            "pixel_std": cfg.MODEL.PIXEL_STD,
+            "clip_pixel_mean": cfg.MODEL.CLIP_PIXEL_MEAN,
+            "clip_pixel_std": cfg.MODEL.CLIP_PIXEL_STD,
+            "train_class_json": cfg.MODEL.SEM_SEG_HEAD.TRAIN_CLASS_JSON,
+            "test_class_json": cfg.MODEL.SEM_SEG_HEAD.TEST_CLASS_JSON,
+            "sliding_window": cfg.TEST.SLIDING_WINDOW,
+            "clip_finetune": cfg.MODEL.SEM_SEG_HEAD.CLIP_FINETUNE,
+            "backbone_multiplier": cfg.SOLVER.BACKBONE_MULTIPLIER,
+            "clip_pretrained": cfg.MODEL.SEM_SEG_HEAD.CLIP_PRETRAINED,
+        }
+
+    @property
+    def device(self):
+        return self.pixel_mean.device
+
+    def forward(self, batched_inputs):
+        """
+        Args:
+            batched_inputs: a list, batched outputs of :class:`DatasetMapper`.
+                Each item in the list contains the inputs for one image.
+                For now, each item in the list is a dict that contains:
+                   * "image": Tensor, image in (C, H, W) format.
+                   * "instances": per-region ground truth
+                   * Other information that's included in the original dicts, such as:
+                     "height", "width" (int): the output resolution of the model (may be different
+                     from input resolution), used in inference.
+        Returns:
+            list[dict]:
+                each dict has the results for one image. The dict contains the following keys:
+
+                * "sem_seg":
+                    A Tensor that represents the
+                    per-pixel segmentation prediced by the head.
+                    The prediction has shape KxHxW that represents the logits of
+                    each class for each pixel.
+        """
+        images = [x["image"].to(self.device) for x in batched_inputs]
+        sam_images = images
+        if not self.training and self.sliding_window:
+            if not self.sequential:
+                with _ignore_torch_cuda_oom():
+                    return self.inference_sliding_window(batched_inputs)
+                self.sequential = True
+            return self.inference_sliding_window(batched_inputs)
+
+        clip_images = [(x - self.clip_pixel_mean) / self.clip_pixel_std for x in images]
+        clip_images = ImageList.from_tensors(clip_images, self.size_divisibility)
+
+        images = [(x - self.pixel_mean) / self.pixel_std for x in images]
+        images = ImageList.from_tensors(images, self.size_divisibility)
+
+        clip_images = F.interpolate(clip_images.tensor, size=self.clip_resolution, mode='bilinear', align_corners=False, )
+        clip_features = self.sem_seg_head.predictor.clip_model.encode_image(clip_images, dense=True)
+
+        images_resized = F.interpolate(images.tensor, size=(384, 384), mode='bilinear', align_corners=False,)
+        features = self.backbone(images_resized)
+
+        outputs = self.sem_seg_head(clip_features, features)
+
+        if self.training:
+            targets = torch.stack([x["sem_seg"].to(self.device) for x in batched_inputs], dim=0)
+            outputs = F.interpolate(outputs, size=(targets.shape[-2], targets.shape[-1]), mode="bilinear", align_corners=False)
+
+            num_classes = outputs.shape[1]
+            mask = targets != self.sem_seg_head.ignore_value
+
+            outputs = outputs.permute(0,2,3,1)
+            _targets = torch.zeros(outputs.shape, device=self.device)
+            _onehot = F.one_hot(targets[mask], num_classes=num_classes).float()
+            _targets[mask] = _onehot
+
+            loss = F.binary_cross_entropy_with_logits(outputs, _targets)
+            losses = {"loss_sem_seg" : loss}
+            return losses
+        else:
+            #outputs = outputs.sigmoid()
+            image_size = images.image_sizes[0]
+            if self.use_sam:
+                masks = self.mask.generate(np.uint8(sam_images[0].permute(1, 2, 0).cpu().numpy()))
+                outputs, sam_cls = self.discrete_semantic_inference(outputs, masks, image_size)
+                #outputs, sam_cls = self.continuous_semantic_inference(outputs, masks, image_size)
+                #outputs, sam_cls = self.continuous_semantic_inference2(outputs, masks, image_size, img=img, text=text)
+            height = batched_inputs[0].get("height", image_size[0])
+            width = batched_inputs[0].get("width", image_size[1])
+
+            output = sem_seg_postprocess(outputs[0], image_size, height, width)
+            processed_results = [{'sem_seg': output}]
+            return processed_results
+
+

+    @torch.no_grad()
+    def inference_sliding_window(self, batched_inputs, kernel=384, overlap=0.333, out_res=[640, 640]):
+
+        images = [x["image"].to(self.device, dtype=torch.float32) for x in batched_inputs]
+        stride = int(kernel * (1 - overlap))
+        unfold = nn.Unfold(kernel_size=kernel, stride=stride)
+        fold = nn.Fold(out_res, kernel_size=kernel, stride=stride)
+
+        image = F.interpolate(images[0].unsqueeze(0), size=out_res, mode='bilinear', align_corners=False).squeeze()
+        sam_images = [image]
+        image = rearrange(unfold(image), "(C H W) L-> L C H W", C=3, H=kernel)
+        global_image = F.interpolate(images[0].unsqueeze(0), size=(kernel, kernel), mode='bilinear', align_corners=False)
+        image = torch.cat((image, global_image), dim=0)
+
+        images = (image - self.pixel_mean) / self.pixel_std
+        clip_images = (image - self.clip_pixel_mean) / self.clip_pixel_std
+        clip_images = F.interpolate(clip_images, size=self.clip_resolution, mode='bilinear', align_corners=False, )
+        clip_features = self.sem_seg_head.predictor.clip_model.encode_image(clip_images, dense=True)
+
+        if self.sequential:
+            outputs = []
+            for clip_feat, image in zip(clip_features, images):
+                feature = self.backbone(image.unsqueeze(0))
+                output = self.sem_seg_head(clip_feat.unsqueeze(0), feature)
+                outputs.append(output[0])
+            outputs = torch.stack(outputs, dim=0)
+        else:
+            features = self.backbone(images)
+            outputs = self.sem_seg_head(clip_features, features)
+
+        outputs = F.interpolate(outputs, size=kernel, mode="bilinear", align_corners=False)
+        outputs = outputs.sigmoid()
+
+        global_output = outputs[-1:]
+        global_output = F.interpolate(global_output, size=out_res, mode='bilinear', align_corners=False,)
+        outputs = outputs[:-1]
+        outputs = fold(outputs.flatten(1).T) / fold(unfold(torch.ones([1] + out_res, device=self.device)))
+        outputs = (outputs + global_output) / 2.
+
+        height = batched_inputs[0].get("height", out_res[0])
+        width = batched_inputs[0].get("width", out_res[1])
+        catseg_outputs = sem_seg_postprocess(outputs[0], out_res, height, width)
+        #catseg_outputs = catseg_outputs.argmax(dim=1)[0].cpu()
+
+        masks = self.mask.generate(np.uint8(sam_images[0].permute(1, 2, 0).cpu().numpy()))
+        if self.use_sam:
+            outputs, sam_cls = self.discrete_semantic_inference(outputs, masks, out_res)
+            #outputs, sam_cls = self.continuous_semantic_inference(outputs, masks, out_res)
+
+        output = sem_seg_postprocess(outputs[0], out_res, height, width)
+
+        ret = [{'sem_seg': output}]
+        if self.panoptic_on:
+            panoptic_r = self.panoptic_inference(catseg_outputs, masks, sam_cls, size=output.shape[-2:])
+            ret[0]['panoptic_seg'] = panoptic_r
+
+        return ret
+
+    def discrete_semantic_inference(self, outputs, masks, image_size):
+        catseg_outputs = F.interpolate(outputs, size=image_size, mode="bilinear", align_corners=True) #.argmax(dim=1)[0].cpu()
+        sam_outputs = torch.zeros_like(catseg_outputs).cpu()
+        catseg_outputs = catseg_outputs.argmax(dim=1)[0].cpu()
+        sam_classes = torch.zeros(len(masks))

|

| 269 |

+

for i in range(len(masks)):

|

| 270 |

+

m = masks[i]['segmentation']

|

| 271 |

+

s = masks[i]['stability_score']

|

| 272 |

+

idx = catseg_outputs[m].bincount().argmax()

|

| 273 |

+

sam_outputs[0, idx][m] = s

|

| 274 |

+

sam_classes[i] = idx

|

| 275 |

+

|

| 276 |

+

return sam_outputs, sam_classes

|

| 277 |

+

|

| 278 |

+

def continuous_semantic_inference(self, outputs, masks, image_size, scale=100/7.):

|

| 279 |

+

#import pdb; pdb.set_trace()

|

| 280 |

+

catseg_outputs = F.interpolate(outputs, size=image_size, mode="bilinear", align_corners=True)[0].cpu()

|

| 281 |

+

sam_outputs = torch.zeros_like(catseg_outputs)

|

| 282 |

+

#catseg_outputs = catseg_outputs.argmax(dim=1)[0].cpu()

|

| 283 |

+

sam_classes = torch.zeros(len(masks))

|

| 284 |

+

#import pdb; pdb.set_trace()

|

| 285 |

+

mask_pred = torch.tensor(np.asarray([x['segmentation'] for x in masks]), dtype=torch.float32) # N H W

|

| 286 |

+

mask_score = torch.tensor(np.asarray([x['predicted_iou'] for x in masks]), dtype=torch.float32) # N

|

| 287 |

+

|

| 288 |

+

mask_cls = torch.einsum("nhw, chw -> nc", mask_pred, catseg_outputs)

|

| 289 |

+

mask_norm = mask_pred.sum(-1).sum(-1)

|

| 290 |

+

mask_cls = mask_cls / mask_norm[:, None]

|

| 291 |

+

mask_cls = mask_cls / mask_cls.norm(p=1, dim=1)[:, None]

|

| 292 |

+

|

| 293 |

+

mask_logits = mask_pred * mask_score[:, None, None]

|

| 294 |

+

output = torch.einsum("nhw, nc -> chw", mask_logits, mask_cls)

|

| 295 |

+

|

| 296 |

+

return output.unsqueeze(0), mask_cls

|

| 297 |

+

|

| 298 |

+

def continuous_semantic_inference2(self, outputs, masks, image_size, scale=100/7., img=None, text=None):

|

| 299 |

+

assert img is not None and text is not None

|

| 300 |

+

#import pdb; pdb.set_trace()

|

| 301 |

+

#catseg_outputs = F.interpolate(outputs, size=image_size, mode="bilinear", align_corners=True)[0].cpu()

|

| 302 |

+

img = F.interpolate(img, size=image_size, mode="bilinear", align_corners=True)[0].cpu()

|

| 303 |

+

img = img.permute(1, 2, 0)

|

| 304 |

+

|

| 305 |

+

#sam_outputs = torch.zeros_like(catseg_outputs)

|

| 306 |

+

#catseg_outputs = catseg_outputs.argmax(dim=1)[0].cpu()

|

| 307 |

+

sam_classes = torch.zeros(len(masks))

|

| 308 |

+

#import pdb; pdb.set_trace()

|

| 309 |

+

mask_pred = torch.tensor(np.asarray([x['segmentation'] for x in masks]), dtype=torch.float32) # N H W

|

| 310 |

+

mask_score = torch.tensor(np.asarray([x['predicted_iou'] for x in masks]), dtype=torch.float32) # N

|

| 311 |

+

|

| 312 |

+

mask_pool = torch.einsum("nhw, hwd -> nd ", mask_pred, img)

|

| 313 |

+

mask_pool = mask_pool / mask_pool.norm(dim=1, keepdim=True)

|

| 314 |

+

mask_cls = torch.einsum("nd, cd -> nc", 100 * mask_pool, text.cpu())

|

| 315 |

+

mask_cls = mask_cls.softmax(dim=1)

|

| 316 |

+

|

| 317 |

+

#mask_cls = torch.einsum("nhw, chw -> nc", mask_pred, catseg_outputs)

|

| 318 |

+

mask_norm = mask_pred.sum(-1).sum(-1)

|

| 319 |

+

mask_cls = mask_cls / mask_norm[:, None]

|

| 320 |

+

mask_cls = mask_cls / mask_cls.norm(p=1, dim=1)[:, None]

|

| 321 |

+

|

| 322 |

+

mask_logits = mask_pred * mask_score[:, None, None]

|

| 323 |

+

output = torch.einsum("nhw, nc -> chw", mask_logits, mask_cls)

|

| 324 |

+

|

| 325 |

+

return output.unsqueeze(0), sam_classes

|

| 326 |

+

|

| 327 |

+

def panoptic_inference(self, outputs, masks, sam_classes, size=None):

|

| 328 |

+

#import pdb; pdb.set_trace()

|

| 329 |

+

scores = np.asarray([x['predicted_iou'] for x in masks])

|

| 330 |

+

mask_pred = np.asarray([x['segmentation'] for x in masks])

|

| 331 |

+

|

| 332 |

+

#keep = labels.ne(self.sem_seg_head.num_classes) & (scores > self.object_mask_threshold)

|

| 333 |

+

cur_scores = torch.tensor(scores)

|

| 334 |

+

cur_masks = torch.tensor(mask_pred)

|

| 335 |

+

cur_masks = F.interpolate(cur_masks.unsqueeze(0).float(), size=outputs.shape[-2:], mode="nearest")[0]

|

| 336 |

+

cur_classes = sam_classes.argmax(dim=-1)

|

| 337 |

+

#cur_mask_cls = mask_cls#[keep]

|

| 338 |

+

#cur_mask_cls = cur_mask_cls[:, :-1]

|

| 339 |

+

|

| 340 |

+

#import pdb; pdb.set_trace()

|

| 341 |

+

cur_prob_masks = cur_scores.view(-1, 1, 1) * cur_masks

|

| 342 |

+

|

| 343 |

+

h, w = cur_masks.shape[-2:]

|

| 344 |

+

panoptic_seg = torch.zeros((h, w), dtype=torch.int32, device=cur_masks.device)

|

| 345 |

+

segments_info = []

|

| 346 |

+

|

| 347 |

+

current_segment_id = 0

|

| 348 |

+

if cur_masks.shape[0] == 0:

|

| 349 |

+

# We didn't detect any mask :(

|

| 350 |

+

return panoptic_seg, segments_info

|

| 351 |

+

else:

|

| 352 |

+

# take argmax

|

| 353 |

+

cur_mask_ids = cur_prob_masks.argmax(0)

|

| 354 |

+

stuff_memory_list = {}

|

| 355 |

+

for k in range(cur_classes.shape[0]):

|

| 356 |

+

pred_class = cur_classes[k].item()

|

| 357 |

+

#isthing = pred_class in self.metadata.thing_dataset_id_to_contiguous_id.values()

|

| 358 |

+

isthing = pred_class in [3, 6] #[i for i in range(10)]#self.metadata.thing_dataset_id_to_contiguous_id.values()

|

| 359 |

+

mask = cur_mask_ids == k

|

| 360 |

+

mask_area = mask.sum().item()

|

| 361 |

+

original_area = (cur_masks[k] >= 0.5).sum().item()

|

| 362 |

+

|

| 363 |

+

if mask_area > 0 and original_area > 0:

|

| 364 |

+

if mask_area / original_area < self.overlap_threshold:

|

| 365 |

+

continue

|

| 366 |

+

|

| 367 |

+

# merge stuff regions

|

| 368 |

+

if not isthing:

|

| 369 |

+

if int(pred_class) in stuff_memory_list.keys():

|

| 370 |

+

panoptic_seg[mask] = stuff_memory_list[int(pred_class)]

|

| 371 |

+

continue

|

| 372 |

+

else:

|

| 373 |

+

stuff_memory_list[int(pred_class)] = current_segment_id + 1

|

| 374 |

+

|

| 375 |

+

current_segment_id += 1

|

| 376 |

+

panoptic_seg[mask] = current_segment_id

|

| 377 |

+

|

| 378 |

+

segments_info.append(

|

| 379 |

+

{

|

| 380 |

+

"id": current_segment_id,

|

| 381 |

+

"isthing": bool(isthing),

|

| 382 |

+

"category_id": int(pred_class),

|

| 383 |

+

}

|

| 384 |

+

)

|

| 385 |

+

|

| 386 |

+

return panoptic_seg, segments_info

|
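Note on `inference_sliding_window`: the window geometry implied by its default arguments (`kernel=384`, `overlap=0.333`, `out_res=[640, 640]`) can be checked by hand. The sketch below is plain Python rather than the repository's code; `window_starts` and `coverage` are hypothetical helper names, and it assumes unpadded, `nn.Unfold`-style sliding along a single axis.

```python
def window_starts(length, kernel, stride):
    """Start offsets of unpadded sliding windows along one axis."""
    count = (length - kernel) // stride + 1
    return [i * stride for i in range(count)]


def coverage(length, kernel, stride):
    """How many windows cover each position along one axis."""
    counts = [0] * length
    for start in window_starts(length, kernel, stride):
        for pos in range(start, start + kernel):
            counts[pos] += 1
    return counts


# Default arguments taken from the diff above.
kernel, overlap, out_len = 384, 0.333, 640

# Same stride formula as in the diff: int(kernel * (1 - overlap)).
stride = int(kernel * (1 - overlap))
starts = window_starts(out_len, kernel, stride)
cov = coverage(out_len, kernel, stride)

print("stride:", stride)
print("window starts:", starts)
print("positions covered twice:", cov.count(2))
```

Along each axis the stride is 256, so two windows start at 0 and 256 and share a 128-pixel band. In 2-D this gives 4 crops plus the resized global view, so 5 images go through the model per call, and the per-pixel coverage counts (1, 2, or 4) are what `fold(unfold(torch.ones(...)))` divides out when the overlapping predictions are averaged.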

cat_seg/config.py

ADDED

|

@@ -0,0 +1,93 @@

|

| 1 |

+

# -*- coding: utf-8 -*-

|

| 2 |

+

# Copyright (c) Facebook, Inc. and its affiliates.

|

| 3 |

+

from detectron2.config import CfgNode as CN

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

def add_cat_seg_config(cfg):

|

| 7 |

+

"""

|

| 8 |

+

Add config for CAT-Seg (several option names are inherited from MASK_FORMER).

|

| 9 |

+

"""

|

| 10 |

+

# data config

|

| 11 |

+

# select the dataset mapper

|

| 12 |

+

cfg.INPUT.DATASET_MAPPER_NAME = "mask_former_semantic"

|

| 13 |

+

|

| 14 |

+

cfg.DATASETS.VAL_ALL = ("coco_2017_val_all_stuff_sem_seg",)

|

| 15 |

+

|

| 16 |

+

# Color augmentation

|

| 17 |

+

cfg.INPUT.COLOR_AUG_SSD = False

|

| 18 |

+

# We retry random cropping until no single category in semantic segmentation GT occupies more

|

| 19 |

+

# than `SINGLE_CATEGORY_MAX_AREA` part of the crop.

|

| 20 |

+

cfg.INPUT.CROP.SINGLE_CATEGORY_MAX_AREA = 1.0

|

| 21 |

+

# Pad image and segmentation GT in dataset mapper.

|

| 22 |

+

cfg.INPUT.SIZE_DIVISIBILITY = -1

|

| 23 |

+

|

| 24 |

+

# solver config

|

| 25 |

+

# weight decay on embedding

|

| 26 |

+

cfg.SOLVER.WEIGHT_DECAY_EMBED = 0.0

|

| 27 |

+

# optimizer

|

| 28 |

+

cfg.SOLVER.OPTIMIZER = "ADAMW"

|

| 29 |

+

cfg.SOLVER.BACKBONE_MULTIPLIER = 0.1

|

| 30 |

+

|

| 31 |

+

# mask_former model config

|

| 32 |

+

cfg.MODEL.MASK_FORMER = CN()

|

| 33 |

+

|

| 34 |

+

# Sometimes `backbone.size_divisibility` is set to 0 for some backbone (e.g. ResNet)

|

| 35 |

+

# you can use this config to override

|

| 36 |

+

cfg.MODEL.MASK_FORMER.SIZE_DIVISIBILITY = 32

|

| 37 |

+

|

| 38 |

+

# swin transformer backbone

|

| 39 |

+

cfg.MODEL.SWIN = CN()

|

| 40 |

+

cfg.MODEL.SWIN.PRETRAIN_IMG_SIZE = 224

|

| 41 |

+

cfg.MODEL.SWIN.PATCH_SIZE = 4

|

| 42 |

+

cfg.MODEL.SWIN.EMBED_DIM = 96

|

| 43 |

+

cfg.MODEL.SWIN.DEPTHS = [2, 2, 6, 2]

|

| 44 |

+

cfg.MODEL.SWIN.NUM_HEADS = [3, 6, 12, 24]

|

| 45 |

+

cfg.MODEL.SWIN.WINDOW_SIZE = 7

|

| 46 |

+

cfg.MODEL.SWIN.MLP_RATIO = 4.0

|

| 47 |

+

cfg.MODEL.SWIN.QKV_BIAS = True

|

| 48 |

+

cfg.MODEL.SWIN.QK_SCALE = None

|

| 49 |

+

cfg.MODEL.SWIN.DROP_RATE = 0.0

|

| 50 |

+

cfg.MODEL.SWIN.ATTN_DROP_RATE = 0.0

|

| 51 |

+

cfg.MODEL.SWIN.DROP_PATH_RATE = 0.3

|

| 52 |

+

cfg.MODEL.SWIN.APE = False

|

| 53 |

+

cfg.MODEL.SWIN.PATCH_NORM = True

|

| 54 |

+

cfg.MODEL.SWIN.OUT_FEATURES = ["res2", "res3", "res4", "res5"]

|

| 55 |

+

|

| 56 |

+

# zero shot config

|

| 57 |

+

cfg.MODEL.SEM_SEG_HEAD.TRAIN_CLASS_JSON = "datasets/ADE20K_2021_17_01/ADE20K_847.json"

|

| 58 |

+

cfg.MODEL.SEM_SEG_HEAD.TEST_CLASS_JSON = "datasets/ADE20K_2021_17_01/ADE20K_847.json"

|

| 59 |

+

cfg.MODEL.SEM_SEG_HEAD.TRAIN_CLASS_INDEXES = "datasets/coco/coco_stuff/split/seen_indexes.json"

|

| 60 |

+

cfg.MODEL.SEM_SEG_HEAD.TEST_CLASS_INDEXES = "datasets/coco/coco_stuff/split/unseen_indexes.json"

|

| 61 |

+

|

| 62 |

+

cfg.MODEL.SEM_SEG_HEAD.CLIP_PRETRAINED = "ViT-B/16"

|

| 63 |

+

|

| 64 |

+

cfg.MODEL.PROMPT_ENSEMBLE = False

|

| 65 |

+

cfg.MODEL.PROMPT_ENSEMBLE_TYPE = "single"

|

| 66 |

+

|

| 67 |

+

cfg.MODEL.CLIP_PIXEL_MEAN = [122.7709383, 116.7460125, 104.09373615]

|

| 68 |

+

cfg.MODEL.CLIP_PIXEL_STD = [68.5005327, 66.6321579, 70.3231630]

|

| 69 |

+

# three styles for clip classification, crop, mask, cropmask

|

| 70 |

+

|

| 71 |

+

cfg.MODEL.SEM_SEG_HEAD.TEXT_AFFINITY_DIM = 512

|

| 72 |

+

cfg.MODEL.SEM_SEG_HEAD.TEXT_AFFINITY_PROJ_DIM = 128

|

| 73 |

+

cfg.MODEL.SEM_SEG_HEAD.APPEARANCE_AFFINITY_DIM = 512

|

| 74 |

+

cfg.MODEL.SEM_SEG_HEAD.APPEARANCE_AFFINITY_PROJ_DIM = 128

|

| 75 |

+

|

| 76 |

+

cfg.MODEL.SEM_SEG_HEAD.DECODER_DIMS = [64, 32]

|

| 77 |

+

cfg.MODEL.SEM_SEG_HEAD.DECODER_AFFINITY_DIMS = [256, 128]

|

| 78 |

+

cfg.MODEL.SEM_SEG_HEAD.DECODER_AFFINITY_PROJ_DIMS = [32, 16]

|

| 79 |

+

|

| 80 |

+

cfg.MODEL.SEM_SEG_HEAD.NUM_LAYERS = 4

|

| 81 |

+

cfg.MODEL.SEM_SEG_HEAD.NUM_HEADS = 4

|

| 82 |

+

cfg.MODEL.SEM_SEG_HEAD.HIDDEN_DIMS = 128

|

| 83 |

+

cfg.MODEL.SEM_SEG_HEAD.POOLING_SIZES = [6, 6]

|

| 84 |

+

cfg.MODEL.SEM_SEG_HEAD.FEATURE_RESOLUTION = [24, 24]

|

| 85 |

+

cfg.MODEL.SEM_SEG_HEAD.WINDOW_SIZES = 12

|

| 86 |

+

cfg.MODEL.SEM_SEG_HEAD.ATTENTION_TYPE = "linear"

|

| 87 |

+

|

| 88 |

+

cfg.MODEL.SEM_SEG_HEAD.PROMPT_DEPTH = 0

|

| 89 |

+

cfg.MODEL.SEM_SEG_HEAD.PROMPT_LENGTH = 0

|

| 90 |

+

cfg.SOLVER.CLIP_MULTIPLIER = 0.01

|

| 91 |

+

|

| 92 |

+

cfg.MODEL.SEM_SEG_HEAD.CLIP_FINETUNE = "attention"

|

| 93 |

+

cfg.TEST.SLIDING_WINDOW = False

|
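Note on `cfg.MODEL.CLIP_PIXEL_MEAN` and `cfg.MODEL.CLIP_PIXEL_STD`: these values appear to be the per-channel RGB statistics used by OpenAI's CLIP preprocessing, rescaled from the 0-1 range to the 0-255 range. A minimal sketch of that conversion follows; the helper names `to_255_scale` and `normalize_pixel` are hypothetical and not part of the repository.

```python
# RGB statistics from CLIP's standard preprocessing, on a 0-1 scale.
CLIP_MEAN_01 = (0.48145466, 0.4578275, 0.40821073)
CLIP_STD_01 = (0.26862954, 0.26130258, 0.27577711)


def to_255_scale(values):
    """Rescale 0-1 channel statistics to the 0-255 pixel range."""
    return [v * 255.0 for v in values]


def normalize_pixel(rgb, mean, std):
    """Apply (pixel - mean) / std per channel, as the model does per image."""
    return [(c - m) / s for c, m, s in zip(rgb, mean, std)]


clip_pixel_mean = to_255_scale(CLIP_MEAN_01)
clip_pixel_std = to_255_scale(CLIP_STD_01)

print("mean (0-255):", clip_pixel_mean)
print("std  (0-255):", clip_pixel_std)
```

Because the statistics are stored on the 0-255 scale, the `(image - self.clip_pixel_mean) / self.clip_pixel_std` step in `cat_seg_model.py` expects images with raw 0-255 pixel values rather than values already scaled to 0-1.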

cat_seg/data/__init__.py

ADDED

|

@@ -0,0 +1,2 @@

|

| 1 |

+

# Copyright (c) Facebook, Inc. and its affiliates.

|

| 2 |

+

from . import datasets

|

cat_seg/data/__pycache__/__init__.cpython-38.pyc

ADDED

|

Binary file (184 Bytes).

|

|

|

cat_seg/data/dataset_mappers/__init__.py

ADDED

|

@@ -0,0 +1 @@

|

| 1 |

+

# Copyright (c) Facebook, Inc. and its affiliates.

|

cat_seg/data/dataset_mappers/__pycache__/__init__.cpython-38.pyc

ADDED

|

Binary file (167 Bytes).

|

|

|

cat_seg/data/dataset_mappers/__pycache__/detr_panoptic_dataset_mapper.cpython-38.pyc

ADDED

|

Binary file (4.88 kB).

|

|

|

cat_seg/data/dataset_mappers/__pycache__/mask_former_panoptic_dataset_mapper.cpython-38.pyc

ADDED

|

Binary file (4.41 kB).

|

|

|

cat_seg/data/dataset_mappers/__pycache__/mask_former_semantic_dataset_mapper.cpython-38.pyc

ADDED

|

Binary file (5.05 kB).

|

|

|

cat_seg/data/dataset_mappers/detr_panoptic_dataset_mapper.py

ADDED

|

@@ -0,0 +1,180 @@

|
