Usage guide to be completed

- .gitignore +2 -1
- README.md +1 -1
- app.py +132 -51
- contents.py +60 -26
- img/cci_white_outline.png +0 -0
- img/cti_white_outline.png +0 -0
- img/escience_logo_white_contour.png +0 -0
- img/indeep_logo_white_contour.png +0 -0
- img/inseq_logo_white_contour.png +0 -0
- img/pecore_logo_white_contour.png +0 -0
- img/pecore_ui_output_example.png +0 -0
- presets.py +24 -12
- style.py +24 -2

.gitignore

CHANGED

```diff
@@ -1,3 +1,4 @@
 *.pyc
 *.html
-*.json
+*.json
+.DS_Store
```

README.md

CHANGED

```diff
@@ -4,7 +4,7 @@ emoji: 🐑 🐑
 colorFrom: blue
 colorTo: green
 sdk: gradio
-sdk_version: 4.
+sdk_version: 4.21.0
 app_file: app.py
 pinned: false
 license: apache-2.0
```

app.py

CHANGED

|

@@ -7,10 +7,15 @@ from contents import (

|

|

| 7 |

citation,

|

| 8 |

description,

|

| 9 |

examples,

|

| 10 |

-

|

|

|

|

|

|

|

| 11 |

how_to_use,

|

|

|

|

| 12 |

subtitle,

|

| 13 |

title,

|

|

|

|

|

|

|

| 14 |

)

|

| 15 |

from gradio_highlightedtextbox import HighlightedTextbox

|

| 16 |

from presets import (

|

|

@@ -21,6 +26,7 @@ from presets import (

|

|

| 21 |

set_towerinstruct_preset,

|

| 22 |

set_zephyr_preset,

|

| 23 |

set_gemma_preset,

|

|

|

|

| 24 |

)

|

| 25 |

from style import custom_css

|

| 26 |

from utils import get_formatted_attribute_context_results

|

|

@@ -50,8 +56,9 @@ def pecore(

|

|

| 50 |

attribution_std_threshold: float,

|

| 51 |

attribution_topk: int,

|

| 52 |

input_template: str,

|

| 53 |

-

contextless_input_current_text: str,

|

| 54 |

output_template: str,

|

|

|

|

|

|

|

| 55 |

special_tokens_to_keep: str | list[str] | None,

|

| 56 |

decoder_input_output_separator: str,

|

| 57 |

model_kwargs: str,

|

|

@@ -62,7 +69,7 @@ def pecore(

|

|

| 62 |

global loaded_model

|

| 63 |

if "{context}" in output_template and not output_context_text:

|

| 64 |

raise gr.Error(

|

| 65 |

-

"Parameter '

|

| 66 |

)

|

| 67 |

if loaded_model is None or model_name_or_path != loaded_model.model_name:

|

| 68 |

gr.Info("Loading model...")

|

|

@@ -109,16 +116,29 @@ def pecore(

|

|

| 109 |

input_current_text=input_current_text,

|

| 110 |

input_template=input_template,

|

| 111 |

output_template=output_template,

|

| 112 |

-

contextless_input_current_text=

|

|

|

|

| 113 |

handle_output_context_strategy="pre",

|

| 114 |

**kwargs,

|

| 115 |

)

|

| 116 |

out = attribute_context_with_model(pecore_args, loaded_model)

|

| 117 |

tuples = get_formatted_attribute_context_results(loaded_model, out.info, out)

|

| 118 |

if not tuples:

|

| 119 |

-

msg = f"Output: {out.output_current}\nWarning: No pairs were found by PECoRe

|

| 120 |

tuples = [(msg, None)]

|

| 121 |

-

return

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 122 |

|

| 123 |

|

| 124 |

@spaces.GPU()

|

|

@@ -140,19 +160,25 @@ def preload_model(

|

|

| 140 |

|

| 141 |

|

| 142 |

with gr.Blocks(css=custom_css) as demo:

|

| 143 |

-

gr.

|

| 144 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 145 |

gr.Markdown(description)

|

| 146 |

-

with gr.Tab("🐑

|

| 147 |

with gr.Row():

|

| 148 |

with gr.Column():

|

| 149 |

input_context_text = gr.Textbox(

|

| 150 |

-

label="Input context", lines=

|

| 151 |

)

|

| 152 |

input_current_text = gr.Textbox(

|

| 153 |

label="Input query", placeholder="Your input query..."

|

| 154 |

)

|

| 155 |

-

attribute_input_button = gr.Button("

|

| 156 |

with gr.Column():

|

| 157 |

pecore_output_highlights = HighlightedTextbox(

|

| 158 |

value=[

|

|

@@ -163,8 +189,8 @@ with gr.Blocks(css=custom_css) as demo:

|

|

| 163 |

(" tokens.", None),

|

| 164 |

],

|

| 165 |

color_map={

|

| 166 |

-

"Context sensitive": "

|

| 167 |

-

"Influential context": "

|

| 168 |

},

|

| 169 |

show_legend=True,

|

| 170 |

label="PECoRe Output",

|

|

@@ -172,30 +198,31 @@ with gr.Blocks(css=custom_css) as demo:

|

|

| 172 |

interactive=False,

|

| 173 |

)

|

| 174 |

with gr.Row(equal_height=True):

|

| 175 |

-

download_output_file_button = gr.

|

| 176 |

-

"

|

| 177 |

visible=False,

|

| 178 |

-

link=os.path.join(

|

| 179 |

-

os.path.dirname(__file__), "/file=outputs/output.json"

|

| 180 |

-

),

|

| 181 |

)

|

| 182 |

-

download_output_html_button = gr.

|

| 183 |

"🔍 Download HTML",

|

| 184 |

visible=False,

|

| 185 |

-

|

| 186 |

-

os.path.dirname(__file__), "

|

| 187 |

),

|

| 188 |

)

|

| 189 |

-

|

|

|

|

|

|

|

| 190 |

attribute_input_examples = gr.Examples(

|

| 191 |

examples,

|

| 192 |

inputs=[input_current_text, input_context_text],

|

| 193 |

outputs=pecore_output_highlights,

|

|

|

|

| 194 |

)

|

| 195 |

with gr.Tab("⚙️ Parameters") as params_tab:

|

| 196 |

gr.Markdown(

|

| 197 |

-

"## ✨ Presets\nSelect a preset to load default parameters into the fields below

|

| 198 |

)

|

|

|

|

| 199 |

with gr.Row(equal_height=True):

|

| 200 |

with gr.Column():

|

| 201 |

default_preset = gr.Button("Default", variant="secondary")

|

|

@@ -208,9 +235,9 @@ with gr.Blocks(css=custom_css) as demo:

|

|

| 208 |

"Preset for the <a href='https://huggingface.co/gsarti/cora_mgen' target='_blank'>CORA Multilingual QA</a> model.\nUses special templates for inputs."

|

| 209 |

)

|

| 210 |

with gr.Column():

|

| 211 |

-

zephyr_preset = gr.Button("Zephyr Template", variant="secondary")

|

| 212 |

gr.Markdown(

|

| 213 |

-

"Preset for models using the <a href='https://huggingface.co/

|

| 214 |

)

|

| 215 |

with gr.Row(equal_height=True):

|

| 216 |

with gr.Column(scale=1):

|

|

@@ -227,7 +254,7 @@ with gr.Blocks(css=custom_css) as demo:

|

|

| 227 |

)

|

| 228 |

with gr.Column(scale=1):

|

| 229 |

towerinstruct_template = gr.Button(

|

| 230 |

-

"Unbabel TowerInstruct", variant="secondary"

|

| 231 |

)

|

| 232 |

gr.Markdown(

|

| 233 |

"Preset for models using the <a href='https://huggingface.co/Unbabel/TowerInstruct-7B-v0.1' target='_blank'>Unbabel TowerInstruct</a> conversational template.\nUses <code><|im_start|></code>, <code><|im_end|></code> special tokens."

|

|

@@ -235,16 +262,23 @@ with gr.Blocks(css=custom_css) as demo:

|

|

| 235 |

with gr.Row(equal_height=True):

|

| 236 |

with gr.Column(scale=1):

|

| 237 |

gemma_template = gr.Button(

|

| 238 |

-

"Gemma Chat Template", variant="secondary"

|

| 239 |

)

|

| 240 |

gr.Markdown(

|

| 241 |

"Preset for <a href='https://huggingface.co/google/gemma-2b-it' target='_blank'>Gemma</a> instruction-tuned models."

|

| 242 |

)

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 243 |

gr.Markdown("## ⚙️ PECoRe Parameters")

|

| 244 |

with gr.Row(equal_height=True):

|

| 245 |

with gr.Column():

|

| 246 |

model_name_or_path = gr.Textbox(

|

| 247 |

-

value="

|

| 248 |

label="Model",

|

| 249 |

info="Hugging Face Hub identifier of the model to analyze with PECoRe.",

|

| 250 |

interactive=True,

|

|

@@ -277,7 +311,7 @@ with gr.Blocks(css=custom_css) as demo:

|

|

| 277 |

gr.Markdown("#### Results Selection Parameters")

|

| 278 |

with gr.Row(equal_height=True):

|

| 279 |

context_sensitivity_std_threshold = gr.Number(

|

| 280 |

-

value=

|

| 281 |

label="Context sensitivity threshold",

|

| 282 |

info="Select N to keep context sensitive tokens with scores above N * std. 0 = above mean.",

|

| 283 |

precision=1,

|

|

@@ -306,33 +340,39 @@ with gr.Blocks(css=custom_css) as demo:

|

|

| 306 |

interactive=True,

|

| 307 |

)

|

| 308 |

attribution_topk = gr.Number(

|

| 309 |

-

value=

|

| 310 |

label="Attribution top-k",

|

| 311 |

info="Select N to keep top N attributed tokens in the context. 0 = keep all.",

|

| 312 |

interactive=True,

|

| 313 |

precision=0,

|

| 314 |

minimum=0,

|

| 315 |

-

maximum=

|

| 316 |

)

|

| 317 |

|

| 318 |

gr.Markdown("#### Text Format Parameters")

|

| 319 |

with gr.Row(equal_height=True):

|

| 320 |

input_template = gr.Textbox(

|

| 321 |

-

value="{current} <P>:{context}",

|

| 322 |

-

label="

|

| 323 |

-

info="Template to format the input for the model. Use {current} and {context} placeholders.",

|

| 324 |

interactive=True,

|

| 325 |

)

|

| 326 |

output_template = gr.Textbox(

|

| 327 |

value="{current}",

|

| 328 |

-

label="

|

| 329 |

-

info="Template to format the output from the model. Use {current} and {context} placeholders.",

|

| 330 |

interactive=True,

|

| 331 |

)

|

| 332 |

-

|

| 333 |

value="<Q>:{current}",

|

| 334 |

-

label="

|

| 335 |

-

info="Template to format the input query

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 336 |

interactive=True,

|

| 337 |

)

|

| 338 |

with gr.Row(equal_height=True):

|

|

@@ -401,16 +441,34 @@ with gr.Blocks(css=custom_css) as demo:

|

|

| 401 |

)

|

| 402 |

with gr.Column():

|

| 403 |

attribution_kwargs = gr.Code(

|

| 404 |

-

value="

|

| 405 |

language="json",

|

| 406 |

label="Attribution kwargs (JSON)",

|

| 407 |

interactive=True,

|

| 408 |

lines=1,

|

| 409 |

)

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 410 |

|

| 411 |

-

gr.Markdown(how_it_works)

|

| 412 |

-

gr.Markdown(how_to_use)

|

| 413 |

-

gr.Markdown(citation)

|

| 414 |

|

| 415 |

# Main logic

|

| 416 |

|

|

@@ -422,6 +480,10 @@ with gr.Blocks(css=custom_css) as demo:

|

|

| 422 |

]

|

| 423 |

|

| 424 |

attribute_input_button.click(

|

|

|

|

|

|

|

|

|

|

|

|

|

| 425 |

pecore,

|

| 426 |

inputs=[

|

| 427 |

input_current_text,

|

|

@@ -437,8 +499,9 @@ with gr.Blocks(css=custom_css) as demo:

|

|

| 437 |

attribution_std_threshold,

|

| 438 |

attribution_topk,

|

| 439 |

input_template,

|

| 440 |

-

contextless_input_current_text,

|

| 441 |

output_template,

|

|

|

|

|

|

|

| 442 |

special_tokens_to_keep,

|

| 443 |

decoder_input_output_separator,

|

| 444 |

model_kwargs,

|

|

@@ -461,11 +524,18 @@ with gr.Blocks(css=custom_css) as demo:

|

|

| 461 |

|

| 462 |

# Preset params

|

| 463 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 464 |

outputs_to_reset = [

|

| 465 |

model_name_or_path,

|

| 466 |

input_template,

|

| 467 |

-

contextless_input_current_text,

|

| 468 |

output_template,

|

|

|

|

|

|

|

| 469 |

special_tokens_to_keep,

|

| 470 |

decoder_input_output_separator,

|

| 471 |

model_kwargs,

|

|

@@ -485,7 +555,7 @@ with gr.Blocks(css=custom_css) as demo:

|

|

| 485 |

|

| 486 |

cora_preset.click(**reset_kwargs).then(

|

| 487 |

set_cora_preset,

|

| 488 |

-

outputs=[model_name_or_path, input_template,

|

| 489 |

).success(preload_model, inputs=load_model_args, cancels=load_model_event)

|

| 490 |

|

| 491 |

zephyr_preset.click(**reset_kwargs).then(

|

|

@@ -493,8 +563,9 @@ with gr.Blocks(css=custom_css) as demo:

|

|

| 493 |

outputs=[

|

| 494 |

model_name_or_path,

|

| 495 |

input_template,

|

| 496 |

-

|

| 497 |

decoder_input_output_separator,

|

|

|

|

| 498 |

],

|

| 499 |

).success(preload_model, inputs=load_model_args, cancels=load_model_event)

|

| 500 |

|

|

@@ -508,7 +579,7 @@ with gr.Blocks(css=custom_css) as demo:

|

|

| 508 |

outputs=[

|

| 509 |

model_name_or_path,

|

| 510 |

input_template,

|

| 511 |

-

|

| 512 |

decoder_input_output_separator,

|

| 513 |

special_tokens_to_keep,

|

| 514 |

],

|

|

@@ -519,7 +590,7 @@ with gr.Blocks(css=custom_css) as demo:

|

|

| 519 |

outputs=[

|

| 520 |

model_name_or_path,

|

| 521 |

input_template,

|

| 522 |

-

|

| 523 |

decoder_input_output_separator,

|

| 524 |

special_tokens_to_keep,

|

| 525 |

],

|

|

@@ -530,10 +601,20 @@ with gr.Blocks(css=custom_css) as demo:

|

|

| 530 |

outputs=[

|

| 531 |

model_name_or_path,

|

| 532 |

input_template,

|

| 533 |

-

|

| 534 |

decoder_input_output_separator,

|

| 535 |

special_tokens_to_keep,

|

| 536 |

],

|

| 537 |

).success(preload_model, inputs=load_model_args, cancels=load_model_event)

|

| 538 |

|

| 539 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 7 |

citation,

|

| 8 |

description,

|

| 9 |

examples,

|

| 10 |

+

how_it_works_intro,

|

| 11 |

+

cti_explanation,

|

| 12 |

+

cci_explanation,

|

| 13 |

how_to_use,

|

| 14 |

+

example_explanation,

|

| 15 |

subtitle,

|

| 16 |

title,

|

| 17 |

+

powered_by,

|

| 18 |

+

support,

|

| 19 |

)

|

| 20 |

from gradio_highlightedtextbox import HighlightedTextbox

|

| 21 |

from presets import (

|

|

|

|

| 26 |

set_towerinstruct_preset,

|

| 27 |

set_zephyr_preset,

|

| 28 |

set_gemma_preset,

|

| 29 |

+

set_mistral_instruct_preset,

|

| 30 |

)

|

| 31 |

from style import custom_css

|

| 32 |

from utils import get_formatted_attribute_context_results

|

|

|

|

| 56 |

attribution_std_threshold: float,

|

| 57 |

attribution_topk: int,

|

| 58 |

input_template: str,

|

|

|

|

| 59 |

output_template: str,

|

| 60 |

+

contextless_input_template: str,

|

| 61 |

+

contextless_output_template: str,

|

| 62 |

special_tokens_to_keep: str | list[str] | None,

|

| 63 |

decoder_input_output_separator: str,

|

| 64 |

model_kwargs: str,

|

|

|

|

| 69 |

global loaded_model

|

| 70 |

if "{context}" in output_template and not output_context_text:

|

| 71 |

raise gr.Error(

|

| 72 |

+

"Parameter 'Generation context' must be set when including {context} in the output template."

|

| 73 |

)

|

| 74 |

if loaded_model is None or model_name_or_path != loaded_model.model_name:

|

| 75 |

gr.Info("Loading model...")

|

|

|

|

| 116 |

input_current_text=input_current_text,

|

| 117 |

input_template=input_template,

|

| 118 |

output_template=output_template,

|

| 119 |

+

contextless_input_current_text=contextless_input_template,

|

| 120 |

+

contextless_output_current_text=contextless_output_template,

|

| 121 |

handle_output_context_strategy="pre",

|

| 122 |

**kwargs,

|

| 123 |

)

|

| 124 |

out = attribute_context_with_model(pecore_args, loaded_model)

|

| 125 |

tuples = get_formatted_attribute_context_results(loaded_model, out.info, out)

|

| 126 |

if not tuples:

|

| 127 |

+

msg = f"Output: {out.output_current}\nWarning: No pairs were found by PECoRe.\nTry adjusting Results Selection parameters to soften selection constraints (e.g. setting Context sensitivity threshold to 0)."

|

| 128 |

tuples = [(msg, None)]

|

| 129 |

+

return [

|

| 130 |

+

tuples,

|

| 131 |

+

gr.DownloadButton(

|

| 132 |

+

label="📂 Download output",

|

| 133 |

+

value=os.path.join(os.path.dirname(__file__), "outputs/output.json"),

|

| 134 |

+

visible=True,

|

| 135 |

+

),

|

| 136 |

+

gr.DownloadButton(

|

| 137 |

+

label="🔍 Download HTML",

|

| 138 |

+

value=os.path.join(os.path.dirname(__file__), "outputs/output.html"),

|

| 139 |

+

visible=True,

|

| 140 |

+

)

|

| 141 |

+

]

|

| 142 |

|

| 143 |

|

| 144 |

@spaces.GPU()

|

|

|

|

| 160 |

|

| 161 |

|

| 162 |

with gr.Blocks(css=custom_css) as demo:

|

| 163 |

+

with gr.Row():

|

| 164 |

+

with gr.Column(scale=0.1, min_width=100):

|

| 165 |

+

gr.HTML(f'<img src="file/img/pecore_logo_white_contour.png" width=100px />')

|

| 166 |

+

with gr.Column(scale=0.8):

|

| 167 |

+

gr.Markdown(title)

|

| 168 |

+

gr.Markdown(subtitle)

|

| 169 |

+

with gr.Column(scale=0.1, min_width=100):

|

| 170 |

+

gr.HTML(f'<img src="file/img/pecore_logo_white_contour.png" width=100px />')

|

| 171 |

gr.Markdown(description)

|

| 172 |

+

with gr.Tab("🐑 Demo"):

|

| 173 |

with gr.Row():

|

| 174 |

with gr.Column():

|

| 175 |

input_context_text = gr.Textbox(

|

| 176 |

+

label="Input context", lines=3, placeholder="Your input context..."

|

| 177 |

)

|

| 178 |

input_current_text = gr.Textbox(

|

| 179 |

label="Input query", placeholder="Your input query..."

|

| 180 |

)

|

| 181 |

+

attribute_input_button = gr.Button("Run PECoRe", variant="primary")

|

| 182 |

with gr.Column():

|

| 183 |

pecore_output_highlights = HighlightedTextbox(

|

| 184 |

value=[

|

|

|

|

| 189 |

(" tokens.", None),

|

| 190 |

],

|

| 191 |

color_map={

|

| 192 |

+

"Context sensitive": "#5fb77d",

|

| 193 |

+

"Influential context": "#80ace8",

|

| 194 |

},

|

| 195 |

show_legend=True,

|

| 196 |

label="PECoRe Output",

|

|

|

|

| 198 |

interactive=False,

|

| 199 |

)

|

| 200 |

with gr.Row(equal_height=True):

|

| 201 |

+

download_output_file_button = gr.DownloadButton(

|

| 202 |

+

"📂 Download output",

|

| 203 |

visible=False,

|

|

|

|

|

|

|

|

|

|

| 204 |

)

|

| 205 |

+

download_output_html_button = gr.DownloadButton(

|

| 206 |

"🔍 Download HTML",

|

| 207 |

visible=False,

|

| 208 |

+

value=os.path.join(

|

| 209 |

+

os.path.dirname(__file__), "outputs/output.html"

|

| 210 |

),

|

| 211 |

)

|

| 212 |

+

preset_comment = gr.Markdown(

|

| 213 |

+

"<i>The <a href='https://huggingface.co/gsarti/cora_mgen' target='_blank'>CORA Multilingual QA</a> model by <a href='https://openreview.net/forum?id=e8blYRui3j' target='_blank'>Asai et al. (2021)</a> is set as default and can be used with the examples below. Explore other presets in the ⚙️ Parameters tab.</i>"

|

| 214 |

+

)

|

| 215 |

attribute_input_examples = gr.Examples(

|

| 216 |

examples,

|

| 217 |

inputs=[input_current_text, input_context_text],

|

| 218 |

outputs=pecore_output_highlights,

|

| 219 |

+

examples_per_page=1,

|

| 220 |

)

|

| 221 |

with gr.Tab("⚙️ Parameters") as params_tab:

|

| 222 |

gr.Markdown(

|

| 223 |

+

"## ✨ Presets\nSelect a preset to load the selected model and its default parameters (e.g. prompt template, special tokens, etc.) into the fields below.<br>⚠️ **This will overwrite existing parameters. If you intend to use large models that could crash the demo, please clone this Space and allocate appropriate resources for them to run comfortably.**"

|

| 224 |

)

|

| 225 |

+

check_enable_large_models = gr.Checkbox(False, label = "I understand, enable large models presets")

|

| 226 |

with gr.Row(equal_height=True):

|

| 227 |

with gr.Column():

|

| 228 |

default_preset = gr.Button("Default", variant="secondary")

|

|

|

|

| 235 |

"Preset for the <a href='https://huggingface.co/gsarti/cora_mgen' target='_blank'>CORA Multilingual QA</a> model.\nUses special templates for inputs."

|

| 236 |

)

|

| 237 |

with gr.Column():

|

| 238 |

+

zephyr_preset = gr.Button("Zephyr Template", variant="secondary", interactive=False)

|

| 239 |

gr.Markdown(

|

| 240 |

+

"Preset for models using the <a href='https://huggingface.co/stabilityai/stablelm-2-zephyr-1_6b' target='_blank'>StableLM 2 Zephyr conversational template</a>.\nUses <code><|system|></code>, <code><|user|></code> and <code><|assistant|></code> special tokens."

|

| 241 |

)

|

| 242 |

with gr.Row(equal_height=True):

|

| 243 |

with gr.Column(scale=1):

|

|

|

|

| 254 |

)

|

| 255 |

with gr.Column(scale=1):

|

| 256 |

towerinstruct_template = gr.Button(

|

| 257 |

+

"Unbabel TowerInstruct", variant="secondary", interactive=False

|

| 258 |

)

|

| 259 |

gr.Markdown(

|

| 260 |

"Preset for models using the <a href='https://huggingface.co/Unbabel/TowerInstruct-7B-v0.1' target='_blank'>Unbabel TowerInstruct</a> conversational template.\nUses <code><|im_start|></code>, <code><|im_end|></code> special tokens."

|

|

|

|

| 262 |

with gr.Row(equal_height=True):

|

| 263 |

with gr.Column(scale=1):

|

| 264 |

gemma_template = gr.Button(

|

| 265 |

+

"Gemma Chat Template", variant="secondary", interactive=False

|

| 266 |

)

|

| 267 |

gr.Markdown(

|

| 268 |

"Preset for <a href='https://huggingface.co/google/gemma-2b-it' target='_blank'>Gemma</a> instruction-tuned models."

|

| 269 |

)

|

| 270 |

+

with gr.Column(scale=1):

|

| 271 |

+

mistral_instruct_template = gr.Button(

|

| 272 |

+

"Mistral Instruct", variant="secondary", interactive=False

|

| 273 |

+

)

|

| 274 |

+

gr.Markdown(

|

| 275 |

+

"Preset for models using the <a href='https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.2' target='_blank'>Mistral Instruct template</a>.\nUses <code>[INST]...[/INST]</code> special tokens."

|

| 276 |

+

)

|

| 277 |

gr.Markdown("## ⚙️ PECoRe Parameters")

|

| 278 |

with gr.Row(equal_height=True):

|

| 279 |

with gr.Column():

|

| 280 |

model_name_or_path = gr.Textbox(

|

| 281 |

+

value="gsarti/cora_mgen",

|

| 282 |

label="Model",

|

| 283 |

info="Hugging Face Hub identifier of the model to analyze with PECoRe.",

|

| 284 |

interactive=True,

|

|

|

|

| 311 |

gr.Markdown("#### Results Selection Parameters")

|

| 312 |

with gr.Row(equal_height=True):

|

| 313 |

context_sensitivity_std_threshold = gr.Number(

|

| 314 |

+

value=0.0,

|

| 315 |

label="Context sensitivity threshold",

|

| 316 |

info="Select N to keep context sensitive tokens with scores above N * std. 0 = above mean.",

|

| 317 |

precision=1,

|

|

|

|

| 340 |

interactive=True,

|

| 341 |

)

|

| 342 |

attribution_topk = gr.Number(

|

| 343 |

+

value=5,

|

| 344 |

label="Attribution top-k",

|

| 345 |

info="Select N to keep top N attributed tokens in the context. 0 = keep all.",

|

| 346 |

interactive=True,

|

| 347 |

precision=0,

|

| 348 |

minimum=0,

|

| 349 |

+

maximum=100,

|

| 350 |

)

|

| 351 |

|

| 352 |

gr.Markdown("#### Text Format Parameters")

|

| 353 |

with gr.Row(equal_height=True):

|

| 354 |

input_template = gr.Textbox(

|

| 355 |

+

value="<Q>:{current} <P>:{context}",

|

| 356 |

+

label="Contextual input template",

|

| 357 |

+

info="Template to format the input for the model. Use {current} and {context} placeholders for Input Query and Input Context, respectively.",

|

| 358 |

interactive=True,

|

| 359 |

)

|

| 360 |

output_template = gr.Textbox(

|

| 361 |

value="{current}",

|

| 362 |

+

label="Contextual output template",

|

| 363 |

+

info="Template to format the output from the model. Use {current} and {context} placeholders for Generation Output and Generation Context, respectively.",

|

| 364 |

interactive=True,

|

| 365 |

)

|

| 366 |

+

contextless_input_template = gr.Textbox(

|

| 367 |

value="<Q>:{current}",

|

| 368 |

+

label="Contextless input template",

|

| 369 |

+

info="Template to format the input query in the non-contextual setting. Use {current} placeholder for Input Query.",

|

| 370 |

+

interactive=True,

|

| 371 |

+

)

|

| 372 |

+

contextless_output_template = gr.Textbox(

|

| 373 |

+

value="{current}",

|

| 374 |

+

label="Contextless output template",

|

| 375 |

+

info="Template to format the output from the model. Use {current} placeholder for Generation Output.",

|

| 376 |

interactive=True,

|

| 377 |

)

|

| 378 |

with gr.Row(equal_height=True):

|

|

|

|

| 441 |

)

|

| 442 |

with gr.Column():

|

| 443 |

attribution_kwargs = gr.Code(

|

| 444 |

+

value='{\n\t"logprob": true\n}',

|

| 445 |

language="json",

|

| 446 |

label="Attribution kwargs (JSON)",

|

| 447 |

interactive=True,

|

| 448 |

lines=1,

|

| 449 |

)

|

| 450 |

+

with gr.Tab("🔍 How Does It Work?"):

|

| 451 |

+

gr.Markdown(how_it_works_intro)

|

| 452 |

+

with gr.Row(equal_height=True):

|

| 453 |

+

with gr.Column(scale=0.60):

|

| 454 |

+

gr.Markdown(cti_explanation)

|

| 455 |

+

with gr.Column(scale=0.30):

|

| 456 |

+

gr.HTML('<img src="file/img/cti_white_outline.png" width=100% />')

|

| 457 |

+

with gr.Row(equal_height=True):

|

| 458 |

+

with gr.Column(scale=0.35):

|

| 459 |

+

gr.HTML('<img src="file/img/cci_white_outline.png" width=100% />')

|

| 460 |

+

with gr.Column(scale=0.65):

|

| 461 |

+

gr.Markdown(cci_explanation)

|

| 462 |

+

with gr.Tab("🔧 Usage Guide"):

|

| 463 |

+

gr.Markdown(how_to_use)

|

| 464 |

+

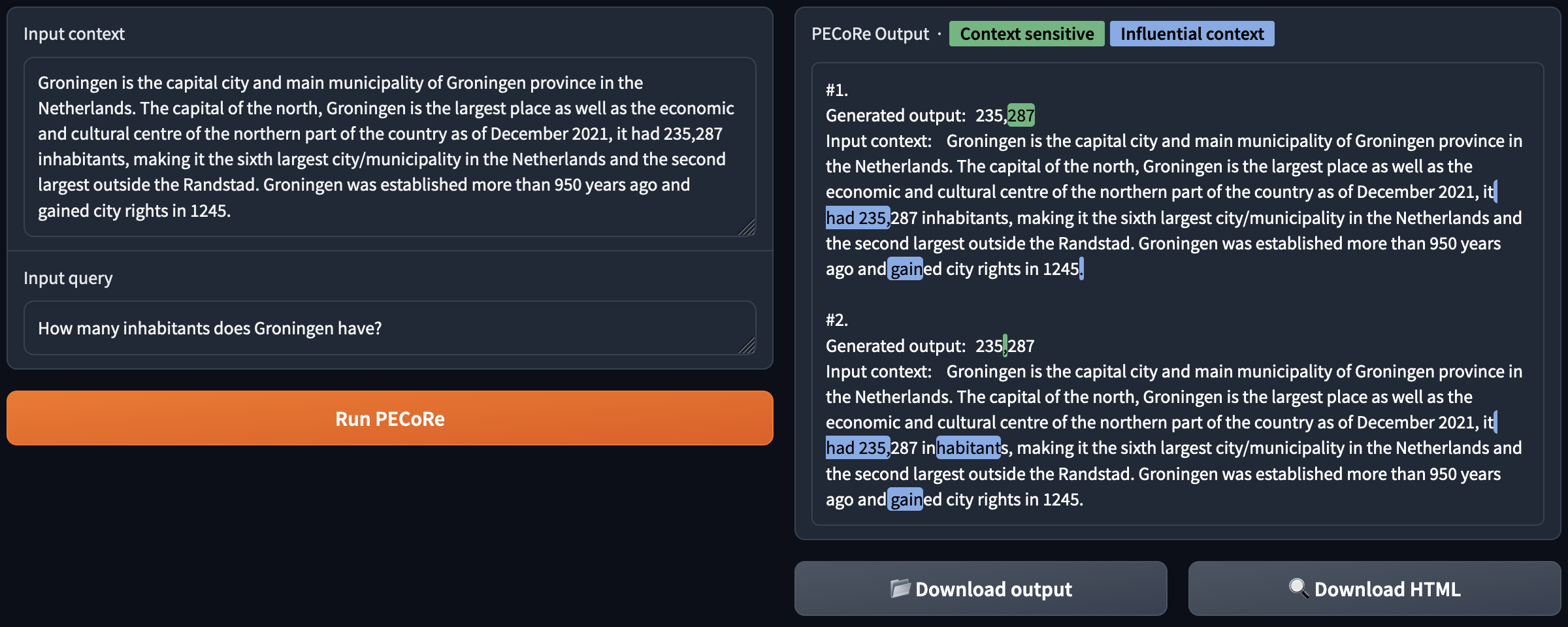

gr.HTML('<img src="file/img/pecore_ui_output_example.png" width=100% />')

|

| 465 |

+

gr.Markdown(example_explanation)

|

| 466 |

+

with gr.Tab("📚 Citing PECoRe"):

|

| 467 |

+

gr.Markdown(citation)

|

| 468 |

+

with gr.Row(elem_classes="footer-container"):

|

| 469 |

+

gr.Markdown(powered_by)

|

| 470 |

+

gr.Markdown(support)

|

| 471 |

|

|

|

|

|

|

|

|

|

|

| 472 |

|

| 473 |

# Main logic

|

| 474 |

|

|

|

|

| 480 |

]

|

| 481 |

|

| 482 |

attribute_input_button.click(

|

| 483 |

+

lambda *args: [gr.DownloadButton(visible=False), gr.DownloadButton(visible=False)],

|

| 484 |

+

inputs=[],

|

| 485 |

+

outputs=[download_output_file_button, download_output_html_button],

|

| 486 |

+

).then(

|

| 487 |

pecore,

|

| 488 |

inputs=[

|

| 489 |

input_current_text,

|

|

|

|

| 499 |

attribution_std_threshold,

|

| 500 |

attribution_topk,

|

| 501 |

input_template,

|

|

|

|

| 502 |

output_template,

|

| 503 |

+

contextless_input_template,

|

| 504 |

+

contextless_output_template,

|

| 505 |

special_tokens_to_keep,

|

| 506 |

decoder_input_output_separator,

|

| 507 |

model_kwargs,

|

|

|

|

| 524 |

|

| 525 |

# Preset params

|

| 526 |

|

| 527 |

+

check_enable_large_models.input(

|

| 528 |

+

lambda checkbox, *buttons: [gr.Button(interactive=checkbox) for _ in buttons],

|

| 529 |

+

inputs=[check_enable_large_models, zephyr_preset, towerinstruct_template, gemma_template, mistral_instruct_template],

|

| 530 |

+

outputs=[zephyr_preset, towerinstruct_template, gemma_template, mistral_instruct_template],

|

| 531 |

+

)

|

| 532 |

+

|

| 533 |

outputs_to_reset = [

|

| 534 |

model_name_or_path,

|

| 535 |

input_template,

|

|

|

|

| 536 |

output_template,

|

| 537 |

+

contextless_input_template,

|

| 538 |

+

contextless_output_template,

|

| 539 |

special_tokens_to_keep,

|

| 540 |

decoder_input_output_separator,

|

| 541 |

model_kwargs,

|

|

|

|

| 555 |

|

| 556 |

cora_preset.click(**reset_kwargs).then(

|

| 557 |

set_cora_preset,

|

| 558 |

+

outputs=[model_name_or_path, input_template, contextless_input_template],

|

| 559 |

).success(preload_model, inputs=load_model_args, cancels=load_model_event)

|

| 560 |

|

| 561 |

zephyr_preset.click(**reset_kwargs).then(

|

|

|

|

| 563 |

outputs=[

|

| 564 |

model_name_or_path,

|

| 565 |

input_template,

|

| 566 |

+

contextless_input_template,

|

| 567 |

decoder_input_output_separator,

|

| 568 |

+

special_tokens_to_keep,

|

| 569 |

],

|

| 570 |

).success(preload_model, inputs=load_model_args, cancels=load_model_event)

|

| 571 |

|

|

|

|

| 579 |

outputs=[

|

| 580 |

model_name_or_path,

|

| 581 |

input_template,

|

| 582 |

+

contextless_input_template,

|

| 583 |

decoder_input_output_separator,

|

| 584 |

special_tokens_to_keep,

|

| 585 |

],

|

|

|

|

| 590 |

outputs=[

|

| 591 |

model_name_or_path,

|

| 592 |

input_template,

|

| 593 |

+

contextless_input_template,

|

| 594 |

decoder_input_output_separator,

|

| 595 |

special_tokens_to_keep,

|

| 596 |

],

|

|

|

|

| 601 |

outputs=[

|

| 602 |

model_name_or_path,

|

| 603 |

input_template,

|

| 604 |

+

contextless_input_template,

|

| 605 |

decoder_input_output_separator,

|

| 606 |

special_tokens_to_keep,

|

| 607 |

],

|

| 608 |

).success(preload_model, inputs=load_model_args, cancels=load_model_event)

|

| 609 |

|

| 610 |

+

mistral_instruct_template.click(**reset_kwargs).then(

|

| 611 |

+

set_mistral_instruct_preset,

|

| 612 |

+

outputs=[

|

| 613 |

+

model_name_or_path,

|

| 614 |

+

input_template,

|

| 615 |

+

contextless_input_template,

|

| 616 |

+

decoder_input_output_separator,

|

| 617 |

+

],

|

| 618 |

+

).success(preload_model, inputs=load_model_args, cancels=load_model_event)

|

| 619 |

+

|

| 620 |

+

demo.launch(allowed_paths=["outputs/", "img/"])
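The removed `link=` arguments called `os.path.join(os.path.dirname(__file__), "/file=outputs/output.json")`. That join never used the directory: when a later component is absolute, `os.path.join` discards everything before it. The new `gr.DownloadButton(value=...)` calls pass the relative `"outputs/output.json"` and `"outputs/output.html"` instead, so the script directory is kept.

The minimal check below demonstrates this. It uses `posixpath`, the POSIX flavor of `os.path`, so the result does not depend on the host OS. The directory `/home/user/app` is a hypothetical stand-in for `os.path.dirname(__file__)`.

```python
import posixpath

# Hypothetical Space directory standing in for os.path.dirname(__file__).
app_dir = "/home/user/app"

# Old form: the second component is absolute, so join() drops app_dir entirely.
old_link = posixpath.join(app_dir, "/file=outputs/output.json")

# New form: a relative component is appended to app_dir.
new_value = posixpath.join(app_dir, "outputs/output.json")

print(old_link)   # /file=outputs/output.json
print(new_value)  # /home/user/app/outputs/output.json
```

The diff does not say whether the `/file=` prefix was meant as a URL route rather than a filesystem path. Either way, the directory argument had no effect in the old code.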

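The Results Selection fields now default to a context sensitivity threshold of `0.0` and an attribution top-k of `5` (maximum `100`). Their `info` strings describe two filters:

- Keep context-sensitive tokens whose scores exceed N * std ("0 = above mean").
- Keep the top N attributed context tokens ("0 = keep all").

The sketch below encodes one reading of those strings. It assumes the cutoff is mean + N * population standard deviation. `select_context_sensitive` and `select_top_k` are hypothetical names. The real filtering runs inside `attribute_context_with_model`, which this diff does not show, and may differ in detail.

```python
import statistics


def select_context_sensitive(scores: list[float], std_threshold: float = 0.0) -> list[int]:
    """Return indices of generated tokens scoring above mean + N * std.

    Hypothetical helper: N = 0 keeps every token whose score is above the mean.
    """
    mean = statistics.fmean(scores)
    std = statistics.pstdev(scores)  # population std is an assumption
    cutoff = mean + std_threshold * std
    return [i for i, score in enumerate(scores) if score > cutoff]


def select_top_k(attributions: list[float], top_k: int = 5) -> list[int]:
    """Return indices of the top_k context tokens by attribution; 0 keeps all."""
    ranked = sorted(range(len(attributions)), key=lambda i: attributions[i], reverse=True)
    return ranked if top_k == 0 else ranked[:top_k]


# Made-up per-token scores, for illustration only.
sensitivity = [0.1, 0.9, 0.2, 0.8]
print(select_context_sensitive(sensitivity))       # [1, 3]  (cutoff = mean = 0.5)
print(select_context_sensitive(sensitivity, 1.0))  # [1]     (cutoff is about 0.854)

attributions = [0.05, 0.40, 0.10, 0.30, 0.15]
print(select_top_k(attributions, 2))  # [1, 3]
print(select_top_k(attributions, 0))  # [1, 3, 4, 2, 0]
```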
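This commit changes the template handling in three ways:

- The single `contextless_input_current_text` field is replaced by separate `contextless_input_template` and `contextless_output_template` fields.
- The default contextual input template becomes `<Q>:{current} <P>:{context}`.
- `pecore()` keeps its guard rejecting `{context}` in the output template when no generation context is set.

The rough sketch below shows how the `{current}` and `{context}` placeholders could be filled, together with that guard. `format_inputs` and `check_output_template` are hypothetical helpers. Plain `str.format` substitution is an assumption: the diff only passes the raw template strings into `pecore_args`.

```python
from typing import Optional, Tuple


def format_inputs(
    input_template: str,
    contextless_input_template: str,
    current: str,
    context: str,
) -> Tuple[str, str]:
    """Fill the contextual and contextless input templates (hypothetical helper)."""
    contextual = input_template.format(current=current, context=context)
    contextless = contextless_input_template.format(current=current)
    return contextual, contextless


def check_output_template(output_template: str, output_context_text: Optional[str]) -> None:
    """Mirror the guard in pecore(): {context} requires a generation context."""
    if "{context}" in output_template and not output_context_text:
        raise ValueError(
            "Parameter 'Generation context' must be set when including "
            "{context} in the output template."
        )


# Defaults taken from the new Text Format Parameters fields.
with_context, without_context = format_inputs(
    "<Q>:{current} <P>:{context}",
    "<Q>:{current}",
    current="When was Banff National Park established?",
    context="Banff National Park is Canada's oldest national park.",
)
print(with_context)
print(without_context)

check_output_template("{current}", None)  # passes: no {context} placeholder
```

With `str.format`, literal braces in a template would need doubling (`{{`, `}}`). Whether the real implementation has the same constraint is not shown in this diff.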

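The Attribution kwargs code box now defaults to `'{\n\t"logprob": true\n}'`, and `pecore()` still receives `model_kwargs` as a `str`. Both fields therefore carry JSON text, but how the app decodes them is outside this diff.

Below is a minimal sketch using the standard `json` module. `parse_kwargs` is a hypothetical helper: it treats an empty field as "no kwargs" and rejects JSON that is not an object. Note that JSON `true` decodes to Python `True`.

```python
import json


def parse_kwargs(raw: str) -> dict:
    """Parse a JSON kwargs field such as "Attribution kwargs (JSON)".

    Hypothetical helper: blank input means no kwargs, and anything other
    than a JSON object is rejected.
    """
    if not raw.strip():
        return {}
    parsed = json.loads(raw)
    if not isinstance(parsed, dict):
        raise ValueError("kwargs must be a JSON object")
    return parsed


# The new default value of the attribution_kwargs gr.Code component.
default_attribution_kwargs = '{\n\t"logprob": true\n}'
print(parse_kwargs(default_attribution_kwargs))  # {'logprob': True}
print(parse_kwargs(""))  # {}
```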
contents.py

CHANGED

|

@@ -3,31 +3,52 @@ title = "<h1 class='demo-title'>🐑 Plausibility Evaluation of Context Reliance

|

|

| 3 |

subtitle = "<h2 class='demo-subtitle'>An Interpretability Framework to Detect and Attribute Context Reliance in Language Models</h2>"

|

| 4 |

|

| 5 |

description = """

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 9 |

"""

|

| 10 |

|

| 11 |

-

|

| 12 |

-

<

|

| 13 |

-

|

| 14 |

-

|

| 15 |

-

|

| 16 |

-

|

| 17 |

-

</

|

| 18 |

"""

|

| 19 |

|

| 20 |

-

|

| 21 |

-

<

|

| 22 |

-

<

|

| 23 |

|

| 24 |

-

</details>

|

| 25 |

"""

|

| 26 |

|

| 27 |

citation = r"""

|

| 28 |

-

<

|

| 29 |

-

<summary><h3 class="summary-label">📚 Citing PECoRe</h3></summary>

|

| 30 |

-

<p>To refer to the PECoRe framework for context usage detection, cite:</p>

|

| 31 |

<div class="code_wrap"><button class="copy_code_button" title="copy">

|

| 32 |

<span class="copy-text"><svg viewBox="0 0 32 32" height="100%" width="100%" xmlns="http://www.w3.org/2000/svg"><path d="M28 10v18H10V10h18m0-2H10a2 2 0 0 0-2 2v18a2 2 0 0 0 2 2h18a2 2 0 0 0 2-2V10a2 2 0 0 0-2-2Z" fill="currentColor"></path><path d="M4 18H2V4a2 2 0 0 1 2-2h14v2H4Z" fill="currentColor"></path></svg></span>

|

| 33 |

<span class="check"><svg stroke-linejoin="round" stroke-linecap="round" stroke-width="3" stroke="currentColor" fill="none" viewBox="0 0 24 24" height="100%" width="100%" xmlns="http://www.w3.org/2000/svg"><polyline points="20 6 9 17 4 12"></polyline></svg></span>

|

|

@@ -47,8 +68,7 @@ citation = r"""

|

|

| 47 |

}

|

| 48 |

</code></pre></div>

|

| 49 |

|

| 50 |

-

|

| 51 |

-

If you use the Inseq implementation of PECoRe (<a href="https://inseq.org/en/latest/main_classes/cli.html#attribute-context"><code>inseq attribute-context</code></a>), please also cite:

|

| 52 |

<div class="code_wrap"><button class="copy_code_button" title="copy">

|

| 53 |

<span class="copy-text"><svg viewBox="0 0 32 32" height="100%" width="100%" xmlns="http://www.w3.org/2000/svg"><path d="M28 10v18H10V10h18m0-2H10a2 2 0 0 0-2 2v18a2 2 0 0 0 2 2h18a2 2 0 0 0 2-2V10a2 2 0 0 0-2-2Z" fill="currentColor"></path><path d="M4 18H2V4a2 2 0 0 1 2-2h14v2H4Z" fill="currentColor"></path></svg></span>

|

| 54 |

<span class="check"><svg stroke-linejoin="round" stroke-linecap="round" stroke-width="3" stroke="currentColor" fill="none" viewBox="0 0 24 24" height="100%" width="100%" xmlns="http://www.w3.org/2000/svg"><polyline points="20 6 9 17 4 12"></polyline></svg></span>

|

|

@@ -56,11 +76,11 @@ If you use the Inseq implementation of PECoRe (<a href="https://inseq.org/en/lat

|

|

| 56 |

@inproceedings{sarti-etal-2023-inseq,

|

| 57 |

title = "Inseq: An Interpretability Toolkit for Sequence Generation Models",

|

| 58 |

author = "Sarti, Gabriele and

|

| 59 |

-

|

| 60 |

-

|

| 61 |

-

|

| 62 |

-

|

| 63 |

-

|

| 64 |

booktitle = "Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 3: System Demonstrations)",

|

| 65 |

month = jul,

|

| 66 |

year = "2023",

|

|

@@ -70,13 +90,27 @@ If you use the Inseq implementation of PECoRe (<a href="https://inseq.org/en/lat

|

|

| 70 |

pages = "421--435",

|

| 71 |

}

|

| 72 |

</code></pre></div>

|

| 73 |

-

|

| 74 |

-

</details>

|

| 75 |

"""

|

| 76 |

|


| 77 |

examples = [

|


| 78 |

[

|

| 79 |

"When was Banff National Park established?",

|

| 80 |

"Banff National Park is Canada's oldest national park, established in 1885 as Rocky Mountains Park. Located in Alberta's Rocky Mountains, 110-180 kilometres (68-112 mi) west of Calgary, Banff encompasses 6,641 square kilometres (2,564 sq mi) of mountainous terrain.",

|


| 81 |

]

|

| 82 |

]

|

|

|

|

| 3 |

subtitle = "<h2 class='demo-subtitle'>An Interpretability Framework to Detect and Attribute Context Reliance in Language Models</h2>"

|

| 4 |

|

| 5 |

description = """

|

| 6 |

+

PECoRe is a framework for trustworthy language generation using only model internals to detect and attribute model

|

| 7 |

+

generations to their available input context. Given a query-context input pair, PECoRe identifies which tokens in the generated

|

| 8 |

+

response were more dependent on context (<span class="category-label" style="background-color:#5fb77d; color: black; font-weight: var(--weight-semibold)">Context sensitive </span>), and match them with context tokens contributing the most to their prediction (<span class="category-label" style="background-color:#80ace8; color: black; font-weight: var(--weight-semibold)">Influential context </span>).

|

| 9 |

+

|

| 10 |

+

Check out <a href="https://openreview.net/forum?id=XTHfNGI3zT" target='_blank'>our ICLR 2024 paper</a> for more details. A new paper applying PECoRe to retrieval-augmented QA is forthcoming ✨ stay tuned!

|

| 11 |

+

"""

|

| 12 |

+

|

| 13 |

+

how_it_works_intro = """

|

| 14 |

+

The PECoRe (Plausibility Evaluation of Context Reliance) framework is designed to <b>detect and quantify context usage</b> throughout language model generations. Its final goal is to return <b>one or more pairs</b> representing tokens in the generated response that were influenced by the presence of context (<span class="category-label" style="background-color:#5fb77d; color: black; font-weight: var(--weight-semibold)">Context sensitive </span>), and their corresponding influential context tokens (<span class="category-label" style="background-color:#80ace8; color: black; font-weight: var(--weight-semibold)">Influential context </span>).

|

| 15 |

+

|

| 16 |

+

The PECoRe procedure involves two contrastive comparison steps:

|

| 17 |

+

"""

|

| 18 |

+

|

| 19 |

+

cti_explanation = """

|

| 20 |

+

<h3>1. Context-sensitive Token Identification (CTI)</h3>

|

| 21 |

+

<p>In this step, the goal is to identify which tokens in the generated text were influenced by the preceding context.</p>

|

| 22 |

+

<p>First, a context-aware generation is produced using the model's inputs augmented with the available context. Then, the same generation is force-decoded using the contextless inputs. The predictions of the two passes are compared with a <b>contrastive metric</b> (KL divergence by default, selected via the <code>Context sensitivity metric</code> parameter), which is computed for every generated token. Intuitively, higher metric scores indicate that the current generation step was more influenced by the presence of context.</p>

|

| 23 |

+

<p>The generated tokens are ranked according to their metric scores, and the most salient tokens are selected for the next step. This demo provides a <code>Context sensitivity threshold</code> parameter to select tokens whose score lies more than <code>N</code> standard deviations above the in-example metric average, and a <code>Context sensitivity top-k</code> parameter to keep only the <code>K</code> most salient tokens.</p>

|

| 24 |

+

<p>In the example shown in the figure, <code>elle</code> is selected as the only context-sensitive token by the procedure.</p>

|

| 25 |

+

"""

|
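The CTI step described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the Inseq implementation: the toy probabilities are invented, the divergence is taken as KL(P_ctx ‖ P_noctx), the threshold uses the population standard deviation, and the way threshold and top-k are combined is a guess. The helper names `kl_divergence` and `select_salient` are hypothetical.

```python
import math
from statistics import mean, pstdev


def kl_divergence(p, q):
    """KL(p || q) between two discrete next-token distributions (assumed direction)."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def select_salient(scores, std_threshold=1.0, topk=None):
    """Indices whose score exceeds mean + std_threshold * std, optionally capped at top-k."""
    cutoff = mean(scores) + std_threshold * pstdev(scores)
    selected = sorted(
        (i for i, s in enumerate(scores) if s > cutoff),
        key=lambda i: scores[i],
        reverse=True,
    )
    if topk is not None:
        selected = selected[:topk]
    return sorted(selected)


# Toy next-token distributions (3-word vocabulary) for a 4-token generation.
# ctx_probs: context-aware pass; noctx_probs: the same tokens force-decoded without context.
ctx_probs = [[0.7, 0.2, 0.1], [0.6, 0.3, 0.1], [0.9, 0.05, 0.05], [0.5, 0.4, 0.1]]
noctx_probs = [[0.7, 0.2, 0.1], [0.6, 0.3, 0.1], [0.3, 0.6, 0.1], [0.5, 0.4, 0.1]]

scores = [kl_divergence(p, q) for p, q in zip(ctx_probs, noctx_probs)]
selected = select_salient(scores, std_threshold=1.0)
print([round(s, 3) for s in scores], selected)  # prints [0.0, 0.0, 0.83, 0.0] [2]
```

Only the third step, where removing the context shifts probability mass to a different token, stands out from the in-example average and is marked as context-sensitive.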

| 26 |

+

|

| 27 |

+

cci_explanation = """

|

| 28 |

+

<h3>2. Contextual Cue Imputation (CCI)</h3>

|

| 29 |

+

<p>Once context-sensitive tokens are identified, the next step is to link every one of these tokens to specific contextual cues that justified its prediction.</p>

|

| 30 |

+

<p>This is achieved by means of <b>contrastive feature attribution</b> (<a href="https://aclanthology.org/2022.emnlp-main.14/" target="_blank">Yin and Neubig, 2022</a>). More specifically, for a given context-sensitive token, a contrastive alternative to it is generated in the absence of input context, and a function of the probabilities of the pair is used to identify salient parts of the context. By default, this demo uses <code>saliency</code> (i.e. raw gradients) as the <code>Attribution method</code> and <code>contrast_prob_diff</code> (i.e. the probability difference between the two options) as the <code>Attributed function</code>.</p>

|

| 31 |

+

<p>Gradients are collected and aggregated to obtain a single score per context token, which is then used to rank the tokens and select the most influential ones. This demo provides an <code>Attribution threshold</code> parameter to select tokens whose score lies more than <code>N</code> standard deviations above the in-example average, and an <code>Attribution top-k</code> parameter to keep only the <code>K</code> most salient tokens.</p>

|

| 32 |

+

<p>In the example shown in the figure, the attribution process links <code>elle</code> to <code>dishes</code> and <code>assiettes</code> in the source and target contexts, respectively. This makes sense intuitively, as <code>they</code> in the original input is gender-neutral in English, and the presence of its gendered coreferent disambiguates the choice for the French pronoun in the translation.</p>

|

| 33 |

"""

|
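The CCI step can be illustrated with a toy model whose gradient is easy to derive by hand. Everything here is hypothetical: the two-dimensional embeddings, the linear `toy_logits` model, the token names echoing the figure's *they/dishes → elle* example, and the use of an L2 norm to reduce per-dimension gradients to one score per context token (the aggregation Inseq actually applies is not stated here). The gradient of `p(target) - p(contrast)` is computed analytically rather than with autograd, and it stands in for the raw gradient that `saliency` would compute for `contrast_prob_diff`.

```python
import math
from statistics import mean, pstdev


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def toy_logits(embeddings, weights):
    """Hypothetical linear model: logit k = sum_i <weights[k][i], embeddings[i]>."""
    return [
        sum(sum(w * e for w, e in zip(weights[k][i], emb)) for i, emb in enumerate(embeddings))
        for k in range(len(weights))
    ]


def contrastive_saliency(embeddings, weights, target, contrast):
    """Gradient of p(target) - p(contrast) w.r.t. each context embedding, reduced to an L2 score."""
    probs = softmax(toy_logits(embeddings, weights))
    # d(p_t - p_c)/dz_k, using dp_a/dz_k = p_a * (1[a == k] - p_k)
    dlogits = [
        probs[target] * (float(k == target) - probs[k])
        - probs[contrast] * (float(k == contrast) - probs[k])
        for k in range(len(probs))
    ]
    scores = []
    for i, emb in enumerate(embeddings):
        # Chain rule through the linear model: dz_k / d emb_i[j] = weights[k][i][j]
        grad = [sum(dlogits[k] * weights[k][i][j] for k in range(len(weights))) for j in range(len(emb))]
        scores.append(math.sqrt(sum(g * g for g in grad)))
    return probs[target] - probs[contrast], scores


context_tokens = ["wash", "dishes", "."]
embeddings = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
# Rows: logits for "elle" (target), "il" (contrastive alternative), "on".
# Tokens 0 and 2 push all logits equally, so they cannot change p(elle) - p(il).
weights = [
    [[0.3, 0.3], [0.0, 2.0], [0.1, -0.2]],
    [[0.3, 0.3], [0.0, -2.0], [0.1, -0.2]],
    [[0.3, 0.3], [0.0, 0.0], [0.1, -0.2]],
]

prob_diff, scores = contrastive_saliency(embeddings, weights, target=0, contrast=1)
cutoff = mean(scores) + 1.0 * pstdev(scores)  # Attribution threshold with N = 1
influential = [tok for tok, s in zip(context_tokens, scores) if s > cutoff]
print(round(prob_diff, 3), influential)  # prints 0.851 ['dishes']
```

Because tokens that shift every logit by the same amount leave the probability difference unchanged, their gradient is (numerically) zero, and only `dishes` is kept as influential context.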

| 34 |

|

| 35 |

+

how_to_use = """

|

| 36 |

+

<h3>How to use this demo</h3>

|

| 37 |

+

|

| 38 |

+

<p>This demo provides a convenient UI for the Inseq implementation of PECoRe (the <a href="https://inseq.org/en/latest/main_classes/cli.html#attribute-context"><code>inseq attribute-context</code></a> CLI command).</p>

|

| 39 |

+

<p>In the demo tab, fill in the input and context fields with the text you want to analyze, and click the <code>Run PECoRe</code> button to produce an output where the tokens selected by PECoRe in the model generation and context are highlighted. For more details on the parameters and their meaning, check the <code>Parameters</code> tab.</p>

|

| 40 |

+

|

| 41 |

+

<h3>Interpreting PECoRe results</h3>

|

| 42 |

"""

|

| 43 |

|

| 44 |

+

example_explanation = """

|

| 45 |

+

<p>The example shows the output of the <a href='https://huggingface.co/gsarti/cora_mgen' target='_blank'>CORA Multilingual QA</a> model, which is used by default in the interface, with default settings.</p>

|

| 46 |

+

<p>

|

| 47 |

|

|

|

|

| 48 |

"""

|

| 49 |

|

| 50 |

citation = r"""

|

| 51 |

+

<p>To refer to the PECoRe framework for context usage detection, cite:</p>

|

|

|

|

|

|

|

| 52 |

<div class="code_wrap"><button class="copy_code_button" title="copy">

|

| 53 |

<span class="copy-text"><svg viewBox="0 0 32 32" height="100%" width="100%" xmlns="http://www.w3.org/2000/svg"><path d="M28 10v18H10V10h18m0-2H10a2 2 0 0 0-2 2v18a2 2 0 0 0 2 2h18a2 2 0 0 0 2-2V10a2 2 0 0 0-2-2Z" fill="currentColor"></path><path d="M4 18H2V4a2 2 0 0 1 2-2h14v2H4Z" fill="currentColor"></path></svg></span>

|

| 54 |

<span class="check"><svg stroke-linejoin="round" stroke-linecap="round" stroke-width="3" stroke="currentColor" fill="none" viewBox="0 0 24 24" height="100%" width="100%" xmlns="http://www.w3.org/2000/svg"><polyline points="20 6 9 17 4 12"></polyline></svg></span>

|

|

|

|

| 68 |

}

|

| 69 |

</code></pre></div>

|

| 70 |

|

| 71 |

+

If you use the Inseq implementation of PECoRe (<a href="https://inseq.org/en/latest/main_classes/cli.html#attribute-context"><code>inseq attribute-context</code></a>, including this demo), please also cite:

|

|

|

|

| 72 |

<div class="code_wrap"><button class="copy_code_button" title="copy">

|

| 73 |

<span class="copy-text"><svg viewBox="0 0 32 32" height="100%" width="100%" xmlns="http://www.w3.org/2000/svg"><path d="M28 10v18H10V10h18m0-2H10a2 2 0 0 0-2 2v18a2 2 0 0 0 2 2h18a2 2 0 0 0 2-2V10a2 2 0 0 0-2-2Z" fill="currentColor"></path><path d="M4 18H2V4a2 2 0 0 1 2-2h14v2H4Z" fill="currentColor"></path></svg></span>

|

| 74 |

<span class="check"><svg stroke-linejoin="round" stroke-linecap="round" stroke-width="3" stroke="currentColor" fill="none" viewBox="0 0 24 24" height="100%" width="100%" xmlns="http://www.w3.org/2000/svg"><polyline points="20 6 9 17 4 12"></polyline></svg></span>

|

|

|

|

| 76 |

@inproceedings{sarti-etal-2023-inseq,

|

| 77 |

title = "Inseq: An Interpretability Toolkit for Sequence Generation Models",

|

| 78 |

author = "Sarti, Gabriele and

|

| 79 |

+

Feldhus, Nils and

|

| 80 |

+

Sickert, Ludwig and

|

| 81 |

+

van der Wal, Oskar and

|

| 82 |

+

Nissim, Malvina and

|

| 83 |

+

Bisazza, Arianna",

|

| 84 |

booktitle = "Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 3: System Demonstrations)",

|

| 85 |

month = jul,

|

| 86 |

year = "2023",

|

|

|

|

| 90 |

pages = "421--435",

|

| 91 |

}

|

| 92 |

</code></pre></div>

|

|

|

|

|

|

|

| 93 |

"""

|

| 94 |

|

| 95 |

+

powered_by = """<div class="footer-custom-block"><b>Powered by</b> <a href='https://github.com/inseq-team/inseq' target='_blank'><img src="file/img/inseq_logo_white_contour.png" width=150px /></a></div>"""

|

| 96 |

+

|

| 97 |

+

support = """<div class="footer-custom-block"><b>With the support of</b> <a href='https://projects.illc.uva.nl/indeep/' target='_blank'><img src="file/img/indeep_logo_white_contour.png" width=120px /></a><a href='https://www.esciencecenter.nl/' target='_blank'><img src="file/img/escience_logo_white_contour.png" width=160px /></a></div>"""

|

| 98 |

+

|

| 99 |

examples = [

|

| 100 |

+

[

|

| 101 |

+

"How many inhabitants does Groningen have?",

|

| 102 |

+

"Groningen is the capital city and main municipality of Groningen province in the Netherlands. The capital of the north, Groningen is the largest place as well as the economic and cultural centre of the northern part of the country as of December 2021, it had 235,287 inhabitants, making it the sixth largest city/municipality in the Netherlands and the second largest outside the Randstad. Groningen was established more than 950 years ago and gained city rights in 1245."

|

| 103 |

+

],

|

| 104 |

[

|

| 105 |

"When was Banff National Park established?",

|

| 106 |

"Banff National Park is Canada's oldest national park, established in 1885 as Rocky Mountains Park. Located in Alberta's Rocky Mountains, 110-180 kilometres (68-112 mi) west of Calgary, Banff encompasses 6,641 square kilometres (2,564 sq mi) of mountainous terrain.",

|

| 107 |

+

],

|

| 108 |

+

[

|

| 109 |

+

"约翰·埃尔维目前在野马队中担任什么角色?",

|

| 110 |

+

"培顿·曼宁成为史上首位带领两支不同球队多次进入超级碗的四分卫。他也以 39 岁高龄参加超级碗而成为史上年龄最大的四分卫。过去的记录是由约翰·埃尔维保持的,他在 38岁时带领野马队赢得第 33 届超级碗,目前担任丹佛的橄榄球运营执行副总裁兼总经理。",

|

| 111 |

+

],

|

| 112 |

+

[

|

| 113 |

+

"Qual'è il porto più settentrionale della Slovenia?",

|

| 114 |

+

"Trieste si trova a nordest dell'Italia. La città dista solo alcuni chilometri dal confine con la Slovenia e si trova fra la penisola italiana e la penisola istriana. Il porto triestino è il più settentrionale tra quelli situati nel mare Adriatico. Questa particolare posizione ha da sempre permesso alle navi di approdare direttamente nell'Europa centrale. L'incredibile sviluppo che la città conobbe nell'800 grazie al suo porto franco, indusse a trasferirsi qui una moltitudine di lavoratori provenienti dall'Italia nonché tanti uomini d'affari da tutta Europa. Questa crescita così vorticosa, indotta dalla costituzione del porto franco, portò in poco più di un secolo la popolazione a crescere da poche migliaia fino a più di 200 000 persone, disseminando la città di chiese di tutte le maggiori religioni europee. La nuova città multietnica così formata ha nel tempo sviluppato un proprio linguaggio, infatti il Triestino moderno è un dialetto della lingua veneta. Nella provincia di Trieste vive la minoranza autoctona slovena, infatti nei paesi che circondano il capoluogo giuliano, i cartelli stradali e le insegne di molti negozi sono bilingui. La Provincia è la meno estesa d'Italia ed è quarta per densità abitativa, dopo Napoli, Milano e Monza."

|

| 115 |

]

|

| 116 |

]

|

img/cci_white_outline.png

ADDED

|

img/cti_white_outline.png

ADDED

|

img/escience_logo_white_contour.png

ADDED

|

img/indeep_logo_white_contour.png

ADDED

|

img/inseq_logo_white_contour.png

ADDED

|

img/pecore_logo_white_contour.png

ADDED

|

img/pecore_ui_output_example.png

ADDED

|

presets.py

CHANGED

|

@@ -1,3 +1,5 @@

|

|

|

|

|

|

|

|

| 1 |

def set_cora_preset():

|

| 2 |

return (

|

| 3 |

"gsarti/cora_mgen", # model_name_or_path

|

|

@@ -10,8 +12,9 @@ def set_default_preset():

|

|

| 10 |

return (

|

| 11 |

"gpt2", # model_name_or_path

|

| 12 |

"{current} {context}", # input_template

|

| 13 |

-

"{current}", # input_current_template

|

| 14 |

"{current}", # output_template

|

|

|

|

|

|

|

| 15 |

[], # special_tokens_to_keep

|

| 16 |

"", # decoder_input_output_separator

|

| 17 |

"{}", # model_kwargs

|

|

@@ -24,18 +27,19 @@ def set_default_preset():

|

|

| 24 |

def set_zephyr_preset():

|

| 25 |

return (

|

| 26 |

"stabilityai/stablelm-2-zephyr-1_6b", # model_name_or_path

|

| 27 |

-

"<|system

|

| 28 |

-

"<|user|>\n{current}

|

| 29 |

"\n", # decoder_input_output_separator

|

|

|

|

| 30 |

)

|

| 31 |

|

| 32 |

|

| 33 |

def set_chatml_preset():

|

| 34 |

return (

|

| 35 |

"Qwen/Qwen1.5-0.5B-Chat", # model_name_or_path

|

| 36 |

-

"<|im_start|>system\n{

|

| 37 |

-

"<|im_start|>user\n{current}<|im_end|>\n<|im_start|>assistant

|

| 38 |

-

"", # decoder_input_output_separator

|

| 39 |

["<|im_start|>", "<|im_end|>"], # special_tokens_to_keep

|

| 40 |

)

|

| 41 |

|

|

@@ -52,17 +56,25 @@ def set_mmt_preset():

|

|

| 52 |

def set_towerinstruct_preset():

|

| 53 |

return (

|

| 54 |

"Unbabel/TowerInstruct-7B-v0.1", # model_name_or_path

|

| 55 |

-

"<|im_start|>user\nSource: {current}\nContext: {context}\nTranslate the above text into French. Use the context to guide your answer.\nTarget:<|im_end|>\n<|im_start|>assistant

|

| 56 |

-

"<|im_start|>user\nSource: {current}\nTranslate the above text into French.\nTarget:<|im_end|>\n<|im_start|>assistant

|

| 57 |

-

"", # decoder_input_output_separator

|

| 58 |

["<|im_start|>", "<|im_end|>"], # special_tokens_to_keep

|

| 59 |

)

|

| 60 |

|

| 61 |

def set_gemma_preset():

|

| 62 |

return (

|

| 63 |

"google/gemma-2b-it", # model_name_or_path

|

| 64 |

-

"<start_of_turn>user\n{context}\n{current}<end_of_turn>\n<start_of_turn>model

|

| 65 |

-

"<start_of_turn>user\n{current}<end_of_turn>\n<start_of_turn>model

|

| 66 |

-

"", # decoder_input_output_separator

|

| 67 |

["<start_of_turn>", "<end_of_turn>"], # special_tokens_to_keep

|

| 68 |

)

|


| 1 |

+

SYSTEM_PROMPT = "You are a helpful assistant that provides concise and accurate answers."

|

| 2 |

+

|

| 3 |

def set_cora_preset():

|

| 4 |

return (

|

| 5 |

"gsarti/cora_mgen", # model_name_or_path

|

|

|

|

| 12 |

return (

|

| 13 |

"gpt2", # model_name_or_path

|

| 14 |

"{current} {context}", # input_template

|

|

|

|

| 15 |

"{current}", # output_template

|

| 16 |

+

"{current}", # contextless_input_template

|

| 17 |

+

"{current}", # contextless_output_template

|

| 18 |

[], # special_tokens_to_keep

|

| 19 |

"", # decoder_input_output_separator

|

| 20 |

"{}", # model_kwargs

|

|

|

|

| 27 |

def set_zephyr_preset():

|

| 28 |

return (

|

| 29 |

"stabilityai/stablelm-2-zephyr-1_6b", # model_name_or_path

|

| 30 |

+

"<|system|>{system_prompt}<|endoftext|>\n<|user|>\n{{context}}\n\n{{current}}<|endoftext|>\n<|assistant|>".format(system_prompt=SYSTEM_PROMPT), # input_template

|

| 31 |

+

"<|system|>{system_prompt}<|endoftext|>\n<|user|>\n{{current}}<|endoftext|>\n<|assistant|>".format(system_prompt=SYSTEM_PROMPT), # input_current_text_template

|

| 32 |

"\n", # decoder_input_output_separator

|

| 33 |

+

["<|im_start|>", "<|im_end|>", "<|endoftext|>"], # special_tokens_to_keep

|

| 34 |

)

|

| 35 |

|

| 36 |

|

| 37 |

def set_chatml_preset():

|

| 38 |

return (

|

| 39 |

"Qwen/Qwen1.5-0.5B-Chat", # model_name_or_path

|

| 40 |

+

"<|im_start|>system\n{system_prompt}<|im_end|>\n<|im_start|>user\n{{context}}\n\n{{current}}<|im_end|>\n<|im_start|>assistant".format(system_prompt=SYSTEM_PROMPT), # input_template

|

| 41 |

+

"<|im_start|>system\n{system_prompt}<|im_end|>\n<|im_start|>user\n{{current}}<|im_end|>\n<|im_start|>assistant".format(system_prompt=SYSTEM_PROMPT), # input_current_text_template

|

| 42 |

+

"\n", # decoder_input_output_separator

|

| 43 |

["<|im_start|>", "<|im_end|>"], # special_tokens_to_keep

|

| 44 |

)

|

| 45 |

|

|

|

|

| 56 |

def set_towerinstruct_preset():

|

| 57 |

return (

|

| 58 |

"Unbabel/TowerInstruct-7B-v0.1", # model_name_or_path

|

| 59 |

+

"<|im_start|>user\nSource: {current}\nContext: {context}\nTranslate the above text into French. Use the context to guide your answer.\nTarget:<|im_end|>\n<|im_start|>assistant", # input_template

|

| 60 |

+

"<|im_start|>user\nSource: {current}\nTranslate the above text into French.\nTarget:<|im_end|>\n<|im_start|>assistant", # input_current_text_template

|

| 61 |

+

"\n", # decoder_input_output_separator

|

| 62 |

["<|im_start|>", "<|im_end|>"], # special_tokens_to_keep

|

| 63 |

)

|

| 64 |

|

| 65 |

def set_gemma_preset():

|

| 66 |

return (

|

| 67 |

"google/gemma-2b-it", # model_name_or_path

|

| 68 |

+

"<start_of_turn>user\n{context}\n{current}<end_of_turn>\n<start_of_turn>model", # input_template

|

| 69 |

+

"<start_of_turn>user\n{current}<end_of_turn>\n<start_of_turn>model", # input_current_text_template

|

| 70 |

+

"\n", # decoder_input_output_separator

|

| 71 |

["<start_of_turn>", "<end_of_turn>"], # special_tokens_to_keep

|

| 72 |

)

|

| 73 |

+

|

| 74 |

+

def set_mistral_instruct_preset():

|

| 75 |

+

return (

|

| 76 |

+

"mistralai/Mistral-7B-Instruct-v0.2", # model_name_or_path

|

| 77 |

+

"[INST]{context}\n{current}[/INST]", # input_template

|

| 78 |

+

"[INST]{current}[/INST]", # input_current_text_template

|

| 79 |

+

"\n", # decoder_input_output_separator

|

| 80 |

+

)

|
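The zephyr and chatml presets build their templates in two stages: `SYSTEM_PROMPT` is substituted first with `str.format`, and the `{context}`/`{current}` slots are filled later for each example. Below is a minimal sketch of that two-stage fill, assuming the second stage is also a plain `str.format` call (how the app actually fills the slots is not shown in this diff); the variable names are hypothetical. Since `str.format` raises `KeyError` for any named field it is not given a value for, the per-example placeholders must be written as `{{context}}` and `{{current}}` to survive the first call.

```python
SYSTEM_PROMPT = "You are a helpful assistant that provides concise and accurate answers."

# Doubled braces are emitted as literal {context}/{current} by the first .format() call,
# so they remain available as placeholders for the per-example fill.
chatml_input_template = (
    "<|im_start|>system\n{system_prompt}<|im_end|>\n"
    "<|im_start|>user\n{{context}}\n\n{{current}}<|im_end|>\n<|im_start|>assistant"
).format(system_prompt=SYSTEM_PROMPT)
chatml_contextless_template = (
    "<|im_start|>system\n{system_prompt}<|im_end|>\n"
    "<|im_start|>user\n{{current}}<|im_end|>\n<|im_start|>assistant"
).format(system_prompt=SYSTEM_PROMPT)

query = "How many inhabitants does Groningen have?"
context = "As of December 2021, Groningen had 235,287 inhabitants."  # shortened example context

with_context = chatml_input_template.format(current=query, context=context)
contextless = chatml_contextless_template.format(current=query)

# With single braces, the first .format() fails because "context" has no value.
try:
    "{system_prompt}\n{context}".format(system_prompt=SYSTEM_PROMPT)
except KeyError as err:
    missing_field = err.args[0]

print(missing_field)  # prints context
print(contextless)
```

The contextless template keeps the same system prompt and chat markup, so the two PECoRe passes differ only in the presence of the context.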

style.py

CHANGED

|

@@ -3,17 +3,39 @@ custom_css = """

|

|

| 3 |

text-align: center;

|

| 4 |

display: block;

|

| 5 |

margin-bottom: 0;

|

| 6 |

-

font-size:

|

| 7 |

}

|

| 8 |

|

| 9 |

.demo-subtitle {

|

| 10 |

text-align: center;

|

| 11 |

display: block;

|

| 12 |

margin-top: 0;

|

| 13 |

-

font-size: 1.

|

| 14 |

}

|

| 15 |

|

| 16 |

.summary-label {

|

| 17 |

display: inline;

|

| 18 |

}

|


| 19 |

"""

|

|

|

|

| 3 |

text-align: center;

|

| 4 |

display: block;

|

| 5 |

margin-bottom: 0;

|

| 6 |

+

font-size: 1.7em;

|

| 7 |

}

|

| 8 |

|

| 9 |

.demo-subtitle {

|

| 10 |

text-align: center;

|

| 11 |

display: block;

|

| 12 |

margin-top: 0;

|

| 13 |

+

font-size: 1.3em;

|

| 14 |

}

|

| 15 |

|

| 16 |

.summary-label {

|

| 17 |

display: inline;

|

| 18 |

}

|

| 19 |

+

|

| 20 |

+

.prose a:visited {

|

| 21 |

+

color: var(--link-text-color);

|

| 22 |

+

}

|

| 23 |

+

|

| 24 |

+

.footer-container {

|

| 25 |

+

align-items: center;

|

| 26 |

+

}

|

| 27 |

+

|

| 28 |

+

.footer-custom-block {

|

| 29 |

+

display: flex;

|

| 30 |

+

justify-content: center;

|

| 31 |

+

align-items: center;

|

| 32 |

+

}

|

| 33 |

+

|

| 34 |

+

.footer-custom-block b {

|

| 35 |

+

margin-right: 10px;

|

| 36 |

+

}

|

| 37 |

+

|

| 38 |

+

.footer-custom-block a {

|

| 39 |

+

margin-right: 15px;

|

| 40 |

+

}

|

| 41 |

"""

|