Fix bugs with CORA and Qwen

- app.py +39 -24
- contents.py +1 -3
- img/pecore_ui_output_example.png +0 -0
- presets.py +17 -17

app.py CHANGED

    @@ -17,7 +17,6 @@ from contents import (
     subtitle,
     title,
     powered_by,
    -support,
     )
     from gradio_highlightedtextbox import HighlightedTextbox
     from gradio_modal import Modal
    @@ -82,6 +81,8 @@ def pecore(
     model_kwargs=json.loads(model_kwargs),
     tokenizer_kwargs=json.loads(tokenizer_kwargs),
     )
    +if loaded_model.tokenizer.pad_token is None:
    +loaded_model.tokenizer.add_special_tokens({"pad_token": "[PAD]"})
     kwargs = {}
     if context_sensitivity_topk > 0:
     kwargs["context_sensitivity_topk"] = context_sensitivity_topk
    @@ -160,6 +161,8 @@ def preload_model(
     model_kwargs=json.loads(model_kwargs),
     tokenizer_kwargs=json.loads(tokenizer_kwargs),
     )
    +if loaded_model.tokenizer.pad_token is None:
    +loaded_model.tokenizer.add_special_tokens({"pad_token": "[PAD]"})


     with gr.Blocks(css=custom_css) as demo:
    @@ -372,7 +375,7 @@ with gr.Blocks(css=custom_css) as demo:
     gr.Markdown("#### Text Format Parameters")
     with gr.Row(equal_height=True):
     input_template = gr.Textbox(
    -value="<Q>:{current} <P>:{context}",
    +value="<Q>: {current} <P>: {context}",
     label="Contextual input template",
     info="Template to format the input for the model. Use {current} and {context} placeholders for Input Query and Input Context, respectively.",
     interactive=True,
    @@ -384,7 +387,7 @@ with gr.Blocks(css=custom_css) as demo:
     interactive=True,
     )
     contextless_input_template = gr.Textbox(
    -value="<Q>:{current}",
    +value="<Q>: {current}",
     label="Contextless input template",
     info="Template to format the input query in the non-contextual setting. Use {current} placeholder for Input Query.",
     interactive=True,
    @@ -488,25 +491,37 @@ with gr.Blocks(css=custom_css) as demo:
     gr.Markdown("If you use the Inseq implementation of PECoRe (<a href=\"https://inseq.org/en/latest/main_classes/cli.html#attribute-context\"><code>inseq attribute-context</code></a>, including this demo), please also cite:")
     gr.Code(inseq_citation, interactive=False, label="Inseq (Sarti et al., 2023)")
     with gr.Row(elem_classes="footer-container"):
    -gr.
    -
    +with gr.Column():
    +gr.Markdown(powered_by)
    +with gr.Column():
    +with gr.Row(elem_classes="footer-custom-block"):
    +with gr.Column(scale=0.25, min_width=150):
    +gr.Markdown("""<b>Built by <a href="https://gsarti.com" target="_blank">Gabriele Sarti</a><br> with the support of</b>""")
    +with gr.Column(scale=0.25, min_width=120):
    +gr.Markdown("""<a href='https://www.rug.nl/research/clcg/research/cl/' target='_blank'><img src="file/img/rug_logo_white_contour.png" width=170px /></a>""")
    +with gr.Column(scale=0.25, min_width=120):
    +gr.Markdown("""<a href='https://projects.illc.uva.nl/indeep/' target='_blank'><img src="file/img/indeep_logo_white_contour.png" width=100px /></a>""")
    +with gr.Column(scale=0.25, min_width=120):
    +gr.Markdown("""<a href='https://www.esciencecenter.nl/' target='_blank'><img src="file/img/escience_logo_white_contour.png" width=120px /></a>""")
     with Modal(visible=False) as code_modal:
     gr.Markdown(show_code_modal)
     with gr.Row(equal_height=True):
    -
    -
    -
    -
    -
    -
    -
    -
    -
    -
    -
    -
    -
    -
    +with gr.Column(scale=0.5):
    +python_code_snippet = gr.Code(
    +value="""Generate Python code snippet by pressing the button.""",
    +language="python",
    +label="Python",
    +interactive=False,
    +show_label=True,
    +)
    +with gr.Column(scale=0.5):
    +shell_code_snippet = gr.Code(
    +value="""Generate Shell code snippet by pressing the button.""",
    +language="shell",
    +label="Shell",
    +interactive=False,
    +show_label=True,
    +)

     # Main logic

    @@ -604,8 +619,8 @@ with gr.Blocks(css=custom_css) as demo:
     model_name_or_path,
     input_template,
     contextless_input_template,
    -decoder_input_output_separator,
     special_tokens_to_keep,
    +generation_kwargs,
     ],
     ).success(preload_model, inputs=load_model_args, cancels=load_model_event)

    @@ -620,8 +635,8 @@ with gr.Blocks(css=custom_css) as demo:
     model_name_or_path,
     input_template,
     contextless_input_template,
    -decoder_input_output_separator,
     special_tokens_to_keep,
    +generation_kwargs,
     ],
     ).success(preload_model, inputs=load_model_args, cancels=load_model_event)

    @@ -631,8 +646,8 @@ with gr.Blocks(css=custom_css) as demo:
     model_name_or_path,
     input_template,
     contextless_input_template,
    -decoder_input_output_separator,
     special_tokens_to_keep,
    +generation_kwargs,
     ],
     ).success(preload_model, inputs=load_model_args, cancels=load_model_event)

    @@ -642,8 +657,8 @@ with gr.Blocks(css=custom_css) as demo:
     model_name_or_path,
     input_template,
     contextless_input_template,
    -decoder_input_output_separator,
     special_tokens_to_keep,
    +generation_kwargs,
     ],
     ).success(preload_model, inputs=load_model_args, cancels=load_model_event)

    @@ -653,7 +668,7 @@ with gr.Blocks(css=custom_css) as demo:
     model_name_or_path,
     input_template,
     contextless_input_template,
    -
    +generation_kwargs,
     ],
     ).success(preload_model, inputs=load_model_args, cancels=load_model_event)

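This commit adds `generation_kwargs` as an extra output of the preset events, and the chat presets fill it with the JSON string `'{\n\t"max_new_tokens": 50\n}'`. Since `model_kwargs` and `tokenizer_kwargs` are decoded with `json.loads` before the model is loaded, a minimal sketch of decoding such a textbox value might look like the following. It assumes `generation_kwargs` is handled the same way (that code path is not part of this diff); `parse_kwargs_textbox` is a hypothetical helper, not a function of the app or of Inseq.

```python
import json

# Preset value copied from presets.py: a JSON object stored as a Python string.
# The "\n" and "\t" escapes become real whitespace, which JSON ignores between tokens.
GENERATION_KWARGS_TEXT = '{\n\t"max_new_tokens": 50\n}'


def parse_kwargs_textbox(text: str) -> dict:
    """Hypothetical helper: turn a JSON kwargs textbox value into a dict.

    An empty or whitespace-only value means "no extra kwargs".
    Anything that is not a JSON object is rejected.
    """
    if not text or not text.strip():
        return {}
    parsed = json.loads(text)
    if not isinstance(parsed, dict):
        raise ValueError(f"expected a JSON object, got {type(parsed).__name__}")
    return parsed


generation_kwargs = parse_kwargs_textbox(GENERATION_KWARGS_TEXT)
print(generation_kwargs)  # {'max_new_tokens': 50}
```

Rejecting non-object JSON early gives a clearer error than failing later, when the value is unpacked as keyword arguments.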

contents.py

CHANGED

|

@@ -44,7 +44,7 @@ how_to_use = """

|

|

| 44 |

example_explanation = """

|

| 45 |

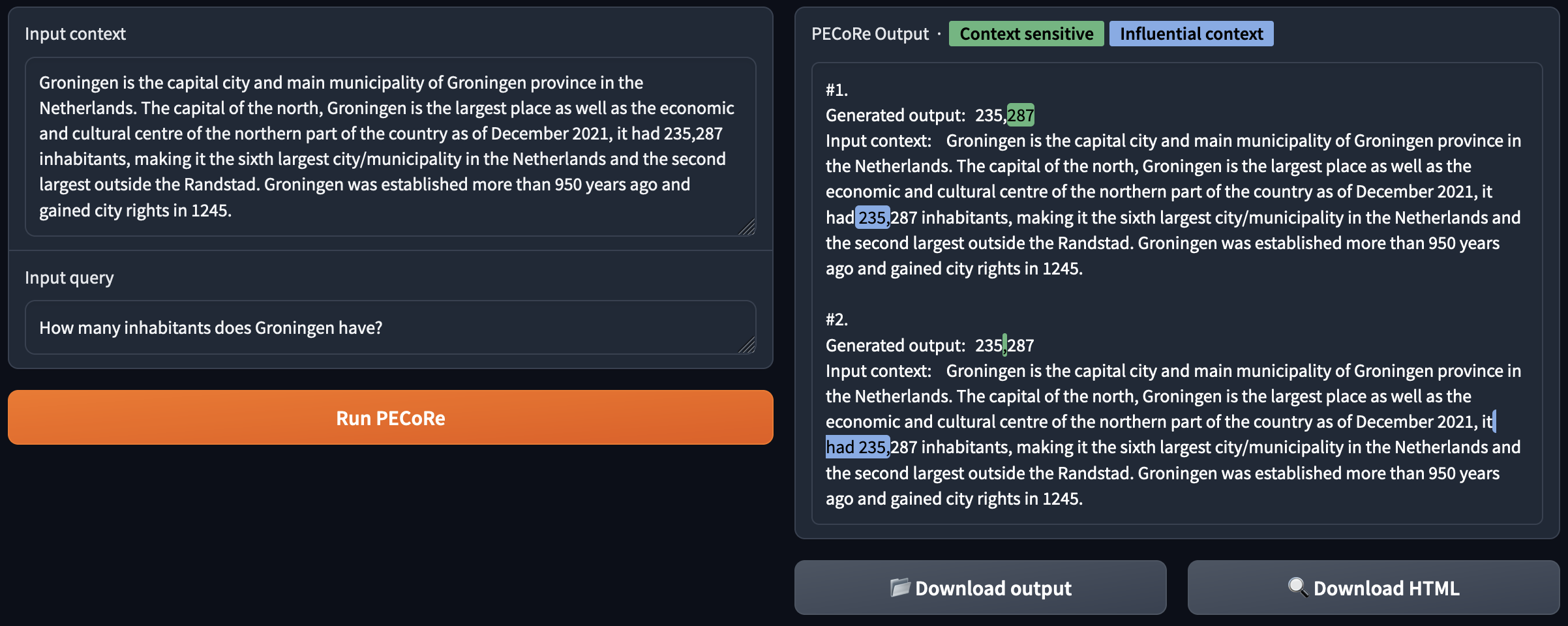

<p>Consider the following example, showing inputs and outputs of the <a href='https://huggingface.co/gsarti/cora_mgen' target='_blank'>CORA Multilingual QA</a> model provided as default in the interface, using default settings.</p>

|

| 46 |

<img src="file/img/pecore_ui_output_example.png" width=100% />

|

| 47 |

-

<p>The PECoRe CTI step identified two context-sensitive tokens in the generation (<code>287</code> and <code>,</code>), while the CCI step associated each of those with the most influential tokens in the context. It can be observed that in both cases

|

| 48 |

<h2>Usage tips</h3>

|

| 49 |

<ol>

|

| 50 |

<li>The <code>📂 Download output</code> button allows you to download the full JSON output produced by the Inseq CLI. It includes, among other things, the full set of CTI and CCI scores produced by PECoRe, tokenized versions of the input context and generated output and the full arguments used for the CLI call.</li>

|

|

@@ -95,8 +95,6 @@ inseq_citation = """@inproceedings{sarti-etal-2023-inseq,

|

|

| 95 |

|

| 96 |

powered_by = """<div class="footer-custom-block"><b>Powered by</b> <a href='https://github.com/inseq-team/inseq' target='_blank'><img src="file/img/inseq_logo_white_contour.png" width=150px /></a></div>"""

|

| 97 |

|

| 98 |

-

support = """<div class="footer-custom-block"><b>Built by <a href="https://gsarti.com" target="_blank">Gabriele Sarti</a><br> with the support of</b> <a href='https://www.rug.nl/research/clcg/research/cl/' target='_blank'><img src="file/img/rug_logo_white_contour.png" width=170px /></a><a href='https://projects.illc.uva.nl/indeep/' target='_blank'><img src="file/img/indeep_logo_white_contour.png" width=100px /></a><a href='https://www.esciencecenter.nl/' target='_blank'><img src="file/img/escience_logo_white_contour.png" width=120px /></a></div>"""

|

| 99 |

-

|

| 100 |

examples = [

|

| 101 |

[

|

| 102 |

"How many inhabitants does Groningen have?",

|

|

|

|

| 44 |

example_explanation = """

|

| 45 |

<p>Consider the following example, showing inputs and outputs of the <a href='https://huggingface.co/gsarti/cora_mgen' target='_blank'>CORA Multilingual QA</a> model provided as default in the interface, using default settings.</p>

|

| 46 |

<img src="file/img/pecore_ui_output_example.png" width=100% />

|

| 47 |

+

<p>The PECoRe CTI step identified two context-sensitive tokens in the generation (<code>287</code> and <code>,</code>), while the CCI step associated each of those with the most influential tokens in the context. It can be observed that in both cases the matching tokens stating the number of inhabitants are identified as salient (<code>,</code> and <code>287</code> for the generated <code>287</code>, while <code>235</code> is also found salient for the generated <code>,</code>). In this case, the influential context found by PECoRe is lexically equal to the generated output, but in principle better LMs might not use their inputs verbatim, hence the interest for using model internals with PECoRe.</p>

|

| 48 |

<h2>Usage tips</h3>

|

| 49 |

<ol>

|

| 50 |

<li>The <code>📂 Download output</code> button allows you to download the full JSON output produced by the Inseq CLI. It includes, among other things, the full set of CTI and CCI scores produced by PECoRe, tokenized versions of the input context and generated output and the full arguments used for the CLI call.</li>

|

|

|

|

| 95 |

|

| 96 |

powered_by = """<div class="footer-custom-block"><b>Powered by</b> <a href='https://github.com/inseq-team/inseq' target='_blank'><img src="file/img/inseq_logo_white_contour.png" width=150px /></a></div>"""

|

| 97 |

|

|

|

|

|

|

|

| 98 |

examples = [

|

| 99 |

[

|

| 100 |

"How many inhabitants does Groningen have?",

|

img/pecore_ui_output_example.png CHANGED

presets.py CHANGED

    @@ -5,8 +5,8 @@ SYSTEM_PROMPT = "You are a helpful assistant that provide concise and accurate a
     def set_cora_preset():
     return (
     "gsarti/cora_mgen", # model_name_or_path
    -"<Q>:{current} <P>:{context}", # input_template
    -"<Q>:{current}", # input_current_text_template
    +"<Q>: {current} <P>: {context}", # input_template
    +"<Q>: {current}", # input_current_text_template
     )


    @@ -29,20 +29,20 @@ def set_default_preset():
     def set_zephyr_preset():
     return (
     "stabilityai/stablelm-2-zephyr-1_6b", # model_name_or_path
    -"<|system|>{system_prompt}<|endoftext|>\n<|user|>\n{context}\n\n{current}<|endoftext|>\n<|assistant
    -"<|system|>{system_prompt}<|endoftext|>\n<|user|>\n{current}<|endoftext|>\n<|assistant
    -"\n", # decoder_input_output_separator
    +"<|system|>{system_prompt}<|endoftext|>\n<|user|>\n{context}\n\n{current}<|endoftext|>\n<|assistant|>\n".replace("{system_prompt}", SYSTEM_PROMPT), # input_template
    +"<|system|>{system_prompt}<|endoftext|>\n<|user|>\n{current}<|endoftext|>\n<|assistant|>\n".replace("{system_prompt}", SYSTEM_PROMPT), # input_current_text_template
     ["<|im_start|>", "<|im_end|>", "<|endoftext|>"], # special_tokens_to_keep
    +'{\n\t"max_new_tokens": 50\n}', # generation_kwargs
     )


     def set_chatml_preset():
     return (
     "Qwen/Qwen1.5-0.5B-Chat", # model_name_or_path
    -"<|im_start|>system\n{system_prompt}<|im_end|>\n<|im_start|>user\n{context}\n\n{current}<|im_end|>\n<|im_start|>assistant".
    -"<|im_start|>system\n{system_prompt}<|im_end|>\n<|im_start|>user\n{current}<|im_end|>\n<|im_start|>assistant".
    -"\n", # decoder_input_output_separator
    +"<|im_start|>system\n{system_prompt}<|im_end|>\n<|im_start|>user\n{context}\n\n{current}<|im_end|>\n<|im_start|>assistant\n".replace("{system_prompt}", SYSTEM_PROMPT), # input_template
    +"<|im_start|>system\n{system_prompt}<|im_end|>\n<|im_start|>user\n{current}<|im_end|>\n<|im_start|>assistant\n".replace("{system_prompt}", SYSTEM_PROMPT), # input_current_text_template
     ["<|im_start|>", "<|im_end|>"], # special_tokens_to_keep
    +'{\n\t"max_new_tokens": 50\n}', # generation_kwargs
     )


    @@ -58,19 +58,19 @@ def set_mmt_preset():
     def set_towerinstruct_preset():
     return (
     "Unbabel/TowerInstruct-7B-v0.1", # model_name_or_path
    -"<|im_start|>user\nSource: {current}\nContext: {context}\nTranslate the above text into French. Use the context to guide your answer.\nTarget:<|im_end|>\n<|im_start|>assistant", # input_template
    -"<|im_start|>user\nSource: {current}\nTranslate the above text into French.\nTarget:<|im_end|>\n<|im_start|>assistant", # input_current_text_template
    -"\n", # decoder_input_output_separator
    +"<|im_start|>user\nSource: {current}\nContext: {context}\nTranslate the above text into French. Use the context to guide your answer.\nTarget:<|im_end|>\n<|im_start|>assistant\n", # input_template
    +"<|im_start|>user\nSource: {current}\nTranslate the above text into French.\nTarget:<|im_end|>\n<|im_start|>assistant\n", # input_current_text_template
     ["<|im_start|>", "<|im_end|>"], # special_tokens_to_keep
    +'{\n\t"max_new_tokens": 50\n}', # generation_kwargs
     )

     def set_gemma_preset():
     return (
     "google/gemma-2b-it", # model_name_or_path
    -"<start_of_turn>user\n{context}\n{current}<end_of_turn>\n<start_of_turn>model", # input_template
    -"<start_of_turn>user\n{current}<end_of_turn>\n<start_of_turn>model", # input_current_text_template
    -"\n", # decoder_input_output_separator
    +"<start_of_turn>user\n{context}\n{current}<end_of_turn>\n<start_of_turn>model\n", # input_template
    +"<start_of_turn>user\n{current}<end_of_turn>\n<start_of_turn>model\n", # input_current_text_template
     ["<start_of_turn>", "<end_of_turn>"], # special_tokens_to_keep
    +'{\n\t"max_new_tokens": 50\n}', # generation_kwargs
     )

     def set_mistral_instruct_preset():
    @@ -78,7 +78,7 @@ def set_mistral_instruct_preset():
     "mistralai/Mistral-7B-Instruct-v0.2", # model_name_or_path
     "[INST]{context}\n{current}[/INST]", # input_template
     "[INST]{current}[/INST]", # input_current_text_template
    -
    +'{\n\t"max_new_tokens": 50\n}', # generation_kwargs
     )

     def update_code_snippets_fn(
    @@ -137,7 +137,7 @@ def update_code_snippets_fn(
     # Python
     python = f"""#!pip install inseq
     import inseq
    -from inseq.commands.attribute_context import attribute_context_with_model
    +from inseq.commands.attribute_context.attribute_context import attribute_context_with_model, AttributeContextArgs

     inseq_model = inseq.load_model(
     "{model_name_or_path}",
    @@ -160,7 +160,7 @@ pecore_args = AttributeContextArgs(
     viz_path="pecore_output.html",{py_get_kwargs_str(model_kwargs, "model_kwargs")}{py_get_kwargs_str(tokenizer_kwargs, "tokenizer_kwargs")}{py_get_kwargs_str(generation_kwargs, "generation_kwargs")}{py_get_kwargs_str(attribution_kwargs, "attribution_kwargs")}
     )

    -out = attribute_context_with_model(pecore_args,
    +out = attribute_context_with_model(pecore_args, inseq_model)"""
     # Bash
     bash = f"""# pip install inseq
     inseq attribute-context \\
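After this commit the chat presets bake the system prompt in with `.replace("{system_prompt}", SYSTEM_PROMPT)` and end each template with `\n`, which appears to take over the role of the removed `decoder_input_output_separator` field. The sketch below shows how such templates could be filled, assuming `str.format`-style substitution of `{current}` and `{context}` (the actual substitution happens inside Inseq and is not shown in this diff). `SYSTEM_PROMPT` is a placeholder because the real value is truncated above, and `render` is a hypothetical helper.

```python
from typing import Optional

# Hypothetical placeholder: the real SYSTEM_PROMPT in presets.py is truncated in this diff.
SYSTEM_PROMPT = "You are a helpful assistant."

# ChatML templates as written in set_chatml_preset() after this commit. The system
# prompt is baked in with str.replace, leaving {context} and {current} for later.
INPUT_TEMPLATE = (
    "<|im_start|>system\n{system_prompt}<|im_end|>\n"
    "<|im_start|>user\n{context}\n\n{current}<|im_end|>\n"
    "<|im_start|>assistant\n"
).replace("{system_prompt}", SYSTEM_PROMPT)
CONTEXTLESS_TEMPLATE = (
    "<|im_start|>system\n{system_prompt}<|im_end|>\n"
    "<|im_start|>user\n{current}<|im_end|>\n"
    "<|im_start|>assistant\n"
).replace("{system_prompt}", SYSTEM_PROMPT)


def render(template: str, current: str, context: Optional[str] = None) -> str:
    """Hypothetical helper: fill {current} (and {context}, if given) with str.format."""
    fields = {"current": current}
    if context is not None:
        fields["context"] = context
    return template.format(**fields)


# Filling only {system_prompt} with str.format fails, because format() requires
# every placeholder in the string; str.replace touches just the one it names.
try:
    "{system_prompt} {context} {current}".format(system_prompt=SYSTEM_PROMPT)
except KeyError as err:
    print("format() needs every field:", err)

prompt = render(INPUT_TEMPLATE, current="Question?", context="Retrieved passage.")
# The template now ends with "\n", so generation starts on a new line after the
# assistant header; previously this role appears to have been played by the
# removed decoder_input_output_separator field.
print(repr(prompt[-22:]))

# The CORA template now has a space after each tag.
print("<Q>: {current} <P>: {context}".format(current="Question?", context="Passage."))
```

Using `str.replace` for the system prompt keeps the preset strings valid templates for the later `{context}`/`{current}` substitution. It would still break if `SYSTEM_PROMPT` itself contained braces, since `str.format` would then read them as placeholders.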