Spaces:

Runtime error

Runtime error

Commit

•

f4fac26

1

Parent(s):

dfbdf47

Upload 59 files

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +1 -0

- .gitignore +27 -0

- LICENSE +201 -0

- README.en.md +457 -0

- README.md +474 -12

- accelerate.yaml +25 -0

- api_demo.py +104 -0

- app.py +37 -0

- cli_demo.py +105 -0

- config.py +139 -0

- data/my_test_dataset_2k.parquet +3 -0

- data/my_train_dataset_3k.parquet +3 -0

- data/my_valid_dataset_1k.parquet +3 -0

- dpo_train.py +203 -0

- eval/.gitignore +5 -0

- eval/c_eavl.ipynb +657 -0

- eval/cmmlu.ipynb +241 -0

- finetune_examples/.gitignore +3 -0

- finetune_examples/info_extract/data_process.py +146 -0

- finetune_examples/info_extract/finetune_IE_task.ipynb +463 -0

- img/api_example.png +0 -0

- img/dpo_loss.png +0 -0

- img/ie_task_chat.png +0 -0

- img/sentence_length.png +0 -0

- img/sft_loss.png +0 -0

- img/show1.png +0 -0

- img/stream_chat.gif +3 -0

- img/train_loss.png +0 -0

- model/__pycache__/chat_model.cpython-310.pyc +0 -0

- model/__pycache__/infer.cpython-310.pyc +0 -0

- model/chat_model.py +74 -0

- model/chat_model_config.py +4 -0

- model/dataset.py +290 -0

- model/infer.py +121 -0

- model/trainer.py +606 -0

- model_save/.gitattributes +35 -0

- model_save/README.md +0 -0

- model_save/config.json +33 -0

- model_save/configuration_chat_model.py +4 -0

- model_save/generation_config.json +7 -0

- model_save/model.safetensors +3 -0

- model_save/modeling_chat_model.py +74 -0

- model_save/put_model_files_here +0 -0

- model_save/special_tokens_map.json +5 -0

- model_save/tokenizer.json +0 -0

- model_save/tokenizer_config.json +66 -0

- pre_train.py +136 -0

- requirements.txt +29 -0

- sft_train.py +134 -0

- train.ipynb +82 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

img/stream_chat.gif filter=lfs diff=lfs merge=lfs -text

|

.gitignore

ADDED

|

@@ -0,0 +1,27 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

.vscode/*

|

| 2 |

+

.vscode

|

| 3 |

+

!.vscode/settings.json

|

| 4 |

+

!.vscode/tasks.json

|

| 5 |

+

!.vscode/launch.json

|

| 6 |

+

!.vscode/extensions.json

|

| 7 |

+

*.code-workspace

|

| 8 |

+

|

| 9 |

+

# Local History for Visual Studio Code

|

| 10 |

+

.history/

|

| 11 |

+

.idea/

|

| 12 |

+

|

| 13 |

+

# python cache

|

| 14 |

+

*.pyc

|

| 15 |

+

*.cache

|

| 16 |

+

|

| 17 |

+

logs/*

|

| 18 |

+

|

| 19 |

+

data/*

|

| 20 |

+

!/data/my_train_dataset_3k.parquet

|

| 21 |

+

!/data/my_test_dataset_2k.parquet

|

| 22 |

+

!/data/my_valid_dataset_1k.parquet

|

| 23 |

+

|

| 24 |

+

model_save/*

|

| 25 |

+

!model_save/put_model_files_here

|

| 26 |

+

|

| 27 |

+

wandb/*

|

LICENSE

ADDED

|

@@ -0,0 +1,201 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Apache License

|

| 2 |

+

Version 2.0, January 2004

|

| 3 |

+

http://www.apache.org/licenses/

|

| 4 |

+

|

| 5 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 6 |

+

|

| 7 |

+

1. Definitions.

|

| 8 |

+

|

| 9 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 10 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 11 |

+

|

| 12 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 13 |

+

the copyright owner that is granting the License.

|

| 14 |

+

|

| 15 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 16 |

+

other entities that control, are controlled by, or are under common

|

| 17 |

+

control with that entity. For the purposes of this definition,

|

| 18 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 19 |

+

direction or management of such entity, whether by contract or

|

| 20 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 21 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 22 |

+

|

| 23 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 24 |

+

exercising permissions granted by this License.

|

| 25 |

+

|

| 26 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 27 |

+

including but not limited to software source code, documentation

|

| 28 |

+

source, and configuration files.

|

| 29 |

+

|

| 30 |

+

"Object" form shall mean any form resulting from mechanical

|

| 31 |

+

transformation or translation of a Source form, including but

|

| 32 |

+

not limited to compiled object code, generated documentation,

|

| 33 |

+

and conversions to other media types.

|

| 34 |

+

|

| 35 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 36 |

+

Object form, made available under the License, as indicated by a

|

| 37 |

+

copyright notice that is included in or attached to the work

|

| 38 |

+

(an example is provided in the Appendix below).

|

| 39 |

+

|

| 40 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 41 |

+

form, that is based on (or derived from) the Work and for which the

|

| 42 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 43 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 44 |

+

of this License, Derivative Works shall not include works that remain

|

| 45 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 46 |

+

the Work and Derivative Works thereof.

|

| 47 |

+

|

| 48 |

+

"Contribution" shall mean any work of authorship, including

|

| 49 |

+

the original version of the Work and any modifications or additions

|

| 50 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 51 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 52 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 53 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 54 |

+

means any form of electronic, verbal, or written communication sent

|

| 55 |

+

to the Licensor or its representatives, including but not limited to

|

| 56 |

+

communication on electronic mailing lists, source code control systems,

|

| 57 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 58 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 59 |

+

excluding communication that is conspicuously marked or otherwise

|

| 60 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 61 |

+

|

| 62 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 63 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 64 |

+

subsequently incorporated within the Work.

|

| 65 |

+

|

| 66 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 67 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 68 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 69 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 70 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 71 |

+

Work and such Derivative Works in Source or Object form.

|

| 72 |

+

|

| 73 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 74 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 75 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 76 |

+

(except as stated in this section) patent license to make, have made,

|

| 77 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 78 |

+

where such license applies only to those patent claims licensable

|

| 79 |

+

by such Contributor that are necessarily infringed by their

|

| 80 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 81 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 82 |

+

institute patent litigation against any entity (including a

|

| 83 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 84 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 85 |

+

or contributory patent infringement, then any patent licenses

|

| 86 |

+

granted to You under this License for that Work shall terminate

|

| 87 |

+

as of the date such litigation is filed.

|

| 88 |

+

|

| 89 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 90 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 91 |

+

modifications, and in Source or Object form, provided that You

|

| 92 |

+

meet the following conditions:

|

| 93 |

+

|

| 94 |

+

(a) You must give any other recipients of the Work or

|

| 95 |

+

Derivative Works a copy of this License; and

|

| 96 |

+

|

| 97 |

+

(b) You must cause any modified files to carry prominent notices

|

| 98 |

+

stating that You changed the files; and

|

| 99 |

+

|

| 100 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 101 |

+

that You distribute, all copyright, patent, trademark, and

|

| 102 |

+

attribution notices from the Source form of the Work,

|

| 103 |

+

excluding those notices that do not pertain to any part of

|

| 104 |

+

the Derivative Works; and

|

| 105 |

+

|

| 106 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 107 |

+

distribution, then any Derivative Works that You distribute must

|

| 108 |

+

include a readable copy of the attribution notices contained

|

| 109 |

+

within such NOTICE file, excluding those notices that do not

|

| 110 |

+

pertain to any part of the Derivative Works, in at least one

|

| 111 |

+

of the following places: within a NOTICE text file distributed

|

| 112 |

+

as part of the Derivative Works; within the Source form or

|

| 113 |

+

documentation, if provided along with the Derivative Works; or,

|

| 114 |

+

within a display generated by the Derivative Works, if and

|

| 115 |

+

wherever such third-party notices normally appear. The contents

|

| 116 |

+

of the NOTICE file are for informational purposes only and

|

| 117 |

+

do not modify the License. You may add Your own attribution

|

| 118 |

+

notices within Derivative Works that You distribute, alongside

|

| 119 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 120 |

+

that such additional attribution notices cannot be construed

|

| 121 |

+

as modifying the License.

|

| 122 |

+

|

| 123 |

+

You may add Your own copyright statement to Your modifications and

|

| 124 |

+

may provide additional or different license terms and conditions

|

| 125 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 126 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 127 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 128 |

+

the conditions stated in this License.

|

| 129 |

+

|

| 130 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 131 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 132 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 133 |

+

this License, without any additional terms or conditions.

|

| 134 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 135 |

+

the terms of any separate license agreement you may have executed

|

| 136 |

+

with Licensor regarding such Contributions.

|

| 137 |

+

|

| 138 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 139 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 140 |

+

except as required for reasonable and customary use in describing the

|

| 141 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 142 |

+

|

| 143 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 144 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 145 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 146 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 147 |

+

implied, including, without limitation, any warranties or conditions

|

| 148 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 149 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 150 |

+

appropriateness of using or redistributing the Work and assume any

|

| 151 |

+

risks associated with Your exercise of permissions under this License.

|

| 152 |

+

|

| 153 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 154 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 155 |

+

unless required by applicable law (such as deliberate and grossly

|

| 156 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 157 |

+

liable to You for damages, including any direct, indirect, special,

|

| 158 |

+

incidental, or consequential damages of any character arising as a

|

| 159 |

+

result of this License or out of the use or inability to use the

|

| 160 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 161 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 162 |

+

other commercial damages or losses), even if such Contributor

|

| 163 |

+

has been advised of the possibility of such damages.

|

| 164 |

+

|

| 165 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 166 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 167 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 168 |

+

or other liability obligations and/or rights consistent with this

|

| 169 |

+

License. However, in accepting such obligations, You may act only

|

| 170 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 171 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 172 |

+

defend, and hold each Contributor harmless for any liability

|

| 173 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 174 |

+

of your accepting any such warranty or additional liability.

|

| 175 |

+

|

| 176 |

+

END OF TERMS AND CONDITIONS

|

| 177 |

+

|

| 178 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 179 |

+

|

| 180 |

+

To apply the Apache License to your work, attach the following

|

| 181 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 182 |

+

replaced with your own identifying information. (Don't include

|

| 183 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 184 |

+

comment syntax for the file format. We also recommend that a

|

| 185 |

+

file or class name and description of purpose be included on the

|

| 186 |

+

same "printed page" as the copyright notice for easier

|

| 187 |

+

identification within third-party archives.

|

| 188 |

+

|

| 189 |

+

Copyright [yyyy] [name of copyright owner]

|

| 190 |

+

|

| 191 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 192 |

+

you may not use this file except in compliance with the License.

|

| 193 |

+

You may obtain a copy of the License at

|

| 194 |

+

|

| 195 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 196 |

+

|

| 197 |

+

Unless required by applicable law or agreed to in writing, software

|

| 198 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 199 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 200 |

+

See the License for the specific language governing permissions and

|

| 201 |

+

limitations under the License.

|

README.en.md

ADDED

|

@@ -0,0 +1,457 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

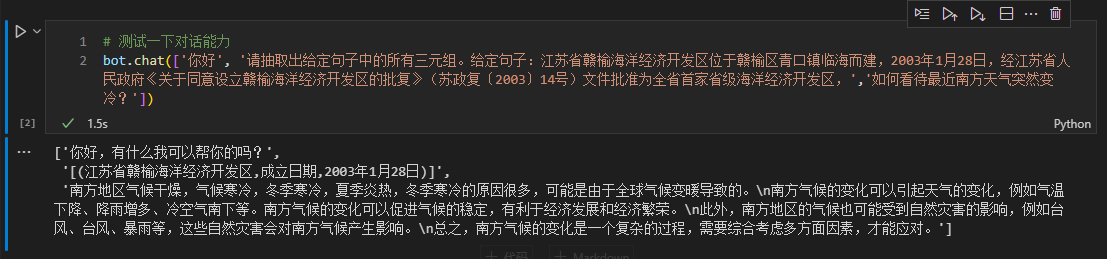

| 1 |

+

<div align="center">

|

| 2 |

+

|

| 3 |

+

# A Small Chat with Chinese Language Model: ChatLM-Chinese-0.2B

|

| 4 |

+

[中文](./README.md) | English

|

| 5 |

+

|

| 6 |

+

</div>

|

| 7 |

+

|

| 8 |

+

# 1. 👋Introduction

|

| 9 |

+

|

| 10 |

+

Today's large language models tend to have large parameters, and consumer-grade computers are slow to do simple inference, let alone train a model from scratch. The goal of this project is to train a generative language models from scratch, including data cleaning, tokenizer training, model pre-training, SFT instruction fine-tuning, RLHF optimization, etc.

|

| 11 |

+

|

| 12 |

+

ChatLM-mini-Chinese is a small Chinese chat model with only 0.2B (added shared weight is about 210M) parameters. It can be pre-trained on machine with a minimum of 4GB of GPU memory (`batch_size=1`, `fp16` or `bf16`), `float16` loading and inference only require a minimum of 512MB of GPU memory.

|

| 13 |

+

|

| 14 |

+

- Make public all pre-training, SFT instruction fine-tuning, and DPO preference optimization datasets sources.

|

| 15 |

+

- Use the `Huggingface` NLP framework, including `transformers`, `accelerate`, `trl`, `peft`, etc.

|

| 16 |

+

- Self-implemented `trainer`, supporting pre-training and SFT fine-tuning on a single machine with a single card or with multiple cards on a single machine. It supports stopping at any position during training and continuing training at any position.

|

| 17 |

+

- Pre-training: Integrated into end-to-end `Text-to-Text` pre-training, non-`mask` mask prediction pre-training.

|

| 18 |

+

- Open source all data cleaning (such as standardization, document deduplication based on mini_hash, etc.), data set construction, data set loading optimization and other processes;

|

| 19 |

+

- tokenizer multi-process word frequency statistics, supports tokenizer training of `sentencepiece` and `huggingface tokenizers`;

|

| 20 |

+

- Pre-training supports checkpoint at any step, and training can be continued from the breakpoint;

|

| 21 |

+

- Streaming loading of large datasets (GB level), supporting buffer data shuffling, does not use memory or hard disk as cache, effectively reducing memory and disk usage. configuring `batch_size=1, max_len=320`, supporting pre-training on a machine with at least 16GB RAM + 4GB GPU memory;

|

| 22 |

+

- Training log record.

|

| 23 |

+

- SFT fine-tuning: open source SFT dataset and data processing process.

|

| 24 |

+

- The self-implemented `trainer` supports prompt command fine-tuning and supports any breakpoint to continue training;

|

| 25 |

+

- Support `sequence to sequence` fine-tuning of `Huggingface trainer`;

|

| 26 |

+

- Supports traditional low learning rate and only trains fine-tuning of the decoder layer.

|

| 27 |

+

- RLHF Preference optimization: Use DPO to optimize all preferences.

|

| 28 |

+

- Support using `peft lora` for preference optimization;

|

| 29 |

+

- Supports model merging, `Lora adapter` can be merged into the original model.

|

| 30 |

+

- Support downstream task fine-tuning: [finetune_examples](./finetune_examples/info_extract/) gives a fine-tuning example of the **Triple Information Extraction Task**. The model dialogue capability after fine-tuning is still there.

|

| 31 |

+

|

| 32 |

+

If you need to do retrieval augmented generation (RAG) based on small models, you can refer to my other project [Phi2-mini-Chinese](https://github.com/charent/Phi2-mini-Chinese). For the code, see [rag_with_langchain.ipynb](https://github.com/charent/Phi2-mini-Chinese/blob/main/rag_with_langchain.ipynb)

|

| 33 |

+

|

| 34 |

+

🟢**Latest Update**

|

| 35 |

+

|

| 36 |

+

<details open>

|

| 37 |

+

<summary> <b>2024-01-30</b> </summary>

|

| 38 |

+

- The model files are updated to Moda modelscope and can be quickly downloaded through `snapshot_download`. <br/>

|

| 39 |

+

</details>

|

| 40 |

+

|

| 41 |

+

<details close>

|

| 42 |

+

<summary> <b>2024-01-07</b> </summary>

|

| 43 |

+

- Add document deduplication based on mini hash during the data cleaning process (in this project, it's to deduplicated the rows of datasets actually). Prevent the model from spitting out training data during inference after encountering multiple repeated data. <br/>

|

| 44 |

+

- Add the `DropDatasetDuplicate` class to implement deduplication of documents from large data sets. <br/>

|

| 45 |

+

</details>

|

| 46 |

+

|

| 47 |

+

<details close>

|

| 48 |

+

<summary> <b>2023-12-29</b> </summary>

|

| 49 |

+

- Update the model code (weights is NOT changed), you can directly use `AutoModelForSeq2SeqLM.from_pretrained(...)` to load the model for using. <br/>

|

| 50 |

+

- Updated readme documentation. <br/>

|

| 51 |

+

</details>

|

| 52 |

+

|

| 53 |

+

<details close>

|

| 54 |

+

<summary> <b>2023-12-18</b> </summary>

|

| 55 |

+

- Supplementary use of the `ChatLM-mini-0.2B` model to fine-tune the downstream triplet information extraction task code and display the extraction results. <br/>

|

| 56 |

+

- Updated readme documentation. <br/>

|

| 57 |

+

</details>

|

| 58 |

+

|

| 59 |

+

<details close>

|

| 60 |

+

<summary> <b>2023-12-14</b> </summary>

|

| 61 |

+

- Updated model weight files after SFT and DPO. <br/>

|

| 62 |

+

- Updated pre-training, SFT and DPO scripts. <br/>

|

| 63 |

+

- update `tokenizer` to `PreTrainedTokenizerFast`. <br/>

|

| 64 |

+

- Refactor the `dataset` code to support dynamic maximum length. The maximum length of each batch is determined by the longest text in the batch, saving GPU memory. <br/>

|

| 65 |

+

- Added `tokenizer` training details. <br/>

|

| 66 |

+

</details>

|

| 67 |

+

|

| 68 |

+

<details close>

|

| 69 |

+

<summary> <b>2023-12-04</b> </summary>

|

| 70 |

+

- Updated `generate` parameters and model effect display. <br/>

|

| 71 |

+

- Updated readme documentation. <br/>

|

| 72 |

+

</details>

|

| 73 |

+

|

| 74 |

+

<details close>

|

| 75 |

+

<summary> <b>2023-11-28</b> </summary>

|

| 76 |

+

- Updated dpo training code and model weights. <br/>

|

| 77 |

+

</details>

|

| 78 |

+

|

| 79 |

+

<details close>

|

| 80 |

+

<summary> <b>2023-10-19</b> </summary>

|

| 81 |

+

- The project is open source and the model weights are open for download. <br/>

|

| 82 |

+

</details>

|

| 83 |

+

|

| 84 |

+

# 2. 🛠️ChatLM-0.2B-Chinese model training process

|

| 85 |

+

## 2.1 Pre-training dataset

|

| 86 |

+

All datasets come from the **Single Round Conversation** dataset published on the Internet. After data cleaning and formatting, they are saved as parquet files. For the data processing process, see `utils/raw_data_process.py`. Main datasets include:

|

| 87 |

+

|

| 88 |

+

1. Community Q&A json version webtext2019zh-large-scale high-quality dataset, see: [nlp_chinese_corpus](https://github.com/brightmart/nlp_chinese_corpus). A total of 4.1 million, with 2.6 million remaining after cleaning.

|

| 89 |

+

2. baike_qa2019 encyclopedia Q&A, see: <https://aistudio.baidu.com/datasetdetail/107726>, a total of 1.4 million, and the remaining 1.3 million after waking up.

|

| 90 |

+

3. Chinese medical field question and answer dataset, see: [Chinese-medical-dialogue-data](https://github.com/Toyhom/Chinese-medical-dialogue-data), with a total of 790,000, and the remaining 790,000 after cleaning.

|

| 91 |

+

4. ~~Financial industry question and answer data, see: <https://zhuanlan.zhihu.com/p/609821974>, a total of 770,000, and the remaining 520,000 after cleaning. ~~**The data quality is too poor and not used. **

|

| 92 |

+

5. Zhihu question and answer data, see: [Zhihu-KOL](https://huggingface.co/datasets/wangrui6/Zhihu-KOL), with a total of 1 million rows, and 970,000 rows remain after cleaning.

|

| 93 |

+

6. belle open source instruction training data, introduction: [BELLE](https://github.com/LianjiaTech/BELLE), download: [BelleGroup](https://huggingface.co/BelleGroup), only select `Belle_open_source_1M` , `train_2M_CN`, and `train_3.5M_CN` contain some data with short answers, no complex table structure, and translation tasks (no English vocabulary list), totaling 3.7 million rows, and 3.38 million rows remain after cleaning.

|

| 94 |

+

7. Wikipedia entry data, piece together the entries into prompts, the first `N` words of the encyclopedia are the answers, use the encyclopedia data of `202309`, and after cleaning, the remaining 1.19 million entry prompts and answers . Wiki download: [zhwiki](https://dumps.wikimedia.org/zhwiki/), convert the downloaded bz2 file to wiki.txt reference: [WikiExtractor](https://github.com/apertium/WikiExtractor).

|

| 95 |

+

|

| 96 |

+

The total number of datasets is 10.23 million: Text-to-Text pre-training set: 9.3 million, evaluation set: 25,000 (because the decoding is slow, the evaluation set is not set too large). ~~Test set: 900,000~~

|

| 97 |

+

SFT fine-tuning and DPO optimization datasets are shown below.

|

| 98 |

+

|

| 99 |

+

## 2.2 Model

|

| 100 |

+

T5 model (Text-to-Text Transfer Transformer), for details, see the paper: [Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer](https://arxiv.org/abs/1910.10683).

|

| 101 |

+

|

| 102 |

+

The model source code comes from huggingface, see: [T5ForConditionalGeneration](https://github.com/huggingface/transformers/blob/main/src/transformers/models/t5/modeling_t5.py#L1557).

|

| 103 |

+

|

| 104 |

+

For model configuration, see [model_config.json](https://huggingface.co/charent/ChatLM-mini-Chinese/blob/main/config.json). The official `T5-base`: `encoder layer` and `decoder layer` are both 12 layers. In this project, these two parameters are modified to 10 layers.

|

| 105 |

+

|

| 106 |

+

Model parameters: 0.2B. Word list size: 29298, including only Chinese and a small amount of English.

|

| 107 |

+

|

| 108 |

+

## 2.3 Training process

|

| 109 |

+

hardware:

|

| 110 |

+

```bash

|

| 111 |

+

# Pre-training phase:

|

| 112 |

+

CPU: 28 vCPU Intel(R) Xeon(R) Gold 6330 CPU @ 2.00GHz

|

| 113 |

+

Memory: 60 GB

|

| 114 |

+

GPU: RTX A5000 (24GB) * 2

|

| 115 |

+

|

| 116 |

+

# sft and dpo stages:

|

| 117 |

+

CPU: Intel(R) i5-13600k @ 5.1GHz

|

| 118 |

+

Memory: 32 GB

|

| 119 |

+

GPU: NVIDIA GeForce RTX 4060 Ti 16GB * 1

|

| 120 |

+

```

|

| 121 |

+

|

| 122 |

+

1. **tokenizer training**: The existing `tokenizer` training library has OOM problems when encountering large corpus. Therefore, the full corpus is merged and constructed according to word frequency according to a method similar to `BPE`, and the operation takes half a day.

|

| 123 |

+

|

| 124 |

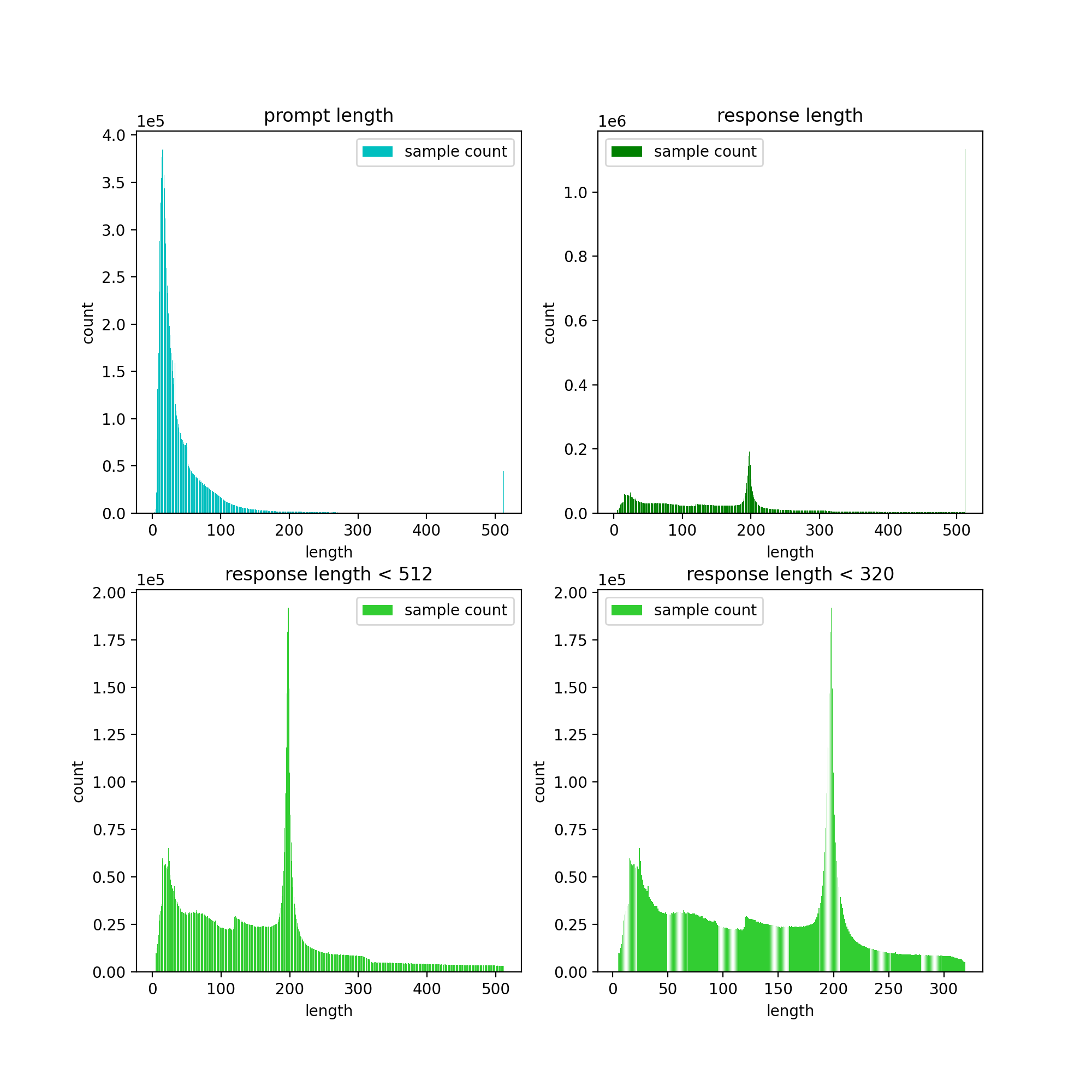

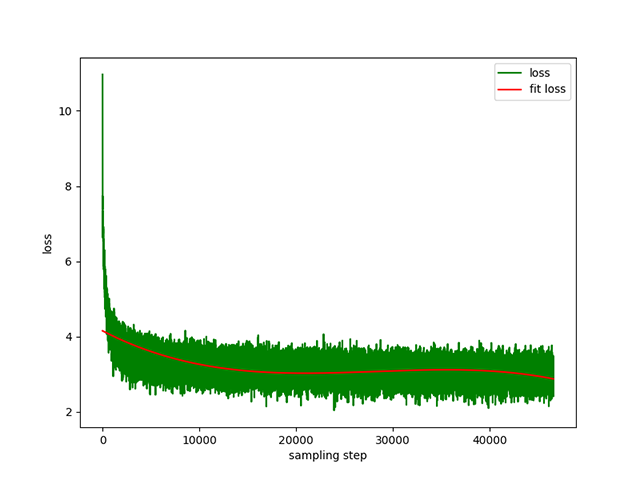

+

2. **Text-to-Text pre-training**: The learning rate is a dynamic learning rate from `1e-4` to `5e-3`, and the pre-training time is 8 days. Training loss:

|

| 125 |

+

|

| 126 |

+

|

| 127 |

+

3. **prompt supervised fine-tuning (SFT)**: Use the `belle` instruction training dataset (both instruction and answer lengths are below 512), with a dynamic learning rate from `1e-7` to `5e-5` , the fine-tuning time is 2 days. Fine-tuning loss:

|

| 128 |

+

|

| 129 |

+

|

| 130 |

+

4. **dpo direct preference optimization(RLHF)**: dataset [alpaca-gpt4-data-zh](https://huggingface.co/datasets/c-s-ale/alpaca-gpt4-data-zh) as `chosen` text , in step `2`, the SFT model performs batch `generate` on the prompts in the dataset, and obtains the `rejected` text, which takes 1 day, dpo full preference optimization, learning rate `le-5`, half precision `fp16`, total `2` `epoch`, taking 3h. dpo loss:

|

| 131 |

+

|

| 132 |

+

|

| 133 |

+

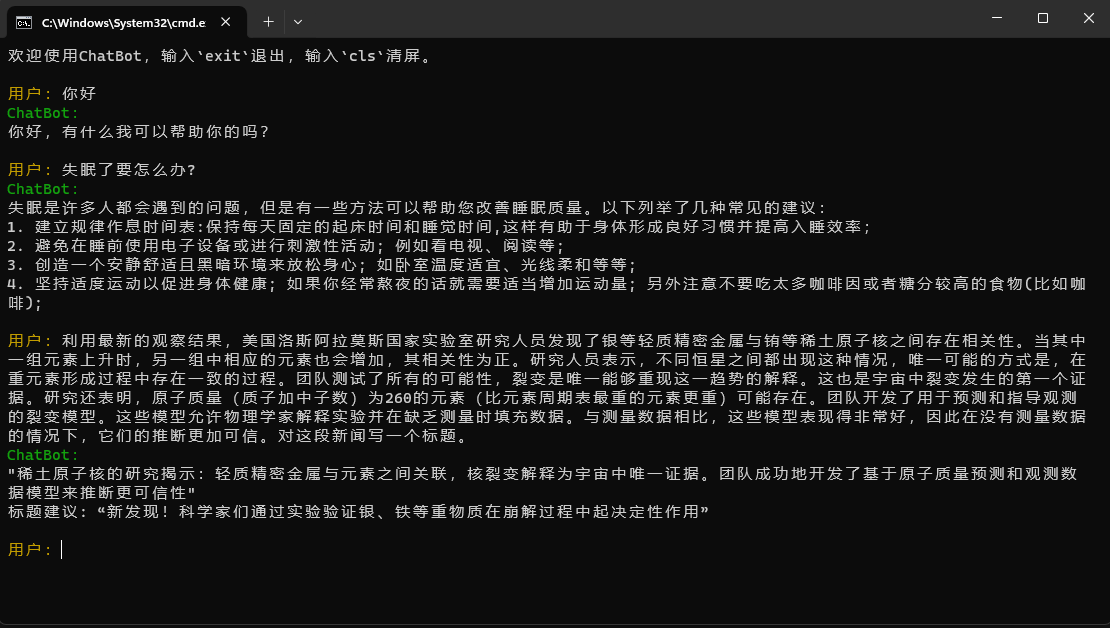

## 2.4 chat show

|

| 134 |

+

### 2.4.1 stream chat

|

| 135 |

+

By default, `TextIteratorStreamer` of `huggingface transformers` is used to implement streaming dialogue, and only `greedy search` is supported. If you need `beam sample` and other generation methods, please change the `stream_chat` parameter of `cli_demo.py` to `False` .

|

| 136 |

+

|

| 137 |

+

|

| 138 |

+

### 2.4.2 Dialogue show

|

| 139 |

+

|

| 140 |

+

|

| 141 |

+

There are problems: the pre-training dataset only has more than 9 million, and the model parameters are only 0.2B. It cannot cover all aspects, and there will be situations where the answer is wrong and the generator is nonsense.

|

| 142 |

+

|

| 143 |

+

# 3. 📑Instructions for using

|

| 144 |

+

## 3.1 Quick start:

|

| 145 |

+

```python

|

| 146 |

+

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

|

| 147 |

+

import torch

|

| 148 |

+

|

| 149 |

+

model_id = 'charent/ChatLM-mini-Chinese'

|

| 150 |

+

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

|

| 151 |

+

|

| 152 |

+

# 如果无法连接huggingface,打开以下两行代码的注释,将从modelscope下载模型文件,模型文件保存到'./model_save'目录

|

| 153 |

+

# from modelscope import snapshot_download

|

| 154 |

+

# model_id = snapshot_download(model_id, cache_dir='./model_save')

|

| 155 |

+

|

| 156 |

+

tokenizer = AutoTokenizer.from_pretrained(model_id)

|

| 157 |

+

model = AutoModelForSeq2SeqLM.from_pretrained(model_id, trust_remote_code=True).to(device)

|

| 158 |

+

|

| 159 |

+

txt = '如何评价Apple这家公司?'

|

| 160 |

+

|

| 161 |

+

encode_ids = tokenizer([txt])

|

| 162 |

+

input_ids, attention_mask = torch.LongTensor(encode_ids['input_ids']), torch.LongTensor(encode_ids['attention_mask'])

|

| 163 |

+

|

| 164 |

+

outs = model.my_generate(

|

| 165 |

+

input_ids=input_ids.to(device),

|

| 166 |

+

attention_mask=attention_mask.to(device),

|

| 167 |

+

max_seq_len=256,

|

| 168 |

+

search_type='beam',

|

| 169 |

+

)

|

| 170 |

+

|

| 171 |

+

outs_txt = tokenizer.batch_decode(outs.cpu().numpy(), skip_special_tokens=True, clean_up_tokenization_spaces=True)

|

| 172 |

+

print(outs_txt[0])

|

| 173 |

+

```

|

| 174 |

+

```txt

|

| 175 |

+

Apple是一家专注于设计和用户体验的公司,其产品在设计上注重简约、流畅和功能性,而在用户体验方面则注重用户的反馈和使用体验。作为一家领先的科技公司,苹果公司一直致力于为用户提供最优质的产品和服务,不断推陈出新,不断创新和改进,以满足不断变化的市场需求。

|

| 176 |

+

在iPhone、iPad和Mac等产品上,苹果公司一直保持着创新的态度,不断推出新的功能和设计,为用户提供更好的使用体验。在iPad上推出的iPad Pro和iPod touch等产品,也一直保持着优秀的用户体验。

|

| 177 |

+

此外,苹果公司还致力于开发和销售软件和服务,例如iTunes、iCloud和App Store等,这些产品在市场上也获得了广泛的认可和好评。

|

| 178 |

+

总的来说,苹果公司在设计、用户体验和产品创新方面都做得非常出色,为用户带来了许多便利和惊喜。

|

| 179 |

+

|

| 180 |

+

```

|

| 181 |

+

|

| 182 |

+

## 3.2 from clone code repository start

|

| 183 |

+

> [!CAUTION]

|

| 184 |

+

> The model of this project is the `TextToText` model. In the `prompt`, `response` and other fields of the pre-training stage, SFT stage, and RLFH stage, please be sure to add the `[EOS]` end-of-sequence mark.

|

| 185 |

+

|

| 186 |

+

### 3.2.1 Clone repository

|

| 187 |

+

```bash

|

| 188 |

+

git clone --depth 1 https://github.com/charent/ChatLM-mini-Chinese.git

|

| 189 |

+

|

| 190 |

+

cd ChatLM-mini-Chinese

|

| 191 |

+

```

|

| 192 |

+

### 3.2.2 Install dependencies

|

| 193 |

+

It is recommended to use `python 3.10` for this project. Older python versions may not be compatible with the third-party libraries it depends on.

|

| 194 |

+

|

| 195 |

+

pip installation:

|

| 196 |

+

```bash

|

| 197 |

+

pip install -r ./requirements.txt

|

| 198 |

+

```

|

| 199 |

+

|

| 200 |

+

If pip installed the CPU version of pytorch, you can install the CUDA version of pytorch with the following command:

|

| 201 |

+

```bash

|

| 202 |

+

# pip install torch + cu118

|

| 203 |

+

pip3 install torch --index-url https://download.pytorch.org/whl/cu118

|

| 204 |

+

```

|

| 205 |

+

|

| 206 |

+

conda installation:

|

| 207 |

+

```bash

|

| 208 |

+

conda install --yes --file ./requirements.txt

|

| 209 |

+

```

|

| 210 |

+

|

| 211 |

+

### 3.2.3 Download the pre-trained model and model configuration file

|

| 212 |

+

|

| 213 |

+

Download model weights and configuration files from `Hugging Face Hub` with `git` command, you need to install [Git LFS](https://docs.github.com/zh/repositories/working-with-files/managing-large-files/installing-git-large -file-storage), then run:

|

| 214 |

+

|

| 215 |

+

```bash

|

| 216 |

+

# Use the git command to download the huggingface model. Install [Git LFS] first, otherwise the downloaded model file will not be available.

|

| 217 |

+

git clone --depth 1 https://huggingface.co/charent/ChatLM-mini-Chinese

|

| 218 |

+

|

| 219 |

+

# If unable to connect huggingface, please download from modelscope

|

| 220 |

+

git clone --depth 1 https://www.modelscope.cn/charent/ChatLM-mini-Chinese.git

|

| 221 |

+

|

| 222 |

+

mv ChatLM-mini-Chinese model_save

|

| 223 |

+

```

|

| 224 |

+

|

| 225 |

+

You can also manually download it directly from the `Hugging Face Hub` warehouse [ChatLM-mini-Chinese](https://huggingface.co/charent/ChatLM-mini-Chinese) and move the downloaded file to the `model_save` directory. .

|

| 226 |

+

|

| 227 |

+

|

| 228 |

+

## 3.3 Tokenizer training

|

| 229 |

+

|

| 230 |

+

1. Prepare txt corpus

|

| 231 |

+

|

| 232 |

+

The corpus requirements should be as complete as possible. It is recommended to add multiple corpora, such as encyclopedias, codes, papers, blogs, conversations, etc.

|

| 233 |

+

|

| 234 |

+

This project is mainly based on wiki Chinese encyclopedia. How to obtain Chinese wiki corpus: Chinese Wiki download address: [zhwiki](https://dumps.wikimedia.org/zhwiki/), download the `zhwiki-[archive date]-pages-articles-multistream.xml.bz2` file, About 2.7GB, convert the downloaded bz2 file to wiki.txt reference: [WikiExtractor](https://github.com/apertium/WikiExtractor), then use python's `OpenCC` library to convert to Simplified Chinese, and finally get the Just put `wiki.simple.txt` in the `data` directory of the project root directory. Please merge multiple corpora into one `txt` file yourself.

|

| 235 |

+

|

| 236 |

+

Since training tokenizer consumes a lot of memory, if your corpus is very large (the merged `txt` file exceeds 2G), it is recommended to sample the corpus according to categories and proportions to reduce training time and memory consumption. Training a 1.7GB `txt` file requires about 48GB of memory (estimated, I only have 32GB, triggering swap frequently, computer stuck for a long time T_T), 13600k CPU takes about 1 hour.

|

| 237 |

+

|

| 238 |

+

2. train tokenizer

|

| 239 |

+

|

| 240 |

+

The difference between `char level` and `byte level` is as follows (Please search for information on your own for specific differences in use.). The tokenizer of `char level` is trained by default. If `byte level` is required, just set `token_type='byte'` in `train_tokenizer.py`.

|

| 241 |

+

|

| 242 |

+

```python

|

| 243 |

+

# original text

|

| 244 |

+

txt = '这是一段中英混输的句子, (chinese and English, here are words.)'

|

| 245 |

+

|

| 246 |

+

tokens = charlevel_tokenizer.tokenize(txt)

|

| 247 |

+

print(tokens)

|

| 248 |

+

# char level tokens output

|

| 249 |

+

# ['▁这是', '一段', '中英', '混', '输', '的', '句子', '▁,', '▁(', '▁ch', 'inese', '▁and', '▁Eng', 'lish', '▁,', '▁h', 'ere', '▁', 'are', '▁w', 'ord', 's', '▁.', '▁)']

|

| 250 |

+

|

| 251 |

+

tokens = bytelevel_tokenizer.tokenize(txt)

|

| 252 |

+

print(tokens)

|

| 253 |

+

# byte level tokens output

|

| 254 |

+

# ['Ġè¿Ļæĺ¯', 'ä¸Ģ段', 'ä¸Ńèĭ±', 'æ··', 'è¾ĵ', 'çļĦ', 'åı¥åŃIJ', 'Ġ,', 'Ġ(', 'Ġch', 'inese', 'Ġand', 'ĠEng', 'lish', 'Ġ,', 'Ġh', 'ere', 'Ġare', 'Ġw', 'ord', 's', 'Ġ.', 'Ġ)']

|

| 255 |

+

```

|

| 256 |

+

|

| 257 |

+

Start training:

|

| 258 |

+

|

| 259 |

+

```python

|

| 260 |

+

# Make sure your training corpus `txt` file is in the data directory

|

| 261 |

+

python train_tokenizer.py

|

| 262 |

+

```

|

| 263 |

+

|

| 264 |

+

## 3.4 Text-to-Text pre-training

|

| 265 |

+

1. Pre-training dataset example

|

| 266 |

+

```json

|

| 267 |

+

{

|

| 268 |

+

"prompt": "对于花园街,你有什么了解或看法吗?",

|

| 269 |

+

"response": "花园街(是香港油尖旺区的一条富有特色的街道,位于九龙旺角东部,北至界限街,南至登打士街,与通菜街及洗衣街等街道平行。现时这条街道是香港著名的购物区之一。位于亚皆老街以南的一段花园街,也就是\"波鞋街\"整条街约150米长,有50多间售卖运动鞋和运动用品的店舖。旺角道至太子道西一段则为排档区,售卖成衣、蔬菜和水果等。花园街一共分成三段。明清时代,花园街是芒角村栽种花卉的地方。此外,根据历史专家郑宝鸿的考证:花园街曾是1910年代东方殷琴拿烟厂的花园。纵火案。自2005年起,花园街一带最少发生5宗纵火案,当中4宗涉及排档起火。2010年。2010年12月6日,花园街222号一个卖鞋的排档于凌晨5时许首先起火,浓烟涌往旁边住宅大厦,消防接报4"

|

| 270 |

+

}

|

| 271 |

+

```

|

| 272 |

+

|

| 273 |

+

2. jupyter-lab or jupyter notebook:

|

| 274 |

+

|

| 275 |

+

See the file `train.ipynb`. It is recommended to use jupyter-lab to avoid considering the situation where the terminal process is killed after disconnecting from the server.

|

| 276 |

+

|

| 277 |

+

3. Console:

|

| 278 |

+

|

| 279 |

+

Console training needs to consider that the process will be killed after the connection is disconnected. It is recommended to use the process daemon tool `Supervisor` or `screen` to establish a connection session.

|

| 280 |

+

|

| 281 |

+

First, configure `accelerate`, execute the following command, and select according to the prompts. Refer to `accelerate.yaml`, *Note: DeepSpeed installation in Windows is more troublesome*.

|

| 282 |

+

```bash

|

| 283 |

+

accelerate config

|

| 284 |

+

```

|

| 285 |

+

|

| 286 |

+

Start training. If you want to use the configuration provided by the project, please add the parameter `--config_file ./accelerate.yaml` after the following command `accelerate launch`. *This configuration is based on the single-machine 2xGPU configuration.*

|

| 287 |

+

|

| 288 |

+

*There are two scripts for pre-training. The trainer implemented in this project corresponds to `train.py`, and the trainer implemented by huggingface corresponds to `pre_train.py`. You can use either one and the effect will be the same. The training information display of the trainer implemented in this project is more beautiful, and it is easier to modify the training details (such as loss function, log records, etc.). All support checkpoint to continue training. The trainer implemented in this project supports continuing training after a breakpoint at any position. Press ` ctrl+c` will save the breakpoint information when exiting the script.*

|

| 289 |

+

|

| 290 |

+

Single machine and single card:

|

| 291 |

+

```bash

|

| 292 |

+

# The trainer implemented in this project

|

| 293 |

+

accelerate launch ./train.py train

|

| 294 |

+

|

| 295 |

+

# Or use huggingface trainer

|

| 296 |

+

accelerate launch --multi_gpu --num_processes 2 pre_train.py

|

| 297 |

+

```

|

| 298 |

+

|

| 299 |

+

Single machine with multiple GPUs:

|

| 300 |

+

'2' is the number of gpus, please modify it according to your actual situation.

|

| 301 |

+

```bash

|

| 302 |

+

# The trainer implemented in this project

|

| 303 |

+

accelerate launch --multi_gpu --num_processes 2 ./train.py train

|

| 304 |

+

|

| 305 |

+

# Or use huggingface trainer

|

| 306 |

+

accelerate launch --multi_gpu --num_processes 2 pre_train.py

|

| 307 |

+

```

|

| 308 |

+

|

| 309 |

+

Continue training from the breakpoint:

|

| 310 |

+

```bash

|

| 311 |

+

# The trainer implemented in this project

|

| 312 |

+

accelerate launch --multi_gpu --num_processes 2 ./train.py train --is_keep_training=True

|

| 313 |

+

|

| 314 |

+

# Or use huggingface trainer

|

| 315 |

+

# You need to add `resume_from_checkpoint=True` to the `train` function in `pre_train.py`

|

| 316 |

+

python pre_train.py

|

| 317 |

+

```

|

| 318 |

+

|

| 319 |

+

## 3.5 Supervised Fine-tuning, SFT

|

| 320 |

+

|

| 321 |

+

The SFT dataset all comes from the contribution of [BELLE](https://github.com/LianjiaTech/BELLE). Thank you. The SFT datasets are: [generated_chat_0.4M](https://huggingface.co/datasets/BelleGroup/generated_chat_0.4M), [train_0.5M_CN](https://huggingface.co/datasets/BelleGroup/train_0.5M_CN ) and [train_2M_CN](https://huggingface.co/datasets/BelleGroup/train_2M_CN), about 1.37 million rows remain after cleaning.

|

| 322 |

+

Example of fine-tuning dataset with sft command:

|

| 323 |

+

|

| 324 |

+

```json

|

| 325 |

+

{

|

| 326 |

+

"prompt": "解释什么是欧洲启示录",

|

| 327 |

+

"response": "欧洲启示录(The Book of Revelation)是新约圣经的最后一卷书,也被称为《启示录》、《默示录》或《约翰默示录》。这本书从宗教的角度描述了世界末日的来临,以及上帝对世界的审判和拯救。 书中的主题包括来临的基督的荣耀,上帝对人性的惩罚和拯救,以及魔鬼和邪恶力量的存在。欧洲启示录是一个充满象征和暗示的文本,对于解读和理解有许多不同的方法和观点。"

|

| 328 |

+

}

|

| 329 |

+

```

|

| 330 |

+

Make your own dataset by referring to the sample `parquet` file in the `data` directory. The dataset format is: the `parquet` file is divided into two columns, one column of `prompt` text, representing the prompt, and one column of `response` text, representing the expected model. output.

|

| 331 |

+

For fine-tuning details, see the `train` method under `model/trainer.py`. When `is_finetune` is set to `True`, fine-tuning will be performed. Fine-tuning will freeze the embedding layer and encoder layer by default, and only train the decoder layer. If you need to freeze other parameters, please adjust the code yourself.

|

| 332 |

+

|

| 333 |

+

Run SFT fine-tuning:

|

| 334 |

+

```bash

|

| 335 |

+

# For the trainer implemented in this project, just add the parameter `--is_finetune=True`. The parameter `--is_keep_training=True` can continue training from any breakpoint.

|

| 336 |

+

accelerate launch --multi_gpu --num_processes 2 ./train.py --is_finetune=True

|

| 337 |

+

|

| 338 |

+

# Or use huggingface trainer

|

| 339 |

+

python sft_train.py

|

| 340 |

+

```

|

| 341 |

+

|

| 342 |

+

## 3.6 RLHF (Reinforcement Learning Human Feedback Optimization Method)

|

| 343 |

+

|

| 344 |

+

Here are two common preferred methods: PPO and DPO. Please search papers and blogs for specific implementations.

|

| 345 |

+

|

| 346 |

+

1. PPO method (approximate preference optimization, Proximal Policy Optimization)

|

| 347 |

+

Step 1: Use the fine-tuning dataset to do supervised fine-tuning (SFT, Supervised Finetuning).

|

| 348 |

+

Step 2: Use the preference dataset (a prompt contains at least 2 responses, one wanted response and one unwanted response. Multiple responses can be sorted by score, with the most wanted one having the highest score) to train the reward model (RM, Reward Model). You can use the `peft` library to quickly build the Lora reward model.

|

| 349 |

+

Step 3: Use RM to perform supervised PPO training on the SFT model so that the model meets preferences.

|

| 350 |

+

|

| 351 |

+

2. Use DPO (Direct Preference Optimization) fine-tuning (**This project uses the DPO fine-tuning method, which saves GPU memory**)

|

| 352 |

+

On the basis of obtaining the SFT model, there is no need to train the reward model, and fine-tuning can be started by obtaining the positive answer (chosen) and the negative answer (rejected). The fine-tuned `chosen` text comes from the original dataset [alpaca-gpt4-data-zh](https://huggingface.co/datasets/c-s-ale/alpaca-gpt4-data-zh), and the rejected text `rejected` comes from SFT Model output after fine-tuning 1 epoch, two other datasets: [huozi_rlhf_data_json](https://huggingface.co/datasets/Skepsun/huozi_rlhf_data_json) and [rlhf-reward-single-round-trans_chinese](https:// huggingface.co/datasets/beyond/rlhf-reward-single-round-trans_chinese), a total of 80,000 dpo data after the merger.

|

| 353 |

+

|

| 354 |

+

For the dpo dataset processing process, see `utils/dpo_data_process.py`.

|

| 355 |

+

|

| 356 |

+

DPO preference optimization dataset example:

|

| 357 |

+

```json

|

| 358 |

+

{

|

| 359 |

+

"prompt": "为给定的产品创建一个创意标语。,输入:可重复使用的水瓶。",

|

| 360 |

+

"chosen": "\"保护地球,从拥有可重复使用的水瓶开始!\"",

|

| 361 |

+

"rejected": "\"让你的水瓶成为你的生活伴侣,使用可重复使用的水瓶,让你的水瓶成为你的伙伴\""

|

| 362 |

+

}

|

| 363 |

+

```

|

| 364 |

+

Run preference optimization:

|

| 365 |

+

```bash

|

| 366 |

+

pythondpo_train.py

|

| 367 |

+

```

|

| 368 |

+

|

| 369 |

+

## 3.7 Infering

|

| 370 |

+

Make sure there are the following files in the `model_save` directory, These files can be found in the `Hugging Face Hub` repository [ChatLM-Chinese-0.2B](https://huggingface.co/charent/ChatLM-mini-Chinese)::

|

| 371 |

+

```bash

|

| 372 |

+

ChatLM-mini-Chinese

|

| 373 |

+

├─model_save

|

| 374 |

+

| ├─config.json

|

| 375 |

+

| ├─configuration_chat_model.py

|

| 376 |

+

| ���─generation_config.json

|

| 377 |

+

| ├─model.safetensors

|

| 378 |

+

| ├─modeling_chat_model.py

|

| 379 |

+

| ├─special_tokens_map.json

|

| 380 |

+

| ├─tokenizer.json

|

| 381 |

+

| └─tokenizer_config.json

|

| 382 |

+

```

|

| 383 |

+

|

| 384 |

+

1. Console run:

|

| 385 |

+

```bash

|

| 386 |

+

python cli_demo.py

|

| 387 |

+

```

|

| 388 |

+

|

| 389 |

+

2. API call

|

| 390 |

+

```bash

|

| 391 |

+

python api_demo.py

|

| 392 |

+

```

|

| 393 |

+

|

| 394 |

+

API call example:

|

| 395 |

+

API调用示例:

|

| 396 |

+

```bash

|

| 397 |

+

curl --location '127.0.0.1:8812/api/chat' \

|

| 398 |

+

--header 'Content-Type: application/json' \

|

| 399 |

+

--header 'Authorization: Bearer Bearer' \

|

| 400 |

+

--data '{

|

| 401 |

+

"input_txt": "感冒了要怎么办"

|

| 402 |

+

}'

|

| 403 |

+

```

|

| 404 |

+

|

| 405 |

+

|

| 406 |

+

## 3.8 Fine-tuning of downstream tasks

|

| 407 |

+

|

| 408 |

+

Here we take the triplet information in the text as an example to do downstream fine-tuning. Traditional deep learning extraction methods for this task can be found in the repository [pytorch_IE_model](https://github.com/charent/pytorch_IE_model). Extract all the triples in a piece of text, such as the sentence `"Sketching Essays" is a book published by Metallurgical Industry in 2006, the author is Zhang Lailiang`, extract the triples `(Sketching Essays, author, Zhang Lailiang)` and `( Sketching essays, publishing house, metallurgical industry)`.

|

| 409 |

+

|

| 410 |

+

The original dataset is: [Baidu Triplet Extraction dataset](https://aistudio.baidu.com/datasetdetail/11384). Example of the processed fine-tuned dataset format:

|

| 411 |

+

```json

|

| 412 |

+

{

|

| 413 |

+

"prompt": "请抽取出给定句子中的所有三元组。给定句子:《家乡的月亮》是宋雪莱演唱的一首歌曲,所属专辑是《久违的哥们》",

|

| 414 |

+

"response": "[(家乡的月亮,歌手,宋雪莱),(家乡的月亮,所属专辑,久违的哥们)]"

|

| 415 |

+

}

|

| 416 |

+

```

|

| 417 |

+

|

| 418 |

+