shenchucheng committed faf9dc6 (parent: 4e8e742)

create meatgpt webui

This view is limited to 50 files because it contains too many changes.

- .agent-store-config.yaml +1 -1

- .agent-store-config.yaml.example +0 -9

- .dockerignore +1 -0

- Dockerfile +8 -10

- README.md +1 -260

- metagpt/web/app.py → app.py +56 -10

- config/config.yaml +58 -22

- docs/FAQ-EN.md +0 -181

- docs/README_CN.md +0 -201

- docs/README_JA.md +0 -210

- docs/ROADMAP.md +0 -84

- docs/resources/20230811-214014.jpg +0 -3

- docs/resources/MetaGPT-WeChat-Personal.jpeg +0 -0

- docs/resources/MetaGPT-WorkWeChatGroup-6.jpg +0 -3

- docs/resources/MetaGPT-logo.jpeg +0 -0

- docs/resources/MetaGPT-logo.png +0 -3

- docs/resources/software_company_cd.jpeg +0 -0

- docs/resources/software_company_sd.jpeg +0 -0

- docs/resources/workspace/content_rec_sys/resources/competitive_analysis.pdf +0 -0

- docs/resources/workspace/content_rec_sys/resources/competitive_analysis.png +0 -3

- docs/resources/workspace/content_rec_sys/resources/competitive_analysis.svg +0 -3

- docs/resources/workspace/content_rec_sys/resources/data_api_design.pdf +0 -0

- docs/resources/workspace/content_rec_sys/resources/data_api_design.png +0 -3

- docs/resources/workspace/content_rec_sys/resources/data_api_design.svg +0 -3

- docs/resources/workspace/content_rec_sys/resources/seq_flow.pdf +0 -0

- docs/resources/workspace/content_rec_sys/resources/seq_flow.png +0 -3

- docs/resources/workspace/content_rec_sys/resources/seq_flow.svg +0 -3

- docs/resources/workspace/llmops_framework/resources/competitive_analysis.pdf +0 -0

- docs/resources/workspace/llmops_framework/resources/competitive_analysis.png +0 -3

- docs/resources/workspace/llmops_framework/resources/competitive_analysis.svg +0 -3

- docs/resources/workspace/llmops_framework/resources/data_api_design.pdf +0 -0

- docs/resources/workspace/llmops_framework/resources/data_api_design.png +0 -3

- docs/resources/workspace/llmops_framework/resources/data_api_design.svg +0 -3

- docs/resources/workspace/llmops_framework/resources/seq_flow.pdf +0 -0

- docs/resources/workspace/llmops_framework/resources/seq_flow.png +0 -3

- docs/resources/workspace/llmops_framework/resources/seq_flow.svg +0 -3

- docs/resources/workspace/match3_puzzle_game/resources/competitive_analysis.pdf +0 -0

- docs/resources/workspace/match3_puzzle_game/resources/competitive_analysis.png +0 -3

- docs/resources/workspace/match3_puzzle_game/resources/competitive_analysis.svg +0 -3

- docs/resources/workspace/match3_puzzle_game/resources/data_api_design.pdf +0 -0

- docs/resources/workspace/match3_puzzle_game/resources/data_api_design.png +0 -3

- docs/resources/workspace/match3_puzzle_game/resources/data_api_design.svg +0 -3

- docs/resources/workspace/match3_puzzle_game/resources/seq_flow.pdf +0 -0

- docs/resources/workspace/match3_puzzle_game/resources/seq_flow.png +0 -3

- docs/resources/workspace/match3_puzzle_game/resources/seq_flow.svg +0 -3

- docs/resources/workspace/minimalist_pomodoro_timer/resources/competitive_analysis.pdf +0 -0

- docs/resources/workspace/minimalist_pomodoro_timer/resources/competitive_analysis.png +0 -3

- docs/resources/workspace/minimalist_pomodoro_timer/resources/competitive_analysis.svg +0 -3

- docs/resources/workspace/minimalist_pomodoro_timer/resources/data_api_design.pdf +0 -0

- docs/resources/workspace/minimalist_pomodoro_timer/resources/data_api_design.png +0 -3

.agent-store-config.yaml

CHANGED

```diff
@@ -1,6 +1,6 @@
 role:
   name: SoftwareCompany
-  module:
+  module: software_company
   skills:
     - name: WritePRD
     - name: WriteDesign
```

.agent-store-config.yaml.example

DELETED

```diff
@@ -1,9 +0,0 @@
-role:
-  name: Teacher # Referenced the `Teacher` in `metagpt/roles/teacher.py`.
-  module: metagpt.roles.teacher # Referenced `metagpt/roles/teacher.py`.
-  skills: # Refer to the skill `name` of the published skill in `.well-known/skills.yaml`.
-    - name: text_to_speech
-      description: Text-to-speech
-    - name: text_to_image
-      description: Create a drawing based on the text.
-
```
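The comments in the removed example describe `module` as the Python module that defines a role and `name` as the class inside it (`metagpt.roles.teacher` and `Teacher`). After this commit the entry points at a top-level `software_company` module, which matches the new `from software_company import RoleRun, SoftwareCompany` in `app.py`. A minimal sketch of how such an entry could be resolved, assuming the agent store uses `importlib` (the helper `load_role_class` is hypothetical, not MetaGPT's API):

```python
import importlib
from typing import Any, Mapping


def load_role_class(role_entry: Mapping[str, Any]) -> type:
    """Resolve a `role:` entry such as {"name": "SoftwareCompany", "module": "software_company"}.

    Hypothetical helper: assumes `module` is an importable dotted path and
    `name` is a class defined in that module.
    """
    module_path = role_entry.get("module")
    class_name = role_entry.get("name")
    if not module_path or not class_name:
        raise ValueError(f"role entry needs both 'module' and 'name': {dict(role_entry)!r}")
    module = importlib.import_module(module_path)
    try:
        cls = getattr(module, class_name)
    except AttributeError as exc:
        raise ImportError(f"{module_path!r} has no attribute {class_name!r}") from exc
    if not isinstance(cls, type):
        raise TypeError(f"{module_path}.{class_name} is not a class")
    return cls


if __name__ == "__main__":
    # A standard-library class keeps the sketch runnable without MetaGPT installed.
    print(load_role_class({"name": "OrderedDict", "module": "collections"}))
```

Failing loudly on a missing key or attribute surfaces a misconfigured YAML entry at startup rather than on the first request.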

.dockerignore

CHANGED

```diff
@@ -5,3 +5,4 @@ workspace
 dist
 data
 geckodriver.log
+logs
```

Dockerfile

CHANGED

```diff
@@ -4,7 +4,8 @@ FROM nikolaik/python-nodejs:python3.9-nodejs20-slim
 USER root
 
 # Install Debian software needed by MetaGPT and clean up in one RUN command to reduce image size
-RUN apt
+RUN sed -i 's/deb.debian.org/mirrors.ustc.edu.cn/g' /etc/apt/sources.list.d/debian.sources && \
+    apt update &&\
     apt install -y git chromium fonts-ipafont-gothic fonts-wqy-zenhei fonts-thai-tlwg fonts-kacst fonts-freefont-ttf libxss1 --no-install-recommends &&\
     apt clean && rm -rf /var/lib/apt/lists/*
 
@@ -12,20 +13,17 @@ RUN apt update &&\
 ENV CHROME_BIN="/usr/bin/chromium" \
     PUPPETEER_CONFIG="/app/metagpt/config/puppeteer-config.json"\
     PUPPETEER_SKIP_CHROMIUM_DOWNLOAD="true"
-
+
+RUN npm install -g @mermaid-js/mermaid-cli --registry=http://registry.npmmirror.com &&\
     npm cache clean --force
 
 # Install Python dependencies and install MetaGPT
 COPY requirements.txt requirements.txt
 
-RUN pip install --no-cache-dir -r requirements.txt
+RUN pip install --no-cache-dir -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple
 
-
-WORKDIR /app/metagpt
-RUN chmod -R 777 /app/metagpt/logs/ &&\
-    mkdir workspace &&\
-    chmod -R 777 /app/metagpt/workspace/ &&\
-    python -m pip install -e.
+WORKDIR /app
 
+COPY . .
 
-CMD ["
+CMD ["uvicorn", "app:app"]
```
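The new `CMD ["uvicorn", "app:app"]` relies on uvicorn's command-line defaults, which bind to `127.0.0.1` on port `8000`, whereas `main()` in `app.py` serves on `0.0.0.0:7860`. Inside a container, a server bound only to the loopback interface is usually not reachable through a published port. A small sketch, with a hypothetical helper `uvicorn_exec_cmd`, that renders an exec-form `CMD` with explicit `--host` and `--port` flags matching the values `main()` uses:

```python
import json


def uvicorn_exec_cmd(app_path: str, host: str = "0.0.0.0", port: int = 7860) -> str:
    """Render a Dockerfile exec-form CMD line for uvicorn.

    Hypothetical helper: the defaults mirror uvicorn.run(..., host="0.0.0.0", port=7860)
    in app.py's main(); uvicorn's own CLI defaults are 127.0.0.1 and 8000.
    """
    argv = ["uvicorn", app_path, "--host", host, "--port", str(port)]
    # The exec form must be a JSON array of strings, so json.dumps yields valid syntax.
    return "CMD " + json.dumps(argv)


print(uvicorn_exec_cmd("app:app"))
# CMD ["uvicorn", "app:app", "--host", "0.0.0.0", "--port", "7860"]
```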

README.md

CHANGED

```diff
@@ -1,260 +1 @@
-
-title: MetaGPT
-emoji: 🐼
-colorFrom: green
-colorTo: blue
-sdk: docker
-app_file: app.py
-pinned: false
----
-
-# MetaGPT: The Multi-Agent Framework
-
-<p align="center">
-<a href=""><img src="docs/resources/MetaGPT-logo.jpeg" alt="MetaGPT logo: Enable GPT to work in software company, collaborating to tackle more complex tasks." width="150px"></a>
-</p>
-
-<p align="center">
-<b>Assign different roles to GPTs to form a collaborative software entity for complex tasks.</b>
-</p>
-
-<p align="center">
-<a href="docs/README_CN.md"><img src="https://img.shields.io/badge/文档-中文版-blue.svg" alt="CN doc"></a>
-<a href="README.md"><img src="https://img.shields.io/badge/document-English-blue.svg" alt="EN doc"></a>
-<a href="docs/README_JA.md"><img src="https://img.shields.io/badge/ドキュメント-日本語-blue.svg" alt="JA doc"></a>
-<a href="https://discord.gg/wCp6Q3fsAk"><img src="https://dcbadge.vercel.app/api/server/wCp6Q3fsAk?compact=true&style=flat" alt="Discord Follow"></a>
-<a href="https://opensource.org/licenses/MIT"><img src="https://img.shields.io/badge/License-MIT-yellow.svg" alt="License: MIT"></a>
-<a href="docs/ROADMAP.md"><img src="https://img.shields.io/badge/ROADMAP-路线图-blue" alt="roadmap"></a>
-<a href="docs/resources/MetaGPT-WeChat-Personal.jpeg"><img src="https://img.shields.io/badge/WeChat-微信-blue" alt="roadmap"></a>
-<a href="https://twitter.com/DeepWisdom2019"><img src="https://img.shields.io/twitter/follow/MetaGPT?style=social" alt="Twitter Follow"></a>
-</p>
-
-<p align="center">
-<a href="https://vscode.dev/redirect?url=vscode://ms-vscode-remote.remote-containers/cloneInVolume?url=https://github.com/geekan/MetaGPT"><img src="https://img.shields.io/static/v1?label=Dev%20Containers&message=Open&color=blue&logo=visualstudiocode" alt="Open in Dev Containers"></a>
-<a href="https://codespaces.new/geekan/MetaGPT"><img src="https://img.shields.io/badge/Github_Codespace-Open-blue?logo=github" alt="Open in GitHub Codespaces"></a>
-</p>
-
-1. MetaGPT takes a **one line requirement** as input and outputs **user stories / competitive analysis / requirements / data structures / APIs / documents, etc.**
-2. Internally, MetaGPT includes **product managers / architects / project managers / engineers.** It provides the entire process of a **software company along with carefully orchestrated SOPs.**
-   1. `Code = SOP(Team)` is the core philosophy. We materialize SOP and apply it to teams composed of LLMs.
-
-
-
-<p align="center">Software Company Multi-Role Schematic (Gradually Implementing)</p>
-
-## Examples (fully generated by GPT-4)
-
-For example, if you type `python startup.py "Design a RecSys like Toutiao"`, you would get many outputs, one of them is data & api design
-
-
-
-It costs approximately **$0.2** (in GPT-4 API fees) to generate one example with analysis and design, and around **$2.0** for a full project.
-
-## Installation
-
-### Installation Video Guide
-
-- [Matthew Berman: How To Install MetaGPT - Build A Startup With One Prompt!!](https://youtu.be/uT75J_KG_aY)
-
-### Traditional Installation
-
-```bash
-# Step 1: Ensure that NPM is installed on your system. Then install mermaid-js.
-npm --version
-sudo npm install -g @mermaid-js/mermaid-cli
-
-# Step 2: Ensure that Python 3.9+ is installed on your system. You can check this by using:
-python --version
-
-# Step 3: Clone the repository to your local machine, and install it.
-git clone https://github.com/geekan/metagpt
-cd metagpt
-python setup.py install
-```
-
-**Note:**
-
-- If already have Chrome, Chromium, or MS Edge installed, you can skip downloading Chromium by setting the environment variable
-  `PUPPETEER_SKIP_CHROMIUM_DOWNLOAD` to `true`.
-
-- Some people are [having issues](https://github.com/mermaidjs/mermaid.cli/issues/15) installing this tool globally. Installing it locally is an alternative solution,
-
-  ```bash
-  npm install @mermaid-js/mermaid-cli
-  ```
-
-- don't forget to the configuration for mmdc in config.yml
-
-  ```yml
-  PUPPETEER_CONFIG: "./config/puppeteer-config.json"
-  MMDC: "./node_modules/.bin/mmdc"
-  ```
-
-- if `python setup.py install` fails with error `[Errno 13] Permission denied: '/usr/local/lib/python3.11/dist-packages/test-easy-install-13129.write-test'`, try instead running `python setup.py install --user`
-
-### Installation by Docker
-
-```bash
-# Step 1: Download metagpt official image and prepare config.yaml
-docker pull metagpt/metagpt:v0.3.1
-mkdir -p /opt/metagpt/{config,workspace}
-docker run --rm metagpt/metagpt:v0.3.1 cat /app/metagpt/config/config.yaml > /opt/metagpt/config/key.yaml
-vim /opt/metagpt/config/key.yaml # Change the config
-
-# Step 2: Run metagpt demo with container
-docker run --rm \
-    --privileged \
-    -v /opt/metagpt/config/key.yaml:/app/metagpt/config/key.yaml \
-    -v /opt/metagpt/workspace:/app/metagpt/workspace \
-    metagpt/metagpt:v0.3.1 \
-    python startup.py "Write a cli snake game"
-
-# You can also start a container and execute commands in it
-docker run --name metagpt -d \
-    --privileged \
-    -v /opt/metagpt/config/key.yaml:/app/metagpt/config/key.yaml \
-    -v /opt/metagpt/workspace:/app/metagpt/workspace \
-    metagpt/metagpt:v0.3.1
-
-docker exec -it metagpt /bin/bash
-$ python startup.py "Write a cli snake game"
-```
-
-The command `docker run ...` do the following things:
-
-- Run in privileged mode to have permission to run the browser
-- Map host directory `/opt/metagpt/config` to container directory `/app/metagpt/config`
-- Map host directory `/opt/metagpt/workspace` to container directory `/app/metagpt/workspace`
-- Execute the demo command `python startup.py "Write a cli snake game"`
-
-### Build image by yourself
-
-```bash
-# You can also build metagpt image by yourself.
-git clone https://github.com/geekan/MetaGPT.git
-cd MetaGPT && docker build -t metagpt:custom .
-```
-
-## Configuration
-
-- Configure your `OPENAI_API_KEY` in any of `config/key.yaml / config/config.yaml / env`
-- Priority order: `config/key.yaml > config/config.yaml > env`
-
-```bash
-# Copy the configuration file and make the necessary modifications.
-cp config/config.yaml config/key.yaml
-```
-
-| Variable Name | config/key.yaml | env |
-| ------------------------------------------ | ----------------------------------------- | ----------------------------------------------- |
-| OPENAI_API_KEY # Replace with your own key | OPENAI_API_KEY: "sk-..." | export OPENAI_API_KEY="sk-..." |
-| OPENAI_API_BASE # Optional | OPENAI_API_BASE: "https://<YOUR_SITE>/v1" | export OPENAI_API_BASE="https://<YOUR_SITE>/v1" |
-
-## Tutorial: Initiating a startup
-
-```shell
-# Run the script
-python startup.py "Write a cli snake game"
-# Do not hire an engineer to implement the project
-python startup.py "Write a cli snake game" --implement False
-# Hire an engineer and perform code reviews
-python startup.py "Write a cli snake game" --code_review True
-```
-
-After running the script, you can find your new project in the `workspace/` directory.
-
-### Preference of Platform or Tool
-
-You can tell which platform or tool you want to use when stating your requirements.
-
-```shell
-python startup.py "Write a cli snake game based on pygame"
-```
-
-### Usage
-
-```
-NAME
-    startup.py - We are a software startup comprised of AI. By investing in us, you are empowering a future filled with limitless possibilities.
-
-SYNOPSIS
-    startup.py IDEA <flags>
-
-DESCRIPTION
-    We are a software startup comprised of AI. By investing in us, you are empowering a future filled with limitless possibilities.
-
-POSITIONAL ARGUMENTS
-    IDEA
-        Type: str
-        Your innovative idea, such as "Creating a snake game."
-
-FLAGS
-    --investment=INVESTMENT
-        Type: float
-        Default: 3.0
-        As an investor, you have the opportunity to contribute a certain dollar amount to this AI company.
-    --n_round=N_ROUND
-        Type: int
-        Default: 5
-
-NOTES
-    You can also use flags syntax for POSITIONAL ARGUMENTS
-```
-
-### Code walkthrough
-
-```python
-from metagpt.software_company import SoftwareCompany
-from metagpt.roles import ProjectManager, ProductManager, Architect, Engineer
-
-async def startup(idea: str, investment: float = 3.0, n_round: int = 5):
-    """Run a startup. Be a boss."""
-    company = SoftwareCompany()
-    company.hire([ProductManager(), Architect(), ProjectManager(), Engineer()])
-    company.invest(investment)
-    company.start_project(idea)
-    await company.run(n_round=n_round)
-```
-
-You can check `examples` for more details on single role (with knowledge base) and LLM only examples.
-
-## QuickStart
-
-It is difficult to install and configure the local environment for some users. The following tutorials will allow you to quickly experience the charm of MetaGPT.
-
-- [MetaGPT quickstart](https://deepwisdom.feishu.cn/wiki/CyY9wdJc4iNqArku3Lncl4v8n2b)
-
-## Citation
-
-For now, cite the [Arxiv paper](https://arxiv.org/abs/2308.00352):
-
-```bibtex
-@misc{hong2023metagpt,
-      title={MetaGPT: Meta Programming for Multi-Agent Collaborative Framework},
-      author={Sirui Hong and Xiawu Zheng and Jonathan Chen and Yuheng Cheng and Jinlin Wang and Ceyao Zhang and Zili Wang and Steven Ka Shing Yau and Zijuan Lin and Liyang Zhou and Chenyu Ran and Lingfeng Xiao and Chenglin Wu},
-      year={2023},
-      eprint={2308.00352},
-      archivePrefix={arXiv},
-      primaryClass={cs.AI}
-}
-```
-
-## Contact Information
-
-If you have any questions or feedback about this project, please feel free to contact us. We highly appreciate your suggestions!
-
-- **Email:** alexanderwu@fuzhi.ai
-- **GitHub Issues:** For more technical inquiries, you can also create a new issue in our [GitHub repository](https://github.com/geekan/metagpt/issues).
-
-We will respond to all questions within 2-3 business days.
-
-## Demo
-
-https://github.com/geekan/MetaGPT/assets/2707039/5e8c1062-8c35-440f-bb20-2b0320f8d27d
-
-## Join us
-
-📢 Join Our Discord Channel!
-https://discord.gg/ZRHeExS6xv
-
-Looking forward to seeing you there! 🎉
+# MetaGPT Web UI
```
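The deleted Configuration section states that `OPENAI_API_KEY` can be set in `config/key.yaml`, `config/config.yaml`, or the environment, with the priority order `config/key.yaml > config/config.yaml > env`. A minimal sketch of that lookup order using `collections.ChainMap`, assuming both YAML files have already been parsed into dicts (YAML loading is omitted so the example runs on the standard library alone; `resolve_config` is a hypothetical name, not MetaGPT's loader):

```python
import os
from collections import ChainMap
from typing import Mapping, Optional


def resolve_config(
    key_yaml: Mapping[str, object],
    config_yaml: Mapping[str, object],
    env: Optional[Mapping[str, str]] = None,
) -> ChainMap:
    """Return a view in which key.yaml wins over config.yaml, which wins over env."""
    # ChainMap searches its maps left to right, so the first match has the highest priority.
    return ChainMap(dict(key_yaml), dict(config_yaml), dict(os.environ if env is None else env))


cfg = resolve_config(
    key_yaml={"OPENAI_API_KEY": "sk-from-key-yaml"},
    config_yaml={"OPENAI_API_KEY": "YOUR_API_KEY", "RPM": 10},
    env={"OPENAI_API_KEY": "sk-from-env", "OPENAI_API_BASE": "https://example.invalid/v1"},
)
print(cfg["OPENAI_API_KEY"], cfg["RPM"], cfg["OPENAI_API_BASE"])
# sk-from-key-yaml 10 https://example.invalid/v1
```

A `ChainMap` keeps each source intact, so it stays easy to tell which layer supplied a value.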

metagpt/web/app.py → app.py

RENAMED

```diff
@@ -1,10 +1,17 @@
 #!/usr/bin/python3
 # -*- coding: utf-8 -*-
+from __future__ import annotations
+
 import asyncio
+from collections import deque
+import contextlib
+from functools import partial
 import urllib.parse
 from datetime import datetime
 import uuid
 from enum import Enum
+from metagpt.logs import set_llm_stream_logfunc
+import pathlib
 
 from fastapi import FastAPI, Request, HTTPException
 from fastapi.responses import StreamingResponse, RedirectResponse
@@ -15,12 +22,12 @@ import uvicorn
 
 from typing import Any, Optional
 
-from metagpt import Message
+from metagpt.schema import Message
 from metagpt.actions.action import Action
 from metagpt.actions.action_output import ActionOutput
 from metagpt.config import CONFIG
 
-from
+from software_company import RoleRun, SoftwareCompany
 
 
 class QueryAnswerType(Enum):
@@ -97,13 +104,14 @@ class ThinkActPrompt(BaseModel):
             description=action.desc,
         )
 
-    def update_act(self, message: ActionOutput):
-
+    def update_act(self, message: ActionOutput | str, is_finished: bool = True):
+        if is_finished:
+            self.step.status = "finish"
         self.step.content = Sentence(
             type="text",
-            id=
-            value=SentenceValue(answer=message.content),
-            is_finished=
+            id=str(1),
+            value=SentenceValue(answer=message.content if is_finished else message),
+            is_finished=is_finished,
         )
 
     @staticmethod
@@ -147,7 +155,11 @@ async def create_message(req_model: NewMsg, request: Request):
     Session message stream
     """
     config = {k.upper(): v for k, v in req_model.config.items()}
-
+    set_context(config, uuid.uuid4().hex)
+
+    msg_queue = deque()
+    CONFIG.LLM_STREAM_LOG = lambda x: msg_queue.appendleft(x) if x else None
+
     role = SoftwareCompany()
     role.recv(message=Message(content=req_model.query))
     answer = MessageJsonModel(
@@ -171,16 +183,47 @@ async def create_message(req_model: NewMsg, request: Request):
         think_result: RoleRun = await role.think()
         if not think_result:  # End of conversion
             break
+
         think_act_prompt = ThinkActPrompt(role=think_result.role.profile)
         think_act_prompt.update_think(tc_id, think_result)
         yield think_act_prompt.prompt + "\n\n"
-
+        task = asyncio.create_task(role.act())
+
+        while not await request.is_disconnected():
+            if msg_queue:
+                think_act_prompt.update_act(msg_queue.pop(), False)
+                yield think_act_prompt.prompt + "\n\n"
+                continue
+
+            if task.done():
+                break
+
+            await asyncio.sleep(0.5)
+
+        act_result = await task
         think_act_prompt.update_act(act_result)
         yield think_act_prompt.prompt + "\n\n"
         answer.add_think_act(think_act_prompt)
     yield answer.prompt + "\n\n"  # Notify the front-end that the message is complete.
 
 
+default_llm_stream_log = partial(print, end="")
+
+
+def llm_stream_log(msg):
+    with contextlib.suppress():
+        CONFIG._get("LLM_STREAM_LOG", default_llm_stream_log)(msg)
+
+
+def set_context(context, uid):
+    context["WORKSPACE_PATH"] = pathlib.Path("workspace", uid)
+    for old, new in (("DEPLOYMENT_ID", "DEPLOYMENT_NAME"), ("OPENAI_API_BASE", "OPENAI_BASE_URL")):
+        if old in context and new not in context:
+            context[new] = context[old]
+    CONFIG.set_context(context)
+    return context
+
+
 class ChatHandler:
     @staticmethod
     async def create_message(req_model: NewMsg, request: Request):
@@ -194,7 +237,7 @@ app = FastAPI()
 
 app.mount(
     "/static",
-    StaticFiles(directory="./
+    StaticFiles(directory="./static/", check_dir=True),
     name="static",
 )
 app.add_api_route(
@@ -216,6 +259,9 @@ async def catch_all(request: Request):
     return RedirectResponse(url=new_path)
 
 
+set_llm_stream_logfunc(llm_stream_log)
+
+
 def main():
     uvicorn.run(app="__main__:app", host="0.0.0.0", port=7860)
 
```
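The new `set_context` gives each request its own output directory (`workspace/<uuid4 hex>`) and copies two legacy keys to their newer names, `DEPLOYMENT_ID` to `DEPLOYMENT_NAME` and `OPENAI_API_BASE` to `OPENAI_BASE_URL`, without overwriting a value the client already sent. A pure-function sketch of that normalization, with the `CONFIG.set_context(...)` call left out so it runs standalone (the name `normalize_request_config` is hypothetical). Unlike the committed helper, it builds a new dict instead of mutating the caller's:

```python
import pathlib
import uuid
from typing import Any, Dict, Optional

# Legacy key -> current key, as listed in app.py's set_context().
LEGACY_KEYS = (("DEPLOYMENT_ID", "DEPLOYMENT_NAME"), ("OPENAI_API_BASE", "OPENAI_BASE_URL"))


def normalize_request_config(raw: Dict[str, Any], uid: Optional[str] = None) -> Dict[str, Any]:
    """Upper-case keys, assign a per-request workspace, and alias legacy keys."""
    context = {k.upper(): v for k, v in raw.items()}  # same transform as create_message()
    context["WORKSPACE_PATH"] = pathlib.Path("workspace", uid or uuid.uuid4().hex)
    for old, new in LEGACY_KEYS:
        # Only fill the new key when the client did not send it explicitly.
        if old in context and new not in context:
            context[new] = context[old]
    return context


cfg = normalize_request_config({"openai_api_base": "https://example.invalid/v1"}, uid="abc123")
print(cfg["OPENAI_BASE_URL"], cfg["WORKSPACE_PATH"].as_posix())
# https://example.invalid/v1 workspace/abc123
```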
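The reworked `create_message` loop streams partial LLM output: a log callback pushes each chunk into a `deque` while `role.act()` runs as a separate `asyncio` task, and the generator polls that queue every 0.5 s, draining queued chunks before it checks `task.done()`. A self-contained sketch of the same producer/consumer pattern, under stated assumptions: `fake_act` stands in for `role.act()`, there is no FastAPI request or disconnect check, and the poll interval is shortened so it runs quickly. Instead of assigning the sink on a shared config object, the sketch keeps it in a `contextvars.ContextVar` (a swapped-in technique); `asyncio.create_task` copies the current context, so each task sees the sink set by its own request. Whether the committed `CONFIG.LLM_STREAM_LOG` assignment is request-scoped depends on how `CONFIG` stores context, which this diff does not show. Note also that `contextlib.suppress()` called with no exception types suppresses nothing, so the sketch passes `Exception` explicitly:

```python
from __future__ import annotations

import asyncio
import contextlib
import contextvars
from collections import deque
from typing import AsyncIterator, Callable, Optional

# Per-context stream sink; None falls back to printing, like default_llm_stream_log.
LLM_STREAM_LOG: contextvars.ContextVar[Optional[Callable[[str], None]]] = contextvars.ContextVar(
    "LLM_STREAM_LOG", default=None
)


def llm_stream_log(msg: str) -> None:
    """Global hook an LLM client would call for each streamed chunk (hypothetical wiring)."""
    # suppress() needs explicit exception types; with none it catches nothing.
    with contextlib.suppress(Exception):
        sink = LLM_STREAM_LOG.get()
        if sink is None:
            print(msg, end="")
        else:
            sink(msg)


async def fake_act(chunks: list[str]) -> str:
    """Stand-in for role.act(): streams chunks through the hook, then returns the full text."""
    for chunk in chunks:
        llm_stream_log(chunk)
        await asyncio.sleep(0.01)
    return "".join(chunks)


async def stream_act(chunks: list[str], poll_interval: float = 0.05) -> AsyncIterator[tuple[bool, str]]:
    """Yield (is_finished, text): partial chunks while the task runs, then the final result."""
    msg_queue: deque[str] = deque()
    # Same lambda shape as the commit: ignore empty chunks, push the rest on the left.
    LLM_STREAM_LOG.set(lambda x: msg_queue.appendleft(x) if x else None)
    task = asyncio.create_task(fake_act(chunks))  # the task inherits a copy of this context
    while True:
        if msg_queue:
            yield False, msg_queue.pop()  # appendleft + pop from the right = FIFO order
            continue
        if task.done():
            break
        await asyncio.sleep(poll_interval)
    yield True, await task


async def main() -> list[tuple[bool, str]]:
    return [item async for item in stream_act(["Hel", "lo", "!"])]


if __name__ == "__main__":
    print(asyncio.run(main()))
    # [(False, 'Hel'), (False, 'lo'), (False, '!'), (True, 'Hello!')]
```

Because the queue is checked before `task.done()` with no `await` in between, every chunk is yielded before the final result, regardless of how the two tasks interleave.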

config/config.yaml

CHANGED

|

@@ -1,27 +1,56 @@

|

|

| 1 |

# DO NOT MODIFY THIS FILE, create a new key.yaml, define OPENAI_API_KEY.

|

| 2 |

# The configuration of key.yaml has a higher priority and will not enter git

|

| 3 |

|

|

|

|

|

|

|

|

|

|

| 4 |

#### if OpenAI

|

| 5 |

-

## The official

|

| 6 |

-

## If the official

|

| 7 |

-

## Or, you can configure OPENAI_PROXY to access official

|

| 8 |

-

|

| 9 |

#OPENAI_PROXY: "http://127.0.0.1:8118"

|

| 10 |

-

#OPENAI_API_KEY: "YOUR_API_KEY"

|

| 11 |

-

OPENAI_API_MODEL: "gpt-4"

|

| 12 |

-

MAX_TOKENS:

|

| 13 |

RPM: 10

|

| 14 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 15 |

#### if Anthropic

|

| 16 |

-

#

|

| 17 |

|

| 18 |

#### if AZURE, check https://github.com/openai/openai-cookbook/blob/main/examples/azure/chat.ipynb

|

| 19 |

-

|

| 20 |

#OPENAI_API_TYPE: "azure"

|

| 21 |

-

#

|

| 22 |

#OPENAI_API_KEY: "YOUR_AZURE_API_KEY"

|

| 23 |

#OPENAI_API_VERSION: "YOUR_AZURE_API_VERSION"

|

| 24 |

-

#

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 25 |

|

| 26 |

#### for Search

|

| 27 |

|

|

@@ -57,8 +86,8 @@ RPM: 10

|

|

| 57 |

|

| 58 |

#### for Stable Diffusion

|

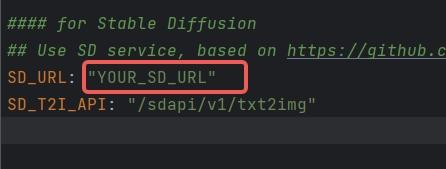

| 59 |

## Use SD service, based on https://github.com/AUTOMATIC1111/stable-diffusion-webui

|

| 60 |

-

SD_URL: "YOUR_SD_URL"

|

| 61 |

-

SD_T2I_API: "/sdapi/v1/txt2img"

|

| 62 |

|

| 63 |

#### for Execution

|

| 64 |

#LONG_TERM_MEMORY: false

|

|

@@ -73,14 +102,21 @@ SD_T2I_API: "/sdapi/v1/txt2img"

|

|

| 73 |

# CALC_USAGE: false

|

| 74 |

|

| 75 |

### for Research

|

| 76 |

-

MODEL_FOR_RESEARCHER_SUMMARY: gpt-3.5-turbo

|

| 77 |

-

MODEL_FOR_RESEARCHER_REPORT: gpt-3.5-turbo-16k

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 78 |

|

| 79 |

-

|

| 80 |

-

#METAGPT_TEXT_TO_IMAGE_MODEL: MODEL_URL

|

| 81 |

|

| 82 |

-

|

| 83 |

-

S3:

|

| 84 |

-

access_key: "YOUR_S3_ACCESS_KEY"

|

| 85 |

-

secret_key: "YOUR_S3_SECRET_KEY"

|

| 86 |

-

endpoint_url: "YOUR_S3_ENDPOINT_URL"

|

|

|

|

| 1 |

# DO NOT MODIFY THIS FILE, create a new key.yaml, define OPENAI_API_KEY.

|

| 2 |

# The configuration of key.yaml has a higher priority and will not enter git

|

| 3 |

|

| 4 |

+

#### Project Path Setting

|

| 5 |

+

# WORKSPACE_PATH: "Path for placing output files"

|

| 6 |

+

|

| 7 |

#### if OpenAI

|

| 8 |

+

## The official OPENAI_BASE_URL is https://api.openai.com/v1

|

| 9 |

+

## If the official OPENAI_BASE_URL is not available, we recommend using the [openai-forward](https://github.com/beidongjiedeguang/openai-forward).

|

| 10 |

+

## Or, you can configure OPENAI_PROXY to access official OPENAI_BASE_URL.

|

| 11 |

+

OPENAI_BASE_URL: "https://api.openai.com/v1"

|

| 12 |

#OPENAI_PROXY: "http://127.0.0.1:8118"

|

| 13 |

+

#OPENAI_API_KEY: "YOUR_API_KEY" # set the value to sk-xxx if you host the openai interface for open llm model

|

| 14 |

+

OPENAI_API_MODEL: "gpt-4-1106-preview"

|

| 15 |

+

MAX_TOKENS: 4096

|

| 16 |

RPM: 10

|

| 17 |

|

| 18 |

+

#### if Spark

|

| 19 |

+

#SPARK_APPID : "YOUR_APPID"

|

| 20 |

+

#SPARK_API_SECRET : "YOUR_APISecret"

|

| 21 |

+

#SPARK_API_KEY : "YOUR_APIKey"

|

| 22 |

+

#DOMAIN : "generalv2"

|

| 23 |

+

#SPARK_URL : "ws://spark-api.xf-yun.com/v2.1/chat"

|

| 24 |

+

|

| 25 |

#### if Anthropic

|

| 26 |

+

#ANTHROPIC_API_KEY: "YOUR_API_KEY"

|

| 27 |

|

| 28 |

#### if AZURE, check https://github.com/openai/openai-cookbook/blob/main/examples/azure/chat.ipynb

|

|

|

|

| 29 |

#OPENAI_API_TYPE: "azure"

|

| 30 |

+

#OPENAI_BASE_URL: "YOUR_AZURE_ENDPOINT"

|

| 31 |

#OPENAI_API_KEY: "YOUR_AZURE_API_KEY"

|

| 32 |

#OPENAI_API_VERSION: "YOUR_AZURE_API_VERSION"

|

| 33 |

+

#DEPLOYMENT_NAME: "YOUR_DEPLOYMENT_NAME"

|

| 34 |

+

|

| 35 |

+

#### if zhipuai from `https://open.bigmodel.cn`. You can set here or export API_KEY="YOUR_API_KEY"

|

| 36 |

+

# ZHIPUAI_API_KEY: "YOUR_API_KEY"

|

| 37 |

+

|

| 38 |

+

#### if Google Gemini from `https://ai.google.dev/` and API_KEY from `https://makersuite.google.com/app/apikey`.

|

| 39 |

+

#### You can set here or export GOOGLE_API_KEY="YOUR_API_KEY"

|

| 40 |

+

# GEMINI_API_KEY: "YOUR_API_KEY"

|

| 41 |

+

|

| 42 |

+

#### if use self-host open llm model with openai-compatible interface

|

| 43 |

+

#OPEN_LLM_API_BASE: "http://127.0.0.1:8000/v1"

|

| 44 |

+

#OPEN_LLM_API_MODEL: "llama2-13b"

|

| 45 |

+

#

|

| 46 |

+

##### if use Fireworks api

|

| 47 |

+

#FIREWORKS_API_KEY: "YOUR_API_KEY"

|

| 48 |

+

#FIREWORKS_API_BASE: "https://api.fireworks.ai/inference/v1"

|

| 49 |

+

#FIREWORKS_API_MODEL: "YOUR_LLM_MODEL" # example, accounts/fireworks/models/llama-v2-13b-chat

|

| 50 |

+

|

| 51 |

+

#### if use self-host open llm model by ollama

|

| 52 |

+

# OLLAMA_API_BASE: http://127.0.0.1:11434/api

|

| 53 |

+

# OLLAMA_API_MODEL: llama2

|

| 54 |

|

| 55 |

#### for Search

|

| 56 |

|

|

|

|

| 86 |

|

| 87 |

#### for Stable Diffusion

|

| 88 |

## Use SD service, based on https://github.com/AUTOMATIC1111/stable-diffusion-webui

|

| 89 |

+

#SD_URL: "YOUR_SD_URL"

|

| 90 |

+

#SD_T2I_API: "/sdapi/v1/txt2img"

|

| 91 |

|

| 92 |

#### for Execution

|

| 93 |

#LONG_TERM_MEMORY: false

|

|

|

|

| 102 |

# CALC_USAGE: false

|

| 103 |

|

| 104 |

### for Research

|

| 105 |

+

# MODEL_FOR_RESEARCHER_SUMMARY: gpt-3.5-turbo

|

| 106 |

+

# MODEL_FOR_RESEARCHER_REPORT: gpt-3.5-turbo-16k

|

| 107 |

+

|

| 108 |

+

### choose the engine for mermaid conversion,

|

| 109 |

+

# default is nodejs, you can change it to playwright,pyppeteer or ink

|

| 110 |

+

# MERMAID_ENGINE: nodejs

|

| 111 |

+

|

| 112 |

+

### browser path for pyppeteer engine, support Chrome, Chromium,MS Edge

|

| 113 |

+

#PYPPETEER_EXECUTABLE_PATH: "/usr/bin/google-chrome-stable"

|

| 114 |

+

|

| 115 |

+

### for repair non-openai LLM's output when parse json-text if PROMPT_FORMAT=json

|

| 116 |

+

### due to non-openai LLM's output will not always follow the instruction, so here activate a post-process

|

| 117 |

+

### repair operation on the content extracted from LLM's raw output. Warning, it improves the result but not fix all cases.

|

| 118 |

+

# REPAIR_LLM_OUTPUT: false

|

| 119 |

|

| 120 |

+

# PROMPT_FORMAT: json #json or markdown

|

|

|

|

| 121 |

|

| 122 |

+

DISABLE_LLM_PROVIDER_CHECK: true

|

|

|

|

|

|

|

|

|

|

|

|
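The header of config.yaml says that values in key.yaml take priority over config.yaml. The sketch below shows that precedence rule applied to already-parsed dictionaries. `merge_configs` is a hypothetical helper, and YAML parsing is left out to keep the example dependency-free. MetaGPT's actual loader is not reproduced here and may behave differently.

```python
from typing import Any, Dict, Mapping


def merge_configs(*layers: Mapping[str, Any]) -> Dict[str, Any]:
    """Shallow merge of config layers; keys in later layers override earlier ones.

    Hypothetical helper: pass config.yaml's values first and key.yaml's last.
    """
    merged: Dict[str, Any] = {}
    for layer in layers:
        merged.update(layer)  # the later layer wins on duplicate keys
    return merged


# Values as they might look after parsing the new config.yaml; commented-out keys are absent.
config_yaml = {
    "OPENAI_BASE_URL": "https://api.openai.com/v1",
    "OPENAI_API_MODEL": "gpt-4-1106-preview",
    "MAX_TOKENS": 4096,
    "RPM": 10,
    "DISABLE_LLM_PROVIDER_CHECK": True,
}
# A user's private key.yaml (placeholder values).
key_yaml = {"OPENAI_API_KEY": "sk-xxx", "RPM": 3}

settings = merge_configs(config_yaml, key_yaml)
print(settings["RPM"], settings["MAX_TOKENS"])  # prints 3 4096
```

The merge is deliberately shallow. A nested mapping, such as the removed `S3` block, would be replaced wholesale by a later layer rather than merged key by key.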

docs/FAQ-EN.md

DELETED

@@ -1,181 +0,0 @@
-Our vision is to [extend human life](https://github.com/geekan/HowToLiveLonger) and [reduce working hours](https://github.com/geekan/MetaGPT/).
-
-1. ### Convenient Link for Sharing this Document:
-
-```
-- MetaGPT-Index/FAQ https://deepwisdom.feishu.cn/wiki/MsGnwQBjiif9c3koSJNcYaoSnu4
-```
-
-2. ### Link
-
-<!---->
-
-1. Code:https://github.com/geekan/MetaGPT
-
-1. Roadmap:https://github.com/geekan/MetaGPT/blob/main/docs/ROADMAP.md
-
-1. EN
-
-1. Demo Video: [MetaGPT: Multi-Agent AI Programming Framework](https://www.youtube.com/watch?v=8RNzxZBTW8M)
-1. Tutorial: [MetaGPT: Deploy POWERFUL Autonomous Ai Agents BETTER Than SUPERAGI!](https://www.youtube.com/watch?v=q16Gi9pTG_M&t=659s)
-
-1. CN
-
-1. Demo Video: [MetaGPT:一行代码搭建你的虚拟公司_哔哩哔哩_bilibili](https://www.bilibili.com/video/BV1NP411C7GW/?spm_id_from=333.999.0.0&vd_source=735773c218b47da1b4bd1b98a33c5c77)
-1. Tutorial: [一个提示词写游戏 Flappy bird, 比AutoGPT强10倍的MetaGPT,最接近AGI的AI项目](https://youtu.be/Bp95b8yIH5c)
-1. Author's thoughts video(CN): [MetaGPT作者深度解析直播回放_哔哩哔哩_bilibili](https://www.bilibili.com/video/BV1Ru411V7XL/?spm_id_from=333.337.search-card.all.click)
-
-<!---->
-
-3. ### How to become a contributor?
-
-<!---->
-
-1. Choose a task from the Roadmap (or you can propose one). By submitting a PR, you can become a contributor and join the dev team.
-1. Current contributors come from backgrounds including: ByteDance AI Lab/DingDong/Didi/Xiaohongshu, Tencent/Baidu/MSRA/TikTok/BloomGPT Infra/Bilibili/CUHK/HKUST/CMU/UCB
-
-<!---->
-
-4. ### Chief Evangelist (Monthly Rotation)
-
-MetaGPT Community - The position of Chief Evangelist rotates on a monthly basis. The primary responsibilities include:
-
-1. Maintaining community FAQ documents, announcements, Github resources/READMEs.
-1. Responding to, answering, and distributing community questions within an average of 30 minutes, including on platforms like Github Issues, Discord and WeChat.
-1. Upholding a community atmosphere that is enthusiastic, genuine, and friendly.
-1. Encouraging everyone to become contributors and participate in projects that are closely related to achieving AGI (Artificial General Intelligence).
-1. (Optional) Organizing small-scale events, such as hackathons.
-
-<!---->
-
-5. ### FAQ
-
-<!---->
-
-1. Experience with the generated repo code:
-
-1. https://github.com/geekan/MetaGPT/releases/tag/v0.1.0
-
-1. Code truncation/ Parsing failure:
-
-1. Check if it's due to exceeding length. Consider using the gpt-3.5-turbo-16k or other long token versions.
-
-1. Success rate:
-
-1. There hasn't been a quantitative analysis yet, but the success rate of code generated by GPT-4 is significantly higher than that of gpt-3.5-turbo.
-
-1. Support for incremental, differential updates (if you wish to continue a half-done task):
-
-1. Several prerequisite tasks are listed on the ROADMAP.
-
-1. Can existing code be loaded?
-
-1. It's not on the ROADMAP yet, but there are plans in place. It just requires some time.
-
-1. Support for multiple programming languages and natural languages?
-
-1. It's listed on ROADMAP.
-
-1. Want to join the contributor team? How to proceed?
-
-1. Merging a PR will get you into the contributor's team. The main ongoing tasks are all listed on the ROADMAP.
-
-1. PRD stuck / unable to access/ connection interrupted
-
-1. The official OPENAI_API_BASE address is `https://api.openai.com/v1`
-1. If the official OPENAI_API_BASE address is inaccessible in your environment (this can be verified with curl), it's recommended to configure using the reverse proxy OPENAI_API_BASE provided by libraries such as openai-forward. For instance, `OPENAI_API_BASE: "``https://api.openai-forward.com/v1``"`
-1. If the official OPENAI_API_BASE address is inaccessible in your environment (again, verifiable via curl), another option is to configure the OPENAI_PROXY parameter. This way, you can access the official OPENAI_API_BASE via a local proxy. If you don't need to access via a proxy, please do not enable this configuration; if accessing through a proxy is required, modify it to the correct proxy address. Note that when OPENAI_PROXY is enabled, don't set OPENAI_API_BASE.
-1. Note: OpenAI's default API design ends with a v1. An example of the correct configuration is: `OPENAI_API_BASE: "``https://api.openai.com/v1``"`
-
-1. Absolutely! How can I assist you today?
-
-1. Did you use Chi or a similar service? These services are prone to errors, and it seems that the error rate is higher when consuming 3.5k-4k tokens in GPT-4
-
-1. What does Max token mean?
-
-1. It's a configuration for OpenAI's maximum response length. If the response exceeds the max token, it will be truncated.
-
-1. How to change the investment amount?
-
-1. You can view all commands by typing `python startup.py --help`
-
-1. Which version of Python is more stable?
-
-1. python3.9 / python3.10
-
-1. Can't use GPT-4, getting the error "The model gpt-4 does not exist."
-
-1. OpenAI's official requirement: You can use GPT-4 only after spending $1 on OpenAI.
-1. Tip: Run some data with gpt-3.5-turbo (consume the free quota and $1), and then you should be able to use gpt-4.
-
-1. Can games whose code has never been seen before be written?
-
-1. Refer to the README. The recommendation system of Toutiao is one of the most complex systems in the world currently. Although it's not on GitHub, many discussions about it exist online. If it can visualize these, it suggests it can also summarize these discussions and convert them into code. The prompt would be something like "write a recommendation system similar to Toutiao". Note: this was approached in earlier versions of the software. The SOP of those versions was different; the current one adopts Elon Musk's five-step work method, emphasizing trimming down requirements as much as possible.
-
-1. Under what circumstances would there typically be errors?
-
-1. More than 500 lines of code: some function implementations may be left blank.
-1. When using a database, it often gets the implementation wrong — since the SQL database initialization process is usually not in the code.
-1. With more lines of code, there's a higher chance of false impressions, leading to calls to non-existent APIs.
-
-1. Instructions for using SD Skills/UI Role:
-
-1. Currently, there is a test script located in /tests/metagpt/roles. The file ui_role provides the corresponding code implementation. For testing, you can refer to the test_ui in the same directory.
-
-1. The UI role takes over from the product manager role, extending the output from the 【UI Design draft】 provided by the product manager role. The UI role has implemented the UIDesign Action. Within the run of UIDesign, it processes the respective context, and based on the set template, outputs the UI. The output from the UI role includes:
-
-1. UI Design Description:Describes the content to be designed and the design objectives.
-1. Selected Elements:Describes the elements in the design that need to be illustrated.
-1. HTML Layout:Outputs the HTML code for the page.
-1. CSS Styles (styles.css):Outputs the CSS code for the page.
-
-1. Currently, the SD skill is a tool invoked by UIDesign. It instantiates the SDEngine, with specific code found in metagpt/tools/sd_engine.
-
-1. Configuration instructions for SD Skills: The SD interface is currently deployed based on *https://github.com/AUTOMATIC1111/stable-diffusion-webui* **For environmental configurations and model downloads, please refer to the aforementioned GitHub repository. To initiate the SD service that supports API calls, run the command specified in cmd with the parameter nowebui, i.e.,
-
-1. > python webui.py --enable-insecure-extension-access --port xxx --no-gradio-queue --nowebui
-1. Once it runs without errors, the interface will be accessible after approximately 1 minute when the model finishes loading.
-1. Configure SD_URL and SD_T2I_API in the config.yaml/key.yaml files.
-1.
-1. SD_URL is the deployed server/machine IP, and Port is the specified port above, defaulting to 7860.
-1. > SD_URL: IP:Port
-
-1. An error occurred during installation: "Another program is using this file...egg".
-
-1. Delete the file and try again.
-1. Or manually execute`pip install -r requirements.txt`
-
-1. The origin of the name MetaGPT?
-
-1. The name was derived after iterating with GPT-4 over a dozen rounds. GPT-4 scored and suggested it.
-
-1. Is there a more step-by-step installation tutorial?
-
-1. Youtube(CN):[一个提示词写游戏 Flappy bird, 比AutoGPT强10倍的MetaGPT,最接近AGI的AI项目=一个软件公司产品经理+程序员](https://youtu.be/Bp95b8yIH5c)
-1. Youtube(EN)https://www.youtube.com/watch?v=q16Gi9pTG_M&t=659s
-
-1. openai.error.RateLimitError: You exceeded your current quota, please check your plan and billing details
-
-1. If you haven't exhausted your free quota, set RPM to 3 or lower in the settings.
-1. If your free quota is used up, consider adding funds to your account.
-
-1. What does "borg" mean in n_borg?
-
-1. https://en.wikipedia.org/wiki/Borg
-1. The Borg civilization operates based on a hive or collective mentality, known as "the Collective." Every Borg individual is connected to the collective via a sophisticated subspace network, ensuring continuous oversight and guidance for every member. This collective consciousness allows them to not only "share the same thoughts" but also to adapt swiftly to new strategies. While individual members of the collective rarely communicate, the collective "voice" sometimes transmits aboard ships.
-
-1. How to use the Claude API?
-
-1. The full implementation of the Claude API is not provided in the current code.
-1. You can use the Claude API through third-party API conversion projects like: https://github.com/jtsang4/claude-to-chatgpt
-
-1. Is Llama2 supported?
-
-1. On the day Llama2 was released, some of the community members began experiments and found that output can be generated based on MetaGPT's structure. However, Llama2's context is too short to generate a complete project. Before regularly using Llama2, it's necessary to expand the context window to at least 8k. If anyone has good recommendations for expansion models or methods, please leave a comment.
-
-1. `mermaid-cli getElementsByTagName SyntaxError: Unexpected token '.'`
-
-1. Upgrade node to version 14.x or above:
-
-1. `npm install -g n`
-1. `n stable` to install the stable version of node(v18.x)
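The deleted FAQ's "PRD stuck" answer states two configuration rules. First, the OpenAI base URL ends with `/v1`. Second, the base URL should not be set when `OPENAI_PROXY` is enabled. The sketch below encodes both rules as a pre-flight check on a parsed config. `check_openai_endpoint` is a hypothetical helper and not part of MetaGPT. It accepts both the FAQ's legacy key `OPENAI_API_BASE` and the new config.yaml key `OPENAI_BASE_URL`.

```python
from typing import List, Mapping, Optional
from urllib.parse import urlparse


def check_openai_endpoint(config: Mapping[str, Optional[str]]) -> List[str]:
    """Return a list of problems with the OpenAI endpoint settings (empty if none).

    Hypothetical helper encoding two rules from the deleted FAQ.
    """
    problems: List[str] = []
    # Accept both the legacy name (FAQ) and the new name (config.yaml).
    base = config.get("OPENAI_API_BASE") or config.get("OPENAI_BASE_URL")
    proxy = config.get("OPENAI_PROXY")
    if base:
        parsed = urlparse(base)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            problems.append(f"base URL is not an http(s) URL: {base!r}")
        elif not parsed.path.rstrip("/").endswith("/v1"):
            # Rule 1: OpenAI's default API path ends with /v1.
            problems.append(f"base URL should end with /v1: {base!r}")
    if proxy and base:
        # Rule 2: with OPENAI_PROXY enabled, the FAQ says not to set the base URL.
        problems.append("OPENAI_PROXY is set, so the base URL should be left unset")
    return problems


print(check_openai_endpoint({"OPENAI_BASE_URL": "https://api.openai.com/v1"}))  # prints []
```

The second rule comes from the deleted FAQ only. The new config.yaml comment instead describes `OPENAI_PROXY` as a way "to access official OPENAI_BASE_URL", so that rule may only apply to the older code the FAQ described.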

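The deleted FAQ's SD instructions say to start stable-diffusion-webui with `--nowebui`. It then sets `SD_URL` to `IP:Port` (default port 7860) and `SD_T2I_API` to `/sdapi/v1/txt2img`. The sketch below shows how those two settings combine into a request, using only the standard library. `build_txt2img_request` is a hypothetical helper. The minimal JSON payload (`prompt`, `steps`) is an assumption: check the request schema your webui version exposes before relying on it.

```python
import json
import urllib.request


def build_txt2img_request(
    sd_url: str, t2i_api: str, prompt: str, steps: int = 20
) -> urllib.request.Request:
    """Build, but do not send, a JSON POST request for a txt2img call (hypothetical helper)."""
    # The FAQ writes SD_URL as "IP:Port"; assume plain HTTP when no scheme is given.
    if "://" not in sd_url:
        sd_url = "http://" + sd_url
    url = sd_url.rstrip("/") + "/" + t2i_api.lstrip("/")
    # Assumed minimal payload; the webui accepts many more generation parameters.
    body = json.dumps({"prompt": prompt, "steps": steps}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_txt2img_request("127.0.0.1:7860", "/sdapi/v1/txt2img", "a pixel-art flappy bird")
print(req.get_method(), req.full_url)  # prints POST http://127.0.0.1:7860/sdapi/v1/txt2img
```

Sending the request (`urllib.request.urlopen(req)`) requires a running SD server. The new app.py's `main()` also binds port 7860, so running both on one host would need a different `--port` for the SD service.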

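For `openai.error.RateLimitError`, the deleted FAQ suggests lowering `RPM` to 3 or lower; config.yaml ships with `RPM: 10`. The sketch below shows the simplest client-side reading of an RPM budget: space calls at least `60 / RPM` seconds apart. `MinIntervalLimiter` is hypothetical. MetaGPT's own RPM handling is not shown in this diff and may work differently. The clock and sleep functions are injectable so the pacing can be tested without real waiting.

```python
import time
from typing import Callable, Optional


class MinIntervalLimiter:
    """Hypothetical pacing helper: keep calls at least 60 / rpm seconds apart.

    This is fixed-interval pacing, not a token bucket, so it never allows bursts.
    """

    def __init__(
        self,
        rpm: int,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        if rpm <= 0:
            raise ValueError("rpm must be a positive integer")
        self.interval = 60.0 / rpm
        self._clock = clock
        self._sleep = sleep
        self._next_allowed: Optional[float] = None  # earliest start time of the next call

    def wait(self) -> float:
        """Block until the next call may start; return how many seconds were slept."""
        now = self._clock()
        delay = 0.0
        if self._next_allowed is not None and now < self._next_allowed:
            delay = self._next_allowed - now
            self._sleep(delay)
            now = self._next_allowed
        self._next_allowed = now + self.interval
        return delay


limiter = MinIntervalLimiter(rpm=3)  # RPM: 3, as the FAQ suggests on a free quota
print(limiter.interval)  # prints 20.0
```

Call `limiter.wait()` immediately before each API request. With `rpm=3`, the first call proceeds at once and each later call starts no sooner than 20 seconds after the previous one.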

docs/README_CN.md

DELETED

@@ -1,201 +0,0 @@
-# MetaGPT: 多智能体框架
-

-