Merge pull request #56 from huggingface/bump-autotrain-api

- README.md +51 -3

- app.py +1 -1

- images/autotrain_job.png +0 -0

- images/autotrain_projects.png +0 -0

README.md

CHANGED

|

@@ -44,18 +44,47 @@ Next, copy the example file of environment variables:

|

|

| 44 |

cp .env.template .env

|

| 45 |

```

|

| 46 |

|

| 47 |

-

and set the `HF_TOKEN` variable with a valid API token from the `autoevaluator` user. Finally, spin up the application by running:

|

| 48 |

|

| 49 |

```

|

| 50 |

streamlit run app.py

|

| 51 |

```

|

| 52 |

|

| 53 |

## AutoTrain configuration details

|

| 54 |

|

| 55 |

-

Models are evaluated by AutoTrain, with the payload sent to the `AUTOTRAIN_BACKEND_API` environment variable.

|

| 56 |

|

| 57 |

```

|

| 58 |

-

AUTOTRAIN_BACKEND_API=https://api

|

| 59 |

```

|

| 60 |

|

| 61 |

To evaluate models with a _local_ instance of AutoTrain, change the environment to:

|

|

@@ -63,3 +92,22 @@ To evaluate models with a _local_ instance of AutoTrain, change the environment

|

|

| 63 |

```

|

| 64 |

AUTOTRAIN_BACKEND_API=http://localhost:8000

|

| 65 |

```

|

| 44 |

cp .env.template .env

|

| 45 |

```

|

| 46 |

|

| 47 |

+

and set the `HF_TOKEN` variable with a valid API token from the [`autoevaluator`](https://huggingface.co/autoevaluator) bot user. Finally, spin up the application by running:

|

| 48 |

|

| 49 |

```

|

| 50 |

streamlit run app.py

|

| 51 |

```

|

| 52 |

|

| 53 |

+

## Usage

|

| 54 |

+

|

| 55 |

+

Evaluation on the Hub involves two main steps:

|

| 56 |

+

|

| 57 |

+

1. Submitting an evaluation job via the UI. This creates an AutoTrain project with `N` models for evaluation. At this stage, the dataset is also processed and prepared for evaluation.

|

| 58 |

+

2. Triggering the evaluation itself once the dataset is processed.

|

| 59 |

+

|

| 60 |

+

From the user's perspective, only step (1) is needed, since step (2) is handled by a cron job on GitHub Actions that executes the `run_evaluation_jobs.py` script every 15 minutes.

|

| 61 |

+

|

| 62 |

+

See below for details on manually triggering evaluation jobs.

|

| 63 |

+

|

| 64 |

+

### Triggering an evaluation

|

| 65 |

+

|

| 66 |

+

To evaluate the models in an AutoTrain project, run:

|

| 67 |

+

|

| 68 |

+

```

|

| 69 |

+

python run_evaluation_jobs.py

|

| 70 |

+

```

|

| 71 |

+

|

| 72 |

+

This will download the [`autoevaluate/evaluation-job-logs`](https://huggingface.co/datasets/autoevaluate/evaluation-job-logs) dataset from the Hub and check which evaluation projects are ready for evaluation (i.e. those whose dataset has been processed).
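The readiness check described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the actual logic of `run_evaluation_jobs.py`: the helper `select_ready_projects` and the record fields `project_id`, `dataset_processed`, and `is_evaluated` are hypothetical names, and the real schema of the job logs dataset may differ.

```python
from typing import Dict, List


def select_ready_projects(job_logs: List[Dict]) -> List[int]:
    """Return the IDs of projects that look ready for evaluation.

    A project is treated as ready when its dataset has been processed.
    The `is_evaluated` check is an extra assumption, added so that a
    project is not triggered twice. All field names are hypothetical.
    """
    ready = []
    for record in job_logs:
        if record.get("dataset_processed") and not record.get("is_evaluated"):
            ready.append(record["project_id"])
    return ready


# Made-up records for illustration only.
example_logs = [
    {"project_id": 1, "dataset_processed": False, "is_evaluated": False},
    {"project_id": 2, "dataset_processed": True, "is_evaluated": False},
    {"project_id": 3, "dataset_processed": True, "is_evaluated": True},
]

ready_ids = select_ready_projects(example_logs)
print(ready_ids)
```

Keeping the filter as a pure function over plain dictionaries makes it easy to test without downloading anything from the Hub.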

|

| 73 |

+

|

| 74 |

## AutoTrain configuration details

|

| 75 |

|

| 76 |

+

Models are evaluated by the [`autoevaluator`](https://huggingface.co/autoevaluator) bot user in AutoTrain, with the payload sent to the URL specified by the `AUTOTRAIN_BACKEND_API` environment variable. Evaluation projects are created and run in either the `prod` or `staging` environment. You can view the status of projects in the AutoTrain UI by navigating to one of the links below (ask internally for access to the staging UI):

|

| 77 |

+

|

| 78 |

+

| AutoTrain environment | AutoTrain UI URL | `AUTOTRAIN_BACKEND_API` |

|

| 79 |

+

|:---------------------:|:--------------------------------------------------------------------------------------------------------------:|:--------------------------------------------:|

|

| 80 |

+

| `prod` | [`https://ui.autotrain.huggingface.co/projects`](https://ui.autotrain.huggingface.co/projects) | https://api.autotrain.huggingface.co |

|

| 81 |

+

| `staging` | [`https://ui-staging.autotrain.huggingface.co/projects`](https://ui-staging.autotrain.huggingface.co/projects) | https://api-staging.autotrain.huggingface.co |

|

| 82 |

+

|

| 83 |

+

|

| 84 |

+

The current configuration for evaluation jobs running on [Spaces](https://huggingface.co/spaces/autoevaluate/model-evaluator) is:

|

| 85 |

|

| 86 |

```

|

| 87 |

+

AUTOTRAIN_BACKEND_API=https://api.autotrain.huggingface.co

|

| 88 |

```

|

| 89 |

|

| 90 |

To evaluate models with a _local_ instance of AutoTrain, change the environment to:

|

|

|

|

| 92 |

```

|

| 93 |

AUTOTRAIN_BACKEND_API=http://localhost:8000

|

| 94 |

```

|

| 95 |

+

|

| 96 |

+

### Migrating from staging to production (and vice versa)

|

| 97 |

+

|

| 98 |

+

In general, evaluation jobs should run in AutoTrain's `prod` environment, which is defined by the following environment variable:

|

| 99 |

+

|

| 100 |

+

```

|

| 101 |

+

AUTOTRAIN_BACKEND_API=https://api.autotrain.huggingface.co

|

| 102 |

+

```

|

| 103 |

+

|

| 104 |

+

However, there are times when it is necessary to run evaluation jobs in AutoTrain's `staging` environment (e.g. because a new evaluation pipeline is being deployed). In these cases, the corresponding environment variable is:

|

| 105 |

+

|

| 106 |

+

```

|

| 107 |

+

AUTOTRAIN_BACKEND_API=https://api-staging.autotrain.huggingface.co

|

| 108 |

+

```

|

| 109 |

+

|

| 110 |

+

To migrate between these two environments, update the `AUTOTRAIN_BACKEND_API` variable in two places:

|

| 111 |

+

|

| 112 |

+

* In the [repo secrets](https://huggingface.co/spaces/autoevaluate/model-evaluator/settings) associated with the `model-evaluator` Space. This will ensure evaluation projects are created in the desired environment.

|

| 113 |

+

* In the [GitHub Actions secrets](https://github.com/huggingface/model-evaluator/settings/secrets/actions) associated with this repo. This will ensure that the correct evaluation jobs are approved and launched via the `run_evaluation_jobs.py` script.
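Because the variable has to be kept in sync in two places, a quick check of which environment a given value points to can help when migrating. The sketch below is an illustration only: the helper `describe_backend` and the `AUTOTRAIN_ENVIRONMENTS` mapping are hypothetical and not part of this repo. The URLs are the ones listed in this README, with `local` standing for the localhost example.

```python
import os

# Backend URLs as listed in the README; the mapping itself is hypothetical.
AUTOTRAIN_ENVIRONMENTS = {
    "https://api.autotrain.huggingface.co": "prod",
    "https://api-staging.autotrain.huggingface.co": "staging",
    "http://localhost:8000": "local",
}


def describe_backend(url: str) -> str:
    """Map an AUTOTRAIN_BACKEND_API value to an environment name.

    A trailing slash is ignored; unrecognised values return "unknown".
    """
    return AUTOTRAIN_ENVIRONMENTS.get(url.rstrip("/"), "unknown")


# Report the environment selected by the current shell or .env settings.
backend = os.environ.get("AUTOTRAIN_BACKEND_API", "")
print(f"AUTOTRAIN_BACKEND_API={backend!r} -> {describe_backend(backend)}")
```

Running this once with the Space's value and once with the GitHub Actions value should print the same environment name if the two secrets agree.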

|

app.py

CHANGED

|

@@ -576,7 +576,7 @@ with st.form(key="form"):

|

|

| 576 |

else "en",

|

| 577 |

"max_models": 5,

|

| 578 |

"instance": {

|

| 579 |

-

"provider": "sagemaker",

|

| 580 |

"instance_type": AUTOTRAIN_MACHINE[selected_task]

|

| 581 |

if selected_task in AUTOTRAIN_MACHINE.keys()

|

| 582 |

else "p3",

|

|

|

|

| 576 |

else "en",

|

| 577 |

"max_models": 5,

|

| 578 |

"instance": {

|

| 579 |

+

"provider": "sagemaker" if selected_task in AUTOTRAIN_MACHINE.keys() else "ovh",

|

| 580 |

"instance_type": AUTOTRAIN_MACHINE[selected_task]

|

| 581 |

if selected_task in AUTOTRAIN_MACHINE.keys()

|

| 582 |

else "p3",

|
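The provider selection introduced in this change can be read in isolation as the sketch below. It is a simplified illustration, not the code in `app.py`: the function `select_instance` is hypothetical, the contents of `AUTOTRAIN_MACHINE` shown here are placeholders, and the real `instance` dictionary may carry additional keys that are not visible in this hunk.

```python
from typing import Dict

# Placeholder mapping from task name to instance type; the real
# AUTOTRAIN_MACHINE in app.py may contain different entries.
AUTOTRAIN_MACHINE: Dict[str, str] = {
    "summarization": "hypothetical-large-instance",
}


def select_instance(selected_task: str) -> Dict[str, str]:
    """Choose the provider and instance type for an evaluation job.

    Tasks listed in AUTOTRAIN_MACHINE run on "sagemaker" with their
    mapped instance type; every other task falls back to "ovh" with "p3".
    """
    has_custom_machine = selected_task in AUTOTRAIN_MACHINE
    return {
        "provider": "sagemaker" if has_custom_machine else "ovh",
        "instance_type": AUTOTRAIN_MACHINE[selected_task]
        if has_custom_machine
        else "p3",
    }


print(select_instance("summarization"))
print(select_instance("text_classification"))
```

Evaluating the membership test once and reusing it for both keys keeps the provider and the instance type from drifting apart if the condition changes later.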

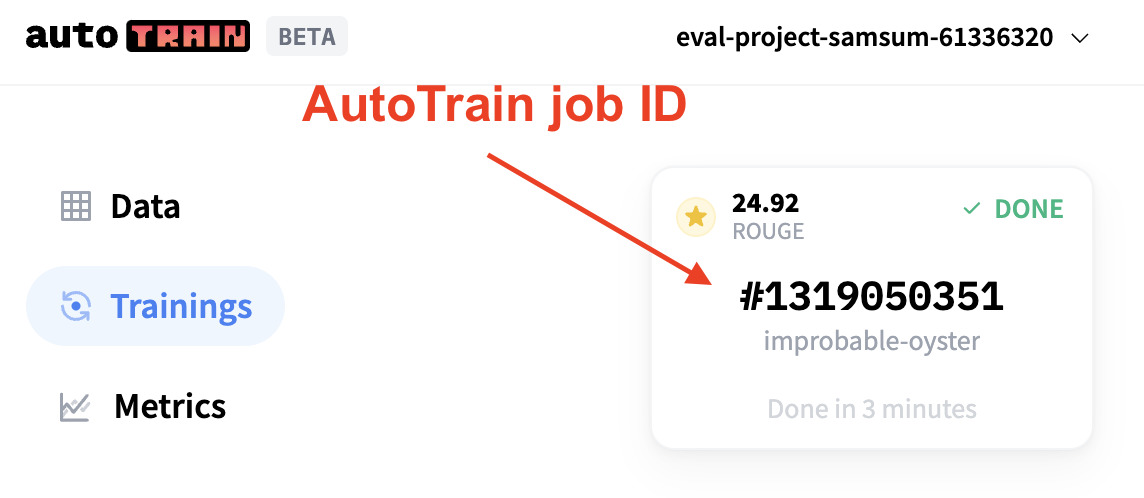

images/autotrain_job.png

ADDED

|

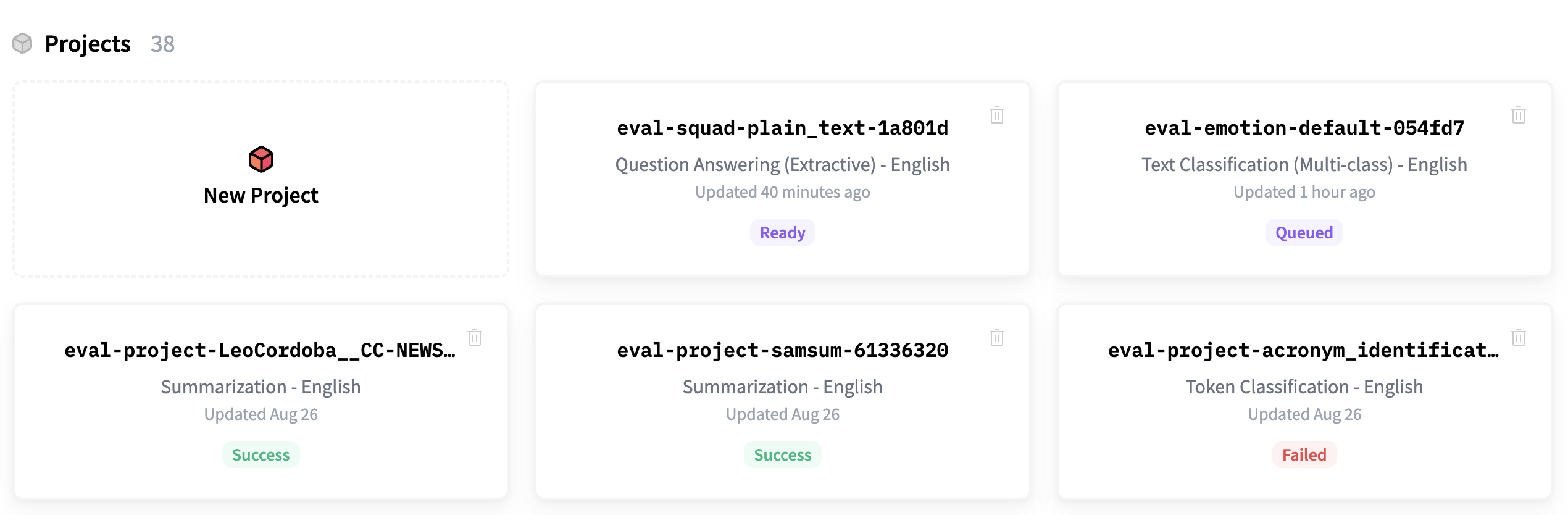

images/autotrain_projects.png

ADDED

|