Spaces:

Paused

Paused

A newer version of the Gradio SDK is available:

4.39.0

Optimization

The .optimization module provides:

- an optimizer with weight decay fixed that can be used to fine-tuned models, and

- several schedules in the form of schedule objects that inherit from

_LRSchedule: - a gradient accumulation class to accumulate the gradients of multiple batches

AdamW (PyTorch)

[[autodoc]] AdamW

AdaFactor (PyTorch)

[[autodoc]] Adafactor

AdamWeightDecay (TensorFlow)

[[autodoc]] AdamWeightDecay

[[autodoc]] create_optimizer

Schedules

Learning Rate Schedules (Pytorch)

[[autodoc]] SchedulerType

[[autodoc]] get_scheduler

[[autodoc]] get_constant_schedule

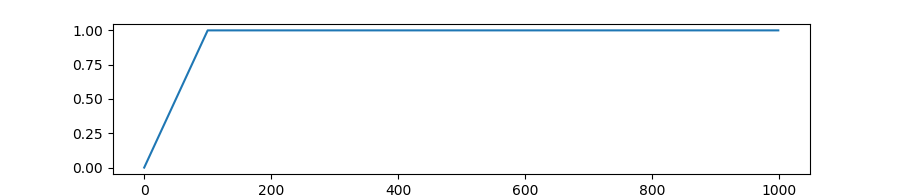

[[autodoc]] get_constant_schedule_with_warmup

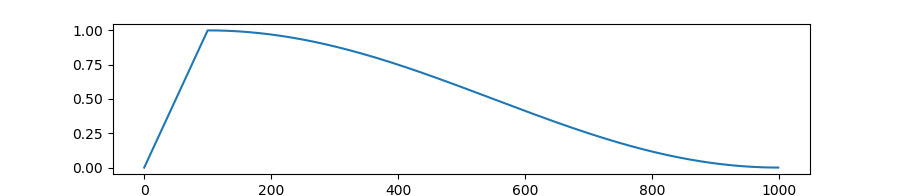

[[autodoc]] get_cosine_schedule_with_warmup

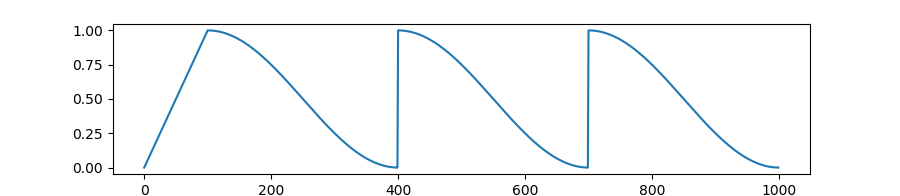

[[autodoc]] get_cosine_with_hard_restarts_schedule_with_warmup

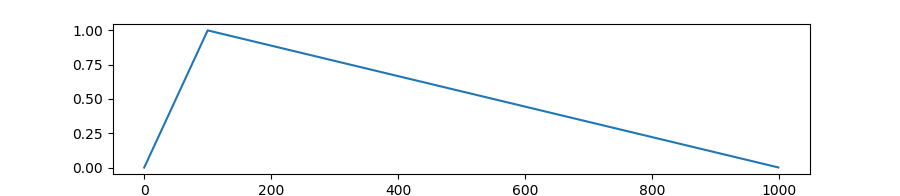

[[autodoc]] get_linear_schedule_with_warmup

[[autodoc]] get_polynomial_decay_schedule_with_warmup

[[autodoc]] get_inverse_sqrt_schedule

Warmup (TensorFlow)

[[autodoc]] WarmUp

Gradient Strategies

GradientAccumulator (TensorFlow)

[[autodoc]] GradientAccumulator