---
title: Chatbot
emoji: 🌖
colorFrom: gray
colorTo: purple
sdk: gradio
sdk_version: 4.18.0
app_file: app.py
pinned: false
---

Repository with a chatbot built on five algorithms: TF-IDF, W2V (GloVe-25), BERT, Bi-BERT, Bi-Cross-BERT

Introduction

This repo was created to compare different retrieval algorithms for a chatbot that speaks like my favorite character, Rachel Green from Friends.

All transcripts were taken from the website "https://fangj.github.io/friends/". For this chatbot, a parser.py file was created to download the data from the website, parse it with BeautifulSoup, and create dataframes under certain constraints, such as the name of the favorite character and a frequency threshold for the most popular characters. In total, about 6k lines of dialogue were collected. For the labeled data, this amount doubled to about 12k lines.
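The filtering constraints described above can be sketched as follows, assuming the scraped transcript has already been turned into an ordered list of `(speaker, line)` tuples. The function name `build_pairs`, the meaning of `min_count`, and the pairing rule (the previous line is the "question", the favorite character's reply is the "answer") are illustrative assumptions, not code taken from parser.py.

```python
from collections import Counter


def build_pairs(dialogue, character="Rachel", min_count=2):
    """Hypothetical sketch: turn (speaker, line) tuples into (question, answer) pairs.

    A pair is kept when `character` replies to a line spoken by another
    character whose total number of lines is at least `min_count`
    (the assumed frequency threshold for "popular" characters).
    """
    counts = Counter(speaker for speaker, _ in dialogue)
    popular = {speaker for speaker, n in counts.items() if n >= min_count}

    pairs = []
    # Walk over consecutive lines: previous line = question, current line = answer.
    for (prev_speaker, prev_line), (speaker, line) in zip(dialogue, dialogue[1:]):
        if speaker == character and prev_speaker != character and prev_speaker in popular:
            pairs.append((prev_line, line))
    return pairs
```

In this sketch a rare speaker such as a one-off guest is dropped as a question source, while Rachel's replies to frequent characters are kept.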

The first part of the training is based on the notebook HW1_NLP_GEN_TFIDF_W2V_BERT_StarodubovKG.ipynb.

The TF-IDF, W2V and BERT algorithms are covered there. The nltk library is used to clean and prepare the data for the first two algorithms: punctuation and stopwords are removed, and lemmatization reduces words to their base form.
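The cleaning steps can be sketched in plain Python. This is a minimal sketch, not the notebook's code: the tiny `STOPWORDS` set is a hypothetical stand-in for nltk's English stopword list (`nltk.corpus.stopwords.words("english")`), and lemmatization is a pluggable function that defaults to doing nothing, so the sketch runs without downloading nltk data. nltk's `WordNetLemmatizer().lemmatize` could be passed in its place.

```python
import re

# Hypothetical stand-in for nltk.corpus.stopwords.words("english").
STOPWORDS = {"a", "an", "the", "is", "are", "i", "you", "to", "and", "it", "that", "so"}


def preprocess(text, lemmatize=lambda word: word):
    """Lowercase, remove punctuation, drop stopwords, then lemmatize each token.

    `lemmatize` defaults to the identity function; pass a real lemmatizer
    (for example nltk's WordNetLemmatizer().lemmatize) to reduce words to base form.
    """
    text = re.sub(r"[^\w\s]", "", text.lower())  # delete punctuation characters
    return [lemmatize(token) for token in text.split() if token not in STOPWORDS]
```

For example, `preprocess("The coffee is great!")` keeps only `coffee` and `great`.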

The chart below shows that no sentence in the data exceeds 60 words.

The TF-IDF chatbot converts the user's "question" into a vector, finds the identical or most relevant vector in the database, and sends the user the answer stored for that vector.
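The retrieval step can be sketched in plain Python, assuming questions and answers are already preprocessed into token lists. The smoothed IDF formula and cosine similarity as the "most relevant" measure are assumptions for illustration; the notebook's actual TF-IDF implementation and similarity measure are not shown here.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Build sparse TF-IDF vectors (dicts) for a list of token lists."""
    n_docs = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    # Assumed smoothed IDF: log((1 + n) / (1 + df)) + 1
    idf = {term: math.log((1 + n_docs) / (1 + count)) + 1 for term, count in df.items()}

    def vectorize(tokens):
        tf = Counter(t for t in tokens if t in idf)  # words outside the vocabulary are ignored
        return {t: c * idf[t] for t, c in tf.items()}

    return [vectorize(doc) for doc in docs], vectorize


def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def tfidf_answer(query_tokens, questions, answers):
    """Return the answer paired with the stored question most similar to the query."""
    question_vecs, vectorize = tfidf_vectors(questions)
    query_vec = vectorize(query_tokens)
    scores = [cosine(query_vec, q) for q in question_vecs]
    best = max(range(len(scores)), key=scores.__getitem__)
    return answers[best]
```

The vector length equals the vocabulary size, which is why TF-IDF vectors get long as the dictionary grows.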

Experiments show that TF-IDF gives good results out of the box. The TF-IDF chatbot works perfectly if the database contains all possible questions and answers.

The second algorithm is W2V. Experiments show that it is better to use pretrained vectors than vectors trained on a small amount of data, which is why "glove-twitter-25" is used. The W2V chatbot gives roughly similar results to TF-IDF but spends less time on processing, because its vectors are much shorter: 25 dimensions in W2V versus 5024 in TF-IDF, where 5024 is the size of the TF-IDF dictionary.
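A minimal sketch of sentence-level W2V retrieval, assuming a sentence vector is the average of its word vectors (the text does not say how word vectors are combined). `TOY_VECTORS` is a hypothetical 3-dimensional stand-in for the 25-dimensional "glove-twitter-25" vectors, which gensim can load with `gensim.downloader.load("glove-twitter-25")`.

```python
import math

# Hypothetical toy embeddings standing in for glove-twitter-25.
TOY_VECTORS = {
    "coffee": [1.0, 0.0, 0.0],
    "tea": [0.9, 0.1, 0.0],
    "museum": [0.0, 1.0, 0.0],
    "dinosaur": [0.0, 0.9, 0.1],
}


def sentence_vector(tokens, vectors, dim):
    """Average the vectors of known tokens; return a zero vector if none are known."""
    known = [vectors[t] for t in tokens if t in vectors]
    if not known:
        return [0.0] * dim
    return [sum(vec[i] for vec in known) / len(known) for i in range(dim)]


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def w2v_answer(query_tokens, questions, answers, vectors, dim):
    """Return the answer whose stored question vector is closest to the query vector."""
    query = sentence_vector(query_tokens, vectors, dim)
    scores = [cosine(query, sentence_vector(q, vectors, dim)) for q in questions]
    return answers[max(range(len(scores)), key=scores.__getitem__)]
```

Unlike TF-IDF, a query word that never appears in the stored questions (here "tea") can still match a semantically close question ("coffee").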

The third algorithm that can be used in the chatbot is BERT. BERT-like models are useful because they return a contextualised embedding for each token, so a sentence embedding can be obtained by summing the token embeddings. Because BERT models are also trained with a CLS token, the chatbot can find a question in the database that is more relevant to the user's request. This usually, but not always, leads to a better answer. This chatbot uses the "distilbert/distilbert-base-uncased" model from Hugging Face. The distilled version of BERT speeds up inference because the model has fewer weights.
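The two ways of turning per-token embeddings into one sentence vector mentioned above can be sketched on a toy array. This assumes `hidden_states` is one sentence's token embeddings (with transformers this would be `model(**inputs).last_hidden_state[0]` for a model loaded via `AutoModel.from_pretrained("distilbert/distilbert-base-uncased")`) and that padding positions have mask value 0. The numbers below are illustrative only, not real model outputs.

```python
def cls_embedding(hidden_states):
    """Use the first token's vector ([CLS] in BERT tokenizers) as the sentence embedding."""
    return list(hidden_states[0])


def summed_embedding(hidden_states, attention_mask):
    """Sum token embeddings, skipping padding positions (mask value 0)."""
    dim = len(hidden_states[0])
    total = [0.0] * dim
    for vec, keep in zip(hidden_states, attention_mask):
        if keep:
            for i in range(dim):
                total[i] += vec[i]
    return total
```

Either vector can then be compared with the stored question vectors exactly as in the TF-IDF and W2V approaches.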

None of the three methods above involves training in the usual sense: each one vectorizes the question and finds the most relevant one in the database. These methods show good results but little flexibility in their answers; they usually return an answer exactly as it appears in the database.

The last two algorithms are covered in "HW1_NLP_GEN_BI_Cross_StarodubovKG.ipynb".

The fourth method is the Bi-Encoder.

This algorithm requires preliminary training to adapt the model to the training data.

During this training, the chatbot starts "to speak" more like Rachel. The training of the Bi-Encoder model is shown below:

After training, the model can find a range of possible answers and return the one with the best score (argmax()).
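The candidate search can be sketched as follows, assuming the question and all answers have already been embedded by the trained Bi-Encoder. In this repo the answer embeddings are precomputed in bi_bert_answers.npy (which would be loaded with `numpy.load`); dot-product scoring and the helper name `bi_encoder_candidates` are assumptions for illustration.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def bi_encoder_candidates(query_vec, answer_vecs, k=3):
    """Score every precomputed answer embedding against the query embedding.

    Returns the indices of the k best answers (best first) and all scores;
    the first index is the argmax, i.e. the single best answer.
    """
    scores = [dot(query_vec, a) for a in answer_vecs]
    ranked = sorted(range(len(scores)), key=scores.__getitem__, reverse=True)
    return ranked[:k], scores
```

Because the answer side is encoded once ahead of time, each user request only needs one encoder pass plus a cheap scoring loop.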

To choose more precisely among the candidates from that range, the chatbot uses the fifth method, a Cross-Encoder model, which helps select the answer that best fits the question.
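The re-ranking step can be sketched as follows. `score_pair` stands in for the trained Cross-Encoder, which reads the question and one candidate answer together and returns a relevance score; `toy_score` (word overlap) is a hypothetical placeholder so the sketch runs without model weights, not the repo's scoring function.

```python
def rerank(question, candidates, score_pair):
    """Score each (question, candidate) pair jointly and return the best candidate.

    On ties, the earlier candidate (i.e. the higher Bi-Encoder rank) wins.
    """
    scored = [(score_pair(question, c), c) for c in candidates]
    return max(scored, key=lambda item: item[0])[1]


def toy_score(question, answer):
    """Hypothetical stand-in for a Cross-Encoder: count shared lowercase words."""
    question_words = set(question.lower().split())
    return len(question_words & set(answer.lower().split()))
```

In the pipeline described above, `candidates` would be the answers retrieved by the Bi-Encoder, so the slower pairwise model only runs on a short list.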

The training of the Cross-Encoder model is shown below:

To sum up, the first three algorithms can be used as a baseline. The chatbot then gives fairly direct answers, which are no better than the contents of the dataframe. With the last two algorithms, the Bi- and Cross-Encoder, the model does not use the database as a dictionary. Instead, it uses the Bi-Encoder to find the answers most relevant to the question and the Cross-Encoder to pick the best one.

Architecture

- models - contains the weights for the Bi- and Cross-Encoder models.

- parse.py - parser of the website ("https://fangj.github.io/friends/"), which downloads transcripts of the Friends TV series and creates a CSV file

- HW1_NLP_GEN_TFIDF_W2V_BERT_StarodubovKG.ipynb - training and tests of the TF-IDF, W2V and BERT algorithms

- HW1_NLP_GEN_BI_Cross_StarodubovKG.ipynb - training and tests of the Bi- and Cross-Encoder

- rachel_friends.csv - dataframe for the TF-IDF, W2V and BERT models, which is used as the chatbot's dictionary

- rachel_friends_label.csv - data for the Bi- and Cross-Encoder. The labels help the models learn how Rachel speaks.

- bi_bert_answers.npy - NumPy array with vectorized answers, which is used to speed up model inference.

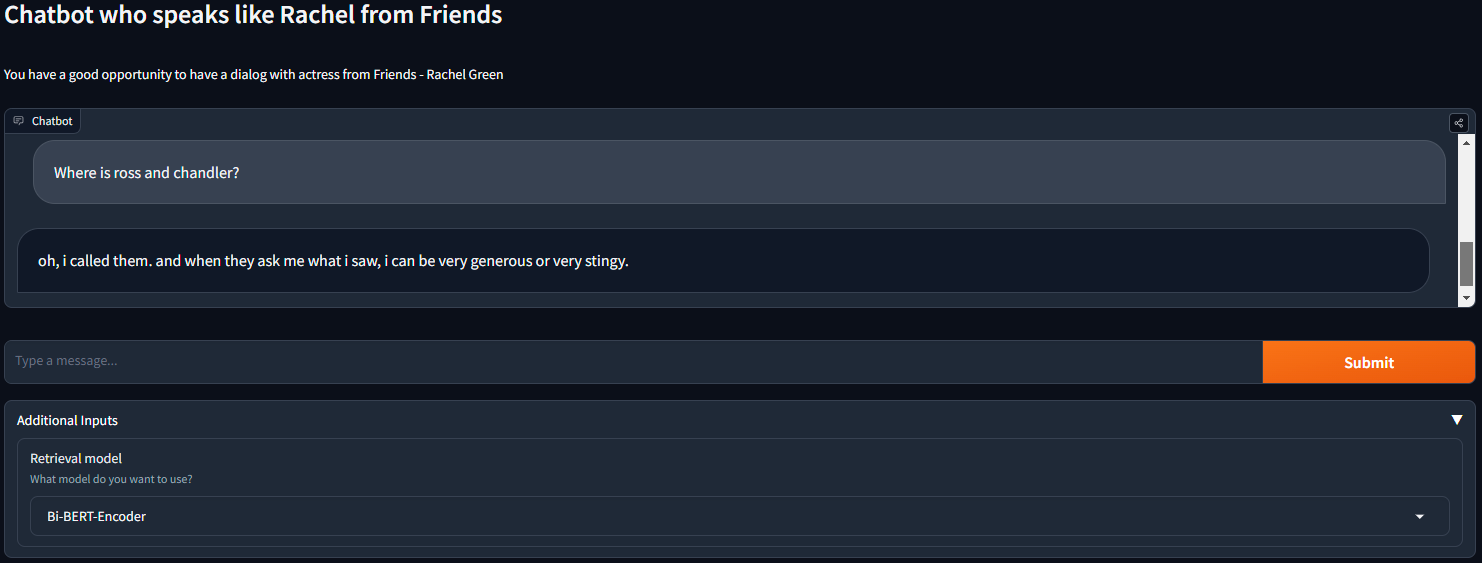

Examples of conversations with the chatbot

Example 1

Example 2

Example 3

Example 4

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference