title: InternChat

emoji: 🤖💬

colorFrom: indigo

colorTo: pink

sdk: gradio

sdk_version: 3.28.1

app_file: app.py

pinned: false

license: apache-2.0

The project is still under construction; we will keep updating it and welcome contributions and pull requests from the community.


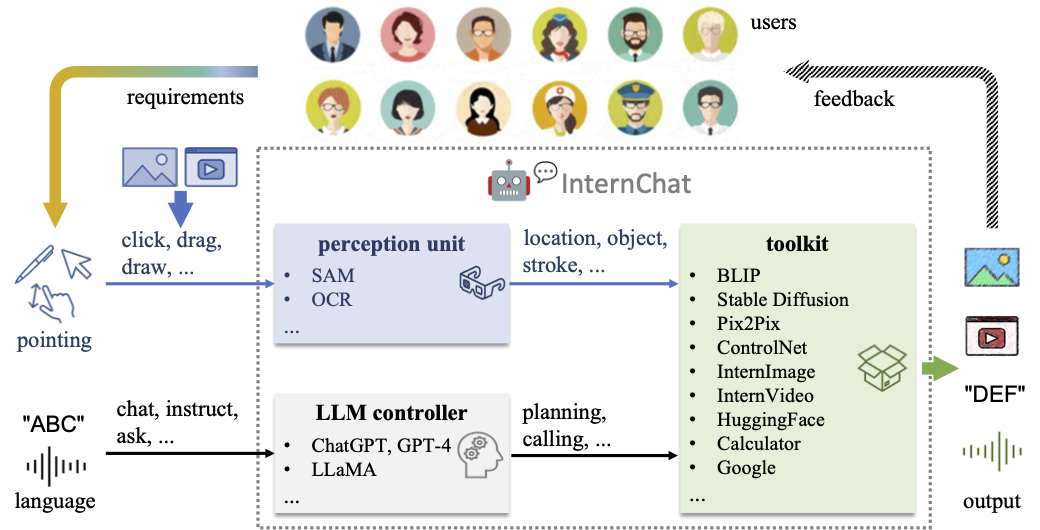

InternChat [paper]

InternChat (iChat for short) is a pointing-language-driven visual interactive system that lets you interact with ChatGPT by clicking, dragging, and drawing with a pointing device. The name InternChat stands for interaction, nonverbal, and chatbots. Unlike existing interactive systems that rely on language alone, iChat incorporates pointing instructions, which significantly improves both the efficiency of communication between users and chatbots and the accuracy of chatbots on vision-centric tasks, especially in complicated visual scenes. In addition, iChat uses an auxiliary control mechanism to improve the control capability of the LLM, and a large vision-language model named Husky is fine-tuned for high-quality multi-modal dialogue (impressing ChatGPT-3.5-turbo with 93.89% GPT-4 quality).
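To make the idea of a pointing instruction concrete, here is a minimal sketch of one way a click or a drawn stroke could be paired with a text request before being handed to a language model. `PointingInstruction` and `describe()` are hypothetical names used only for illustration; this is not how iChat represents instructions internally.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Point = Tuple[int, int]  # (x, y) pixel coordinates in the image


@dataclass
class PointingInstruction:
    """Hypothetical container pairing nonverbal pointing input with a text request."""
    text: str
    clicks: List[Point] = field(default_factory=list)
    stroke: List[Point] = field(default_factory=list)  # e.g. a dragged or drawn path

    def describe(self) -> str:
        """Render the instruction as plain text that a language model could consume."""
        parts = [f"Request: {self.text}"]
        if self.clicks:
            coords = ", ".join(f"({x}, {y})" for x, y in self.clicks)
            parts.append(f"Clicked points: {coords}")
        if self.stroke:
            parts.append(f"Drawn stroke with {len(self.stroke)} points")
        return "\n".join(parts)


instr = PointingInstruction(text="What is this object?", clicks=[(120, 45)])
print(instr.describe())
```

The point of the sketch is that a single click removes the ambiguity of "this object", which pure language would otherwise have to spell out.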

Online Demo

InternChat is online. Let's try it!

[NOTE] You may have to wait in a long queue. Alternatively, you can clone our repo and run it on your own GPU.

https://github.com/OpenGVLab/InternChat/assets/13723743/3270b05f-0823-4f13-9966-4010fd855643

Schedule

- Support Chinese

- Support MOSS

- More powerful foundation models based on InternImage and InternVideo

- More accurate interactive experience

- Web Page & Code Generation

- Support voice assistant

- Support click interaction

- Interactive image editing

- Interactive image generation

- Interactive visual question answering

- Segment Anything

- Image inpainting

- Image caption

- Image matting

- Optical character recognition

- Action recognition

- Video caption

- Video dense caption

- Video highlight interpretation

System Overview

🎁 Major Features

(a) Remove the masked object

(b) Interactive image editing

(c) Image generation

(d) Interactive visual question answering

(e) Interactive image generation

(f) Video highlight interpretation

🛠️ Installation

Basic requirements

- Linux

- Python 3.8+

- PyTorch 1.12+

- CUDA 11.6+

- GCC & G++ 5.4+

- GPU memory >= 17 GB for loading the basic tools (HuskyVQA, SegmentAnything, ImageOCRRecognition)
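Before installing, it can help to check the Python, PyTorch, and CUDA versions against the minimums listed above. The following is a minimal sketch, not a script shipped with the repo; the `MIN_*` constants simply mirror the list, and the GCC and GPU-memory requirements are not checked.

```python
import importlib.util
import sys
from typing import Tuple

# Minimum versions copied from the requirements list above.
MIN_PYTHON = (3, 8)
MIN_TORCH = (1, 12)
MIN_CUDA = (11, 6)


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn '1.13.1+cu117' or '11.7' into a tuple of leading integers, e.g. (1, 13, 1)."""
    core = text.split("+")[0]  # drop local build tags such as '+cu117'
    numbers = []
    for piece in core.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        numbers.append(int(digits))
    return tuple(numbers)


def meets(version: str, minimum: Tuple[int, ...]) -> bool:
    """True if the version is at least the minimum, comparing only as many fields as the minimum has."""
    return parse_version(version)[: len(minimum)] >= minimum


def check_environment() -> None:
    py = ".".join(map(str, sys.version_info[:3]))
    print(f"Python {py}: {'ok' if sys.version_info[:2] >= MIN_PYTHON else 'too old'}")
    if importlib.util.find_spec("torch") is None:
        print("PyTorch: not installed")
        return
    import torch  # imported lazily so the script still runs without PyTorch

    print(f"PyTorch {torch.__version__}: {'ok' if meets(torch.__version__, MIN_TORCH) else 'too old'}")
    cuda = torch.version.cuda  # None for CPU-only builds
    if cuda is None:
        print("CUDA: not available in this PyTorch build")
    else:
        print(f"CUDA {cuda}: {'ok' if meets(cuda, MIN_CUDA) else 'too old'}")


if __name__ == "__main__":
    check_environment()
```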

Install Python dependencies

pip install -r requirements.txt

Model zoo

Coming soon...

👨🏫 Get Started

Run the following command to start a Gradio service:

python -u iChatApp.py --load "HuskyVQA_cuda:0,SegmentAnything_cuda:0,ImageOCRRecognition_cuda:0" --port 3456
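The `--load` value appears to be a comma-separated list of `<ToolName>_<device>` entries. The sketch below shows one way such a string could be parsed into a tool-to-device mapping; `parse_load_spec` is a hypothetical helper written under that assumption, so check `iChatApp.py` for the parsing it actually performs.

```python
from typing import Dict


def parse_load_spec(spec: str) -> Dict[str, str]:
    """Map each tool name to its device, e.g. 'HuskyVQA_cuda:0' -> {'HuskyVQA': 'cuda:0'}."""
    tools: Dict[str, str] = {}
    for entry in spec.split(","):
        entry = entry.strip()
        if not entry:
            continue  # tolerate stray or trailing commas
        # Split on the last underscore: device strings such as 'cuda:0' or 'cpu' contain none.
        name, sep, device = entry.rpartition("_")
        if not sep or not name or not device:
            raise ValueError(f"expected <ToolName>_<device>, got {entry!r}")
        tools[name] = device
    return tools


print(parse_load_spec("HuskyVQA_cuda:0,SegmentAnything_cuda:0,ImageOCRRecognition_cuda:0"))
# {'HuskyVQA': 'cuda:0', 'SegmentAnything': 'cuda:0', 'ImageOCRRecognition': 'cuda:0'}
```

Under this assumed format, changing an entry to something like `SegmentAnything_cuda:1` would presumably place that tool on a second GPU, which is one way to stay within per-GPU memory limits.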

If you want to enable the voice assistant, use OpenSSL to generate a self-signed certificate:

openssl req -x509 -newkey rsa:4096 -keyout ./key.pem -out ./cert.pem -sha256 -days 365 -nodes

Then run:

python -u iChatApp.py --load "HuskyVQA_cuda:0,SegmentAnything_cuda:0,ImageOCRRecognition_cuda:0" --port 3456 --https
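The `--port` and `--https` flags presumably end up in Gradio's `launch()` call. A likely reason HTTPS is needed for the voice assistant is that browsers generally grant microphone access only to pages served from a secure context (HTTPS or localhost). The sketch below turns the two flags into `launch()` keyword arguments; `build_launch_kwargs` is a hypothetical helper and binding to `0.0.0.0` is an assumption, while `server_name`, `server_port`, `ssl_certfile`, and `ssl_keyfile` are existing `launch()` parameters in Gradio 3.x.

```python
import os
from typing import Any, Dict


def build_launch_kwargs(port: int, https: bool,
                        certfile: str = "./cert.pem",
                        keyfile: str = "./key.pem") -> Dict[str, Any]:
    """Hypothetical helper: map --port/--https onto Gradio launch() keyword arguments."""
    # Assumption: listen on all interfaces so the demo is reachable from other machines.
    kwargs: Dict[str, Any] = {"server_name": "0.0.0.0", "server_port": port}
    if https:
        # The defaults match the paths written by the openssl command above.
        for path in (certfile, keyfile):
            if not os.path.isfile(path):
                raise FileNotFoundError(f"{path} not found; generate it with openssl first")
        kwargs["ssl_certfile"] = certfile
        kwargs["ssl_keyfile"] = keyfile
    return kwargs


# Usage, given a gradio Blocks object named `demo`:
# demo.launch(**build_launch_kwargs(3456, https=True))
```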

🎫 License

This project is released under the Apache 2.0 license.

🖊️ Citation

If you find this project useful in your research, please consider citing:

@misc{2023internchat,
  title={InternChat: Solving Vision-Centric Tasks by Interacting with Chatbots Beyond Language},
  author={Zhaoyang Liu and Yinan He and Wenhai Wang and Weiyun Wang and Yi Wang and Shoufa Chen and Qinglong Zhang and Yang Yang and Qingyun Li and Jiashuo Yu and Kunchang Li and Zhe Chen and Xue Yang and Xizhou Zhu and Yali Wang and Limin Wang and Ping Luo and Jifeng Dai and Yu Qiao},
  howpublished = {\url{https://arxiv.org/abs/2305.05662}},
  year={2023}
}

🤝 Acknowledgement

Thanks to the open source of the following projects:

Hugging Face LangChain TaskMatrix SAM Stable Diffusion ControlNet InstructPix2Pix BLIP Latent Diffusion Models EasyOCR

You are welcome to join the discussion and help us continuously improve the user experience of InternChat.

WeChat QR Code