
Commit 49ee91e by github-actions[bot] (0 parents)

Sync to HuggingFace Spaces

This view is limited to 50 files because it contains too many changes.

- .dockerignore +25 -0

- .editorconfig +7 -0

- .env.example +33 -0

- .github/workflows/deploy.yml +54 -0

- .github/workflows/llama-cpp.yml +175 -0

- .github/workflows/on-pull-request-to-main.yml +9 -0

- .github/workflows/on-push-to-main.yml +7 -0

- .github/workflows/reusable-test-lint-ping.yml +25 -0

- .github/workflows/update-searxng-docker-image.yml +44 -0

- .gitignore +7 -0

- .npmrc +1 -0

- Dockerfile +82 -0

- README.md +139 -0

- biome.json +30 -0

- client/components/AiResponse/AiModelDownloadAllowanceContent.tsx +62 -0

- client/components/AiResponse/AiResponseContent.tsx +199 -0

- client/components/AiResponse/AiResponseSection.tsx +105 -0

- client/components/AiResponse/ChatInterface.tsx +186 -0

- client/components/AiResponse/CopyIconButton.tsx +32 -0

- client/components/AiResponse/FormattedMarkdown.tsx +107 -0

- client/components/AiResponse/LoadingModelContent.tsx +40 -0

- client/components/AiResponse/PreparingContent.tsx +33 -0

- client/components/AiResponse/WebLlmModelSelect.tsx +81 -0

- client/components/AiResponse/WllamaModelSelect.tsx +42 -0

- client/components/App/App.tsx +94 -0

- client/components/Logs/LogsModal.tsx +101 -0

- client/components/Logs/ShowLogsButton.tsx +42 -0

- client/components/Pages/AccessPage.tsx +61 -0

- client/components/Pages/Main/MainPage.tsx +60 -0

- client/components/Pages/Main/Menu/AISettingsForm.tsx +441 -0

- client/components/Pages/Main/Menu/ActionsForm.tsx +18 -0

- client/components/Pages/Main/Menu/ClearDataButton.tsx +63 -0

- client/components/Pages/Main/Menu/InterfaceSettingsForm.tsx +45 -0

- client/components/Pages/Main/Menu/MenuButton.tsx +53 -0

- client/components/Pages/Main/Menu/MenuDrawer.tsx +120 -0

- client/components/Pages/Main/Menu/SearchSettingsForm.tsx +52 -0

- client/components/Pages/Main/Menu/VoiceSettingsForm.tsx +72 -0

- client/components/Search/Form/SearchForm.tsx +131 -0

- client/components/Search/Results/Graphical/ImageResultsList.tsx +122 -0

- client/components/Search/Results/Graphical/ImageResultsLoadingState.tsx +22 -0

- client/components/Search/Results/Graphical/ImageSearchResults.tsx +62 -0

- client/components/Search/Results/SearchResultsSection.tsx +49 -0

- client/components/Search/Results/Textual/SearchResultsList.tsx +87 -0

- client/components/Search/Results/Textual/TextResultsLoadingState.tsx +18 -0

- client/components/Search/Results/Textual/TextSearchResults.tsx +60 -0

- client/index.html +36 -0

- client/index.tsx +9 -0

- client/modules/accessKey.ts +95 -0

- client/modules/keyboard.ts +15 -0

- client/modules/logEntries.ts +20 -0

.dockerignore

ADDED

@@ -0,0 +1,25 @@
+# Logs
+logs
+*.log
+npm-debug.log*
+yarn-debug.log*
+yarn-error.log*
+pnpm-debug.log*
+lerna-debug.log*
+
+node_modules
+dist
+dist-ssr
+*.local
+
+# Editor directories and files
+.vscode/*
+!.vscode/extensions.json
+.idea
+.DS_Store
+*.suo
+*.ntvs*
+*.njsproj
+*.sln
+*.sw?
+

.editorconfig

ADDED

@@ -0,0 +1,7 @@
+[*]
+charset = utf-8
+insert_final_newline = true
+end_of_line = lf
+indent_style = space
+indent_size = 2
+max_line_length = 80

.env.example

ADDED

@@ -0,0 +1,33 @@
+# A comma-separated list of access keys. Example: `ACCESS_KEYS="ABC123,JUD71F,HUWE3"`. Leave blank for unrestricted access.
+ACCESS_KEYS=""
+
+# The timeout in hours for access key validation. Set to 0 to require validation on every page load.
+ACCESS_KEY_TIMEOUT_HOURS="24"
+
+# The default model ID for WebLLM with F16 shaders.
+WEBLLM_DEFAULT_F16_MODEL_ID="Qwen2.5-0.5B-Instruct-q4f16_1-MLC"
+
+# The default model ID for WebLLM with F32 shaders.
+WEBLLM_DEFAULT_F32_MODEL_ID="Qwen2.5-0.5B-Instruct-q4f32_1-MLC"
+
+# The default model ID for Wllama.
+WLLAMA_DEFAULT_MODEL_ID="qwen-2.5-0.5b"
+
+# The base URL for the internal OpenAI compatible API. Example: `INTERNAL_OPENAI_COMPATIBLE_API_BASE_URL="https://api.openai.com/v1"`. Leave blank to disable internal OpenAI compatible API.
+INTERNAL_OPENAI_COMPATIBLE_API_BASE_URL=""
+
+# The access key for the internal OpenAI compatible API.
+INTERNAL_OPENAI_COMPATIBLE_API_KEY=""
+
+# The model for the internal OpenAI compatible API.
+INTERNAL_OPENAI_COMPATIBLE_API_MODEL=""
+
+# The name of the internal OpenAI compatible API, displayed in the UI.
+INTERNAL_OPENAI_COMPATIBLE_API_NAME="Internal API"
+
+# The type of inference to use by default. The possible values are:
+# "browser" -> In the browser (Private)
+# "openai" -> Remote Server (API)
+# "horde" -> AI Horde (Pre-configured)
+# "internal" -> $INTERNAL_OPENAI_COMPATIBLE_API_NAME
+DEFAULT_INFERENCE_TYPE="browser"
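The comments in `.env.example` describe `ACCESS_KEYS` as a comma-separated list (blank means unrestricted access) and `ACCESS_KEY_TIMEOUT_HOURS` as the validation lifetime (0 means validation on every page load). The following is a minimal TypeScript sketch of how those two values could be interpreted. The helper names `parseAccessKeys`, `isAccessRestricted`, and `isValidationExpired` are hypothetical and are not taken from the MiniSearch source.

```typescript
// Hypothetical helpers: names and behavior are assumptions based only on
// the comments in .env.example, not on MiniSearch's actual implementation.

/** Splits a comma-separated ACCESS_KEYS value into trimmed, non-empty keys. */
function parseAccessKeys(raw: string | undefined): string[] {
  return (raw ?? "")
    .split(",")
    .map((key) => key.trim())
    .filter((key) => key.length > 0);
}

/** An empty key list means the instance is unrestricted. */
function isAccessRestricted(keys: string[]): boolean {
  return keys.length > 0;
}

/**
 * Returns true when a previous validation can no longer be trusted.
 * A timeout of 0 hours means validation is required on every page load.
 */
function isValidationExpired(
  lastValidatedAtMs: number,
  timeoutHours: number,
  nowMs: number,
): boolean {
  if (timeoutHours <= 0) return true;
  const timeoutMs = timeoutHours * 60 * 60 * 1000;
  return nowMs - lastValidatedAtMs >= timeoutMs;
}
```

Passing the current time in as `nowMs`, rather than calling `Date.now()` inside the function, keeps the expiry check deterministic and easy to test.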

.github/workflows/deploy.yml

ADDED

@@ -0,0 +1,54 @@
+name: Deploy
+
+on:
+  workflow_dispatch:
+
+jobs:
+  build-and-push-image:
+    name: Publish Docker image to GitHub Packages
+    runs-on: ubuntu-latest
+    env:
+      REGISTRY: ghcr.io
+      IMAGE_NAME: ${{ github.repository }}
+    permissions:
+      contents: read
+      packages: write
+    steps:
+      - name: Checkout repository
+        uses: actions/checkout@v4
+      - name: Log in to the Container registry
+        uses: docker/login-action@v3
+        with:
+          registry: ${{ env.REGISTRY }}
+          username: ${{ github.actor }}
+          password: ${{ secrets.GITHUB_TOKEN }}
+      - name: Extract metadata (tags, labels) for Docker
+        id: meta
+        uses: docker/metadata-action@v5
+        with:
+          images: ${{ env.REGISTRY }}/${{ env.IMAGE_NAME }}
+      - name: Set up Docker Buildx
+        uses: docker/setup-buildx-action@v3
+      - name: Build and push Docker image
+        uses: docker/build-push-action@v6
+        with:
+          context: .
+          push: true
+          tags: ${{ steps.meta.outputs.tags }}
+          labels: ${{ steps.meta.outputs.labels }}
+          platforms: linux/amd64,linux/arm64
+
+  sync-to-hf:
+    name: Sync to HuggingFace Spaces
+    runs-on: ubuntu-latest
+    steps:
+      - uses: actions/checkout@v4
+        with:
+          lfs: true
+      - uses: JacobLinCool/huggingface-sync@v1
+        with:
+          github: ${{ secrets.GITHUB_TOKEN }}
+          user: ${{ vars.HF_SPACE_OWNER }}
+          space: ${{ vars.HF_SPACE_NAME }}
+          token: ${{ secrets.HF_TOKEN }}
+          configuration: "hf-space-config.yml"

.github/workflows/llama-cpp.yml

ADDED

@@ -0,0 +1,175 @@
+name: Review Pull Request with llama.cpp
+
+on:
+  pull_request:
+    types: [opened, synchronize, reopened]
+    branches: ["main"]
+
+concurrency:
+  group: ${{ github.workflow }}-${{ github.event.pull_request.number || github.ref }}
+  cancel-in-progress: true
+
+jobs:
+  llama-cpp:
+    if: ${{ !contains(github.event.pull_request.labels.*.name, 'skip-ai-review') }}
+    continue-on-error: true
+    runs-on: ubuntu-latest
+    name: llama.cpp
+    permissions:
+      pull-requests: write
+      contents: read
+    timeout-minutes: 120
+    env:
+      HF_MODEL_NAME: Qwen2.5.1-Coder-7B-Instruct-GGUF
+    steps:
+      - name: Checkout Repository
+        uses: actions/checkout@v4
+
+      - name: Create temporary directory
+        run: mkdir -p /tmp/llama_review
+
+      - name: Process PR description
+        id: process_pr
+        run: |
+          PR_BODY_ESCAPED=$(cat << 'EOF'
+          ${{ github.event.pull_request.body }}
+          EOF
+          )
+          PROCESSED_BODY=$(echo "$PR_BODY_ESCAPED" | sed -E 's/\[(.*?)\]\(.*?\)/\1/g')
+          echo "$PROCESSED_BODY" > /tmp/llama_review/processed_body.txt
+
+      - name: Fetch branches and output the diff in this step
+        run: |
+          git fetch origin main:main
+          git fetch origin pull/${{ github.event.pull_request.number }}/head:pr-branch
+          git diff main..pr-branch > /tmp/llama_review/diff.txt
+
+      - name: Write prompt to file
+        id: build_prompt
+        run: |
+          PR_TITLE=$(echo "${{ github.event.pull_request.title }}" | sed 's/[()]/\\&/g')
+          DIFF_CONTENT=$(cat /tmp/llama_review/diff.txt)
+          PROCESSED_BODY=$(cat /tmp/llama_review/processed_body.txt)
+          echo "<|im_start|>system
+          You are an experienced developer reviewing a Pull Request. You focus only on what matters and provide concise, actionable feedback.
+
+          Review Context:
+          Repository Name: \"${{ github.event.repository.name }}\"
+          Repository Description: \"${{ github.event.repository.description }}\"
+          Branch: \"${{ github.event.pull_request.head.ref }}\"
+          PR Title: \"$PR_TITLE\"
+
+          Guidelines:
+          1. Only comment on issues that:
+             - Could cause bugs or security issues
+             - Significantly impact performance
+             - Make the code harder to maintain
+             - Violate critical best practices
+
+          2. For each issue:
+             - Point to the specific line/file
+             - Explain why it's a problem
+             - Suggest a concrete fix
+
+          3. Praise exceptional solutions briefly, only if truly innovative
+
+          4. Skip commenting on:
+             - Minor style issues
+             - Obvious changes
+             - Working code that could be marginally improved
+             - Things that are just personal preference
+
+          Remember:
+          Less is more. If the code is good and working, just say so, with a short message.<|im_end|>
+          <|im_start|>user
+          This is the description of the pull request:
+          \`\`\`markdown
+          $PROCESSED_BODY
+          \`\`\`
+
+          And here is the diff of the changes, for you to review:
+          \`\`\`diff
+          $DIFF_CONTENT
+          \`\`\`
+          <|im_end|>
+          <|im_start|>assistant
+          ### Overall Summary
+          " > /tmp/llama_review/prompt.txt
+
+      - name: Show Prompt
+        run: cat /tmp/llama_review/prompt.txt
+
+      - name: Set up Homebrew
+        uses: Homebrew/actions/setup-homebrew@master
+
+      - name: Install and cache Homebrew tools
+        uses: tecolicom/actions-use-homebrew-tools@v1
+        with:
+          tools: llama.cpp
+
+      - name: Cache the LLM
+        id: cache_llama_cpp
+        uses: actions/cache@v4
+        with:
+          path: ~/.cache/llama.cpp/
+          key: llama-cpp-${{ env.HF_MODEL_NAME }}
+
+      - name: Download and cache the LLM
+        if: steps.cache_llama_cpp.outputs.cache-hit != 'true'
+        run: |
+          mkdir -p ~/.cache/llama.cpp/
+          curl -L -o ~/.cache/llama.cpp/model.gguf https://huggingface.co/bartowski/${{ env.HF_MODEL_NAME }}/resolve/main/Qwen2.5.1-Coder-7B-Instruct-IQ4_XS.gguf
+
+      - name: Run llama.cpp
+        run: |
+          llama-server \
+            --model ~/.cache/llama.cpp/model.gguf \
+            --ctx-size 32768 \
+            --threads -1 \
+            --predict -1 \
+            --temp 0.5 \
+            --top-p 0.9 \
+            --min-p 0.1 \
+            --top-k 0 \
+            --cache-type-k q8_0 \
+            --cache-type-v q8_0 \
+            --flash-attn \
+            --port 11434 &
+
+      - name: cURL llama-server to get the completion and timings
+        run: |
+          DATA=$(jq -n --arg prompt "$(cat /tmp/llama_review/prompt.txt)" '{"prompt": $prompt}')
+          echo -e '### Review\n\n' > /tmp/llama_review/response.txt
+          # Save the full response to a temporary file
+          curl \
+            --silent \
+            --request POST \
+            --url http://localhost:11434/completion \
+            --header "Content-Type: application/json" \
+            --data "$DATA" > /tmp/llama_review/full_response.json
+
+          # Extract and append content to response.txt
+          jq -r '.content' /tmp/llama_review/full_response.json >> /tmp/llama_review/response.txt
+
+          # Pretty print the timings information
+          echo "=== Performance Metrics ==="
+          jq -r '.timings | to_entries | .[] | "\(.key): \(.value)"' /tmp/llama_review/full_response.json
+
+      - name: Show Response
+        run: cat /tmp/llama_review/response.txt
+
+      - name: Find Comment
+        uses: peter-evans/find-comment@v3
+        id: find_comment
+        with:
+          issue-number: ${{ github.event.pull_request.number }}
+          comment-author: "github-actions[bot]"
+          body-includes: "### Review"
+
+      - name: Post or Update PR Review
+        uses: peter-evans/create-or-update-comment@v4
+        with:
+          comment-id: ${{ steps.find_comment.outputs.comment-id }}
+          issue-number: ${{ github.event.pull_request.number }}
+          body-path: /tmp/llama_review/response.txt
+          edit-mode: replace
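The "Process PR description" step in this workflow uses `sed` to replace Markdown links of the form `[text](url)` with just `text` before the description goes into the prompt. POSIX extended regular expressions do not define lazy quantifiers, so the `.*?` in that expression may match greedily when a line contains more than one link. The TypeScript sketch below shows the same idea with negated character classes instead. The function name `stripMarkdownLinks` is hypothetical and is not part of the workflow.

```typescript
// Sketch only: mirrors the intent of the workflow's sed substitution,
// which reduces Markdown links "[text](url)" to their link text.
function stripMarkdownLinks(markdown: string): string {
  // [^\]]* and [^)]* stop at the first closing bracket or parenthesis,
  // so several links on the same line are handled independently.
  return markdown.replace(/\[([^\]]*)\]\([^)]*\)/g, "$1");
}

// Example usage with two links on one line.
const cleaned = stripMarkdownLinks(
  "See [docs](https://a.example) and [code](https://b.example).",
);
console.log(cleaned);
```

This sketch does not handle nested brackets or URLs that contain parentheses. A full Markdown parser would be needed for those cases.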

.github/workflows/on-pull-request-to-main.yml

ADDED

@@ -0,0 +1,9 @@
+name: On Pull Request To Main
+on:
+  pull_request:
+    types: [opened, synchronize, reopened]
+    branches: ["main"]
+jobs:
+  test-lint-ping:
+    if: ${{ !contains(github.event.pull_request.labels.*.name, 'skip-test-lint-ping') }}
+    uses: ./.github/workflows/reusable-test-lint-ping.yml

.github/workflows/on-push-to-main.yml

ADDED

@@ -0,0 +1,7 @@
+name: On Push To Main
+on:
+  push:
+    branches: ["main"]
+jobs:
+  test-lint-ping:
+    uses: ./.github/workflows/reusable-test-lint-ping.yml

.github/workflows/reusable-test-lint-ping.yml

ADDED

@@ -0,0 +1,25 @@
+on:
+  workflow_call:
+jobs:
+  check-code-quality:
+    name: Check Code Quality
+    runs-on: ubuntu-latest
+    steps:
+      - uses: actions/checkout@v4
+      - uses: actions/setup-node@v4
+        with:
+          node-version: 20
+          cache: "npm"
+      - run: npm ci --ignore-scripts
+      - run: npm test
+      - run: npm run lint
+  check-docker-container:
+    needs: [check-code-quality]
+    name: Check Docker Container
+    runs-on: ubuntu-latest
+    steps:
+      - uses: actions/checkout@v4
+      - run: docker compose -f docker-compose.production.yml up -d
+      - name: Check if main page is available
+        run: until curl -s -o /dev/null -w "%{http_code}" localhost:7860 | grep 200; do sleep 1; done
+      - run: docker compose -f docker-compose.production.yml down

.github/workflows/update-searxng-docker-image.yml

ADDED

@@ -0,0 +1,44 @@
+name: Update SearXNG Docker Image
+
+on:
+  schedule:
+    - cron: "0 14 * * *"
+  workflow_dispatch:
+
+permissions:
+  contents: write
+
+jobs:
+  update-searxng-image:
+    runs-on: ubuntu-latest
+    steps:
+      - name: Checkout code
+        uses: actions/checkout@v4
+        with:
+          token: ${{ secrets.GITHUB_TOKEN }}
+
+      - name: Get latest SearXNG image tag
+        id: get_latest_tag
+        run: |
+          LATEST_TAG=$(curl -s "https://hub.docker.com/v2/repositories/searxng/searxng/tags/?page_size=3&ordering=last_updated" | jq -r '.results[] | select(.name != "latest-build-cache" and .name != "latest") | .name' | head -n 1)
+          echo "LATEST_TAG=${LATEST_TAG}" >> $GITHUB_OUTPUT
+
+      - name: Update Dockerfile
+        run: |
+          sed -i 's|FROM searxng/searxng:.*|FROM searxng/searxng:${{ steps.get_latest_tag.outputs.LATEST_TAG }}|' Dockerfile
+
+      - name: Check for changes
+        id: git_status
+        run: |
+          git diff --exit-code || echo "changes=true" >> $GITHUB_OUTPUT
+
+      - name: Commit and push if changed
+        if: steps.git_status.outputs.changes == 'true'
+        run: |
+          git config --local user.email "github-actions[bot]@users.noreply.github.com"
+          git config --local user.name "github-actions[bot]"
+          git add Dockerfile
+          git commit -m "Update SearXNG Docker image to tag ${{ steps.get_latest_tag.outputs.LATEST_TAG }}"
+          git push
+        env:
+          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
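The "Get latest SearXNG image tag" step in this workflow requests the three most recently updated tags and keeps the first one that is neither `latest` nor `latest-build-cache`. The TypeScript sketch below restates that selection rule as a pure function. The `DockerHubTag` interface and the `pickLatestTag` name are hypothetical, and the interface models only the `name` field that the workflow's `jq` filter reads.

```typescript
// Only the field this sketch reads; the real Docker Hub payload has more.
interface DockerHubTag {
  name: string;
}

// Floating aliases that the workflow's jq filter excludes.
const EXCLUDED_TAGS = new Set(["latest", "latest-build-cache"]);

// Returns the first tag that is not a floating alias, or undefined if none.
// Assumes `results` is already ordered by last update, newest first.
function pickLatestTag(results: DockerHubTag[]): string | undefined {
  return results.find((tag) => !EXCLUDED_TAGS.has(tag.name))?.name;
}

// Example usage with a response page of three tags.
const latestTag = pickLatestTag([
  { name: "latest" },
  { name: "latest-build-cache" },
  { name: "2024.12.16-65c970bdf" },
]);
console.log(latestTag);
```

Returning `undefined` when no tag qualifies lets a caller skip the update instead of writing an empty tag into the `FROM` line.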

.gitignore

ADDED

@@ -0,0 +1,7 @@
+node_modules
+.DS_Store
+/client/dist
+/server/models
+.vscode
+/vite-build-stats.html
+.env

.npmrc

ADDED

@@ -0,0 +1 @@
+legacy-peer-deps = true

Dockerfile

ADDED

@@ -0,0 +1,82 @@
+# Use the SearXNG image as the base
+FROM searxng/searxng:2024.12.16-65c970bdf
+
+# Set the default port to 7860 if not provided
+ENV PORT=7860
+
+# Expose the port specified by the PORT environment variable
+EXPOSE $PORT
+
+# Install necessary packages using Alpine's package manager
+RUN apk add --update \
+  nodejs \
+  npm \
+  git \
+  build-base \
+  cmake \
+  ccache
+
+# Set the SearXNG settings folder path
+ARG SEARXNG_SETTINGS_FOLDER=/etc/searxng
+
+# Modify SearXNG configuration:
+# 1. Change output format from HTML to JSON
+# 2. Remove user switching in the entrypoint script
+# 3. Create and set permissions for the settings folder
+RUN sed -i 's/- html/- json/' /usr/local/searxng/searx/settings.yml \
+  && sed -i 's/su-exec searxng:searxng //' /usr/local/searxng/dockerfiles/docker-entrypoint.sh \
+  && mkdir -p ${SEARXNG_SETTINGS_FOLDER} \
+  && chmod 777 ${SEARXNG_SETTINGS_FOLDER}
+
+# Set up user and directory structure
+ARG USERNAME=user
+ARG HOME_DIR=/home/${USERNAME}
+ARG APP_DIR=${HOME_DIR}/app
+
+# Create a non-root user and set up the application directory
+RUN adduser -D -u 1000 ${USERNAME} \
+  && mkdir -p ${APP_DIR} \
+  && chown -R ${USERNAME}:${USERNAME} ${HOME_DIR}
+
+# Switch to the non-root user
+USER ${USERNAME}
+
+# Set the working directory to the application directory
+WORKDIR ${APP_DIR}
+
+# Define environment variables that can be passed to the container during build.
+# This approach allows for dynamic configuration without relying on a `.env` file,
+# which might not be suitable for all deployment scenarios.
+ARG ACCESS_KEYS
+ARG ACCESS_KEY_TIMEOUT_HOURS
+ARG WEBLLM_DEFAULT_F16_MODEL_ID
+ARG WEBLLM_DEFAULT_F32_MODEL_ID
+ARG WLLAMA_DEFAULT_MODEL_ID
+ARG INTERNAL_OPENAI_COMPATIBLE_API_BASE_URL
+ARG INTERNAL_OPENAI_COMPATIBLE_API_KEY
+ARG INTERNAL_OPENAI_COMPATIBLE_API_MODEL
+ARG INTERNAL_OPENAI_COMPATIBLE_API_NAME
+ARG DEFAULT_INFERENCE_TYPE
+
+# Copy package.json, package-lock.json, and .npmrc files
+COPY --chown=${USERNAME}:${USERNAME} ./package.json ./package.json
+COPY --chown=${USERNAME}:${USERNAME} ./package-lock.json ./package-lock.json
+COPY --chown=${USERNAME}:${USERNAME} ./.npmrc ./.npmrc
+
+# Install Node.js dependencies
+RUN npm ci
+
+# Copy the rest of the application files
+COPY --chown=${USERNAME}:${USERNAME} . .
+
+# Configure Git to treat the app directory as safe
+RUN git config --global --add safe.directory ${APP_DIR}
+
+# Build the application
+RUN npm run build
+
+# Set the entrypoint to use a shell
+ENTRYPOINT [ "/bin/sh", "-c" ]
+
+# Run SearXNG in the background and start the Node.js application using PM2
+CMD [ "(/usr/local/searxng/dockerfiles/docker-entrypoint.sh -f > /dev/null 2>&1) & (npx pm2 start ecosystem.config.cjs && npx pm2 logs production-server)" ]

README.md

ADDED

|

@@ -0,0 +1,139 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

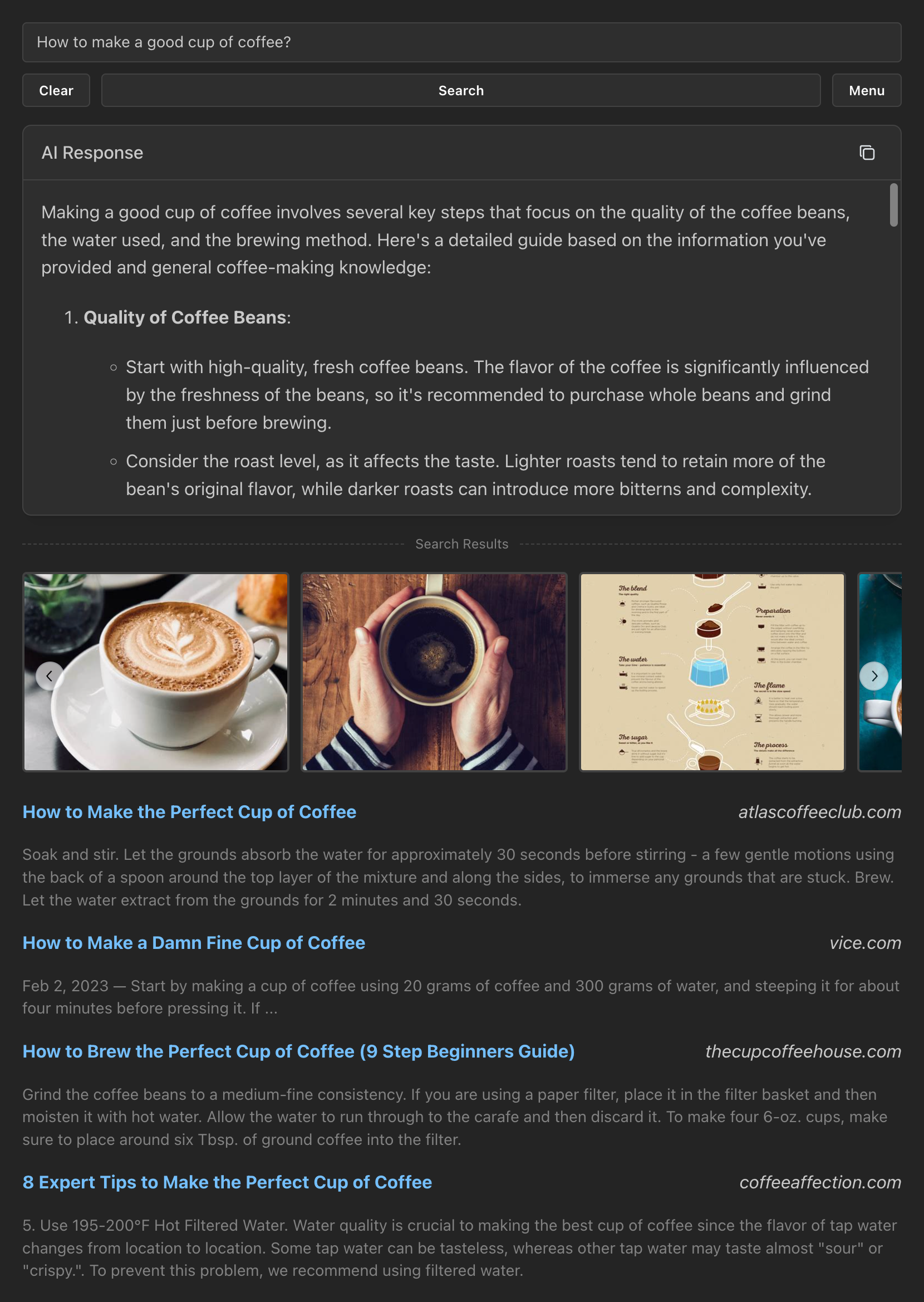

+

title: MiniSearch

|

| 3 |

+

emoji: 👌🔍

|

| 4 |

+

colorFrom: yellow

|

| 5 |

+

colorTo: yellow

|

| 6 |

+

sdk: docker

|

| 7 |

+

short_description: Minimalist web-searching app with browser-based AI assistant

|

| 8 |

+

pinned: true

|

| 9 |

+

custom_headers:

|

| 10 |

+

cross-origin-embedder-policy: require-corp

|

| 11 |

+

cross-origin-opener-policy: same-origin

|

| 12 |

+

cross-origin-resource-policy: cross-origin

|

| 13 |

+

---

|

| 14 |

+

|

| 15 |

+

# MiniSearch

|

| 16 |

+

|

| 17 |

+

A minimalist web-searching app with an AI assistant that runs directly from your browser.

|

| 18 |

+

|

| 19 |

+

Live demo: https://felladrin-minisearch.hf.space

|

| 20 |

+

|

| 21 |

+

## Screenshot

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

## Features

|

| 26 |

+

|

| 27 |

+

- **Privacy-focused**: [No tracking, no ads, no data collection](https://docs.searxng.org/own-instance.html#how-does-searxng-protect-privacy)

|

| 28 |

+

- **Easy to use**: Minimalist yet intuitive interface for all users

|

| 29 |

+

- **Cross-platform**: Models run inside the browser, both on desktop and mobile

|

| 30 |

+

- **Integrated**: Search from the browser address bar by setting it as the default search engine

|

| 31 |

+

- **Efficient**: Models are loaded and cached only when needed

|

| 32 |

+

- **Customizable**: Tweakable settings for search results and text generation

|

| 33 |

+

- **Open-source**: [The code is available for inspection and contribution at GitHub](https://github.com/felladrin/MiniSearch)

|

| 34 |

+

|

| 35 |

+

## Prerequisites

|

| 36 |

+

|

| 37 |

+

- [Docker](https://docs.docker.com/get-docker/)

|

| 38 |

+

|

| 39 |

+

## Getting started

|

| 40 |

+

|

| 41 |

+

Here are the easiest ways to get started with MiniSearch. Pick the one that suits you best.

|

| 42 |

+

|

| 43 |

+

**Option 1** - Use [MiniSearch's Docker Image](https://github.com/felladrin/MiniSearch/pkgs/container/minisearch) by running in your terminal:

|

| 44 |

+

|

| 45 |

+

```bash

|

| 46 |

+

docker run -p 7860:7860 ghcr.io/felladrin/minisearch:main

|

| 47 |

+

```

|

| 48 |

+

|

| 49 |

+

**Option 2** - Add MiniSearch's Docker Image to your existing Docker Compose file:

|

| 50 |

+

|

| 51 |

+

```yaml

|

| 52 |

+

services:

|

| 53 |

+

minisearch:

|

| 54 |

+

image: ghcr.io/felladrin/minisearch:main

|

| 55 |

+

ports:

|

| 56 |

+

- "7860:7860"

|

| 57 |

+

```

|

| 58 |

+

|

| 59 |

+

**Option 3** - Build from source by [downloading the repository files](https://github.com/felladrin/MiniSearch/archive/refs/heads/main.zip) and running:

|

| 60 |

+

|

| 61 |

+

```bash

|

| 62 |

+

docker compose -f docker-compose.production.yml up --build

|

| 63 |

+

```

|

| 64 |

+

|

| 65 |

+

Once the container is running, open http://localhost:7860 in your browser and start searching!

|

| 66 |

+

|

| 67 |

+

## Frequently asked questions

|

| 68 |

+

|

| 69 |

+

<details>

|

| 70 |

+

<summary>How do I search via the browser's address bar?</summary>

|

| 71 |

+

<p>

|

| 72 |

+

You can set MiniSearch as your browser's address-bar search engine using the pattern <code>http://localhost:7860/?q=%s</code>, in which your search term replaces <code>%s</code>.

|

| 73 |

+

</p>

|

| 74 |

+

</details>
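As a rough illustration of the address-bar pattern described above, the sketch below fills a `%s` template with a search term, similar to what a browser does. The helper name `buildSearchUrl` is hypothetical and not part of MiniSearch; only the `http://localhost:7860/?q=%s` pattern comes from the README, and real browsers may encode spaces differently.

```typescript
// Hypothetical helper (not part of MiniSearch): fills a browser-style
// search template by replacing "%s" with the URL-encoded search term.
function buildSearchUrl(template: string, searchTerm: string): string {
  // A replacer function is used so "$" sequences in the term are not
  // interpreted as special replacement patterns.
  return template.replace("%s", () => encodeURIComponent(searchTerm));
}

const url = buildSearchUrl("http://localhost:7860/?q=%s", "pepperoni pizza");
console.log(url); // http://localhost:7860/?q=pepperoni%20pizza
```

If you host MiniSearch on your own domain, the same idea should apply with that domain in place of `localhost:7860`.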

|

| 75 |

+

|

| 76 |

+

<details>

|

| 77 |

+

<summary>How do I search via Raycast?</summary>

|

| 78 |

+

<p>

|

| 79 |

+

You can add <a href="https://ray.so/quicklinks/shared?quicklinks=%7B%22link%22:%22https:%5C/%5C/felladrin-minisearch.hf.space%5C/?q%3D%7BQuery%7D%22,%22name%22:%22MiniSearch%22%7D" target="_blank">this Quicklink</a> to Raycast, so that typing your query opens MiniSearch with the search results. You can also edit it to point to your own domain.

|

| 80 |

+

</p>

|

| 81 |

+

<img width="744" alt="image" src="https://github.com/user-attachments/assets/521dca22-c77b-42de-8cc8-9feb06f9a97e">

|

| 82 |

+

</details>

|

| 83 |

+

|

| 84 |

+

<details>

|

| 85 |

+

<summary>Can I use custom models via OpenAI-Compatible API?</summary>

|

| 86 |

+

<p>

|

| 87 |

+

Yes! For this, open the Menu and change the "AI Processing Location" to <code>Remote server (API)</code>. Then configure the Base URL, and optionally set an API Key and a Model to use.

|

| 88 |

+

</p>

|

| 89 |

+

</details>

|

| 90 |

+

|

| 91 |

+

<details>

|

| 92 |

+

<summary>How do I restrict access to my MiniSearch instance with a password?</summary>

|

| 93 |

+

<p>

|

| 94 |

+

Create a <code>.env</code> file and set a value for <code>ACCESS_KEYS</code>. Then restart the MiniSearch Docker container.

|

| 95 |

+

</p>

|

| 96 |

+

<p>

|

| 97 |

+

For example, if you want to set the password to <code>PepperoniPizza</code>, then this is what you should add to your <code>.env</code>:<br/>

|

| 98 |

+

<code>ACCESS_KEYS="PepperoniPizza"</code>

|

| 99 |

+

</p>

|

| 100 |

+

<p>

|

| 101 |

+

You can find more examples in the <code>.env.example</code> file.

|

| 102 |

+

</p>

|

| 103 |

+

</details>

|

| 104 |

+

|

| 105 |

+

<details>

|

| 106 |

+

<summary>I want to serve MiniSearch to other users, allowing them to use my own OpenAI-Compatible API key, but without revealing it to them. Is it possible?</summary>

|

| 107 |

+

<p>Yes! In MiniSearch, we call this text-generation feature "Internal OpenAI-Compatible API". To use it:</p>

|

| 108 |

+

<ol>

|

| 109 |

+

<li>Set up your OpenAI-Compatible API endpoint by configuring the following environment variables in your <code>.env</code> file:

|

| 110 |

+

<ul>

|

| 111 |

+

<li><code>INTERNAL_OPENAI_COMPATIBLE_API_BASE_URL</code>: The base URL for your API</li>

|

| 112 |

+

<li><code>INTERNAL_OPENAI_COMPATIBLE_API_KEY</code>: Your API access key</li>

|

| 113 |

+

<li><code>INTERNAL_OPENAI_COMPATIBLE_API_MODEL</code>: The model to use</li>

|

| 114 |

+

<li><code>INTERNAL_OPENAI_COMPATIBLE_API_NAME</code>: The name to display in the UI</li>

|

| 115 |

+

</ul>

|

| 116 |

+

</li>

|

| 117 |

+

<li>Restart the MiniSearch server.</li>

|

| 118 |

+

<li>In the MiniSearch menu, select the new option (named as per your <code>INTERNAL_OPENAI_COMPATIBLE_API_NAME</code> setting) from the "AI Processing Location" dropdown.</li>

|

| 119 |

+

</ol>

|

| 120 |

+

</details>
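To make the four variables above more concrete, here is a minimal sketch of how a server could read them into a typed object. This is not MiniSearch's actual code: the `InternalApiConfig` type, the `readInternalApiConfig` function, the fallback values, and the choice to treat a missing base URL as "feature disabled" are all assumptions made for illustration. Only the variable names come from the README.

```typescript
// Hypothetical shape for the four variables listed in the README.
interface InternalApiConfig {
  baseUrl: string;
  apiKey: string;
  model: string;
  name: string;
}

// Returns the configuration, or null when no base URL is set
// (assumption: the feature is treated as disabled in that case).
function readInternalApiConfig(
  env: Record<string, string | undefined>,
): InternalApiConfig | null {
  const baseUrl = env.INTERNAL_OPENAI_COMPATIBLE_API_BASE_URL?.trim();
  if (!baseUrl) return null;
  return {
    baseUrl,
    apiKey: env.INTERNAL_OPENAI_COMPATIBLE_API_KEY ?? "",
    model: env.INTERNAL_OPENAI_COMPATIBLE_API_MODEL ?? "",
    // Placeholder display name, chosen only for this sketch.
    name: env.INTERNAL_OPENAI_COMPATIBLE_API_NAME ?? "Internal API",
  };
}

// Example with made-up values standing in for the contents of a .env file.
const exampleConfig = readInternalApiConfig({
  INTERNAL_OPENAI_COMPATIBLE_API_BASE_URL: "https://api.example.com/v1",
  INTERNAL_OPENAI_COMPATIBLE_API_KEY: "example-key",
  INTERNAL_OPENAI_COMPATIBLE_API_MODEL: "example-model",
  INTERNAL_OPENAI_COMPATIBLE_API_NAME: "My Shared API",
});
console.log(exampleConfig?.name);
```

In a setup like this, the values are read only on the server, so the API key does not need to be sent to the browser.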

|

| 121 |

+

|

| 122 |

+

<details>

|

| 123 |

+

<summary>How can I contribute to the development of this tool?</summary>

|

| 124 |

+

<p>Fork this repository and clone it. Then, start the development server by running the following command:</p>

|

| 125 |

+

<p><code>docker compose up</code></p>

|

| 126 |

+

<p>Make your changes, push them to your fork, and open a pull request! All contributions are welcome!</p>

|

| 127 |

+

</details>

|

| 128 |

+

|

| 129 |

+

<details>

|

| 130 |

+

<summary>Why is MiniSearch built upon SearXNG's Docker Image and using a single image instead of composing it from multiple services?</summary>

|

| 131 |

+

<p>There are a few reasons for this:</p>

|

| 132 |

+

<ul>

|

| 133 |

+

<li>MiniSearch utilizes SearXNG as its meta-search engine.</li>

|

| 134 |

+

<li>Manual installation of SearXNG is not trivial, so we use the docker image they provide, which has everything set up.</li>

|

| 135 |

+

<li>SearXNG only provides a Docker Image based on Alpine Linux.</li>

|

| 136 |

+

<li>The user of the image needs to be customized in a specific way to run on HuggingFace Spaces, where MiniSearch's demo runs.</li>

|

| 137 |

+

<li>HuggingFace only accepts a single docker image. It doesn't run docker compose or multiple images, unfortunately.</li>

|

| 138 |

+

</ul>

|

| 139 |

+

</details>

|

biome.json

ADDED

|

@@ -0,0 +1,30 @@

|

| 1 |

+

{

|

| 2 |

+

"$schema": "https://biomejs.dev/schemas/1.9.4/schema.json",

|

| 3 |

+

"vcs": {

|

| 4 |

+

"enabled": false,

|

| 5 |

+

"clientKind": "git",

|

| 6 |

+

"useIgnoreFile": false

|

| 7 |

+

},

|

| 8 |

+

"files": {

|

| 9 |

+

"ignoreUnknown": false,

|

| 10 |

+

"ignore": []

|

| 11 |

+

},

|

| 12 |

+

"formatter": {

|

| 13 |

+

"enabled": true,

|

| 14 |

+

"indentStyle": "space"

|

| 15 |

+

},

|

| 16 |

+

"organizeImports": {

|

| 17 |

+

"enabled": true

|

| 18 |

+

},

|

| 19 |

+

"linter": {

|

| 20 |

+

"enabled": true,

|

| 21 |

+

"rules": {

|

| 22 |

+

"recommended": true

|

| 23 |

+

}

|

| 24 |

+

},

|

| 25 |

+

"javascript": {

|

| 26 |

+

"formatter": {

|

| 27 |

+

"quoteStyle": "double"

|

| 28 |

+

}

|

| 29 |

+

}

|

| 30 |

+

}

|

client/components/AiResponse/AiModelDownloadAllowanceContent.tsx

ADDED

|

@@ -0,0 +1,62 @@

|

| 1 |

+

import { Alert, Button, Group, Text } from "@mantine/core";

|

| 2 |

+

import { IconCheck, IconInfoCircle, IconX } from "@tabler/icons-react";

|

| 3 |

+

import { usePubSub } from "create-pubsub/react";

|

| 4 |

+

import { useState } from "react";

|

| 5 |

+

import { addLogEntry } from "../../modules/logEntries";

|

| 6 |

+

import { settingsPubSub } from "../../modules/pubSub";

|

| 7 |

+

|

| 8 |

+

export default function AiModelDownloadAllowanceContent() {

|

| 9 |

+

const [settings, setSettings] = usePubSub(settingsPubSub);

|

| 10 |

+

const [hasDeniedDownload, setDeniedDownload] = useState(false);

|

| 11 |

+

|

| 12 |

+

const handleAccept = () => {

|

| 13 |

+

setSettings({

|

| 14 |

+

...settings,

|

| 15 |

+

allowAiModelDownload: true,

|

| 16 |

+

});

|

| 17 |

+

addLogEntry("User allowed the AI model download");

|

| 18 |

+

};

|

| 19 |

+

|

| 20 |

+

const handleDecline = () => {

|

| 21 |

+

setDeniedDownload(true);

|

| 22 |

+

addLogEntry("User denied the AI model download");

|

| 23 |

+

};

|

| 24 |

+

|

| 25 |

+

return hasDeniedDownload ? null : (

|

| 26 |

+

<Alert

|

| 27 |

+

variant="light"

|

| 28 |

+

color="blue"

|

| 29 |

+

title="Allow AI model download?"

|

| 30 |

+

icon={<IconInfoCircle />}

|

| 31 |

+

>

|

| 32 |

+

<Text size="sm" mb="md">

|

| 33 |

+

To obtain AI responses, a language model needs to be downloaded to your

|

| 34 |

+

browser. Enabling this option lets the app store it and load it

|

| 35 |

+

instantly on subsequent uses.

|

| 36 |

+

</Text>

|

| 37 |

+

<Text size="sm" mb="md">

|

| 38 |

+

Please note that the download size ranges from 100 MB to 4 GB, depending

|

| 39 |

+

on the model you select in the Menu, so it's best to avoid using mobile

|

| 40 |

+

data for this.

|

| 41 |

+

</Text>

|

| 42 |

+

<Group justify="flex-end" mt="md">

|

| 43 |

+

<Button

|

| 44 |

+

variant="subtle"

|

| 45 |

+

color="gray"

|

| 46 |

+

leftSection={<IconX size="1rem" />}

|

| 47 |

+

onClick={handleDecline}

|

| 48 |

+

size="xs"

|

| 49 |

+

>

|

| 50 |

+

Not now

|

| 51 |

+

</Button>

|

| 52 |

+

<Button

|

| 53 |

+

leftSection={<IconCheck size="1rem" />}

|

| 54 |

+

onClick={handleAccept}

|

| 55 |

+

size="xs"

|

| 56 |

+

>

|

| 57 |

+

Allow download

|

| 58 |

+

</Button>

|

| 59 |

+

</Group>

|

| 60 |

+

</Alert>

|

| 61 |

+

);

|

| 62 |

+

}

|

client/components/AiResponse/AiResponseContent.tsx

ADDED

|

@@ -0,0 +1,199 @@

|

| 1 |

+

import {

|

| 2 |

+

ActionIcon,

|

| 3 |

+

Alert,

|

| 4 |

+

Badge,

|

| 5 |

+

Box,

|

| 6 |

+

Card,

|

| 7 |

+

Group,

|

| 8 |

+

ScrollArea,

|

| 9 |

+

Text,

|

| 10 |

+

Tooltip,

|

| 11 |

+

} from "@mantine/core";

|

| 12 |

+

import {

|

| 13 |

+

IconArrowsMaximize,

|

| 14 |

+

IconArrowsMinimize,

|

| 15 |

+

IconHandStop,

|

| 16 |

+

IconInfoCircle,

|

| 17 |

+

IconRefresh,

|

| 18 |

+

IconVolume2,

|

| 19 |

+

} from "@tabler/icons-react";

|

| 20 |

+

import type { PublishFunction } from "create-pubsub";

|

| 21 |

+

import { usePubSub } from "create-pubsub/react";

|

| 22 |

+

import { type ReactNode, Suspense, lazy, useMemo, useState } from "react";

|

| 23 |

+

import { settingsPubSub } from "../../modules/pubSub";

|

| 24 |

+

import { searchAndRespond } from "../../modules/textGeneration";

|

| 25 |

+

|

| 26 |

+

const FormattedMarkdown = lazy(() => import("./FormattedMarkdown"));

|

| 27 |

+

const CopyIconButton = lazy(() => import("./CopyIconButton"));

|

| 28 |

+

|

| 29 |

+

export default function AiResponseContent({

|

| 30 |

+

textGenerationState,

|

| 31 |

+

response,

|

| 32 |

+

setTextGenerationState,

|

| 33 |

+

}: {

|

| 34 |

+

textGenerationState: string;

|

| 35 |

+

response: string;

|

| 36 |

+

setTextGenerationState: PublishFunction<

|

| 37 |

+

| "failed"

|

| 38 |

+

| "awaitingSearchResults"

|

| 39 |

+

| "preparingToGenerate"

|

| 40 |

+

| "idle"

|

| 41 |

+

| "loadingModel"

|

| 42 |

+

| "generating"

|

| 43 |

+

| "interrupted"

|

| 44 |

+

| "completed"

|

| 45 |

+

>;

|

| 46 |

+

}) {

|

| 47 |

+

const [settings, setSettings] = usePubSub(settingsPubSub);

|

| 48 |

+

const [isSpeaking, setIsSpeaking] = useState(false);

|

| 49 |

+

|

| 50 |

+

const ConditionalScrollArea = useMemo(

|

| 51 |

+

() =>

|

| 52 |

+

({ children }: { children: ReactNode }) => {

|

| 53 |

+

return settings.enableAiResponseScrolling ? (

|

| 54 |

+

<ScrollArea.Autosize mah={300} type="auto" offsetScrollbars>

|

| 55 |

+

{children}

|

| 56 |

+

</ScrollArea.Autosize>

|

| 57 |

+

) : (

|

| 58 |

+

<Box>{children}</Box>

|

| 59 |

+

);

|

| 60 |

+

},

|

| 61 |

+

[settings.enableAiResponseScrolling],

|

| 62 |

+

);

|

| 63 |

+

|

| 64 |

+

function speakResponse(text: string) {

|

| 65 |

+

if (isSpeaking) {

|

| 66 |

+

self.speechSynthesis.cancel();

|

| 67 |

+

setIsSpeaking(false);

|

| 68 |

+

return;

|

| 69 |

+

}

|

| 70 |

+

|

| 71 |

+

const cleanText = text.replace(/[#*`_~\[\]]/g, "");

|

| 72 |

+

const utterance = new SpeechSynthesisUtterance(cleanText);

|

| 73 |

+

|

| 74 |

+

const voices = self.speechSynthesis.getVoices();

|

| 75 |

+