Commit 89040ed, committed by arnavkumar24

1 Parent(s): ebbe80d

Addon

This view is limited to 50 files because it contains too many changes.

- AudioSep_Colab.ipynb +128 -0

- CONTRIBUTING.md +92 -0

- Dockerfile +22 -0

- LICENSE +21 -0

- assets/results.png +0 -0

- benchmark.py +116 -0

- callbacks/base.py +35 -0

- checkpoint/audiosep_base_4M_steps.ckpt +3 -0

- checkpoint/music_speech_audioset_epoch_15_esc_89.98.pt +3 -0

- cog.yaml +21 -0

- config/audiosep_base.yaml +41 -0

- data/audiotext_dataset.py +91 -0

- data/datamodules.py +122 -0

- data/waveform_mixers.py +127 -0

- datafiles/template.json +8 -0

- environment.yml +326 -0

- evaluation/evaluate_audiocaps.py +110 -0

- evaluation/evaluate_audioset.py +155 -0

- evaluation/evaluate_clotho.py +102 -0

- evaluation/evaluate_esc50.py +102 -0

- evaluation/evaluate_music.py +118 -0

- evaluation/evaluate_vggsound.py +114 -0

- evaluation/metadata/audiocaps_eval.csv +0 -0

- evaluation/metadata/audioset_eval.csv +0 -0

- evaluation/metadata/class_labels_indices.csv +528 -0

- evaluation/metadata/clotho_eval.csv +0 -0

- evaluation/metadata/esc50_eval.csv +0 -0

- evaluation/metadata/music_eval.csv +0 -0

- evaluation/metadata/vggsound_eval.csv +0 -0

- losses.py +17 -0

- models/CLAP/__init__.py +0 -0

- models/CLAP/__pycache__/__init__.cpython-310.pyc +0 -0

- models/CLAP/open_clip/__init__.py +25 -0

- models/CLAP/open_clip/__pycache__/__init__.cpython-310.pyc +0 -0

- models/CLAP/open_clip/__pycache__/factory.cpython-310.pyc +0 -0

- models/CLAP/open_clip/__pycache__/feature_fusion.cpython-310.pyc +0 -0

- models/CLAP/open_clip/__pycache__/htsat.cpython-310.pyc +0 -0

- models/CLAP/open_clip/__pycache__/loss.cpython-310.pyc +0 -0

- models/CLAP/open_clip/__pycache__/model.cpython-310.pyc +0 -0

- models/CLAP/open_clip/__pycache__/openai.cpython-310.pyc +0 -0

- models/CLAP/open_clip/__pycache__/pann_model.cpython-310.pyc +0 -0

- models/CLAP/open_clip/__pycache__/pretrained.cpython-310.pyc +0 -0

- models/CLAP/open_clip/__pycache__/timm_model.cpython-310.pyc +0 -0

- models/CLAP/open_clip/__pycache__/tokenizer.cpython-310.pyc +0 -0

- models/CLAP/open_clip/__pycache__/transform.cpython-310.pyc +0 -0

- models/CLAP/open_clip/__pycache__/utils.cpython-310.pyc +0 -0

- models/CLAP/open_clip/bert.py +40 -0

- models/CLAP/open_clip/bpe_simple_vocab_16e6.txt.gz +3 -0

- models/CLAP/open_clip/factory.py +277 -0

- models/CLAP/open_clip/feature_fusion.py +192 -0

AudioSep_Colab.ipynb

ADDED

@@ -0,0 +1,128 @@
+{
+  "cells": [
+    {
+      "cell_type": "code",
+      "execution_count": null,
+      "metadata": {},
+      "outputs": [],
+      "source": [
+        "from pathlib import Path\n",
+        "\n",
+        "repo_path = Path(\"/content/AudioSep\")\n",
+        "if not repo_path.exists():\n",
+        "    !git clone https://github.com/Audio-AGI/AudioSep.git\n",
+        "\n",
+        "%cd /content/AudioSep"
+      ]
+    },
+    {
+      "cell_type": "code",
+      "execution_count": null,
+      "metadata": {
+        "id": "pjIhw5ECS_3_"
+      },
+      "outputs": [],
+      "source": [
+        "!pip install torchlibrosa==0.1.0 gradio==3.47.1 gdown lightning transformers==4.28.1 ftfy braceexpand webdataset soundfile wget h5py"
+      ]
+    },
+    {
+      "cell_type": "code",
+      "execution_count": null,
+      "metadata": {
+        "id": "t6h9KB3CcjBd"
+      },
+      "outputs": [],
+      "source": [
+        "checkpoints_dir = Path(\"checkpoint\")\n",
+        "checkpoints_dir.mkdir(exist_ok=True)\n",
+        "\n",
+        "models = (\n",
+        "    (\n",
+        "        \"https://huggingface.co/spaces/badayvedat/AudioSep/resolve/main/checkpoint/audiosep_base_4M_steps.ckpt\",\n",
+        "        checkpoints_dir / \"audiosep_base_4M_steps.ckpt\"\n",
+        "    ),\n",
+        "    (\n",
+        "        \"https://huggingface.co/spaces/badayvedat/AudioSep/resolve/main/checkpoint/music_speech_audioset_epoch_15_esc_89.98.pt\",\n",
+        "        checkpoints_dir / \"music_speech_audioset_epoch_15_esc_89.98.pt\"\n",
+        "    )\n",
+        ")\n",
+        "\n",
+        "for model_url, model_path in models:\n",
+        "    if not model_path.exists():\n",
+        "        !wget {model_url} -O {model_path}"
+      ]
+    },
+    {
+      "cell_type": "code",
+      "execution_count": null,
+      "metadata": {
+        "id": "3uDrzCQyY58h"
+      },
+      "outputs": [],
+      "source": [
+        "!wget \"https://audio-agi.github.io/Separate-Anything-You-Describe/demos/exp31_water drops_mixture.wav\""
+      ]
+    },
+    {
+      "cell_type": "code",
+      "execution_count": null,
+      "metadata": {
+        "id": "0nr77CGXTwO1"
+      },
+      "outputs": [],
+      "source": [
+        "import torch\n",
+        "from pipeline import build_audiosep, inference\n",
+        "\n",
+        "device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')\n",
+        "\n",
+        "model = build_audiosep(\n",
+        "    config_yaml='config/audiosep_base.yaml',\n",
+        "    checkpoint_path=str(models[0][1]),\n",
+        "    device=device)\n",
+        "\n",
+        "audio_file = 'exp31_water drops_mixture.wav'\n",
+        "text = 'water drops'\n",
+        "output_file='separated_audio.wav'\n",
+        "\n",
+        "# AudioSep processes the audio at 32 kHz sampling rate\n",
+        "inference(model, audio_file, text, output_file, device)"
+      ]
+    },
+    {
+      "cell_type": "code",
+      "execution_count": null,
+      "metadata": {
+        "id": "kssOe0pbPSWp"
+      },
+      "outputs": [],
+      "source": [
+        "print(f\"The separated audio is saved to: '{output_file}' file.\")"
+      ]
+    },
+    {
+      "cell_type": "code",
+      "execution_count": null,
+      "metadata": {
+        "id": "sl35U3dAR6KN"
+      },
+      "outputs": [],
+      "source": []
+    }
+  ],
+  "metadata": {
+    "colab": {
+      "provenance": []
+    },
+    "kernelspec": {
+      "display_name": "Python 3",
+      "name": "python3"
+    },
+    "language_info": {
+      "name": "python"
+    }
+  },
+  "nbformat": 4,
+  "nbformat_minor": 0
+}
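Note on the notebook's download cell: the skip-if-present loop around `!wget` can probably be written in plain Python as well, which makes it usable outside Colab. The sketch below is not part of this commit; `encode_url` and `fetch_missing` are hypothetical helper names, and the only behavior taken from the notebook is "download each (url, path) pair unless the path already exists". The URL encoding step is there because the demo file name contains a space.

```python
from pathlib import Path
from urllib.parse import quote, urlsplit, urlunsplit
from urllib.request import urlretrieve


def encode_url(url: str) -> str:
    """Percent-encode the path part of a URL (a space becomes %20).

    Assumes the URL is not already percent-encoded.
    """
    parts = urlsplit(url)
    return urlunsplit(parts._replace(path=quote(parts.path)))


def fetch_missing(models, download=urlretrieve):
    """Download every (url, path) pair whose target file does not exist yet.

    Returns the list of paths that were actually fetched.
    """
    fetched = []
    for url, path in models:
        path = Path(path)
        if path.exists():
            continue  # same skip rule as the notebook cell
        path.parent.mkdir(parents=True, exist_ok=True)
        download(encode_url(url), str(path))
        fetched.append(path)
    return fetched
```

Passing the notebook's `models` tuple to `fetch_missing` would mirror the original cell; the `download` parameter exists only so the function can be exercised without network access.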

CONTRIBUTING.md

ADDED

@@ -0,0 +1,92 @@
+# 🎵 Contributing to AudioSep
+
+Welcome to the AudioSep repository, where your contributions can harmonize the world of audio separation. To ensure a harmonious and organized collaboration, please follow the contribution guidelines outlined below.
+
+## **Submitting Contributions**
+
+To contribute to this project, please adhere to the following steps:
+
+### **1. Choose or Create an Issue**
+
+- Start by reviewing the existing issues to identify areas where your contributions can make a significant impact.
+- If you have ideas for new features, enhancements, or bug fixes, feel free to create a new issue to propose your contributions. Provide comprehensive details for clarity.
+
+### **2. Fork the Repository**
+
+- To initiate your contribution, fork the primary repository by clicking the "Fork" button. This will create a copy of the repository in your personal GitHub account.
+
+### **3. Clone Your Forked Repository**
+
+- Clone your forked repository to your local development environment using the following command:
+
+```bash
+git clone https://github.com/your-username/AudioSep.git
+```
+
+### **4. Set Up the Upstream Remote**
+
+- Maintain a reference to the primary project by adding it as the upstream remote:
+
+```bash
+cd AudioSep
+git remote add upstream https://github.com/Audio-AGI/AudioSep
+git remote -v
+```
+
+### **5. Create a New Branch**
+
+- Before starting your contribution, establish a new branch dedicated to your specific task:
+
+```bash
+git checkout -b my-contribution
+```
+
+## **Working on Your Contribution**
+
+Now that your development environment is ready and a new branch is established, you can start working on your contribution. Please ensure you adhere to the following guidelines:
+
+### **6. Make Changes**
+
+- Implement the necessary changes, including code additions, enhancements, or bug fixes. Ensure your contributions are well-structured, documented, and aligned with the project's objectives.
+
+### **7. Commit Your Changes**
+
+- Commit your changes using informative commit messages that clearly convey the purpose of your contributions:
+
+```bash
+git commit -m "Add a descriptive message here"
+```
+
+### **8. Push Your Changes**
+
+- Push the committed changes to your remote repository on GitHub:
+
+```bash
+git push origin my-contribution
+```
+
+### **9. Create a Pull Request**
+
+- Visit your repository on GitHub and click the "New Pull Request" button to initiate a pull request from your branch to the primary repository.
+
+### **10. Await Review**
+
+- Your pull request will undergo review, and feedback will be provided by the project maintainers or fellow contributors. Be prepared to address any suggested changes or refinements.
+
+## **Community Engagement**
+
+While contributing, please consider engaging with the community in the following ways:
+
+### **11. Join Discussions**
+
+- Participate in discussions related to audio separation techniques and their applications. Share your insights, experiences, and expertise in the audio field.
+
+### **12. Share Ideas**
+
+- If you have innovative ideas for advancing the project or optimizing audio separation, such as new algorithms or research findings, feel free to open issues to initiate productive discussions.
+
+## **Acknowledgment**
+
+We appreciate your dedication to the world of audio separation. Your contributions play a crucial role in harmonizing audio and improving the listening experience for all. If you have questions or require assistance, please don't hesitate to contact the project maintainers.
+
+Thank you for your valuable contributions, and we eagerly anticipate collaborating with you on AudioSep! 🎶🙌

Dockerfile

ADDED

@@ -0,0 +1,22 @@
+FROM python:3.10.11
+
+# Copy the current directory contents into the container at .
+COPY . .
+
+# Set the working directory to /
+WORKDIR /
+
+# Install requirements.txt
+RUN pip install --no-cache-dir --upgrade -r /requirements.txt
+
+RUN useradd -m -u 1000 user
+USER user
+ENV HOME=/home/user \
+    PATH=/home/user/.local/bin:$PATH
+
+WORKDIR $HOME/app
+
+COPY --chown=user . $HOME/app
+
+# Start the FastAPI app on port 7860, the default port expected by Spaces
+CMD ["uvicorn", "server:app", "--host", "0.0.0.0", "--port", "7860"]

LICENSE

ADDED

@@ -0,0 +1,21 @@
+MIT License
+
+Copyright (c) Xubo Liu
+
+Permission is hereby granted, free of charge, to any person obtaining a copy
+of this software and associated documentation files (the "Software"), to deal
+in the Software without restriction, including without limitation the rights
+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
+copies of the Software, and to permit persons to whom the Software is
+furnished to do so, subject to the following conditions:
+
+The above copyright notice and this permission notice shall be included in all
+copies or substantial portions of the Software.
+
+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
+SOFTWARE

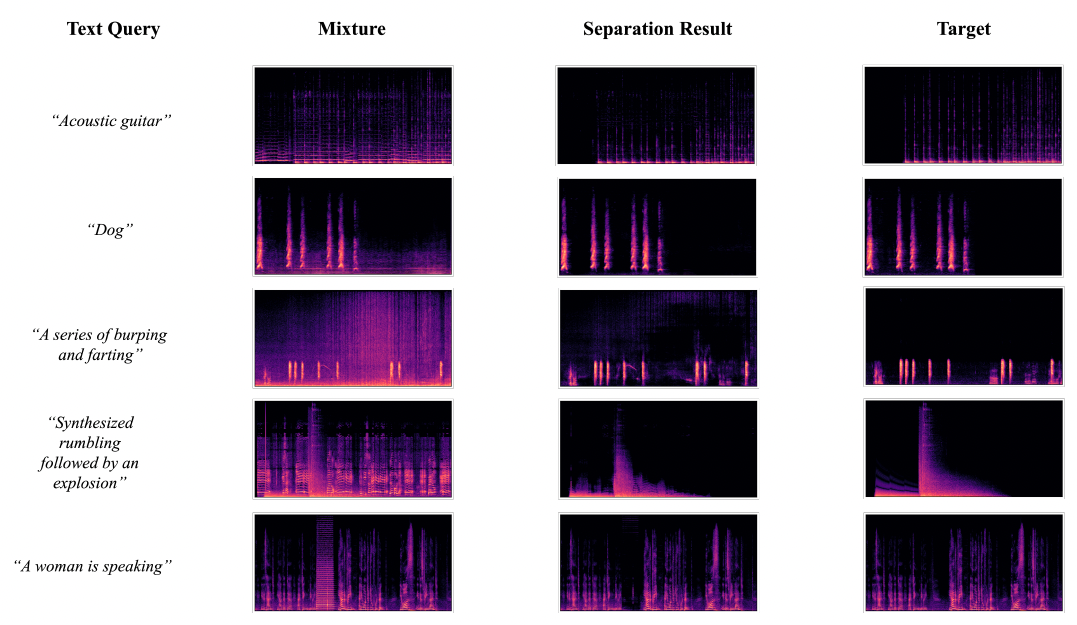

assets/results.png

ADDED

|

benchmark.py

ADDED

@@ -0,0 +1,116 @@
+import os
+from tqdm import tqdm
+import numpy as np
+from evaluation.evaluate_audioset import AudioSetEvaluator
+from evaluation.evaluate_audiocaps import AudioCapsEvaluator
+from evaluation.evaluate_vggsound import VGGSoundEvaluator
+from evaluation.evaluate_music import MUSICEvaluator
+from evaluation.evaluate_esc50 import ESC50Evaluator
+from evaluation.evaluate_clotho import ClothoEvaluator
+from models.clap_encoder import CLAP_Encoder
+
+from utils import (
+    load_ss_model,
+    calculate_sdr,
+    calculate_sisdr,
+    parse_yaml,
+    get_mean_sdr_from_dict,
+)
+
+def eval(checkpoint_path, config_yaml='config/audiosep_base.yaml'):
+
+    log_dir = 'eval_logs'
+    os.makedirs(log_dir, exist_ok=True)
+
+    device = "cuda"
+
+    configs = parse_yaml(config_yaml)
+
+    # AudioSet Evaluators
+    audioset_evaluator = AudioSetEvaluator()
+    # AudioCaps Evaluator
+    audiocaps_evaluator = AudioCapsEvaluator()
+    # VGGSound+ Evaluator
+    vggsound_evaluator = VGGSoundEvaluator()
+    # Clotho Evaluator
+    clotho_evaluator = ClothoEvaluator()
+    # MUSIC Evaluator
+    music_evaluator = MUSICEvaluator()
+    # ESC-50 Evaluator
+    esc50_evaluator = ESC50Evaluator()
+
+    # Load model
+    query_encoder = CLAP_Encoder().eval()
+
+    pl_model = load_ss_model(
+        configs=configs,
+        checkpoint_path=checkpoint_path,
+        query_encoder=query_encoder
+    ).to(device)
+
+    print(f'------- Start Evaluation -------')
+
+    # evaluation on Clotho
+    SISDR, SDRi = clotho_evaluator(pl_model)
+    msg_clotho = "Clotho Avg SDRi: {:.3f}, SISDR: {:.3f}".format(SDRi, SISDR)
+    print(msg_clotho)
+
+    # evaluation on VGGSound+ (YAN)
+    SISDR, SDRi = vggsound_evaluator(pl_model)
+    msg_vgg = "VGGSound Avg SDRi: {:.3f}, SISDR: {:.3f}".format(SDRi, SISDR)
+    print(msg_vgg)
+
+    # evaluation on MUSIC
+    SISDR, SDRi = music_evaluator(pl_model)
+    msg_music = "MUSIC Avg SDRi: {:.3f}, SISDR: {:.3f}".format(SDRi, SISDR)
+    print(msg_music)
+
+    # evaluation on ESC-50
+    SISDR, SDRi = esc50_evaluator(pl_model)
+    msg_esc50 = "ESC-50 Avg SDRi: {:.3f}, SISDR: {:.3f}".format(SDRi, SISDR)
+    print(msg_esc50)
+
+    # evaluation on AudioSet
+    stats_dict = audioset_evaluator(pl_model=pl_model)
+    median_sdris = {}
+    median_sisdrs = {}
+
+    for class_id in range(527):
+        median_sdris[class_id] = np.nanmedian(stats_dict["sdris_dict"][class_id])
+        median_sisdrs[class_id] = np.nanmedian(stats_dict["sisdrs_dict"][class_id])
+
+    SDRi = get_mean_sdr_from_dict(median_sdris)
+    SISDR = get_mean_sdr_from_dict(median_sisdrs)
+    msg_audioset = "AudioSet Avg SDRi: {:.3f}, SISDR: {:.3f}".format(SDRi, SISDR)
+    print(msg_audioset)
+
+    # evaluation on AudioCaps
+    SISDR, SDRi = audiocaps_evaluator(pl_model)
+    msg_audiocaps = "AudioCaps Avg SDRi: {:.3f}, SISDR: {:.3f}".format(SDRi, SISDR)
+    print(msg_audiocaps)
+
+    # evaluation on Clotho
+    SISDR, SDRi = clotho_evaluator(pl_model)
+    msg_clotho = "Clotho Avg SDRi: {:.3f}, SISDR: {:.3f}".format(SDRi, SISDR)
+    print(msg_clotho)
+
+    msgs = [msg_audioset, msg_vgg, msg_audiocaps, msg_clotho, msg_music, msg_esc50]
+
+    # open file in write mode
+    log_path = os.path.join(log_dir, 'eval_results.txt')
+    with open(log_path, 'w') as fp:
+        for msg in msgs:
+            fp.write(msg + '\n')
+    print(f'Eval log is written to {log_path} ...')
+    print('------------------------- Done ---------------------------')
+
+
+if __name__ == '__main__':
+    eval(checkpoint_path='checkpoint/audiosep_base.ckpt')
+
+
+
+
+
+
+
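Note on the metrics reported by `benchmark.py`: the script prints SDRi and SI-SDR per dataset, and for AudioSet it takes a per-class median before averaging over classes. The helpers it imports (`calculate_sdr`, `calculate_sisdr`, `get_mean_sdr_from_dict`) are not shown in this view, so the following is only a dependency-free sketch using the standard textbook definitions; all function names here are hypothetical and the real `utils` implementations may differ (for example in how NaN-only classes are treated).

```python
import math
import statistics


def _energy(x):
    return sum(v * v for v in x)


def sdr(ref, est, eps=1e-10):
    """Signal-to-distortion ratio in dB: 10*log10(|ref|^2 / |ref - est|^2)."""
    noise = [r - e for r, e in zip(ref, est)]
    return 10.0 * math.log10((_energy(ref) + eps) / (_energy(noise) + eps))


def si_sdr(ref, est, eps=1e-10):
    """Scale-invariant SDR: project est onto ref before measuring the error."""
    alpha = sum(r * e for r, e in zip(ref, est)) / (_energy(ref) + eps)
    target = [alpha * r for r in ref]
    noise = [e - t for e, t in zip(est, target)]
    return 10.0 * math.log10((_energy(target) + eps) / (_energy(noise) + eps))


def sdr_improvement(ref, est, mixture):
    """SDRi: how much the separated output improves on the raw mixture."""
    return sdr(ref, est) - sdr(ref, mixture)


def mean_of_class_medians(per_class):
    """Median per class (ignoring NaN), then mean over classes that have data."""
    medians = []
    for values in per_class.values():
        valid = [v for v in values if not math.isnan(v)]
        if valid:
            medians.append(statistics.median(valid))
    return sum(medians) / len(medians) if medians else float("nan")
```

Taking the median inside each class before the mean keeps a few badly separated clips from dominating a class score, which appears to be the intent of the `np.nanmedian` loop over the 527 AudioSet classes.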

callbacks/base.py

ADDED

@@ -0,0 +1,35 @@
+import os
+import lightning.pytorch as pl
+from lightning.pytorch.utilities import rank_zero_only
+
+
+class CheckpointEveryNSteps(pl.Callback):
+    def __init__(
+        self,
+        checkpoints_dir,
+        save_step_frequency,
+    ) -> None:
+        r"""Save a checkpoint every N steps.
+
+        Args:
+            checkpoints_dir (str): directory to save checkpoints
+            save_step_frequency (int): save checkpoint every N step
+        """
+
+        self.checkpoints_dir = checkpoints_dir
+        self.save_step_frequency = save_step_frequency
+
+    @rank_zero_only
+    def on_train_batch_end(self, *args, **kwargs) -> None:
+        r"""Save a checkpoint every N steps."""
+
+        trainer = args[0]
+        global_step = trainer.global_step
+
+        if global_step == 1 or global_step % self.save_step_frequency == 0:
+
+            ckpt_path = os.path.join(
+                self.checkpoints_dir,
+                "step={}.ckpt".format(global_step))
+            trainer.save_checkpoint(ckpt_path)
+            print("Save checkpoint to {}".format(ckpt_path))
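Note on the save condition in `CheckpointEveryNSteps`: the callback writes a checkpoint at step 1 and then at every multiple of `save_step_frequency`. The standalone sketch below isolates that predicate so the resulting schedule is easy to inspect; `should_save` and `saved_steps` are hypothetical names, and the enumeration starts at step 1 on the assumption that `global_step` is at least 1 by the time `on_train_batch_end` fires.

```python
def should_save(global_step: int, save_step_frequency: int) -> bool:
    """Same condition as the callback: first step, or a multiple of N."""
    return global_step == 1 or global_step % save_step_frequency == 0


def saved_steps(total_steps: int, save_step_frequency: int) -> list:
    """List every step in 1..total_steps at which a checkpoint is written."""
    return [
        step
        for step in range(1, total_steps + 1)
        if should_save(step, save_step_frequency)
    ]
```

With the `save_step_frequency: 20000` value from `config/audiosep_base.yaml`, the early step-1 checkpoint mostly serves as a quick check that saving works before the first long interval elapses.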

checkpoint/audiosep_base_4M_steps.ckpt

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:f8cda01bfd0ebd141eef45d41db7a3ada23a56568465840d3cff04b8010ce82c
+size 1264844076

checkpoint/music_speech_audioset_epoch_15_esc_89.98.pt

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:51c68f12f9d7ea25fdaaccf741ec7f81e93ee594455410f3bca4f47f88d8e006
+size 2352471003

cog.yaml

ADDED

@@ -0,0 +1,21 @@
+# Configuration for Cog ⚙️
+# Reference: https://github.com/replicate/cog/blob/main/docs/yaml.md
+
+build:
+  gpu: true
+  python_version: "3.11"
+  python_packages:
+    - "torchlibrosa==0.1.0"
+    - "lightning==2.1.0"
+    - "torch==2.0.1"
+    - "transformers==4.28.1"
+    - "braceexpand==0.1.7"
+    - "webdataset==0.2.60"
+    - "soundfile==0.12.1"
+    - "torchaudio==2.0.2"
+    - "torchvision==0.15.2"
+    - "h5py==3.10.0"
+    - "ftfy==6.1.1"
+    - "pandas==2.1.1"
+    - "wget==3.2"
+predict: "predict.py:Predictor"

config/audiosep_base.yaml

ADDED

@@ -0,0 +1,41 @@
+---
+task_name: AudioSep
+
+data:
+  datafiles:
+    - 'datafiles/template.json'
+
+  sampling_rate: 32000
+  segment_seconds: 5
+  loudness_norm:
+    lower_db: -10
+    higher_db: 10
+  max_mix_num: 2
+
+model:
+  query_net: CLAP
+  condition_size: 512
+  model_type: ResUNet30
+  input_channels: 1
+  output_channels: 1
+  resume_checkpoint: ""
+  use_text_ratio: 1.0
+
+train:
+  optimizer:
+    optimizer_type: AdamW
+    learning_rate: 1e-3
+    warm_up_steps: 10000
+    reduce_lr_steps: 1000000
+    lr_lambda_type: constant_warm_up
+  num_nodes: 1
+  num_workers: 6
+  loss_type: l1_wav
+  sync_batchnorm: True
+  batch_size_per_device: 12
+  steps_per_epoch: 10000 # Every 10000 steps is called an `epoch`.
+  evaluate_step_frequency: 10000 # Evaluate every #evaluate_step_frequency steps.
+  save_step_frequency: 20000 # Save every #save_step_frequency steps.
+  early_stop_steps: 10000001
+  random_seed: 1234
+
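Note on reading `config/audiosep_base.yaml`: a few derived quantities follow directly from the values above, and the `constant_warm_up` schedule name suggests a linear ramp followed by a constant rate. The scheduler code is not part of this view, so `constant_warm_up_scale` below is an assumption about its shape, not the repository's implementation. The explicit `float(...)` is deliberate: PyYAML, as far as I know, loads `1e-3` (no decimal point) as a string rather than a float.

```python
def constant_warm_up_scale(step: int, warm_up_steps: int) -> float:
    """Assumed schedule: linear ramp from 0 to 1, then constant at 1."""
    if 0 <= step < warm_up_steps:
        return step / warm_up_steps
    return 1.0


# Values copied from config/audiosep_base.yaml.
sampling_rate = 32000
segment_seconds = 5
base_lr = float("1e-3")  # convert explicitly in case the loader yields a string
warm_up_steps = 10000
steps_per_epoch = 10000
save_step_frequency = 20000

# Each training segment is sampling_rate * segment_seconds samples long.
segment_samples = sampling_rate * segment_seconds

# A checkpoint is written once every this many "epochs" (as the config defines them).
epochs_per_checkpoint = save_step_frequency // steps_per_epoch


def lr_at(step: int) -> float:
    """Learning rate at a given step under the assumed warm-up schedule."""
    return base_lr * constant_warm_up_scale(step, warm_up_steps)
```

Under these assumptions the rate reaches the full `1e-3` at step 10000, which coincides with the end of the first configured `epoch`.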

data/audiotext_dataset.py

ADDED

|

@@ -0,0 +1,91 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import json

|

| 2 |

+

import random

|

| 3 |

+

import torch

|

| 4 |

+

import torchaudio

|

| 5 |

+

from torch.utils.data import Dataset

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

class AudioTextDataset(Dataset):

|

| 9 |

+

"""Can sample data from audio-text databases

|

| 10 |

+

Params:

|

| 11 |

+

sampling_rate: audio sampling rate

|

| 12 |

+

max_clip_len: max length (seconds) of audio clip to be sampled

|

| 13 |

+

"""

|

| 14 |

+

def __init__(

|

| 15 |

+

self,

|

| 16 |

+

datafiles=[''],

|

| 17 |

+

sampling_rate=32000,

|

| 18 |

+

max_clip_len=5,

|

| 19 |

+

):

|

| 20 |

+

all_data_json = []

|

| 21 |

+

for datafile in datafiles:

|

| 22 |

+

with open(datafile, 'r') as fp:

|

| 23 |

+

data_json = json.load(fp)['data']

|

| 24 |

+

all_data_json.extend(data_json)

|

| 25 |

+

self.all_data_json = all_data_json

|

| 26 |

+

|

| 27 |

+

self.sampling_rate = sampling_rate

|

| 28 |

+

self.max_length = max_clip_len * sampling_rate

|

| 29 |

+

|

| 30 |

+

def __len__(self):

|

| 31 |

+

return len(self.all_data_json)

|

| 32 |

+

|

| 33 |

+

def _cut_or_randomcrop(self, waveform):

|

| 34 |

+

# waveform: [1, samples]

|

| 35 |

+

# random crop

|

| 36 |

+

if waveform.size(1) > self.max_length:

|

| 37 |

+

random_idx = random.randint(0, waveform.size(1)-self.max_length)

|

| 38 |

+

waveform = waveform[:, random_idx:random_idx+self.max_length]

|

| 39 |

+

else:

|

| 40 |

+

temp_wav = torch.zeros(1, self.max_length)

|

| 41 |

+

temp_wav[:, 0:waveform.size(1)] = waveform

|

| 42 |

+

waveform = temp_wav

|

| 43 |

+

|

| 44 |

+

assert waveform.size(1) == self.max_length, \

|

| 45 |

+

f"number of audio samples is {waveform.size(1)}"

|

| 46 |

+

|

| 47 |

+

return waveform

|

| 48 |

+

|

| 49 |

+

def _read_audio(self, index):

|

| 50 |

+

try:

|

| 51 |

+

audio_path = self.all_data_json[index]['wav']

|

| 52 |

+

audio_data, audio_rate = torchaudio.load(audio_path, channels_first=True)

|

| 53 |

+

text = self.all_data_json[index]['caption']

|

| 54 |

+

|

| 55 |

+

# drop short utterance

|

| 56 |

+

if audio_data.size(1) < self.sampling_rate * 1:

|

| 57 |

+

raise Exception(f'{audio_path} is too short, drop it ...')

|

| 58 |

+

|

| 59 |

+

return text, audio_data, audio_rate

|

| 60 |

+

|

| 61 |

+

except Exception as e:

|

| 62 |

+

print(f'error: {e} occurs, when loading {audio_path}')

|

| 63 |

+

random_index = random.randint(0, len(self.all_data_json)-1)

|

| 64 |

+

return self._read_audio(index=random_index)

|

| 65 |

+

|

| 66 |

+

def __getitem__(self, index):

|

| 67 |

+

# create a audio tensor

|

| 68 |

+

text, audio_data, audio_rate = self._read_audio(index)

|

| 69 |

+

audio_len = audio_data.shape[1] / audio_rate

|

| 70 |

+

# convert stero to single channel

|

| 71 |

+

if audio_data.shape[0] > 1:

|

| 72 |

+

# audio_data: [samples]

|

| 73 |

+

audio_data = (audio_data[0] + audio_data[1]) / 2

|

| 74 |

+

else:

|

| 75 |

+

audio_data = audio_data.squeeze(0)

|

| 76 |

+

|

| 77 |

+

# resample audio clip

|

| 78 |

+

if audio_rate != self.sampling_rate:

|

| 79 |

+

audio_data = torchaudio.functional.resample(audio_data, orig_freq=audio_rate, new_freq=self.sampling_rate)

|

| 80 |

+

|

| 81 |

+

audio_data = audio_data.unsqueeze(0)

|

| 82 |

+

|

| 83 |

+

audio_data = self._cut_or_randomcrop(audio_data)

|

| 84 |

+

|

| 85 |

+

data_dict = {

|

| 86 |

+

'text': text,

|

| 87 |

+

'waveform': audio_data,

|

| 88 |

+

'modality': 'audio_text'

|

| 89 |

+

}

|

| 90 |

+

|

| 91 |

+

return data_dict

|
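The crop-or-pad step in `_cut_or_randomcrop` above can be tried in isolation. The following is a minimal sketch, assuming a `[1, samples]` float tensor as input; the free function `cut_or_random_crop` and its `max_length` argument are hypothetical stand-ins for the method and `self.max_length`, not part of the commit.

```python
import random

import torch


def cut_or_random_crop(waveform: torch.Tensor, max_length: int) -> torch.Tensor:
    """Return a [1, max_length] tensor: random crop if too long, zero-pad if too short."""
    num_samples = waveform.size(1)
    if num_samples > max_length:
        # Pick a random window of exactly max_length samples.
        start = random.randint(0, num_samples - max_length)
        return waveform[:, start:start + max_length]
    # Copy the clip into the front of a zero buffer (right padding).
    padded = torch.zeros(1, max_length, dtype=waveform.dtype)
    padded[:, :num_samples] = waveform
    return padded


short_clip = torch.ones(1, 3)
long_clip = torch.arange(10, dtype=torch.float32).unsqueeze(0)
padded = cut_or_random_crop(short_clip, max_length=5)
cropped = cut_or_random_crop(long_clip, max_length=5)
```

Both results have shape `[1, 5]`, which is the invariant the `assert` in the method checks.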

data/datamodules.py

ADDED

|

@@ -0,0 +1,122 @@

|


| 1 |

+

from typing import Dict, List, Optional, NoReturn

|

| 2 |

+

import torch

|

| 3 |

+

import lightning.pytorch as pl

|

| 4 |

+

from torch.utils.data import DataLoader

|

| 5 |

+

from data.audiotext_dataset import AudioTextDataset

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

class DataModule(pl.LightningDataModule):

|

| 9 |

+

def __init__(

|

| 10 |

+

self,

|

| 11 |

+

train_dataset: object,

|

| 12 |

+

batch_size: int,

|

| 13 |

+

num_workers: int

|

| 14 |

+

):

|

| 15 |

+

r"""Data module. To get one batch of data:

|

| 16 |

+

|

| 17 |

+

.. code-block:: python

|

| 18 |

+

|

| 19 |

+

data_module.setup()

|

| 20 |

+

|

| 21 |

+

for batch_data_dict in data_module.train_dataloader():

|

| 22 |

+

print(batch_data_dict.keys())

|

| 23 |

+

break

|

| 24 |

+

|

| 25 |

+

Args:

|

| 26 |

+

train_dataset: Dataset object

|

| 27 |

+

batch_size: int

|

| 28 |

+

num_workers: int

|

| 29 |

+

|

| 30 |

+

"""

|

| 31 |

+

super().__init__()

|

| 32 |

+

self._train_dataset = train_dataset

|

| 33 |

+

self.num_workers = num_workers

|

| 34 |

+

self.batch_size = batch_size

|

| 35 |

+

self.collate_fn = collate_fn

|

| 36 |

+

|

| 37 |

+

|

| 38 |

+

def prepare_data(self):

|

| 39 |

+

# download, split, etc...

|

| 40 |

+

# only called on 1 GPU/TPU in distributed

|

| 41 |

+

pass

|

| 42 |

+

|

| 43 |

+

def setup(self, stage: Optional[str] = None) -> None:

|

| 44 |

+

r"""called on every device."""

|

| 45 |

+

|

| 46 |

+

# make assignments here (val/train/test split)

|

| 47 |

+

# called on every process in DDP

|

| 48 |

+

|

| 49 |

+

# SegmentSampler is used for selecting segments for training.

|

| 50 |

+

# On multiple devices, each SegmentSampler samples a part of mini-batch

|

| 51 |

+

# data.

|

| 52 |

+

self.train_dataset = self._train_dataset

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

def train_dataloader(self) -> torch.utils.data.DataLoader:

|

| 56 |

+

r"""Get train loader."""

|

| 57 |

+

train_loader = DataLoader(

|

| 58 |

+

dataset=self.train_dataset,

|

| 59 |

+

batch_size=self.batch_size,

|

| 60 |

+

collate_fn=self.collate_fn,

|

| 61 |

+

num_workers=self.num_workers,

|

| 62 |

+

pin_memory=True,

|

| 63 |

+

persistent_workers=False,

|

| 64 |

+

shuffle=True

|

| 65 |

+

)

|

| 66 |

+

|

| 67 |

+

return train_loader

|

| 68 |

+

|

| 69 |

+

def val_dataloader(self):

|

| 70 |

+

# val_split = Dataset(...)

|

| 71 |

+

# return DataLoader(val_split)

|

| 72 |

+

pass

|

| 73 |

+

|

| 74 |

+

def test_dataloader(self):

|

| 75 |

+

# test_split = Dataset(...)

|

| 76 |

+

# return DataLoader(test_split)

|

| 77 |

+

pass

|

| 78 |

+

|

| 79 |

+

def teardown(self, stage: Optional[str] = None):

|

| 80 |

+

# clean up after fit or test

|

| 81 |

+

# called on every process in DDP

|

| 82 |

+

pass

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

def collate_fn(list_data_dict):

|

| 86 |

+

r"""Collate mini-batch data to inputs and targets for training.

|

| 87 |

+

|

| 88 |

+

Args:

|

| 89 |

+

list_data_dict: e.g., [

|

| 90 |

+

{

|

| 91 |

+

'text': 'a sound of dog',

|

| 92 |

+

'waveform': (1, samples),

|

| 93 |

+

'modality': 'audio_text'

|

| 94 |

+

}

|

| 95 |

+

...

|

| 96 |

+

]

|

| 97 |

+

Returns:

|

| 98 |

+

data_dict: e.g.

|

| 99 |

+

'audio_text': {

|

| 100 |

+

'text': ['a sound of dog', ...]

|

| 101 |

+

'waveform': (batch_size, 1, samples)

|

| 102 |

+

}

|

| 103 |

+

"""

|

| 104 |

+

|

| 105 |

+

at_list_data_dict = [data_dict for data_dict in list_data_dict if data_dict['modality']=='audio_text']

|

| 106 |

+

|

| 107 |

+

at_data_dict = {}

|

| 108 |

+

|

| 109 |

+

if len(at_list_data_dict) > 0:

|

| 110 |

+

for key in at_list_data_dict[0].keys():

|

| 111 |

+

at_data_dict[key] = [item[key] for item in at_list_data_dict]

|

| 112 |

+

if key == 'waveform':

|

| 113 |

+

at_data_dict[key] = torch.stack(at_data_dict[key])

|

| 114 |

+

elif key == 'text':

|

| 115 |

+

at_data_dict[key] = [text for text in at_data_dict[key]]

|

| 116 |

+

|

| 117 |

+

|

| 118 |

+

data_dict = {

|

| 119 |

+

'audio_text': at_data_dict

|

| 120 |

+

}

|

| 121 |

+

|

| 122 |

+

return data_dict

|
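To see what `collate_fn` above produces, it helps to run the same grouping on two toy items. This is a minimal sketch under the assumption that every item carries the `text`, `waveform`, and `modality` keys shown in the docstring; `collate_audio_text` is a hypothetical rewrite for illustration, not the function from the commit.

```python
import torch


def collate_audio_text(items):
    """Group 'audio_text' items into per-key lists and stack their waveforms."""
    selected = [item for item in items if item["modality"] == "audio_text"]
    batch = {}
    if selected:
        for key in selected[0]:
            values = [item[key] for item in selected]
            # Waveforms share a length after crop/pad, so they can be stacked.
            batch[key] = torch.stack(values) if key == "waveform" else values
    return {"audio_text": batch}


items = [
    {"text": "a sound of dog", "waveform": torch.zeros(1, 8), "modality": "audio_text"},
    {"text": "a sound of rain", "waveform": torch.ones(1, 8), "modality": "audio_text"},
]
batch = collate_audio_text(items)
```

The stacked `waveform` entry has shape `(batch_size, 1, samples)`, here `(2, 1, 8)`, while `text` stays a plain Python list.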

data/waveform_mixers.py

ADDED

|

@@ -0,0 +1,127 @@

|


| 1 |

+

import random

|

| 2 |

+


|

| 3 |

+

import numpy as np

|

| 4 |

+

import torch

|

| 5 |

+

import torch.nn as nn

|

| 6 |

+

import pyloudnorm as pyln

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

class SegmentMixer(nn.Module):

|

| 10 |

+

def __init__(self, max_mix_num, lower_db, higher_db):

|

| 11 |

+

super(SegmentMixer, self).__init__()

|

| 12 |

+

|

| 13 |

+

self.max_mix_num = max_mix_num

|

| 14 |

+

self.loudness_param = {

|

| 15 |

+

'lower_db': lower_db,

|

| 16 |

+

'higher_db': higher_db,

|

| 17 |

+

}

|

| 18 |

+

|

| 19 |

+

def forward(self, waveforms):

|

| 20 |

+

|

| 21 |

+

batch_size = waveforms.shape[0]

|

| 22 |

+

|

| 23 |

+

data_dict = {

|

| 24 |

+

'segment': [],

|

| 25 |

+

'mixture': [],

|

| 26 |

+

}

|

| 27 |

+

|

| 28 |

+

for n in range(0, batch_size):

|

| 29 |

+

|

| 30 |

+

segment = waveforms[n].clone()

|

| 31 |

+

|

| 32 |

+

# create zero tensors as the background template

|

| 33 |

+

noise = torch.zeros_like(segment)

|

| 34 |

+

|

| 35 |

+

mix_num = random.randint(2, self.max_mix_num)

|

| 36 |

+

assert mix_num >= 2

|

| 37 |

+

|

| 38 |

+

for i in range(1, mix_num):

|

| 39 |

+

next_segment = waveforms[(n + i) % batch_size]

|

| 40 |

+

rescaled_next_segment = dynamic_loudnorm(audio=next_segment, reference=segment, **self.loudness_param)

|

| 41 |

+

noise += rescaled_next_segment

|

| 42 |

+

|

| 43 |

+

# randomly normalize background noise

|

| 44 |

+

noise = dynamic_loudnorm(audio=noise, reference=segment, **self.loudness_param)

|

| 45 |

+

|

| 46 |

+

# create audio mixture

|

| 47 |

+

mixture = segment + noise

|

| 48 |

+

|

| 49 |

+

# declipping if need be

|

| 50 |

+

max_value = torch.max(torch.abs(mixture))

|

| 51 |

+

if max_value > 1:

|

| 52 |

+

segment *= 0.9 / max_value

|

| 53 |

+

mixture *= 0.9 / max_value

|

| 54 |

+

|

| 55 |

+

data_dict['segment'].append(segment)

|

| 56 |

+

data_dict['mixture'].append(mixture)

|

| 57 |

+

|

| 58 |

+

for key in data_dict.keys():

|

| 59 |

+

data_dict[key] = torch.stack(data_dict[key], dim=0)

|

| 60 |

+

|

| 61 |

+

# return data_dict

|

| 62 |

+

return data_dict['mixture'], data_dict['segment']

|

| 63 |

+

|

| 64 |

+

|

| 65 |

+

def rescale_to_match_energy(segment1, segment2):

|

| 66 |

+

|

| 67 |

+

ratio = get_energy_ratio(segment1, segment2)

|

| 68 |

+

rescaled_segment1 = segment1 / ratio

|

| 69 |

+

return rescaled_segment1

|

| 70 |

+

|

| 71 |

+

|

| 72 |

+

def get_energy(x):

|

| 73 |

+

return torch.mean(x ** 2)

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

def get_energy_ratio(segment1, segment2):

|

| 77 |

+

|

| 78 |

+

energy1 = get_energy(segment1)

|

| 79 |

+

energy2 = max(get_energy(segment2), 1e-10)

|

| 80 |

+

ratio = (energy1 / energy2) ** 0.5

|

| 81 |

+

ratio = torch.clamp(ratio, 0.02, 50)

|

| 82 |

+

return ratio

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

def dynamic_loudnorm(audio, reference, lower_db=-10, higher_db=10):

|

| 86 |

+

rescaled_audio = rescale_to_match_energy(audio, reference)

|

| 87 |

+

|

| 88 |

+

delta_loudness = random.randint(lower_db, higher_db)

|

| 89 |

+

|

| 90 |

+

gain = np.power(10.0, delta_loudness / 20.0)

|

| 91 |

+

|

| 92 |

+

return gain * rescaled_audio

|

| 93 |

+

|

| 94 |

+

|

| 95 |

+

def torch_to_numpy(tensor):

|

| 96 |

+

"""Convert a PyTorch tensor to a NumPy array."""

|

| 97 |

+

if isinstance(tensor, torch.Tensor):

|

| 98 |

+

return tensor.detach().cpu().numpy()

|

| 99 |

+

else:

|

| 100 |

+

raise ValueError("Input must be a PyTorch tensor.")

|

| 101 |

+

|

| 102 |

+

|

| 103 |

+

def numpy_to_torch(array):

|

| 104 |

+

"""Convert a NumPy array to a PyTorch tensor."""

|

| 105 |

+

if isinstance(array, np.ndarray):

|

| 106 |

+

return torch.from_numpy(array)

|

| 107 |

+

else:

|

| 108 |

+

raise ValueError("Input must be a NumPy array.")

|

| 109 |

+

|

| 110 |

+

|

| 111 |

+

# deprecated

|

| 112 |

+

def random_loudness_norm(audio, lower_db=-35, higher_db=-15, sr=32000):

|

| 113 |

+

device = audio.device

|

| 114 |

+

audio = torch_to_numpy(audio.squeeze(0))

|

| 115 |

+

# randomly select a norm volume

|

| 116 |

+

norm_vol = random.randint(lower_db, higher_db)

|

| 117 |

+

|

| 118 |

+

# measure the loudness first

|

| 119 |

+

meter = pyln.Meter(sr) # create BS.1770 meter

|

| 120 |

+

loudness = meter.integrated_loudness(audio)

|

| 121 |

+

# loudness normalize audio

|

| 122 |

+

normalized_audio = pyln.normalize.loudness(audio, loudness, norm_vol)

|

| 123 |

+

|

| 124 |

+

normalized_audio = numpy_to_torch(normalized_audio).unsqueeze(0)

|

| 125 |

+

|

| 126 |

+

return normalized_audio.to(device)

|

| 127 |

+

|
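The arithmetic behind `dynamic_loudnorm` above is: scale the audio so its energy matches the reference (with the ratio clamped to `[0.02, 50]`), then apply a gain of `10 ** (delta / 20)`. The sketch below reproduces that on plain Python lists. It assumes a fixed `delta_db` so the result is deterministic, whereas the commit draws it with `random.randint(lower_db, higher_db)`; the names `rms` and `match_and_offset` are hypothetical.

```python
import math


def rms(samples):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def match_and_offset(audio, reference, delta_db):
    """Scale `audio` to the RMS of `reference`, then apply a gain of delta_db decibels."""
    energy_audio = sum(s * s for s in audio) / len(audio)
    energy_ref = max(sum(s * s for s in reference) / len(reference), 1e-10)
    # Same clamp as get_energy_ratio: limits the correction to roughly +/- 34 dB.
    ratio = min(max(math.sqrt(energy_audio / energy_ref), 0.02), 50.0)
    gain = 10.0 ** (delta_db / 20.0)
    return [gain * s / ratio for s in audio]


reference = [0.5, -0.5, 0.5, -0.5]
audio = [0.1, -0.1, 0.1, -0.1]
louder = match_and_offset(audio, reference, delta_db=6)
level_db = 20.0 * math.log10(rms(louder) / rms(reference))
```

When the clamp is not hit, `level_db` equals `delta_db`, so the rescaled signal sits exactly that many decibels above or below the reference segment.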

datafiles/template.json

ADDED

|

@@ -0,0 +1,8 @@

|


| 1 |

+

{

|

| 2 |

+

"data": [

|

| 3 |

+

{

|

| 4 |

+

"wav": "path_to_audio_file",

|

| 5 |

+

"caption": "textual_descriptions"

|

| 6 |

+

}

|

| 7 |

+

]

|

| 8 |

+

}

|
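A datafile in the template format above can be checked before training with a few lines of standard-library code. This is a sketch only: how the dataset class actually reads these files is not shown in this part of the diff, so `load_entries` is a hypothetical helper, and the path and caption values are made-up examples.

```python
import json

# Hypothetical datafile content following the template's layout.
datafile_text = '{"data": [{"wav": "example.wav", "caption": "a sound of dog"}]}'


def load_entries(text):
    """Parse a datafile and check that each entry has 'wav' and 'caption' keys."""
    entries = json.loads(text)["data"]
    for i, entry in enumerate(entries):
        missing = {"wav", "caption"} - entry.keys()
        if missing:
            raise ValueError(f"entry {i} is missing keys: {sorted(missing)}")
    return entries


entries = load_entries(datafile_text)
```

Each returned entry exposes the same `'wav'` and `'caption'` keys that `_read_audio` looks up.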

environment.yml

ADDED

|

@@ -0,0 +1,326 @@

|
