Upload 35 files

- audio_detection/__init__.py +0 -0

- audio_detection/audio_infer/__init__.py +0 -0

- audio_detection/audio_infer/__pycache__/__init__.cpython-38.pyc +0 -0

- audio_detection/audio_infer/metadata/black_list/groundtruth_weak_label_evaluation_set.csv +1350 -0

- audio_detection/audio_infer/metadata/black_list/groundtruth_weak_label_testing_set.csv +606 -0

- audio_detection/audio_infer/metadata/class_labels_indices.csv +528 -0

- audio_detection/audio_infer/pytorch/__pycache__/models.cpython-38.pyc +0 -0

- audio_detection/audio_infer/pytorch/__pycache__/pytorch_utils.cpython-38.pyc +0 -0

- audio_detection/audio_infer/pytorch/evaluate.py +42 -0

- audio_detection/audio_infer/pytorch/finetune_template.py +127 -0

- audio_detection/audio_infer/pytorch/inference.py +206 -0

- audio_detection/audio_infer/pytorch/losses.py +14 -0

- audio_detection/audio_infer/pytorch/main.py +378 -0

- audio_detection/audio_infer/pytorch/models.py +951 -0

- audio_detection/audio_infer/pytorch/pytorch_utils.py +251 -0

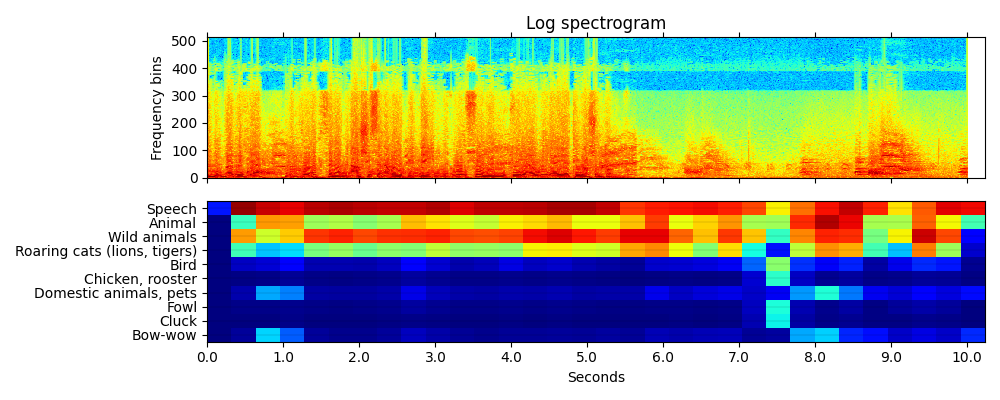

- audio_detection/audio_infer/results/YDlWd7Wmdi1E.png +0 -0

- audio_detection/audio_infer/useful_ckpts/audio_detection.pth +3 -0

- audio_detection/audio_infer/utils/__pycache__/config.cpython-38.pyc +0 -0

- audio_detection/audio_infer/utils/config.py +94 -0

- audio_detection/audio_infer/utils/crash.py +12 -0

- audio_detection/audio_infer/utils/create_black_list.py +64 -0

- audio_detection/audio_infer/utils/create_indexes.py +126 -0

- audio_detection/audio_infer/utils/data_generator.py +421 -0

- audio_detection/audio_infer/utils/dataset.py +224 -0

- audio_detection/audio_infer/utils/plot_for_paper.py +565 -0

- audio_detection/audio_infer/utils/plot_statistics.py +0 -0

- audio_detection/audio_infer/utils/utilities.py +172 -0

- audio_detection/target_sound_detection/src/__pycache__/models.cpython-38.pyc +0 -0

- audio_detection/target_sound_detection/src/__pycache__/utils.cpython-38.pyc +0 -0

- audio_detection/target_sound_detection/src/models.py +1288 -0

- audio_detection/target_sound_detection/src/utils.py +353 -0

- audio_detection/target_sound_detection/useful_ckpts/tsd/ref_mel.pth +3 -0

- audio_detection/target_sound_detection/useful_ckpts/tsd/run_config.pth +3 -0

- audio_detection/target_sound_detection/useful_ckpts/tsd/run_model_7_loss=-0.0724.pt +3 -0

- audio_detection/target_sound_detection/useful_ckpts/tsd/text_emb.pth +3 -0

audio_detection/__init__.py

ADDED

|

File without changes

|

audio_detection/audio_infer/__init__.py

ADDED

|

File without changes

|

audio_detection/audio_infer/__pycache__/__init__.cpython-38.pyc

ADDED

|

Binary file (171 Bytes). View file

|

audio_detection/audio_infer/metadata/black_list/groundtruth_weak_label_evaluation_set.csv

ADDED

|

@@ -0,0 +1,1350 @@

| 1 |

+

-JMT0mK0Dbg_30.000_40.000.wav 30.000 40.000 Train horn

|

| 2 |

+

3ACjUf9QpAQ_30.000_40.000.wav 30.000 40.000 Train horn

|

| 3 |

+

3S2-TODd__k_90.000_100.000.wav 90.000 100.000 Train horn

|

| 4 |

+

3YJewEC-NWo_30.000_40.000.wav 30.000 40.000 Train horn

|

| 5 |

+

3jXAh3V2FO8_30.000_40.000.wav 30.000 40.000 Train horn

|

| 6 |

+

53oq_Otm_XI_30.000_40.000.wav 30.000 40.000 Train horn

|

| 7 |

+

8IaInXpdd9M_0.000_10.000.wav 0.000 10.000 Train horn

|

| 8 |

+

8nU1aVscJec_30.000_40.000.wav 30.000 40.000 Train horn

|

| 9 |

+

9LQEZJPNVpw_30.000_40.000.wav 30.000 40.000 Train horn

|

| 10 |

+

AHom7lBbtoY_30.000_40.000.wav 30.000 40.000 Train horn

|

| 11 |

+

Ag_zT74ZGNc_9.000_19.000.wav 9.000 19.000 Train horn

|

| 12 |

+

BQpa8whzwAE_30.000_40.000.wav 30.000 40.000 Train horn

|

| 13 |

+

CCX_4cW_SAU_0.000_10.000.wav 0.000 10.000 Train horn

|

| 14 |

+

CLIdVCUO_Vw_30.000_40.000.wav 30.000 40.000 Train horn

|

| 15 |

+

D_nXtMgbPNY_30.000_40.000.wav 30.000 40.000 Train horn

|

| 16 |

+

GFQnh84kNwU_30.000_40.000.wav 30.000 40.000 Train horn

|

| 17 |

+

I4qODX0fypE_30.000_40.000.wav 30.000 40.000 Train horn

|

| 18 |

+

IdqEbjujFb8_30.000_40.000.wav 30.000 40.000 Train horn

|

| 19 |

+

L3a132_uApg_50.000_60.000.wav 50.000 60.000 Train horn

|

| 20 |

+

LzcNa3HvD7c_30.000_40.000.wav 30.000 40.000 Train horn

|

| 21 |

+

MCYY8tJsnfY_7.000_17.000.wav 7.000 17.000 Train horn

|

| 22 |

+

MPSf7dJpV5w_30.000_40.000.wav 30.000 40.000 Train horn

|

| 23 |

+

NdCr5IDnkxc_30.000_40.000.wav 30.000 40.000 Train horn

|

| 24 |

+

P54KKbTA_TE_0.000_7.000.wav 0.000 7.000 Train horn

|

| 25 |

+

PJUy17bXlhc_40.000_50.000.wav 40.000 50.000 Train horn

|

| 26 |

+

QrAoRSA13bM_30.000_40.000.wav 30.000 40.000 Train horn

|

| 27 |

+

R_Lpb-51Kl4_30.000_40.000.wav 30.000 40.000 Train horn

|

| 28 |

+

Rq-22Cycrpg_30.000_40.000.wav 30.000 40.000 Train horn

|

| 29 |

+

TBjrN1aMRrM_30.000_40.000.wav 30.000 40.000 Train horn

|

| 30 |

+

XAUtk9lwzU8_30.000_40.000.wav 30.000 40.000 Train horn

|

| 31 |

+

XW8pSKLyr0o_20.000_30.000.wav 20.000 30.000 Train horn

|

| 32 |

+

Y10I9JSvJuQ_30.000_40.000.wav 30.000 40.000 Train horn

|

| 33 |

+

Y_jwEflLthg_190.000_200.000.wav 190.000 200.000 Train horn

|

| 34 |

+

YilfKdY7w6Y_60.000_70.000.wav 60.000 70.000 Train horn

|

| 35 |

+

ZcTI8fQgEZE_240.000_250.000.wav 240.000 250.000 Train horn

|

| 36 |

+

_8MvhMlbwiE_40.000_50.000.wav 40.000 50.000 Train horn

|

| 37 |

+

_dkeW6lqmq4_30.000_40.000.wav 30.000 40.000 Train horn

|

| 38 |

+

aXsUHAKbyLs_30.000_40.000.wav 30.000 40.000 Train horn

|

| 39 |

+

arevYmB0qGg_30.000_40.000.wav 30.000 40.000 Train horn

|

| 40 |

+

d1o334I5X_k_30.000_40.000.wav 30.000 40.000 Train horn

|

| 41 |

+

dSzZWgbJ378_30.000_40.000.wav 30.000 40.000 Train horn

|

| 42 |

+

ePVb5Upev8k_40.000_50.000.wav 40.000 50.000 Train horn

|

| 43 |

+

g4cA-ifQc70_30.000_40.000.wav 30.000 40.000 Train horn

|

| 44 |

+

g9JVq7wfDIo_30.000_40.000.wav 30.000 40.000 Train horn

|

| 45 |

+

gTFCK9TuLOQ_30.000_40.000.wav 30.000 40.000 Train horn

|

| 46 |

+

hYqzr_rIIAw_30.000_40.000.wav 30.000 40.000 Train horn

|

| 47 |

+

iZgzRfa-xPQ_30.000_40.000.wav 30.000 40.000 Train horn

|

| 48 |

+

k8H8rn4NaSM_0.000_10.000.wav 0.000 10.000 Train horn

|

| 49 |

+

lKQ-I_P7TEM_20.000_30.000.wav 20.000 30.000 Train horn

|

| 50 |

+

nfY_zkJceDw_30.000_40.000.wav 30.000 40.000 Train horn

|

| 51 |

+

pW5SI1ZKUpA_30.000_40.000.wav 30.000 40.000 Train horn

|

| 52 |

+

pxmrmtEnROk_30.000_40.000.wav 30.000 40.000 Train horn

|

| 53 |

+

q7zzKHFWGkg_30.000_40.000.wav 30.000 40.000 Train horn

|

| 54 |

+

qu8vVFWKszA_30.000_40.000.wav 30.000 40.000 Train horn

|

| 55 |

+

stdjjG6Y5IU_30.000_40.000.wav 30.000 40.000 Train horn

|

| 56 |

+

tdRMxc4UWRk_30.000_40.000.wav 30.000 40.000 Train horn

|

| 57 |

+

tu-cxDG2mW8_0.000_10.000.wav 0.000 10.000 Train horn

|

| 58 |

+

txXSE7kgrc8_30.000_40.000.wav 30.000 40.000 Train horn

|

| 59 |

+

xabrKa79prM_30.000_40.000.wav 30.000 40.000 Train horn

|

| 60 |

+

yBVxtq9k8Sg_0.000_10.000.wav 0.000 10.000 Train horn

|

| 61 |

+

-WoudI3gGvk_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 62 |

+

0_gci63CtFY_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 63 |

+

2-h8MRSRvEg_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 64 |

+

3NX4HaOVBoo_240.000_250.000.wav 240.000 250.000 Air horn, truck horn

|

| 65 |

+

9NPKQDaNCRk_0.000_6.000.wav 0.000 6.000 Air horn, truck horn

|

| 66 |

+

9ct4w4aYWdc_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 67 |

+

9l9QXgsJSfo_120.000_130.000.wav 120.000 130.000 Air horn, truck horn

|

| 68 |

+

CN0Bi4MDpA4_20.000_30.000.wav 20.000 30.000 Air horn, truck horn

|

| 69 |

+

CU2MyVM_B48_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 70 |

+

Cg-DWc9nPfQ_90.000_100.000.wav 90.000 100.000 Air horn, truck horn

|

| 71 |

+

D62L3husEa0_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 72 |

+

GO2zKyMtBV4_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 73 |

+

Ge_KWS-0098_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 74 |

+

Hk7HqLBHWng_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 75 |

+

IpyingiCwV8_0.000_3.000.wav 0.000 3.000 Air horn, truck horn

|

| 76 |

+

Isuh9pOuH6I_300.000_310.000.wav 300.000 310.000 Air horn, truck horn

|

| 77 |

+

IuTfMfzkr5Y_120.000_130.000.wav 120.000 130.000 Air horn, truck horn

|

| 78 |

+

MFxsgcZZtFs_10.000_20.000.wav 10.000 20.000 Air horn, truck horn

|

| 79 |

+

N3osL4QmOL8_49.000_59.000.wav 49.000 59.000 Air horn, truck horn

|

| 80 |

+

NOZsDTFLm7M_0.000_9.000.wav 0.000 9.000 Air horn, truck horn

|

| 81 |

+

OjVY3oM1jEU_40.000_50.000.wav 40.000 50.000 Air horn, truck horn

|

| 82 |

+

PNaLTW50fxM_60.000_70.000.wav 60.000 70.000 Air horn, truck horn

|

| 83 |

+

TYLZuBBu8ms_0.000_10.000.wav 0.000 10.000 Air horn, truck horn

|

| 84 |

+

UdHR1P_NIbo_110.000_120.000.wav 110.000 120.000 Air horn, truck horn

|

| 85 |

+

YilfKdY7w6Y_60.000_70.000.wav 60.000 70.000 Air horn, truck horn

|

| 86 |

+

Yt4ZWNjvJOY_50.000_60.000.wav 50.000 60.000 Air horn, truck horn

|

| 87 |

+

Z5M3fGT3Xjk_60.000_70.000.wav 60.000 70.000 Air horn, truck horn

|

| 88 |

+

ZauRsP1uH74_12.000_22.000.wav 12.000 22.000 Air horn, truck horn

|

| 89 |

+

a_6CZ2JaEuc_0.000_2.000.wav 0.000 2.000 Air horn, truck horn

|

| 90 |

+

b7m5Kt5U7Vc_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 91 |

+

bIObkrK06rk_15.000_25.000.wav 15.000 25.000 Air horn, truck horn

|

| 92 |

+

cdrjKqyDrak_420.000_430.000.wav 420.000 430.000 Air horn, truck horn

|

| 93 |

+

ckSYn557ZyE_20.000_30.000.wav 20.000 30.000 Air horn, truck horn

|

| 94 |

+

cs-RPPsg_ks_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 95 |

+

ctsq33oUBT8_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 96 |

+

eCFUwyU9ZWA_9.000_19.000.wav 9.000 19.000 Air horn, truck horn

|

| 97 |

+

ePVb5Upev8k_40.000_50.000.wav 40.000 50.000 Air horn, truck horn

|

| 98 |

+

fHaQPHCjyfA_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 99 |

+

fOVsAMJ3Yms_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 100 |

+

g4cA-ifQc70_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 101 |

+

gjlo4evwjlE_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 102 |

+

i9VjpIbM3iE_410.000_420.000.wav 410.000 420.000 Air horn, truck horn

|

| 103 |

+

ieZVo7W3BQ4_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 104 |

+

ii87iO6JboA_10.000_20.000.wav 10.000 20.000 Air horn, truck horn

|

| 105 |

+

jko48cNdvFA_80.000_90.000.wav 80.000 90.000 Air horn, truck horn

|

| 106 |

+

kJuvA2zmrnY_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 107 |

+

kUrb38hMwPs_0.000_10.000.wav 0.000 10.000 Air horn, truck horn

|

| 108 |

+

km_hVyma2vo_0.000_10.000.wav 0.000 10.000 Air horn, truck horn

|

| 109 |

+

m1e9aOwRiDQ_0.000_9.000.wav 0.000 9.000 Air horn, truck horn

|

| 110 |

+

mQJcObz1k_E_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 111 |

+

pk75WDyNZKc_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 112 |

+

rhUfN81puDI_0.000_10.000.wav 0.000 10.000 Air horn, truck horn

|

| 113 |

+

suuYwAifIAQ_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 114 |

+

wDdEZ46B-tM_460.000_470.000.wav 460.000 470.000 Air horn, truck horn

|

| 115 |

+

wHISHmuP58s_80.000_90.000.wav 80.000 90.000 Air horn, truck horn

|

| 116 |

+

xwqIKDz1bT4_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 117 |

+

y4Ko6VNiqB0_30.000_40.000.wav 30.000 40.000 Air horn, truck horn

|

| 118 |

+

yhcmPrU3QSk_61.000_71.000.wav 61.000 71.000 Air horn, truck horn

|

| 119 |

+

3FWHjjZGT9U_80.000_90.000.wav 80.000 90.000 Car alarm

|

| 120 |

+

3YChVhqW42E_130.000_140.000.wav 130.000 140.000 Car alarm

|

| 121 |

+

3YRkin3bMlQ_170.000_180.000.wav 170.000 180.000 Car alarm

|

| 122 |

+

4APBvMmKubU_10.000_20.000.wav 10.000 20.000 Car alarm

|

| 123 |

+

4JDah6Ckr9k_5.000_15.000.wav 5.000 15.000 Car alarm

|

| 124 |

+

5hL1uGb4sas_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 125 |

+

969Zfj4IoPk_20.000_30.000.wav 20.000 30.000 Car alarm

|

| 126 |

+

AyfuBDN3Vdw_40.000_50.000.wav 40.000 50.000 Car alarm

|

| 127 |

+

B-ZqhRg3km4_60.000_70.000.wav 60.000 70.000 Car alarm

|

| 128 |

+

BDnwA3AaclE_10.000_20.000.wav 10.000 20.000 Car alarm

|

| 129 |

+

ES-rjFfuxq4_120.000_130.000.wav 120.000 130.000 Car alarm

|

| 130 |

+

EWbZq5ruCpg_0.000_10.000.wav 0.000 10.000 Car alarm

|

| 131 |

+

F50h9HiyC3k_40.000_50.000.wav 40.000 50.000 Car alarm

|

| 132 |

+

F5AP8kQvogM_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 133 |

+

FKJuDOAumSk_20.000_30.000.wav 20.000 30.000 Car alarm

|

| 134 |

+

GmbNjZi4xBw_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 135 |

+

H7lOMlND9dc_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 136 |

+

Hu8lxbHYaqg_40.000_50.000.wav 40.000 50.000 Car alarm

|

| 137 |

+

IziTYkSwq9Q_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 138 |

+

JcO2TTtiplA_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 139 |

+

KKx7dWRg8s8_8.000_18.000.wav 8.000 18.000 Car alarm

|

| 140 |

+

Kf9Kr69mwOA_14.000_24.000.wav 14.000 24.000 Car alarm

|

| 141 |

+

L535vIV3ED4_40.000_50.000.wav 40.000 50.000 Car alarm

|

| 142 |

+

LOjT44tFx1A_0.000_10.000.wav 0.000 10.000 Car alarm

|

| 143 |

+

Mxn2FKuNwiI_20.000_30.000.wav 20.000 30.000 Car alarm

|

| 144 |

+

Nkqx09b-xyI_70.000_80.000.wav 70.000 80.000 Car alarm

|

| 145 |

+

QNKo1W1WRbc_22.000_32.000.wav 22.000 32.000 Car alarm

|

| 146 |

+

R0VxYDfjyAU_60.000_70.000.wav 60.000 70.000 Car alarm

|

| 147 |

+

TJ58vMpSy1w_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 148 |

+

ToU1kRagUjY_0.000_10.000.wav 0.000 10.000 Car alarm

|

| 149 |

+

TrQGIZqrW0s_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 150 |

+

ULFhHR0OLSE_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 151 |

+

ULS3ffQkCW4_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 152 |

+

U_9NuNORYQM_1.000_11.000.wav 1.000 11.000 Car alarm

|

| 153 |

+

UkCEuwYUW8c_110.000_120.000.wav 110.000 120.000 Car alarm

|

| 154 |

+

Wak5QxsS-QU_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 155 |

+

XzE7mp3pVik_0.000_10.000.wav 0.000 10.000 Car alarm

|

| 156 |

+

Y-4dtrP-RNo_7.000_17.000.wav 7.000 17.000 Car alarm

|

| 157 |

+

Zltlj0fDeS4_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 158 |

+

cB1jkzgH2es_150.000_160.000.wav 150.000 160.000 Car alarm

|

| 159 |

+

eIMjkADTWzA_60.000_70.000.wav 60.000 70.000 Car alarm

|

| 160 |

+

eL7s5CoW0UA_0.000_7.000.wav 0.000 7.000 Car alarm

|

| 161 |

+

i9VjpIbM3iE_410.000_420.000.wav 410.000 420.000 Car alarm

|

| 162 |

+

iWl-5LNURFc_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 163 |

+

iX34nDCq9NU_10.000_20.000.wav 10.000 20.000 Car alarm

|

| 164 |

+

ii87iO6JboA_10.000_20.000.wav 10.000 20.000 Car alarm

|

| 165 |

+

l6_h_YHuTbY_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 166 |

+

lhedRVb85Fk_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 167 |

+

monelE7hnwI_20.000_30.000.wav 20.000 30.000 Car alarm

|

| 168 |

+

o2CmtHNUrXg_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 169 |

+

pXX6cK4xtiY_11.000_21.000.wav 11.000 21.000 Car alarm

|

| 170 |

+

stnVta2ip9g_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 171 |

+

uvuVg9Cl0n0_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 172 |

+

vF2zXcbADUk_20.000_30.000.wav 20.000 30.000 Car alarm

|

| 173 |

+

vN7dJyt-nj0_20.000_30.000.wav 20.000 30.000 Car alarm

|

| 174 |

+

w8Md65mE5Vc_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 175 |

+

ySqfMcFk5LM_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 176 |

+

ysNK5RVF3Zw_0.000_10.000.wav 0.000 10.000 Car alarm

|

| 177 |

+

za8KPcQ0dTw_30.000_40.000.wav 30.000 40.000 Car alarm

|

| 178 |

+

-2sE5CH8Wb8_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 179 |

+

-fJsZm3YRc0_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 180 |

+

-oSzD8P2BtU_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 181 |

+

-pzwalZ0ub0_5.000_15.000.wav 5.000 15.000 Reversing beeps

|

| 182 |

+

-t-htrAtNvM_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 183 |

+

-zNEcuo28oE_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 184 |

+

077aWlQn6XI_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 185 |

+

0O-gZoirpRA_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 186 |

+

10aF24rMeu0_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 187 |

+

1P5FFxXLSpY_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 188 |

+

1n_s2Gb5R1Q_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 189 |

+

2HZcxlRs-hg_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 190 |

+

2Jpg_KvJWL0_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 191 |

+

2WTk_j_fivY_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 192 |

+

38F6eeIR-s0_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 193 |

+

3xh2kScw64U_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 194 |

+

4MIHbR4QZhE_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 195 |

+

4Tpy1lsfcSM_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 196 |

+

4XMY2IvVSf0_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 197 |

+

4ep09nZl3LA_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 198 |

+

4t1VqRz4w2g_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 199 |

+

4tKvAMmAUMM_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 200 |

+

5-x2pk3YYAs_11.000_21.000.wav 11.000 21.000 Reversing beeps

|

| 201 |

+

5DW8WjxxCag_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 202 |

+

5DjZHCumLfs_11.000_21.000.wav 11.000 21.000 Reversing beeps

|

| 203 |

+

5V0xKS-FGMk_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 204 |

+

5fLzQegwHUg_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 205 |

+

6Y8bKS6KLeE_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 206 |

+

6xEHP-C-ZuU_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 207 |

+

6yyToq9cW9A_60.000_70.000.wav 60.000 70.000 Reversing beeps

|

| 208 |

+

7Gua0-UrKIw_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 209 |

+

7nglQSmcjAk_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 210 |

+

81DteAPIhoE_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 211 |

+

96a4smrM_30_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 212 |

+

9EsgN-WS2qY_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 213 |

+

9OcAwC8y-eQ_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 214 |

+

9Ti98L4PRCo_17.000_27.000.wav 17.000 27.000 Reversing beeps

|

| 215 |

+

9yhMtJ50sys_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 216 |

+

A9KMqwqLboE_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 217 |

+

AFwmMFq_xlc_390.000_400.000.wav 390.000 400.000 Reversing beeps

|

| 218 |

+

AvhBRiwWJU4_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 219 |

+

CL5vkiMs2c0_10.000_20.000.wav 10.000 20.000 Reversing beeps

|

| 220 |

+

DcU6AzN7imA_210.000_220.000.wav 210.000 220.000 Reversing beeps

|

| 221 |

+

ISBJKY8hwnM_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 222 |

+

LA5TekLaIPI_10.000_20.000.wav 10.000 20.000 Reversing beeps

|

| 223 |

+

NqzZbJJl3E4_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 224 |

+

PSt0xAYgf4g_0.000_10.000.wav 0.000 10.000 Reversing beeps

|

| 225 |

+

Q1CMSV81_ws_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 226 |

+

_gG0KNGD47M_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 227 |

+

ckt7YEGcSoY_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 228 |

+

eIkUuCRE_0U_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 229 |

+

kH6fFjIZkB0_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 230 |

+

mCJ0aqIygWE_24.000_34.000.wav 24.000 34.000 Reversing beeps

|

| 231 |

+

nFqf1vflJaI_350.000_360.000.wav 350.000 360.000 Reversing beeps

|

| 232 |

+

nMaSkwx6cHE_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 233 |

+

oHKTmTLEy68_11.000_21.000.wav 11.000 21.000 Reversing beeps

|

| 234 |

+

saPU2JNoytU_0.000_10.000.wav 0.000 10.000 Reversing beeps

|

| 235 |

+

tQd0vFueRKs_30.000_40.000.wav 30.000 40.000 Reversing beeps

|

| 236 |

+

vzP6soELj2Q_0.000_10.000.wav 0.000 10.000 Reversing beeps

|

| 237 |

+

0x82_HySIVU_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 238 |

+

1IQdvfm9SDY_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 239 |

+

1_hGvbEiYAs_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 240 |

+

26CM8IXODG4_2.000_12.000.wav 2.000 12.000 Bicycle

|

| 241 |

+

2f7Ad-XpbnY_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 242 |

+

3-a8i_MEUl8_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 243 |

+

7KiTXYwaD04_7.000_17.000.wav 7.000 17.000 Bicycle

|

| 244 |

+

7gkjn-LLInI_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 245 |

+

84flVacRHUI_21.000_31.000.wav 21.000 31.000 Bicycle

|

| 246 |

+

9VziOIkNXsE_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 247 |

+

ANofTuuN0W0_160.000_170.000.wav 160.000 170.000 Bicycle

|

| 248 |

+

B6n0op0sLPA_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 249 |

+

D4_zTwsCRds_60.000_70.000.wav 60.000 70.000 Bicycle

|

| 250 |

+

DEs_Sp9S1Nw_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 251 |

+

GjsxrMRRdfQ_3.000_13.000.wav 3.000 13.000 Bicycle

|

| 252 |

+

GkpUU3VX4wQ_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 253 |

+

H9HNXYxRmv8_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 254 |

+

HPWRKwrs-rY_370.000_380.000.wav 370.000 380.000 Bicycle

|

| 255 |

+

HrQxbNO5jXU_6.000_16.000.wav 6.000 16.000 Bicycle

|

| 256 |

+

IYaEZkAO0LU_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 257 |

+

Idzfy0XbZRo_7.000_17.000.wav 7.000 17.000 Bicycle

|

| 258 |

+

Iigfz_GeXVs_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 259 |

+

JWCtQ_94YoQ_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 260 |

+

JXmBrD4b4EI_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 261 |

+

LSZPNwZex9s_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 262 |

+

M5kwg1kx4q0_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 263 |

+

NrR1wmCpqAk_12.000_22.000.wav 12.000 22.000 Bicycle

|

| 264 |

+

O1_Rw2dHb1I_2.000_12.000.wav 2.000 12.000 Bicycle

|

| 265 |

+

OEN0TySl1Jw_10.000_20.000.wav 10.000 20.000 Bicycle

|

| 266 |

+

PF7uY9ydMYc_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 267 |

+

SDl0tWf9Q44_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 268 |

+

SkXXjcw9sJI_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 269 |

+

Ssa1m5Mnllw_0.000_9.000.wav 0.000 9.000 Bicycle

|

| 270 |

+

UB-A1oyNyyg_0.000_6.000.wav 0.000 6.000 Bicycle

|

| 271 |

+

UqyvFyQthHo_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 272 |

+

Wg4ik5zZxBc_250.000_260.000.wav 250.000 260.000 Bicycle

|

| 273 |

+

WvquSD2PcCE_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 274 |

+

YIJBuXUi64U_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 275 |

+

aBHdl_TiseI_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 276 |

+

aeHCq6fFkNo_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 277 |

+

amKDjVcs1Vg_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 278 |

+

ehYwty_G2L4_13.000_23.000.wav 13.000 23.000 Bicycle

|

| 279 |

+

jOlVJv7jAHg_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 280 |

+

lGFDQ-ZwUfk_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 281 |

+

lmTHvLGQy3g_50.000_60.000.wav 50.000 60.000 Bicycle

|

| 282 |

+

nNHW3Uxlb-g_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 283 |

+

o98R4ruf8kw_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 284 |

+

oiLHBkHgkAo_0.000_8.000.wav 0.000 8.000 Bicycle

|

| 285 |

+

qL0ESQcaPhM_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 286 |

+

qjz5t9M4YCw_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 287 |

+

qrCWPsqG9vA_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 288 |

+

r06tmeUDgc8_3.000_13.000.wav 3.000 13.000 Bicycle

|

| 289 |

+

sAMjMyCdGOc_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 290 |

+

tKdRlWz-1pg_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 291 |

+

uNpSMpqlkMA_0.000_10.000.wav 0.000 10.000 Bicycle

|

| 292 |

+

vOYj9W7Jsxk_8.000_18.000.wav 8.000 18.000 Bicycle

|

| 293 |

+

xBKrmKdjAIA_0.000_10.000.wav 0.000 10.000 Bicycle

|

| 294 |

+

xfNeZaw4o3U_17.000_27.000.wav 17.000 27.000 Bicycle

|

| 295 |

+

xgiJqbhhU3c_30.000_40.000.wav 30.000 40.000 Bicycle

|

| 296 |

+

0vg9qxNKXOw_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 297 |

+

10YXuv9Go0E_140.000_150.000.wav 140.000 150.000 Skateboard

|

| 298 |

+

3-a8i_MEUl8_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 299 |

+

6kXUG1Zo6VA_0.000_10.000.wav 0.000 10.000 Skateboard

|

| 300 |

+

84fDGWoRtsU_210.000_220.000.wav 210.000 220.000 Skateboard

|

| 301 |

+

8kbHA22EWd0_330.000_340.000.wav 330.000 340.000 Skateboard

|

| 302 |

+

8m-a_6wLTkU_230.000_240.000.wav 230.000 240.000 Skateboard

|

| 303 |

+

9QwaP-cvdeU_360.000_370.000.wav 360.000 370.000 Skateboard

|

| 304 |

+

9ZYj5toEbGA_0.000_10.000.wav 0.000 10.000 Skateboard

|

| 305 |

+

9gkppwB5CXA_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 306 |

+

9hlXgXWXYXQ_0.000_6.000.wav 0.000 6.000 Skateboard

|

| 307 |

+

ALxn5-2bVyI_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 308 |

+

ANPjV_rudog_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 309 |

+

ATAL-_Dblvg_0.000_7.000.wav 0.000 7.000 Skateboard

|

| 310 |

+

An-4jPvUT14_60.000_70.000.wav 60.000 70.000 Skateboard

|

| 311 |

+

BGR0QnX4k6w_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 312 |

+

BlhUt8AJJO8_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 313 |

+

CD7INyI79fM_170.000_180.000.wav 170.000 180.000 Skateboard

|

| 314 |

+

CNcxzB9F-Q8_100.000_110.000.wav 100.000 110.000 Skateboard

|

| 315 |

+

DqOGYyFVnKk_200.000_210.000.wav 200.000 210.000 Skateboard

|

| 316 |

+

E0gBwPTHxqE_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 317 |

+

E3XIdP8kxwg_110.000_120.000.wav 110.000 120.000 Skateboard

|

| 318 |

+

FQZnQhiM41U_0.000_6.000.wav 0.000 6.000 Skateboard

|

| 319 |

+

FRwFfq3Tl1g_310.000_320.000.wav 310.000 320.000 Skateboard

|

| 320 |

+

JJo971B_eDg_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 321 |

+

KXkxqxoCylc_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 322 |

+

L4Z7XkS6CtA_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 323 |

+

LjEqr0Z7xm0_0.000_6.000.wav 0.000 6.000 Skateboard

|

| 324 |

+

MAbDEeLF4cQ_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 325 |

+

MUBbiivNYZs_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 326 |

+

Nq8GyBrTI8Y_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 327 |

+

PPq9QZmV7jc_25.000_35.000.wav 25.000 35.000 Skateboard

|

| 328 |

+

PVgL5wFOKMs_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 329 |

+

Tcq_xAdCMr4_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 330 |

+

UtZofZjccBs_290.000_300.000.wav 290.000 300.000 Skateboard

|

| 331 |

+

VZfrDZhI7BU_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 332 |

+

WxChkRrVOIs_0.000_7.000.wav 0.000 7.000 Skateboard

|

| 333 |

+

YV0noe1sZAs_150.000_160.000.wav 150.000 160.000 Skateboard

|

| 334 |

+

YjScrri_F7U_0.000_10.000.wav 0.000 10.000 Skateboard

|

| 335 |

+

YrGQKTbiG1g_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 336 |

+

ZM67kt6G-d4_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 337 |

+

ZaUaqnLdg6k_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 338 |

+

ZhpkRcAEJzc_3.000_13.000.wav 3.000 13.000 Skateboard

|

| 339 |

+

_43OOP6UEw0_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 340 |

+

_6Fyave4jqA_260.000_270.000.wav 260.000 270.000 Skateboard

|

| 341 |

+

aOoZ0bCoaZw_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 342 |

+

gV6y9L24wWg_0.000_10.000.wav 0.000 10.000 Skateboard

|

| 343 |

+

hHb0Eq1I7Fk_0.000_10.000.wav 0.000 10.000 Skateboard

|

| 344 |

+

lGf_L6i6AZI_20.000_30.000.wav 20.000 30.000 Skateboard

|

| 345 |

+

leOH87itNWM_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 346 |

+

mIkW7mWlnXw_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 347 |

+

qadmKrM0ppo_20.000_30.000.wav 20.000 30.000 Skateboard

|

| 348 |

+

rLUIHCc4b9A_0.000_7.000.wav 0.000 7.000 Skateboard

|

| 349 |

+

u3vBJgEVJvk_0.000_10.000.wav 0.000 10.000 Skateboard

|

| 350 |

+

vHKBrtPDSvA_150.000_160.000.wav 150.000 160.000 Skateboard

|

| 351 |

+

wWmydRt0Z-w_21.000_31.000.wav 21.000 31.000 Skateboard

|

| 352 |

+

xeHt-R5ScmI_0.000_10.000.wav 0.000 10.000 Skateboard

|

| 353 |

+

xqGtIVeeXY4_330.000_340.000.wav 330.000 340.000 Skateboard

|

| 354 |

+

y_lfY0uzmr0_30.000_40.000.wav 30.000 40.000 Skateboard

|

| 355 |

+

02Ak1eIyj3M_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 356 |

+

0N0C0Wbe6AI_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 357 |

+

2-h8MRSRvEg_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 358 |

+

4APBvMmKubU_10.000_20.000.wav 10.000 20.000 Ambulance (siren)

|

| 359 |

+

5RgHBmX2HLw_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 360 |

+

6rXgD5JlYxY_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 361 |

+

7eeN-fXbso8_20.000_30.000.wav 20.000 30.000 Ambulance (siren)

|

| 362 |

+

8Aq2DyLbUBA_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 363 |

+

8qMHvgA9mGw_20.000_30.000.wav 20.000 30.000 Ambulance (siren)

|

| 364 |

+

9CRb-PToaAM_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 365 |

+

AwFuGITwrms_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 366 |

+

BGp9-Ro5h8Y_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 367 |

+

CDrpqsGqfPo_10.000_20.000.wav 10.000 20.000 Ambulance (siren)

|

| 368 |

+

Cc7-P0py1Mc_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 369 |

+

Daqv2F6SEmQ_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 370 |

+

F9Dbcxr-lAI_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 371 |

+

GORjnSWhZeY_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 372 |

+

GgV0yYogTPI_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 373 |

+

H9xQQVv3ElI_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 374 |

+

LNQ7fzfdLiY_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 375 |

+

MEUcv-QM0cQ_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 376 |

+

QWVub6-0jX4_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 377 |

+

R8G5Y0HASxY_60.000_70.000.wav 60.000 70.000 Ambulance (siren)

|

| 378 |

+

RVTKY5KR3ME_20.000_30.000.wav 20.000 30.000 Ambulance (siren)

|

| 379 |

+

Sm0pPvXPA9U_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 380 |

+

VXI3-DI4xNs_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 381 |

+

W8fIlauyJkk_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 382 |

+

ZlS4vIWQMmE_0.000_10.000.wav 0.000 10.000 Ambulance (siren)

|

| 383 |

+

ZxlbI2Rj1VY_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 384 |

+

ZyuX_gMFiss_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 385 |

+

bA8mt0JI0Ko_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 386 |

+

bIU0X1v4SF0_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 387 |

+

cHm1cYBAXMI_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 388 |

+

cR79KnWpiQA_70.000_80.000.wav 70.000 80.000 Ambulance (siren)

|

| 389 |

+

dPcw4R5lczw_500.000_510.000.wav 500.000 510.000 Ambulance (siren)

|

| 390 |

+

epwDz5WBkvc_80.000_90.000.wav 80.000 90.000 Ambulance (siren)

|

| 391 |

+

fHaQPHCjyfA_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 392 |

+

gw9pYEG2Zb0_20.000_30.000.wav 20.000 30.000 Ambulance (siren)

|

| 393 |

+

iEX8L_oEbsU_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 394 |

+

iM-U56fTTOQ_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 395 |

+

iSnWMz4FUAg_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 396 |

+

kJuvA2zmrnY_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 397 |

+

kSjvt2Z_pBo_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 398 |

+

ke35yF1LHs4_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 399 |

+

lqGtL8sUo_g_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 400 |

+

mAfPu0meA_Y_20.000_30.000.wav 20.000 30.000 Ambulance (siren)

|

| 401 |

+

mlS9LLiMIG8_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 402 |

+

oPR7tUEUptk_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 403 |

+

qsHc2X1toLs_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 404 |

+

rCQykaL8Hy4_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 405 |

+

rhUfN81puDI_0.000_10.000.wav 0.000 10.000 Ambulance (siren)

|

| 406 |

+

s0iddDFzL9s_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 407 |

+

tcKlq7_cOkw_8.000_18.000.wav 8.000 18.000 Ambulance (siren)

|

| 408 |

+

u3yYpMwG4Us_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 409 |

+

vBXPyBiyJG0_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 410 |

+

vVqUvv1SSu8_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 411 |

+

vYKWnuvq2FI_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 412 |

+

ysNK5RVF3Zw_0.000_10.000.wav 0.000 10.000 Ambulance (siren)

|

| 413 |

+

z4B14tAqJ4w_30.000_40.000.wav 30.000 40.000 Ambulance (siren)

|

| 414 |

+

zbiJEml563w_20.000_30.000.wav 20.000 30.000 Ambulance (siren)

|

| 415 |

+

-HxRz4w60-Y_150.000_160.000.wav 150.000 160.000 Fire engine, fire truck (siren)

|

| 416 |

+

-_dElQcyJnA_30.000_40.000.wav 30.000 40.000 Fire engine, fire truck (siren)

|

| 417 |

+

0K1mroXg8bs_9.000_19.000.wav 9.000 19.000 Fire engine, fire truck (siren)

|

| 418 |

+

0SvSNVatkv0_30.000_40.000.wav 30.000 40.000 Fire engine, fire truck (siren)

|

| 419 |

+

2-h8MRSRvEg_30.000_40.000.wav 30.000 40.000 Fire engine, fire truck (siren)

|

| 420 |

+

31WGUPOYS5g_22.000_32.000.wav 22.000 32.000 Fire engine, fire truck (siren)

|

| 421 |

+

3h3_IZWhX0g_30.000_40.000.wav 30.000 40.000 Fire engine, fire truck (siren)

|

| 422 |

+

4APBvMmKubU_10.000_20.000.wav 10.000 20.000 Fire engine, fire truck (siren)

|

| 423 |

+

5fjy_2ajEkg_30.000_40.000.wav 30.000 40.000 Fire engine, fire truck (siren)

|

| 424 |

+

6rXgD5JlYxY_30.000_40.000.wav 30.000 40.000 Fire engine, fire truck (siren)