Update README.md

README.md

CHANGED

@@ -20,16 +20,16 @@ language:

|

|

| 20 |

|

| 21 |

### Overview:

|

| 22 |

|

| 23 |

-

Lamarck-14B

|

| 24 |

|

| 25 |

-

|

| 26 |

|

| 27 |

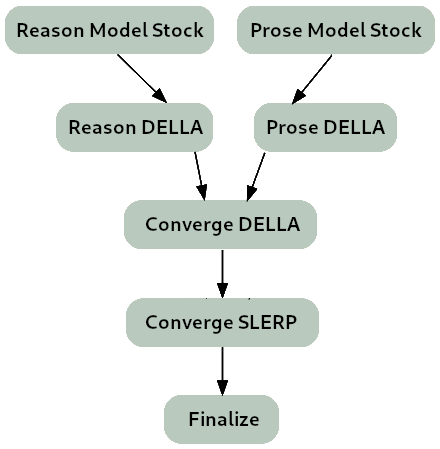

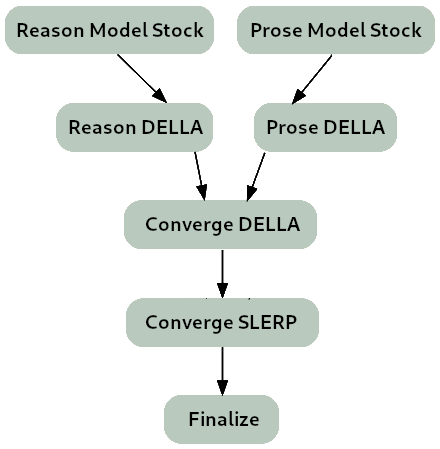

**The merge strategy of Lamarck 0.3 can be summarized as:**

|

| 28 |

|

| 29 |

-

- Two model_stocks

|

| 30 |

-

- For refinement on

|

| 31 |

- For smooth instruction following, a SLERP merged Virtuoso with converged branches.

|

| 32 |

-

- For finalization, a TIES merge.

 ### Overview:

+Lamarck-14B is a carefully designed merge which emphasizes [arcee-ai/Virtuoso-Small](https://huggingface.co/arcee-ai/Virtuoso-Small) in early and finishing layers, and midway features strong influence on reasoning and prose from [CultriX/SeQwence-14B-EvolMerge](http://huggingface.co/CultriX/SeQwence-14B-EvolMerge) especially, but a hefty list of other models as well.

+Its reasoning and prose skills are quite strong. Version 0.3 is the product of a carefully planned and tested sequence of templated merges, produced by a toolchain which wraps around Arcee's mergekit.

 **The merge strategy of Lamarck 0.3 can be summarized as:**

+- Two model_stocks commence specialized branches for reasoning and prose quality.
+- For refinement on both model_stocks, DELLA and SLERP merges re-emphasize selected ancestors.
 - For smooth instruction following, a SLERP merged Virtuoso with converged branches.
+- For finalization and normalization, a TIES merge.
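
The SLERP step and the layer emphasis described in the added lines can be illustrated with a small sketch. This is not the Lamarck toolchain and not mergekit's implementation: `slerp`, `layer_weights`, `merge_layers`, and the toy two-element "layers" are hypothetical names and data, and the U-shaped schedule is only one assumed reading of "early and finishing layers". It shows spherical interpolation between two weight vectors, with the interpolation factor kept near the base model at the first and last layers and shifted toward the other model in the middle.

```python
import math


def slerp(t, a, b, eps=1e-8):
    """Spherical linear interpolation between flat weight vectors a and b.

    t=0 returns a, t=1 returns b. Falls back to plain linear interpolation
    when either vector is near zero or the two are nearly (anti-)parallel,
    because the spherical formula divides by sin(angle).
    """
    linear = [(1 - t) * x + t * y for x, y in zip(a, b)]
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    if norm_a < eps or norm_b < eps:
        return linear
    cos_omega = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    cos_omega = max(-1.0, min(1.0, cos_omega))  # guard against rounding
    omega = math.acos(cos_omega)
    sin_omega = math.sin(omega)
    if abs(sin_omega) < eps:
        return linear
    weight_a = math.sin((1 - t) * omega) / sin_omega
    weight_b = math.sin(t * omega) / sin_omega
    return [weight_a * x + weight_b * y for x, y in zip(a, b)]


def layer_weights(num_layers, edge=0.0, middle=1.0):
    """Hypothetical U-shaped schedule for t across layers.

    Returns `edge` at the first and last layer and `middle` at the center,
    changing linearly in between.
    """
    if num_layers == 1:
        return [edge]
    weights = []
    for i in range(num_layers):
        position = i / (num_layers - 1)              # 0.0 at first, 1.0 at last
        closeness = 1.0 - abs(2.0 * position - 1.0)  # 0 at the ends, 1 mid-stack
        weights.append(edge + (middle - edge) * closeness)
    return weights


def merge_layers(base_layers, other_layers):
    """SLERP each pair of layers, using the per-layer schedule for t."""
    schedule = layer_weights(len(base_layers))
    return [slerp(t, a, b) for t, a, b in zip(schedule, base_layers, other_layers)]


if __name__ == "__main__":
    base = [[1.0, 0.0]] * 5    # toy stand-in for the emphasized base model
    other = [[0.0, 1.0]] * 5   # toy stand-in for a converged branch
    for index, layer in enumerate(merge_layers(base, other)):
        print(index, [round(value, 3) for value in layer])
```

With the default `edge=0.0` and `middle=1.0`, the outermost layers stay identical to the base vectors and the center layer takes the other model's vector. A real merge would choose much less extreme values and operate on full tensors.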
