Training in progress, step 1000

This view is limited to 50 files because it contains too many changes.


- .gitattributes +4 -0

- .gitignore +166 -0

- README.md +16 -0

- added_tokens.json +1609 -0

- computer-vision-study-group/Notebooks/HuggingFace_vision_ecosystem_overview_(June_2022).ipynb +0 -0

- computer-vision-study-group/README.md +15 -0

- computer-vision-study-group/Sessions/Blip2.md +25 -0

- computer-vision-study-group/Sessions/Fiber.md +24 -0

- computer-vision-study-group/Sessions/FlexiViT.md +23 -0

- computer-vision-study-group/Sessions/HFVisionEcosystem.md +10 -0

- computer-vision-study-group/Sessions/HowDoVisionTransformersWork.md +27 -0

- computer-vision-study-group/Sessions/MaskedAutoEncoders.md +24 -0

- computer-vision-study-group/Sessions/NeuralRadianceFields.md +19 -0

- computer-vision-study-group/Sessions/PolarizedSelfAttention.md +14 -0

- computer-vision-study-group/Sessions/SwinTransformer.md +25 -0

- config.json +52 -0

- gradio-blocks/README.md +123 -0

- huggan/README.md +487 -0

- huggan/__init__.py +3 -0

- huggan/assets/cyclegan.png +3 -0

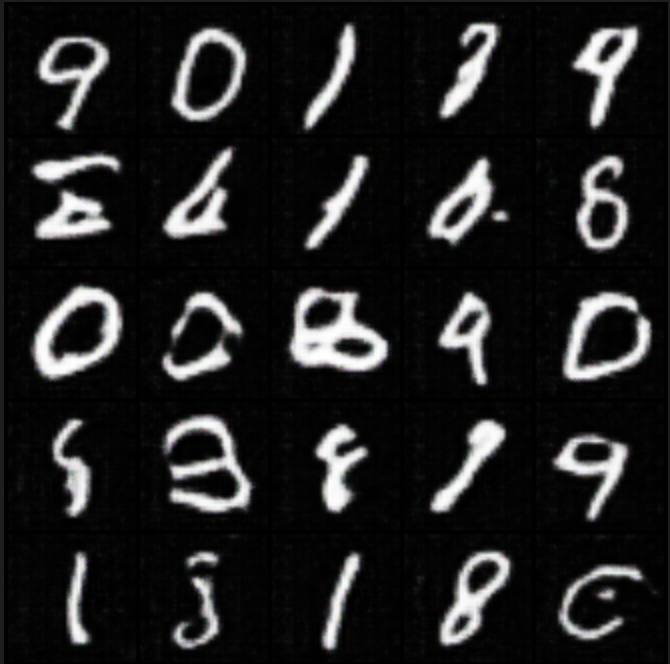

- huggan/assets/dcgan_mnist.png +0 -0

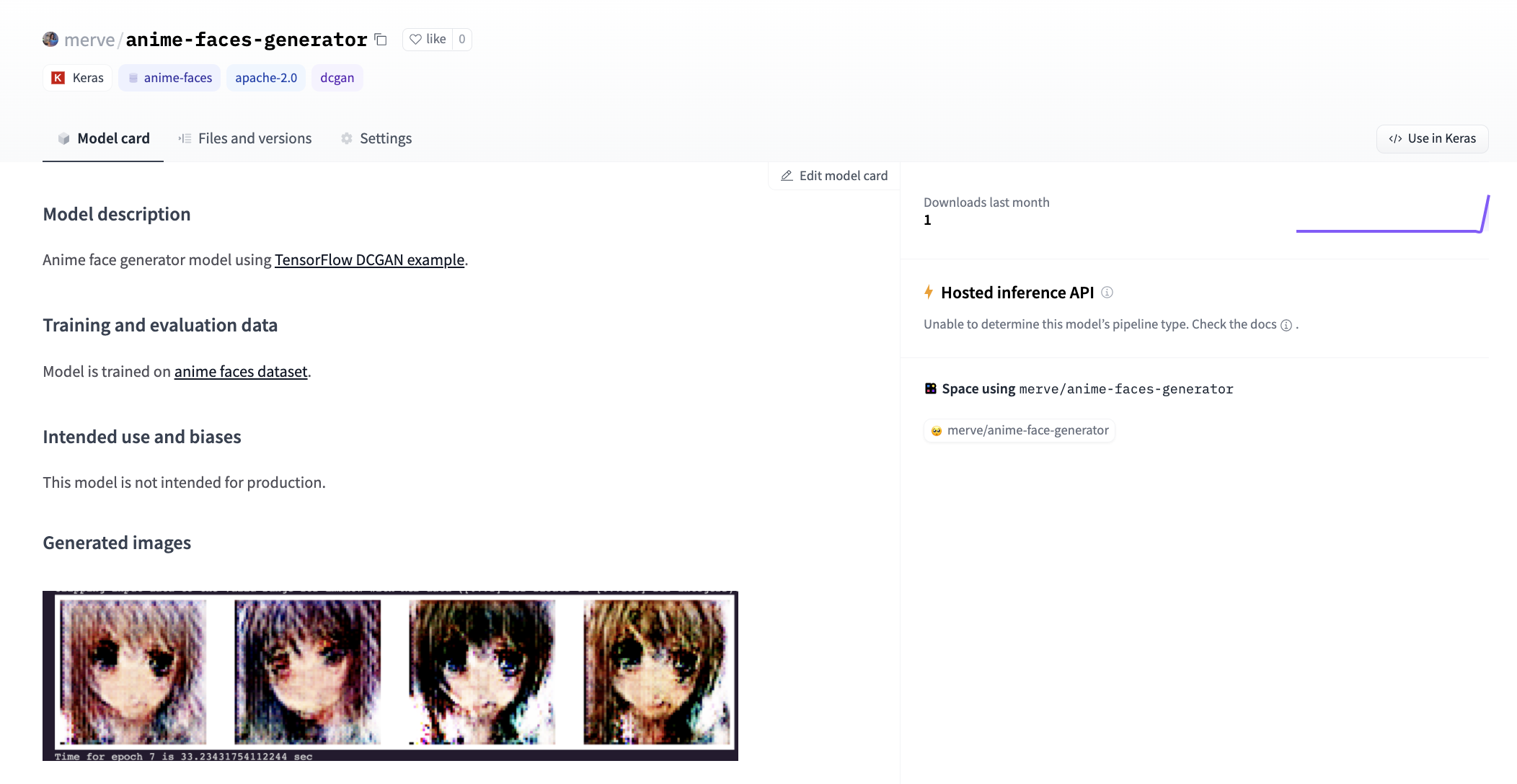

- huggan/assets/example_model.png +0 -0

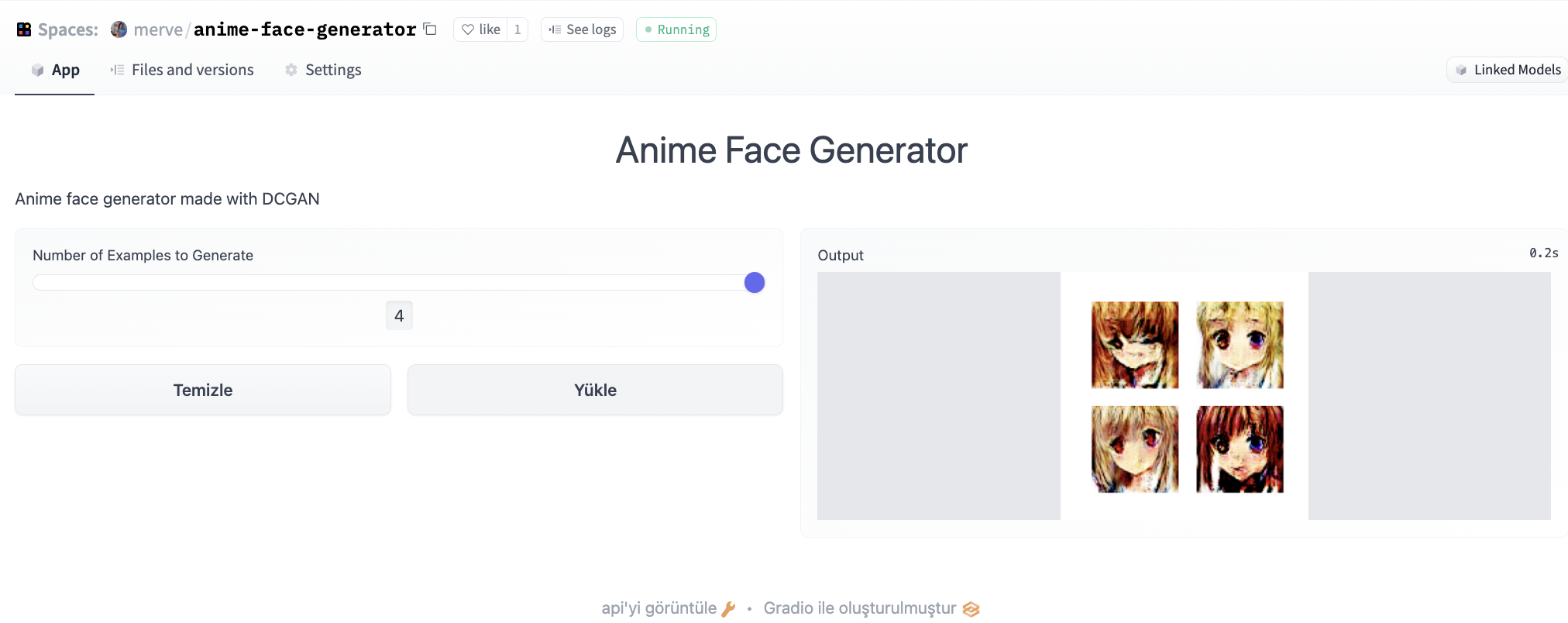

- huggan/assets/example_space.png +0 -0

- huggan/assets/huggan_banner.png +0 -0

- huggan/assets/lightweight_gan_wandb.png +3 -0

- huggan/assets/metfaces.png +0 -0

- huggan/assets/pix2pix_maps.png +3 -0

- huggan/assets/wandb.png +3 -0

- huggan/model_card_template.md +50 -0

- huggan/pytorch/README.md +19 -0

- huggan/pytorch/__init__.py +0 -0

- huggan/pytorch/cyclegan/README.md +81 -0

- huggan/pytorch/cyclegan/__init__.py +0 -0

- huggan/pytorch/cyclegan/modeling_cyclegan.py +108 -0

- huggan/pytorch/cyclegan/train.py +354 -0

- huggan/pytorch/cyclegan/utils.py +44 -0

- huggan/pytorch/dcgan/README.md +155 -0

- huggan/pytorch/dcgan/__init__.py +0 -0

- huggan/pytorch/dcgan/modeling_dcgan.py +80 -0

- huggan/pytorch/dcgan/train.py +346 -0

- huggan/pytorch/huggan_mixin.py +131 -0

- huggan/pytorch/lightweight_gan/README.md +89 -0

- huggan/pytorch/lightweight_gan/__init__.py +0 -0

- huggan/pytorch/lightweight_gan/cli.py +178 -0

- huggan/pytorch/lightweight_gan/diff_augment.py +102 -0

- huggan/pytorch/lightweight_gan/lightweight_gan.py +1598 -0

- huggan/pytorch/metrics/README.md +39 -0

- huggan/pytorch/metrics/__init__.py +0 -0

- huggan/pytorch/metrics/fid_score.py +80 -0

- huggan/pytorch/metrics/inception.py +328 -0

.gitattributes CHANGED

    @@ -33,3 +33,7 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
     *.zip filter=lfs diff=lfs merge=lfs -text
     *.zst filter=lfs diff=lfs merge=lfs -text
     *tfevents* filter=lfs diff=lfs merge=lfs -text
    +huggan/assets/cyclegan.png filter=lfs diff=lfs merge=lfs -text
    +huggan/assets/lightweight_gan_wandb.png filter=lfs diff=lfs merge=lfs -text
    +huggan/assets/pix2pix_maps.png filter=lfs diff=lfs merge=lfs -text
    +huggan/assets/wandb.png filter=lfs diff=lfs merge=lfs -text

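Each line added to .gitattributes pairs a path pattern with attributes. `filter=lfs diff=lfs merge=lfs -text` hands the file to the Git LFS filter and unsets `text`, so Git applies no line-ending conversion to it. This is consistent with those four PNGs appearing as 3-line additions in the file list, which is the size of an LFS pointer file. The Python sketch below parses lines in this syntax (`attr` sets, `-attr` unsets, `!attr` leaves unspecified, `attr=value` assigns). The helper names are hypothetical, and quoted patterns and macro attributes are deliberately not handled.

```python
from typing import Dict, List, Optional, Tuple, Union

# Attribute states in gitattributes syntax:
#   attr        -> set          (True)
#   -attr       -> unset        (False)
#   !attr       -> unspecified  (None)
#   attr=value  -> set to value (str)
AttrValue = Union[bool, str, None]


def parse_gitattributes_line(line: str) -> Optional[Tuple[str, Dict[str, AttrValue]]]:
    """Split one .gitattributes line into (pattern, attributes).

    Returns None for blank lines and comments. Quoted patterns and
    macro attributes are not handled in this sketch.
    """
    stripped = line.strip()
    if not stripped or stripped.startswith("#"):
        return None
    pattern, *tokens = stripped.split()
    attrs: Dict[str, AttrValue] = {}
    for tok in tokens:
        if tok.startswith("-"):
            attrs[tok[1:]] = False
        elif tok.startswith("!"):
            attrs[tok[1:]] = None
        elif "=" in tok:
            name, value = tok.split("=", 1)
            attrs[name] = value
        else:
            attrs[tok] = True
    return pattern, attrs


def is_lfs_tracked(attrs: Dict[str, AttrValue]) -> bool:
    """A pattern goes through Git LFS when its filter attribute is "lfs"."""
    return attrs.get("filter") == "lfs"


# The four lines added in this commit.
ADDED_LINES: List[str] = [
    "huggan/assets/cyclegan.png filter=lfs diff=lfs merge=lfs -text",
    "huggan/assets/lightweight_gan_wandb.png filter=lfs diff=lfs merge=lfs -text",
    "huggan/assets/pix2pix_maps.png filter=lfs diff=lfs merge=lfs -text",
    "huggan/assets/wandb.png filter=lfs diff=lfs merge=lfs -text",
]

if __name__ == "__main__":
    for raw in ADDED_LINES:
        parsed = parse_gitattributes_line(raw)
        if parsed is not None:
            pattern, attrs = parsed
            print(pattern, "LFS" if is_lfs_tracked(attrs) else "regular", attrs)
```

Representing "unset" (`False`) separately from "unspecified" (`None`) mirrors the distinction gitattributes itself makes, which matters for `-text`.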
.gitignore ADDED

    @@ -0,0 +1,166 @@
    +# Initially taken from Github's Python gitignore file
    +
    +# Byte-compiled / optimized / DLL files
    +__pycache__/
    +*.py[cod]
    +*$py.class
    +
    +# C extensions
    +*.so
    +
    +# tests and logs
    +tests/fixtures/cached_*_text.txt
    +logs/
    +lightning_logs/
    +lang_code_data/
    +
    +# Distribution / packaging
    +.Python
    +build/
    +develop-eggs/
    +dist/
    +downloads/
    +eggs/
    +.eggs/
    +lib/
    +lib64/
    +parts/
    +sdist/
    +var/
    +wheels/
    +*.egg-info/
    +.installed.cfg
    +*.egg
    +MANIFEST
    +
    +# PyInstaller
    +# Usually these files are written by a python script from a template
    +# before PyInstaller builds the exe, so as to inject date/other infos into it.
    +*.manifest
    +*.spec
    +
    +# Installer logs
    +pip-log.txt
    +pip-delete-this-directory.txt
    +
    +# Unit test / coverage reports
    +htmlcov/
    +.tox/
    +.nox/
    +.coverage
    +.coverage.*
    +.cache
    +nosetests.xml
    +coverage.xml
    +*.cover
    +.hypothesis/
    +.pytest_cache/
    +
    +# Translations
    +*.mo
    +*.pot
    +
    +# Django stuff:
    +*.log
    +local_settings.py
    +db.sqlite3
    +
    +# Flask stuff:
    +instance/
    +.webassets-cache
    +
    +# Scrapy stuff:
    +.scrapy
    +
    +# Sphinx documentation
    +docs/_build/
    +
    +# PyBuilder
    +target/
    +
    +# Jupyter Notebook
    +.ipynb_checkpoints
    +
    +# IPython
    +profile_default/
    +ipython_config.py
    +
    +# pyenv
    +.python-version
    +
    +# celery beat schedule file
    +celerybeat-schedule
    +
    +# SageMath parsed files
    +*.sage.py
    +
    +# Environments
    +.env
    +.venv
    +env/
    +venv/
    +ENV/
    +env.bak/
    +venv.bak/
    +
    +# Spyder project settings
    +.spyderproject
    +.spyproject
    +
    +# Rope project settings
    +.ropeproject
    +
    +# mkdocs documentation
    +/site
    +
    +# mypy
    +.mypy_cache/
    +.dmypy.json
    +dmypy.json
    +
    +# Pyre type checker
    +.pyre/
    +
    +# vscode
    +.vs
    +.vscode
    +
    +# Pycharm
    +.idea
    +
    +# TF code
    +tensorflow_code
    +
    +# Models
    +proc_data
    +
    +# examples
    +runs
    +/runs_old
    +/wandb
    +/examples/runs
    +/examples/**/*.args
    +/examples/rag/sweep
    +
    +# data
    +/data
    +serialization_dir
    +
    +# emacs
    +*.*~
    +debug.env
    +
    +# vim
    +.*.swp
    +
    +#ctags
    +tags
    +
    +# pre-commit
    +.pre-commit*
    +
    +# .lock
    +*.lock
    +
    +# DS_Store (MacOS)
    +.DS_Store

README.md ADDED

    @@ -0,0 +1,16 @@
    +# Community Events @ 🤗
    +
    +A central repository for all community events organized by 🤗 HuggingFace. Come one, come all!
    +We're constantly finding ways to democratise the use of ML across modalities and languages. This repo contains information about all past, present and upcoming events.
    +
    +## Hugging Events
    +
    +| **Event Name** | **Dates** | **Status** |
    +|-------------------------------------------------------------------------|-----------------|--------------------------------------------------------------------------------------------------------------|
    +| [Open Source AI Game Jam 🎮 (First Edition)](/open-source-ai-game-jam) | July 7th - 9th, 2023 | Finished |
    +| [Whisper Fine Tuning Event](/whisper-fine-tuning-event) | Dec 5th - 19th, 2022 | Finished |
    +| [Computer Vision Study Group](/computer-vision-study-group) | Ongoing | Monthly |
    +| [ML for Audio Study Group](https://github.com/Vaibhavs10/ml-with-audio) | Ongoing | Monthly |
    +| [Gradio Blocks](/gradio-blocks) | May 16th - 31st, 2022 | Finished |
    +| [HugGAN](/huggan) | Apr 4th - 17th, 2022 | Finished |
    +| [Keras Sprint](keras-sprint) | June, 2022 | Finished |

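The added_tokens.json excerpt that follows is regular enough to regenerate: each key `<|t|>` (t in seconds, two decimals, 0.02 s apart) maps to 50364 + t / 0.02, and the keys appear in string order, which is why `<|1.98|>` is followed by `<|10.00|>` rather than `<|2.00|>`. The Python sketch below reproduces the 250 entries shown under that rule. `BASE_ID`, `STEP_CENTIS`, and both helper functions are illustrative names, and the rule is inferred from the entries visible here, not confirmed for the rest of the 1,609-line file.

```python
import json
from typing import Tuple

BASE_ID = 50364   # id of "<|0.00|>" in the excerpt (inferred)
STEP_CENTIS = 2   # consecutive timestamp tokens differ by 0.02 s (inferred)


def timestamp_token(index: int) -> Tuple[str, int]:
    """Return (token, id) for the index-th timestamp token.

    Working in integer centiseconds keeps the labels exact instead of
    relying on float formatting.
    """
    centis = index * STEP_CENTIS
    return f"<|{centis // 100}.{centis % 100:02d}|>", BASE_ID + index


def token_id_for_seconds(seconds: float) -> int:
    """Map a time in seconds to a token id, snapping to the nearest 0.02 s step."""
    return BASE_ID + round(seconds * 100 / STEP_CENTIS)


# Tokens for 0.00 s .. 12.98 s, the span covered by the excerpt.
tokens = dict(timestamp_token(i) for i in range(650))

# Serialising with sort_keys=True orders keys as strings, which places
# "<|10.00|>" directly after "<|1.98|>", matching the excerpt's order.
ordered = list(json.loads(json.dumps(tokens, sort_keys=True)))
print(ordered[99], ordered[100])  # <|1.98|> <|10.00|>
```

With these 650 tokens, the first 250 keys in string order are exactly 0.00 to 1.98 followed by 10.00 to 12.98, the same sequence the truncated diff shows.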
added_tokens.json ADDED

    @@ -0,0 +1,1609 @@
    +{
    +"<|0.00|>": 50364,
    +"<|0.02|>": 50365,
    +"<|0.04|>": 50366,
    +"<|0.06|>": 50367,
    +"<|0.08|>": 50368,
    +"<|0.10|>": 50369,
    +"<|0.12|>": 50370,
    +"<|0.14|>": 50371,
    +"<|0.16|>": 50372,
    +"<|0.18|>": 50373,
    +"<|0.20|>": 50374,
    +"<|0.22|>": 50375,
    +"<|0.24|>": 50376,
    +"<|0.26|>": 50377,
    +"<|0.28|>": 50378,
    +"<|0.30|>": 50379,
    +"<|0.32|>": 50380,
    +"<|0.34|>": 50381,
    +"<|0.36|>": 50382,
    +"<|0.38|>": 50383,
    +"<|0.40|>": 50384,
    +"<|0.42|>": 50385,
    +"<|0.44|>": 50386,
    +"<|0.46|>": 50387,
    +"<|0.48|>": 50388,
    +"<|0.50|>": 50389,
    +"<|0.52|>": 50390,
    +"<|0.54|>": 50391,
    +"<|0.56|>": 50392,
    +"<|0.58|>": 50393,
    +"<|0.60|>": 50394,
    +"<|0.62|>": 50395,
    +"<|0.64|>": 50396,
    +"<|0.66|>": 50397,
    +"<|0.68|>": 50398,
    +"<|0.70|>": 50399,
    +"<|0.72|>": 50400,
    +"<|0.74|>": 50401,
    +"<|0.76|>": 50402,
    +"<|0.78|>": 50403,
    +"<|0.80|>": 50404,
    +"<|0.82|>": 50405,
    +"<|0.84|>": 50406,
    +"<|0.86|>": 50407,
    +"<|0.88|>": 50408,
    +"<|0.90|>": 50409,
    +"<|0.92|>": 50410,
    +"<|0.94|>": 50411,
    +"<|0.96|>": 50412,
    +"<|0.98|>": 50413,
    +"<|1.00|>": 50414,
    +"<|1.02|>": 50415,
    +"<|1.04|>": 50416,
    +"<|1.06|>": 50417,
    +"<|1.08|>": 50418,
    +"<|1.10|>": 50419,
    +"<|1.12|>": 50420,
    +"<|1.14|>": 50421,
    +"<|1.16|>": 50422,
    +"<|1.18|>": 50423,
    +"<|1.20|>": 50424,
    +"<|1.22|>": 50425,
    +"<|1.24|>": 50426,
    +"<|1.26|>": 50427,
    +"<|1.28|>": 50428,
    +"<|1.30|>": 50429,
    +"<|1.32|>": 50430,
    +"<|1.34|>": 50431,
    +"<|1.36|>": 50432,
    +"<|1.38|>": 50433,
    +"<|1.40|>": 50434,
    +"<|1.42|>": 50435,
    +"<|1.44|>": 50436,
    +"<|1.46|>": 50437,
    +"<|1.48|>": 50438,
    +"<|1.50|>": 50439,
    +"<|1.52|>": 50440,
    +"<|1.54|>": 50441,
    +"<|1.56|>": 50442,
    +"<|1.58|>": 50443,
    +"<|1.60|>": 50444,
    +"<|1.62|>": 50445,
    +"<|1.64|>": 50446,
    +"<|1.66|>": 50447,
    +"<|1.68|>": 50448,
    +"<|1.70|>": 50449,
    +"<|1.72|>": 50450,
    +"<|1.74|>": 50451,
    +"<|1.76|>": 50452,
    +"<|1.78|>": 50453,
    +"<|1.80|>": 50454,
    +"<|1.82|>": 50455,
    +"<|1.84|>": 50456,
    +"<|1.86|>": 50457,
    +"<|1.88|>": 50458,
    +"<|1.90|>": 50459,
    +"<|1.92|>": 50460,
    +"<|1.94|>": 50461,
    +"<|1.96|>": 50462,
    +"<|1.98|>": 50463,
    +"<|10.00|>": 50864,
    +"<|10.02|>": 50865,
    +"<|10.04|>": 50866,
    +"<|10.06|>": 50867,
    +"<|10.08|>": 50868,
    +"<|10.10|>": 50869,
    +"<|10.12|>": 50870,
    +"<|10.14|>": 50871,
    +"<|10.16|>": 50872,
    +"<|10.18|>": 50873,
    +"<|10.20|>": 50874,
    +"<|10.22|>": 50875,
    +"<|10.24|>": 50876,
    +"<|10.26|>": 50877,
    +"<|10.28|>": 50878,
    +"<|10.30|>": 50879,
    +"<|10.32|>": 50880,
    +"<|10.34|>": 50881,
    +"<|10.36|>": 50882,
    +"<|10.38|>": 50883,
    +"<|10.40|>": 50884,
    +"<|10.42|>": 50885,
    +"<|10.44|>": 50886,
    +"<|10.46|>": 50887,
    +"<|10.48|>": 50888,
    +"<|10.50|>": 50889,
    +"<|10.52|>": 50890,
    +"<|10.54|>": 50891,
    +"<|10.56|>": 50892,
    +"<|10.58|>": 50893,
    +"<|10.60|>": 50894,
    +"<|10.62|>": 50895,
    +"<|10.64|>": 50896,
    +"<|10.66|>": 50897,
    +"<|10.68|>": 50898,
    +"<|10.70|>": 50899,
    +"<|10.72|>": 50900,
    +"<|10.74|>": 50901,
    +"<|10.76|>": 50902,
    +"<|10.78|>": 50903,
    +"<|10.80|>": 50904,
    +"<|10.82|>": 50905,
    +"<|10.84|>": 50906,
    +"<|10.86|>": 50907,
    +"<|10.88|>": 50908,
    +"<|10.90|>": 50909,
    +"<|10.92|>": 50910,
    +"<|10.94|>": 50911,
    +"<|10.96|>": 50912,
    +"<|10.98|>": 50913,
    +"<|11.00|>": 50914,
    +"<|11.02|>": 50915,
    +"<|11.04|>": 50916,
    +"<|11.06|>": 50917,
    +"<|11.08|>": 50918,
    +"<|11.10|>": 50919,
    +"<|11.12|>": 50920,
    +"<|11.14|>": 50921,
    +"<|11.16|>": 50922,
    +"<|11.18|>": 50923,
    +"<|11.20|>": 50924,
    +"<|11.22|>": 50925,
    +"<|11.24|>": 50926,
    +"<|11.26|>": 50927,
    +"<|11.28|>": 50928,
    +"<|11.30|>": 50929,
    +"<|11.32|>": 50930,
    +"<|11.34|>": 50931,
    +"<|11.36|>": 50932,
    +"<|11.38|>": 50933,
    +"<|11.40|>": 50934,
    +"<|11.42|>": 50935,
    +"<|11.44|>": 50936,
    +"<|11.46|>": 50937,
    +"<|11.48|>": 50938,
    +"<|11.50|>": 50939,
    +"<|11.52|>": 50940,
    +"<|11.54|>": 50941,
    +"<|11.56|>": 50942,
    +"<|11.58|>": 50943,
    +"<|11.60|>": 50944,
    +"<|11.62|>": 50945,
    +"<|11.64|>": 50946,
    +"<|11.66|>": 50947,
    +"<|11.68|>": 50948,
    +"<|11.70|>": 50949,
    +"<|11.72|>": 50950,
    +"<|11.74|>": 50951,
    +"<|11.76|>": 50952,
    +"<|11.78|>": 50953,
    +"<|11.80|>": 50954,
    +"<|11.82|>": 50955,
    +"<|11.84|>": 50956,
    +"<|11.86|>": 50957,
    +"<|11.88|>": 50958,
    +"<|11.90|>": 50959,
    +"<|11.92|>": 50960,
    +"<|11.94|>": 50961,
    +"<|11.96|>": 50962,
    +"<|11.98|>": 50963,
    +"<|12.00|>": 50964,
    +"<|12.02|>": 50965,
    +"<|12.04|>": 50966,
    +"<|12.06|>": 50967,
    +"<|12.08|>": 50968,
    +"<|12.10|>": 50969,
    +"<|12.12|>": 50970,
    +"<|12.14|>": 50971,
    +"<|12.16|>": 50972,
    +"<|12.18|>": 50973,
    +"<|12.20|>": 50974,
    +"<|12.22|>": 50975,
    +"<|12.24|>": 50976,
    +"<|12.26|>": 50977,
    +"<|12.28|>": 50978,
    +"<|12.30|>": 50979,
    +"<|12.32|>": 50980,
    +"<|12.34|>": 50981,
    +"<|12.36|>": 50982,
    +"<|12.38|>": 50983,
    +"<|12.40|>": 50984,
    +"<|12.42|>": 50985,
    +"<|12.44|>": 50986,
    +"<|12.46|>": 50987,
    +"<|12.48|>": 50988,
    +"<|12.50|>": 50989,
    +"<|12.52|>": 50990,
    +"<|12.54|>": 50991,
    +"<|12.56|>": 50992,
    +"<|12.58|>": 50993,
    +"<|12.60|>": 50994,
    +"<|12.62|>": 50995,
    +"<|12.64|>": 50996,
    +"<|12.66|>": 50997,
    +"<|12.68|>": 50998,
    +"<|12.70|>": 50999,
    +"<|12.72|>": 51000,
    +"<|12.74|>": 51001,
    +"<|12.76|>": 51002,
    +"<|12.78|>": 51003,
    +"<|12.80|>": 51004,
    +"<|12.82|>": 51005,
    +"<|12.84|>": 51006,
    +"<|12.86|>": 51007,
    +"<|12.88|>": 51008,
    +"<|12.90|>": 51009,
    +"<|12.92|>": 51010,
    +"<|12.94|>": 51011,
    +"<|12.96|>": 51012,
    +"<|12.98|>": 51013,

|

| 252 |

+

"<|13.00|>": 51014,

|

| 253 |

+

"<|13.02|>": 51015,

|

| 254 |

+

"<|13.04|>": 51016,

|

| 255 |

+

"<|13.06|>": 51017,

|

| 256 |

+

"<|13.08|>": 51018,

|

| 257 |

+

"<|13.10|>": 51019,

|

| 258 |

+

"<|13.12|>": 51020,

|

| 259 |

+

"<|13.14|>": 51021,

|

| 260 |

+

"<|13.16|>": 51022,

|

| 261 |

+

"<|13.18|>": 51023,

|

| 262 |

+

"<|13.20|>": 51024,

|

| 263 |

+

"<|13.22|>": 51025,

|

| 264 |

+

"<|13.24|>": 51026,

|

| 265 |

+

"<|13.26|>": 51027,

|

| 266 |

+

"<|13.28|>": 51028,

|

| 267 |

+

"<|13.30|>": 51029,

|

| 268 |

+

"<|13.32|>": 51030,

|

| 269 |

+

"<|13.34|>": 51031,

|

| 270 |

+

"<|13.36|>": 51032,

|

| 271 |

+

"<|13.38|>": 51033,

|

| 272 |

+

"<|13.40|>": 51034,

|

| 273 |

+

"<|13.42|>": 51035,

|

| 274 |

+

"<|13.44|>": 51036,

|

| 275 |

+

"<|13.46|>": 51037,

|

| 276 |

+

"<|13.48|>": 51038,

|

| 277 |

+

"<|13.50|>": 51039,

|

| 278 |

+

"<|13.52|>": 51040,

|

| 279 |

+

"<|13.54|>": 51041,

|

| 280 |

+

"<|13.56|>": 51042,

|

| 281 |

+

"<|13.58|>": 51043,

|

| 282 |

+

"<|13.60|>": 51044,

|

| 283 |

+

"<|13.62|>": 51045,

|

| 284 |

+

"<|13.64|>": 51046,

|

| 285 |

+

"<|13.66|>": 51047,

|

| 286 |

+

"<|13.68|>": 51048,

|

| 287 |

+

"<|13.70|>": 51049,

|

| 288 |

+

"<|13.72|>": 51050,

|

| 289 |

+

"<|13.74|>": 51051,

|

| 290 |

+

"<|13.76|>": 51052,

|

| 291 |

+

"<|13.78|>": 51053,

|

| 292 |

+

"<|13.80|>": 51054,

|

| 293 |

+

"<|13.82|>": 51055,

|

| 294 |

+

"<|13.84|>": 51056,

|

| 295 |

+

"<|13.86|>": 51057,

|

| 296 |

+

"<|13.88|>": 51058,

|

| 297 |

+

"<|13.90|>": 51059,

|

| 298 |

+

"<|13.92|>": 51060,

|

| 299 |

+

"<|13.94|>": 51061,

|

| 300 |

+

"<|13.96|>": 51062,

|

| 301 |

+

"<|13.98|>": 51063,

|

| 302 |

+

"<|14.00|>": 51064,

|

| 303 |

+

"<|14.02|>": 51065,

|

| 304 |

+

"<|14.04|>": 51066,

|

| 305 |

+

"<|14.06|>": 51067,

|

| 306 |

+

"<|14.08|>": 51068,

|

| 307 |

+

"<|14.10|>": 51069,

|

| 308 |

+

"<|14.12|>": 51070,

|

| 309 |

+

"<|14.14|>": 51071,

|

| 310 |

+

"<|14.16|>": 51072,

|

| 311 |

+

"<|14.18|>": 51073,

|

| 312 |

+

"<|14.20|>": 51074,

|

| 313 |

+

"<|14.22|>": 51075,

|

| 314 |

+

"<|14.24|>": 51076,

|

| 315 |

+

"<|14.26|>": 51077,

|

| 316 |

+

"<|14.28|>": 51078,

|

| 317 |

+

"<|14.30|>": 51079,

|