Add prompts and hyperparameters, and improve one of the images

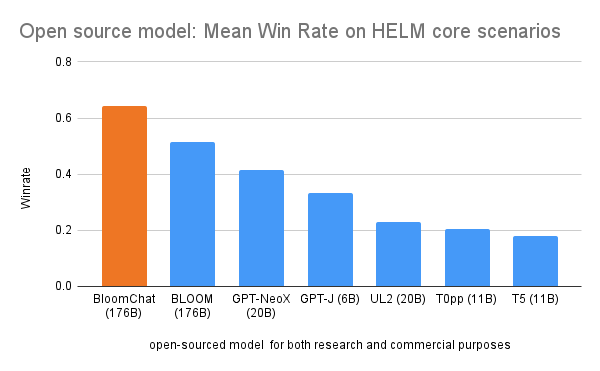

Open_source_model_Mean_Win_Rate_on_HELM_core_scenarios.png

CHANGED

|

|

README.md

CHANGED

|

@@ -73,6 +73,35 @@ Use the code below to get started with the model.

|

|

| 73 |

|

| 74 |

[More Information Needed]

|

| 75 |

| 76 |

## Training Details

|

| 77 |

|

| 78 |

### Training Data

|

|

@@ -89,6 +118,21 @@ Use the code below to get started with the model.

|

|

| 89 |

|

| 90 |

We trained BloomChat with SambaStudio, a platform built on SambaNova's in-house Reconfigurable Dataflow Unit (RDU). We started from [Bloom-176B](https://huggingface.co/bigscience/bloom), an OSS multilingual 176B GPT model pretrained by the [BigScience group](https://huggingface.co/bigscience).

|

| 91 |

| 92 |

### Hyperparameters

|

| 93 |

|

| 94 |

**Instruction-tuned Training on OIG**

|

|

@@ -99,7 +143,9 @@ We trained BloomChat with SambaStudio, a platform built on SambaNova's in-house

|

|

| 99 |

- Epochs: 1

|

| 100 |

- Global Batch size: 128

|

| 101 |

- Batch tokens: 128 * 2048 = 262,144 tokens

|

| 102 |

-

-

| 103 |

- Weight decay: 0.1

|

| 104 |

|

| 105 |

**Instruction-tuned Training on Dolly 2.0 and Oasst1**

|

|

@@ -110,7 +156,9 @@ We trained BloomChat with SambaStudio, a platform built on SambaNova's in-house

|

|

| 110 |

- Epochs: 3

|

| 111 |

- Global Batch size: 128

|

| 112 |

- Batch tokens: 128 * 2048 = 262,144 tokens

|

| 113 |

-

-

| 114 |

- Weight decay: 0.1

|

| 115 |

|

| 116 |

|

|

|

|

| 73 |

|

| 74 |

[More Information Needed]

|

| 75 |

|

| 76 |

+

### Suggested inference parameters

|

| 77 |

+

- Temperature: 0.8

|

| 78 |

+

- Repetition penalty: 1.2

|

| 79 |

+

- Top-p: 0.9

|

| 80 |

+

- Max generated tokens: 512
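
The suggested values above could be collected into keyword arguments for a text-generation call. This is a minimal sketch, assuming the Hugging Face `transformers` naming for `generate()` arguments (`temperature`, `repetition_penalty`, `top_p`, `max_new_tokens`). The dictionary name is hypothetical, and `do_sample=True` is an assumption added here because temperature and top-p only take effect when sampling is enabled; the model card itself does not state it.

```python
# Suggested inference parameters from the model card, expressed as
# keyword arguments in the style of Hugging Face `generate()`.
# The name SUGGESTED_GENERATION_KWARGS is hypothetical.
SUGGESTED_GENERATION_KWARGS = {
    "do_sample": True,          # assumption: not stated in the card
    "temperature": 0.8,         # "Temperature: 0.8"
    "repetition_penalty": 1.2,  # "Repetition penalty: 1.2"
    "top_p": 0.9,               # "Top-p: 0.9"
    "max_new_tokens": 512,      # "Max generated tokens: 512"
}
```

With a loaded model and tokenizer, these would typically be passed as `model.generate(**inputs, **SUGGESTED_GENERATION_KWARGS)`.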

|

| 81 |

+

|

| 82 |

+

### Suggested System Prompts

|

| 83 |

+

```

|

| 84 |

+

<human>: Write a script in which Bob accidentally breaks his dad's guitar

|

| 85 |

+

<bot>:

|

| 86 |

+

```

|

| 87 |

+

|

| 88 |

+

```

|

| 89 |

+

<human>: Classify the sentiment of the following sentence into Positive, Neutral, or Negative. Do it on a scale of 1/10: How about the following sentence: It is raining outside and I feel so blue

|

| 90 |

+

<bot>:

|

| 91 |

+

```

|

| 92 |

+

|

| 93 |

+

```

|

| 94 |

+

<human>: give a python code to open a http server in 8080 port using python 3.7

|

| 95 |

+

<bot>:

|

| 96 |

+

```

|

| 97 |

+

|

| 98 |

+

```

|

| 99 |

+

<human>: Answer the following question using the context below:

|

| 100 |

+

Q: Which regulatory body is involved?

|

| 101 |

+

Context: U.S. authorities launched emergency measures on Sunday to shore up confidence in the banking system after the failure of Silicon Valley Bank (SIVB.O) threatened to trigger a broader financial crisis. After a dramatic weekend, regulators said the failed bank’s customers will have access to all their deposits starting Monday and set up a new facility to give banks access to emergency funds. The Federal Reserve also made it easier for banks to borrow from it in emergencies. While the measures provided some relief for Silicon Valley firms and global markets on Monday, worries about broader banking risks remain and have cast doubts over whether the Fed will stick with its plan for aggressive interest rate hikes.

|

| 102 |

+

<bot>:

|

| 103 |

+

```

|

| 104 |

+

|

| 105 |

## Training Details

|

| 106 |

|

| 107 |

### Training Data

|

|

|

|

| 118 |

|

| 119 |

We trained BloomChat with SambaStudio, a platform built on SambaNova's in-house Reconfigurable Dataflow Unit (RDU). We started from [Bloom-176B](https://huggingface.co/bigscience/bloom), an OSS multilingual 176B GPT model pretrained by the [BigScience group](https://huggingface.co/bigscience).

|

| 120 |

|

| 121 |

+

### Prompting Style Used For Training

|

| 122 |

+

```

|

| 123 |

+

<human>: {input that the user wants from the bot}

|

| 124 |

+

<bot>:

|

| 125 |

+

```

|

| 126 |

+

|

| 127 |

+

```

|

| 128 |

+

<human>: {fewshot1 input}

|

| 129 |

+

<bot>: {fewshot1 response}

|

| 130 |

+

<human>: {fewshot2 input}

|

| 131 |

+

<bot>: {fewshot2 response}

|

| 132 |

+

<human>: {input that the user wants from the bot}

|

| 133 |

+

<bot>:

|

| 134 |

+

```
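
The two templates above (zero-shot and few-shot) can be produced by one small helper. The sketch below is illustrative only: the function name `build_prompt` and its parameters are hypothetical, and it assumes that turns are separated by a single newline, which the templates suggest but do not state explicitly.

```python
from typing import Optional, Sequence, Tuple


def build_prompt(
    user_input: str,
    few_shot: Optional[Sequence[Tuple[str, str]]] = None,
) -> str:
    """Format a prompt in the <human>:/<bot>: style shown above.

    `few_shot` is an optional sequence of (input, response) pairs that
    are placed before the final user turn.
    """
    lines = []
    for shot_input, shot_response in (few_shot or []):
        lines.append(f"<human>: {shot_input}")
        lines.append(f"<bot>: {shot_response}")
    lines.append(f"<human>: {user_input}")
    # The prompt ends with an open bot turn for the model to complete.
    lines.append("<bot>:")
    return "\n".join(lines)
```

Calling `build_prompt("question")` yields the zero-shot form, while passing a list of pairs as `few_shot` yields the second template.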

|

| 135 |

+

|

| 136 |

### Hyperparameters

|

| 137 |

|

| 138 |

**Instruction-tuned Training on OIG**

|

|

|

|

| 143 |

- Epochs: 1

|

| 144 |

- Global Batch size: 128

|

| 145 |

- Batch tokens: 128 * 2048 = 262,144 tokens

|

| 146 |

+

- Learning Rate: 1e-5

|

| 147 |

+

- Learning Rate Scheduler: Cosine Schedule with Warmup

|

| 148 |

+

- Warmup Steps: 0

|

| 149 |

- Weight decay: 0.1

|

| 150 |

|

| 151 |

**Instruction-tuned Training on Dolly 2.0 and Oasst1**

|

|

|

|

| 156 |

- Epochs: 3

|

| 157 |

- Global Batch size: 128

|

| 158 |

- Batch tokens: 128 * 2048 = 262,144 tokens

|

| 159 |

+

- Learning Rate: 1e-5

|

| 160 |

+

- Learning Rate Scheduler: Cosine Schedule with Warmup

|

| 161 |

+

- Warmup Steps: 0

|

| 162 |

- Weight decay: 0.1
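
To make the batch-token arithmetic and the learning-rate schedule listed above concrete, here is a minimal sketch. It assumes the common cosine-with-warmup formulation (linear warmup followed by a half-cosine decay to zero); the exact scheduler used in training is not specified beyond its name. The sequence length of 2048 is read off the "128 * 2048" expression, and `total_steps` is a hypothetical input because the number of training steps is not given.

```python
import math

GLOBAL_BATCH_SIZE = 128
SEQUENCE_LENGTH = 2048      # taken from "128 * 2048" in the list above
PEAK_LEARNING_RATE = 1e-5
WARMUP_STEPS = 0


def tokens_per_batch(batch_size: int = GLOBAL_BATCH_SIZE,
                     seq_len: int = SEQUENCE_LENGTH) -> int:
    """Number of tokens processed per optimizer step."""
    return batch_size * seq_len


def cosine_lr(step: int, total_steps: int,
              peak_lr: float = PEAK_LEARNING_RATE,
              warmup_steps: int = WARMUP_STEPS) -> float:
    """Learning rate at `step` under linear warmup plus cosine decay.

    `total_steps` is hypothetical; the card does not report it.
    """
    if step < warmup_steps:
        # Linear ramp from 0 up to peak_lr (skipped when warmup_steps is 0).
        return peak_lr * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return peak_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))
```

With zero warmup steps, the schedule starts at the peak rate of 1e-5 and decays from the first step onward.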

|

| 163 |

|

| 164 |

|