Raphaël Bournhonesque committed

Commit 6e7f851 (parent: f75c095)

add v1 of the proof classification model

This view is limited to 50 files because it contains too many changes.

- README.md +13 -0

- args.yaml +106 -0

- confusion_matrix.png +0 -0

- confusion_matrix_normalized.png +0 -0

- mlflow/0/meta.yaml +6 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/args.yaml +106 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/confusion_matrix.png +0 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/confusion_matrix_normalized.png +0 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/results.csv +101 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/results.png +0 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/train_batch0.jpg +0 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/train_batch1.jpg +0 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/train_batch2.jpg +0 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/train_batch3330.jpg +0 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/train_batch3331.jpg +0 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/train_batch3332.jpg +0 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/val_batch0_labels.jpg +0 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/val_batch0_pred.jpg +0 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/val_batch1_labels.jpg +0 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/val_batch1_pred.jpg +0 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/val_batch2_labels.jpg +0 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/val_batch2_pred.jpg +0 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/weights/best.pt +3 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/weights/last.pt +3 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/meta.yaml +15 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/metrics/lr/pg0 +100 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/metrics/lr/pg1 +100 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/metrics/lr/pg2 +100 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/metrics/metrics/accuracy_top1 +101 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/metrics/metrics/accuracy_top5 +101 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/metrics/train/loss +100 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/metrics/val/loss +100 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/params/agnostic_nms +1 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/params/amp +1 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/params/augment +1 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/params/auto_augment +1 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/params/batch +1 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/params/bgr +1 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/params/box +1 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/params/cache +1 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/params/cfg +1 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/params/classes +1 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/params/close_mosaic +1 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/params/cls +1 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/params/conf +1 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/params/copy_paste +1 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/params/cos_lr +1 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/params/crop_fraction +1 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/params/data +1 -0

- mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/params/degrees +1 -0

README.md

CHANGED

|

@@ -1,3 +1,16 @@

|

|

| 1 |

---

|

| 2 |

license: agpl-3.0

|

| 3 |

---

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

| 2 |

license: agpl-3.0

|

| 3 |

---

|

| 4 |

+

|

| 5 |

+

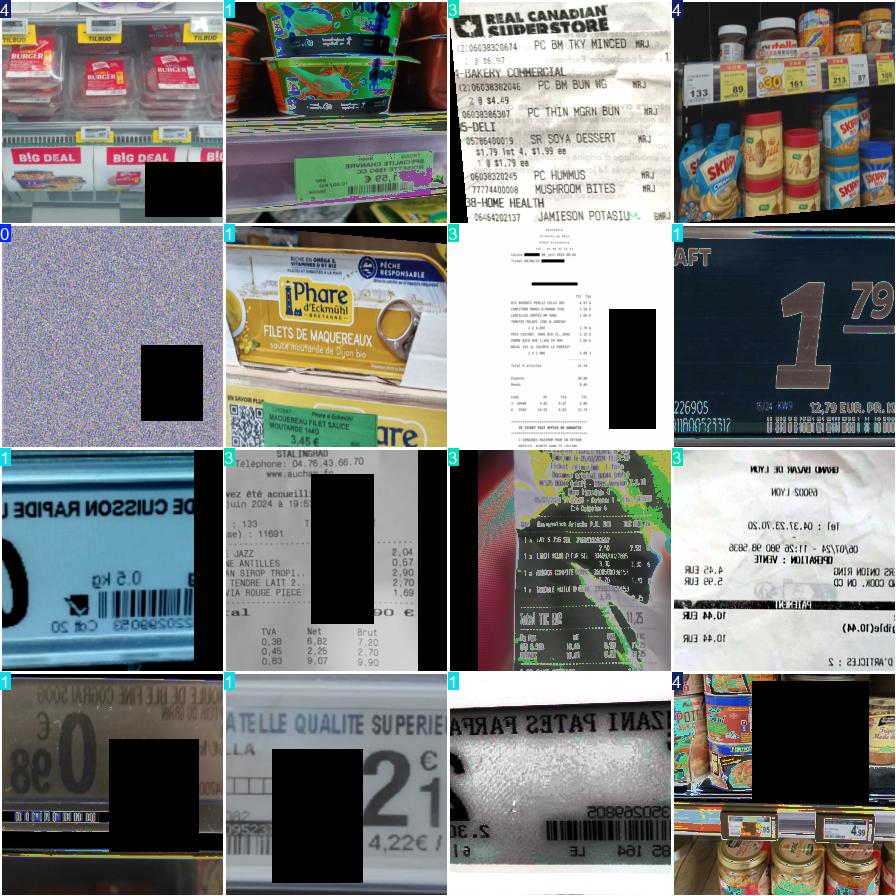

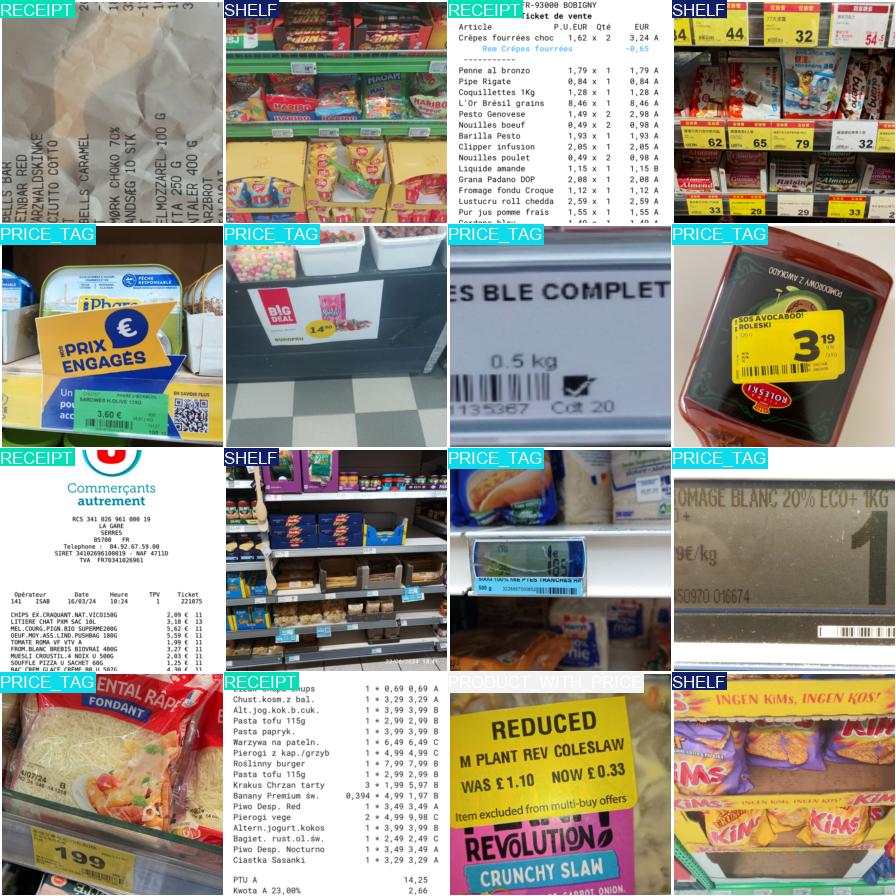

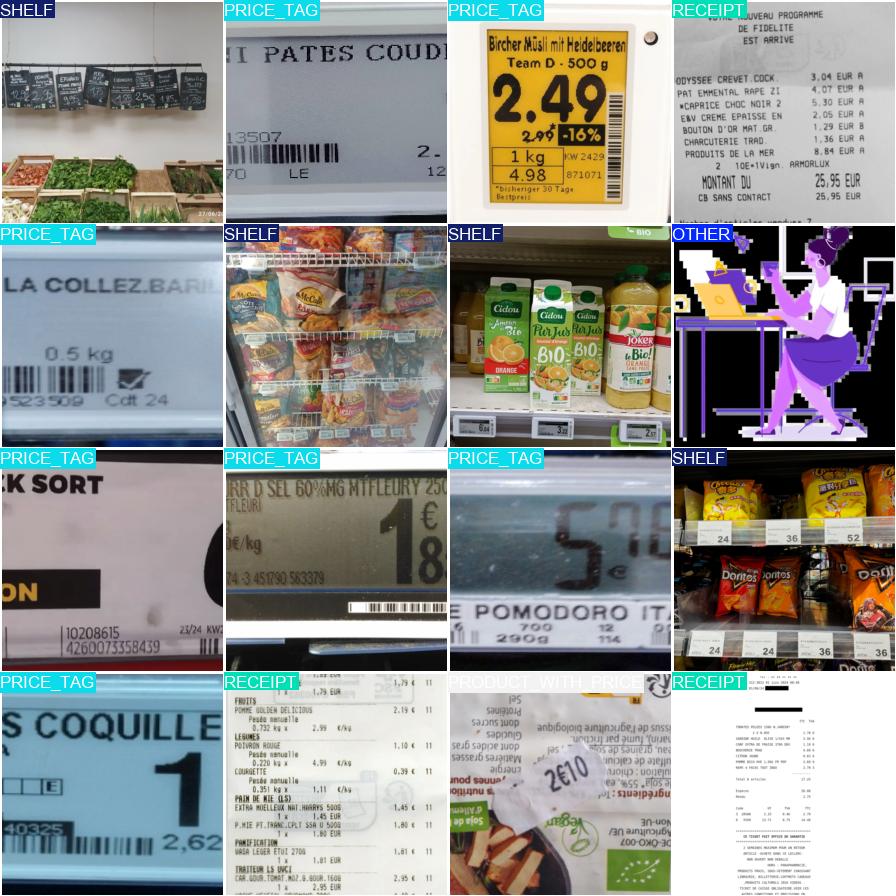

This model is an image classifier used to predict the proof type of a proof image on [Open Prices](https://prices.openfoodfacts.org/).

|

| 6 |

+

|

| 7 |

+

The folling categories are supported:

|

| 8 |

+

|

| 9 |

+

- `PRICE_TAG`: a photo a single price tag in a shop. If several price tags are visible, the image should be classified as `SHELF`.

|

| 10 |

+

- `SHELF`: a photo of a shelf with several products and price tags. If only one price tag is visible, the image should be classified as `PRICE_TAG`.

|

| 11 |

+

- `PRODUCT_WITH_PRICE`: a single product with a price written (or added) directly on the product.

|

| 12 |

+

- `RECEIPT`: a photo of a shop receipt.

|

| 13 |

+

- `WEB_PRINT`: a screenshot of a web page displaying a price/receipt or a photo of a price brochure.

|

| 14 |

+

- `OTHER`: every other image that doesn't belong to any of the above category.

|

| 15 |

+

|

| 16 |

+

The model was trained with Ultralytics library. It's was fine-tuned from YOLOv8n model. The training dataset is an annotated subset of the [Open Prices dataset](https://prices.openfoodfacts.org/).

|
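The README defines six proof types. Below is a minimal sketch of turning a classifier output into one of them. `CLASS_NAMES`, `top1` and `predict_proof_type` are hypothetical helpers, and the index order in `CLASS_NAMES` is an assumption: it supposes the training folders were named after the README categories and sorted alphabetically, as in an ImageFolder-style layout. When running the real checkpoint, the `names` dictionary returned by Ultralytics carries the class names stored in the weights and should be preferred.

```python
"""Sketch: mapping a classifier output to an Open Prices proof type."""
from typing import Sequence

# The six proof types listed in the README.
PROOF_TYPES = ("PRICE_TAG", "SHELF", "PRODUCT_WITH_PRICE", "RECEIPT", "WEB_PRINT", "OTHER")

# Hypothetical index -> name mapping, assuming alphabetically sorted class folders.
CLASS_NAMES = dict(enumerate(sorted(PROOF_TYPES)))


def top1(probs: Sequence[float], names: dict[int, str]) -> tuple[str, float]:
    """Return the most probable proof type and its probability."""
    if len(probs) != len(names):
        raise ValueError("expected exactly one probability per class")
    best = max(range(len(probs)), key=lambda i: probs[i])
    return names[best], probs[best]


def predict_proof_type(image_path: str, weights: str = "best.pt") -> tuple[str, float]:
    """Classify one image with the fine-tuned checkpoint (requires `ultralytics`)."""
    # Imported lazily so the pure helpers above work without ultralytics installed.
    from ultralytics import YOLO

    result = YOLO(weights)(image_path)[0]
    probs = result.probs.data.tolist()  # per-class probabilities
    return top1(probs, result.names)    # names stored in the checkpoint
```

Keeping `top1` separate from the model call makes the label-decoding logic testable without downloading weights or installing a deep-learning stack.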

args.yaml

ADDED

@@ -0,0 +1,106 @@

```yaml
task: classify
mode: train
model: yolov8n-cls.pt
data: ./proof-classification
epochs: 100
time: null
patience: 100
batch: 16
imgsz: 224
save: true
save_period: -1
cache: false
device: null
workers: 8
project: null
name: train
exist_ok: false
pretrained: true
optimizer: auto
verbose: true
seed: 0
deterministic: true
single_cls: false
rect: false
cos_lr: false
close_mosaic: 10
resume: false
amp: true
fraction: 1.0
profile: false
freeze: null
multi_scale: false
overlap_mask: true
mask_ratio: 4
dropout: 0.0
val: true
split: val
save_json: false
save_hybrid: false
conf: null
iou: 0.7
max_det: 300
half: false
dnn: false
plots: true
source: null
vid_stride: 1
stream_buffer: false
visualize: false
augment: false
agnostic_nms: false
classes: null
retina_masks: false
embed: null
show: false
save_frames: false
save_txt: false
save_conf: false
save_crop: false
show_labels: true
show_conf: true
show_boxes: true
line_width: null
format: torchscript
keras: false
optimize: false
int8: false
dynamic: false
simplify: false
opset: null
workspace: 4
nms: false
lr0: 0.01
lrf: 0.01
momentum: 0.937
weight_decay: 0.0005
warmup_epochs: 3.0
warmup_momentum: 0.8
warmup_bias_lr: 0.1
box: 7.5
cls: 0.5
dfl: 1.5
pose: 12.0
kobj: 1.0
label_smoothing: 0.0
nbs: 64
hsv_h: 0.015
hsv_s: 0.7
hsv_v: 0.4
degrees: 0.0
translate: 0.1
scale: 0.5
shear: 0.0
perspective: 0.0
flipud: 0.0
fliplr: 0.5
bgr: 0.0
mosaic: 1.0
mixup: 0.0
copy_paste: 0.0
auto_augment: randaugment
erasing: 0.4
crop_fraction: 1.0
cfg: null
tracker: botsort.yaml
save_dir: runs/classify/train
```
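These are the arguments Ultralytics recorded for this run. Below is a minimal sketch of re-launching a comparable fine-tuning with the Ultralytics Python API (`YOLO(...).train(...)`). It assumes `ultralytics` is installed and that the dataset directory `./proof-classification` exists locally. `TRAIN_ARGS` and `retrain` are hypothetical names, and only a subset of the logged arguments is passed explicitly, so anything omitted falls back to the installed version's defaults, which may differ from this run.

```python
"""Sketch: re-launching a comparable fine-tuning run with the Ultralytics API."""

# A subset of the values logged in args.yaml; omitted keys fall back to the
# defaults of whichever ultralytics version is installed.
TRAIN_ARGS = {
    "data": "./proof-classification",
    "epochs": 100,
    "patience": 100,
    "batch": 16,
    "imgsz": 224,
    "optimizer": "auto",
    "seed": 0,
    "deterministic": True,
    "auto_augment": "randaugment",
    "erasing": 0.4,
}


def retrain(base_weights: str = "yolov8n-cls.pt"):
    """Fine-tune the pretrained classifier with TRAIN_ARGS (needs `ultralytics`)."""
    # Imported lazily so this module can be imported without ultralytics installed.
    from ultralytics import YOLO

    model = YOLO(base_weights)
    return model.train(**TRAIN_ARGS)
```

Nothing runs at import time. Calling `retrain()` starts training.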

confusion_matrix.png

ADDED

confusion_matrix_normalized.png

ADDED

mlflow/0/meta.yaml

ADDED

@@ -0,0 +1,6 @@

```yaml
artifact_location: /home/onyxia/work/open-prices-cls/runs/mlflow/0
creation_time: 1723032671813
experiment_id: '0'
last_update_time: 1723032671813
lifecycle_stage: active
name: Default
```

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/args.yaml

ADDED

@@ -0,0 +1,106 @@

The content is identical, line for line, to the top-level `args.yaml` above.

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/confusion_matrix.png

ADDED

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/confusion_matrix_normalized.png

ADDED

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/results.csv

ADDED

@@ -0,0 +1,101 @@

```csv
epoch, train/loss, metrics/accuracy_top1, metrics/accuracy_top5, val/loss, lr/pg0, lr/pg1, lr/pg2
1, 1.4451, 0.83099, 1, 1.4348, 0.00023157, 0.00023157, 0.00023157
2, 0.64566, 0.90141, 0.99296, 1.202, 0.00046492, 0.00046492, 0.00046492
3, 0.46756, 0.91549, 0.99296, 1.1523, 0.00069356, 0.00069356, 0.00069356
4, 0.37288, 0.9507, 0.99296, 1.1553, 0.00069279, 0.00069279, 0.00069279
5, 0.30248, 0.92958, 0.99296, 1.1385, 0.00068573, 0.00068573, 0.00068573
6, 0.21275, 0.94366, 0.99296, 1.1252, 0.00067866, 0.00067866, 0.00067866
7, 0.19737, 0.94366, 0.99296, 1.1256, 0.00067159, 0.00067159, 0.00067159
8, 0.15099, 0.95775, 1, 1.1256, 0.00066452, 0.00066452, 0.00066452
9, 0.11586, 0.95775, 1, 1.1135, 0.00065745, 0.00065745, 0.00065745
10, 0.13721, 0.96479, 1, 1.0939, 0.00065038, 0.00065038, 0.00065038
11, 0.137, 0.94366, 0.99296, 1.1014, 0.00064331, 0.00064331, 0.00064331
12, 0.08138, 0.95775, 0.99296, 1.0943, 0.00063625, 0.00063625, 0.00063625
13, 0.07742, 0.95775, 1, 1.0941, 0.00062918, 0.00062918, 0.00062918
14, 0.11946, 0.93662, 1, 1.1119, 0.00062211, 0.00062211, 0.00062211
15, 0.09459, 0.9507, 1, 1.1, 0.00061504, 0.00061504, 0.00061504
16, 0.08792, 0.93662, 1, 1.1033, 0.00060797, 0.00060797, 0.00060797
17, 0.08993, 0.94366, 1, 1.1092, 0.0006009, 0.0006009, 0.0006009
18, 0.09229, 0.94366, 1, 1.1084, 0.00059383, 0.00059383, 0.00059383
19, 0.06277, 0.94366, 1, 1.1019, 0.00058677, 0.00058677, 0.00058677
20, 0.05816, 0.94366, 1, 1.1096, 0.0005797, 0.0005797, 0.0005797
21, 0.05505, 0.94366, 1, 1.1082, 0.00057263, 0.00057263, 0.00057263
22, 0.03803, 0.9507, 1, 1.0967, 0.00056556, 0.00056556, 0.00056556
23, 0.05385, 0.9507, 1, 1.1082, 0.00055849, 0.00055849, 0.00055849
24, 0.04941, 0.93662, 1, 1.1062, 0.00055142, 0.00055142, 0.00055142
25, 0.0336, 0.94366, 1, 1.1041, 0.00054435, 0.00054435, 0.00054435
26, 0.03592, 0.95775, 1, 1.1041, 0.00053728, 0.00053728, 0.00053728
27, 0.03549, 0.95775, 1, 1.1039, 0.00053022, 0.00053022, 0.00053022
28, 0.0381, 0.95775, 1, 1.0904, 0.00052315, 0.00052315, 0.00052315
29, 0.033, 0.96479, 1, 1.0967, 0.00051608, 0.00051608, 0.00051608
30, 0.02672, 0.9507, 1, 1.0918, 0.00050901, 0.00050901, 0.00050901
31, 0.02363, 0.94366, 1, 1.1041, 0.00050194, 0.00050194, 0.00050194
32, 0.0432, 0.95775, 1, 1.0943, 0.00049487, 0.00049487, 0.00049487
33, 0.02597, 0.94366, 1, 1.1043, 0.0004878, 0.0004878, 0.0004878
34, 0.03105, 0.94366, 1, 1.1113, 0.00048074, 0.00048074, 0.00048074
35, 0.04371, 0.94366, 1, 1.0943, 0.00047367, 0.00047367, 0.00047367
36, 0.06177, 0.96479, 1, 1.0832, 0.0004666, 0.0004666, 0.0004666
37, 0.07132, 0.96479, 1, 1.0832, 0.00045953, 0.00045953, 0.00045953
38, 0.03094, 0.95775, 1, 1.0947, 0.00045246, 0.00045246, 0.00045246
39, 0.04341, 0.97887, 1, 1.0863, 0.00044539, 0.00044539, 0.00044539
40, 0.02172, 0.95775, 1, 1.085, 0.00043832, 0.00043832, 0.00043832
41, 0.032, 0.96479, 1, 1.0889, 0.00043126, 0.00043126, 0.00043126
42, 0.02171, 0.96479, 1, 1.085, 0.00042419, 0.00042419, 0.00042419
43, 0.01951, 0.96479, 1, 1.0836, 0.00041712, 0.00041712, 0.00041712
44, 0.04394, 0.95775, 1, 1.0936, 0.00041005, 0.00041005, 0.00041005
45, 0.01902, 0.96479, 1, 1.0813, 0.00040298, 0.00040298, 0.00040298
46, 0.04116, 0.96479, 1, 1.0904, 0.00039591, 0.00039591, 0.00039591
47, 0.0512, 0.98592, 1, 1.0799, 0.00038884, 0.00038884, 0.00038884
48, 0.01799, 0.96479, 1, 1.0848, 0.00038178, 0.00038178, 0.00038178
49, 0.03392, 0.9507, 1, 1.0891, 0.00037471, 0.00037471, 0.00037471
50, 0.04188, 0.9507, 1, 1.0957, 0.00036764, 0.00036764, 0.00036764
51, 0.02717, 0.9507, 1, 1.0908, 0.00036057, 0.00036057, 0.00036057
52, 0.01847, 0.9507, 1, 1.0936, 0.0003535, 0.0003535, 0.0003535
53, 0.02323, 0.9507, 1, 1.0967, 0.00034643, 0.00034643, 0.00034643
54, 0.03291, 0.94366, 1, 1.0916, 0.00033936, 0.00033936, 0.00033936
55, 0.02389, 0.9507, 1, 1.0824, 0.0003323, 0.0003323, 0.0003323
56, 0.02612, 0.96479, 1, 1.0926, 0.00032523, 0.00032523, 0.00032523
57, 0.0165, 0.9507, 1, 1.0871, 0.00031816, 0.00031816, 0.00031816
58, 0.01978, 0.9507, 1, 1.1014, 0.00031109, 0.00031109, 0.00031109
59, 0.01593, 0.9507, 1, 1.0932, 0.00030402, 0.00030402, 0.00030402
60, 0.00771, 0.94366, 1, 1.0928, 0.00029695, 0.00029695, 0.00029695
61, 0.02831, 0.9507, 1, 1.0898, 0.00028988, 0.00028988, 0.00028988
62, 0.02913, 0.94366, 1, 1.1123, 0.00028282, 0.00028282, 0.00028282
63, 0.03441, 0.94366, 1, 1.0984, 0.00027575, 0.00027575, 0.00027575
64, 0.02507, 0.93662, 1, 1.0953, 0.00026868, 0.00026868, 0.00026868
65, 0.03046, 0.9507, 1, 1.091, 0.00026161, 0.00026161, 0.00026161
66, 0.01546, 0.95775, 1, 1.0861, 0.00025454, 0.00025454, 0.00025454
67, 0.01936, 0.95775, 1, 1.0885, 0.00024747, 0.00024747, 0.00024747
68, 0.03504, 0.95775, 1, 1.0912, 0.0002404, 0.0002404, 0.0002404
69, 0.01841, 0.92958, 1, 1.1078, 0.00023334, 0.00023334, 0.00023334
70, 0.01763, 0.9507, 1, 1.091, 0.00022627, 0.00022627, 0.00022627
71, 0.01643, 0.9507, 1, 1.1006, 0.0002192, 0.0002192, 0.0002192
72, 0.01105, 0.95775, 1, 1.0906, 0.00021213, 0.00021213, 0.00021213
73, 0.00769, 0.94366, 1, 1.0918, 0.00020506, 0.00020506, 0.00020506
74, 0.00989, 0.95775, 1, 1.0953, 0.00019799, 0.00019799, 0.00019799
75, 0.01301, 0.9507, 1, 1.0996, 0.00019092, 0.00019092, 0.00019092
76, 0.01345, 0.9507, 1, 1.0941, 0.00018385, 0.00018385, 0.00018385
77, 0.00888, 0.94366, 1, 1.0916, 0.00017679, 0.00017679, 0.00017679
78, 0.00971, 0.93662, 1, 1.0967, 0.00016972, 0.00016972, 0.00016972
79, 0.01685, 0.94366, 1, 1.0996, 0.00016265, 0.00016265, 0.00016265
80, 0.00793, 0.93662, 1, 1.1041, 0.00015558, 0.00015558, 0.00015558
81, 0.00716, 0.94366, 1, 1.1115, 0.00014851, 0.00014851, 0.00014851
82, 0.0069, 0.94366, 1, 1.1055, 0.00014144, 0.00014144, 0.00014144
83, 0.0039, 0.93662, 1, 1.1035, 0.00013437, 0.00013437, 0.00013437
84, 0.0061, 0.93662, 1, 1.0975, 0.00012731, 0.00012731, 0.00012731
85, 0.00722, 0.93662, 1, 1.0965, 0.00012024, 0.00012024, 0.00012024
86, 0.01103, 0.94366, 1, 1.0965, 0.00011317, 0.00011317, 0.00011317
87, 0.0171, 0.94366, 1, 1.098, 0.0001061, 0.0001061, 0.0001061
88, 0.00555, 0.94366, 1, 1.0943, 9.9032e-05, 9.9032e-05, 9.9032e-05
89, 0.01206, 0.94366, 1, 1.1064, 9.1963e-05, 9.1963e-05, 9.1963e-05
90, 0.00835, 0.93662, 1, 1.1045, 8.4895e-05, 8.4895e-05, 8.4895e-05
91, 0.02702, 0.94366, 1, 1.0934, 7.7826e-05, 7.7826e-05, 7.7826e-05
92, 0.00546, 0.94366, 1, 1.0957, 7.0757e-05, 7.0757e-05, 7.0757e-05
93, 0.0051, 0.94366, 1, 1.0963, 6.3689e-05, 6.3689e-05, 6.3689e-05
94, 0.01562, 0.93662, 1, 1.1068, 5.662e-05, 5.662e-05, 5.662e-05
95, 0.02627, 0.93662, 1, 1.0965, 4.9552e-05, 4.9552e-05, 4.9552e-05
96, 0.00532, 0.93662, 1, 1.1014, 4.2483e-05, 4.2483e-05, 4.2483e-05
97, 0.01032, 0.94366, 1, 1.0965, 3.5414e-05, 3.5414e-05, 3.5414e-05
98, 0.00554, 0.94366, 1, 1.1115, 2.8346e-05, 2.8346e-05, 2.8346e-05
99, 0.00467, 0.93662, 1, 1.092, 2.1277e-05, 2.1277e-05, 2.1277e-05
100, 0.0077, 0.94366, 1, 1.0984, 1.4209e-05, 1.4209e-05, 1.4209e-05
```
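`results.csv` logs one row per epoch. In the full file, epoch 47 has both the highest top-1 accuracy (0.98592) and the lowest validation loss (1.0799). Below is one way to locate that row with the standard `csv` module. `SAMPLE` holds four rows copied verbatim from the file, and `read_results` and `best_epoch` are hypothetical helpers. Whether `weights/best.pt` was saved at this epoch depends on the fitness criterion Ultralytics applied, which this commit does not record.

```python
"""Sketch: reading results.csv and locating the best validation epoch."""
import csv
import io

# Four rows copied from this commit's results.csv; pass the real file to scan all epochs.
SAMPLE = """epoch, train/loss, metrics/accuracy_top1, metrics/accuracy_top5, val/loss, lr/pg0, lr/pg1, lr/pg2
1, 1.4451, 0.83099, 1, 1.4348, 0.00023157, 0.00023157, 0.00023157
39, 0.04341, 0.97887, 1, 1.0863, 0.00044539, 0.00044539, 0.00044539
47, 0.0512, 0.98592, 1, 1.0799, 0.00038884, 0.00038884, 0.00038884
100, 0.0077, 0.94366, 1, 1.0984, 1.4209e-05, 1.4209e-05, 1.4209e-05
"""


def read_results(f) -> list[dict[str, float]]:
    """Parse every row into floats; skipinitialspace handles the ', ' separators."""
    reader = csv.DictReader(f, skipinitialspace=True)
    return [{key.strip(): float(value) for key, value in row.items()} for row in reader]


def best_epoch(rows: list[dict[str, float]], metric: str = "metrics/accuracy_top1") -> dict[str, float]:
    """Return the row with the highest `metric` (the earliest row wins on ties)."""
    return max(rows, key=lambda row: row[metric])


rows = read_results(io.StringIO(SAMPLE))
best = best_epoch(rows)
print(int(best["epoch"]), best["metrics/accuracy_top1"])  # 47 0.98592
```

Every top-1 value in the file is a multiple of 1/142 (for example 0.98592 ≈ 140/142). This is consistent with, but does not prove, a 142-image validation split.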

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/results.png

ADDED

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/train_batch0.jpg

ADDED

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/train_batch1.jpg

ADDED

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/train_batch2.jpg

ADDED

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/train_batch3330.jpg

ADDED

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/train_batch3331.jpg

ADDED

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/train_batch3332.jpg

ADDED

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/val_batch0_labels.jpg

ADDED

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/val_batch0_pred.jpg

ADDED

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/val_batch1_labels.jpg

ADDED

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/val_batch1_pred.jpg

ADDED

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/val_batch2_labels.jpg

ADDED

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/val_batch2_pred.jpg

ADDED

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/weights/best.pt

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:7eb92c7095dd95b73fbefadf6b3bb502c642180440e31701ca623b4fc1371b1d
size 2976129
```

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts/weights/last.pt

ADDED

@@ -0,0 +1,3 @@

```
version https://git-lfs.github.com/spec/v1
oid sha256:79d924986aee932d657b850205a6366b55440eba0ee5e2da4728c512f5bf6c60
size 2979585
```
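The two `.pt` entries are Git LFS pointer files. Each consists of three `key value` lines giving the spec version, the SHA-256 object id, and the size in bytes of the real checkpoint, which is stored outside Git (2976129 bytes, about 3 MB, for `best.pt`). Below is a minimal sketch of parsing such a pointer and checking a downloaded file against it using only `hashlib`. `parse_pointer` and `matches_pointer` are hypothetical helpers, not part of Git LFS itself.

```python
"""Sketch: reading a Git LFS pointer and checking a downloaded file against it."""
import hashlib

# Pointer text for weights/best.pt as committed above.
BEST_PT_POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:7eb92c7095dd95b73fbefadf6b3bb502c642180440e31701ca623b4fc1371b1d
size 2976129
"""


def parse_pointer(text: str) -> dict[str, str]:
    """Split each 'key value' line of the pointer into a dictionary entry."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    return fields


def matches_pointer(data: bytes, pointer: dict[str, str]) -> bool:
    """True if `data` has the size and SHA-256 digest recorded in the pointer."""
    algo, _, digest = pointer["oid"].partition(":")
    if algo != "sha256":
        raise ValueError(f"unsupported oid algorithm: {algo}")
    return len(data) == int(pointer["size"]) and hashlib.sha256(data).hexdigest() == digest
```

To verify a real checkpoint, read it with `open(path, "rb").read()` and pass the bytes together with `parse_pointer(BEST_PT_POINTER)`.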

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/meta.yaml

ADDED

@@ -0,0 +1,15 @@

```yaml
artifact_uri: /home/onyxia/work/open-prices-cls/runs/mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/artifacts
end_time: 1723033967632
entry_point_name: ''
experiment_id: '119284362706535067'
lifecycle_stage: active
run_id: e432b13dca424ce6b8ad60074d948b37
run_name: train
run_uuid: e432b13dca424ce6b8ad60074d948b37
source_name: ''
source_type: 4
source_version: ''
start_time: 1723032671898
status: 3
tags: []
user_id: onyxia
```
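MLflow stores `start_time` and `end_time` as milliseconds since the Unix epoch. Decoding them shows that the run started on 2024-08-07 at 12:11:11 UTC and lasted about 21.6 minutes. In the sketch, the mapping of `status: 3` to `FINISHED` assumes MLflow's `RunStatus` enum numbering (1 RUNNING through 5 KILLED). `ms_to_utc` is a hypothetical helper.

```python
"""Sketch: decoding the timing fields of the MLflow run's meta.yaml."""
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

# Values copied from meta.yaml above (milliseconds since the Unix epoch).
START_MS = 1723032671898
END_MS = 1723033967632
STATUS = 3

# Assumed numbering of MLflow's RunStatus enum.
RUN_STATUS = {1: "RUNNING", 2: "SCHEDULED", 3: "FINISHED", 4: "FAILED", 5: "KILLED"}


def ms_to_utc(ms: int) -> datetime:
    """Convert epoch milliseconds to an aware UTC datetime using exact integer arithmetic."""
    return EPOCH + timedelta(milliseconds=ms)


start, end = ms_to_utc(START_MS), ms_to_utc(END_MS)
print(start.isoformat())   # 2024-08-07T12:11:11.898000+00:00
print(end - start)         # 0:21:35.734000
print(RUN_STATUS[STATUS])  # FINISHED
```

Adding a `timedelta` to a fixed epoch avoids the float rounding that `datetime.fromtimestamp(ms / 1000)` can introduce. The timestamps in the `metrics/` files use the same millisecond format.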

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/metrics/lr/pg0

ADDED

@@ -0,0 +1,100 @@

```
1723032685507 0.00023156756756756758 0
1723032697369 0.0004649188486486486 1
1723032710271 0.0006935577297297297 2
1723032723758 0.0006927942 3
1723032737868 0.0006857256 4
1723032749032 0.000678657 5
1723032762631 0.0006715884 6
1723032773871 0.0006645198 7
1723032786193 0.0006574512 8
1723032800400 0.0006503826 9
1723032814031 0.000643314 10
1723032824795 0.0006362454 11
1723032838079 0.0006291768 12
1723032849917 0.0006221082 13
1723032862976 0.0006150395999999999 14
1723032878163 0.000607971 15
1723032888896 0.0006009024 16
1723032901820 0.0005938338 17
1723032915168 0.0005867652000000001 18
1723032928498 0.0005796966000000001 19
1723032943047 0.000572628 20
1723032956616 0.0005655594 21
1723032968648 0.0005584908 22
1723032980585 0.0005514222 23
1723032994946 0.0005443536 24
1723033006013 0.000537285 25
1723033018575 0.0005302164 26
1723033030496 0.0005231478 27
1723033043133 0.0005160792 28
1723033058565 0.0005090105999999999 29
1723033070730 0.000501942 30
1723033083406 0.0004948734 31
1723033094607 0.00048780479999999994 32
1723033109163 0.00048073619999999996 33
1723033124712 0.0004736675999999999 34
1723033136043 0.000466599 35
1723033150293 0.00045953040000000007 36
1723033162998 0.00045246180000000003 37
1723033175188 0.0004453932 38
1723033187768 0.0004383246 39
1723033200811 0.000431256 40
1723033215686 0.00042418740000000005 41
1723033229084 0.0004171188 42
1723033241943 0.00041005020000000003 43
1723033255911 0.0004029816 44
1723033266050 0.000395913 45
1723033280555 0.0003888444000000001 46
1723033293670 0.00038177580000000005 47
1723033306133 0.0003747072 48
1723033320312 0.00036763860000000003 49
1723033334054 0.00036057 50
1723033346004 0.0003535014 51
1723033358160 0.0003464328 52
1723033370275 0.0003393642 53
1723033382206 0.00033229559999999996 54
1723033397303 0.000325227 55
1723033408142 0.00031815839999999995 56
1723033421015 0.0003110898 57
1723033431815 0.00030402120000000004 58
1723033444885 0.00029695260000000005 59
1723033457234 0.000289884 60
1723033472949 0.0002828154 61
1723033485272 0.0002757468 62
1723033498069 0.0002686782 63
1723033512018 0.0002616096 64
1723033522666 0.000254541 65
1723033535664 0.00024747239999999997 66
1723033547066 0.00024040379999999996 67
1723033560832 0.00023333519999999997 68
1723033571332 0.00022626660000000005 69
1723033584423 0.00021919800000000004 70
1723033597350 0.00021212940000000003 71
1723033608257 0.00020506080000000002 72
1723033621743 0.00019799220000000004 73
1723033633100 0.00019092360000000003 74
1723033647653 0.000183855 75
1723033658578 0.00017678639999999999 76
1723033672769 0.0001697178 77
1723033684748 0.0001626492 78
1723033696078 0.00015558059999999999 79
1723033708949 0.00014851199999999998 80
1723033720441 0.00014144339999999997 81
1723033733804 0.00013437480000000004 82
1723033748026 0.00012730620000000003 83
1723033759712 0.00012023760000000004 84
1723033772419 0.00011316900000000003 85
1723033785207 0.0001061004 86
1723033800634 9.903180000000001e-05 87
1723033813463 9.19632e-05 88
1723033828410 8.489459999999999e-05 89
1723033844551 7.782599999999998e-05 90
1723033860211 7.075739999999997e-05 91
1723033870590 6.368879999999998e-05 92
1723033885402 5.6620199999999956e-05 93
1723033895681 4.9551600000000036e-05 94
1723033907553 4.2483000000000034e-05 95
1723033922797 3.541440000000003e-05 96
1723033934226 2.834580000000002e-05 97
1723033947978 2.1277200000000014e-05 98
1723033960054 1.4208600000000007e-05 99
```

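From step 3 onward the lr/pg0 values above fall by a constant 7.0686e-06 per step, which fits a linear decay of the form lr(e) = lr0 * ((1 - e/E) * (1 - lrf) + lrf). The minimal sketch below assumes lr0 = 0.000714, lrf = 0.01 and E = 100 epochs; these numbers were back-solved from the logged values (for example 0.000714 * 0.505 = 0.00036057 at step 50), not read from args.yaml. Steps 0 to 2 rise towards the peak and look like a warmup phase, which this sketch does not model. The function name `linear_lr` is hypothetical.

```python
import math


def linear_lr(epoch: int, lr0: float = 0.000714, lrf: float = 0.01, epochs: int = 100) -> float:
    """Learning rate after `epoch` under linear decay from lr0 towards lr0 * lrf.

    lr0, lrf and epochs are ASSUMED values back-solved from the logged lr/pg0
    series; they were not read from args.yaml. Warmup (steps 0-2) is not modelled.
    """
    factor = (1 - epoch / epochs) * (1.0 - lrf) + lrf
    return lr0 * factor


if __name__ == "__main__":
    # (step, logged value) pairs copied from the lr/pg0 rows above.
    logged = [(3, 0.0006927942), (50, 0.00036057), (99, 1.4208600000000007e-05)]
    for step, value in logged:
        print(step, value, math.isclose(linear_lr(step), value, rel_tol=1e-9))
```

Running the script prints `True` for each sampled step. If args.yaml records different `lr0`/`lrf` values, those should take precedence over the back-solved defaults.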
mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/metrics/lr/pg1

ADDED

@@ -0,0 +1,100 @@

1723032685507 0.00023156756756756758 0
1723032697369 0.0004649188486486486 1
1723032710271 0.0006935577297297297 2
1723032723758 0.0006927942 3
1723032737868 0.0006857256 4
1723032749032 0.000678657 5
1723032762631 0.0006715884 6
1723032773871 0.0006645198 7
1723032786193 0.0006574512 8
1723032800400 0.0006503826 9
1723032814031 0.000643314 10
1723032824795 0.0006362454 11
1723032838079 0.0006291768 12
1723032849917 0.0006221082 13
1723032862976 0.0006150395999999999 14
1723032878163 0.000607971 15
1723032888896 0.0006009024 16
1723032901820 0.0005938338 17
1723032915168 0.0005867652000000001 18
1723032928498 0.0005796966000000001 19
1723032943047 0.000572628 20
1723032956616 0.0005655594 21
1723032968648 0.0005584908 22
1723032980585 0.0005514222 23
1723032994946 0.0005443536 24
1723033006013 0.000537285 25
1723033018575 0.0005302164 26
1723033030496 0.0005231478 27
1723033043133 0.0005160792 28
1723033058565 0.0005090105999999999 29
1723033070730 0.000501942 30
1723033083406 0.0004948734 31
1723033094607 0.00048780479999999994 32
1723033109163 0.00048073619999999996 33
1723033124712 0.0004736675999999999 34
1723033136043 0.000466599 35
1723033150293 0.00045953040000000007 36
1723033162998 0.00045246180000000003 37
1723033175188 0.0004453932 38
1723033187768 0.0004383246 39
1723033200811 0.000431256 40
1723033215686 0.00042418740000000005 41
1723033229084 0.0004171188 42
1723033241943 0.00041005020000000003 43
1723033255911 0.0004029816 44
1723033266050 0.000395913 45
1723033280555 0.0003888444000000001 46
1723033293670 0.00038177580000000005 47
1723033306133 0.0003747072 48
1723033320312 0.00036763860000000003 49
1723033334054 0.00036057 50
1723033346004 0.0003535014 51
1723033358160 0.0003464328 52
1723033370275 0.0003393642 53
1723033382206 0.00033229559999999996 54
1723033397303 0.000325227 55
1723033408142 0.00031815839999999995 56
1723033421015 0.0003110898 57
1723033431815 0.00030402120000000004 58
1723033444885 0.00029695260000000005 59
1723033457234 0.000289884 60
1723033472949 0.0002828154 61
1723033485272 0.0002757468 62
1723033498069 0.0002686782 63
1723033512018 0.0002616096 64
1723033522666 0.000254541 65
1723033535664 0.00024747239999999997 66
1723033547066 0.00024040379999999996 67
1723033560832 0.00023333519999999997 68
1723033571332 0.00022626660000000005 69
1723033584423 0.00021919800000000004 70
1723033597350 0.00021212940000000003 71
1723033608257 0.00020506080000000002 72
1723033621743 0.00019799220000000004 73
1723033633100 0.00019092360000000003 74
1723033647653 0.000183855 75
1723033658578 0.00017678639999999999 76
1723033672769 0.0001697178 77
1723033684748 0.0001626492 78
1723033696078 0.00015558059999999999 79
1723033708949 0.00014851199999999998 80
1723033720441 0.00014144339999999997 81
1723033733804 0.00013437480000000004 82
1723033748026 0.00012730620000000003 83
1723033759712 0.00012023760000000004 84
1723033772419 0.00011316900000000003 85
1723033785207 0.0001061004 86
1723033800634 9.903180000000001e-05 87
1723033813463 9.19632e-05 88
1723033828410 8.489459999999999e-05 89
1723033844551 7.782599999999998e-05 90
1723033860211 7.075739999999997e-05 91
1723033870590 6.368879999999998e-05 92
1723033885402 5.6620199999999956e-05 93
1723033895681 4.9551600000000036e-05 94
1723033907553 4.2483000000000034e-05 95
1723033922797 3.541440000000003e-05 96
1723033934226 2.834580000000002e-05 97
1723033947978 2.1277200000000014e-05 98
1723033960054 1.4208600000000007e-05 99

mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/metrics/lr/pg2

ADDED

@@ -0,0 +1,100 @@

1723032685507 0.00023156756756756758 0
1723032697369 0.0004649188486486486 1
1723032710271 0.0006935577297297297 2
1723032723758 0.0006927942 3
1723032737868 0.0006857256 4
1723032749032 0.000678657 5
1723032762631 0.0006715884 6
1723032773871 0.0006645198 7
1723032786193 0.0006574512 8
1723032800400 0.0006503826 9
1723032814031 0.000643314 10
1723032824795 0.0006362454 11
1723032838079 0.0006291768 12
1723032849917 0.0006221082 13
1723032862976 0.0006150395999999999 14
1723032878163 0.000607971 15
1723032888896 0.0006009024 16
1723032901820 0.0005938338 17
1723032915168 0.0005867652000000001 18
1723032928498 0.0005796966000000001 19
1723032943047 0.000572628 20
1723032956616 0.0005655594 21
1723032968648 0.0005584908 22
1723032980585 0.0005514222 23
1723032994946 0.0005443536 24
1723033006013 0.000537285 25
1723033018575 0.0005302164 26
1723033030496 0.0005231478 27
1723033043133 0.0005160792 28
1723033058565 0.0005090105999999999 29
1723033070730 0.000501942 30
1723033083406 0.0004948734 31
1723033094607 0.00048780479999999994 32
1723033109163 0.00048073619999999996 33
1723033124712 0.0004736675999999999 34
1723033136043 0.000466599 35
1723033150293 0.00045953040000000007 36
1723033162998 0.00045246180000000003 37
1723033175188 0.0004453932 38
1723033187768 0.0004383246 39
1723033200811 0.000431256 40
1723033215686 0.00042418740000000005 41
1723033229084 0.0004171188 42
1723033241943 0.00041005020000000003 43
1723033255911 0.0004029816 44
1723033266050 0.000395913 45
1723033280555 0.0003888444000000001 46
1723033293670 0.00038177580000000005 47
1723033306133 0.0003747072 48
1723033320312 0.00036763860000000003 49
1723033334054 0.00036057 50
1723033346004 0.0003535014 51
1723033358160 0.0003464328 52
1723033370275 0.0003393642 53
1723033382206 0.00033229559999999996 54
1723033397303 0.000325227 55
1723033408142 0.00031815839999999995 56
1723033421015 0.0003110898 57
1723033431815 0.00030402120000000004 58
1723033444885 0.00029695260000000005 59
1723033457234 0.000289884 60
1723033472949 0.0002828154 61
1723033485272 0.0002757468 62
1723033498069 0.0002686782 63
1723033512018 0.0002616096 64
1723033522666 0.000254541 65
1723033535664 0.00024747239999999997 66
1723033547066 0.00024040379999999996 67
1723033560832 0.00023333519999999997 68
1723033571332 0.00022626660000000005 69
1723033584423 0.00021919800000000004 70
1723033597350 0.00021212940000000003 71
1723033608257 0.00020506080000000002 72
1723033621743 0.00019799220000000004 73
1723033633100 0.00019092360000000003 74
1723033647653 0.000183855 75
1723033658578 0.00017678639999999999 76
1723033672769 0.0001697178 77
1723033684748 0.0001626492 78
1723033696078 0.00015558059999999999 79
1723033708949 0.00014851199999999998 80
1723033720441 0.00014144339999999997 81
1723033733804 0.00013437480000000004 82
1723033748026 0.00012730620000000003 83
1723033759712 0.00012023760000000004 84
1723033772419 0.00011316900000000003 85
1723033785207 0.0001061004 86
1723033800634 9.903180000000001e-05 87
1723033813463 9.19632e-05 88
1723033828410 8.489459999999999e-05 89
1723033844551 7.782599999999998e-05 90
1723033860211 7.075739999999997e-05 91
1723033870590 6.368879999999998e-05 92
1723033885402 5.6620199999999956e-05 93
1723033895681 4.9551600000000036e-05 94
1723033907553 4.2483000000000034e-05 95
1723033922797 3.541440000000003e-05 96
1723033934226 2.834580000000002e-05 97
1723033947978 2.1277200000000014e-05 98
1723033960054 1.4208600000000007e-05 99

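Each metric file in this run stores one record per line as `timestamp value step`, where the 13-digit timestamp is Unix epoch time in milliseconds. A minimal Python sketch for loading such files follows, assuming exactly that three-field layout as shown in the rows above. `MetricPoint`, `parse_metric_lines` and `read_metric_file` are hypothetical helpers, not part of the MLflow API.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Iterable, List


@dataclass(frozen=True)
class MetricPoint:
    timestamp_ms: int  # Unix epoch time in milliseconds
    value: float
    step: int


def parse_metric_lines(lines: Iterable[str]) -> List[MetricPoint]:
    """Parse 'timestamp value step' records, skipping blank lines."""
    points = []
    for raw in lines:
        fields = raw.split()
        if not fields:
            continue  # blank line
        timestamp, value, step = fields
        points.append(MetricPoint(int(timestamp), float(value), int(step)))
    return points


def read_metric_file(path: str) -> List[MetricPoint]:
    """Read one metric file, e.g. .../metrics/lr/pg1 (hypothetical helper)."""
    return parse_metric_lines(Path(path).read_text().splitlines())


if __name__ == "__main__":
    # Two records copied from the lr/pg1 listing above.
    sample = [
        "1723032685507 0.00023156756756756758 0",
        "1723032697369 0.0004649188486486486 1",
    ]
    for point in parse_metric_lines(sample):
        print(point.step, point.value)
```

Because `MetricPoint` is a frozen dataclass, two parsed files can be compared with `==`. For example, reading lr/pg1 and lr/pg2 this way and comparing the lists is a quick check that both parameter groups followed the same schedule, as the two listings above do.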
mlflow/119284362706535067/e432b13dca424ce6b8ad60074d948b37/metrics/metrics/accuracy_top1

ADDED

@@ -0,0 +1,101 @@

1723032686000 0.83099 0
1723032697772 0.90141 1
1723032710672 0.91549 2
1723032724105 0.9507 3
1723032738222 0.92958 4
1723032749354 0.94366 5
1723032762915 0.94366 6
1723032774217 0.95775 7
1723032786482 0.95775 8
1723032800782 0.96479 9
1723032814365 0.94366 10
1723032825063 0.95775 11
1723032838392 0.95775 12
1723032850239 0.93662 13
1723032863334 0.9507 14
1723032878515 0.93662 15
1723032889158 0.94366 16
1723032902384 0.94366 17
1723032915419 0.94366 18
1723032928906 0.94366 19
1723032943391 0.94366 20
1723032956892 0.9507 21
1723032969076 0.9507 22
1723032980933 0.93662 23
1723032995246 0.94366 24
1723033006330 0.95775 25
1723033018914 0.95775 26
1723033030812 0.95775 27
1723033043505 0.96479 28
1723033058991 0.9507 29
1723033071023 0.94366 30
1723033083687 0.95775 31
1723033094971 0.94366 32
1723033109542 0.94366 33
1723033125096 0.94366 34
1723033136380 0.96479 35
1723033150689 0.96479 36
1723033163348 0.95775 37
1723033175514 0.97887 38
1723033188061 0.95775 39
1723033201252 0.96479 40
1723033215947 0.96479 41
1723033229396 0.96479 42
1723033242252 0.95775 43
1723033256258 0.96479 44
1723033266602 0.96479 45
1723033281001 0.98592 46
1723033293966 0.96479 47
1723033306450 0.9507 48
1723033320644 0.9507 49
1723033334362 0.9507 50
1723033346314 0.9507 51
1723033358444 0.9507 52
1723033370711 0.94366 53
1723033382564 0.9507 54
1723033397725 0.96479 55
1723033408493 0.9507 56
1723033421325 0.9507 57
1723033432173 0.9507 58
1723033445265 0.94366 59
1723033457507 0.9507 60
1723033473299 0.94366 61
1723033485624 0.94366 62
1723033498366 0.93662 63
1723033512341 0.9507 64
1723033523003 0.95775 65
1723033535954 0.95775 66
1723033547386 0.95775 67
1723033561178 0.92958 68
1723033571610 0.9507 69