Update README.md


README.md


|

@@ -22,37 +22,48 @@ GOVERNING TERMS: Use of the models listed above are governed by the [Creative Co

|

|

| 22 |

|

| 23 |

## Scores on Reasoning Benchmarks

|

| 24 |

|

| 25 |

-

|

| 26 |

-

| :--- | :--- | :--- | :--- | :--- | :--- | :--- | :--- | :--- | :--- | :--- |

|

| 27 |

-

| **1.5B**| - | 31.6 | 47.5 | 5.5 | 28.6 | 2.2 | 55.5 | 45.6 | 31.5 | 50.6 |

|

| 28 |

-

| **7B** | 54.7 | 61.1 | 71.9 | 8.3 | 63.3 | 16.2 | 84.7 | 78.2 | 63.5 | 80.3 |

|

| 29 |

-

| **14B** | 60.9 | 71.6 | 77.5 | 10.1 | 67.8 | 23.5 | 87.8 | 82.0 | 71.2 | 87.7 |

|

| 30 |

-

| **32B** | 64.3 | 73.1 | 80.0 | 11.9 | 70.2 | 28.5 | 89.2 | 84.0 | 73.8 | 88.0 |

|

| 31 |

|

| 32 |

-

|

| 33 |

|

| 34 |

-

| **Model** | **

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 35 |

| :--- | :--- | :--- | :--- |

|

| 36 |

| **1.5B** | | | |

|

| 37 |

| **AIME24** | 55.5 | 76.7 | 76.7 |

|

| 38 |

| **AIME25** | 45.6 | 70.0 | 70.0 |

|

| 39 |

| **HMMT Feb 25** | 31.5 | 46.7 | 53.3 |

|

| 40 |

-

| **BRUNO25** | 50.6 | 70.0 | 73.3 |

|

| 41 |

| **7B** | | | |

|

| 42 |

| **AIME24** | 84.7 | 93.3 | 93.3 |

|

| 43 |

| **AIME25** | 78.2 | 86.7 | 93.3 |

|

| 44 |

| **HMMT Feb 25** | 63.5 | 83.3 | 90.0 |

|

| 45 |

-

| **

|

| 46 |

| **14B** | | | |

|

| 47 |

| **AIME24** | 87.8 | 93.3 | 93.3 |

|

| 48 |

| **AIME25** | 82.0 | 90.0 | 90.0 |

|

| 49 |

| **HMMT Feb 25** | 71.2 | 86.7 | 93.3 |

|

| 50 |

-

| **

|

| 51 |

| **32B** | | | |

|

| 52 |

| **AIME24** | 89.2 | 93.3 | 93.3 |

|

| 53 |

| **AIME25** | 84.0 | 90.0 | 93.3 |

|

| 54 |

| **HMMT Feb 25** | 73.8 | 86.7 | 96.7 |

|

| 55 |

-

| **

|

|

|

|

| 56 |

|

| 57 |

|

| 58 |

## How to use the models?

|

|

@@ -80,8 +91,7 @@ You must use ```python for just the final solution code block with the following

|

|

| 80 |

"""

|

| 81 |

|

| 82 |

# Math generation prompt

|

| 83 |

-

# prompt = """

|

| 84 |

-

# Solve the following math problem. Make sure to put the answer (and only answer) inside \boxed{{}}.

|

| 85 |

#

|

| 86 |

# {user}

|

| 87 |

# """

|

|

@@ -155,9 +165,9 @@ This model is intended for developers and researchers who work on competitive ma

|

|

| 155 |

Huggingface [07/16/2025] via https://huggingface.co/nvidia/OpenReasoning-Nemotron-14B/ <br>

|

| 156 |

|

| 157 |

## Reference(s):

|

| 158 |

-

[2504.01943] OpenCodeReasoning: Advancing Data Distillation for Competitive Coding

|

| 159 |

-

[2504.01943] OpenCodeReasoning: Advancing Data Distillation for Competitive Coding

|

| 160 |

-

[2504.16891] AIMO-2 Winning Solution: Building State-of-the-Art Mathematical Reasoning Models with OpenMathReasoning dataset

|

| 161 |

<br>

|

| 162 |

|

| 163 |

## Model Architecture: <br>

|

|

|

|

| 22 |

|

| 23 |

## Scores on Reasoning Benchmarks

|

| 24 |

|

| 25 |

+

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 26 |

|

| 27 |

+

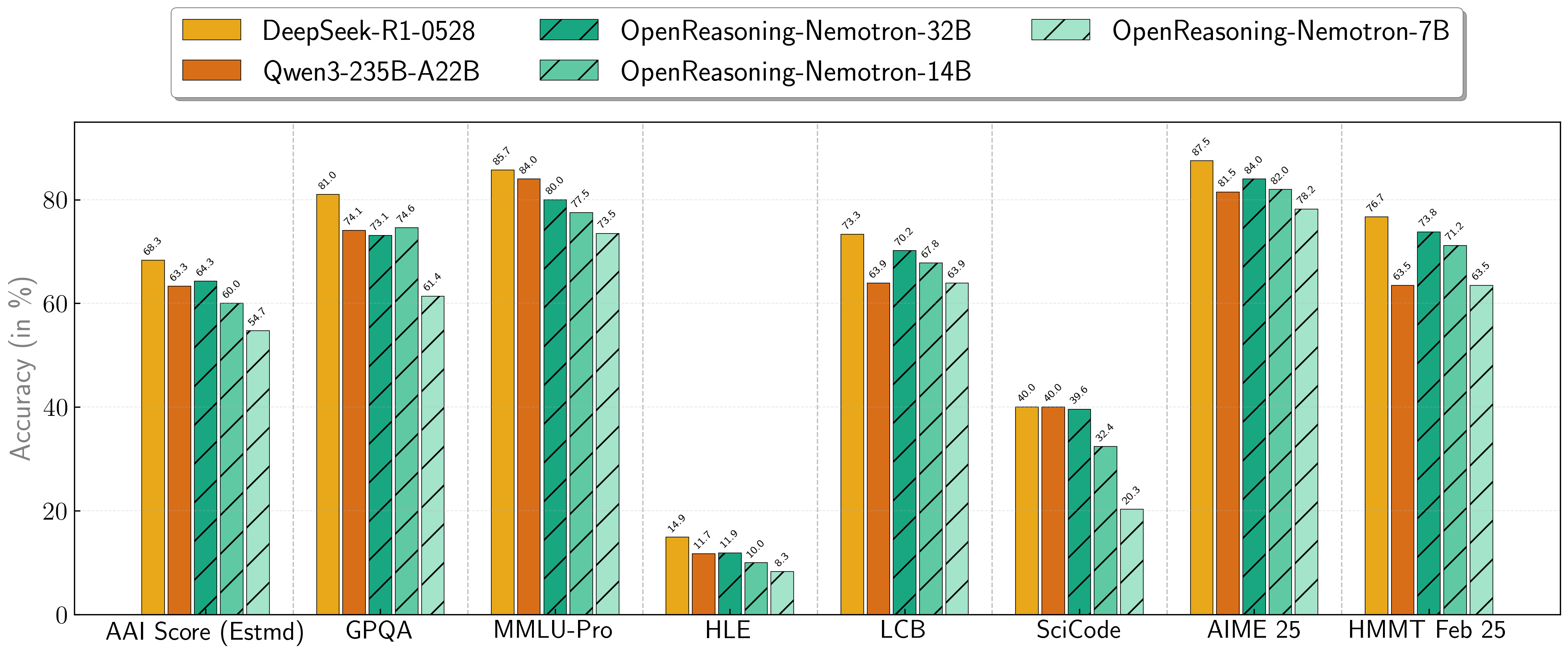

Our models demonstrate exceptional performance across a suite of challenging reasoning benchmarks. The 7B, 14B, and 32B models consistently set new state-of-the-art records for their size classes.

|

| 28 |

|

| 29 |

+

| **Model** | **AritificalAnalysisIndex*** | **GPQA** | **MMLU-PRO** | **HLE** | **LiveCodeBench*** | **SciCode** | **AIME24** | **AIME25** | **HMMT FEB 25** |

|

| 30 |

+

| :--- | :--- | :--- | :--- | :--- | :--- | :--- | :--- | :--- | :--- |

|

| 31 |

+

| **1.5B**| 31.0 | 31.6 | 47.5 | 5.5 | 28.6 | 2.2 | 55.5 | 45.6 | 31.5 |

|

| 32 |

+

| **7B** | 54.7 | 61.1 | 71.9 | 8.3 | 63.3 | 16.2 | 84.7 | 78.2 | 63.5 |

|

| 33 |

+

| **14B** | 60.9 | 71.6 | 77.5 | 10.1 | 67.8 | 23.5 | 87.8 | 82.0 | 71.2 |

|

| 34 |

+

| **32B** | 64.3 | 73.1 | 80.0 | 11.9 | 70.2 | 28.5 | 89.2 | 84.0 | 73.8 |

|

| 35 |

+

|

| 36 |

+

\* This is our estimation of the Artificial Analysis Intelligence Index, not an official score.

|

| 37 |

+

|

| 38 |

+

\* LiveCodeBench version 6, date range 2408-2505.

|

| 39 |

+

|

| 40 |

+

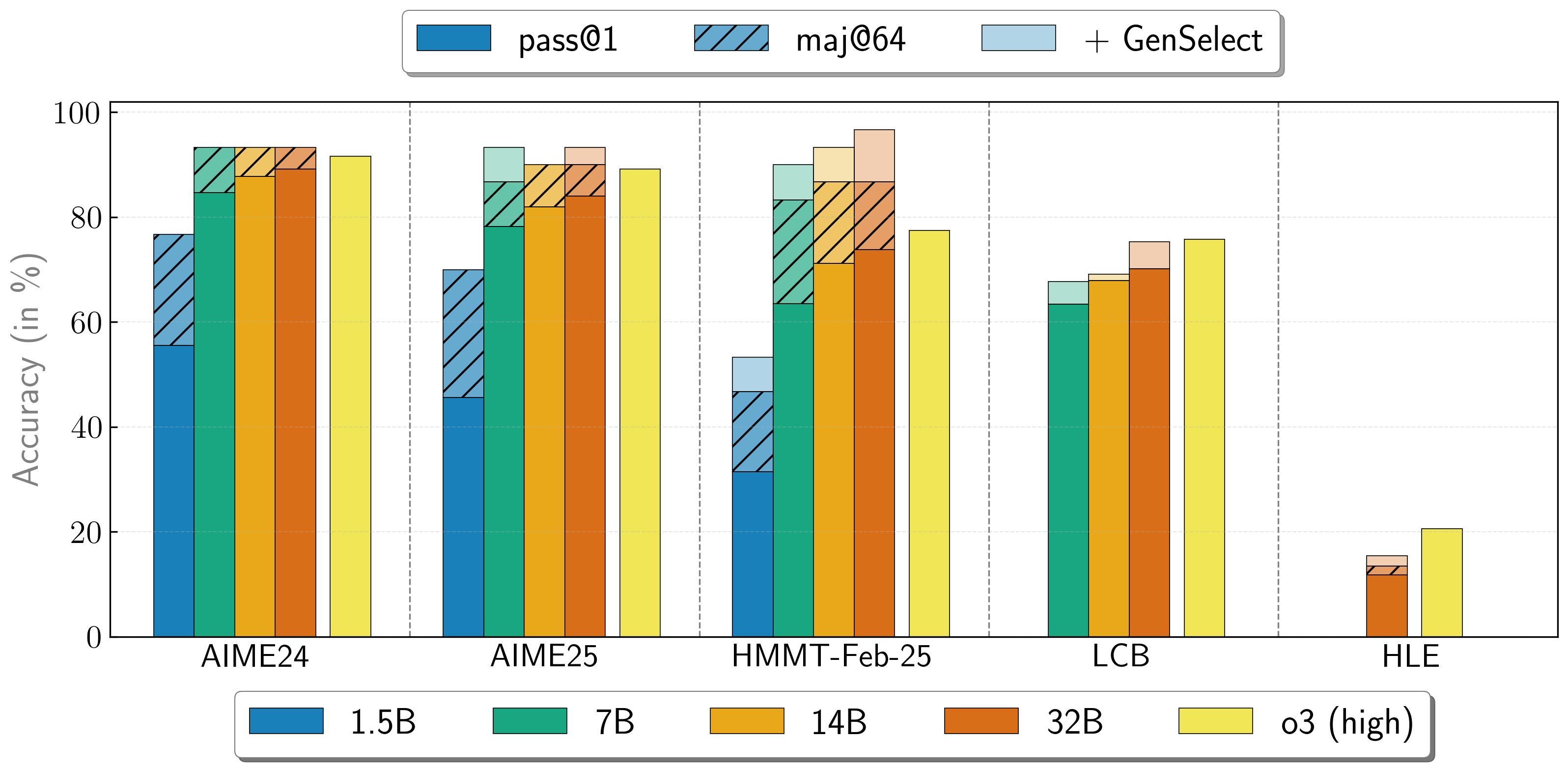

## Combining the work of multiple agents

|

| 41 |

+

OpenReasoning-Nemotron models can be used in a "heavy" mode by starting multiple parallel generations and combining them together via [generative solution selection (GenSelect)](https://arxiv.org/abs/2504.16891). To add this "skill" we follow the original GenSelect training pipeline except we do not train on the selection summary but use the full reasoning trace of DeepSeek R1 0528 671B instead. We only train models to select the best solution for math problems but surprisingly find that this capability directly generalizes to code and science questions! With this "heavy" GenSelect inference mode, OpenReasoning-Nemotron-32B model surpasses O3 (High) on math and coding benchmarks.

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

|

| 45 |

+

| **Model** | **Pass@1 (Avg@64)** | **Majority@64** | **GenSelect** |

|

| 46 |

| :--- | :--- | :--- | :--- |

|

| 47 |

| **1.5B** | | | |

|

| 48 |

| **AIME24** | 55.5 | 76.7 | 76.7 |

|

| 49 |

| **AIME25** | 45.6 | 70.0 | 70.0 |

|

| 50 |

| **HMMT Feb 25** | 31.5 | 46.7 | 53.3 |

|

|

|

|

| 51 |

| **7B** | | | |

|

| 52 |

| **AIME24** | 84.7 | 93.3 | 93.3 |

|

| 53 |

| **AIME25** | 78.2 | 86.7 | 93.3 |

|

| 54 |

| **HMMT Feb 25** | 63.5 | 83.3 | 90.0 |

|

| 55 |

+

| **LCB v6 2408-2505** | 63.4 | n/a | 67.7 |

|

| 56 |

| **14B** | | | |

|

| 57 |

| **AIME24** | 87.8 | 93.3 | 93.3 |

|

| 58 |

| **AIME25** | 82.0 | 90.0 | 90.0 |

|

| 59 |

| **HMMT Feb 25** | 71.2 | 86.7 | 93.3 |

|

| 60 |

+

| **LCB v6 2408-2505** | 67.9 | n/a | 69.1 |

|

| 61 |

| **32B** | | | |

|

| 62 |

| **AIME24** | 89.2 | 93.3 | 93.3 |

|

| 63 |

| **AIME25** | 84.0 | 90.0 | 93.3 |

|

| 64 |

| **HMMT Feb 25** | 73.8 | 86.7 | 96.7 |

|

| 65 |

+

| **LCB v6 2408-2505** | 70.2 | n/a | 75.3 |

|

| 66 |

+

| **HLE** | 11.8 | 13.4 | 15.5 |

|

| 67 |

|

| 68 |

|

| 69 |

## How to use the models?

|

|

|

|

| 91 |

"""

|

| 92 |

|

| 93 |

# Math generation prompt

|

| 94 |

+

# prompt = """Solve the following math problem. Make sure to put the answer (and only answer) inside \\boxed{}.

|

|

|

|

| 95 |

#

|

| 96 |

# {user}

|

| 97 |

# """

|

|

|

|

| 165 |

Huggingface [07/16/2025] via https://huggingface.co/nvidia/OpenReasoning-Nemotron-14B/ <br>

|

| 166 |

|

| 167 |

## Reference(s):

|

| 168 |

+

* [2504.01943] OpenCodeReasoning: Advancing Data Distillation for Competitive Coding

|

| 169 |

+

* [2504.01943] OpenCodeReasoning: Advancing Data Distillation for Competitive Coding

|

| 170 |

+

* [2504.16891] AIMO-2 Winning Solution: Building State-of-the-Art Mathematical Reasoning Models with OpenMathReasoning dataset

|

| 171 |

<br>

|

| 172 |

|

| 173 |

## Model Architecture: <br>

|
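This commit touches two details that are easy to get subtly wrong when reproducing the reported numbers: the escaping in the commented-out math prompt, and the sampling metrics in the GenSelect table header (Pass@1 (Avg@64) and Majority@64). The Python sketch below illustrates both. It assumes that the template is filled with `str.format(user=...)` and that a sample counts as correct when the contents of its last `\boxed{...}` exactly match a reference string. In an ordinary Python string literal, `\b` is the backspace escape, and `str.format` treats a bare `{}` as a positional field, so a template meant for `.format` needs both the backslash and the braces escaped. The names `MATH_PROMPT`, `OLD_FRAGMENT`, `NEW_FRAGMENT`, `build_math_prompt`, `extract_boxed`, `pass_at_1_avg` and `majority_at_k` are hypothetical. They do not come from the README or from the authors' evaluation code.

```python
from collections import Counter
from typing import Optional, Sequence

# Hypothetical template meant for str.format(user=...). The backslash is escaped so
# Python does not read "\b" as a backspace, and "{{}}" renders as a literal "{}".
MATH_PROMPT = (
    "Solve the following math problem. Make sure to put the answer "
    "(and only answer) inside \\boxed{{}}.\n\n{user}\n"
)

# The two committed variants of the relevant fragment, for comparison:
OLD_FRAGMENT = "inside \boxed{{}}.\n\n{user}"  # "\b" here is a backspace character
NEW_FRAGMENT = "inside \\boxed{}.\n\n{user}"   # bare "{}" is a positional field


def build_math_prompt(user: str) -> str:
    """Fill the hypothetical template with a problem statement."""
    return MATH_PROMPT.format(user=user)


def extract_boxed(text: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in text, or None.

    Braces are matched by depth so nested LaTeX such as \\frac{1}{2} survives.
    """
    start = text.rfind("\\boxed{")
    if start == -1:
        return None
    begin = start + len("\\boxed{")
    depth = 1
    for pos in range(begin, len(text)):
        if text[pos] == "{":
            depth += 1
        elif text[pos] == "}":
            depth -= 1
            if depth == 0:
                return text[begin:pos].strip()
    return None  # unbalanced braces: treat as no answer


def pass_at_1_avg(samples: Sequence[str], reference: str) -> float:
    """Pass@1 averaged over all samples: the fraction of samples that are correct."""
    if not samples:
        return 0.0
    hits = sum(extract_boxed(s) == reference for s in samples)
    return hits / len(samples)


def majority_at_k(samples: Sequence[str], reference: str) -> float:
    """1.0 if the most frequent extracted answer equals the reference, else 0.0."""
    answers = [a for a in map(extract_boxed, samples) if a is not None]
    if not answers:
        return 0.0
    top_answer, _ = Counter(answers).most_common(1)[0]
    return float(top_answer == reference)


if __name__ == "__main__":
    print(build_math_prompt("What is 6 * 7?"))
    print(repr(OLD_FRAGMENT.format(user="x")))  # contains '\x08oxed{}'
    try:
        NEW_FRAGMENT.format(user="x")
    except IndexError as err:
        print("IndexError:", err)

    samples = [
        "... so the answer is \\boxed{42}.",
        "... therefore \\boxed{42}",
        "... giving \\boxed{41}",
        "I could not finish.",
    ]
    print(pass_at_1_avg(samples, "42"))  # 0.5
    print(majority_at_k(samples, "42"))  # 1.0
```

A benchmark-level score would then be the mean of these per-problem values over all problems, with 64 samples per problem to match the table header. Exact string comparison is a deliberate simplification. Practical math graders typically check mathematical equivalence, so that `0.5` and `\frac{1}{2}` are both accepted.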