---
license: creativeml-openrail-m
tags:
- keras
- diffusers
- stable-diffusion
- text-to-image
- diffusion-models-class
- keras-sprint
- keras-dreambooth
- scifi
inference: true
widget:
- text: a drawing of drawbayc monkey as a turtle
---

# KerasCV Stable Diffusion in Diffusers 🧨🤗

DreamBooth model for the `drawbayc monkey` concept, trained by nielsgl on the `nielsgl/bayc-tiny` dataset, with images taken from this [Kaggle dataset](https://www.kaggle.com/datasets/stanleyjzheng/bored-apes-yacht-club).

It can be used by modifying the `instance_prompt`: **a drawing of drawbayc monkey**

## Description

The pipeline contained in this repository was created using a modified version of [this Space](https://huggingface.co/spaces/sayakpaul/convert-kerascv-sd-diffusers) for StableDiffusionV2 from KerasCV. The purpose is to convert the KerasCV Stable Diffusion weights in a way that is compatible with [Diffusers](https://github.com/huggingface/diffusers). This allows users to fine-tune using KerasCV and then use the fine-tuned weights in Diffusers, taking advantage of its nifty features (like [schedulers](https://huggingface.co/docs/diffusers/main/en/using-diffusers/schedulers), [fast attention](https://huggingface.co/docs/diffusers/optimization/fp16), etc.).
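
As an illustration of the scheduler feature mentioned above, the following is a minimal sketch of swapping the pipeline's scheduler after loading. The choice of `DPMSolverMultistepScheduler`, the step count of 25, and the helper name `generate` are assumptions made for this example; they are not settings documented for this model.

```python
REPO_ID = "nielsgl/dreambooth-bored-ape"  # repository name used in the Usage section
PROMPT = "a drawing of drawbayc monkey dressed as an astronaut"


def generate(prompt: str = PROMPT, steps: int = 25):
    """Generate one image after swapping in a different scheduler (illustrative helper)."""
    # Imported lazily so this module can be loaded without diffusers installed.
    from diffusers import DPMSolverMultistepScheduler, StableDiffusionPipeline

    pipeline = StableDiffusionPipeline.from_pretrained(REPO_ID)
    # Build the new scheduler from the existing scheduler's config so it
    # keeps the noise-schedule settings the model was converted with.
    pipeline.scheduler = DPMSolverMultistepScheduler.from_config(
        pipeline.scheduler.config
    )
    return pipeline(prompt, num_inference_steps=steps).images[0]


# Example (downloads the weights on first use):
#   generate().save("astronaut.png")
```

Any other scheduler shipped with Diffusers can be substituted in the same way, since `from_config` reuses the configuration of the scheduler already attached to the pipeline.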

This model was created as part of the Keras DreamBooth Sprint 🔥. Visit the [organisation page](https://huggingface.co/keras-dreambooth) for instructions on how to take part!

## Examples

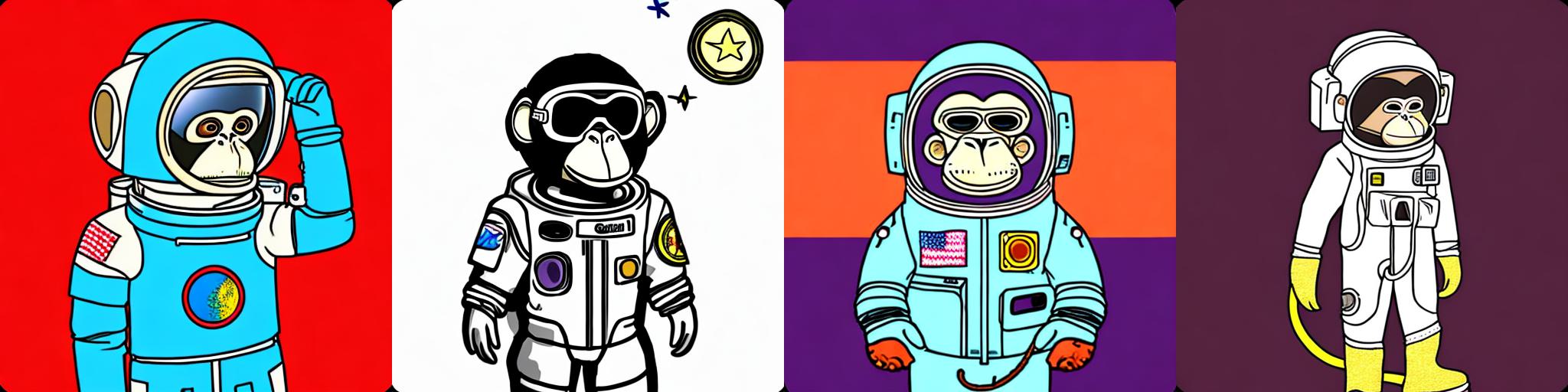

> A drawing of drawbayc monkey dressed as an astronaut

> A drawing of drawbayc monkey dressed as the pope

## Usage

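```python
from diffusers import StableDiffusionPipeline

# Load the converted DreamBooth pipeline from the Hugging Face Hub
pipeline = StableDiffusionPipeline.from_pretrained('nielsgl/dreambooth-bored-ape')

# The pipeline needs a prompt; include the instance prompt "drawbayc monkey"
image = pipeline('a drawing of drawbayc monkey as a turtle').images[0]
image
```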

## Training hyperparameters

The following hyperparameters were used during training:

| Hyperparameters | Value |
| :-- | :-- |
| name | RMSprop |
| weight_decay | None |
| clipnorm | None |
| global_clipnorm | None |
| clipvalue | None |
| use_ema | False |
| ema_momentum | 0.99 |
| ema_overwrite_frequency | 100 |
| jit_compile | True |
| is_legacy_optimizer | False |
| learning_rate | 0.0010000000474974513 |
| rho | 0.9 |
| momentum | 0.0 |
| epsilon | 1e-07 |
| centered | False |
| training_precision | float32 |
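
For readers who want to reproduce the optimizer setup, the table can be expressed as keyword arguments for Keras' `RMSprop`. This is a sketch, not the original training script. It assumes a TensorFlow release whose non-legacy optimizer accepts `jit_compile` and the EMA arguments (the `is_legacy_optimizer: False` row suggests this), and that the logged learning rate is simply the float32 representation of `1e-3`. The names `RMSPROP_KWARGS` and `build_optimizer` are hypothetical.

```python
# Values copied from the hyperparameter table. Entries logged as None
# (weight_decay, clipnorm, global_clipnorm, clipvalue) are left out so the
# optimizer falls back to its defaults for them.
RMSPROP_KWARGS = {
    "learning_rate": 1e-3,  # logged as 0.0010000000474974513 (float32 rounding)
    "rho": 0.9,
    "momentum": 0.0,
    "epsilon": 1e-07,
    "centered": False,
    "use_ema": False,
    "ema_momentum": 0.99,
    "ema_overwrite_frequency": 100,
    "jit_compile": True,
}


def build_optimizer():
    """Recreate the optimizer from the table (requires TensorFlow)."""
    # Imported lazily so the configuration can be inspected without TensorFlow.
    from tensorflow import keras

    return keras.optimizers.RMSprop(**RMSPROP_KWARGS)
```

Newer Keras versions may reject some of these arguments (for example `jit_compile`), in which case the corresponding keys would need to be dropped.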