Adding modes, graphs and metadata.

This view is limited to 50 files because it contains too many changes.

- README.md +92 -0

- model_card/layer_images/layer_0_attention_output_dense.png +0 -0

- model_card/layer_images/layer_0_attention_self_key.png +0 -0

- model_card/layer_images/layer_0_attention_self_query.png +0 -0

- model_card/layer_images/layer_0_attention_self_value.png +0 -0

- model_card/layer_images/layer_0_intermediate_dense.png +0 -0

- model_card/layer_images/layer_0_output_dense.png +0 -0

- model_card/layer_images/layer_10_attention_output_dense.png +0 -0

- model_card/layer_images/layer_10_attention_self_key.png +0 -0

- model_card/layer_images/layer_10_attention_self_query.png +0 -0

- model_card/layer_images/layer_10_attention_self_value.png +0 -0

- model_card/layer_images/layer_10_intermediate_dense.png +0 -0

- model_card/layer_images/layer_10_output_dense.png +0 -0

- model_card/layer_images/layer_11_attention_output_dense.png +0 -0

- model_card/layer_images/layer_11_attention_self_key.png +0 -0

- model_card/layer_images/layer_11_attention_self_query.png +0 -0

- model_card/layer_images/layer_11_attention_self_value.png +0 -0

- model_card/layer_images/layer_11_intermediate_dense.png +0 -0

- model_card/layer_images/layer_11_output_dense.png +0 -0

- model_card/layer_images/layer_1_attention_output_dense.png +0 -0

- model_card/layer_images/layer_1_attention_self_key.png +0 -0

- model_card/layer_images/layer_1_attention_self_query.png +0 -0

- model_card/layer_images/layer_1_attention_self_value.png +0 -0

- model_card/layer_images/layer_1_intermediate_dense.png +0 -0

- model_card/layer_images/layer_1_output_dense.png +0 -0

- model_card/layer_images/layer_2_attention_output_dense.png +0 -0

- model_card/layer_images/layer_2_attention_self_key.png +0 -0

- model_card/layer_images/layer_2_attention_self_query.png +0 -0

- model_card/layer_images/layer_2_attention_self_value.png +0 -0

- model_card/layer_images/layer_2_intermediate_dense.png +0 -0

- model_card/layer_images/layer_2_output_dense.png +0 -0

- model_card/layer_images/layer_3_attention_output_dense.png +0 -0

- model_card/layer_images/layer_3_attention_self_key.png +0 -0

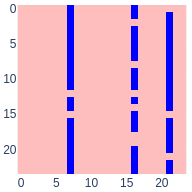

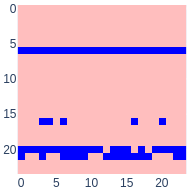

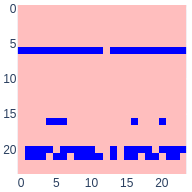

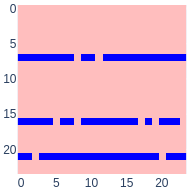

- model_card/layer_images/layer_3_attention_self_query.png +0 -0

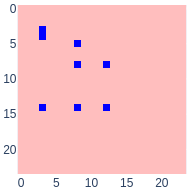

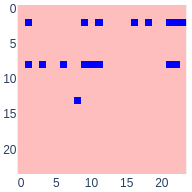

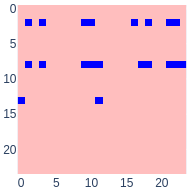

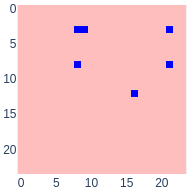

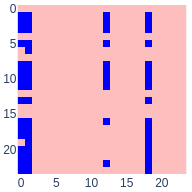

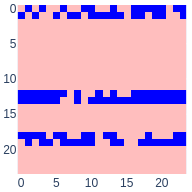

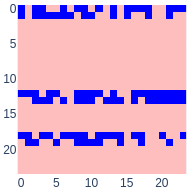

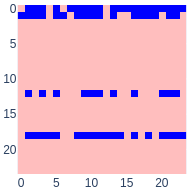

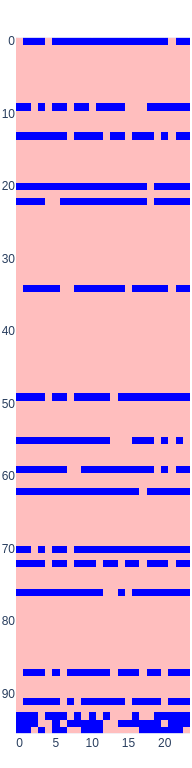

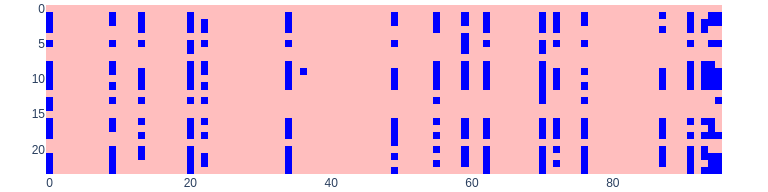

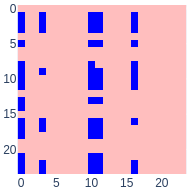

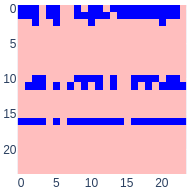

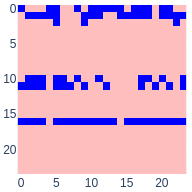

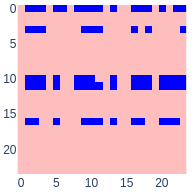

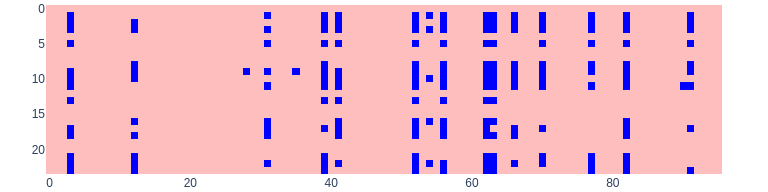

- model_card/layer_images/layer_3_attention_self_value.png +0 -0

- model_card/layer_images/layer_3_intermediate_dense.png +0 -0

- model_card/layer_images/layer_3_output_dense.png +0 -0

- model_card/layer_images/layer_4_attention_output_dense.png +0 -0

- model_card/layer_images/layer_4_attention_self_key.png +0 -0

- model_card/layer_images/layer_4_attention_self_query.png +0 -0

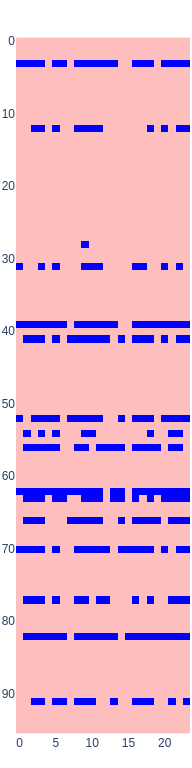

- model_card/layer_images/layer_4_attention_self_value.png +0 -0

- model_card/layer_images/layer_4_intermediate_dense.png +0 -0

- model_card/layer_images/layer_4_output_dense.png +0 -0

- model_card/layer_images/layer_5_attention_output_dense.png +0 -0

- model_card/layer_images/layer_5_attention_self_key.png +0 -0

- model_card/layer_images/layer_5_attention_self_query.png +0 -0

- model_card/layer_images/layer_5_attention_self_value.png +0 -0

- model_card/layer_images/layer_5_intermediate_dense.png +0 -0

- model_card/layer_images/layer_5_output_dense.png +0 -0

- model_card/layer_images/layer_6_attention_output_dense.png +0 -0

README.md

ADDED

@@ -0,0 +1,92 @@

---
language: en
thumbnail:
license: mit
tags:
- question-answering
- bert
- bert-base
datasets:
- squad
metrics:
- squad
widget:
- text: "Where is the Eiffel Tower located?"
  context: "The Eiffel Tower is a wrought-iron lattice tower on the Champ de Mars in Paris, France. It is named after the engineer Gustave Eiffel, whose company designed and built the tower."
- text: "Who is Frederic Chopin?"
  context: "Frédéric François Chopin, born Fryderyk Franciszek Chopin (1 March 1810 – 17 October 1849), was a Polish composer and virtuoso pianist of the Romantic era who wrote primarily for solo piano."
---

|

| 19 |

+

|

| 20 |

+

## BERT-base uncased model fine-tuned on SQuAD v1

|

| 21 |

+

|

| 22 |

+

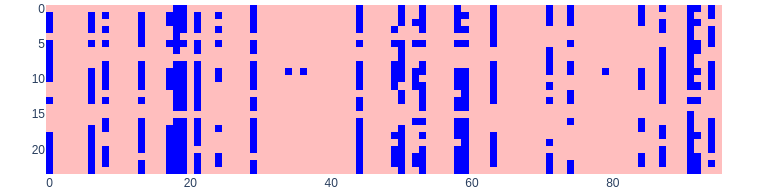

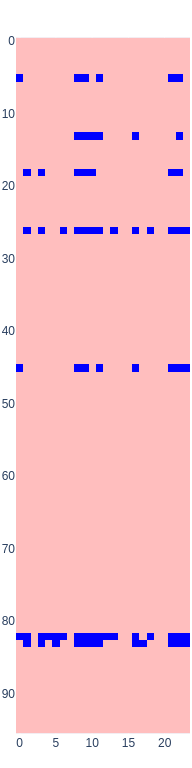

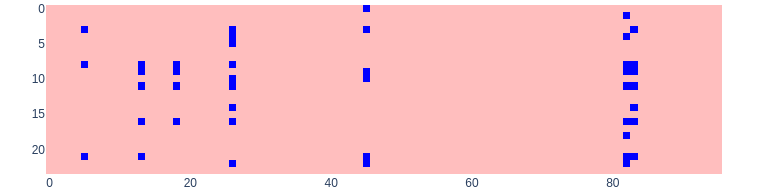

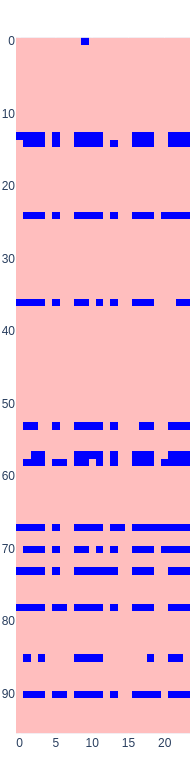

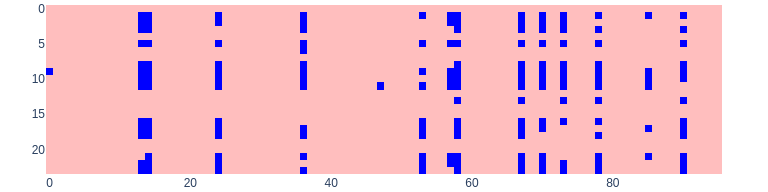

This model is block sparse: the **linear** layers contains **7.5%** of the original weights.

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

The model contains **28.2%** of the original weights **overall**.
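
As a rough consistency check on the two percentages above, the sketch below recomputes the overall density from the standard BERT-base dimensions. The layer sizes (12 layers, hidden size 768, intermediate size 3072) and the total of about 109.5M parameters are assumptions about the dense checkpoint, not figures stated in this card. The sketch also assumes that only the weight matrices of the encoder linear layers are pruned.

```python
# Rough consistency check. All model dimensions below are assumptions
# about the dense BERT-base checkpoint, not values taken from this card.
NUM_LAYERS = 12
HIDDEN = 768
INTERMEDIATE = 3072
TOTAL_PARAMS = 109_482_240  # assumed parameter count of dense BERT-base

LINEAR_DENSITY = 0.075  # from the card: linear layers keep 7.5% of their weights


def overall_density(linear_density: float) -> float:
    """Fraction of all parameters kept when only encoder linear weights are pruned."""
    attention = 4 * HIDDEN * HIDDEN            # query, key, value, attention output
    feed_forward = 2 * HIDDEN * INTERMEDIATE   # intermediate dense and output dense
    linear_params = NUM_LAYERS * (attention + feed_forward)
    untouched = TOTAL_PARAMS - linear_params   # embeddings, biases, LayerNorm, ...
    return (untouched + linear_density * linear_params) / TOTAL_PARAMS


if __name__ == "__main__":
    print(f"estimated overall density: {overall_density(LINEAR_DENSITY):.1%}")
```

Under these assumptions the estimate lands close to the 28.2% reported above, which suggests the remaining weights are mostly unpruned embeddings.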

Training uses a modified version of Victor Sanh's [Movement Pruning](https://arxiv.org/abs/2005.07683) method.

Thanks to this sparsity, with the [block-sparse](https://github.com/huggingface/pytorch_block_sparse) runtime the model ran **1.92x** faster than a dense network on the evaluation, at the price of some impact on accuracy (see below).

This model was fine-tuned from the HuggingFace [BERT](https://www.aclweb.org/anthology/N19-1423/) base uncased checkpoint on [SQuAD1.1](https://rajpurkar.github.io/SQuAD-explorer), and distilled from the equivalent model [csarron/bert-base-uncased-squad-v1](https://huggingface.co/csarron/bert-base-uncased-squad-v1).
This model is case-insensitive: it does not distinguish between english and English.

|

| 35 |

+

|

| 36 |

+

## Pruning details

|

| 37 |

+

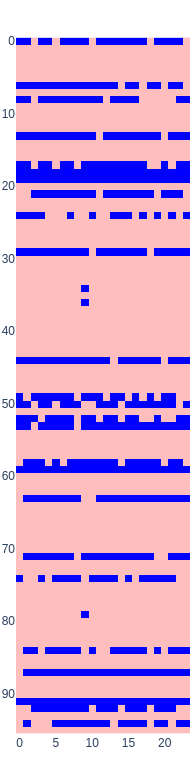

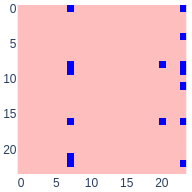

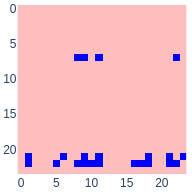

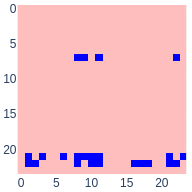

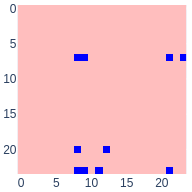

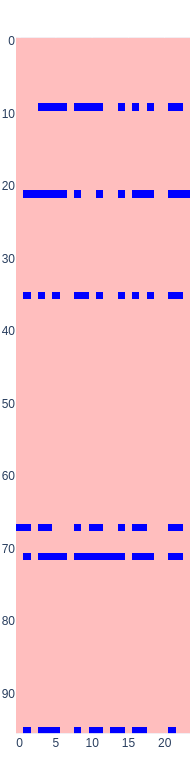

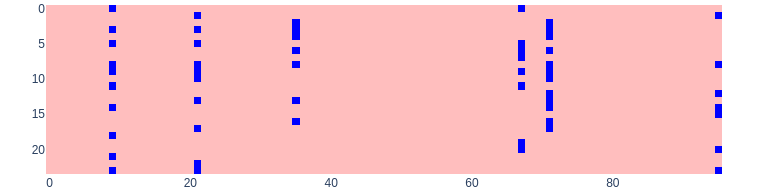

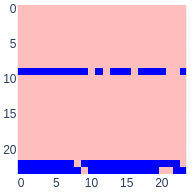

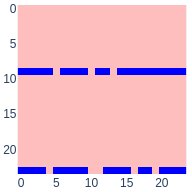

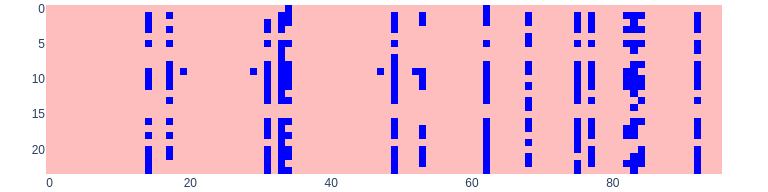

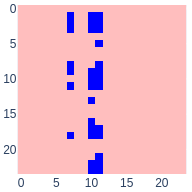

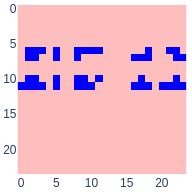

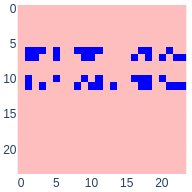

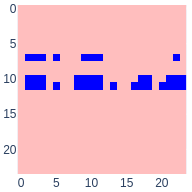

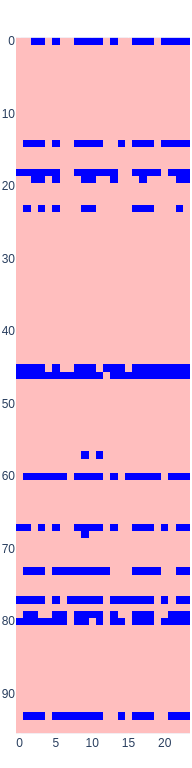

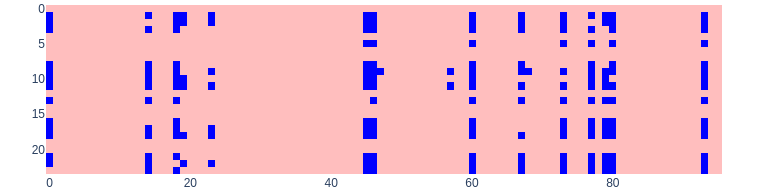

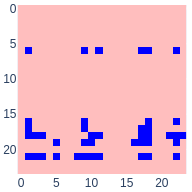

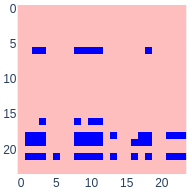

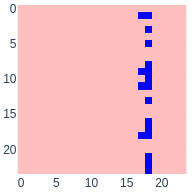

A side-effect of the block pruning is that some of the attention heads are completely removed: 106 heads were removed on a total of 144 (73.6%).
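
The head count can be checked with a few lines of arithmetic. The sketch assumes the standard BERT-base layout of 12 layers with 12 attention heads each, which is where the total of 144 would come from. That layout is an assumption, not something stated in this card.

```python
# Assumed BERT-base layout: 12 layers x 12 heads (not stated in the card).
NUM_LAYERS = 12
HEADS_PER_LAYER = 12
REMOVED_HEADS = 106  # from the card


def head_stats(removed: int, layers: int = NUM_LAYERS, per_layer: int = HEADS_PER_LAYER):
    """Return (total, remaining, removed_fraction) for a given number of pruned heads."""
    total = layers * per_layer
    if not 0 <= removed <= total:
        raise ValueError("removed must be between 0 and the total number of heads")
    return total, total - removed, removed / total


if __name__ == "__main__":
    total, remaining, fraction = head_stats(REMOVED_HEADS)
    print(f"{total} heads in total, {remaining} remaining, {fraction:.1%} removed")
```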

|

| 38 |

+

|

| 39 |

+

Here is a detailed view on how the remaining heads are distributed in the network after pruning.

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+

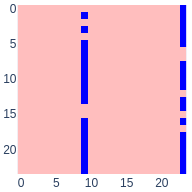

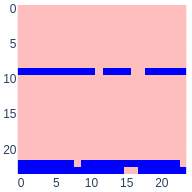

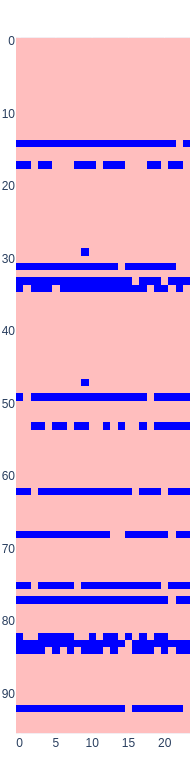

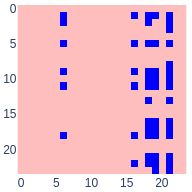

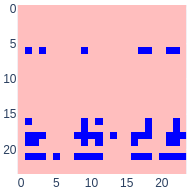

## Density plot

|

| 44 |

+

|

| 45 |

+

<script src="/madlag/bert-base-uncased-squad1.1-block-sparse-0.07-v1/raw/main/model_card/density.js" id="62a463c5-d1fc-424a-92df-94a31404cedd"></script>

|

| 46 |

+

|

| 47 |

+

## Details

|

| 48 |

+

|

| 49 |

+

| Dataset  | Split | # samples |
| -------- | ----- | --------- |
| SQuAD1.1 | train | 90.6K     |
| SQuAD1.1 | eval  | 11.1K     |

### Fine-tuning
- Python: `3.8.5`

- Machine specs:

```
CPU: Intel(R) Core(TM) i7-6700K CPU
Memory: 64 GiB
GPUs: 1 GeForce RTX 3090, with 24GiB memory
GPU driver: 455.23.05, CUDA: 11.1
```

### Results

**PyTorch model file size**: `335M` (original BERT: `438M`)

| Metric | Value     | Original ([Table 2](https://www.aclweb.org/anthology/N19-1423.pdf)) |
| ------ | --------- | --------- |
| **EM** | **71.88** | **80.8** |
| **F1** | **81.36** | **88.5** |
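
To put these numbers in perspective, the following sketch computes the absolute drop for each metric and the file-size ratio. It uses only the values reported in this section. The helper name `metric_drops` is illustrative.

```python
# Numbers copied from the Results section of this card.
PRUNED = {"EM": 71.88, "F1": 81.36}
ORIGINAL = {"EM": 80.8, "F1": 88.5}
PRUNED_SIZE_M = 335    # file size, in the card's own unit ("M")
ORIGINAL_SIZE_M = 438


def metric_drops(pruned: dict, original: dict) -> dict:
    """Absolute difference (original - pruned) for every metric, in points."""
    return {name: round(original[name] - pruned[name], 2) for name in pruned}


if __name__ == "__main__":
    for name, drop in metric_drops(PRUNED, ORIGINAL).items():
        print(f"{name}: {drop} points below the original")
    print(f"file size: {PRUNED_SIZE_M / ORIGINAL_SIZE_M:.1%} of the original")
```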

## Example Usage

```python
from transformers import pipeline

# Load the pruned model and its tokenizer from the Hugging Face Hub.
qa_pipeline = pipeline(
    "question-answering",
    model="madlag/bert-base-uncased-squad1.1-block-sparse-0.07-v1",
    tokenizer="madlag/bert-base-uncased-squad1.1-block-sparse-0.07-v1"
)

# Ask a question about a short context passage.
predictions = qa_pipeline({
    'context': "Frédéric François Chopin, born Fryderyk Franciszek Chopin (1 March 1810 – 17 October 1849), was a Polish composer and virtuoso pianist of the Romantic era who wrote primarily for solo piano.",
    'question': "Who is Frederic Chopin?",
})

print(predictions)
```
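
The question-answering pipeline returns a dictionary with `score`, `answer`, `start`, and `end` keys, where `start` and `end` are character offsets into the context. The sketch below shows one way to use those offsets. `show_answer` is a hypothetical helper, and the sample dictionary is a hand-written placeholder in the same format, not real output from this model.

```python
def show_answer(prediction: dict, context: str, margin: int = 20) -> str:
    """Format a QA prediction with a little surrounding context (hypothetical helper)."""
    start, end = prediction["start"], prediction["end"]
    left = context[max(0, start - margin):start]
    right = context[end:end + margin]
    return f"...{left}[{context[start:end]}]{right}... (score={prediction['score']:.2f})"


if __name__ == "__main__":
    context = "Frédéric François Chopin was a Polish composer and virtuoso pianist."
    answer = "a Polish composer and virtuoso pianist"
    start = context.find(answer)
    # Hand-written placeholder in the pipeline's output format, NOT real model output.
    sample = {"score": 0.5, "start": start, "end": start + len(answer), "answer": answer}
    print(show_answer(sample, context))
```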

model_card/layer_images/layer_0_attention_output_dense.png

ADDED

model_card/layer_images/layer_0_attention_self_key.png

ADDED

model_card/layer_images/layer_0_attention_self_query.png

ADDED

model_card/layer_images/layer_0_attention_self_value.png

ADDED

model_card/layer_images/layer_0_intermediate_dense.png

ADDED

model_card/layer_images/layer_0_output_dense.png

ADDED

model_card/layer_images/layer_10_attention_output_dense.png

ADDED

model_card/layer_images/layer_10_attention_self_key.png

ADDED

model_card/layer_images/layer_10_attention_self_query.png

ADDED

model_card/layer_images/layer_10_attention_self_value.png

ADDED

model_card/layer_images/layer_10_intermediate_dense.png

ADDED

model_card/layer_images/layer_10_output_dense.png

ADDED

model_card/layer_images/layer_11_attention_output_dense.png

ADDED

model_card/layer_images/layer_11_attention_self_key.png

ADDED

model_card/layer_images/layer_11_attention_self_query.png

ADDED

model_card/layer_images/layer_11_attention_self_value.png

ADDED

model_card/layer_images/layer_11_intermediate_dense.png

ADDED

model_card/layer_images/layer_11_output_dense.png

ADDED

model_card/layer_images/layer_1_attention_output_dense.png

ADDED

model_card/layer_images/layer_1_attention_self_key.png

ADDED

model_card/layer_images/layer_1_attention_self_query.png

ADDED

model_card/layer_images/layer_1_attention_self_value.png

ADDED

model_card/layer_images/layer_1_intermediate_dense.png

ADDED

model_card/layer_images/layer_1_output_dense.png

ADDED

model_card/layer_images/layer_2_attention_output_dense.png

ADDED

model_card/layer_images/layer_2_attention_self_key.png

ADDED

model_card/layer_images/layer_2_attention_self_query.png

ADDED

model_card/layer_images/layer_2_attention_self_value.png

ADDED

model_card/layer_images/layer_2_intermediate_dense.png

ADDED

model_card/layer_images/layer_2_output_dense.png

ADDED

model_card/layer_images/layer_3_attention_output_dense.png

ADDED

model_card/layer_images/layer_3_attention_self_key.png

ADDED

model_card/layer_images/layer_3_attention_self_query.png

ADDED

model_card/layer_images/layer_3_attention_self_value.png

ADDED

model_card/layer_images/layer_3_intermediate_dense.png

ADDED

model_card/layer_images/layer_3_output_dense.png

ADDED

model_card/layer_images/layer_4_attention_output_dense.png

ADDED

model_card/layer_images/layer_4_attention_self_key.png

ADDED

model_card/layer_images/layer_4_attention_self_query.png

ADDED

model_card/layer_images/layer_4_attention_self_value.png

ADDED

model_card/layer_images/layer_4_intermediate_dense.png

ADDED

model_card/layer_images/layer_4_output_dense.png

ADDED

model_card/layer_images/layer_5_attention_output_dense.png

ADDED

model_card/layer_images/layer_5_attention_self_key.png

ADDED

model_card/layer_images/layer_5_attention_self_query.png

ADDED

model_card/layer_images/layer_5_attention_self_value.png

ADDED

model_card/layer_images/layer_5_intermediate_dense.png

ADDED

model_card/layer_images/layer_5_output_dense.png

ADDED

model_card/layer_images/layer_6_attention_output_dense.png

ADDED