update readme

- README.md +16 -10

- images/Phase1_data.png +0 -0

- images/Phase2_data.png +0 -0

README.md

CHANGED

|

@@ -2,17 +2,17 @@

|

|

| 2 |

license: apache-2.0

|

| 3 |

---

|

| 4 |

# **JetMoE**

|

| 5 |

-

**JetMoE-

|

| 6 |

The JetMoE project aims to provide an efficient language model with LLaMA2-level performance on a limited budget.

|

| 7 |

To achieve this goal, JetMoE uses a sparsely activated architecture inspired by the [ModuleFormer](https://arxiv.org/abs/2306.04640).

|

| 8 |

Each JetMoE block consists of two MoE layers: Mixture of Attention Heads and Mixture of MLP Experts.

|

| 9 |

Given the input tokens, it activates a subset of its experts to process them.

|

| 10 |

-

Thus, JetMoE-

|

| 11 |

This sparse activation scheme enables JetMoE to achieve much better training throughput than dense models of similar size.

|

| 12 |

The model is trained with 1.25T tokens from publicly available datasets on 96 H100s within 13 days.

|

| 13 |

Given the current market price of H100 GPU hours, training the model costs around 0.1 million dollars.

|

| 14 |

-

To our surprise, JetMoE-

|

| 15 |

-

Compared to a model with similar training and inference computation, like Gemma-2B, JetMoE-

|

| 16 |

|

| 17 |

## Evaluation Results

|

| 18 |

For most benchmarks, we use the same evaluation methodology as in the [Open LLM leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard). For code benchmarks, we use the same evaluation methodology as in the LLaMA2 and Deepseek MoE paper. The evaluation results are as follows:

|

|

@@ -24,7 +24,7 @@ For most benchmarks, we use the same evaluation methodology as in the [Open LLM

|

|

| 24 |

|LLaMA-13B|13B|1T|**56.2**|**80.9**|47.7|39.5|**76.2**|7.6|51.4|22.0|15.8|

|

| 25 |

|DeepseekMoE-16B|2.8B|2T|53.2|79.8|46.3|36.1|73.7|17.3|51.1|34.0|**25.0**|

|

| 26 |

|Gemma-2B|2B|2T|48.4|71.8|41.8|33.1|66.3|16.9|46.4|28.0|24.4|

|

| 27 |

-

|JetMoE-

|

| 28 |

|

| 29 |

## Model Usage

|

| 30 |

To load the models, you need to install [this package](https://github.com/yikangshen/JetMoE):

|

|

@@ -46,11 +46,11 @@ model = AutoModelForCausalLM.from_pretrained('jetmoe/jetmoe-8b')

|

|

| 46 |

The MoE code is based on [ScatterMoE](https://github.com/shawntan/scattermoe). The code is still under active development, and we are happy to receive any feedback or suggestions.

|

| 47 |

|

| 48 |

## Model Details

|

| 49 |

-

JetMoE-

|

| 50 |

Each block has two MoE layers: Mixture of Attention heads (MoA) and Mixture of MLP Experts (MoE).

|

| 51 |

Each MoA and MoE layer has 8 experts, and 2 experts are activated for each input token.

|

| 52 |

It has 8 billion parameters in total and 2.2B active parameters.

|

| 53 |

-

JetMoE-

|

| 54 |

|

| 55 |

<figure>

|

| 56 |

<center>

|

|

@@ -63,8 +63,14 @@ JetMoE-8B is trained on 1.25T tokens from publicly available datasets, with a le

|

|

| 63 |

|

| 64 |

**Output** Models generate text only.

|

| 65 |

|

| 66 |

-

## Training

|

| 67 |

-

|

| 68 |

|

| 69 |

## Authors

|

| 70 |

This project is currently contributed by the following authors:

|

|

@@ -88,7 +94,7 @@ Please cite the following paper if you use the data or code in this repo.

|

|

| 88 |

## JetMoE Model Index

|

| 89 |

|Model|Index|

|

| 90 |

|---|---|

|

| 91 |

-

|JetMoE-

|

| 92 |

|

| 93 |

## Ethical Considerations and Limitations

|

| 94 |

JetMoE is a new technology that carries risks with use. Testing conducted to date has been in English, and has not covered, nor could it cover all scenarios. For these reasons, as with all LLMs, JetMoE’s potential outputs cannot be predicted in advance, and the model may in some instances produce inaccurate, biased or other objectionable responses to user prompts. Therefore, before deploying any applications of JetMoE, developers should perform safety testing and tuning tailored to their specific applications of the model.

|

|

|

|

| 2 |

license: apache-2.0

|

| 3 |

---

|

| 4 |

# **JetMoE**

|

| 5 |

+

**JetMoE-8x1B** is an 8B Mixture-of-Experts (MoE) language model developed by [Yikang Shen](https://scholar.google.com.hk/citations?user=qff5rRYAAAAJ) and [MyShell](https://myshell.ai/).

|

| 6 |

The JetMoE project aims to provide an efficient language model with LLaMA2-level performance on a limited budget.

|

| 7 |

To achieve this goal, JetMoE uses a sparsely activated architecture inspired by the [ModuleFormer](https://arxiv.org/abs/2306.04640).

|

| 8 |

Each JetMoE block consists of two MoE layers: Mixture of Attention Heads and Mixture of MLP Experts.

|

| 9 |

Given the input tokens, it activates a subset of its experts to process them.

|

| 10 |

+

Thus, JetMoE-8x1B has 8B parameters in total, but only 2B are activated for each input token.

|

| 11 |

This sparse activation scheme enables JetMoE to achieve much better training throughput than dense models of similar size.

|

| 12 |

The model is trained with 1.25T tokens from publicly available datasets on 96 H100s within 13 days.

|

| 13 |

Given the current market price of H100 GPU hours, training the model costs around 0.1 million dollars.

|

| 14 |

+

To our surprise, JetMoE-8x1B performs even better than LLaMA2-7B, LLaMA-13B, and DeepseekMoE-16B despite the lower training cost and computation.

|

| 15 |

+

Compared to a model with similar training and inference computation, like Gemma-2B, JetMoE-8x1B achieves better performance.
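The cost figure above can be sanity-checked with simple arithmetic. The sketch below uses only the numbers quoted in this README (96 H100s, 13 days, about 0.1 million dollars) and derives the hourly GPU price they imply. The function names are illustrative, and since training finished "within" 13 days, the GPU-hour count is an upper bound.

```python
# Figures quoted in the README; everything else is derived from them.
NUM_GPUS = 96               # H100 GPUs
TRAINING_DAYS = 13          # "within 13 days", so treat as an upper bound
QUOTED_COST = 100_000       # "around 0.1 million dollars"


def gpu_hours(num_gpus: int, days: float) -> float:
    """Total GPU-hours if every GPU runs for the full duration."""
    return num_gpus * days * 24


def implied_hourly_rate(total_cost: float, num_gpus: int, days: float) -> float:
    """Price per GPU-hour implied by a total cost and a cluster size."""
    return total_cost / gpu_hours(num_gpus, days)


hours = gpu_hours(NUM_GPUS, TRAINING_DAYS)
rate = implied_hourly_rate(QUOTED_COST, NUM_GPUS, TRAINING_DAYS)
print(f"{hours:,.0f} GPU-hours, about ${rate:.2f} per GPU-hour")
# prints: 29,952 GPU-hours, about $3.34 per GPU-hour
```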

|

| 16 |

|

| 17 |

## Evaluation Results

|

| 18 |

For most benchmarks, we use the same evaluation methodology as in the [Open LLM leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard). For code benchmarks, we use the same evaluation methodology as in the LLaMA2 and Deepseek MoE paper. The evaluation results are as follows:

|

|

|

|

| 24 |

|LLaMA-13B|13B|1T|**56.2**|**80.9**|47.7|39.5|**76.2**|7.6|51.4|22.0|15.8|

|

| 25 |

|DeepseekMoE-16B|2.8B|2T|53.2|79.8|46.3|36.1|73.7|17.3|51.1|34.0|**25.0**|

|

| 26 |

|Gemma-2B|2B|2T|48.4|71.8|41.8|33.1|66.3|16.9|46.4|28.0|24.4|

|

| 27 |

+

|JetMoE-8x1B|2.2B|1.25T|48.7|80.5|**49.2**|**41.7**|70.2|**27.8**|**53.0**|**34.2**|14.6|

|

| 28 |

|

| 29 |

## Model Usage

|

| 30 |

To load the models, you need to install [this package](https://github.com/yikangshen/JetMoE):

|

|

|

|

| 46 |

The MoE code is based on [ScatterMoE](https://github.com/shawntan/scattermoe). The code is still under active development, and we are happy to receive any feedback or suggestions.

|

| 47 |

|

| 48 |

## Model Details

|

| 49 |

+

JetMoE-8x1B has 24 blocks.

|

| 50 |

Each block has two MoE layers: Mixture of Attention heads (MoA) and Mixture of MLP Experts (MoE).

|

| 51 |

Each MoA and MoE layer has 8 experts, and 2 experts are activated for each input token.

|

| 52 |

It has 8 billion parameters in total and 2.2B active parameters.

|

| 53 |

+

JetMoE-8x1B is trained on 1.25T tokens from publicly available datasets, with a learning rate of 5.0 x 10<sup>-4</sup> and a global batch-size of 4M tokens.
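To make "8 experts, 2 activated per token" concrete, here is a minimal, framework-free sketch of top-2 routing. It is not the JetMoE implementation: the experts are toy scalar functions, and renormalising the softmax over only the selected experts is an assumption about the gating, not something this README states.

```python
import math
from typing import Callable, List, Sequence, Tuple


def top_k_route(router_logits: Sequence[float], k: int = 2) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and softmax-normalise their scores."""
    if not 0 < k <= len(router_logits):
        raise ValueError("k must be between 1 and the number of experts")
    # Indices of the k largest logits (ties keep the lower index first).
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    # Numerically stable softmax over the selected logits only (assumption).
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x: float,
                experts: Sequence[Callable[[float], float]],
                router_logits: Sequence[float],
                k: int = 2) -> float:
    """Weighted sum of the outputs of the k selected experts; the rest are skipped."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


# Toy setup: 8 experts, expert s multiplies its input by s.
toy_experts = [lambda x, s=s: s * x for s in range(8)]
toy_logits = [0.1, 2.0, -1.0, 2.0, 0.0, 0.3, -0.5, 1.0]
routing = top_k_route(toy_logits)        # experts 1 and 3, weight 0.5 each
output = moe_forward(1.0, toy_experts, toy_logits)
```

Only the two selected experts are evaluated per token, which is why the active parameter count (2.2B) is much smaller than the total (8B).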

|

| 54 |

|

| 55 |

<figure>

|

| 56 |

<center>

|

|

|

|

| 63 |

|

| 64 |

**Output** Models generate text only.

|

| 65 |

|

| 66 |

+

## Training Details

|

| 67 |

+

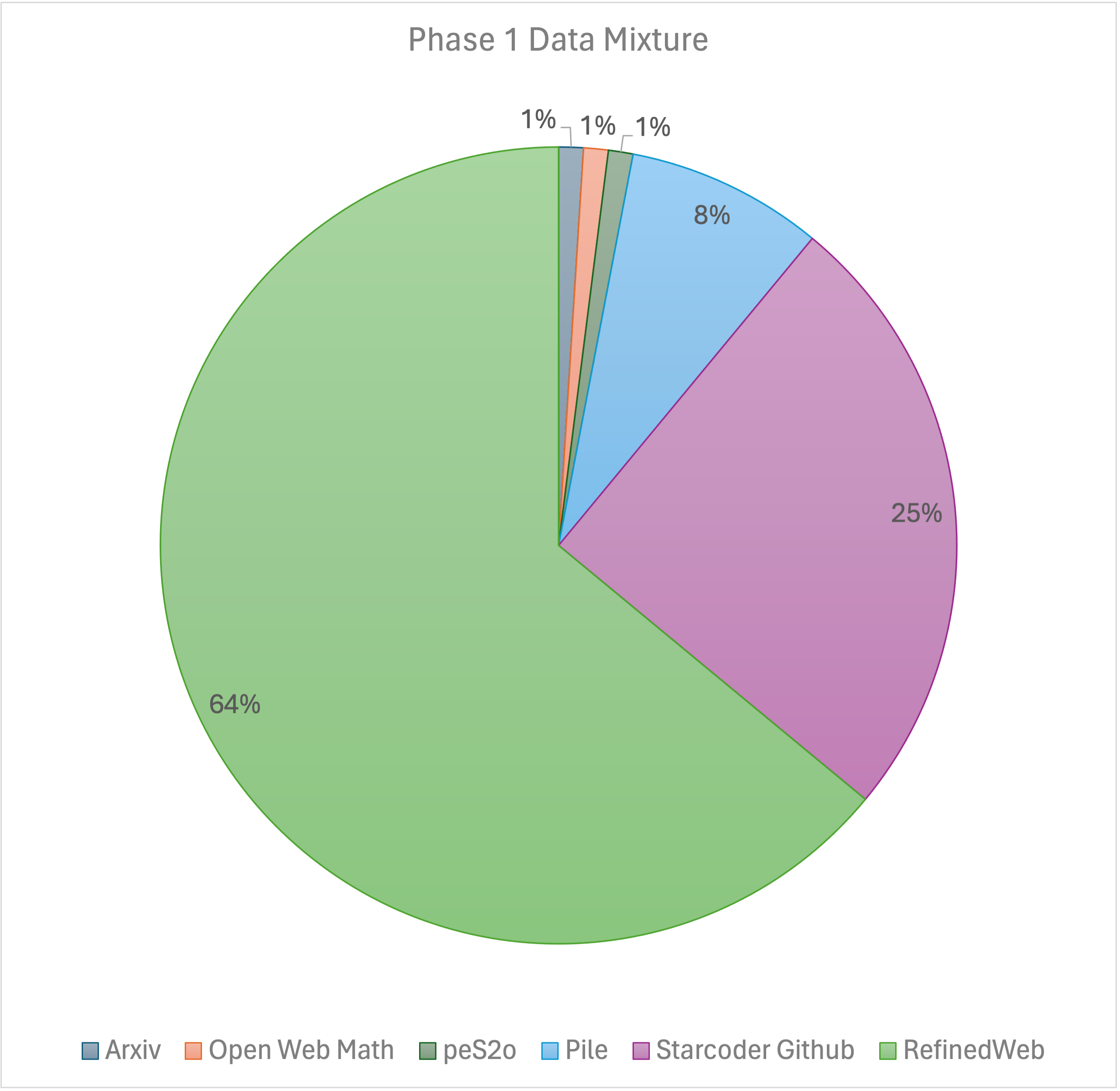

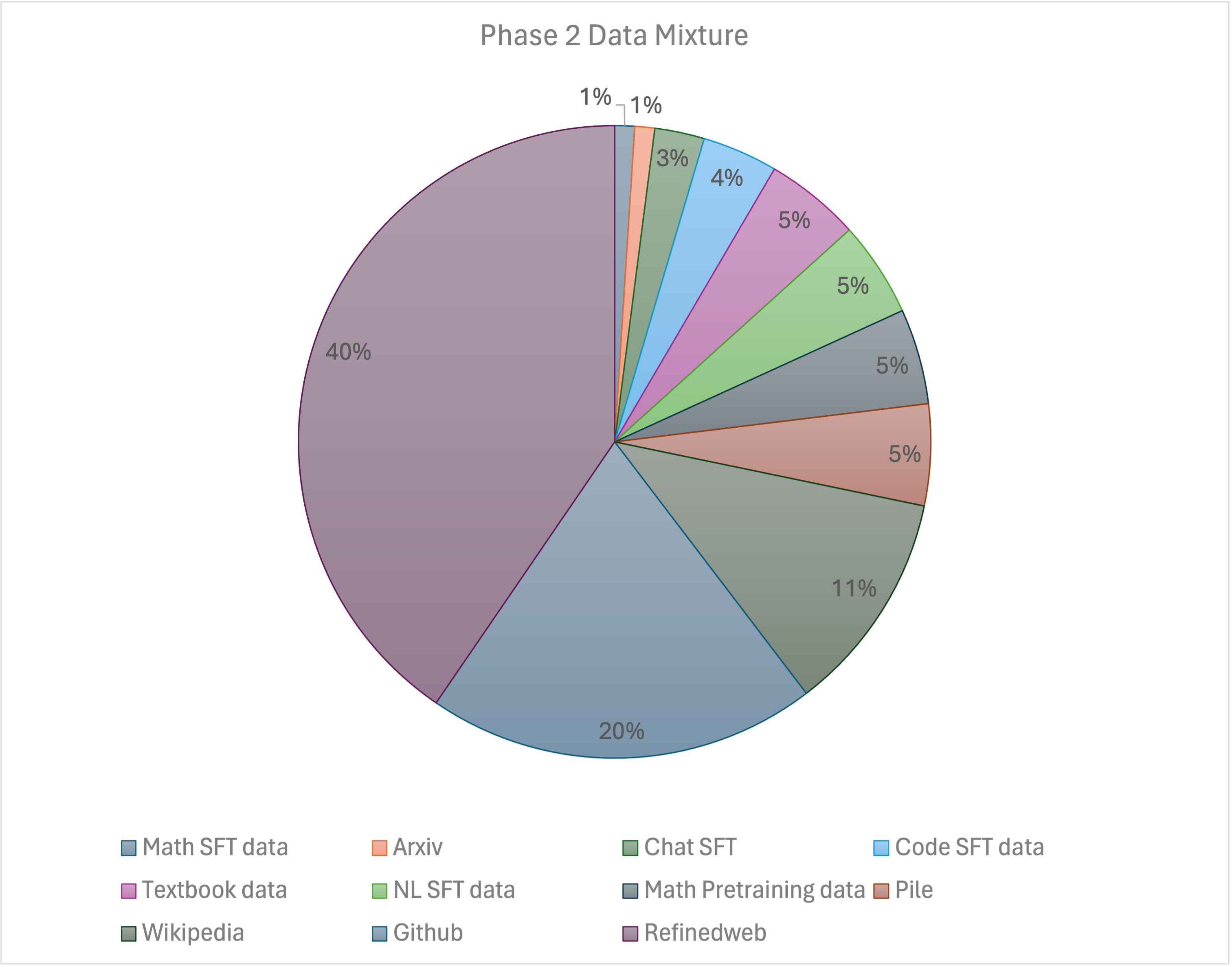

Our training recipe follows the two-stage training method of [MiniCPM](https://shengdinghu.notion.site/MiniCPM-Unveiling-the-Potential-of-End-side-Large-Language-Models-d4d3a8c426424654a4e80e42a711cb20?pvs=4). The first stage uses a constant learning rate with linear warmup and is trained on 1 trillion tokens from large-scale open-source pretraining datasets, including RefinedWeb, Pile, and Starcoder GitHub data. The second stage is an annealing phase with exponential learning rate decay and is trained on 250 billion tokens from the phase-one datasets plus additional high-quality open-source datasets.
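The two-stage schedule can be sketched as a function of the training step. The peak learning rate (5.0e-4), batch size (4M tokens), and token counts (1T and 250B) come from this README. The warmup length and the final decay ratio are hypothetical placeholders, "4M" is assumed to mean 4,000,000, and the exact decay form used in training may differ.

```python
PEAK_LR = 5.0e-4                      # from the README
BATCH_TOKENS = 4_000_000              # "4M tokens", assumed to mean 4e6
PHASE1_STEPS = 1_000_000_000_000 // BATCH_TOKENS   # 1T tokens   -> 250,000 steps
PHASE2_STEPS = 250_000_000_000 // BATCH_TOKENS     # 250B tokens -> 62,500 steps

WARMUP_STEPS = 2_000                  # hypothetical: not given in the README
FINAL_LR_RATIO = 0.1                  # hypothetical: not given in the README


def learning_rate(step: int) -> float:
    """Linear warmup, constant plateau, then exponential decay during annealing."""
    if step < WARMUP_STEPS:
        # Stage 1a: ramp linearly from near zero up to the peak.
        return PEAK_LR * (step + 1) / WARMUP_STEPS
    if step < PHASE1_STEPS:
        # Stage 1b: hold the peak learning rate.
        return PEAK_LR
    # Stage 2: decay exponentially so the rate reaches
    # PEAK_LR * FINAL_LR_RATIO at the last annealing step.
    progress = min((step - PHASE1_STEPS) / PHASE2_STEPS, 1.0)
    return PEAK_LR * FINAL_LR_RATIO ** progress
```

For example, `learning_rate(100_000)` returns the peak rate, while `learning_rate(PHASE1_STEPS + PHASE2_STEPS)` returns the peak scaled by the assumed final ratio.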

|

| 68 |

+

<figure>

|

| 69 |

+

<center>

|

| 70 |

+

<img src="images/Phase1_data.png" width="40.3%">

|

| 71 |

+

<img src="images/Phase2_data.png" width="50%">

|

| 72 |

+

</center>

|

| 73 |

+

</figure>

|

| 74 |

|

| 75 |

## Authors

|

| 76 |

This project is currently contributed by the following authors:

|

|

|

|

| 94 |

## JetMoE Model Index

|

| 95 |

|Model|Index|

|

| 96 |

|---|---|

|

| 97 |

+

|JetMoE-8x1B| [Link](https://huggingface.co/jetmoe/jetmoe-8B) |

|

| 98 |

|

| 99 |

## Ethical Considerations and Limitations

|

| 100 |

JetMoE is a new technology that carries risks with use. Testing conducted to date has been in English, and has not covered, nor could it cover all scenarios. For these reasons, as with all LLMs, JetMoE’s potential outputs cannot be predicted in advance, and the model may in some instances produce inaccurate, biased or other objectionable responses to user prompts. Therefore, before deploying any applications of JetMoE, developers should perform safety testing and tuning tailored to their specific applications of the model.

|

images/Phase1_data.png

ADDED

|

images/Phase2_data.png

ADDED

|