metadata

language:
- multilingual
- en
- de
license: mit
widget:
- text: I don't get [MASK] er damit erreichen will.
  example_title: Example 2

German-English Code-Switching BERT

A BERT-based model trained with masked language modelling on a large corpus of German-English code-switching text. It was introduced in the TongueSwitcher paper (Sterner and Teufel, 2023). This model is case sensitive.
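Masked language modelling hides some input tokens and trains the model to recover them. This card does not state the exact masking recipe, so the following is only a sketch that assumes the standard BERT scheme: about 15% of tokens are selected as prediction targets, and of those 80% become `[MASK]`, 10% become a random vocabulary token and 10% are left unchanged. For readability it splits on whitespace rather than using the model's WordPiece tokenizer. The function name `mask_tokens`, the toy vocabulary and the example sentence are all hypothetical.

```python
import random
from typing import List, Optional, Tuple

MASK = "[MASK]"


def mask_tokens(
    tokens: List[str],
    vocab: List[str],
    mask_prob: float = 0.15,
    rng: Optional[random.Random] = None,
) -> Tuple[List[str], List[Optional[str]]]:
    """Apply BERT-style 80/10/10 masking (assumed, not confirmed by the card).

    Returns the corrupted input and a label list holding the original token at
    selected positions and None elsewhere (positions the loss would ignore).
    """
    rng = rng or random.Random()
    inputs = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # not selected: no prediction target at this position
        labels[i] = tok  # the model must recover the original token here
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = MASK  # 80%: replace with the mask token
        elif roll < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return inputs, labels


if __name__ == "__main__":
    # Hypothetical code-switched sentence, whitespace-tokenized for illustration.
    sentence = "Das Meeting ist morgen , aber I am not sure about the Uhrzeit .".split()
    toy_vocab = sorted(set(sentence))
    corrupted, targets = mask_tokens(sentence, toy_vocab, rng=random.Random(0))
    print(corrupted)
    print(targets)
```

Keeping the original token at 10% of the selected positions means the model cannot assume that every unmasked token is correct, which is the usual motivation for the 80/10/10 split.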

Overview

- Initialized language model: bert-base-multilingual-cased

- Training data: The TongueSwitcher Corpus

- Infrastructure: 4x Nvidia A100 GPUs

- Published: 16 October 2023

Hyperparameters

batch_size = 32

epochs = 1

n_steps = 191,950

max_seq_len = 512

learning_rate = 1e-4

weight_decay = 0.01

Adam beta = (0.9, 0.999)

lr_schedule = LinearWarmup

num_warmup_steps = 10,000

seed = 2021
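The hyperparameters above can be gathered into a single configuration object. The sketch below also spells out one reading of `LinearWarmup`: the learning rate rises linearly from 0 to 1e-4 over the first 10,000 steps and then decays linearly to 0 at step 191,950, which is how the Hugging Face Transformers `get_linear_schedule_with_warmup` schedule behaves. The card does not say whether the original run decayed to zero, or whether `batch_size = 32` is per GPU or global across the 4 A100s; both are assumptions here. The names `PretrainConfig`, `linear_warmup_lr` and `sequences_seen` are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class PretrainConfig:
    # Values copied from the Hyperparameters list above.
    batch_size: int = 32
    epochs: int = 1
    n_steps: int = 191_950
    max_seq_len: int = 512
    learning_rate: float = 1e-4
    weight_decay: float = 0.01
    adam_betas: Tuple[float, float] = (0.9, 0.999)
    num_warmup_steps: int = 10_000
    seed: int = 2021


def linear_warmup_lr(step: int, cfg: PretrainConfig) -> float:
    """Learning rate at `step`, assuming linear warmup then linear decay to 0."""
    if step < cfg.num_warmup_steps:
        # Warmup: ramp from 0 up to the peak learning rate.
        return cfg.learning_rate * step / cfg.num_warmup_steps
    # Decay: fall linearly from the peak to 0 at the final step.
    remaining = max(0, cfg.n_steps - step)
    return cfg.learning_rate * remaining / (cfg.n_steps - cfg.num_warmup_steps)


def sequences_seen(cfg: PretrainConfig, num_gpus: int = 1) -> int:
    """Sequences processed in training; pass num_gpus > 1 if batch_size is per device."""
    return cfg.batch_size * num_gpus * cfg.n_steps


if __name__ == "__main__":
    cfg = PretrainConfig()
    for step in (0, 5_000, 10_000, 100_975, 191_950):
        print(step, linear_warmup_lr(step, cfg))
    print(sequences_seen(cfg))              # if 32 is the global batch size
    print(sequences_seen(cfg, num_gpus=4))  # if 32 is the per-GPU batch size
```

Under these assumptions the learning rate peaks at 1e-4 at step 10,000 and is back to half the peak at step 100,975. The single epoch of 191,950 steps then covers 6,142,400 sequences of up to 512 tokens if 32 is the global batch size, or 24,569,600 if it is the per-GPU batch size.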

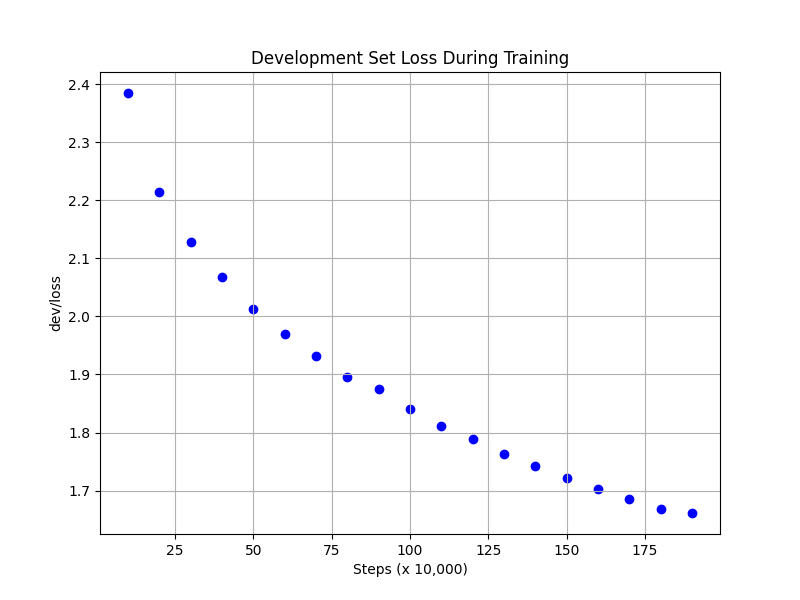

Performance

During training we monitored the evaluation loss on the TongueSwitcher dev set.

Authors

- Igor Sterner: is473 [at] cam.ac.uk
- Simone Teufel: sht25 [at] cam.ac.uk

BibTeX entry and citation info

@inproceedings{sterner2023tongueswitcher,
  author = {Igor Sterner and Simone Teufel},
  title = {TongueSwitcher: Fine-Grained Identification of German-English Code-Switching},
  booktitle = {Sixth Workshop on Computational Approaches to Linguistic Code-Switching},
  year = {2023},
}