---
datasets:
- ewof/code-alpaca-instruct-unfiltered
library_name: peft
tags:
- open-llama
- llama
- code
- instruct
- instruct-code
- code-alpaca
- alpaca-instruct
- alpaca
- llama7b
---

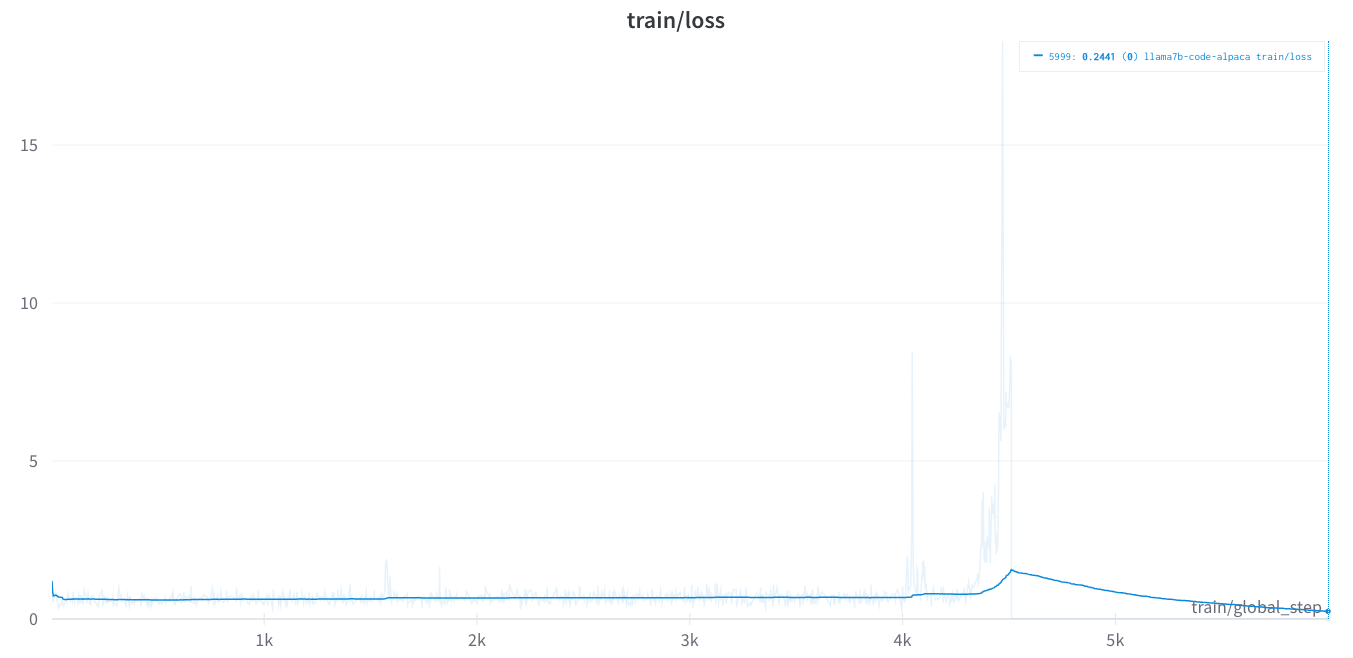

We fine-tuned Open Llama 7B on the Code-Alpaca-Instruct dataset (ewof/code-alpaca-instruct-unfiltered) for 3 epochs using the MonsterAPI no-code LLM finetuner.

This dataset is an unfiltered version of HuggingFaceH4/CodeAlpaca_20K, with 36 instances of blatant alignment removed.
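The exact criteria used to build the unfiltered dataset are not described here. The sketch below shows one common way such "unfiltering" can be done: drop examples whose response contains refusal or alignment boilerplate. The marker phrases, the `output` field name, and the helper names `is_aligned` and `unfilter` are all hypothetical assumptions for illustration, not the dataset author's actual method.

```python
# Hypothetical alignment/refusal markers. The real criteria used to build
# ewof/code-alpaca-instruct-unfiltered are NOT documented here.
ALIGNMENT_MARKERS = (
    "as an ai language model",
    "i'm sorry, but",
    "i cannot fulfill",
)


def is_aligned(example: dict, field: str = "output") -> bool:
    """Return True if the response field contains any alignment marker.

    The field name "output" is an assumption about the dataset schema.
    """
    text = example.get(field, "").lower()
    return any(marker in text for marker in ALIGNMENT_MARKERS)


def unfilter(examples: list, field: str = "output") -> tuple:
    """Drop examples that look like alignment boilerplate.

    Returns (kept_examples, number_removed).
    """
    kept = [ex for ex in examples if not is_aligned(ex, field)]
    return kept, len(examples) - len(kept)


if __name__ == "__main__":
    data = [
        {"instruction": "Reverse a string in Python.", "output": "s[::-1]"},
        {"instruction": "Share your opinion.",
         "output": "As an AI language model, I do not have opinions."},
        {"instruction": "Sum a list.", "output": "sum(xs)"},
    ]
    kept, removed = unfilter(data)
    print(f"kept={len(kept)} removed={removed}")
```

Simple substring matching like this is easy to audit, but it can both miss paraphrased refusals and remove legitimate examples, so a real pipeline would likely review the matches by hand.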

The fine-tuning session completed in 75 minutes and cost only $3 for the entire run!
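For a rough sense of scale, the two figures above imply an effective rate of about 2.40 USD per hour, assuming the stated cost covers only the 75-minute training session:

$$
\text{hourly rate} \approx \frac{\$3}{75\,\text{min} \times \frac{1\,\text{h}}{60\,\text{min}}} = \frac{\$3}{1.25\,\text{h}} = \$2.40/\text{h}
$$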