Migrate model card from transformers-repo

Read announcement at https://discuss.huggingface.co/t/announcement-all-model-cards-will-be-migrated-to-hf-co-model-repos/2755

Original file history: https://github.com/huggingface/transformers/commits/master/model_cards/bert-base-german-cased-README.md

---
language: de
license: mit
thumbnail: https://static.tildacdn.com/tild6438-3730-4164-b266-613634323466/german_bert.png
tags:
- exbert
---

<a href="https://huggingface.co/exbert/?model=bert-base-german-cased">
	<img width="300px" src="https://cdn-media.huggingface.co/exbert/button.png">
</a>

# German BERT

## Overview
**Language model:** bert-base-cased
**Language:** German
**Training data:** Wiki, OpenLegalData, News (~12 GB)
**Eval data:** CoNLL03 (NER), GermEval14 (NER), GermEval18 (Classification), GNAD (Classification)
**Infrastructure:** 1x TPU v2
**Published:** Jun 14th, 2019

**Update April 3rd, 2020**: We updated the vocabulary file on deepset's S3 bucket to conform with the default tokenization of punctuation tokens. For details, see the related [FARM issue](https://github.com/deepset-ai/FARM/issues/60). If you want to use the old vocabulary, we have also uploaded a ["deepset/bert-base-german-cased-oldvocab"](https://huggingface.co/deepset/bert-base-german-cased-oldvocab) model.

## Details
- We trained using Google's TensorFlow code on a single Cloud TPU v2 with standard settings.
- We trained for 810k steps with a batch size of 1024 at sequence length 128, followed by 30k steps at sequence length 512. Training took about 9 days.
- As training data we used the latest German Wikipedia dump (6 GB of raw text files), the OpenLegalData dump (2.4 GB) and news articles (3.6 GB).
- We cleaned the data dumps with tailored scripts and segmented sentences with spaCy v2.1. To create TensorFlow records, we used the recommended SentencePiece library to build the WordPiece vocabulary and TensorFlow scripts to convert the text into data usable by BERT.
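Google's reference BERT `create_pretraining_data.py` script reads plain text with one sentence per line and a blank line between documents. The sketch below shows how cleaned documents could be written into that layout. It is illustrative only: the regex splitter is a hypothetical stand-in for the spaCy v2.1 segmentation described above, and the function names are not part of any deepset or Google tooling.

```python
import re
from typing import Iterable, List

# Naive boundary: ".", "!" or "?" followed by whitespace and an uppercase letter.
# Hypothetical stand-in for spaCy sentence segmentation; it mis-splits
# abbreviations such as "z. B." that a real segmenter would handle.
_SENT_BOUNDARY = re.compile(r"(?<=[.!?])\s+(?=[A-ZÄÖÜ])")


def split_sentences(text: str) -> List[str]:
    """Split one cleaned document into sentences (heuristic)."""
    text = " ".join(text.split())  # collapse newlines and repeated whitespace
    return [s for s in _SENT_BOUNDARY.split(text) if s]


def to_pretraining_format(documents: Iterable[str]) -> str:
    """Render documents as one sentence per line, blank line between documents."""
    blocks = []
    for doc in documents:
        sentences = split_sentences(doc)
        if sentences:  # skip documents that are empty after cleaning
            blocks.append("\n".join(sentences))
    return "\n\n".join(blocks) + "\n"


if __name__ == "__main__":
    docs = [
        "Der Vertrag wurde gestern unterzeichnet. Beide Parteien sind zufrieden.",
        "Das Gericht wies die Klage ab!",
    ]
    print(to_pretraining_format(docs), end="")
```

Keeping real sentence boundaries matters here because BERT's next sentence prediction objective samples sentence pairs from these lines.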

See https://deepset.ai/german-bert for more details.

## Hyperparameters

```
batch_size = 1024
n_steps = 810_000
max_seq_len = 128 (and 512 later)
learning_rate = 1e-4
lr_schedule = LinearWarmup
num_warmup_steps = 10_000
```
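The exact shape of `LinearWarmup` is not spelled out above. Since training used Google's TensorFlow code with standard settings, a plausible reading is the `create_optimizer` schedule from Google's reference BERT repository: the rate rises linearly from 0 to `learning_rate` over `num_warmup_steps`, then decays linearly to 0 at the last step. The sketch below assumes exactly that; the function name is hypothetical, and whether the additional 30k steps at sequence length 512 reused this schedule is not stated. For reference, 810k + 30k = 840k steps, which matches the last checkpoint mentioned in the Performance section.

```python
def bert_learning_rate(
    step: int,
    peak_lr: float = 1e-4,
    num_warmup_steps: int = 10_000,
    num_train_steps: int = 810_000,
) -> float:
    """Learning rate at `step`: linear warmup, then linear decay to 0.

    Assumed to mirror Google's reference BERT schedule: during warmup the
    rate is peak_lr * step / num_warmup_steps; afterwards it is
    peak_lr * (1 - step / num_train_steps), i.e. the decay is measured from
    step 0, so the rate right after warmup is slightly below peak_lr.
    """
    if step < 0:
        raise ValueError("step must be non-negative")
    if step < num_warmup_steps:
        return peak_lr * step / num_warmup_steps
    step = min(step, num_train_steps)  # stay at 0 after the final step
    return peak_lr * (1.0 - step / num_train_steps)


if __name__ == "__main__":
    for s in (0, 5_000, 10_000, 405_000, 810_000):
        print(f"step {s:>7,}: lr = {bert_learning_rate(s):.3e}")
```

Measuring the decay from step 0 rather than from the end of warmup follows the reference implementation; with 10k warmup steps out of 810k the difference is small.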

|

| 45 |

+

|

| 46 |

+

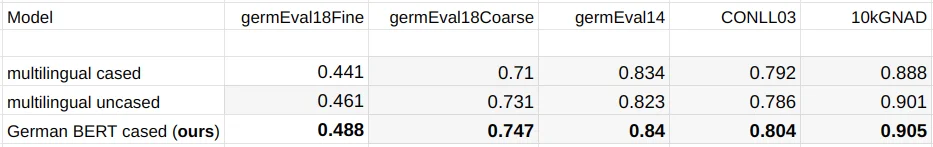

## Performance

During training, we monitored the loss and evaluated different model checkpoints on the following German datasets:

- germEval18Fine: Macro F1 score for multiclass offensive language classification
- germEval18coarse: Macro F1 score for binary offensive language classification
- germEval14: Seq F1 score for NER (file names deuutf.\*)
- CONLL03: Seq F1 score for NER
- 10kGNAD: Accuracy for document classification
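To make the two F1 variants above concrete, here is a minimal pure-Python sketch. Macro F1 averages per-class F1 with equal weight over the classes present in gold or predicted labels (scikit-learn's default label set). "Seq F1" is assumed here to mean micro-averaged entity-level F1 over BIO-tagged spans, in the style of tools such as seqeval; the exact scorer used for the numbers in this card is not stated, and all function names are illustrative.

```python
from typing import Sequence, Set, Tuple


def macro_f1(gold: Sequence[str], pred: Sequence[str]) -> float:
    """Unweighted mean of per-class F1 over classes seen in gold or pred."""
    labels = sorted(set(gold) | set(pred))
    scores = []
    for label in labels:
        tp = sum(g == label and p == label for g, p in zip(gold, pred))
        fp = sum(g != label and p == label for g, p in zip(gold, pred))
        fn = sum(g == label and p != label for g, p in zip(gold, pred))
        denom = 2 * tp + fp + fn  # F1 = 2TP / (2TP + FP + FN)
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores) if scores else 0.0


def bio_spans(tags: Sequence[str]) -> Set[Tuple[str, int, int]]:
    """Extract (type, start, end_exclusive) entity spans from BIO tags.

    An I- tag that does not continue a span of the same type starts a new one.
    """
    spans = set()
    start, etype = None, None
    for i, tag in enumerate(list(tags) + ["O"]):  # sentinel "O" closes a trailing span
        continues = tag.startswith("I-") and tag[2:] == etype
        if not continues:
            if etype is not None:
                spans.add((etype, start, i))
            start, etype = (None, None) if tag == "O" else (i, tag[2:])
    return spans


def entity_f1(gold: Sequence[Sequence[str]], pred: Sequence[Sequence[str]]) -> float:
    """Micro-averaged entity-level F1 over a corpus of tagged sentences."""
    tp = fp = fn = 0
    for g_tags, p_tags in zip(gold, pred):
        g, p = bio_spans(g_tags), bio_spans(p_tags)
        tp += len(g & p)  # exact span and type match
        fp += len(p - g)
        fn += len(g - p)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


if __name__ == "__main__":
    # Illustrative GermEval 2018 coarse labels and a toy NER sentence.
    print(macro_f1(["OTHER", "OFFENSE", "OTHER"], ["OTHER", "OTHER", "OTHER"]))
    print(entity_f1([["B-PER", "I-PER", "O", "B-LOC"]], [["B-PER", "I-PER", "O", "O"]]))
```

Entity-level F1 only credits a prediction when both the span boundaries and the entity type match exactly, which is why it is usually lower than token-level accuracy on the same output.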

|

| 55 |

+

|

| 56 |

+

Even without thorough hyperparameter tuning, we observed quite stable learning especially for our German model. Multiple restarts with different seeds produced quite similar results.

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

|

| 60 |

+

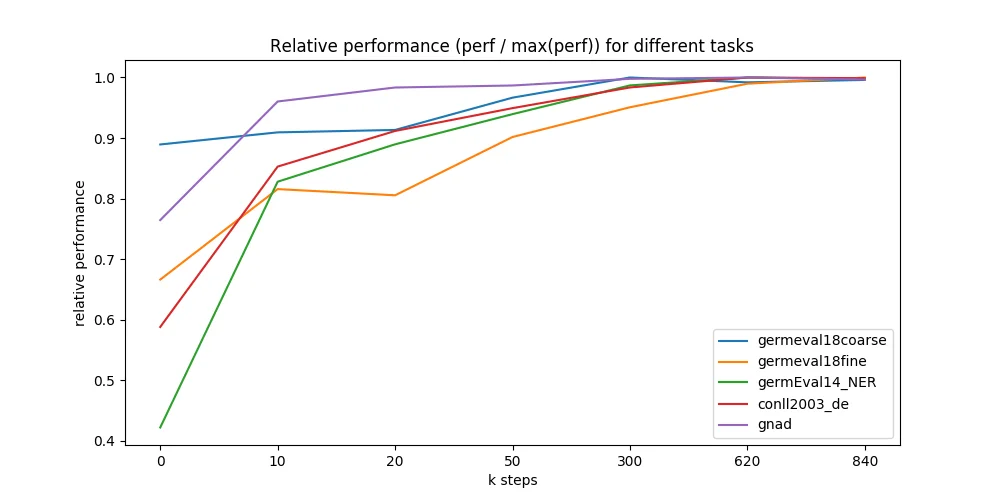

We further evaluated different points during the 9 days of pre-training and were astonished how fast the model converges to the maximally reachable performance. We ran all 5 downstream tasks on 7 different model checkpoints - taken at 0 up to 840k training steps (x-axis in figure below). Most checkpoints are taken from early training where we expected most performance changes. Surprisingly, even a randomly initialized BERT can be trained only on labeled downstream datasets and reach good performance (blue line, GermEval 2018 Coarse task, 795 kB trainset size).

## Authors
Branden Chan: `branden.chan [at] deepset.ai`
Timo Möller: `timo.moeller [at] deepset.ai`
Malte Pietsch: `malte.pietsch [at] deepset.ai`
Tanay Soni: `tanay.soni [at] deepset.ai`

## About us

We bring NLP to the industry via open source!
Our focus: industry-specific language models & large-scale QA systems.

Some of our work:
- [German BERT (aka "bert-base-german-cased")](https://deepset.ai/german-bert)
- [FARM](https://github.com/deepset-ai/FARM)
- [Haystack](https://github.com/deepset-ai/haystack/)

Get in touch:
[Twitter](https://twitter.com/deepset_ai) | [LinkedIn](https://www.linkedin.com/company/deepset-ai/) | [Website](https://deepset.ai)