Upload model from GitHub.

Browse files- .gitattributes +2 -0

- CODEOWNERS +2 -0

- CODE_OF_CONDUCT.md +105 -0

- CodeT5.png +0 -0

- CodeT5_model_card.pdf +0 -0

- LICENSE.txt +12 -0

- README.md +247 -3

- SECURITY.md +7 -0

- _utils.py +306 -0

- codet5.gif +3 -0

- configs.py +137 -0

- evaluator/CodeBLEU/bleu.py +589 -0

- evaluator/CodeBLEU/calc_code_bleu.py +81 -0

- evaluator/CodeBLEU/dataflow_match.py +149 -0

- evaluator/CodeBLEU/keywords/c_sharp.txt +107 -0

- evaluator/CodeBLEU/keywords/java.txt +50 -0

- evaluator/CodeBLEU/parser/DFG.py +1184 -0

- evaluator/CodeBLEU/parser/__init__.py +8 -0

- evaluator/CodeBLEU/parser/build.py +21 -0

- evaluator/CodeBLEU/parser/build.sh +8 -0

- evaluator/CodeBLEU/parser/my-languages.so +3 -0

- evaluator/CodeBLEU/parser/utils.py +108 -0

- evaluator/CodeBLEU/readme.txt +1 -0

- evaluator/CodeBLEU/syntax_match.py +78 -0

- evaluator/CodeBLEU/utils.py +106 -0

- evaluator/CodeBLEU/weighted_ngram_match.py +558 -0

- evaluator/bleu.py +134 -0

- evaluator/smooth_bleu.py +208 -0

- models.py +398 -0

- run_clone.py +325 -0

- run_defect.py +314 -0

- run_gen.py +387 -0

- run_multi_gen.py +535 -0

- sh/exp_with_args.sh +94 -0

- sh/run_exp.py +165 -0

- tokenizer/apply_tokenizer.py +17 -0

- tokenizer/salesforce/codet5-merges.txt +0 -0

- tokenizer/salesforce/codet5-vocab.json +0 -0

- tokenizer/train_tokenizer.py +22 -0

- utils.py +263 -0

.gitattributes

CHANGED

|

@@ -32,3 +32,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 32 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

| 32 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 35 |

+

codet5.gif filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

evaluator/CodeBLEU/parser/my-languages.so filter=lfs diff=lfs merge=lfs -text

|

CODEOWNERS

ADDED

|

@@ -0,0 +1,2 @@

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Comment line immediately above ownership line is reserved for related gus information. Please be careful while editing.

|

| 2 |

+

#ECCN:Open Source

|

CODE_OF_CONDUCT.md

ADDED

|

@@ -0,0 +1,105 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Salesforce Open Source Community Code of Conduct

|

| 2 |

+

|

| 3 |

+

## About the Code of Conduct

|

| 4 |

+

|

| 5 |

+

Equality is a core value at Salesforce. We believe a diverse and inclusive

|

| 6 |

+

community fosters innovation and creativity, and are committed to building a

|

| 7 |

+

culture where everyone feels included.

|

| 8 |

+

|

| 9 |

+

Salesforce open-source projects are committed to providing a friendly, safe, and

|

| 10 |

+

welcoming environment for all, regardless of gender identity and expression,

|

| 11 |

+

sexual orientation, disability, physical appearance, body size, ethnicity, nationality,

|

| 12 |

+

race, age, religion, level of experience, education, socioeconomic status, or

|

| 13 |

+

other similar personal characteristics.

|

| 14 |

+

|

| 15 |

+

The goal of this code of conduct is to specify a baseline standard of behavior so

|

| 16 |

+

that people with different social values and communication styles can work

|

| 17 |

+

together effectively, productively, and respectfully in our open source community.

|

| 18 |

+

It also establishes a mechanism for reporting issues and resolving conflicts.

|

| 19 |

+

|

| 20 |

+

All questions and reports of abusive, harassing, or otherwise unacceptable behavior

|

| 21 |

+

in a Salesforce open-source project may be reported by contacting the Salesforce

|

| 22 |

+

Open Source Conduct Committee at ossconduct@salesforce.com.

|

| 23 |

+

|

| 24 |

+

## Our Pledge

|

| 25 |

+

|

| 26 |

+

In the interest of fostering an open and welcoming environment, we as

|

| 27 |

+

contributors and maintainers pledge to making participation in our project and

|

| 28 |

+

our community a harassment-free experience for everyone, regardless of gender

|

| 29 |

+

identity and expression, sexual orientation, disability, physical appearance,

|

| 30 |

+

body size, ethnicity, nationality, race, age, religion, level of experience, education,

|

| 31 |

+

socioeconomic status, or other similar personal characteristics.

|

| 32 |

+

|

| 33 |

+

## Our Standards

|

| 34 |

+

|

| 35 |

+

Examples of behavior that contributes to creating a positive environment

|

| 36 |

+

include:

|

| 37 |

+

|

| 38 |

+

* Using welcoming and inclusive language

|

| 39 |

+

* Being respectful of differing viewpoints and experiences

|

| 40 |

+

* Gracefully accepting constructive criticism

|

| 41 |

+

* Focusing on what is best for the community

|

| 42 |

+

* Showing empathy toward other community members

|

| 43 |

+

|

| 44 |

+

Examples of unacceptable behavior by participants include:

|

| 45 |

+

|

| 46 |

+

* The use of sexualized language or imagery and unwelcome sexual attention or

|

| 47 |

+

advances

|

| 48 |

+

* Personal attacks, insulting/derogatory comments, or trolling

|

| 49 |

+

* Public or private harassment

|

| 50 |

+

* Publishing, or threatening to publish, others' private information—such as

|

| 51 |

+

a physical or electronic address—without explicit permission

|

| 52 |

+

* Other conduct which could reasonably be considered inappropriate in a

|

| 53 |

+

professional setting

|

| 54 |

+

* Advocating for or encouraging any of the above behaviors

|

| 55 |

+

|

| 56 |

+

## Our Responsibilities

|

| 57 |

+

|

| 58 |

+

Project maintainers are responsible for clarifying the standards of acceptable

|

| 59 |

+

behavior and are expected to take appropriate and fair corrective action in

|

| 60 |

+

response to any instances of unacceptable behavior.

|

| 61 |

+

|

| 62 |

+

Project maintainers have the right and responsibility to remove, edit, or

|

| 63 |

+

reject comments, commits, code, wiki edits, issues, and other contributions

|

| 64 |

+

that are not aligned with this Code of Conduct, or to ban temporarily or

|

| 65 |

+

permanently any contributor for other behaviors that they deem inappropriate,

|

| 66 |

+

threatening, offensive, or harmful.

|

| 67 |

+

|

| 68 |

+

## Scope

|

| 69 |

+

|

| 70 |

+

This Code of Conduct applies both within project spaces and in public spaces

|

| 71 |

+

when an individual is representing the project or its community. Examples of

|

| 72 |

+

representing a project or community include using an official project email

|

| 73 |

+

address, posting via an official social media account, or acting as an appointed

|

| 74 |

+

representative at an online or offline event. Representation of a project may be

|

| 75 |

+

further defined and clarified by project maintainers.

|

| 76 |

+

|

| 77 |

+

## Enforcement

|

| 78 |

+

|

| 79 |

+

Instances of abusive, harassing, or otherwise unacceptable behavior may be

|

| 80 |

+

reported by contacting the Salesforce Open Source Conduct Committee

|

| 81 |

+

at ossconduct@salesforce.com. All complaints will be reviewed and investigated

|

| 82 |

+

and will result in a response that is deemed necessary and appropriate to the

|

| 83 |

+

circumstances. The committee is obligated to maintain confidentiality with

|

| 84 |

+

regard to the reporter of an incident. Further details of specific enforcement

|

| 85 |

+

policies may be posted separately.

|

| 86 |

+

|

| 87 |

+

Project maintainers who do not follow or enforce the Code of Conduct in good

|

| 88 |

+

faith may face temporary or permanent repercussions as determined by other

|

| 89 |

+

members of the project's leadership and the Salesforce Open Source Conduct

|

| 90 |

+

Committee.

|

| 91 |

+

|

| 92 |

+

## Attribution

|

| 93 |

+

|

| 94 |

+

This Code of Conduct is adapted from the [Contributor Covenant][contributor-covenant-home],

|

| 95 |

+

version 1.4, available at https://www.contributor-covenant.org/version/1/4/code-of-conduct.html.

|

| 96 |

+

It includes adaptions and additions from [Go Community Code of Conduct][golang-coc],

|

| 97 |

+

[CNCF Code of Conduct][cncf-coc], and [Microsoft Open Source Code of Conduct][microsoft-coc].

|

| 98 |

+

|

| 99 |

+

This Code of Conduct is licensed under the [Creative Commons Attribution 3.0 License][cc-by-3-us].

|

| 100 |

+

|

| 101 |

+

[contributor-covenant-home]: https://www.contributor-covenant.org (https://www.contributor-covenant.org/)

|

| 102 |

+

[golang-coc]: https://golang.org/conduct

|

| 103 |

+

[cncf-coc]: https://github.com/cncf/foundation/blob/master/code-of-conduct.md

|

| 104 |

+

[microsoft-coc]: https://opensource.microsoft.com/codeofconduct/

|

| 105 |

+

[cc-by-3-us]: https://creativecommons.org/licenses/by/3.0/us/

|

CodeT5.png

ADDED

|

CodeT5_model_card.pdf

ADDED

|

Binary file (114 kB). View file

|

|

|

LICENSE.txt

ADDED

|

@@ -0,0 +1,12 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Copyright (c) 2021, Salesforce.com, Inc.

|

| 2 |

+

All rights reserved.

|

| 3 |

+

|

| 4 |

+

Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

|

| 5 |

+

|

| 6 |

+

* Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

|

| 7 |

+

|

| 8 |

+

* Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

|

| 9 |

+

|

| 10 |

+

* Neither the name of Salesforce.com nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

|

| 11 |

+

|

| 12 |

+

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

README.md

CHANGED

|

@@ -1,3 +1,247 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

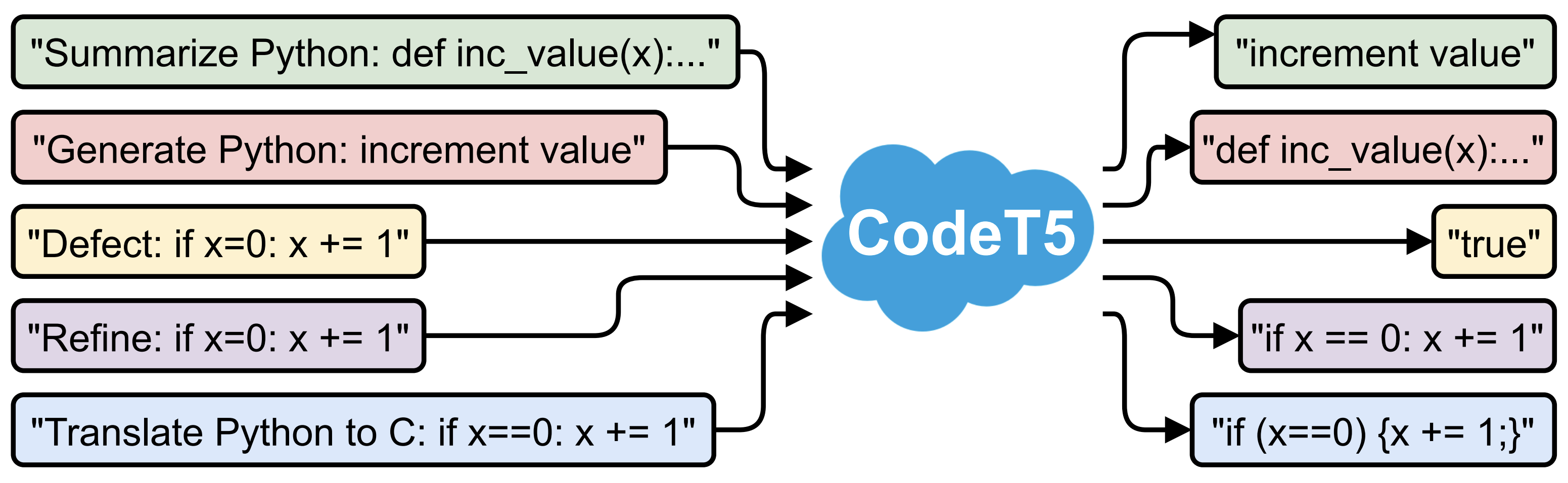

| 1 |

+

# CodeT5: Identifier-aware Unified Pre-trained Encoder-Decoder Models for Code Understanding and Generation

|

| 2 |

+

|

| 3 |

+

This is the official PyTorch implementation for the following EMNLP 2021 paper from Salesforce Research:

|

| 4 |

+

|

| 5 |

+

**Title**: [CodeT5: Identifier-aware Unified Pre-trained Encoder-Decoder Models for Code Understanding and Generation](https://arxiv.org/pdf/2109.00859.pdf)

|

| 6 |

+

|

| 7 |

+

**Authors**: [Yue Wang](https://yuewang-cuhk.github.io/), [Weishi Wang](https://www.linkedin.com/in/weishi-wang/)

|

| 8 |

+

, [Shafiq Joty](https://raihanjoty.github.io/), and [Steven C.H. Hoi](https://sites.google.com/view/stevenhoi/home)

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

## Updates

|

| 13 |

+

|

| 14 |

+

**July 06, 2022**

|

| 15 |

+

|

| 16 |

+

We release two large-sized CodeT5 checkpoints at Hugging Face: [Salesforce/codet5-large](https://huggingface.co/Salesforce/codet5-large) and [Salesforce/codet5-large-ntp-py](https://huggingface.co/Salesforce/codet5-large-ntp-py), which are introduced by the paper: [CodeRL: Mastering Code Generation through Pretrained Models and Deep Reinforcement Learning](https://arxiv.org/pdf/2207.01780.pdf) by Hung Le, Yue Wang, Akhilesh Deepak Gotmare, Silvio Savarese, Steven C.H. Hoi.

|

| 17 |

+

|

| 18 |

+

* CodeT5-large was pretrained using Masked Span Prediction (MSP) objective on CodeSearchNet and achieve new SOTA results on several CodeXGLUE benchmarks. The finetuned checkpoints are released at [here](https://console.cloud.google.com/storage/browser/sfr-codet5-data-research/finetuned_models). See Appendix A.1 of the [paper](https://arxiv.org/pdf/2207.01780.pdf) for more details.

|

| 19 |

+

|

| 20 |

+

* CodeT5-large-ntp-py was first pretrained using Masked Span Prediction (MSP) objective on CodeSearchNet and GCPY (the Python split of [Github Code](https://huggingface.co/datasets/codeparrot/github-code) data), followed by another 10 epochs on GCPY using Next Token Prediction (NTP) objective.

|

| 21 |

+

|

| 22 |

+

CodeT5-large-ntp-py is especially optimized for Python code generation tasks and employed as the foundation model for our [CodeRL](https://github.com/salesforce/CodeRL), yielding new SOTA results on the APPS Python competition-level program synthesis benchmark. See the [paper](https://arxiv.org/pdf/2207.01780.pdf) for more details.

|

| 23 |

+

|

| 24 |

+

**Oct 29, 2021**

|

| 25 |

+

|

| 26 |

+

We release [fine-tuned checkpoints](https://console.cloud.google.com/storage/browser/sfr-codet5-data-research/finetuned_models)

|

| 27 |

+

for all the downstream tasks covered in the paper.

|

| 28 |

+

|

| 29 |

+

**Oct 25, 2021**

|

| 30 |

+

|

| 31 |

+

We release a CodeT5-base fine-tuned

|

| 32 |

+

checkpoint ([Salesforce/codet5-base-multi-sum](https://huggingface.co/Salesforce/codet5-base-multi-sum)) for

|

| 33 |

+

multilingual code summarzation. Below is how to use this model:

|

| 34 |

+

|

| 35 |

+

```python

|

| 36 |

+

from transformers import RobertaTokenizer, T5ForConditionalGeneration

|

| 37 |

+

|

| 38 |

+

if __name__ == '__main__':

|

| 39 |

+

tokenizer = RobertaTokenizer.from_pretrained('Salesforce/codet5-base')

|

| 40 |

+

model = T5ForConditionalGeneration.from_pretrained('Salesforce/codet5-base-multi-sum')

|

| 41 |

+

|

| 42 |

+

text = """def svg_to_image(string, size=None):

|

| 43 |

+

if isinstance(string, unicode):

|

| 44 |

+

string = string.encode('utf-8')

|

| 45 |

+

renderer = QtSvg.QSvgRenderer(QtCore.QByteArray(string))

|

| 46 |

+

if not renderer.isValid():

|

| 47 |

+

raise ValueError('Invalid SVG data.')

|

| 48 |

+

if size is None:

|

| 49 |

+

size = renderer.defaultSize()

|

| 50 |

+

image = QtGui.QImage(size, QtGui.QImage.Format_ARGB32)

|

| 51 |

+

painter = QtGui.QPainter(image)

|

| 52 |

+

renderer.render(painter)

|

| 53 |

+

return image"""

|

| 54 |

+

|

| 55 |

+

input_ids = tokenizer(text, return_tensors="pt").input_ids

|

| 56 |

+

|

| 57 |

+

generated_ids = model.generate(input_ids, max_length=20)

|

| 58 |

+

print(tokenizer.decode(generated_ids[0], skip_special_tokens=True))

|

| 59 |

+

# this prints: "Convert a SVG string to a QImage."

|

| 60 |

+

```

|

| 61 |

+

|

| 62 |

+

**Oct 18, 2021**

|

| 63 |

+

|

| 64 |

+

We add a [model card](https://github.com/salesforce/CodeT5/blob/main/CodeT5_model_card.pdf) for CodeT5! Please reach out

|

| 65 |

+

if you have any questions about it.

|

| 66 |

+

|

| 67 |

+

**Sep 24, 2021**

|

| 68 |

+

|

| 69 |

+

CodeT5 is now in [hugginface](https://huggingface.co/)!

|

| 70 |

+

|

| 71 |

+

You can simply load the model ([CodeT5-small](https://huggingface.co/Salesforce/codet5-small)

|

| 72 |

+

and [CodeT5-base](https://huggingface.co/Salesforce/codet5-base)) and do the inference:

|

| 73 |

+

|

| 74 |

+

```python

|

| 75 |

+

from transformers import RobertaTokenizer, T5ForConditionalGeneration

|

| 76 |

+

|

| 77 |

+

tokenizer = RobertaTokenizer.from_pretrained('Salesforce/codet5-base')

|

| 78 |

+

model = T5ForConditionalGeneration.from_pretrained('Salesforce/codet5-base')

|

| 79 |

+

|

| 80 |

+

text = "def greet(user): print(f'hello <extra_id_0>!')"

|

| 81 |

+

input_ids = tokenizer(text, return_tensors="pt").input_ids

|

| 82 |

+

|

| 83 |

+

# simply generate one code span

|

| 84 |

+

generated_ids = model.generate(input_ids, max_length=8)

|

| 85 |

+

print(tokenizer.decode(generated_ids[0], skip_special_tokens=True))

|

| 86 |

+

# this prints "{user.username}"

|

| 87 |

+

```

|

| 88 |

+

|

| 89 |

+

## Introduction

|

| 90 |

+

|

| 91 |

+

This repo provides the code for reproducing the experiments

|

| 92 |

+

in [CodeT5: Identifier-aware Unified Pre-trained Encoder-Decoder Models for Code Understanding and Generation](https://arxiv.org/pdf/2109.00859.pdf)

|

| 93 |

+

. CodeT5 is a new pre-trained encoder-decoder model for programming languages, which is pre-trained on **8.35M**

|

| 94 |

+

functions in 8 programming languages (Python, Java, JavaScript, PHP, Ruby, Go, C, and C#). In total, it achieves

|

| 95 |

+

state-of-the-art results on **14 sub-tasks** in a code intelligence benchmark - [CodeXGLUE](https://github.com/microsoft/CodeXGLUE).

|

| 96 |

+

|

| 97 |

+

Paper link: https://arxiv.org/abs/2109.00859

|

| 98 |

+

|

| 99 |

+

Blog link: https://blog.salesforceairesearch.com/codet5/

|

| 100 |

+

|

| 101 |

+

The code currently includes two pre-trained checkpoints ([CodeT5-small](https://huggingface.co/Salesforce/codet5-small)

|

| 102 |

+

and [CodeT5-base](https://huggingface.co/Salesforce/codet5-base)) and scripts to fine-tune them on 4 generation tasks (

|

| 103 |

+

code summarization, code generation, translation, and refinement) plus 2 understanding tasks (code defect detection and

|

| 104 |

+

clone detection) in CodeXGLUE. We also provide their fine-tuned checkpoints to facilitate the easy replication

|

| 105 |

+

of our paper.

|

| 106 |

+

|

| 107 |

+

In practice, CodeT5 can be deployed as an AI-powered coding assistant to boost the productivity of software developers.

|

| 108 |

+

At Salesforce, we build an [AI coding assistant demo](https://github.com/salesforce/CodeT5/raw/main/codet5.gif) using

|

| 109 |

+

CodeT5 as a VS Code plugin to provide three capabilities for Apex developers:

|

| 110 |

+

|

| 111 |

+

- **Text-to-code generation**: generate code based on the natural language description.

|

| 112 |

+

- **Code autocompletion**: complete the whole function of code given the target function name.

|

| 113 |

+

- **Code summarization**: generate the summary of a function in natural language description.

|

| 114 |

+

|

| 115 |

+

## Table of Contents

|

| 116 |

+

|

| 117 |

+

1. [Citation](#citation)

|

| 118 |

+

2. [License](#license)

|

| 119 |

+

3. [Dependency](#dependency)

|

| 120 |

+

4. [Download](#download)

|

| 121 |

+

5. [Fine-tuning](#fine-tuning)

|

| 122 |

+

6. [Get Involved](#get-involved)

|

| 123 |

+

|

| 124 |

+

## Citation

|

| 125 |

+

|

| 126 |

+

If you find this code to be useful for your research, please consider citing:

|

| 127 |

+

|

| 128 |

+

```

|

| 129 |

+

@inproceedings{

|

| 130 |

+

wang2021codet5,

|

| 131 |

+

title={CodeT5: Identifier-aware Unified Pre-trained Encoder-Decoder Models for Code Understanding and Generation},

|

| 132 |

+

author={Yue Wang, Weishi Wang, Shafiq Joty, Steven C.H. Hoi},

|

| 133 |

+

booktitle={Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, EMNLP 2021},

|

| 134 |

+

year={2021},

|

| 135 |

+

}

|

| 136 |

+

|

| 137 |

+

@article{coderl2022,

|

| 138 |

+

title={CodeRL: Mastering Code Generation through Pretrained Models and Deep Reinforcement Learning},

|

| 139 |

+

author={Le, Hung and Wang, Yue and Gotmare, Akhilesh Deepak and Savarese, Silvio and Hoi, Steven C. H.},

|

| 140 |

+

journal={arXiv preprint arXiv:2207.01780},

|

| 141 |

+

year={2022}

|

| 142 |

+

}

|

| 143 |

+

```

|

| 144 |

+

|

| 145 |

+

## License

|

| 146 |

+

|

| 147 |

+

The code is released under the BSD-3 License (see `LICENSE.txt` for details), but we also ask that users respect the

|

| 148 |

+

following:

|

| 149 |

+

|

| 150 |

+

This software should not be used to promote or profit from:

|

| 151 |

+

|

| 152 |

+

violence, hate, and division,

|

| 153 |

+

|

| 154 |

+

environmental destruction,

|

| 155 |

+

|

| 156 |

+

abuse of human rights, or

|

| 157 |

+

|

| 158 |

+

the destruction of people's physical and mental health.

|

| 159 |

+

|

| 160 |

+

We encourage users of this software to tell us about the applications in which they are putting it to use by emailing

|

| 161 |

+

codeT5@salesforce.com, and to

|

| 162 |

+

use [appropriate](https://arxiv.org/abs/1810.03993) [documentation](https://www.partnershiponai.org/about-ml/) when

|

| 163 |

+

developing high-stakes applications of this model.

|

| 164 |

+

|

| 165 |

+

## Dependency

|

| 166 |

+

|

| 167 |

+

- Pytorch 1.7.1

|

| 168 |

+

- tensorboard 2.4.1

|

| 169 |

+

- transformers 4.6.1

|

| 170 |

+

- tree-sitter 0.2.2

|

| 171 |

+

|

| 172 |

+

## Download

|

| 173 |

+

|

| 174 |

+

* [Pre-trained checkpoints](https://console.cloud.google.com/storage/browser/sfr-codet5-data-research/pretrained_models)

|

| 175 |

+

* [Fine-tuning data](https://console.cloud.google.com/storage/browser/sfr-codet5-data-research/data)

|

| 176 |

+

* [Fine-tuned checkpoints](https://console.cloud.google.com/storage/browser/sfr-codet5-data-research/finetuned_models)

|

| 177 |

+

|

| 178 |

+

Instructions to download:

|

| 179 |

+

|

| 180 |

+

```

|

| 181 |

+

# pip install gsutil

|

| 182 |

+

cd your-cloned-codet5-path

|

| 183 |

+

|

| 184 |

+

gsutil -m cp -r "gs://sfr-codet5-data-research/pretrained_models" .

|

| 185 |

+

gsutil -m cp -r "gs://sfr-codet5-data-research/data" .

|

| 186 |

+

gsutil -m cp -r "gs://sfr-codet5-data-research/finetuned_models" .

|

| 187 |

+

```

|

| 188 |

+

|

| 189 |

+

## Fine-tuning

|

| 190 |

+

|

| 191 |

+

Go to `sh` folder, set the `WORKDIR` in `exp_with_args.sh` to be your cloned CodeT5 repository path.

|

| 192 |

+

|

| 193 |

+

You can use `run_exp.py` to run a broad set of experiments by simply passing the `model_tag`, `task`, and `sub_task`

|

| 194 |

+

arguments. In total, we support five models (i.e., ['roberta', 'codebert', 'bart_base', 'codet5_small', 'codet5_base'])

|

| 195 |

+

and six tasks (i.e., ['summarize', 'concode', 'translate', 'refine', 'defect', 'clone']). For each task, we use

|

| 196 |

+

the `sub_task` to specify which specific datasets to fine-tne on. Below is the full list:

|

| 197 |

+

|

| 198 |

+

| \--task | \--sub\_task | Description |

|

| 199 |

+

| --------- | ---------------------------------- | -------------------------------------------------------------------------------------------------------------------------------- |

|

| 200 |

+

| summarize | ruby/javascript/go/python/java/php | code summarization task on [CodeSearchNet](https://arxiv.org/abs/1909.09436) data with six PLs |

|

| 201 |

+

| concode | none | text-to-code generation on [Concode](https://aclanthology.org/D18-1192.pdf) data |

|

| 202 |

+

| translate | java-cs/cs-java | code-to-code translation between [Java and C#](https://arxiv.org/pdf/2102.04664.pdf) |

|

| 203 |

+

| refine | small/medium | code refinement on [code repair data](https://arxiv.org/pdf/1812.08693.pdf) with small/medium functions |

|

| 204 |

+

| defect | none | code defect detection in [C/C++ data](https://proceedings.neurips.cc/paper/2019/file/49265d2447bc3bbfe9e76306ce40a31f-Paper.pdf) |

|

| 205 |

+

| clone | none | code clone detection in [Java data](https://arxiv.org/pdf/2002.08653.pdf) |

|

| 206 |

+

|

| 207 |

+

For example, if you want to run CodeT5-base model on the code summarization task for Python, you can simply run:

|

| 208 |

+

|

| 209 |

+

```

|

| 210 |

+

python run_exp.py --model_tag codet5_base --task summarize --sub_task python

|

| 211 |

+

```

|

| 212 |

+

|

| 213 |

+

For multi-task training, you can type:

|

| 214 |

+

|

| 215 |

+

```

|

| 216 |

+

python run_exp.py --model_tag codet5_base --task multi_task --sub_task none

|

| 217 |

+

```

|

| 218 |

+

|

| 219 |

+

Besides, you can specify:

|

| 220 |

+

|

| 221 |

+

```

|

| 222 |

+

model_dir: where to save fine-tuning checkpoints

|

| 223 |

+

res_dir: where to save the performance results

|

| 224 |

+

summary_dir: where to save the training curves

|

| 225 |

+

data_num: how many data instances to use, the default -1 is for using the full data

|

| 226 |

+

gpu: the index of the GPU to use in the cluster

|

| 227 |

+

```

|

| 228 |

+

|

| 229 |

+

You can also revise the suggested

|

| 230 |

+

arguments [here](https://github.com/salesforce/CodeT5/blob/0bf3c0c43e92fcf54d9df68c793ac22f2b60aad4/sh/run_exp.py#L14) or directly customize the [exp_with_args.sh](https://github.com/salesforce/CodeT5/blob/main/sh/exp_with_args.sh) bash file.

|

| 231 |

+

Please refer to the argument flags in [configs.py](https://github.com/salesforce/CodeT5/blob/main/configs.py) for the full

|

| 232 |

+

available options. The saved training curves in `summary_dir` can be visualized using [tensorboard](https://pypi.org/project/tensorboard/).

|

| 233 |

+

Note that we employ one A100 GPU for all fine-tuning experiments.

|

| 234 |

+

|

| 235 |

+

### How to reproduce the results using the released finetuned checkpoints?

|

| 236 |

+

|

| 237 |

+

* Remove the `--do_train --do_eval --do_eval_bleu` and reserve only `--do_test` at [here](https://github.com/salesforce/CodeT5/blob/5b37c34f4bbbfcfd972c24a9dd1f45716568ecb5/sh/exp_with_args.sh#L84).

|

| 238 |

+

* Pass the path of your downloaded finetuned checkpoint to load at [here](https://github.com/salesforce/CodeT5/blob/5b37c34f4bbbfcfd972c24a9dd1f45716568ecb5/run_gen.py#L366), e.g., `file = "CodeT5/finetuned_models/summarize_python_codet5_base.bin"`

|

| 239 |

+

* Run the program: `python run_exp.py --model_tag codet5_base --task summarize --sub_task python`

|

| 240 |

+

|

| 241 |

+

### How to fine-tune on your own task and dataset?

|

| 242 |

+

If you want to fine-tune on your dataset, you can add your own task and sub_task in `configs.py` ([here](https://github.com/salesforce/CodeT5/blob/d27512d23ba6130e089e571d8c3e399760db1c31/configs.py#L11)) and add your data path and the function to read in `utils.py` ([here](https://github.com/salesforce/CodeT5/blob/5bb41e21b07fee73f310476a91ded00e385290d7/utils.py#L103) and [here](https://github.com/salesforce/CodeT5/blob/5bb41e21b07fee73f310476a91ded00e385290d7/utils.py#L149)). The read function can be implemented in `_utils.py` similar to [this one](https://github.com/salesforce/CodeT5/blob/aaf9c4a920c4986abfd54a74f5456b056b6409e0/_utils.py#L213). If your task to add is a generation task, you can simply reuse or customize the `run_gen.py`. For understanding tasks, please refer to `run_defect.py` and `run_clone.py`.

|

| 243 |

+

|

| 244 |

+

## Get Involved

|

| 245 |

+

|

| 246 |

+

Please create a GitHub issue if you have any questions, suggestions, requests or bug-reports. We welcome PRs!

|

| 247 |

+

|

SECURITY.md

ADDED

|

@@ -0,0 +1,7 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

## Security

|

| 2 |

+

|

| 3 |

+

Please report any security issue to [security@salesforce.com](mailto:security@salesforce.com)

|

| 4 |

+

as soon as it is discovered. This library limits its runtime dependencies in

|

| 5 |

+

order to reduce the total cost of ownership as much as can be, but all consumers

|

| 6 |

+

should remain vigilant and have their security stakeholders review all third-party

|

| 7 |

+

products (3PP) like this one and their dependencies.

|

_utils.py

ADDED

|

@@ -0,0 +1,306 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import json

|

| 2 |

+

|

| 3 |

+

|

| 4 |

+

def add_lang_by_task(target_str, task, sub_task):

|

| 5 |

+

if task == 'summarize':

|

| 6 |

+

target_str = '<en> ' + target_str

|

| 7 |

+

elif task == 'refine':

|

| 8 |

+

target_str = '<java> ' + target_str

|

| 9 |

+

elif task == 'translate':

|

| 10 |

+

if sub_task == 'java-cs':

|

| 11 |

+

target_str = '<c_sharp> ' + target_str

|

| 12 |

+

else:

|

| 13 |

+

target_str = '<java> ' + target_str

|

| 14 |

+

elif task == 'concode':

|

| 15 |

+

target_str = '<java> ' + target_str

|

| 16 |

+

elif task == 'defect':

|

| 17 |

+

target_str = target_str

|

| 18 |

+

return target_str

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

def convert_examples_to_features(item):

|

| 22 |

+

example, example_index, tokenizer, args, stage = item

|

| 23 |

+

|

| 24 |

+

if args.model_type in ['t5', 'codet5'] and args.add_task_prefix:

|

| 25 |

+

if args.sub_task != 'none':

|

| 26 |

+

source_str = "{} {}: {}".format(args.task, args.sub_task, example.source)

|

| 27 |

+

else:

|

| 28 |

+

source_str = "{}: {}".format(args.task, example.source)

|

| 29 |

+

else:

|

| 30 |

+

source_str = example.source

|

| 31 |

+

|

| 32 |

+

source_str = source_str.replace('</s>', '<unk>')

|

| 33 |

+

source_ids = tokenizer.encode(source_str, max_length=args.max_source_length, padding='max_length', truncation=True)

|

| 34 |

+

assert source_ids.count(tokenizer.eos_token_id) == 1

|

| 35 |

+

if stage == 'test':

|

| 36 |

+

target_ids = []

|

| 37 |

+

else:

|

| 38 |

+

target_str = example.target

|

| 39 |

+

if args.add_lang_ids:

|

| 40 |

+

target_str = add_lang_by_task(example.target, args.task, args.sub_task)

|

| 41 |

+

if args.task in ['defect', 'clone']:

|

| 42 |

+

if target_str == 0:

|

| 43 |

+

target_str = 'false'

|

| 44 |

+

elif target_str == 1:

|

| 45 |

+

target_str = 'true'

|

| 46 |

+

else:

|

| 47 |

+

raise NameError

|

| 48 |

+

target_str = target_str.replace('</s>', '<unk>')

|

| 49 |

+

target_ids = tokenizer.encode(target_str, max_length=args.max_target_length, padding='max_length',

|

| 50 |

+

truncation=True)

|

| 51 |

+

assert target_ids.count(tokenizer.eos_token_id) == 1

|

| 52 |

+

|

| 53 |

+

return InputFeatures(

|

| 54 |

+

example_index,

|

| 55 |

+

source_ids,

|

| 56 |

+

target_ids,

|

| 57 |

+

url=example.url

|

| 58 |

+

)

|

| 59 |

+

|

| 60 |

+

|

| 61 |

+

def convert_clone_examples_to_features(item):

|

| 62 |

+

example, example_index, tokenizer, args = item

|

| 63 |

+

if args.model_type in ['t5', 'codet5'] and args.add_task_prefix:

|

| 64 |

+

source_str = "{}: {}".format(args.task, example.source)

|

| 65 |

+

target_str = "{}: {}".format(args.task, example.target)

|

| 66 |

+

else:

|

| 67 |

+

source_str = example.source

|

| 68 |

+

target_str = example.target

|

| 69 |

+

code1 = tokenizer.encode(source_str, max_length=args.max_source_length, padding='max_length', truncation=True)

|

| 70 |

+

code2 = tokenizer.encode(target_str, max_length=args.max_source_length, padding='max_length', truncation=True)

|

| 71 |

+

source_ids = code1 + code2

|

| 72 |

+

return CloneInputFeatures(example_index, source_ids, example.label, example.url1, example.url2)

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

def convert_defect_examples_to_features(item):

|

| 76 |

+

example, example_index, tokenizer, args = item

|

| 77 |

+

if args.model_type in ['t5', 'codet5'] and args.add_task_prefix:

|

| 78 |

+

source_str = "{}: {}".format(args.task, example.source)

|

| 79 |

+

else:

|

| 80 |

+

source_str = example.source

|

| 81 |

+

code = tokenizer.encode(source_str, max_length=args.max_source_length, padding='max_length', truncation=True)

|

| 82 |

+

return DefectInputFeatures(example_index, code, example.target)

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

class CloneInputFeatures(object):

|

| 86 |

+

"""A single training/test features for a example."""

|

| 87 |

+

|

| 88 |

+

def __init__(self,

|

| 89 |

+

example_id,

|

| 90 |

+

source_ids,

|

| 91 |

+

label,

|

| 92 |

+

url1,

|

| 93 |

+

url2

|

| 94 |

+

):

|

| 95 |

+

self.example_id = example_id

|

| 96 |

+

self.source_ids = source_ids

|

| 97 |

+

self.label = label

|

| 98 |

+

self.url1 = url1

|

| 99 |

+

self.url2 = url2

|

| 100 |

+

|

| 101 |

+

|

| 102 |

+

class DefectInputFeatures(object):

|

| 103 |

+

"""A single training/test features for a example."""

|

| 104 |

+

|

| 105 |

+

def __init__(self,

|

| 106 |

+

example_id,

|

| 107 |

+

source_ids,

|

| 108 |

+

label

|

| 109 |

+

):

|

| 110 |

+

self.example_id = example_id

|

| 111 |

+

self.source_ids = source_ids

|

| 112 |

+

self.label = label

|

| 113 |

+

|

| 114 |

+

|

| 115 |

+

class InputFeatures(object):

|

| 116 |

+

"""A single training/test features for a example."""

|

| 117 |

+

|

| 118 |

+

def __init__(self,

|

| 119 |

+

example_id,

|

| 120 |

+

source_ids,

|

| 121 |

+

target_ids,

|

| 122 |

+

url=None

|

| 123 |

+

):

|

| 124 |

+

self.example_id = example_id

|

| 125 |

+

self.source_ids = source_ids

|

| 126 |

+

self.target_ids = target_ids

|

| 127 |

+

self.url = url

|

| 128 |

+

|

| 129 |

+

|

| 130 |

+

class Example(object):

|

| 131 |

+

"""A single training/test example."""

|

| 132 |

+

|

| 133 |

+

def __init__(self,

|

| 134 |

+

idx,

|

| 135 |

+

source,

|

| 136 |

+

target,

|

| 137 |

+

url=None,

|

| 138 |

+

task='',

|

| 139 |

+

sub_task=''

|

| 140 |

+

):

|

| 141 |

+

self.idx = idx

|

| 142 |

+

self.source = source

|

| 143 |

+

self.target = target

|

| 144 |

+

self.url = url

|

| 145 |

+

self.task = task

|

| 146 |

+

self.sub_task = sub_task

|

| 147 |

+

|

| 148 |

+

|

| 149 |

+

class CloneExample(object):

|

| 150 |

+

"""A single training/test example."""

|

| 151 |

+

|

| 152 |

+

def __init__(self,

|

| 153 |

+

code1,

|

| 154 |

+

code2,

|

| 155 |

+

label,

|

| 156 |

+

url1,

|

| 157 |

+

url2

|

| 158 |

+

):

|

| 159 |

+

self.source = code1

|

| 160 |

+

self.target = code2

|

| 161 |

+

self.label = label

|

| 162 |

+

self.url1 = url1

|

| 163 |

+

self.url2 = url2

|

| 164 |

+

|

| 165 |

+

|

| 166 |

+

def read_translate_examples(filename, data_num):

|

| 167 |

+

"""Read examples from filename."""

|

| 168 |

+

examples = []

|

| 169 |

+

assert len(filename.split(',')) == 2

|

| 170 |

+

src_filename = filename.split(',')[0]

|

| 171 |

+

trg_filename = filename.split(',')[1]

|

| 172 |

+

idx = 0

|

| 173 |

+

with open(src_filename) as f1, open(trg_filename) as f2:

|

| 174 |

+

for line1, line2 in zip(f1, f2):

|

| 175 |

+

src = line1.strip()

|

| 176 |

+

trg = line2.strip()

|

| 177 |

+

examples.append(

|

| 178 |

+

Example(

|

| 179 |

+

idx=idx,

|

| 180 |

+

source=src,

|

| 181 |

+

target=trg,

|

| 182 |

+

)

|

| 183 |

+

)

|

| 184 |

+

idx += 1

|

| 185 |

+

if idx == data_num:

|

| 186 |

+

break

|

| 187 |

+

return examples

|

| 188 |

+

|

| 189 |

+

|

| 190 |

+

def read_refine_examples(filename, data_num):

|

| 191 |

+

"""Read examples from filename."""

|

| 192 |

+

examples = []

|

| 193 |

+

assert len(filename.split(',')) == 2

|

| 194 |

+

src_filename = filename.split(',')[0]

|

| 195 |

+

trg_filename = filename.split(',')[1]

|

| 196 |

+

idx = 0

|

| 197 |

+

|

| 198 |

+

with open(src_filename) as f1, open(trg_filename) as f2:

|

| 199 |

+

for line1, line2 in zip(f1, f2):

|

| 200 |

+

examples.append(

|

| 201 |

+

Example(

|

| 202 |

+

idx=idx,

|

| 203 |

+

source=line1.strip(),

|

| 204 |

+

target=line2.strip(),

|

| 205 |

+

)

|

| 206 |

+

)

|

| 207 |

+

idx += 1

|

| 208 |

+

if idx == data_num:

|

| 209 |

+

break

|

| 210 |

+

return examples

|

| 211 |

+

|

| 212 |

+

|

| 213 |

+

def read_concode_examples(filename, data_num):

|

| 214 |

+

"""Read examples from filename."""

|

| 215 |

+

examples = []

|

| 216 |

+

|

| 217 |

+

with open(filename) as f:

|

| 218 |

+

for idx, line in enumerate(f):

|

| 219 |

+

x = json.loads(line)

|

| 220 |

+

examples.append(

|

| 221 |

+

Example(

|

| 222 |

+

idx=idx,

|

| 223 |

+

source=x["nl"].strip(),

|

| 224 |

+

target=x["code"].strip()

|

| 225 |

+

)

|

| 226 |

+

)

|

| 227 |

+

idx += 1

|

| 228 |

+

if idx == data_num:

|

| 229 |

+

break

|

| 230 |

+

return examples

|

| 231 |

+

|

| 232 |

+

|

| 233 |

+

def read_summarize_examples(filename, data_num):

|

| 234 |

+

"""Read examples from filename."""

|

| 235 |

+

examples = []

|

| 236 |

+

with open(filename, encoding="utf-8") as f:

|

| 237 |

+

for idx, line in enumerate(f):

|

| 238 |

+

line = line.strip()

|

| 239 |

+

js = json.loads(line)

|

| 240 |

+

if 'idx' not in js:

|

| 241 |

+

js['idx'] = idx

|

| 242 |

+

code = ' '.join(js['code_tokens']).replace('\n', ' ')

|

| 243 |

+

code = ' '.join(code.strip().split())

|

| 244 |

+

nl = ' '.join(js['docstring_tokens']).replace('\n', '')

|

| 245 |

+

nl = ' '.join(nl.strip().split())

|

| 246 |

+

examples.append(

|

| 247 |

+

Example(

|

| 248 |

+

idx=idx,

|

| 249 |

+

source=code,

|

| 250 |

+

target=nl,

|

| 251 |

+

)

|

| 252 |

+

)

|

| 253 |

+

if idx + 1 == data_num:

|

| 254 |

+

break

|

| 255 |

+

return examples

|

| 256 |

+

|

| 257 |

+

|

| 258 |

+

def read_defect_examples(filename, data_num):

|

| 259 |

+

"""Read examples from filename."""

|

| 260 |

+

examples = []

|

| 261 |

+

with open(filename, encoding="utf-8") as f:

|

| 262 |

+

for idx, line in enumerate(f):

|

| 263 |

+

line = line.strip()

|

| 264 |

+

js = json.loads(line)

|

| 265 |

+

|

| 266 |

+

code = ' '.join(js['func'].split())

|

| 267 |

+

examples.append(

|

| 268 |

+

Example(

|

| 269 |

+

idx=js['idx'],

|

| 270 |

+

source=code,

|

| 271 |

+

target=js['target']

|

| 272 |

+

)

|

| 273 |

+

)

|

| 274 |

+

if idx + 1 == data_num:

|

| 275 |

+

break

|

| 276 |

+

return examples

|

| 277 |

+

|

| 278 |

+

|

| 279 |

+

def read_clone_examples(filename, data_num):

|

| 280 |

+

"""Read examples from filename."""

|

| 281 |

+

index_filename = filename

|

| 282 |

+

url_to_code = {}

|

| 283 |

+

with open('/'.join(index_filename.split('/')[:-1]) + '/data.jsonl') as f:

|

| 284 |

+

for line in f:

|

| 285 |

+

line = line.strip()

|

| 286 |

+

js = json.loads(line)

|

| 287 |

+

code = ' '.join(js['func'].split())

|

| 288 |

+

url_to_code[js['idx']] = code

|

| 289 |

+

|

| 290 |

+

data = []

|

| 291 |

+

with open(index_filename) as f:

|

| 292 |

+

idx = 0

|

| 293 |

+

for line in f:

|

| 294 |

+

line = line.strip()

|

| 295 |

+

url1, url2, label = line.split('\t')

|

| 296 |

+

if url1 not in url_to_code or url2 not in url_to_code:

|

| 297 |

+

continue

|

| 298 |

+

if label == '0':

|

| 299 |

+

label = 0

|

| 300 |

+

else:

|

| 301 |

+

label = 1

|

| 302 |

+

data.append(CloneExample(url_to_code[url1], url_to_code[url2], label, url1, url2))

|

| 303 |

+

idx += 1

|

| 304 |

+

if idx == data_num:

|

| 305 |

+

break

|

| 306 |

+

return data

|

codet5.gif

ADDED

|

Git LFS Details

|

configs.py

ADDED

|

@@ -0,0 +1,137 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import random

|

| 2 |

+

import torch

|

| 3 |

+

import logging

|

| 4 |

+

import multiprocessing

|

| 5 |

+

import numpy as np

|

| 6 |

+

|

| 7 |

+

logger = logging.getLogger(__name__)

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

def add_args(parser):

|

| 11 |

+

parser.add_argument("--task", type=str, required=True,

|

| 12 |

+

choices=['summarize', 'concode', 'translate', 'refine', 'defect', 'clone', 'multi_task'])

|

| 13 |

+

parser.add_argument("--sub_task", type=str, default='')

|

| 14 |

+

parser.add_argument("--lang", type=str, default='')

|

| 15 |

+

parser.add_argument("--eval_task", type=str, default='')

|

| 16 |

+

parser.add_argument("--model_type", default="codet5", type=str, choices=['roberta', 'bart', 'codet5'])

|

| 17 |

+

parser.add_argument("--add_lang_ids", action='store_true')

|

| 18 |

+

parser.add_argument("--data_num", default=-1, type=int)

|

| 19 |

+

parser.add_argument("--start_epoch", default=0, type=int)

|

| 20 |

+

parser.add_argument("--num_train_epochs", default=100, type=int)

|

| 21 |

+

parser.add_argument("--patience", default=5, type=int)

|

| 22 |

+

parser.add_argument("--cache_path", type=str, required=True)

|

| 23 |

+

parser.add_argument("--summary_dir", type=str, required=True)

|

| 24 |

+

parser.add_argument("--data_dir", type=str, required=True)

|

| 25 |

+

parser.add_argument("--res_dir", type=str, required=True)

|

| 26 |

+

parser.add_argument("--res_fn", type=str, default='')

|

| 27 |

+

parser.add_argument("--add_task_prefix", action='store_true', help="Whether to add task prefix for t5 and codet5")

|

| 28 |

+

parser.add_argument("--save_last_checkpoints", action='store_true')

|

| 29 |

+

parser.add_argument("--always_save_model", action='store_true')

|

| 30 |

+

parser.add_argument("--do_eval_bleu", action='store_true', help="Whether to evaluate bleu on dev set.")

|

| 31 |

+

|

| 32 |

+

## Required parameters

|

| 33 |

+

parser.add_argument("--model_name_or_path", default="roberta-base", type=str,

|

| 34 |

+

help="Path to pre-trained model: e.g. roberta-base")

|

| 35 |

+

parser.add_argument("--output_dir", default=None, type=str, required=True,

|

| 36 |

+