
GLPN


This is a recently introduced model, so the API hasn’t been tested extensively. There may be some bugs or slight breaking changes to fix in the future. If you see something strange, file a GitHub Issue.

Overview

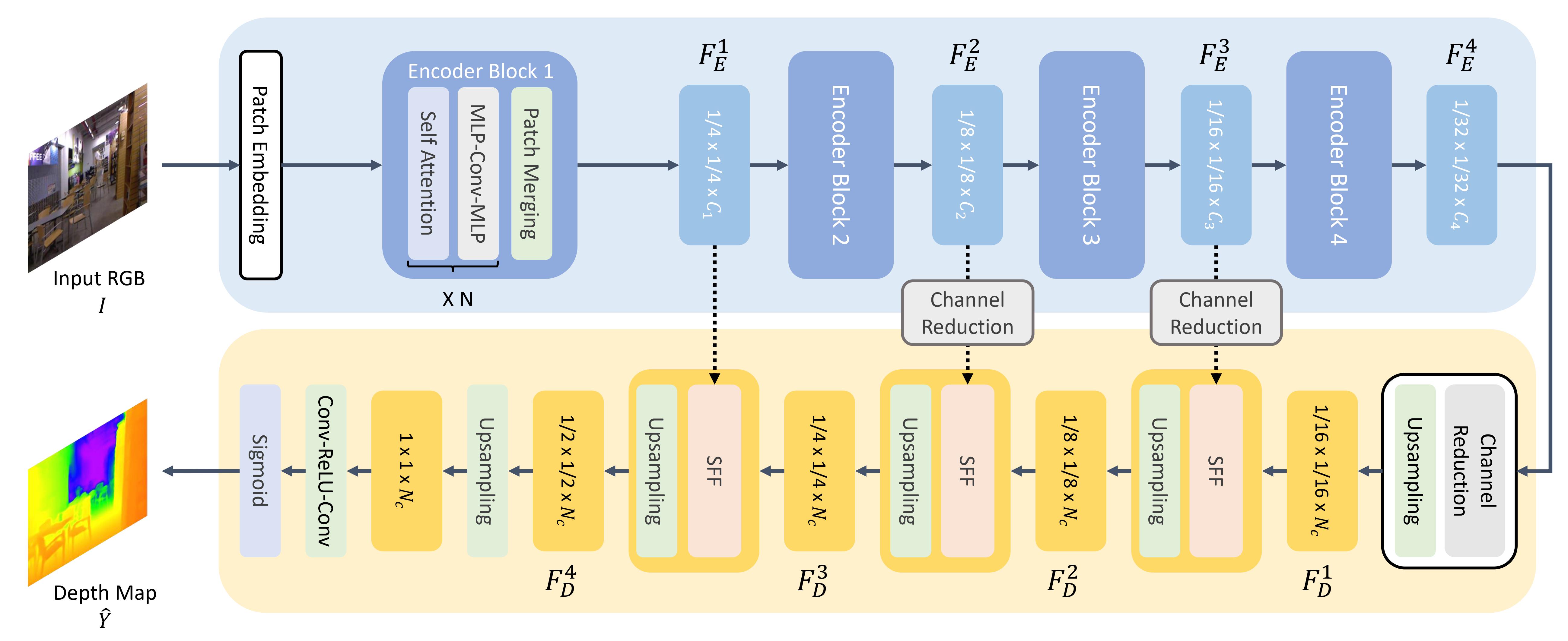

The GLPN model was proposed in Global-Local Path Networks for Monocular Depth Estimation with Vertical CutDepth by Doyeon Kim, Woonghyun Ga, Pyungwhan Ahn, Donggyu Joo, Sehwan Chun and Junmo Kim. GLPN combines SegFormer’s hierarchical Mix Transformer encoder with a lightweight decoder for monocular depth estimation. The proposed decoder outperforms previously proposed decoders while having considerably lower computational complexity.

The abstract from the paper is the following:

Depth estimation from a single image is an important task that can be applied to various fields in computer vision, and has grown rapidly with the development of convolutional neural networks. In this paper, we propose a novel structure and training strategy for monocular depth estimation to further improve the prediction accuracy of the network. We deploy a hierarchical transformer encoder to capture and convey the global context, and design a lightweight yet powerful decoder to generate an estimated depth map while considering local connectivity. By constructing connected paths between multi-scale local features and the global decoding stream with our proposed selective feature fusion module, the network can integrate both representations and recover fine details. In addition, the proposed decoder shows better performance than the previously proposed decoders, with considerably less computational complexity. Furthermore, we improve the depth-specific augmentation method by utilizing an important observation in depth estimation to enhance the model. Our network achieves state-of-the-art performance over the challenging depth dataset NYU Depth V2. Extensive experiments have been conducted to validate and show the effectiveness of the proposed approach. Finally, our model shows better generalisation ability and robustness than other comparative models.

Tips:

- A notebook illustrating inference with GLPNForDepthEstimation can be found here.

- One can use GLPNImageProcessor to prepare images for the model.

Summary of the approach. Taken from the original paper.


This model was contributed by nielsr. The original code can be found here.

GLPNConfig

class transformers.GLPNConfig

(num_channels = 3, num_encoder_blocks = 4, depths = [2, 2, 2, 2], sr_ratios = [8, 4, 2, 1], hidden_sizes = [32, 64, 160, 256], patch_sizes = [7, 3, 3, 3], strides = [4, 2, 2, 2], num_attention_heads = [1, 2, 5, 8], mlp_ratios = [4, 4, 4, 4], hidden_act = 'gelu', hidden_dropout_prob = 0.0, attention_probs_dropout_prob = 0.0, initializer_range = 0.02, drop_path_rate = 0.1, layer_norm_eps = 1e-06, is_encoder_decoder = False, decoder_hidden_size = 64, max_depth = 10, head_in_index = -1, **kwargs)

Parameters

- num_channels (int, optional, defaults to 3): The number of input channels.
- num_encoder_blocks (int, optional, defaults to 4): The number of encoder blocks (i.e. stages in the Mix Transformer encoder).
- depths (List[int], optional, defaults to [2, 2, 2, 2]): The number of layers in each encoder block.
- sr_ratios (List[int], optional, defaults to [8, 4, 2, 1]): Sequence reduction ratios in each encoder block.
- hidden_sizes (List[int], optional, defaults to [32, 64, 160, 256]): Dimension of each of the encoder blocks.
- patch_sizes (List[int], optional, defaults to [7, 3, 3, 3]): Patch size before each encoder block.
- strides (List[int], optional, defaults to [4, 2, 2, 2]): Stride before each encoder block.

- num_attention_heads (List[int], optional, defaults to [1, 2, 5, 8]): Number of attention heads for each attention layer in each block of the Transformer encoder.

- mlp_ratios (List[int], optional, defaults to [4, 4, 4, 4]): Ratio of the size of the hidden layer compared to the size of the input layer of the Mix FFNs in the encoder blocks.
- hidden_act (str or function, optional, defaults to "gelu"): The non-linear activation function (function or string) in the encoder and pooler. If string, "gelu", "relu", "selu" and "gelu_new" are supported.
- hidden_dropout_prob (float, optional, defaults to 0.0): The dropout probability for all fully connected layers in the embeddings, encoder, and pooler.
- attention_probs_dropout_prob (float, optional, defaults to 0.0): The dropout ratio for the attention probabilities.
- initializer_range (float, optional, defaults to 0.02): The standard deviation of the truncated_normal_initializer for initializing all weight matrices.
- drop_path_rate (float, optional, defaults to 0.1): The dropout probability for stochastic depth, used in the blocks of the Transformer encoder.
- layer_norm_eps (float, optional, defaults to 1e-6): The epsilon used by the layer normalization layers.

- decoder_hidden_size (int, optional, defaults to 64): The dimension of the decoder.

- max_depth (int, optional, defaults to 10): The maximum depth value the decoder can predict; predicted depths are bounded by this value.
- head_in_index (int, optional, defaults to -1): The index of the features to use in the head.

This is the configuration class to store the configuration of a GLPNModel. It is used to instantiate a GLPN model according to the specified arguments, defining the model architecture. Instantiating a configuration with the defaults will yield a similar configuration to that of the GLPN vinvino02/glpn-kitti architecture.

Configuration objects inherit from PretrainedConfig and can be used to control the model outputs. Read the documentation from PretrainedConfig for more information.
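To see how the per-stage parameters interact, the following pure-Python sketch derives each encoder stage's output resolution, token count and per-head dimension from the default values in the GLPNConfig signature. It assumes, following SegFormer's Mix Transformer design, that each overlapping patch embedding is a convolution with kernel size patch_sizes[i], stride strides[i] and padding patch_sizes[i] // 2, and that sequence reduction shrinks the key/value grid by sr_ratios[i] along each spatial axis. The helpers conv_out and stage_shapes are hypothetical and not part of transformers.

```python
# Hypothetical helpers for illustration only; not part of transformers.
def conv_out(size, kernel, stride):
    """Output length of a convolution, assuming padding = kernel // 2."""
    padding = kernel // 2
    return (size + 2 * padding - kernel) // stride + 1


def stage_shapes(height, width, patch_sizes, strides, hidden_sizes,
                 num_attention_heads, sr_ratios):
    """Return one dict per encoder stage describing its output feature map."""
    stages = []
    for k, s, c, heads, sr in zip(patch_sizes, strides, hidden_sizes,
                                  num_attention_heads, sr_ratios):
        # Each stage's patch embedding downsamples the previous feature map.
        height, width = conv_out(height, k, s), conv_out(width, k, s)
        # The hidden size must split evenly across the attention heads.
        assert c % heads == 0, "hidden size not divisible by number of heads"
        stages.append({
            "channels": c,
            "height": height,
            "width": width,
            "tokens": height * width,
            # Assumed: keys/values come from a grid shrunk by sr on each axis.
            "reduced_tokens": (height // sr) * (width // sr),
            "head_dim": c // heads,
        })
    return stages


# Default GLPNConfig values, applied to an assumed 480x640 (height x width) input.
stages = stage_shapes(
    480, 640,
    patch_sizes=[7, 3, 3, 3],
    strides=[4, 2, 2, 2],
    hidden_sizes=[32, 64, 160, 256],
    num_attention_heads=[1, 2, 5, 8],
    sr_ratios=[8, 4, 2, 1],
)
for stage in stages:
    print(stage)
```

Under these assumptions the stages come out at 120x160, 60x80, 30x40 and 15x20, every stage has a head dimension of 32, and every stage attends over 300 key/value tokens. The final 15x20 grid is consistent with the [1, 512, 15, 20] output in the GLPNModel example if that image is 480x640; the 512 channels there reflect that checkpoint's larger hidden sizes rather than the defaults.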

Example:

>>> from transformers import GLPNModel, GLPNConfig
>>> # Initializing a GLPN vinvino02/glpn-kitti style configuration
>>> configuration = GLPNConfig()
>>> # Initializing a model from the vinvino02/glpn-kitti style configuration
>>> model = GLPNModel(configuration)
>>> # Accessing the model configuration
>>> configuration = model.config

GLPNFeatureExtractor

class transformers.GLPNFeatureExtractor


Preprocess an image or a batch of images. GLPNFeatureExtractor is deprecated in favor of GLPNImageProcessor and accepts the same arguments.

GLPNImageProcessor

class transformers.GLPNImageProcessor

(do_resize: bool = True, size_divisor: int = 32, resample = PILImageResampling.BILINEAR, do_rescale: bool = True, **kwargs)

Parameters

- do_resize (bool, optional, defaults to True): Whether to resize the image’s (height, width) dimensions, rounding them down to the closest multiple of size_divisor. Can be overridden by do_resize in preprocess.
- size_divisor (int, optional, defaults to 32): When do_resize is True, images are resized so their height and width are rounded down to the closest multiple of size_divisor. Can be overridden by size_divisor in preprocess.
- resample (PIL.Image resampling filter, optional, defaults to PILImageResampling.BILINEAR): Resampling filter to use if resizing the image. Can be overridden by resample in preprocess.
- do_rescale (bool, optional, defaults to True): Whether or not to apply the scaling factor (to make pixel values floats between 0. and 1.). Can be overridden by do_rescale in preprocess.

Constructs a GLPN image processor.
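The size_divisor rule amounts to flooring each spatial dimension to a multiple of size_divisor. Rounding to 32 is consistent with the encoder's overall downsampling factor of 4 x 2 x 2 x 2 = 32 under the default strides. The minimal pure-Python sketch below shows only that arithmetic; rounded_size is a hypothetical helper, and the actual resampling with the resample filter is not reproduced.

```python
def rounded_size(height, width, size_divisor=32):
    """Floor (height, width) to the closest lower multiple of size_divisor.

    Hypothetical helper illustrating the documented rounding rule; it does not
    call transformers and performs no resampling.
    """
    new_height = (height // size_divisor) * size_divisor
    new_width = (width // size_divisor) * size_divisor
    return new_height, new_width


print(rounded_size(480, 640))   # already multiples of 32, left unchanged
print(rounded_size(375, 1242))  # both dimensions rounded down
```

Because the rule always rounds down, an image dimension smaller than size_divisor would collapse to 0, so very small inputs need upscaling beforehand.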

preprocess

(images: Union[PIL.Image.Image, TensorType, List[PIL.Image.Image], List[TensorType]], do_resize: Optional[bool] = None, size_divisor: Optional[int] = None, resample = None, do_rescale: Optional[bool] = None, return_tensors: Union[str, TensorType, None] = None, data_format: ChannelDimension = ChannelDimension.FIRST, **kwargs)

Parameters

- images (PIL.Image.Image or TensorType or List[np.ndarray] or List[TensorType]): The image or images to preprocess.
- do_resize (bool, optional, defaults to self.do_resize): Whether to resize the input such that the (height, width) dimensions are a multiple of size_divisor.
- size_divisor (int, optional, defaults to self.size_divisor): When do_resize is True, images are resized so their height and width are rounded down to the closest multiple of size_divisor.
- resample (PIL.Image resampling filter, optional, defaults to self.resample): PIL.Image resampling filter to use if resizing the image, e.g. PILImageResampling.BILINEAR. Only has an effect if do_resize is set to True.
- do_rescale (bool, optional, defaults to self.do_rescale): Whether or not to apply the scaling factor (to make pixel values floats between 0. and 1.).
- return_tensors (str or TensorType, optional): The type of tensors to return. Can be one of:
  - None: Return a list of np.ndarray.
  - TensorType.TENSORFLOW or 'tf': Return a batch of type tf.Tensor.
  - TensorType.PYTORCH or 'pt': Return a batch of type torch.Tensor.
  - TensorType.NUMPY or 'np': Return a batch of type np.ndarray.
  - TensorType.JAX or 'jax': Return a batch of type jax.numpy.ndarray.
- data_format (ChannelDimension or str, optional, defaults to ChannelDimension.FIRST): The channel dimension format for the output image. Can be one of:
  - ChannelDimension.FIRST: image in (num_channels, height, width) format.
  - ChannelDimension.LAST: image in (height, width, num_channels) format.

Preprocess the given images.
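The rescaling and channel-layout steps of preprocess can be approximated in NumPy as follows. This is a hedged sketch, not GLPNImageProcessor's implementation: it skips resizing entirely, assumes each input is already a uint8 array in (height, width, num_channels) layout, and assumes a rescale factor of 1/255 to map pixel values into [0, 1]. The function name rescale_and_stack is hypothetical.

```python
import numpy as np


def rescale_and_stack(images, do_rescale=True, data_format="channels_first"):
    """Hypothetical helper: rescale uint8 HWC images and stack them into a batch."""
    batch = []
    for image in images:
        array = np.asarray(image)
        if do_rescale:
            # Map integer pixels in 0..255 to float32 values in [0, 1].
            array = array.astype(np.float32) / 255.0
        if data_format == "channels_first":
            # (height, width, num_channels) -> (num_channels, height, width)
            array = np.transpose(array, (2, 0, 1))
        elif data_format != "channels_last":
            raise ValueError(f"unsupported data_format: {data_format!r}")
        batch.append(array)
    # Stacking requires every image to share one shape (e.g. after resizing).
    return np.stack(batch)


images = [np.full((480, 640, 3), 255, dtype=np.uint8) for _ in range(2)]
pixel_values = rescale_and_stack(images)
print(pixel_values.shape, pixel_values.dtype, pixel_values.max())
```

The default channels_first layout yields a (batch_size, num_channels, height, width) array, the shape the models below expect for pixel_values.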

GLPNModel

class transformers.GLPNModel

(config)

Parameters

- config (GLPNConfig) — Model configuration class with all the parameters of the model. Initializing with a config file does not load the weights associated with the model, only the configuration. Check out the from_pretrained() method to load the model weights.

The bare GLPN encoder (Mix-Transformer) outputting raw hidden-states without any specific head on top. This model is a PyTorch torch.nn.Module sub-class. Use it as a regular PyTorch Module and refer to the PyTorch documentation for all matter related to general usage and behavior.

forward

(pixel_values: FloatTensor, output_attentions: Optional[bool] = None, output_hidden_states: Optional[bool] = None, return_dict: Optional[bool] = None) → transformers.modeling_outputs.BaseModelOutput or tuple(torch.FloatTensor)

Parameters

- pixel_values (torch.FloatTensor of shape (batch_size, num_channels, height, width)): Pixel values. Padding will be ignored by default should you provide it. Pixel values can be obtained using GLPNImageProcessor. See GLPNImageProcessor.__call__() for details.
- output_attentions (bool, optional): Whether or not to return the attentions tensors of all attention layers. See attentions under returned tensors for more detail.
- output_hidden_states (bool, optional): Whether or not to return the hidden states of all layers. See hidden_states under returned tensors for more detail.
- return_dict (bool, optional): Whether or not to return a ModelOutput instead of a plain tuple.

Returns

transformers.modeling_outputs.BaseModelOutput or tuple(torch.FloatTensor)

A transformers.modeling_outputs.BaseModelOutput or a tuple of torch.FloatTensor (if return_dict=False is passed or when config.return_dict=False) comprising various elements depending on the configuration (GLPNConfig) and inputs.

- last_hidden_state (torch.FloatTensor of shape (batch_size, hidden_size, height, width)): Hidden-states at the output of the last layer of the model, reshaped by GLPN into a spatial feature map.

- hidden_states (tuple(torch.FloatTensor), optional, returned when output_hidden_states=True is passed or when config.output_hidden_states=True): Tuple of torch.FloatTensor (one for the output of the embeddings, if the model has an embedding layer, + one for the output of each layer) of shape (batch_size, num_channels, height, width). Hidden-states of the model at the output of each layer plus the optional initial embedding outputs.
- attentions (tuple(torch.FloatTensor), optional, returned when output_attentions=True is passed or when config.output_attentions=True): Tuple of torch.FloatTensor (one for each layer) of shape (batch_size, num_heads, sequence_length, sequence_length). Attention weights after the attention softmax, used to compute the weighted average in the self-attention heads.

The GLPNModel forward method overrides the __call__ special method.

Although the recipe for the forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this, since the former takes care of running the pre- and post-processing steps while the latter silently ignores them.

Example:

>>> from transformers import GLPNImageProcessor, GLPNModel
>>> import torch
>>> from datasets import load_dataset

>>> dataset = load_dataset("huggingface/cats-image")
>>> image = dataset["test"]["image"][0]

>>> image_processor = GLPNImageProcessor.from_pretrained("vinvino02/glpn-kitti")
>>> model = GLPNModel.from_pretrained("vinvino02/glpn-kitti")

>>> inputs = image_processor(image, return_tensors="pt")

>>> with torch.no_grad():
...     outputs = model(**inputs)

>>> last_hidden_states = outputs.last_hidden_state
>>> list(last_hidden_states.shape)
[1, 512, 15, 20]

GLPNForDepthEstimation

class transformers.GLPNForDepthEstimation

(config)

Parameters

- config (GLPNConfig) — Model configuration class with all the parameters of the model. Initializing with a config file does not load the weights associated with the model, only the configuration. Check out the from_pretrained() method to load the model weights.

GLPN Model transformer with a lightweight depth estimation head on top, e.g. for KITTI and NYUv2. This model is a PyTorch torch.nn.Module sub-class. Use it as a regular PyTorch Module and refer to the PyTorch documentation for all matter related to general usage and behavior.
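When interpreting predicted_depth, it helps to know how its range is set. The lightweight head is assumed here to finish by passing its raw output through a sigmoid and multiplying by config.max_depth, which would bound every predicted value to the open interval (0, max_depth). The NumPy sketch below reproduces only that final scaling step on made-up logits; scale_to_depth is a hypothetical name, and the convolutional layers that precede it are omitted.

```python
import numpy as np


def scale_to_depth(logits, max_depth=10.0):
    """Hypothetical helper: map unbounded head outputs to depths in (0, max_depth)."""
    # A sigmoid squashes each value into (0, 1); multiplying by max_depth
    # stretches it to the depth range set in the configuration.
    return max_depth / (1.0 + np.exp(-logits))


logits = np.array([[-4.0, 0.0], [2.0, 4.0]])
depth = scale_to_depth(logits, max_depth=10.0)
print(depth.round(3))
```

Under this assumption a model configured with max_depth = 10 cannot output depths above 10, so max_depth should be chosen to cover the depth range of the training data.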

forward

(pixel_values: FloatTensor, labels: Optional[torch.FloatTensor] = None, output_attentions: Optional[bool] = None, output_hidden_states: Optional[bool] = None, return_dict: Optional[bool] = None) → transformers.modeling_outputs.DepthEstimatorOutput or tuple(torch.FloatTensor)

Parameters

- pixel_values (torch.FloatTensor of shape (batch_size, num_channels, height, width)): Pixel values. Padding will be ignored by default should you provide it. Pixel values can be obtained using GLPNImageProcessor. See GLPNImageProcessor.__call__() for details.
- output_attentions (bool, optional): Whether or not to return the attentions tensors of all attention layers. See attentions under returned tensors for more detail.
- output_hidden_states (bool, optional): Whether or not to return the hidden states of all layers. See hidden_states under returned tensors for more detail.
- return_dict (bool, optional): Whether or not to return a ModelOutput instead of a plain tuple.
- labels (torch.FloatTensor of shape (batch_size, height, width), optional): Ground truth depth estimation maps for computing the loss.

Returns

transformers.modeling_outputs.DepthEstimatorOutput or tuple(torch.FloatTensor)

A transformers.modeling_outputs.DepthEstimatorOutput or a tuple of torch.FloatTensor (if return_dict=False is passed or when config.return_dict=False) comprising various elements depending on the configuration (GLPNConfig) and inputs.

- loss (torch.FloatTensor of shape (1,), optional, returned when labels is provided): Depth estimation loss between predicted_depth and labels (a scale-invariant logarithmic loss for GLPN).

- predicted_depth (torch.FloatTensor of shape (batch_size, height, width)): Predicted depth for each pixel.
- hidden_states (tuple(torch.FloatTensor), optional, returned when output_hidden_states=True is passed or when config.output_hidden_states=True): Tuple of torch.FloatTensor (one for the output of the embeddings, if the model has an embedding layer, + one for the output of each layer) of shape (batch_size, num_channels, height, width). Hidden-states of the model at the output of each layer plus the optional initial embedding outputs.
- attentions (tuple(torch.FloatTensor), optional, returned when output_attentions=True is passed or when config.output_attentions=True): Tuple of torch.FloatTensor (one for each layer) of shape (batch_size, num_heads, patch_size, sequence_length). Attention weights after the attention softmax, used to compute the weighted average in the self-attention heads.
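When labels are passed, a depth regression loss is computed. The NumPy sketch below implements a scale-invariant logarithmic (SiLog) loss in the style of Eigen et al. (2014), ignoring pixels whose ground truth is not positive. Treating it as the exact loss GLPNForDepthEstimation uses, including the weight lambd = 0.5 and the final square root, is an assumption; silog_loss is a hypothetical name.

```python
import numpy as np


def silog_loss(pred, target, lambd=0.5):
    """Scale-invariant log loss (sketch); pixels with target <= 0 are ignored."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    valid = target > 0  # treat non-positive ground truth as missing labels
    diff_log = np.log(target[valid]) - np.log(pred[valid])
    # Mean squared log error minus lambd times the squared mean log error.
    # lambd = 1 would make the loss fully invariant to a global scale factor;
    # lambd = 0.5 only partially discounts a global scale error.
    return np.sqrt(np.mean(diff_log ** 2) - lambd * np.mean(diff_log) ** 2)


target = np.array([[1.0, 2.0], [4.0, 0.0]])  # 0.0 marks a missing label
print(silog_loss(target, target))        # perfect prediction
print(silog_loss(2.0 * target, target))  # uniformly doubled depths
```

A prediction that is off by a constant factor of 2 everywhere is still penalized, but only by sqrt(0.5) * log(2), roughly 0.49, rather than the full log(2) a plain log-RMSE would give.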

The GLPNForDepthEstimation forward method overrides the __call__ special method.

Although the recipe for the forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this, since the former takes care of running the pre- and post-processing steps while the latter silently ignores them.

Examples:

>>> from transformers import GLPNImageProcessor, GLPNForDepthEstimation
>>> import torch
>>> import numpy as np
>>> from PIL import Image
>>> import requests

>>> url = "http://images.cocodataset.org/val2017/000000039769.jpg"
>>> image = Image.open(requests.get(url, stream=True).raw)

>>> image_processor = GLPNImageProcessor.from_pretrained("vinvino02/glpn-kitti")
>>> model = GLPNForDepthEstimation.from_pretrained("vinvino02/glpn-kitti")

>>> # prepare image for the model
>>> inputs = image_processor(images=image, return_tensors="pt")

>>> with torch.no_grad():
...     outputs = model(**inputs)
...     predicted_depth = outputs.predicted_depth

>>> # interpolate to original size (PIL's size is (width, height), so reverse it)
>>> prediction = torch.nn.functional.interpolate(
...     predicted_depth.unsqueeze(1),
...     size=image.size[::-1],
...     mode="bicubic",
...     align_corners=False,
... )

>>> # visualize the prediction
>>> output = prediction.squeeze().cpu().numpy()
>>> formatted = (output * 255 / np.max(output)).astype("uint8")
>>> depth = Image.fromarray(formatted)
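Dividing by np.max(output) maps the largest depth to 255 but does not stretch the smallest depth down to 0, and it would divide by zero on an all-zero map. A variant that swaps in min-max normalization and guards against a constant map is sketched below; depth_to_uint8 is a hypothetical NumPy helper, not part of transformers, and it assumes the depth values are finite.

```python
import numpy as np


def depth_to_uint8(depth):
    """Hypothetical helper: min-max normalize a depth map to a 0..255 uint8 image."""
    depth = np.asarray(depth, dtype=np.float64)
    lo, hi = depth.min(), depth.max()
    if hi == lo:
        # A constant map has no contrast; return a black image instead of dividing by zero.
        return np.zeros(depth.shape, dtype=np.uint8)
    scaled = (depth - lo) / (hi - lo) * 255.0
    return np.round(scaled).astype(np.uint8)


output = np.array([[2.0, 4.0], [6.0, 10.0]])
print(depth_to_uint8(output))
```

The returned array can be passed to Image.fromarray in place of formatted in the example above.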