Create a competition

Creating a competition is straightforward, and you have full control over the data, the evaluation metric, and the hardware used.

To create a competition, you need a Hugging Face account. You also need a write token, which is used throughout the competition to upload data and submissions. The write token is private: do not share it with anyone, and do not refresh it while the competition is running. If you do refresh the token, you must update it in the competition space's settings; otherwise competitors will not be able to upload submissions and the competition will stop working. You can find or generate a write token here.

To create a competition, you also need an organization. You can either create a new organization or use an existing one that you are already a member of. You can create a new organization here.

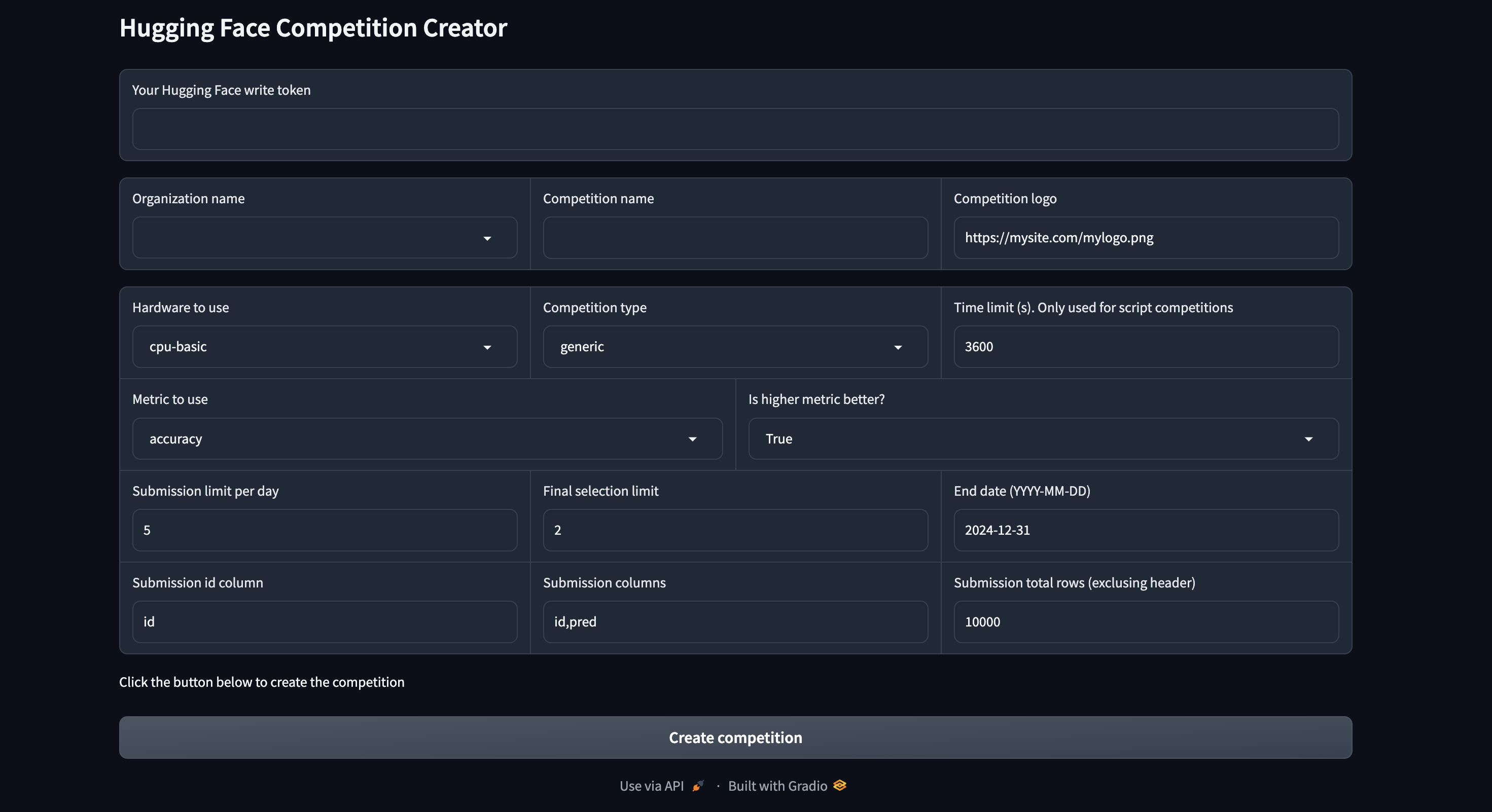

To create a competition, click here.

Competition creator

Please note: you will be able to change almost every setting later on. However, we don't recommend changing the evaluation metric once the competition has started, as doing so requires re-evaluating all the submissions.

Types of competitions

generic: generic competitions are competitions where the participants submit a CSV file (or any other file) containing the predictions for the whole test set. The predictions are then evaluated against solution.csv (or another solution file) using the evaluation metric provided by the competition creator. These competitions are easy to set up and free to host if you use cpu-basic. You can improve the evaluation runtime by upgrading a generic competition to cpu-upgrade. In generic competitions, all the test data (without labels) is available to the participants at all times.
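To make the evaluation step for a generic competition concrete, here is a minimal sketch of scoring a submission against a solution file. It is only an illustration, not the platform's actual evaluator: the column names `id` and `label`, the inline CSV contents, and the choice of accuracy as the metric are all assumptions made for this example.

```python
import csv
import io


def read_labels(csv_text, id_col, value_col):
    """Parse CSV text into a {row id: value} mapping."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row[id_col]: row[value_col] for row in reader}


def accuracy(solution, submission):
    """Fraction of solution rows whose prediction matches the label.

    Rows missing from the submission count as wrong.
    """
    if not solution:
        raise ValueError("solution is empty")
    correct = sum(
        1 for key, label in solution.items() if submission.get(key) == label
    )
    return correct / len(solution)


# Hypothetical contents of solution.csv (kept private by the organizer).
solution_csv = "id,label\n1,cat\n2,dog\n3,cat\n4,bird\n"
# Hypothetical contents of a participant's submission file.
submission_csv = "id,label\n1,cat\n2,dog\n3,dog\n4,bird\n"

solution = read_labels(solution_csv, "id", "label")
submission = read_labels(submission_csv, "id", "label")
score = accuracy(solution, submission)
print(f"accuracy = {score:.2f}")
```

Keying both files by a shared id column means the score does not depend on row order, and a missing row is simply scored as incorrect.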

script: script competitions are competitions where the participants submit a Python script that takes in the test set and outputs the predictions. The predictions are then evaluated against solution.csv (or another solution file) using the evaluation metric provided by the competition creator. These competitions are only free to host if you use cpu-basic as the backend for evaluation, which is not recommended! In script competitions, the test data can be kept private, so the participants won't be able to see it at all. The participants submit a Hugging Face model repo containing script.py, which is run to generate predictions on the hidden test data.
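As a rough illustration of what such a script might look like, the sketch below reads a test file and writes one prediction per row. Everything specific here is an assumption made for the example: the file names `test.csv` and `submission.csv`, the `id` and `label` columns, and the placeholder `predict` function. The real input and output locations are defined by the competition, not by this sketch.

```python
import csv
import os
import tempfile


def predict(row):
    """Placeholder model (hypothetical): always predicts the same label."""
    return "cat"


def run(test_path, output_path):
    """Read the test set and write one prediction per row.

    Returns the number of predictions written.
    """
    count = 0
    with open(test_path, newline="") as src, open(output_path, "w", newline="") as dst:
        reader = csv.DictReader(src)
        writer = csv.writer(dst)
        writer.writerow(["id", "label"])  # assumed submission columns
        for row in reader:
            writer.writerow([row["id"], predict(row)])
            count += 1
    return count


# Demo on a throwaway test file; in a real competition the hidden
# test data would be supplied by the evaluation backend instead.
workdir = tempfile.mkdtemp()
test_path = os.path.join(workdir, "test.csv")
output_path = os.path.join(workdir, "submission.csv")
with open(test_path, "w", newline="") as f:
    f.write("id,feature\n1,0.3\n2,0.9\n")

n_rows = run(test_path, output_path)
with open(output_path, newline="") as f:
    written = f.read()
print(f"wrote {n_rows} predictions")
```

A real submission would replace `predict` with code that loads a trained model from the same repo and applies it to each row.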