license: openrail++

base_model: stabilityai/stable-diffusion-xl-base-1.0

tags:

- stable-diffusion-xl

- stable-diffusion-xl-diffusers

- text-to-image

- diffusers

- controlnet

inference: false

SDXL-controlnet: Canny

These are ControlNet weights trained on stabilityai/stable-diffusion-xl-base-1.0 with Canny edge conditioning. Some example images are shown below.

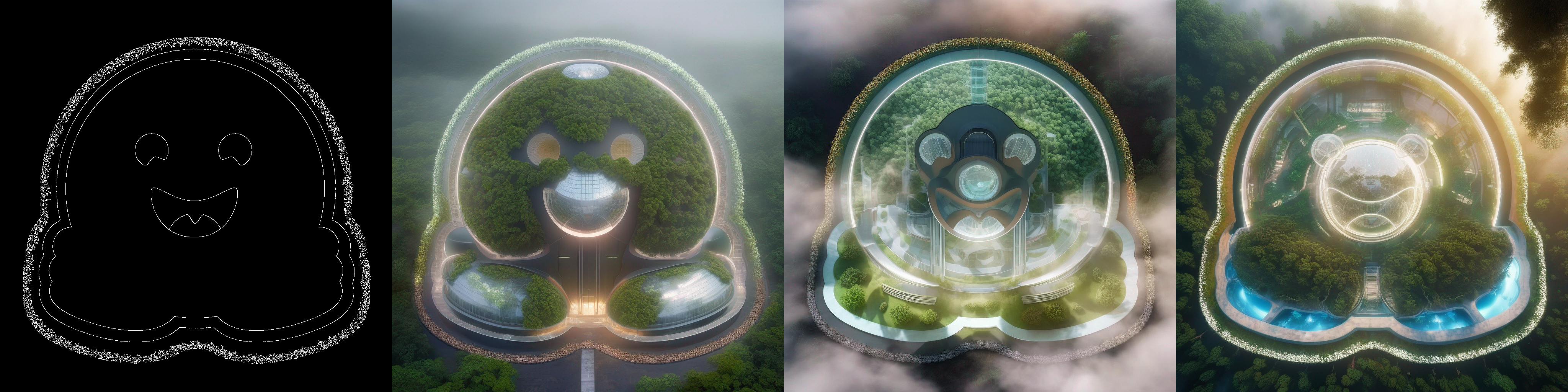

prompt: aerial view, a futuristic research complex in a bright foggy jungle, hard lighting

prompt: a woman, close up, detailed, beautiful, street photography, photorealistic, detailed, Kodak ektar 100, natural, candid shot

prompt: megatron in an apocalyptic world ground, ruined city in the background, photorealistic

prompt: a couple watching sunset, 4k photo

Usage

Make sure to first install the libraries:

pip install accelerate transformers safetensors opencv-python diffusers

And then we're ready to go:

from diffusers import ControlNetModel, StableDiffusionXLControlNetPipeline, AutoencoderKL

from diffusers.utils import load_image

from PIL import Image

import torch

import numpy as np

import cv2

prompt = "aerial view, a futuristic research complex in a bright foggy jungle, hard lighting"

negative_prompt = "low quality, bad quality, sketches"

image = load_image("https://huggingface.co/datasets/hf-internal-testing/diffusers-images/resolve/main/sd_controlnet/hf-logo.png")

controlnet_conditioning_scale = 0.5 # recommended for good generalization

controlnet = ControlNetModel.from_pretrained(

"diffusers/controlnet-canny-sdxl-1.0-small",

torch_dtype=torch.float16

)

vae = AutoencoderKL.from_pretrained("madebyollin/sdxl-vae-fp16-fix", torch_dtype=torch.float16)

pipe = StableDiffusionXLControlNetPipeline.from_pretrained(

"stabilityai/stable-diffusion-xl-base-1.0",

controlnet=controlnet,

vae=vae,

torch_dtype=torch.float16,

)

pipe.enable_model_cpu_offload()

image = np.array(image)

image = cv2.Canny(image, 100, 200)

image = image[:, :, None]

image = np.concatenate([image, image, image], axis=2)

image = Image.fromarray(image)

images = pipe(

prompt, negative_prompt=negative_prompt, image=image, controlnet_conditioning_scale=controlnet_conditioning_scale,

).images

images[0].save("hug_lab.png")

For more details, check out the official documentation of StableDiffusionXLControlNetPipeline.
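The example above fixes `controlnet_conditioning_scale` at 0.5. As a minimal sketch of how one might compare a few values side by side, the hypothetical helper below calls the pipeline once per scale with the same arguments used above. The function name `sweep_conditioning_scale` and the default scales 0.3 and 0.7 are illustrative assumptions; only 0.5 comes from this card.

```python
def sweep_conditioning_scale(pipe, prompt, control_image,
                             scales=(0.3, 0.5, 0.7), negative_prompt=None):
    """Hypothetical helper: generate one image per conditioning scale.

    `pipe` is expected to behave like the StableDiffusionXLControlNetPipeline
    built in the usage example; `control_image` is the Canny edge image.
    The default scales are illustrative, not recommendations from this card.
    """
    results = {}
    for scale in scales:
        output = pipe(
            prompt,
            negative_prompt=negative_prompt,
            image=control_image,
            controlnet_conditioning_scale=scale,
        )
        # Keep the first generated image for this scale.
        results[scale] = output.images[0]
    return results
```

Called with the `pipe`, `prompt`, `negative_prompt`, and Canny `image` from the example above, this returns a dict keyed by scale whose values can be saved individually for comparison.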

🚨 Please note that this checkpoint is experimental and should be thoroughly evaluated before being deployed. We encourage the community to build on top of it and improve it. 🚨

Training

Our training script was built on top of the official training script that we provide here. You can refer to this script for full disclosure.

Training data

This checkpoint was first trained for 20,000 steps on LAION 6a resized to a max minimum dimension of 384. It was then trained for a further 20,000 steps on LAION 6a resized to a max minimum dimension of 1024 and then filtered to keep only images with a minimum dimension of 1024. We found that this additional high-resolution fine-tuning was necessary for image quality.
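One way to read "max minimum dimension" is that images are downscaled until their shorter side is at most the target, and that the second stage then keeps only images whose shorter side reaches 1024. The sketch below illustrates that reading only. The function names are hypothetical, and the actual training script may resize or filter differently.

```python
def resize_to_max_min_dimension(width, height, target):
    """Assumed rule: shrink so the shorter side is at most `target`; never upscale."""
    shorter = min(width, height)
    if shorter <= target:
        return width, height
    scale = target / shorter
    return round(width * scale), round(height * scale)


def keep_for_high_res_stage(width, height, minimum=1024):
    """Assumed filter: keep images whose shorter side is at least `minimum`."""
    return min(width, height) >= minimum


# Stage 1 (assumed): a 768x1152 image is halved so its shorter side becomes 384.
print(resize_to_max_min_dimension(768, 1152, 384))

# Stage 2 (assumed): resize to 1024 first, then filter on the resized size.
for size in [(2048, 4096), (1500, 800)]:
    resized = resize_to_max_min_dimension(*size, 1024)
    print(size, resized, keep_for_high_res_stage(*resized))
```

Under this reading, an image that starts smaller than 1024 on its shorter side is never upscaled, so the filter drops it from the high-resolution stage.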

Compute

One 8xA100 machine

Batch size

Data parallel with a per-GPU batch size of 8, for a total batch size of 64.

Hyperparameters

Constant learning rate of 1e-4, scaled by the batch size for a total learning rate of 64e-4.
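The arithmetic behind these numbers can be checked with a few lines. This is only a sketch of the calculation stated above, using illustrative variable names; it is not taken from the training script.

```python
num_gpus = 8               # one 8xA100 machine
per_gpu_batch_size = 8     # batch size on each GPU
base_learning_rate = 1e-4  # constant learning rate before scaling

# Data parallelism multiplies the per-GPU batch size by the number of GPUs.
total_batch_size = num_gpus * per_gpu_batch_size

# Linear scaling: the base rate is multiplied by the total batch size.
scaled_learning_rate = base_learning_rate * total_batch_size

print(total_batch_size)               # 64
print(f"{scaled_learning_rate:.1e}")  # 6.4e-03, i.e. 64e-4
```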

Mixed precision

fp16

Additional notes

- This checkpoint does not perform distillation. We just use a smaller ControlNet initialized from the SDXL UNet. We encourage the community to try and conduct distillation too, where the smaller ControlNet model would be initialized from a bigger ControlNet model. This resource might be of help in this regard.

- The smaller ControlNet does not have any attention blocks.

- It is better suited for simple conditioning images. For conditionings involving more complex structures, you should use the bigger checkpoints.