421f803355974a702f7603a70074546b87af62b9a352b2c06bd834024cd5af7b

Browse files- langchain_md_files/integrations/providers/dingo.mdx +31 -0

- langchain_md_files/integrations/providers/discord.mdx +38 -0

- langchain_md_files/integrations/providers/docarray.mdx +37 -0

- langchain_md_files/integrations/providers/doctran.mdx +37 -0

- langchain_md_files/integrations/providers/docugami.mdx +21 -0

- langchain_md_files/integrations/providers/docusaurus.mdx +20 -0

- langchain_md_files/integrations/providers/dria.mdx +25 -0

- langchain_md_files/integrations/providers/dropbox.mdx +21 -0

- langchain_md_files/integrations/providers/duckdb.mdx +19 -0

- langchain_md_files/integrations/providers/duckduckgo_search.mdx +25 -0

- langchain_md_files/integrations/providers/e2b.mdx +20 -0

- langchain_md_files/integrations/providers/edenai.mdx +62 -0

- langchain_md_files/integrations/providers/elasticsearch.mdx +108 -0

- langchain_md_files/integrations/providers/elevenlabs.mdx +27 -0

- langchain_md_files/integrations/providers/epsilla.mdx +23 -0

- langchain_md_files/integrations/providers/etherscan.mdx +18 -0

- langchain_md_files/integrations/providers/evernote.mdx +20 -0

- langchain_md_files/integrations/providers/facebook.mdx +93 -0

- langchain_md_files/integrations/providers/fauna.mdx +25 -0

- langchain_md_files/integrations/providers/figma.mdx +21 -0

- langchain_md_files/integrations/providers/flyte.mdx +153 -0

- langchain_md_files/integrations/providers/forefrontai.mdx +16 -0

- langchain_md_files/integrations/providers/geopandas.mdx +23 -0

- langchain_md_files/integrations/providers/git.mdx +19 -0

- langchain_md_files/integrations/providers/gitbook.mdx +15 -0

- langchain_md_files/integrations/providers/github.mdx +22 -0

- langchain_md_files/integrations/providers/golden.mdx +34 -0

- langchain_md_files/integrations/providers/google_serper.mdx +74 -0

- langchain_md_files/integrations/providers/gooseai.mdx +23 -0

- langchain_md_files/integrations/providers/gpt4all.mdx +55 -0

- langchain_md_files/integrations/providers/gradient.mdx +27 -0

- langchain_md_files/integrations/providers/graphsignal.mdx +44 -0

- langchain_md_files/integrations/providers/grobid.mdx +46 -0

- langchain_md_files/integrations/providers/groq.mdx +28 -0

- langchain_md_files/integrations/providers/gutenberg.mdx +15 -0

- langchain_md_files/integrations/providers/hacker_news.mdx +18 -0

- langchain_md_files/integrations/providers/hazy_research.mdx +19 -0

- langchain_md_files/integrations/providers/helicone.mdx +53 -0

- langchain_md_files/integrations/providers/hologres.mdx +23 -0

- langchain_md_files/integrations/providers/html2text.mdx +19 -0

- langchain_md_files/integrations/providers/huawei.mdx +37 -0

- langchain_md_files/integrations/providers/ibm.mdx +59 -0

- langchain_md_files/integrations/providers/ieit_systems.mdx +31 -0

- langchain_md_files/integrations/providers/ifixit.mdx +16 -0

- langchain_md_files/integrations/providers/iflytek.mdx +38 -0

- langchain_md_files/integrations/providers/imsdb.mdx +16 -0

- langchain_md_files/integrations/providers/infinispanvs.mdx +17 -0

- langchain_md_files/integrations/providers/infinity.mdx +11 -0

- langchain_md_files/integrations/providers/infino.mdx +35 -0

- langchain_md_files/integrations/providers/intel.mdx +108 -0

langchain_md_files/integrations/providers/dingo.mdx

ADDED

|

@@ -0,0 +1,31 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# DingoDB

|

| 2 |

+

|

| 3 |

+

>[DingoDB](https://github.com/dingodb) is a distributed multi-modal vector

|

| 4 |

+

> database. It combines the features of a data lake and a vector database,

|

| 5 |

+

> allowing for the storage of any type of data (key-value, PDF, audio,

|

| 6 |

+

> video, etc.) regardless of its size. Utilizing DingoDB, you can construct

|

| 7 |

+

> your own Vector Ocean (the next-generation data architecture following data

|

| 8 |

+

> warehouse and data lake). This enables

|

| 9 |

+

> the analysis of both structured and unstructured data through

|

| 10 |

+

> a singular SQL with exceptionally low latency in real time.

|

| 11 |

+

|

| 12 |

+

## Installation and Setup

|

| 13 |

+

|

| 14 |

+

Install the Python SDK

|

| 15 |

+

|

| 16 |

+

```bash

|

| 17 |

+

pip install dingodb

|

| 18 |

+

```

|

| 19 |

+

|

| 20 |

+

## VectorStore

|

| 21 |

+

|

| 22 |

+

There exists a wrapper around DingoDB indexes, allowing you to use it as a vectorstore,

|

| 23 |

+

whether for semantic search or example selection.

|

| 24 |

+

|

| 25 |

+

To import this vectorstore:

|

| 26 |

+

|

| 27 |

+

```python

|

| 28 |

+

from langchain_community.vectorstores import Dingo

|

| 29 |

+

```

|

| 30 |

+

|

| 31 |

+

For a more detailed walkthrough of the DingoDB wrapper, see [this notebook](/docs/integrations/vectorstores/dingo)

|

langchain_md_files/integrations/providers/discord.mdx

ADDED

|

@@ -0,0 +1,38 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Discord

|

| 2 |

+

|

| 3 |

+

>[Discord](https://discord.com/) is a VoIP and instant messaging social platform. Users have the ability to communicate

|

| 4 |

+

> with voice calls, video calls, text messaging, media and files in private chats or as part of communities called

|

| 5 |

+

> "servers". A server is a collection of persistent chat rooms and voice channels which can be accessed via invite links.

|

| 6 |

+

|

| 7 |

+

## Installation and Setup

|

| 8 |

+

|

| 9 |

+

```bash

|

| 10 |

+

pip install pandas

|

| 11 |

+

```

|

| 12 |

+

|

| 13 |

+

Follow these steps to download your `Discord` data:

|

| 14 |

+

|

| 15 |

+

1. Go to your **User Settings**

|

| 16 |

+

2. Then go to **Privacy and Safety**

|

| 17 |

+

3. Head over to the **Request all of my Data** and click on **Request Data** button

|

| 18 |

+

|

| 19 |

+

It might take 30 days for you to receive your data. You'll receive an email at the address which is registered

|

| 20 |

+

with Discord. That email will have a download button using which you would be able to download your personal Discord data.

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

## Document Loader

|

| 24 |

+

|

| 25 |

+

See a [usage example](/docs/integrations/document_loaders/discord).

|

| 26 |

+

|

| 27 |

+

**NOTE:** The `DiscordChatLoader` is not the `ChatLoader` but a `DocumentLoader`.

|

| 28 |

+

It is used to load the data from the `Discord` data dump.

|

| 29 |

+

For the `ChatLoader` see Chat Loader section below.

|

| 30 |

+

|

| 31 |

+

```python

|

| 32 |

+

from langchain_community.document_loaders import DiscordChatLoader

|

| 33 |

+

```

|

| 34 |

+

|

| 35 |

+

## Chat Loader

|

| 36 |

+

|

| 37 |

+

See a [usage example](/docs/integrations/chat_loaders/discord).

|

| 38 |

+

|

langchain_md_files/integrations/providers/docarray.mdx

ADDED

|

@@ -0,0 +1,37 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# DocArray

|

| 2 |

+

|

| 3 |

+

> [DocArray](https://docarray.jina.ai/) is a library for nested, unstructured, multimodal data in transit,

|

| 4 |

+

> including text, image, audio, video, 3D mesh, etc. It allows deep-learning engineers to efficiently process,

|

| 5 |

+

> embed, search, recommend, store, and transfer multimodal data with a Pythonic API.

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

## Installation and Setup

|

| 9 |

+

|

| 10 |

+

We need to install `docarray` python package.

|

| 11 |

+

|

| 12 |

+

```bash

|

| 13 |

+

pip install docarray

|

| 14 |

+

```

|

| 15 |

+

|

| 16 |

+

## Vector Store

|

| 17 |

+

|

| 18 |

+

LangChain provides an access to the `In-memory` and `HNSW` vector stores from the `DocArray` library.

|

| 19 |

+

|

| 20 |

+

See a [usage example](/docs/integrations/vectorstores/docarray_hnsw).

|

| 21 |

+

|

| 22 |

+

```python

|

| 23 |

+

from langchain_community.vectorstores import DocArrayHnswSearch

|

| 24 |

+

```

|

| 25 |

+

See a [usage example](/docs/integrations/vectorstores/docarray_in_memory).

|

| 26 |

+

|

| 27 |

+

```python

|

| 28 |

+

from langchain_community.vectorstores DocArrayInMemorySearch

|

| 29 |

+

```

|

| 30 |

+

|

| 31 |

+

## Retriever

|

| 32 |

+

|

| 33 |

+

See a [usage example](/docs/integrations/retrievers/docarray_retriever).

|

| 34 |

+

|

| 35 |

+

```python

|

| 36 |

+

from langchain_community.retrievers import DocArrayRetriever

|

| 37 |

+

```

|

langchain_md_files/integrations/providers/doctran.mdx

ADDED

|

@@ -0,0 +1,37 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Doctran

|

| 2 |

+

|

| 3 |

+

>[Doctran](https://github.com/psychic-api/doctran) is a python package. It uses LLMs and open-source

|

| 4 |

+

> NLP libraries to transform raw text into clean, structured, information-dense documents

|

| 5 |

+

> that are optimized for vector space retrieval. You can think of `Doctran` as a black box where

|

| 6 |

+

> messy strings go in and nice, clean, labelled strings come out.

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

## Installation and Setup

|

| 10 |

+

|

| 11 |

+

```bash

|

| 12 |

+

pip install doctran

|

| 13 |

+

```

|

| 14 |

+

|

| 15 |

+

## Document Transformers

|

| 16 |

+

|

| 17 |

+

### Document Interrogator

|

| 18 |

+

|

| 19 |

+

See a [usage example for DoctranQATransformer](/docs/integrations/document_transformers/doctran_interrogate_document).

|

| 20 |

+

|

| 21 |

+

```python

|

| 22 |

+

from langchain_community.document_loaders import DoctranQATransformer

|

| 23 |

+

```

|

| 24 |

+

### Property Extractor

|

| 25 |

+

|

| 26 |

+

See a [usage example for DoctranPropertyExtractor](/docs/integrations/document_transformers/doctran_extract_properties).

|

| 27 |

+

|

| 28 |

+

```python

|

| 29 |

+

from langchain_community.document_loaders import DoctranPropertyExtractor

|

| 30 |

+

```

|

| 31 |

+

### Document Translator

|

| 32 |

+

|

| 33 |

+

See a [usage example for DoctranTextTranslator](/docs/integrations/document_transformers/doctran_translate_document).

|

| 34 |

+

|

| 35 |

+

```python

|

| 36 |

+

from langchain_community.document_loaders import DoctranTextTranslator

|

| 37 |

+

```

|

langchain_md_files/integrations/providers/docugami.mdx

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Docugami

|

| 2 |

+

|

| 3 |

+

>[Docugami](https://docugami.com) converts business documents into a Document XML Knowledge Graph, generating forests

|

| 4 |

+

> of XML semantic trees representing entire documents. This is a rich representation that includes the semantic and

|

| 5 |

+

> structural characteristics of various chunks in the document as an XML tree.

|

| 6 |

+

|

| 7 |

+

## Installation and Setup

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

```bash

|

| 11 |

+

pip install dgml-utils

|

| 12 |

+

pip install docugami-langchain

|

| 13 |

+

```

|

| 14 |

+

|

| 15 |

+

## Document Loader

|

| 16 |

+

|

| 17 |

+

See a [usage example](/docs/integrations/document_loaders/docugami).

|

| 18 |

+

|

| 19 |

+

```python

|

| 20 |

+

from docugami_langchain.document_loaders import DocugamiLoader

|

| 21 |

+

```

|

langchain_md_files/integrations/providers/docusaurus.mdx

ADDED

|

@@ -0,0 +1,20 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Docusaurus

|

| 2 |

+

|

| 3 |

+

>[Docusaurus](https://docusaurus.io/) is a static-site generator which provides

|

| 4 |

+

> out-of-the-box documentation features.

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

## Installation and Setup

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

```bash

|

| 11 |

+

pip install -U beautifulsoup4 lxml

|

| 12 |

+

```

|

| 13 |

+

|

| 14 |

+

## Document Loader

|

| 15 |

+

|

| 16 |

+

See a [usage example](/docs/integrations/document_loaders/docusaurus).

|

| 17 |

+

|

| 18 |

+

```python

|

| 19 |

+

from langchain_community.document_loaders import DocusaurusLoader

|

| 20 |

+

```

|

langchain_md_files/integrations/providers/dria.mdx

ADDED

|

@@ -0,0 +1,25 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Dria

|

| 2 |

+

|

| 3 |

+

>[Dria](https://dria.co/) is a hub of public RAG models for developers to

|

| 4 |

+

> both contribute and utilize a shared embedding lake.

|

| 5 |

+

|

| 6 |

+

See more details about the LangChain integration with Dria

|

| 7 |

+

at [this page](https://dria.co/docs/integrations/langchain).

|

| 8 |

+

|

| 9 |

+

## Installation and Setup

|

| 10 |

+

|

| 11 |

+

You have to install a python package:

|

| 12 |

+

|

| 13 |

+

```bash

|

| 14 |

+

pip install dria

|

| 15 |

+

```

|

| 16 |

+

|

| 17 |

+

You have to get an API key from Dria. You can get it by signing up at [Dria](https://dria.co/).

|

| 18 |

+

|

| 19 |

+

## Retrievers

|

| 20 |

+

|

| 21 |

+

See a [usage example](/docs/integrations/retrievers/dria_index).

|

| 22 |

+

|

| 23 |

+

```python

|

| 24 |

+

from langchain_community.retrievers import DriaRetriever

|

| 25 |

+

```

|

langchain_md_files/integrations/providers/dropbox.mdx

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Dropbox

|

| 2 |

+

|

| 3 |

+

>[Dropbox](https://en.wikipedia.org/wiki/Dropbox) is a file hosting service that brings everything-traditional

|

| 4 |

+

> files, cloud content, and web shortcuts together in one place.

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

## Installation and Setup

|

| 8 |

+

|

| 9 |

+

See the detailed [installation guide](/docs/integrations/document_loaders/dropbox#prerequisites).

|

| 10 |

+

|

| 11 |

+

```bash

|

| 12 |

+

pip install -U dropbox

|

| 13 |

+

```

|

| 14 |

+

|

| 15 |

+

## Document Loader

|

| 16 |

+

|

| 17 |

+

See a [usage example](/docs/integrations/document_loaders/dropbox).

|

| 18 |

+

|

| 19 |

+

```python

|

| 20 |

+

from langchain_community.document_loaders import DropboxLoader

|

| 21 |

+

```

|

langchain_md_files/integrations/providers/duckdb.mdx

ADDED

|

@@ -0,0 +1,19 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# DuckDB

|

| 2 |

+

|

| 3 |

+

>[DuckDB](https://duckdb.org/) is an in-process SQL OLAP database management system.

|

| 4 |

+

|

| 5 |

+

## Installation and Setup

|

| 6 |

+

|

| 7 |

+

First, you need to install `duckdb` python package.

|

| 8 |

+

|

| 9 |

+

```bash

|

| 10 |

+

pip install duckdb

|

| 11 |

+

```

|

| 12 |

+

|

| 13 |

+

## Document Loader

|

| 14 |

+

|

| 15 |

+

See a [usage example](/docs/integrations/document_loaders/duckdb).

|

| 16 |

+

|

| 17 |

+

```python

|

| 18 |

+

from langchain_community.document_loaders import DuckDBLoader

|

| 19 |

+

```

|

langchain_md_files/integrations/providers/duckduckgo_search.mdx

ADDED

|

@@ -0,0 +1,25 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# DuckDuckGo Search

|

| 2 |

+

|

| 3 |

+

>[DuckDuckGo Search](https://github.com/deedy5/duckduckgo_search) is a package that

|

| 4 |

+

> searches for words, documents, images, videos, news, maps and text

|

| 5 |

+

> translation using the `DuckDuckGo.com` search engine. It is downloading files

|

| 6 |

+

> and images to a local hard drive.

|

| 7 |

+

|

| 8 |

+

## Installation and Setup

|

| 9 |

+

|

| 10 |

+

You have to install a python package:

|

| 11 |

+

|

| 12 |

+

```bash

|

| 13 |

+

pip install duckduckgo-search

|

| 14 |

+

```

|

| 15 |

+

|

| 16 |

+

## Tools

|

| 17 |

+

|

| 18 |

+

See a [usage example](/docs/integrations/tools/ddg).

|

| 19 |

+

|

| 20 |

+

There are two tools available:

|

| 21 |

+

|

| 22 |

+

```python

|

| 23 |

+

from langchain_community.tools import DuckDuckGoSearchRun

|

| 24 |

+

from langchain_community.tools import DuckDuckGoSearchResults

|

| 25 |

+

```

|

langchain_md_files/integrations/providers/e2b.mdx

ADDED

|

@@ -0,0 +1,20 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# E2B

|

| 2 |

+

|

| 3 |

+

>[E2B](https://e2b.dev/) provides open-source secure sandboxes

|

| 4 |

+

> for AI-generated code execution. See more [here](https://github.com/e2b-dev).

|

| 5 |

+

|

| 6 |

+

## Installation and Setup

|

| 7 |

+

|

| 8 |

+

You have to install a python package:

|

| 9 |

+

|

| 10 |

+

```bash

|

| 11 |

+

pip install e2b_code_interpreter

|

| 12 |

+

```

|

| 13 |

+

|

| 14 |

+

## Tool

|

| 15 |

+

|

| 16 |

+

See a [usage example](/docs/integrations/tools/e2b_data_analysis).

|

| 17 |

+

|

| 18 |

+

```python

|

| 19 |

+

from langchain_community.tools import E2BDataAnalysisTool

|

| 20 |

+

```

|

langchain_md_files/integrations/providers/edenai.mdx

ADDED

|

@@ -0,0 +1,62 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Eden AI

|

| 2 |

+

|

| 3 |

+

>[Eden AI](https://docs.edenai.co/docs/getting-started-with-eden-ai) user interface (UI)

|

| 4 |

+

> is designed for handling the AI projects. With `Eden AI Portal`,

|

| 5 |

+

> you can perform no-code AI using the best engines for the market.

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

## Installation and Setup

|

| 9 |

+

|

| 10 |

+

Accessing the Eden AI API requires an API key, which you can get by

|

| 11 |

+

[creating an account](https://app.edenai.run/user/register) and

|

| 12 |

+

heading [here](https://app.edenai.run/admin/account/settings).

|

| 13 |

+

|

| 14 |

+

## LLMs

|

| 15 |

+

|

| 16 |

+

See a [usage example](/docs/integrations/llms/edenai).

|

| 17 |

+

|

| 18 |

+

```python

|

| 19 |

+

from langchain_community.llms import EdenAI

|

| 20 |

+

|

| 21 |

+

```

|

| 22 |

+

|

| 23 |

+

## Chat models

|

| 24 |

+

|

| 25 |

+

See a [usage example](/docs/integrations/chat/edenai).

|

| 26 |

+

|

| 27 |

+

```python

|

| 28 |

+

from langchain_community.chat_models.edenai import ChatEdenAI

|

| 29 |

+

```

|

| 30 |

+

|

| 31 |

+

## Embedding models

|

| 32 |

+

|

| 33 |

+

See a [usage example](/docs/integrations/text_embedding/edenai).

|

| 34 |

+

|

| 35 |

+

```python

|

| 36 |

+

from langchain_community.embeddings.edenai import EdenAiEmbeddings

|

| 37 |

+

```

|

| 38 |

+

|

| 39 |

+

## Tools

|

| 40 |

+

|

| 41 |

+

Eden AI provides a list of tools that grants your Agent the ability to do multiple tasks, such as:

|

| 42 |

+

* speech to text

|

| 43 |

+

* text to speech

|

| 44 |

+

* text explicit content detection

|

| 45 |

+

* image explicit content detection

|

| 46 |

+

* object detection

|

| 47 |

+

* OCR invoice parsing

|

| 48 |

+

* OCR ID parsing

|

| 49 |

+

|

| 50 |

+

See a [usage example](/docs/integrations/tools/edenai_tools).

|

| 51 |

+

|

| 52 |

+

```python

|

| 53 |

+

from langchain_community.tools.edenai import (

|

| 54 |

+

EdenAiExplicitImageTool,

|

| 55 |

+

EdenAiObjectDetectionTool,

|

| 56 |

+

EdenAiParsingIDTool,

|

| 57 |

+

EdenAiParsingInvoiceTool,

|

| 58 |

+

EdenAiSpeechToTextTool,

|

| 59 |

+

EdenAiTextModerationTool,

|

| 60 |

+

EdenAiTextToSpeechTool,

|

| 61 |

+

)

|

| 62 |

+

```

|

langchain_md_files/integrations/providers/elasticsearch.mdx

ADDED

|

@@ -0,0 +1,108 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Elasticsearch

|

| 2 |

+

|

| 3 |

+

> [Elasticsearch](https://www.elastic.co/elasticsearch/) is a distributed, RESTful search and analytics engine.

|

| 4 |

+

> It provides a distributed, multi-tenant-capable full-text search engine with an HTTP web interface and schema-free

|

| 5 |

+

> JSON documents.

|

| 6 |

+

|

| 7 |

+

## Installation and Setup

|

| 8 |

+

|

| 9 |

+

### Setup Elasticsearch

|

| 10 |

+

|

| 11 |

+

There are two ways to get started with Elasticsearch:

|

| 12 |

+

|

| 13 |

+

#### Install Elasticsearch on your local machine via Docker

|

| 14 |

+

|

| 15 |

+

Example: Run a single-node Elasticsearch instance with security disabled.

|

| 16 |

+

This is not recommended for production use.

|

| 17 |

+

|

| 18 |

+

```bash

|

| 19 |

+

docker run -p 9200:9200 -e "discovery.type=single-node" -e "xpack.security.enabled=false" -e "xpack.security.http.ssl.enabled=false" docker.elastic.co/elasticsearch/elasticsearch:8.9.0

|

| 20 |

+

```

|

| 21 |

+

|

| 22 |

+

#### Deploy Elasticsearch on Elastic Cloud

|

| 23 |

+

|

| 24 |

+

`Elastic Cloud` is a managed Elasticsearch service. Signup for a [free trial](https://cloud.elastic.co/registration?utm_source=langchain&utm_content=documentation).

|

| 25 |

+

|

| 26 |

+

### Install Client

|

| 27 |

+

|

| 28 |

+

```bash

|

| 29 |

+

pip install elasticsearch

|

| 30 |

+

pip install langchain-elasticsearch

|

| 31 |

+

```

|

| 32 |

+

|

| 33 |

+

## Embedding models

|

| 34 |

+

|

| 35 |

+

See a [usage example](/docs/integrations/text_embedding/elasticsearch).

|

| 36 |

+

|

| 37 |

+

```python

|

| 38 |

+

from langchain_elasticsearch import ElasticsearchEmbeddings

|

| 39 |

+

```

|

| 40 |

+

|

| 41 |

+

## Vector store

|

| 42 |

+

|

| 43 |

+

See a [usage example](/docs/integrations/vectorstores/elasticsearch).

|

| 44 |

+

|

| 45 |

+

```python

|

| 46 |

+

from langchain_elasticsearch import ElasticsearchStore

|

| 47 |

+

```

|

| 48 |

+

|

| 49 |

+

### Third-party integrations

|

| 50 |

+

|

| 51 |

+

#### EcloudESVectorStore

|

| 52 |

+

|

| 53 |

+

```python

|

| 54 |

+

from langchain_community.vectorstores.ecloud_vector_search import EcloudESVectorStore

|

| 55 |

+

```

|

| 56 |

+

|

| 57 |

+

## Retrievers

|

| 58 |

+

|

| 59 |

+

### ElasticsearchRetriever

|

| 60 |

+

|

| 61 |

+

The `ElasticsearchRetriever` enables flexible access to all Elasticsearch features

|

| 62 |

+

through the Query DSL.

|

| 63 |

+

|

| 64 |

+

See a [usage example](/docs/integrations/retrievers/elasticsearch_retriever).

|

| 65 |

+

|

| 66 |

+

```python

|

| 67 |

+

from langchain_elasticsearch import ElasticsearchRetriever

|

| 68 |

+

```

|

| 69 |

+

|

| 70 |

+

### BM25

|

| 71 |

+

|

| 72 |

+

See a [usage example](/docs/integrations/retrievers/elastic_search_bm25).

|

| 73 |

+

|

| 74 |

+

```python

|

| 75 |

+

from langchain_community.retrievers import ElasticSearchBM25Retriever

|

| 76 |

+

```

|

| 77 |

+

## Memory

|

| 78 |

+

|

| 79 |

+

See a [usage example](/docs/integrations/memory/elasticsearch_chat_message_history).

|

| 80 |

+

|

| 81 |

+

```python

|

| 82 |

+

from langchain_elasticsearch import ElasticsearchChatMessageHistory

|

| 83 |

+

```

|

| 84 |

+

|

| 85 |

+

## LLM cache

|

| 86 |

+

|

| 87 |

+

See a [usage example](/docs/integrations/llm_caching/#elasticsearch-cache).

|

| 88 |

+

|

| 89 |

+

```python

|

| 90 |

+

from langchain_elasticsearch import ElasticsearchCache

|

| 91 |

+

```

|

| 92 |

+

|

| 93 |

+

## Byte Store

|

| 94 |

+

|

| 95 |

+

See a [usage example](/docs/integrations/stores/elasticsearch).

|

| 96 |

+

|

| 97 |

+

```python

|

| 98 |

+

from langchain_elasticsearch import ElasticsearchEmbeddingsCache

|

| 99 |

+

```

|

| 100 |

+

|

| 101 |

+

## Chain

|

| 102 |

+

|

| 103 |

+

It is a chain for interacting with Elasticsearch Database.

|

| 104 |

+

|

| 105 |

+

```python

|

| 106 |

+

from langchain.chains.elasticsearch_database import ElasticsearchDatabaseChain

|

| 107 |

+

```

|

| 108 |

+

|

langchain_md_files/integrations/providers/elevenlabs.mdx

ADDED

|

@@ -0,0 +1,27 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# ElevenLabs

|

| 2 |

+

|

| 3 |

+

>[ElevenLabs](https://elevenlabs.io/about) is a voice AI research & deployment company

|

| 4 |

+

> with a mission to make content universally accessible in any language & voice.

|

| 5 |

+

>

|

| 6 |

+

>`ElevenLabs` creates the most realistic, versatile and contextually-aware

|

| 7 |

+

> AI audio, providing the ability to generate speech in hundreds of

|

| 8 |

+

> new and existing voices in 29 languages.

|

| 9 |

+

|

| 10 |

+

## Installation and Setup

|

| 11 |

+

|

| 12 |

+

First, you need to set up an ElevenLabs account. You can follow the

|

| 13 |

+

[instructions here](https://docs.elevenlabs.io/welcome/introduction).

|

| 14 |

+

|

| 15 |

+

Install the Python package:

|

| 16 |

+

|

| 17 |

+

```bash

|

| 18 |

+

pip install elevenlabs

|

| 19 |

+

```

|

| 20 |

+

|

| 21 |

+

## Tools

|

| 22 |

+

|

| 23 |

+

See a [usage example](/docs/integrations/tools/eleven_labs_tts).

|

| 24 |

+

|

| 25 |

+

```python

|

| 26 |

+

from langchain_community.tools import ElevenLabsText2SpeechTool

|

| 27 |

+

```

|

langchain_md_files/integrations/providers/epsilla.mdx

ADDED

|

@@ -0,0 +1,23 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Epsilla

|

| 2 |

+

|

| 3 |

+

This page covers how to use [Epsilla](https://github.com/epsilla-cloud/vectordb) within LangChain.

|

| 4 |

+

It is broken into two parts: installation and setup, and then references to specific Epsilla wrappers.

|

| 5 |

+

|

| 6 |

+

## Installation and Setup

|

| 7 |

+

|

| 8 |

+

- Install the Python SDK with `pip/pip3 install pyepsilla`

|

| 9 |

+

|

| 10 |

+

## Wrappers

|

| 11 |

+

|

| 12 |

+

### VectorStore

|

| 13 |

+

|

| 14 |

+

There exists a wrapper around Epsilla vector databases, allowing you to use it as a vectorstore,

|

| 15 |

+

whether for semantic search or example selection.

|

| 16 |

+

|

| 17 |

+

To import this vectorstore:

|

| 18 |

+

|

| 19 |

+

```python

|

| 20 |

+

from langchain_community.vectorstores import Epsilla

|

| 21 |

+

```

|

| 22 |

+

|

| 23 |

+

For a more detailed walkthrough of the Epsilla wrapper, see [this notebook](/docs/integrations/vectorstores/epsilla)

|

langchain_md_files/integrations/providers/etherscan.mdx

ADDED

|

@@ -0,0 +1,18 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Etherscan

|

| 2 |

+

|

| 3 |

+

>[Etherscan](https://docs.etherscan.io/) is the leading blockchain explorer,

|

| 4 |

+

> search, API and analytics platform for `Ethereum`, a decentralized smart contracts platform.

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

## Installation and Setup

|

| 8 |

+

|

| 9 |

+

See the detailed [installation guide](/docs/integrations/document_loaders/etherscan).

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

## Document Loader

|

| 13 |

+

|

| 14 |

+

See a [usage example](/docs/integrations/document_loaders/etherscan).

|

| 15 |

+

|

| 16 |

+

```python

|

| 17 |

+

from langchain_community.document_loaders import EtherscanLoader

|

| 18 |

+

```

|

langchain_md_files/integrations/providers/evernote.mdx

ADDED

|

@@ -0,0 +1,20 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# EverNote

|

| 2 |

+

|

| 3 |

+

>[EverNote](https://evernote.com/) is intended for archiving and creating notes in which photos, audio and saved web content can be embedded. Notes are stored in virtual "notebooks" and can be tagged, annotated, edited, searched, and exported.

|

| 4 |

+

|

| 5 |

+

## Installation and Setup

|

| 6 |

+

|

| 7 |

+

First, you need to install `lxml` and `html2text` python packages.

|

| 8 |

+

|

| 9 |

+

```bash

|

| 10 |

+

pip install lxml

|

| 11 |

+

pip install html2text

|

| 12 |

+

```

|

| 13 |

+

|

| 14 |

+

## Document Loader

|

| 15 |

+

|

| 16 |

+

See a [usage example](/docs/integrations/document_loaders/evernote).

|

| 17 |

+

|

| 18 |

+

```python

|

| 19 |

+

from langchain_community.document_loaders import EverNoteLoader

|

| 20 |

+

```

|

langchain_md_files/integrations/providers/facebook.mdx

ADDED

|

@@ -0,0 +1,93 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Facebook - Meta

|

| 2 |

+

|

| 3 |

+

>[Meta Platforms, Inc.](https://www.facebook.com/), doing business as `Meta`, formerly

|

| 4 |

+

> named `Facebook, Inc.`, and `TheFacebook, Inc.`, is an American multinational technology

|

| 5 |

+

> conglomerate. The company owns and operates `Facebook`, `Instagram`, `Threads`,

|

| 6 |

+

> and `WhatsApp`, among other products and services.

|

| 7 |

+

|

| 8 |

+

## Embedding models

|

| 9 |

+

|

| 10 |

+

### LASER

|

| 11 |

+

|

| 12 |

+

>[LASER](https://github.com/facebookresearch/LASER) is a Python library developed by

|

| 13 |

+

> the `Meta AI Research` team and used for

|

| 14 |

+

> creating multilingual sentence embeddings for

|

| 15 |

+

> [over 147 languages as of 2/25/2024](https://github.com/facebookresearch/flores/blob/main/flores200/README.md#languages-in-flores-200)

|

| 16 |

+

|

| 17 |

+

```bash

|

| 18 |

+

pip install laser_encoders

|

| 19 |

+

```

|

| 20 |

+

|

| 21 |

+

See a [usage example](/docs/integrations/text_embedding/laser).

|

| 22 |

+

|

| 23 |

+

```python

|

| 24 |

+

from langchain_community.embeddings.laser import LaserEmbeddings

|

| 25 |

+

```

|

| 26 |

+

|

| 27 |

+

## Document loaders

|

| 28 |

+

|

| 29 |

+

### Facebook Messenger

|

| 30 |

+

|

| 31 |

+

>[Messenger](https://en.wikipedia.org/wiki/Messenger_(software)) is an instant messaging app and

|

| 32 |

+

> platform developed by `Meta Platforms`. Originally developed as `Facebook Chat` in 2008, the company revamped its

|

| 33 |

+

> messaging service in 2010.

|

| 34 |

+

|

| 35 |

+

See a [usage example](/docs/integrations/document_loaders/facebook_chat).

|

| 36 |

+

|

| 37 |

+

```python

|

| 38 |

+

from langchain_community.document_loaders import FacebookChatLoader

|

| 39 |

+

```

|

| 40 |

+

|

| 41 |

+

## Vector stores

|

| 42 |

+

|

| 43 |

+

### Facebook Faiss

|

| 44 |

+

|

| 45 |

+

>[Facebook AI Similarity Search (Faiss)](https://engineering.fb.com/2017/03/29/data-infrastructure/faiss-a-library-for-efficient-similarity-search/)

|

| 46 |

+

> is a library for efficient similarity search and clustering of dense vectors. It contains algorithms that

|

| 47 |

+

> search in sets of vectors of any size, up to ones that possibly do not fit in RAM. It also contains supporting

|

| 48 |

+

> code for evaluation and parameter tuning.

|

| 49 |

+

|

| 50 |

+

[Faiss documentation](https://faiss.ai/).

|

| 51 |

+

|

| 52 |

+

We need to install `faiss` python package.

|

| 53 |

+

|

| 54 |

+

```bash

|

| 55 |

+

pip install faiss-gpu # For CUDA 7.5+ supported GPU's.

|

| 56 |

+

```

|

| 57 |

+

|

| 58 |

+

OR

|

| 59 |

+

|

| 60 |

+

```bash

|

| 61 |

+

pip install faiss-cpu # For CPU Installation

|

| 62 |

+

```

|

| 63 |

+

|

| 64 |

+

See a [usage example](/docs/integrations/vectorstores/faiss).

|

| 65 |

+

|

| 66 |

+

```python

|

| 67 |

+

from langchain_community.vectorstores import FAISS

|

| 68 |

+

```

|

| 69 |

+

|

| 70 |

+

## Chat loaders

|

| 71 |

+

|

| 72 |

+

### Facebook Messenger

|

| 73 |

+

|

| 74 |

+

>[Messenger](https://en.wikipedia.org/wiki/Messenger_(software)) is an instant messaging app and

|

| 75 |

+

> platform developed by `Meta Platforms`. Originally developed as `Facebook Chat` in 2008, the company revamped its

|

| 76 |

+

> messaging service in 2010.

|

| 77 |

+

|

| 78 |

+

See a [usage example](/docs/integrations/chat_loaders/facebook).

|

| 79 |

+

|

| 80 |

+

```python

|

| 81 |

+

from langchain_community.chat_loaders.facebook_messenger import (

|

| 82 |

+

FolderFacebookMessengerChatLoader,

|

| 83 |

+

SingleFileFacebookMessengerChatLoader,

|

| 84 |

+

)

|

| 85 |

+

```

|

| 86 |

+

|

| 87 |

+

### Facebook WhatsApp

|

| 88 |

+

|

| 89 |

+

See a [usage example](/docs/integrations/chat_loaders/whatsapp).

|

| 90 |

+

|

| 91 |

+

```python

|

| 92 |

+

from langchain_community.chat_loaders.whatsapp import WhatsAppChatLoader

|

| 93 |

+

```

|

langchain_md_files/integrations/providers/fauna.mdx

ADDED

|

@@ -0,0 +1,25 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Fauna

|

| 2 |

+

|

| 3 |

+

>[Fauna](https://fauna.com/) is a distributed document-relational database

|

| 4 |

+

> that combines the flexibility of documents with the power of a relational,

|

| 5 |

+

> ACID compliant database that scales across regions, clouds or the globe.

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

## Installation and Setup

|

| 9 |

+

|

| 10 |

+

We have to get the secret key.

|

| 11 |

+

See the detailed [guide](https://docs.fauna.com/fauna/current/learn/security_model/).

|

| 12 |

+

|

| 13 |

+

We have to install the `fauna` package.

|

| 14 |

+

|

| 15 |

+

```bash

|

| 16 |

+

pip install -U fauna

|

| 17 |

+

```

|

| 18 |

+

|

| 19 |

+

## Document Loader

|

| 20 |

+

|

| 21 |

+

See a [usage example](/docs/integrations/document_loaders/fauna).

|

| 22 |

+

|

| 23 |

+

```python

|

| 24 |

+

from langchain_community.document_loaders.fauna import FaunaLoader

|

| 25 |

+

```

|

langchain_md_files/integrations/providers/figma.mdx

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Figma

|

| 2 |

+

|

| 3 |

+

>[Figma](https://www.figma.com/) is a collaborative web application for interface design.

|

| 4 |

+

|

| 5 |

+

## Installation and Setup

|

| 6 |

+

|

| 7 |

+

The Figma API requires an `access token`, `node_ids`, and a `file key`.

|

| 8 |

+

|

| 9 |

+

The `file key` can be pulled from the URL. https://www.figma.com/file/{filekey}/sampleFilename

|

| 10 |

+

|

| 11 |

+

`Node IDs` are also available in the URL. Click on anything and look for the '?node-id={node_id}' param.

|

| 12 |

+

|

| 13 |

+

`Access token` [instructions](https://help.figma.com/hc/en-us/articles/8085703771159-Manage-personal-access-tokens).

|

| 14 |

+

|

| 15 |

+

## Document Loader

|

| 16 |

+

|

| 17 |

+

See a [usage example](/docs/integrations/document_loaders/figma).

|

| 18 |

+

|

| 19 |

+

```python

|

| 20 |

+

from langchain_community.document_loaders import FigmaFileLoader

|

| 21 |

+

```

|

langchain_md_files/integrations/providers/flyte.mdx

ADDED

|

@@ -0,0 +1,153 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

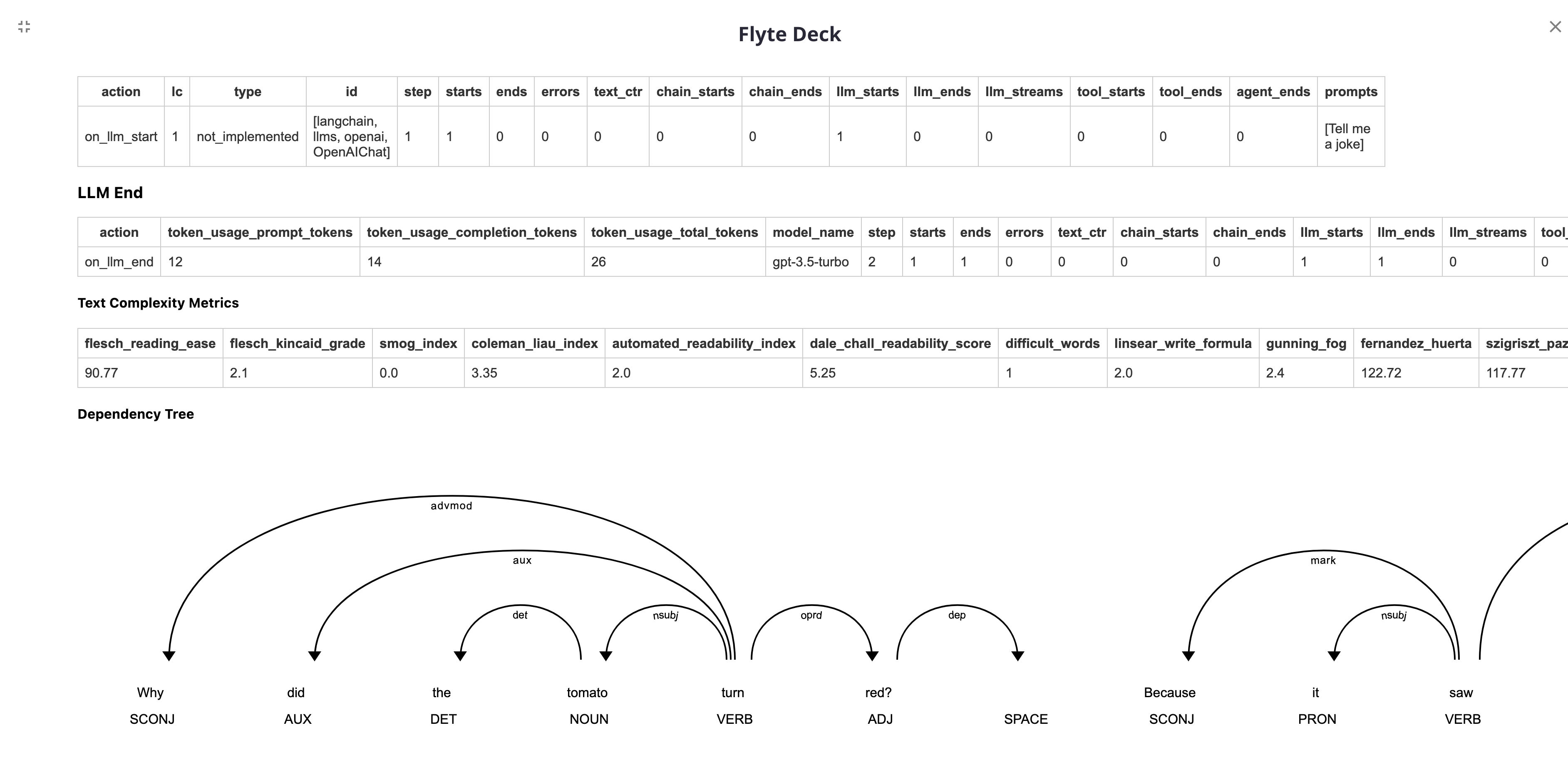

+

# Flyte

|

| 2 |

+

|

| 3 |

+

> [Flyte](https://github.com/flyteorg/flyte) is an open-source orchestrator that facilitates building production-grade data and ML pipelines.

|

| 4 |

+

> It is built for scalability and reproducibility, leveraging Kubernetes as its underlying platform.

|

| 5 |

+

|

| 6 |

+

The purpose of this notebook is to demonstrate the integration of a `FlyteCallback` into your Flyte task, enabling you to effectively monitor and track your LangChain experiments.

|

| 7 |

+

|

| 8 |

+

## Installation & Setup

|

| 9 |

+

|

| 10 |

+

- Install the Flytekit library by running the command `pip install flytekit`.

|

| 11 |

+

- Install the Flytekit-Envd plugin by running the command `pip install flytekitplugins-envd`.

|

| 12 |

+

- Install LangChain by running the command `pip install langchain`.

|

| 13 |

+

- Install [Docker](https://docs.docker.com/engine/install/) on your system.

|

| 14 |

+

|

| 15 |

+

## Flyte Tasks

|

| 16 |

+

|

| 17 |

+

A Flyte [task](https://docs.flyte.org/en/latest/user_guide/basics/tasks.html) serves as the foundational building block of Flyte.

|

| 18 |

+

To execute LangChain experiments, you need to write Flyte tasks that define the specific steps and operations involved.

|

| 19 |

+

|

| 20 |

+

NOTE: The [getting started guide](https://docs.flyte.org/projects/cookbook/en/latest/index.html) offers detailed, step-by-step instructions on installing Flyte locally and running your initial Flyte pipeline.

|

| 21 |

+

|

| 22 |

+

First, import the necessary dependencies to support your LangChain experiments.

|

| 23 |

+

|

| 24 |

+

```python

|

| 25 |

+

import os

|

| 26 |

+

|

| 27 |

+

from flytekit import ImageSpec, task

|

| 28 |

+

from langchain.agents import AgentType, initialize_agent, load_tools

|

| 29 |

+

from langchain.callbacks import FlyteCallbackHandler

|

| 30 |

+

from langchain.chains import LLMChain

|

| 31 |

+

from langchain_openai import ChatOpenAI

|

| 32 |

+

from langchain_core.prompts import PromptTemplate

|

| 33 |

+

from langchain_core.messages import HumanMessage

|

| 34 |

+

```

|

| 35 |

+

|

| 36 |

+

Set up the necessary environment variables to utilize the OpenAI API and Serp API:

|

| 37 |

+

|

| 38 |

+

```python

|

| 39 |

+

# Set OpenAI API key

|

| 40 |

+

os.environ["OPENAI_API_KEY"] = "<your_openai_api_key>"

|

| 41 |

+

|

| 42 |

+

# Set Serp API key

|

| 43 |

+

os.environ["SERPAPI_API_KEY"] = "<your_serp_api_key>"

|

| 44 |

+

```

|

| 45 |

+

|

| 46 |

+

Replace `<your_openai_api_key>` and `<your_serp_api_key>` with your respective API keys obtained from OpenAI and Serp API.

|

| 47 |

+

|

| 48 |

+

To guarantee reproducibility of your pipelines, Flyte tasks are containerized.

|

| 49 |

+

Each Flyte task must be associated with an image, which can either be shared across the entire Flyte [workflow](https://docs.flyte.org/en/latest/user_guide/basics/workflows.html) or provided separately for each task.

|

| 50 |

+

|

| 51 |

+

To streamline the process of supplying the required dependencies for each Flyte task, you can initialize an [`ImageSpec`](https://docs.flyte.org/en/latest/user_guide/customizing_dependencies/imagespec.html) object.

|

| 52 |

+

This approach automatically triggers a Docker build, alleviating the need for users to manually create a Docker image.

|

| 53 |

+

|

| 54 |

+

```python

|

| 55 |

+

custom_image = ImageSpec(

|

| 56 |

+

name="langchain-flyte",

|

| 57 |

+

packages=[

|

| 58 |

+

"langchain",

|

| 59 |

+

"openai",

|

| 60 |

+

"spacy",

|

| 61 |

+

"https://github.com/explosion/spacy-models/releases/download/en_core_web_sm-3.5.0/en_core_web_sm-3.5.0.tar.gz",

|

| 62 |

+

"textstat",

|

| 63 |

+

"google-search-results",

|

| 64 |

+

],

|

| 65 |

+

registry="<your-registry>",

|

| 66 |

+

)

|

| 67 |

+

```

|

| 68 |

+

|

| 69 |

+

You have the flexibility to push the Docker image to a registry of your preference.

|

| 70 |

+

[Docker Hub](https://hub.docker.com/) or [GitHub Container Registry (GHCR)](https://docs.github.com/en/packages/working-with-a-github-packages-registry/working-with-the-container-registry) is a convenient option to begin with.

|

| 71 |

+

|

| 72 |

+

Once you have selected a registry, you can proceed to create Flyte tasks that log the LangChain metrics to Flyte Deck.

|

| 73 |

+

|

| 74 |

+

The following examples demonstrate tasks related to OpenAI LLM, chains and agent with tools:

|

| 75 |

+

|

| 76 |

+

### LLM

|

| 77 |

+

|

| 78 |

+

```python

|

| 79 |

+

@task(disable_deck=False, container_image=custom_image)

|

| 80 |

+

def langchain_llm() -> str:

|

| 81 |

+

llm = ChatOpenAI(

|

| 82 |

+

model_name="gpt-3.5-turbo",

|

| 83 |

+

temperature=0.2,

|

| 84 |

+

callbacks=[FlyteCallbackHandler()],

|

| 85 |

+

)

|

| 86 |

+

return llm.invoke([HumanMessage(content="Tell me a joke")]).content

|

| 87 |

+

```

|

| 88 |

+

|

| 89 |

+

### Chain

|

| 90 |

+

|

| 91 |

+

```python

|

| 92 |

+

@task(disable_deck=False, container_image=custom_image)

|

| 93 |

+

def langchain_chain() -> list[dict[str, str]]:

|

| 94 |

+

template = """You are a playwright. Given the title of play, it is your job to write a synopsis for that title.

|

| 95 |

+

Title: {title}

|

| 96 |

+

Playwright: This is a synopsis for the above play:"""

|

| 97 |

+

llm = ChatOpenAI(

|

| 98 |

+

model_name="gpt-3.5-turbo",

|

| 99 |

+

temperature=0,

|

| 100 |

+

callbacks=[FlyteCallbackHandler()],

|

| 101 |

+

)

|

| 102 |

+

prompt_template = PromptTemplate(input_variables=["title"], template=template)

|

| 103 |

+