| text | source |

|---|---|

# Using 🤗 `transformers` at Hugging Face

🤗 `transformers` is a library maintained by Hugging Face and the community, for state-of-the-art Machine Learning for PyTorch, TensorFlow and JAX. It provides thousands of pretrained models to perform tasks on different modalities such as text, vision, and audio. We are a bit biased, but we really like 🤗 `transformers`!

## Exploring 🤗 transformers in the Hub

There are over 25,000 `transformers` models in the Hub which you can find by filtering at the left of [the models page](https://huggingface.co/models?library=transformers&sort=downloads).

You can find models for many different tasks:

* Extract the answer from a context ([question-answering](https://huggingface.co/models?library=transformers&pipeline_tag=question-answering&sort=downloads)).

* Create summaries from a large text ([summarization](https://huggingface.co/models?library=transformers&pipeline_tag=summarization&sort=downloads)).

* Classify text (e.g. as spam or not spam, [text-classification](https://huggingface.co/models?library=transformers&pipeline_tag=text-classification&sort=downloads)).

* Generate a new text with models such as GPT ([text-generation](https://huggingface.co/models?library=transformers&pipeline_tag=text-generation&sort=downloads)).

* Identify parts of speech (verb, subject, etc.) or entities (country, organization, etc.) in a sentence ([token-classification](https://huggingface.co/models?library=transformers&pipeline_tag=token-classification&sort=downloads)).

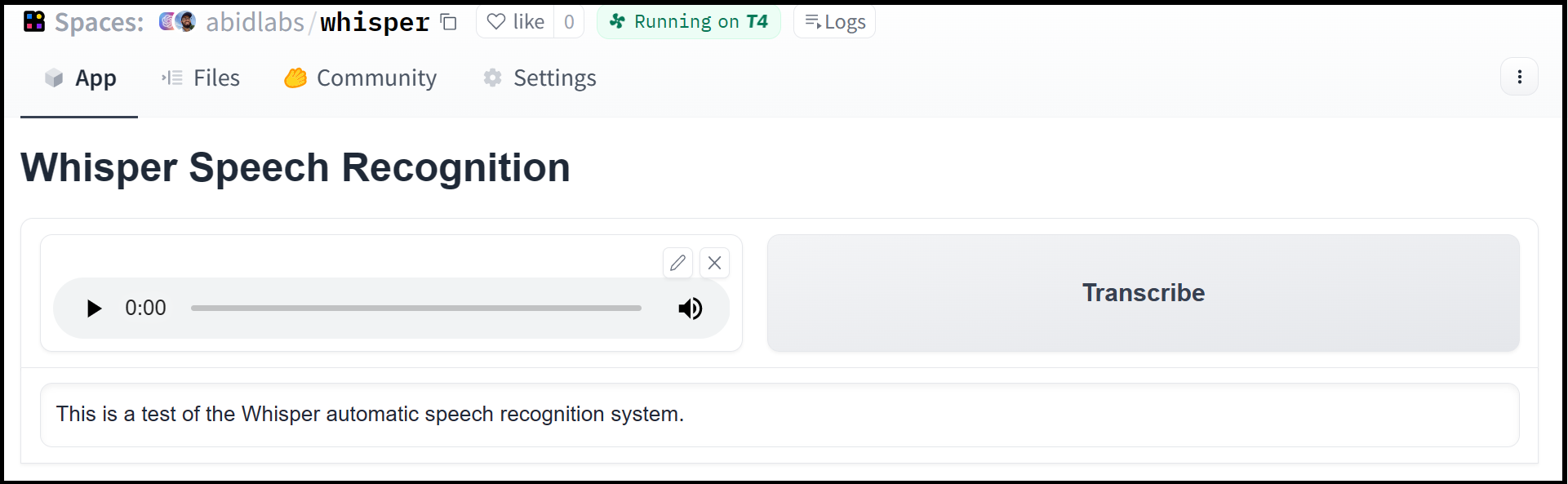

* Transcribe audio files to text ([automatic-speech-recognition](https://huggingface.co/models?library=transformers&pipeline_tag=automatic-speech-recognition&sort=downloads)).

* Classify the speaker or language in an audio file ([audio-classification](https://huggingface.co/models?library=transformers&pipeline_tag=audio-classification&sort=downloads)).

* Detect objects in an image ([object-detection](https://huggingface.co/models?library=transformers&pipeline_tag=object-detection&sort=downloads)).

* Segment an image ([image-segmentation](https://huggingface.co/models?library=transformers&pipeline_tag=image-segmentation&sort=downloads)).

* Do Reinforcement Learning ([reinforcement-learning](https://huggingface.co/models?library=transformers&pipeline_tag=reinforcement-learning&sort=downloads))!

Thanks to the in-browser widgets, you can try out the models directly in the browser without downloading them!

<div class="flex justify-center">

<img class="block dark:hidden" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/libraries-transformers_widget.png"/>

<img class="hidden dark:block" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/libraries-transformers_widget-dark.png"/>

</div>

## Using existing models

All `transformers` models are a line away from being used! Depending on how you want to use them, you can use the high-level API through the `pipeline` function, or you can use `AutoModel` for more control.

```py

# With pipeline, just specify the task and the model id from the Hub.

from transformers import pipeline

pipe = pipeline("text-generation", model="distilgpt2")

# If you want more control, you will need to define the tokenizer and model.

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("distilgpt2")

model = AutoModelForCausalLM.from_pretrained("distilgpt2")

```

You can also load a model from a specific version (based on commit hash, tag name, or branch) as follows:

```py
from transformers import AutoModel

model = AutoModel.from_pretrained(
    "julien-c/EsperBERTo-small", revision="v2.0.1"  # tag name, or branch name, or commit hash
)
```

If you want to see how to load a specific model, you can click `Use in Transformers` and you will be given a working snippet to load it! If you need further information about the model architecture, you can also click "Read model documentation" at the bottom of the snippet.

<div class="flex justify-center">

<img class="block dark:hidden" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/libraries-transformers_snippet.png"/>

<img class="hidden dark:block" src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/hub/libraries-transformers_snippet-dark.png"/>

</div>

## Sharing your models

To read all about sharing models with `transformers`, please head over to the [Share a model](https://huggingface.co/docs/transformers/model_sharing) guide in the official documentation.

Many classes in `transformers`, such as the models and tokenizers, have a `push_to_hub` method that lets you easily upload the files to a repository.

```py

# Pushing model to your own account

model.push_to_hub("my-awesome-model")

# Pushing your tokenizer

tokenizer.push_to_hub("my-awesome-model")

# Pushing all things after training

trainer.push_to_hub()

```

There is much more you can do, so we suggest reviewing the [Share a model](https://huggingface.co/docs/transformers/model_sharing) guide.

## Additional resources

* Transformers [library](https://github.com/huggingface/transformers).

* Transformers [docs](https://huggingface.co/docs/transformers/index).

* Share a model [guide](https://huggingface.co/docs/transformers/model_sharing).

|

huggingface/hub-docs/blob/main/docs/hub/transformers.md

|

# Data types

Datasets supported by Datasets Server have a tabular format, meaning a data point is represented in a row and its features are contained in columns. Using the `/first-rows` endpoint allows you to preview the first 100 rows of a dataset and information about each feature. Within the `features` key, you'll notice it returns a `_type` field. This value describes the data type of the column, and it is also known as a dataset's [`Features`](https://huggingface.co/docs/datasets/about_dataset_features).

There are several different data `Features` for representing different data formats such as [`Audio`](https://huggingface.co/docs/datasets/v2.5.2/en/package_reference/main_classes#datasets.Audio) and [`Image`](https://huggingface.co/docs/datasets/v2.5.2/en/package_reference/main_classes#datasets.Image) for speech and image data respectively. Knowing a dataset feature gives you a better understanding of the data type you're working with, and how you can preprocess it.

For example, the `/first-rows` endpoint for the [Rotten Tomatoes](https://huggingface.co/datasets/rotten_tomatoes) dataset returns the following:

```json

{"dataset": "rotten_tomatoes",

"config": "default",

"split": "train",

"features": [{"feature_idx": 0,

"name": "text",

"type": {"dtype": "string",

"id": null,

"_type": "Value"}},

{"feature_idx": 1,

"name": "label",

"type": {"num_classes": 2,

"names": ["neg", "pos"],

"id": null,

"_type": "ClassLabel"}}],

...

}

```

This dataset has two columns, `text` and `label`:

- The `text` column has a type of `Value`. The [`Value`](https://huggingface.co/docs/datasets/v2.5.2/en/package_reference/main_classes#datasets.Value) type is extremely versatile and represents scalar values such as strings, integers, dates, and even timestamp values.

- The `label` column has a type of `ClassLabel`. The [`ClassLabel`](https://huggingface.co/docs/datasets/v2.5.2/en/package_reference/main_classes#datasets.ClassLabel) type represents the number of classes in a dataset and their label names. Naturally, this means you'll frequently see `ClassLabel` used in classification datasets.

For a complete list of available data types, take a look at the [`Features`](https://huggingface.co/docs/datasets/v2.5.2/en/package_reference/main_classes#datasets.Features) documentation.
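As a minimal sketch of how the `features` list can be consumed, the snippet below parses a payload shaped like the Rotten Tomatoes response above and summarizes each column. The payload is hard-coded rather than fetched from the API, and the helper name `describe_features` is a hypothetical name chosen for illustration, not part of any Datasets Server client.

```python
import json

# Hard-coded payload mirroring the `features` part of the response above
# (a real response contains further keys, omitted here).
response_text = """
{"dataset": "rotten_tomatoes",
 "config": "default",
 "split": "train",
 "features": [
   {"feature_idx": 0, "name": "text",
    "type": {"dtype": "string", "id": null, "_type": "Value"}},
   {"feature_idx": 1, "name": "label",
    "type": {"num_classes": 2, "names": ["neg", "pos"], "id": null, "_type": "ClassLabel"}}
 ]}
"""


def describe_features(response):
    """Return {column name: short description} (hypothetical helper)."""
    summary = {}
    for feature in response["features"]:
        ftype = feature["type"]
        if ftype["_type"] == "Value":
            summary[feature["name"]] = f"Value({ftype['dtype']})"
        elif ftype["_type"] == "ClassLabel":
            summary[feature["name"]] = f"ClassLabel({', '.join(ftype['names'])})"
        else:
            # Other feature types are reported by name only in this sketch.
            summary[feature["name"]] = ftype["_type"]
    return summary


response = json.loads(response_text)
summary = describe_features(response)
print(summary)

# A ClassLabel column stores integers; `names` maps them back to strings.
label_names = response["features"][1]["type"]["names"]
print(label_names[1])
```

Reading `_type` first and branching on it keeps the sketch easy to extend to other feature types such as `Audio` or `Image`.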

|

huggingface/datasets-server/blob/main/docs/source/data_types.mdx

|

# What is Reinforcement Learning? [[what-is-reinforcement-learning]]

To understand Reinforcement Learning, let’s start with the big picture.

## The big picture [[the-big-picture]]

The idea behind Reinforcement Learning is that an agent (an AI) will learn from the environment by **interacting with it** (through trial and error) and **receiving rewards** (negative or positive) as feedback for performing actions.

Learning from interactions with the environment **comes from our natural experiences.**

For instance, imagine putting your little brother in front of a video game he has never played, giving him a controller, and leaving him alone.

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit1/Illustration_1.jpg" alt="Illustration_1" width="100%">

Your brother will interact with the environment (the video game) by pressing the right button (action). He gets a coin: that’s a +1 reward. It’s positive, so he now understands that in this game **he must get the coins.**

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit1/Illustration_2.jpg" alt="Illustration_2" width="100%">

But then, **he presses the right button again** and he touches an enemy. He just died, so that's a -1 reward.

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit1/Illustration_3.jpg" alt="Illustration_3" width="100%">

By interacting with his environment through trial and error, your little brother understands that **he needs to get coins in this environment but avoid the enemies.**

**Without any supervision**, the child will get better and better at playing the game.

That’s how humans and animals learn, **through interaction.** Reinforcement Learning is just a **computational approach to learning from actions.**

### A formal definition [[a-formal-definition]]

We can now make a formal definition:

<Tip>

Reinforcement learning is a framework for solving control tasks (also called decision problems) by building agents that learn from the environment by interacting with it through trial and error and receiving rewards (positive or negative) as unique feedback.

</Tip>
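To make the definition concrete, here is a minimal sketch of trial-and-error learning on a toy version of the coin/enemy story. The environment, the action names, and the epsilon-greedy strategy are illustrative assumptions, not the method used later in the course: the agent tries each action, observes the reward, and keeps a running average of what each action is worth.

```python
import random

# Toy environment (an illustrative assumption): each action always yields
# the same reward, as in the coin/enemy story above.
REWARDS = {"collect_coin": +1, "touch_enemy": -1}


def play(num_steps=100, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    actions = list(REWARDS)
    values = {a: 0.0 for a in actions}  # estimated reward per action
    counts = {a: 0 for a in actions}    # number of times each action was tried

    for _ in range(num_steps):
        untried = [a for a in actions if counts[a] == 0]
        if untried:
            action = untried[0]                    # try every action once
        elif rng.random() < epsilon:
            action = rng.choice(actions)           # explore (trial and error)
        else:
            action = max(actions, key=values.get)  # exploit what was learned

        reward = REWARDS[action]                   # feedback from the environment
        counts[action] += 1
        # Running average of the rewards observed for this action.
        values[action] += (reward - values[action]) / counts[action]

    return values, counts


values, counts = play()
best_action = max(values, key=values.get)
print(values, best_action)
```

No one tells the agent which action is correct; the rewards alone are enough for it to end up preferring the coin.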

But how does Reinforcement Learning work?

|

huggingface/deep-rl-class/blob/main/units/en/unit1/what-is-rl.mdx

|

---

title: Perplexity

emoji: 🤗

colorFrom: blue

colorTo: red

sdk: gradio

sdk_version: 3.19.1

app_file: app.py

pinned: false

tags:

- evaluate

- metric

description: >-
  Perplexity (PPL) is one of the most common metrics for evaluating language models.
  It is defined as the exponentiated average negative log-likelihood of a sequence, calculated with exponent base `e`.

  For more information on perplexity, see [this tutorial](https://huggingface.co/docs/transformers/perplexity).

---

# Metric Card for Perplexity

## Metric Description

Given a model and an input text sequence, perplexity measures how likely the model is to generate the input text sequence.

As a metric, it can be used to evaluate how well the model has learned the distribution of the text it was trained on.

In this case, `model_id` should be the trained model to be evaluated, and the input texts should be the text that the model was trained on.

This implementation of perplexity is calculated with log base `e`, as in `perplexity = e**(sum(losses) / num_tokenized_tokens)`, following recent convention in deep learning frameworks.
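The formula above can be sketched in a few lines of plain Python. This is only an illustration of the arithmetic, not the metric's actual implementation; the function name `perplexity_from_losses` and the per-token losses are hypothetical.

```python
import math


def perplexity_from_losses(token_losses):
    """Exponential of the mean per-token negative log-likelihood (natural log)."""
    return math.exp(sum(token_losses) / len(token_losses))


# Hypothetical per-token losses: a model that assigns probability 0.25
# to each of four tokens has a loss of -ln(0.25) per token.
losses = [-math.log(0.25)] * 4
ppl = perplexity_from_losses(losses)
print(round(ppl, 6))
```

A model that spreads its probability evenly over four choices at every step has a perplexity of 4, which is why perplexity is often read as an effective branching factor.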

## Intended Uses

Any language generation task.

## How to Use

The metric takes a list of text as input, as well as the name of the model used to compute the metric:

```python
from evaluate import load

predictions = ["lorem ipsum", "Happy Birthday!", "Bienvenue"]  # example input texts
perplexity = load("perplexity", module_type="metric")
results = perplexity.compute(predictions=predictions, model_id='gpt2')
```

### Inputs

- **model_id** (str): model used for calculating Perplexity. NOTE: Perplexity can only be calculated for causal language models.
    - This includes models such as gpt2, causal variations of bert, causal versions of t5, and more (the full list can be found in the AutoModelForCausalLM documentation here: https://huggingface.co/docs/transformers/master/en/model_doc/auto#transformers.AutoModelForCausalLM )

- **predictions** (list of str): input text, where each separate text snippet is one list entry.

- **batch_size** (int): the batch size to run texts through the model. Defaults to 16.

- **add_start_token** (bool): whether to add the start token to the texts, so the perplexity can include the probability of the first word. Defaults to True.

- **device** (str): device to run on, defaults to `cuda` when available

### Output Values

This metric outputs a dictionary with the perplexity scores for the text input in the list, and the average perplexity.

If one of the input texts is longer than the max input length of the model, then it is truncated to the max length for the perplexity computation.

```

{'perplexities': [8.182524681091309, 33.42122268676758, 27.012239456176758], 'mean_perplexity': 22.871995608011883}

```

The range of this metric is [1, inf), since it is the exponential of a non-negative average loss. A lower score is better.

#### Values from Popular Papers

### Examples

Calculating perplexity on predictions defined here:

```python
import evaluate

perplexity = evaluate.load("perplexity", module_type="metric")
input_texts = ["lorem ipsum", "Happy Birthday!", "Bienvenue"]
results = perplexity.compute(model_id='gpt2',
                             add_start_token=False,
                             predictions=input_texts)
print(list(results.keys()))
# ['perplexities', 'mean_perplexity']
print(round(results["mean_perplexity"], 2))
# 646.75
print(round(results["perplexities"][0], 2))
# 32.25
```

Calculating perplexity on predictions loaded in from a dataset:

```python
import datasets
import evaluate

perplexity = evaluate.load("perplexity", module_type="metric")
input_texts = datasets.load_dataset("wikitext",
                                    "wikitext-2-raw-v1",
                                    split="test")["text"][:50]
input_texts = [s for s in input_texts if s != '']  # drop empty lines
results = perplexity.compute(model_id='gpt2',
                             predictions=input_texts)
print(list(results.keys()))
# ['perplexities', 'mean_perplexity']
print(round(results["mean_perplexity"], 2))
# 576.76
print(round(results["perplexities"][0], 2))
# 889.28
```

## Limitations and Bias

Note that the output value is based heavily on what text the model was trained on. This means that perplexity scores are not comparable between models or datasets.

See Meister and Cotterell, ["Language Model Evaluation Beyond Perplexity"](https://arxiv.org/abs/2106.00085) (2021) for more information about alternative model evaluation strategies.

## Citation

```bibtex

@article{jelinek1977perplexity,

title={Perplexity—a measure of the difficulty of speech recognition tasks},

author={Jelinek, Fred and Mercer, Robert L and Bahl, Lalit R and Baker, James K},

journal={The Journal of the Acoustical Society of America},

volume={62},

number={S1},

pages={S63--S63},

year={1977},

publisher={Acoustical Society of America}

}

```

## Further References

- [Hugging Face Perplexity Blog Post](https://huggingface.co/docs/transformers/perplexity)

|

huggingface/evaluate/blob/main/metrics/perplexity/README.md

|

---

title: "Accelerate Large Model Training using DeepSpeed"

thumbnail: /blog/assets/83_accelerate_deepspeed/deepspeed-thumbnail.png

authors:

- user: smangrul

- user: sgugger

---

# Accelerate Large Model Training using DeepSpeed

In this post we will look at how we can leverage the **[Accelerate](https://github.com/huggingface/accelerate)** library for training large models, which enables users to leverage the ZeRO features of **[DeepSpeed](https://www.deepspeed.ai)**.

# Motivation 🤗

**Tired of Out of Memory (OOM) errors while trying to train large models? We've got you covered. Large models are very performant [1] but difficult to train with the available hardware. To get the most out of the available hardware for training large models, one can leverage Data Parallelism using ZeRO - Zero Redundancy Optimizer [2]**.

Below is a short description of Data Parallelism using ZeRO with diagram from this [blog post](https://www.microsoft.com/en-us/research/blog/zero-deepspeed-new-system-optimizations-enable-training-models-with-over-100-billion-parameters/)

(Source: [link](https://www.microsoft.com/en-us/research/blog/zero-deepspeed-new-system-optimizations-enable-training-models-with-over-100-billion-parameters/))

a. **Stage 1** : Shards optimizer states across data parallel workers/GPUs

b. **Stage 2** : Shards optimizer states + gradients across data parallel workers/GPUs

c. **Stage 3**: Shards optimizer states + gradients + model parameters across data parallel workers/GPUs

d. **Optimizer Offload**: Offloads the gradients + optimizer states to CPU/Disk building on top of ZeRO Stage 2

e. **Param Offload**: Offloads the model parameters to CPU/Disk building on top of ZeRO Stage 3
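To get a feel for what each stage saves, here is a rough sketch of the per-GPU memory needed for model states, following the accounting in the ZeRO paper [2] for mixed-precision Adam (2 bytes per parameter for fp16 weights, 2 for fp16 gradients, 12 for fp32 optimizer states). The function name and the example numbers are illustrative assumptions; activations, temporary buffers and offloading are not modelled.

```python
def zero_model_state_bytes(num_params, num_gpus, stage, k=12):
    """Per-GPU bytes for model states under mixed-precision Adam.

    Accounting from the ZeRO paper: 2 bytes/param for fp16 weights,
    2 for fp16 gradients, and k=12 for fp32 optimizer states.
    Activations and temporary buffers are NOT included.
    """
    params, grads, optim = 2 * num_params, 2 * num_params, k * num_params
    if stage >= 1:
        optim /= num_gpus   # Stage 1 shards optimizer states
    if stage >= 2:
        grads /= num_gpus   # Stage 2 also shards gradients
    if stage >= 3:
        params /= num_gpus  # Stage 3 also shards parameters
    return params + grads + optim


# Illustrative numbers: a 900M-parameter model on 2 GPUs (stage 0 = no sharding).
for stage in range(4):
    gb = zero_model_state_bytes(900e6, num_gpus=2, stage=stage) / 1e9
    print(f"stage {stage}: {gb:.1f} GB per GPU")
```

Under these assumptions the model states shrink from 14.4 GB per GPU without sharding to 9.0, 8.1 and 7.2 GB for Stages 1, 2 and 3. The savings grow with the number of GPUs, since only the sharded terms are divided.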

In this blog post we will look at how to leverage Data Parallelism using ZeRO with Accelerate. **[DeepSpeed](https://github.com/microsoft/deepspeed)**, **[FairScale](https://github.com/facebookresearch/fairscale/)** and **[PyTorch FullyShardedDataParallel (FSDP)](https://pytorch.org/blog/introducing-pytorch-fully-sharded-data-parallel-api/)** have implemented the core ideas of the ZeRO paper. These have already been integrated in 🤗 `transformers` Trainer and 🤗 `accelerate`, accompanied by great blogs [Fit More and Train Faster With ZeRO via DeepSpeed and FairScale](https://huggingface.co/blog/zero-deepspeed-fairscale) [4] and [Accelerate Large Model Training using PyTorch Fully Sharded Data Parallel](https://huggingface.co/blog/pytorch-fsdp) [5]. We defer the explanation of what goes on behind the scenes to those blogs and mainly focus on leveraging DeepSpeed ZeRO using Accelerate.

# Accelerate 🚀: Leverage DeepSpeed ZeRO without any code changes

**Hardware setup**: 2X24GB NVIDIA Titan RTX GPUs. 60GB RAM.

We will look at the task of finetuning an encoder-only model for text classification. We will use the pretrained `microsoft/deberta-v2-xlarge-mnli` (900M params) and finetune it on the GLUE MRPC dataset.

The code is available here [run_cls_no_trainer.py](https://github.com/pacman100/accelerate-deepspeed-test/blob/main/src/modeling/run_cls_no_trainer.py). It is similar to the official text-classification example [here](https://github.com/huggingface/transformers/blob/main/examples/pytorch/text-classification/run_glue_no_trainer.py) with the addition of logic to measure train and eval time. Let's compare performance between Distributed Data Parallel (DDP) and DeepSpeed ZeRO Stage-2 in a Multi-GPU Setup.

To enable DeepSpeed ZeRO Stage-2 without any code changes, please run `accelerate config` and leverage the [Accelerate DeepSpeed Plugin](https://huggingface.co/docs/accelerate/deepspeed#accelerate-deepspeed-plugin).

**ZeRO Stage-2 DeepSpeed Plugin Example**

```bash
compute_environment: LOCAL_MACHINE
deepspeed_config:
  gradient_accumulation_steps: 1
  gradient_clipping: 1.0
  offload_optimizer_device: none
  offload_param_device: none
  zero3_init_flag: false
  zero_stage: 2
distributed_type: DEEPSPEED
fsdp_config: {}
machine_rank: 0
main_process_ip: null
main_process_port: null
main_training_function: main
mixed_precision: fp16
num_machines: 1
num_processes: 2
use_cpu: false
```

Now, run the command below for training:

```bash

accelerate launch run_cls_no_trainer.py \

--model_name_or_path "microsoft/deberta-v2-xlarge-mnli" \

--task_name "mrpc" \

--ignore_mismatched_sizes \

--max_length 128 \

--per_device_train_batch_size 40 \

--learning_rate 2e-5 \

--num_train_epochs 3 \

--output_dir "/tmp/mrpc/deepspeed_stage2/" \

--with_tracking \

--report_to "wandb"

```

In our Single-Node Multi-GPU setup, the maximum batch size that DDP supports without an OOM error is 8. In contrast, DeepSpeed ZeRO Stage-2 enables a batch size of 40 without running into OOM errors. Therefore, DeepSpeed lets us fit **5X** more data per GPU when compared to DDP. Below is a snapshot of the plots from the wandb [run](https://wandb.ai/smangrul/DDP_vs_DeepSpeed_cls_task?workspace=user-smangrul) along with a benchmarking table comparing DDP vs DeepSpeed.

---

| Method | Batch Size Max | Train time per epoch (seconds) | Eval time per epoch (seconds) | F1 score | Accuracy |
| --- | --- | --- | --- | --- | --- |
| DDP (Distributed Data Parallel) | 8 | 103.57 | 2.04 | 0.931 | 0.904 |
| DeepSpeed ZeRO Stage 2 | **40** | **28.98** | **1.79** | **0.936** | **0.912** |

Table 1: Benchmarking DeepSpeed ZeRO Stage-2 on DeBERTa-XL (900M) model

---

With this bigger batch size, we observe a ~**3.5X** speedup in total training time without any drop in performance metrics, all this without changing any code. Yay! 🤗.
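The headline figures can be re-derived from Table 1 with a couple of divisions. The snippet below is only a worked check of that arithmetic; the numbers are copied from the table and the variable names are arbitrary.

```python
# Figures copied from Table 1.
ddp = {"batch_size": 8, "train_time_s": 103.57}
zero2 = {"batch_size": 40, "train_time_s": 28.98}

# How much more data fits per GPU, and how much faster an epoch runs.
batch_ratio = zero2["batch_size"] / ddp["batch_size"]
train_speedup = ddp["train_time_s"] / zero2["train_time_s"]

print(f"batch size: {batch_ratio:.0f}x larger")
print(f"train time per epoch: {train_speedup:.2f}x faster")
```

Because both runs train for the same number of epochs, the per-epoch ratio is also the ratio of total training time.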

To be able to tweak more options, you will need to use a DeepSpeed config file and minimal code changes. Let's see how to do this.

# Accelerate 🚀: Leverage a DeepSpeed Config file to tweak more options

First, we will look at the task of finetuning a sequence-to-sequence model for training our own chatbot. Specifically, we will finetune `facebook/blenderbot-400M-distill` on the [smangrul/MuDoConv](https://huggingface.co/datasets/smangrul/MuDoConv) (Multi-Domain Conversation) dataset. The dataset contains conversations from 10 different data sources covering personas, grounding in specific emotional contexts, goal-oriented tasks (e.g., restaurant reservation) and general Wikipedia topics (e.g., Cricket).

The code is available here [run_seq2seq_no_trainer.py](https://github.com/pacman100/accelerate-deepspeed-test/blob/main/src/modeling/run_seq2seq_no_trainer.py). Current practice to effectively measure the `Engagingness` and `Humanness` of chatbots is via human evaluations, which are expensive [6]. As such, for this example, the metric being tracked is BLEU score (which isn't ideal but is the conventional metric for such tasks). One can adapt the code to train larger T5 models if one has access to GPUs that support `bfloat16` precision; otherwise you will run into `NaN` loss values. We will run a quick benchmark on `10000` train samples and `1000` eval samples as we are interested in DeepSpeed vs DDP.

We will leverage the DeepSpeed ZeRO Stage-2 config [zero2_config_accelerate.json](https://github.com/pacman100/accelerate-deepspeed-test/blob/main/src/modeling/configs/zero2_config_accelerate.json) (given below) for training. For detailed information on the various config features, please refer to the [DeepSpeed](https://www.deepspeed.ai) documentation.

```json

{

"fp16": {

"enabled": true,

"loss_scale": 0,

"loss_scale_window": 1000,

"initial_scale_power": 15,

"hysteresis": 2,

"min_loss_scale": 1

},

"optimizer": {

"type": "AdamW",

"params": {

"lr": "auto",

"weight_decay": "auto",

"torch_adam": true,

"adam_w_mode": true

}

},

"scheduler": {

"type": "WarmupDecayLR",

"params": {

"warmup_min_lr": "auto",

"warmup_max_lr": "auto",

"warmup_num_steps": "auto",

"total_num_steps": "auto"

}

},

"zero_optimization": {

"stage": 2,

"allgather_partitions": true,

"allgather_bucket_size": 2e8,

"overlap_comm": true,

"reduce_scatter": true,

"reduce_bucket_size": 2e8,

"contiguous_gradients": true

},

"gradient_accumulation_steps": 1,

"gradient_clipping": "auto",

"steps_per_print": 2000,

"train_batch_size": "auto",

"train_micro_batch_size_per_gpu": "auto",

"wall_clock_breakdown": false

}

```

To enable DeepSpeed ZeRO Stage-2 with the above config, please run `accelerate config` and provide the config file path when asked. For more details, refer to the 🤗 `accelerate` official documentation for [DeepSpeed Config File](https://huggingface.co/docs/accelerate/deepspeed#deepspeed-config-file).

**ZeRO Stage-2 DeepSpeed Config File Example**

```bash
compute_environment: LOCAL_MACHINE
deepspeed_config:
  deepspeed_config_file: /path/to/zero2_config_accelerate.json
  zero3_init_flag: false
distributed_type: DEEPSPEED
fsdp_config: {}
machine_rank: 0
main_process_ip: null
main_process_port: null
main_training_function: main
mixed_precision: fp16
num_machines: 1
num_processes: 2
use_cpu: false
```

Now, run the command below for training:

```bash

accelerate launch run_seq2seq_no_trainer.py \

--dataset_name "smangrul/MuDoConv" \

--max_source_length 128 \

--source_prefix "chatbot: " \

--max_target_length 64 \

--val_max_target_length 64 \

--val_min_target_length 20 \

--n_val_batch_generations 5 \

--n_train 10000 \

--n_val 1000 \

--pad_to_max_length \

--num_beams 10 \

--model_name_or_path "facebook/blenderbot-400M-distill" \

--per_device_train_batch_size 200 \

--per_device_eval_batch_size 100 \

--learning_rate 1e-6 \

--weight_decay 0.0 \

--num_train_epochs 1 \

--gradient_accumulation_steps 1 \

--num_warmup_steps 100 \

--output_dir "/tmp/deepspeed_zero_stage2_accelerate_test" \

--seed 25 \

--logging_steps 100 \

--with_tracking \

--report_to "wandb" \

--report_name "blenderbot_400M_finetuning"

```
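Several batch-size fields in the DeepSpeed config are set to `"auto"`, so they are filled in from the launch arguments rather than written by hand. As a rough sketch of how they relate, DeepSpeed expects the total `train_batch_size` to equal the per-GPU micro batch size times the gradient accumulation steps times the number of GPUs. The helper name below is hypothetical and only illustrates that rule with the values used in this run.

```python
def effective_train_batch_size(micro_batch_per_gpu, grad_accum_steps, num_gpus):
    """DeepSpeed's consistency rule for the three batch-size settings."""
    return micro_batch_per_gpu * grad_accum_steps * num_gpus


# Values taken from the launch command and Accelerate config in this post:
# --per_device_train_batch_size 200, --gradient_accumulation_steps 1,
# and num_processes: 2.
total = effective_train_batch_size(200, 1, 2)
print(total)  # 400
```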

When using a DeepSpeed config, if the user has specified `optimizer` and `scheduler` in the config, the user will have to use `accelerate.utils.DummyOptim` and `accelerate.utils.DummyScheduler`. Those are the only minor changes that the user has to make. Below we show an example of the minimal changes required when using a DeepSpeed config:

```diff

- optimizer = torch.optim.Adam(optimizer_grouped_parameters, lr=args.learning_rate)

+ optimizer = accelerate.utils.DummyOptim(optimizer_grouped_parameters, lr=args.learning_rate)

- lr_scheduler = get_scheduler(

- name=args.lr_scheduler_type,

- optimizer=optimizer,

- num_warmup_steps=args.num_warmup_steps,

- num_training_steps=args.max_train_steps,

- )

+ lr_scheduler = accelerate.utils.DummyScheduler(

+ optimizer, total_num_steps=args.max_train_steps, warmup_num_steps=args.num_warmup_steps

+ )

```

---

| Method | Batch Size Max | Eval Size Max | Train time per epoch (seconds) | Eval time per epoch (seconds) |
| --- | --- | --- | --- | --- |
| DDP (Distributed Data Parallel) | 100 | 50 | 27.36 | 48.41 |
| DeepSpeed ZeRO Stage 2 | **200** | **100** | **19.06** | **39.27** |

Table 2: Benchmarking DeepSpeed ZeRO Stage-2 on BlenderBot (400M) model

In our Single-Node Multi-GPU setup, the maximum batch size that DDP supports without an OOM error is 100. In contrast, DeepSpeed ZeRO Stage-2 enables a batch size of 200 without running into OOM errors. Therefore, DeepSpeed lets us fit **2X** more data per GPU when compared to DDP. We observe a ~**1.44X** speedup in training and a ~**1.23X** speedup in evaluation as we are able to fit more data on the same available hardware. As this model is of medium size, the speedup isn't that exciting, but this will improve with bigger models. You can chat with the chatbot trained using the entire data at 🤗 Space [smangrul/Chat-E](https://huggingface.co/spaces/smangrul/Chat-E). You can give the bot a persona, ground the conversation in a particular emotion, or use it in goal-oriented tasks or in a free-flowing manner. Below is a fun conversation with the chatbot 💬. You can find snapshots of more conversations using different contexts [here](https://github.com/pacman100/accelerate-deepspeed-test/tree/main/src/chatbot_snapshots).

---

## CPU/Disk Offloading to enable training humongous models that won’t fit the GPU memory

On a single 24GB NVIDIA Titan RTX GPU, one cannot train the GPT2-XL model (1.5B parameters) even with a batch size of 1. We will look at how we can use DeepSpeed ZeRO Stage-3 with CPU offloading of optimizer states, gradients and parameters to train the GPT2-XL model.

We will leverage the DeepSpeed Zero Stage-3 CPU offload config [zero3_offload_config_accelerate.json](https://github.com/pacman100/accelerate-deepspeed-test/blob/main/src/modeling/configs/zero3_offload_config_accelerate.json) (given below) for training. The rest of the process of using the config with 🤗 `accelerate` is similar to the above experiment.

```json

{

"fp16": {

"enabled": true,

"loss_scale": 0,

"loss_scale_window": 1000,

"initial_scale_power": 16,

"hysteresis": 2,

"min_loss_scale": 1

},

"optimizer": {

"type": "AdamW",

"params": {

"lr": "auto",

"weight_decay": "auto"

}

},

"scheduler": {

"type": "WarmupDecayLR",

"params": {

"warmup_min_lr": "auto",

"warmup_max_lr": "auto",

"warmup_num_steps": "auto",

"total_num_steps": "auto"

}

},

"zero_optimization": {

"stage": 3,

"offload_optimizer": {

"device": "cpu",

"pin_memory": true

},

"offload_param": {

"device": "cpu",

"pin_memory": true

},

"overlap_comm": true,

"contiguous_gradients": true,

"reduce_bucket_size": "auto",

"stage3_prefetch_bucket_size": "auto",

"stage3_param_persistence_threshold": "auto",

"sub_group_size": 1e9,

"stage3_max_live_parameters": 1e9,

"stage3_max_reuse_distance": 1e9,

"stage3_gather_16bit_weights_on_model_save": true

},

"gradient_accumulation_steps": 1,

"gradient_clipping": "auto",

"steps_per_print": 2000,

"train_batch_size": "auto",

"train_micro_batch_size_per_gpu": "auto",

"wall_clock_breakdown": false

}

```

**ZeRO Stage-3 CPU Offload DeepSpeed Config File Example**

```bash
compute_environment: LOCAL_MACHINE
deepspeed_config:
  deepspeed_config_file: /path/to/zero3_offload_config_accelerate.json
  zero3_init_flag: true
distributed_type: DEEPSPEED
fsdp_config: {}
machine_rank: 0
main_process_ip: null
main_process_port: null
main_training_function: main
mixed_precision: fp16
num_machines: 1
num_processes: 2
use_cpu: false
```

Now, run the command below for training:

```bash

accelerate launch run_clm_no_trainer.py \

--config_name "gpt2-xl" \

--tokenizer_name "gpt2-xl" \

--dataset_name "wikitext" \

--dataset_config_name "wikitext-2-raw-v1" \

--block_size 128 \

--output_dir "/tmp/clm_deepspeed_stage3_offload__accelerate" \

--learning_rate 5e-4 \

--per_device_train_batch_size 16 \

--per_device_eval_batch_size 1 \

--num_train_epochs 1 \

--with_tracking \

--report_to "wandb"

```

---

| Method | Batch Size Max | Train time per epoch (seconds) | Notes |
| --- | --- | --- | --- |
| DDP (Distributed Data Parallel) | - | - | OOM Error |
| DeepSpeed ZeRO Stage 3 | **16** | 6608.35 | |

Table 3: Benchmarking DeepSpeed ZeRO Stage-3 CPU Offload on GPT2-XL (1.5B) model

---

DDP will result in OOM error even with batch size 1. On the other hand, with DeepSpeed ZeRO Stage-3 CPU offload, we can train with a batch size of 16.

Finally, please remember that 🤗 `Accelerate` only integrates DeepSpeed. Therefore, if you have any problems or questions with regards to DeepSpeed usage, please file an issue on the [DeepSpeed GitHub](https://github.com/microsoft/DeepSpeed/issues).

# References

[1] [Train Large, Then Compress: Rethinking Model Size for Efficient Training and Inference of Transformers](http://nlp.cs.berkeley.edu/pubs/Li-Wallace-Shen-Lin-Keutzer-Klein-Gonzalez_2020_Transformers_paper.pdf)

[2] [ZeRO: Memory Optimizations Toward Training Trillion Parameter Models](https://arxiv.org/pdf/1910.02054v3.pdf)

[3] [DeepSpeed: Extreme-scale model training for everyone - Microsoft Research](https://www.microsoft.com/en-us/research/blog/deepspeed-extreme-scale-model-training-for-everyone/)

[4] [Fit More and Train Faster With ZeRO via DeepSpeed and FairScale](https://huggingface.co/blog/zero-deepspeed-fairscale)

[5] [Accelerate Large Model Training using PyTorch Fully Sharded Data Parallel](https://huggingface.co/blog/pytorch-fsdp)

[6] [Recipes for building an open-domain chatbot](https://arxiv.org/pdf/2004.13637.pdf)

|

huggingface/blog/blob/main/accelerate-deepspeed.md

|

<!-- DO NOT EDIT THIS FILE DIRECTLY. INSTEAD EDIT THE `readme_template.md` OR `guides/1)getting_started/1)quickstart.md` TEMPLATES AND THEN RUN `render_readme.py` SCRIPT. -->

<div align="center">

[<img src="../gradio.svg" alt="gradio" width=400>](https://gradio.app)<br>

<em>Build & share delightful machine learning apps, easily</em>


[Website](https://gradio.app)

| [Documentation](https://gradio.app/docs/)

| [Guides](https://gradio.app/guides/)

| [Getting Started](https://gradio.app/getting_started/)

| [Examples](../../demo/)

| [English](https://github.com/gradio-app/gradio#readme)

</div>

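# Gradio: Build Machine Learning Web Apps in Python

Gradio is an open-source Python library for building demos of machine learning or data science work, as well as web applications.

With Gradio, you can quickly create a beautiful user interface around your machine learning model or data science workflow and let people "try it out" by dragging and dropping their own images, pasting text, recording their own voice, and interacting with your demo through the browser.

Gradio is useful for:

- **Demoing** your machine learning models for clients/collaborators/users/students.

- **Deploying** your models quickly with automatic shareable links and getting feedback on model performance.

- **Debugging** your model interactively during development using built-in manipulation and interpretation tools.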

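### Quickstart

**Prerequisite**: Gradio requires Python 3.7 or higher, and that's all!

#### What does Gradio do?

One of the best ways to share your machine learning model, API, or data science workflow with others is to create an **interactive app** that lets your users or colleagues try it out in their browsers.

Gradio lets you **build demos and share them, all in Python**, often in just a few lines of code. Let's get started!

#### Hello, World

To run a "Hello, World" example with Gradio, follow these three steps:

1\. Install Gradio using pip: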

```bash

pip install gradio

```

2\. Run the code below as a Python script or in a Jupyter Notebook (or use [Google Colab](https://colab.research.google.com/drive/18ODkJvyxHutTN0P5APWyGFO_xwNcgHDZ?usp=sharing)):

```python
import gradio as gr

def greet(name):
    return "Hello " + name + "!"

demo = gr.Interface(fn=greet, inputs="text", outputs="text")
demo.launch()
```

3\. The demo will appear automatically within the Jupyter Notebook, or pop up in a browser at [http://localhost:7860](http://localhost:7860) if you are running from a script:

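#### The `Interface` class

You may have noticed that we created a `gradio.Interface` to run the example. The `Interface` class can wrap any Python function with a user interface. In the example above, we used a simple text-based function, but the function could be anything from a music generator to a tax calculator to the prediction function of a pretrained machine learning model.

The core `Interface` class is initialized with three required parameters:

- `fn`: the function to wrap a UI around

- `inputs`: which component(s) to use for the input (e.g. `"text"`, `"image"` or `"audio"`)

- `outputs`: which component(s) to use for the output (e.g. `"text"`, `"image"` or `"label"`)

Let's take a closer look at the components used for input and output.

#### Component attributes

We saw some simple `Textbox` components in the previous examples, but what if you want to change how the UI components look or behave?

Suppose you want to customize the input text field, for example to make it larger and give it a text placeholder. If we use the actual `Textbox` class instead of the string shortcut, we can customize the component through its attributes.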

```python
import gradio as gr

def greet(name):
    return "Hello " + name + "!"

demo = gr.Interface(
    fn=greet,
    inputs=gr.Textbox(lines=2, placeholder="Name Here..."),
    outputs="text",
)
demo.launch()
```

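#### Multiple input and output components

Suppose you have a more complex function with multiple inputs and outputs. In the example below, we define a function that takes a string, a boolean, and a number, and returns a string and a number. Note how the lists of input and output components are passed.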

```python
import gradio as gr

def greet(name, is_morning, temperature):
    salutation = "Good morning" if is_morning else "Good evening"
    greeting = f"{salutation} {name}. It is {temperature} degrees today"
    # Convert the Fahrenheit input to Celsius
    celsius = (temperature - 32) * 5 / 9
    return greeting, round(celsius, 2)

demo = gr.Interface(
    fn=greet,
    inputs=["text", "checkbox", gr.Slider(0, 100)],
    outputs=["text", "number"],
)
demo.launch()
```

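You simply wrap the components in a list. Each component in the `inputs` list corresponds, in order, to one of the function's parameters. Each component in the `outputs` list corresponds, in order, to one of the values the function returns.

#### An image example

Gradio supports many types of components, such as `Image`, `DataFrame`, `Video`, or `Label`. Let's try an image-to-image function to get a feel for these!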

```python
import numpy as np
import gradio as gr

def sepia(input_img):
    sepia_filter = np.array([
        [0.393, 0.769, 0.189],
        [0.349, 0.686, 0.168],
        [0.272, 0.534, 0.131]
    ])
    # Apply the filter to every pixel, then rescale to the [0, 1] range
    sepia_img = input_img.dot(sepia_filter.T)
    sepia_img /= sepia_img.max()
    return sepia_img

demo = gr.Interface(sepia, gr.Image(shape=(200, 200)), "image")
demo.launch()
```

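When using the `Image` component as input, your function receives a NumPy array with shape `(height, width, 3)`, where the last dimension represents the RGB values. We also return an image in the form of a NumPy array.

You can also set the data type used by the component with the `type=` keyword argument. For example, if you want your function to take a file path to an image instead of a NumPy array, the input `Image` component could be written as: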

```python

gr.Image(type="filepath", shape=...)

```

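Also note that our input `Image` component comes with an edit button 🖉, which allows cropping and zooming into images. Manipulating images this way can help reveal biases or hidden flaws in a machine learning model!

You can read more about the components and how to use them in the [Gradio docs](https://gradio.app/docs).

#### Blocks: more flexibility and control

Gradio offers two classes to build apps:

1\. **Interface**, which provides a high-level abstraction for creating demos like the ones we have discussed so far.

2\. **Blocks**, a low-level API for designing web apps with more flexible layouts and data flows. Blocks lets you feature multiple data flows and demos, control where components appear on the page, handle complex data flows (e.g. outputs can serve as inputs to other functions), and update the properties or visibility of components based on user interaction, all still in Python. If you need this kind of customization, try `Blocks`!

#### Hello, Blocks

Let's look at a simple example. Note how the API here differs from `Interface`.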

```python
import gradio as gr

def greet(name):
    return "Hello " + name + "!"

with gr.Blocks() as demo:
    name = gr.Textbox(label="Name")
    output = gr.Textbox(label="Output Box")
    greet_btn = gr.Button("Greet")
    greet_btn.click(fn=greet, inputs=name, outputs=output)

demo.launch()
```

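Things to note:

- `Blocks` are made with a `with` clause, and any component created inside this clause is automatically added to the app.

- Components appear vertically in the app in the order they are created. (Later we will cover customizing layouts!)

- A `Button` was created, and then a `click` event listener was added to it. The API for this should look familiar! Like an `Interface`, the `click` method takes a Python function, input components, and output components.

#### More complexity

Here's an app to give you a taste of what's possible with `Blocks`: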

```python
import numpy as np
import gradio as gr

def flip_text(x):
    return x[::-1]

def flip_image(x):
    return np.fliplr(x)

with gr.Blocks() as demo:
    gr.Markdown("Flip text or image files using this demo.")
    with gr.Tabs():
        with gr.TabItem("Flip Text"):
            text_input = gr.Textbox()
            text_output = gr.Textbox()
            text_button = gr.Button("Flip")
        with gr.TabItem("Flip Image"):
            with gr.Row():
                image_input = gr.Image()
                image_output = gr.Image()
            image_button = gr.Button("Flip")

    text_button.click(flip_text, inputs=text_input, outputs=text_output)
    image_button.click(flip_image, inputs=image_input, outputs=image_output)

demo.launch()
```

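A lot more is possible! We show you how to create complex `Blocks` apps like this in the [building with blocks](https://gradio.app/building_with_blocks) section.

Congrats, you're now familiar with the basics of Gradio! 🥳 Go to our [next guide](https://gradio.app/key_features) to learn more about Gradio's features.

## Open source stack

Gradio is built with many wonderful open-source libraries, please support them as well!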

[<img src="../huggingface_mini.svg" alt="huggingface" height=40>](https://huggingface.co)

[<img src="../python.svg" alt="python" height=40>](https://www.python.org)

[<img src="../fastapi.svg" alt="fastapi" height=40>](https://fastapi.tiangolo.com)

[<img src="../encode.svg" alt="encode" height=40>](https://www.encode.io)

[<img src="../svelte.svg" alt="svelte" height=40>](https://svelte.dev)

[<img src="../vite.svg" alt="vite" height=40>](https://vitejs.dev)

[<img src="../pnpm.svg" alt="pnpm" height=40>](https://pnpm.io)

[<img src="../tailwind.svg" alt="tailwind" height=40>](https://tailwindcss.com)

## License

Gradio is licensed under the Apache License 2.0 found in the [LICENSE](LICENSE) file in the root directory of this repository.

## Citation

Also check out the paper _[Gradio: Hassle-Free Sharing and Testing of ML Models in the Wild](https://arxiv.org/abs/1906.02569), ICML HILL 2019_, and please cite it if you use Gradio in your work.

```

@article{abid2019gradio,

title = {Gradio: Hassle-Free Sharing and Testing of ML Models in the Wild},

author = {Abid, Abubakar and Abdalla, Ali and Abid, Ali and Khan, Dawood and Alfozan, Abdulrahman and Zou, James},

journal = {arXiv preprint arXiv:1906.02569},

year = {2019},

}

```

|

gradio-app/gradio/blob/main/readme_files/zh-cn/README.md

|

More on Examples

In the [previous Guide](/main/guides/the-interface-class), we discussed how to provide example inputs for your demo to make it easier for users to try it out. Here, we dive into more details.

## Providing Examples

As covered in the [Key Features](/guides/key-features/#example-inputs) guide, adding examples to an Interface is as easy as providing a list of lists to the `examples` keyword argument.

Each sublist is a data sample, where each element corresponds to an input of the prediction function.

The inputs must be ordered in the same order as the prediction function expects them.

If your interface only has one input component, then you can provide your examples as a regular list instead of a list of lists.
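As a minimal sketch of the layout described above, assume a hypothetical three-argument `calculator` function (the function body and argument names are illustrative, not taken from this guide). Each inner list holds one value per function parameter, in the order the function expects them:

```python
def calculator(num1, operation, num2):
    """Hypothetical prediction function with three inputs."""
    if operation == "add":
        return num1 + num2
    if operation == "subtract":
        return num1 - num2
    if operation == "multiply":
        return num1 * num2
    if operation == "divide":
        return num1 / num2
    raise ValueError(f"Unknown operation: {operation}")

# One sublist per data sample; elements follow the parameter order.
examples = [
    [5, "add", 3],
    [4, "divide", 2],
    [5, "multiply", 3],
]

# Each sample unpacks directly into the function's arguments.
results = [calculator(*sample) for sample in examples]
print(results)
```

The same list would then be passed as `examples=examples` when constructing the `Interface`.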

### Loading Examples from a Directory

You can also specify a path to a directory containing your examples. If your Interface takes only a single file-type input, e.g. an image classifier, you can simply pass a directory filepath to the `examples=` argument, and the `Interface` will load the images in the directory as examples.

In the case of multiple inputs, this directory must contain a `log.csv` file with the example values.

In the context of the calculator demo, we can set `examples='/demo/calculator/examples'` and in that directory we include the following `log.csv` file:

```csv

num,operation,num2

5,"add",3

4,"divide",2

5,"multiply",3

```

This can be helpful when browsing flagged data. Simply point to the flagged directory and the `Interface` will load the examples from the flagged data.
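If you prefer to generate such a file programmatically, the following sketch writes an equivalent `log.csv` with Python's standard `csv` module. The temporary directory is a stand-in for a real examples folder, and the writer leaves plain strings unquoted, which is equivalent CSV to the quoted values shown above:

```python
import csv
import tempfile
from pathlib import Path

# Hypothetical examples directory; a temporary folder stands in for a real path.
examples_dir = Path(tempfile.mkdtemp()) / "examples"
examples_dir.mkdir(parents=True)

rows = [
    ["num", "operation", "num2"],  # header: one column per input component
    [5, "add", 3],
    [4, "divide", 2],
    [5, "multiply", 3],
]

log_path = examples_dir / "log.csv"
with log_path.open("w", newline="") as f:
    csv.writer(f).writerows(rows)

# Read the file back to confirm each row holds one value per input.
with log_path.open(newline="") as f:
    loaded = list(csv.reader(f))
print(loaded)
```

The directory path (here `examples_dir`) is what you would pass to the `examples=` argument.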

### Providing Partial Examples

Sometimes your app has many input components, but you would only like to provide examples for a subset of them. In order to exclude some inputs from the examples, pass `None` for all data samples corresponding to those particular components.
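A small sketch of what such an examples list could look like, assuming a hypothetical app with three inputs (an image path, a caption, and a threshold) where only the first two should receive example values. The file names and captions are made up for illustration:

```python
# Hypothetical app with three inputs: image path, caption, threshold.
# The third input is excluded by passing None in every data sample.
examples = [
    ["cat.jpg", "A cat on a sofa", None],
    ["dog.jpg", "A dog in a park", None],
]

# Sanity check: a component counts as excluded only if *every* sample has None there.
num_inputs = len(examples[0])
excluded = [
    i for i in range(num_inputs)
    if all(sample[i] is None for sample in examples)
]
print(excluded)
```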

## Caching examples

You may wish to provide some cached examples of your model for users to quickly try out, in case your model takes a while to run normally.

If `cache_examples=True`, the `Interface` will run all of your examples through your app and save the outputs when you call the `launch()` method. This data will be saved in a directory called `gradio_cached_examples` in your working directory by default. You can also set this directory with the `GRADIO_EXAMPLES_CACHE` environment variable, which can be either an absolute path or a relative path to your working directory.

Whenever a user clicks on an example, the output will automatically be populated in the app now, using data from this cached directory instead of actually running the function. This is useful so users can quickly try out your model without adding any load!

Keep in mind once the cache is generated, it will not be updated in future launches. If the examples or function logic change, delete the cache folder to clear the cache and rebuild it with another `launch()`.
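The sketch below shows one way to manage the cache location described above using only the standard library. The folder name `my_examples_cache` and the helper `clear_examples_cache` are hypothetical; only the `GRADIO_EXAMPLES_CACHE` variable comes from this guide, and the stale file is simulated rather than produced by Gradio:

```python
import os
import shutil
import tempfile
from pathlib import Path

# Hypothetical cache location; set it before the app is created and launched.
cache_dir = Path(tempfile.mkdtemp()) / "my_examples_cache"
os.environ["GRADIO_EXAMPLES_CACHE"] = str(cache_dir)

def clear_examples_cache(path):
    """Delete the cache folder so the next launch() rebuilds it."""
    path = Path(path)
    if path.exists():
        shutil.rmtree(path)
        return True
    return False

# Simulate a stale cache left over from an earlier launch.
cache_dir.mkdir(parents=True)
(cache_dir / "log.csv").write_text("stale output\n")

removed = clear_examples_cache(os.environ["GRADIO_EXAMPLES_CACHE"])
print(removed, cache_dir.exists())
```

Running a helper like this after changing the examples or the function logic ensures the next launch regenerates the cached outputs.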

|

gradio-app/gradio/blob/main/guides/02_building-interfaces/01_more-on-examples.md

|

Backend Testing Guidelines

- All the tests should test Backend functionalities. Frontend functionalities and e2e tests are done in Frontend.

- Use pytest fixtures whenever possible. With fixtures, objects that are slow to initialize (e.g. a client session) are reused across tests.

- All test data resides within `gradio/test_data`, and all test files reside within `test/test_files`.

- When doing network operations do not forget to make use of async to make tests faster.

- Have clear class and function naming within the tests.

- Short descriptions within test functions are great.

- Library function docstrings are expected to contain an example. Please add missing docstrings to the library while you are writing tests for the related function.

- Library docstring examples and descriptions are expected to align with tests, please fix divergent tests and library docstrings.
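To illustrate the guideline about async network operations, here is a minimal sketch using only `asyncio`. The `fake_request` coroutine is a hypothetical stand-in for a real network call and simply sleeps; it is not part of the Gradio test suite:

```python
import asyncio
import time

async def fake_request(delay):
    """Stand-in for a network call; sleeps instead of contacting a server."""
    await asyncio.sleep(delay)
    return delay

async def run_concurrently(delays):
    # gather() awaits all coroutines together, so the total time is roughly
    # the longest single delay rather than the sum of all delays.
    return await asyncio.gather(*(fake_request(d) for d in delays))

start = time.perf_counter()
results = asyncio.run(run_concurrently([0.1, 0.1, 0.1]))
elapsed = time.perf_counter() - start
print(results, f"{elapsed:.2f}s")
```

Three sequential calls would take about the sum of the delays, whereas the concurrent version finishes in roughly the time of one.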

|

gradio-app/gradio/blob/main/test/README.md

|

Loading methods

Methods for listing and loading evaluation modules:

## List

[[autodoc]] evaluate.list_evaluation_modules

## Load

[[autodoc]] evaluate.load

|

huggingface/evaluate/blob/main/docs/source/package_reference/loading_methods.mdx

|

Gradio and ONNX on Hugging Face

Related spaces: https://huggingface.co/spaces/onnx/EfficientNet-Lite4

Tags: ONNX, SPACES

Contributed by Gradio and the <a href="https://onnx.ai/">ONNX</a> team

## Introduction

In this Guide, we'll walk you through:

- Introduction of ONNX, ONNX model zoo, Gradio, and Hugging Face Spaces

- How to set up a Gradio demo for EfficientNet-Lite4

- How to contribute your own Gradio demos for the ONNX organization on Hugging Face

Here's an [example](https://onnx-efficientnet-lite4.hf.space/) of an ONNX model.

## What is the ONNX Model Zoo?

Open Neural Network Exchange ([ONNX](https://onnx.ai/)) is an open standard format for representing machine learning models. ONNX is supported by a community of partners who have implemented it in many frameworks and tools. For example, if you have trained a model in TensorFlow or PyTorch, you can convert it to ONNX easily, and from there run it on a variety of devices using an engine/compiler like ONNX Runtime.

The [ONNX Model Zoo](https://github.com/onnx/models) is a collection of pre-trained, state-of-the-art models in the ONNX format contributed by community members. Accompanying each model are Jupyter notebooks for model training and running inference with the trained model. The notebooks are written in Python and include links to the training dataset as well as references to the original paper that describes the model architecture.

## What are Hugging Face Spaces & Gradio?

### Gradio

Gradio lets users demo their machine learning models as a web app all in python code. Gradio wraps a python function into a user interface and the demos can be launched inside jupyter notebooks, colab notebooks, as well as embedded in your own website and hosted on Hugging Face Spaces for free.

Get started [here](https://gradio.app/getting_started)

### Hugging Face Spaces

Hugging Face Spaces is a free hosting option for Gradio demos. Spaces comes with 3 SDK options: Gradio, Streamlit, and Static HTML demos. Spaces can be public or private, and the workflow is similar to GitHub repos. There are currently over 2,000 Spaces on Hugging Face. Learn more about Spaces [here](https://huggingface.co/spaces/launch).

### Hugging Face Models

Hugging Face Model Hub also supports ONNX models and ONNX models can be filtered through the [ONNX tag](https://huggingface.co/models?library=onnx&sort=downloads)

## How did Hugging Face help the ONNX Model Zoo?

There are a lot of Jupyter notebooks in the ONNX Model Zoo for users to test models. Previously, users needed to download the models themselves and run those notebooks locally for testing. With Hugging Face, the testing process can be much simpler and more user-friendly. Users can try certain ONNX Model Zoo models on Hugging Face Spaces and run a quick demo powered by Gradio with ONNX Runtime, all in the cloud without downloading anything locally. Note that there are various runtimes for ONNX, e.g., [ONNX Runtime](https://github.com/microsoft/onnxruntime) and [MXNet](https://github.com/apache/incubator-mxnet).

## What is the role of ONNX Runtime?

ONNX Runtime is a cross-platform inference and training machine-learning accelerator. It makes live Gradio demos with ONNX Model Zoo models on Hugging Face possible.

ONNX Runtime inference can enable faster customer experiences and lower costs, supporting models from deep learning frameworks such as PyTorch and TensorFlow/Keras as well as classical machine learning libraries such as scikit-learn, LightGBM, XGBoost, etc. ONNX Runtime is compatible with different hardware, drivers, and operating systems, and provides optimal performance by leveraging hardware accelerators where applicable alongside graph optimizations and transforms. For more information please see the [official website](https://onnxruntime.ai/).

## Setting up a Gradio Demo for EfficientNet-Lite4

EfficientNet-Lite 4 is the largest variant and most accurate of the set of EfficientNet-Lite models. It is an integer-only quantized model that produces the highest accuracy of all of the EfficientNet models. It achieves 80.4% ImageNet top-1 accuracy, while still running in real-time (e.g. 30ms/image) on a Pixel 4 CPU. To learn more read the [model card](https://github.com/onnx/models/tree/main/vision/classification/efficientnet-lite4)

Here we walk through setting up an example demo for EfficientNet-Lite4 using Gradio.

First, we import our dependencies, then download and load the efficientnet-lite4 model from the ONNX Model Zoo. Next, we load the labels from the `labels_map.txt` file. We then set up our preprocessing functions, load the model for inference, and set up the inference function. Finally, the inference function is wrapped into a Gradio interface for a user to interact with. See the full code below.

```python
import numpy as np
import math
import matplotlib.pyplot as plt
import cv2
import json
import gradio as gr
from huggingface_hub import hf_hub_download
from onnx import hub
import onnxruntime as ort

# loads ONNX model from ONNX Model Zoo
model = hub.load("efficientnet-lite4")

# loads the labels text file
with open("labels_map.txt", "r") as f:
    labels = json.load(f)

# sets image file dimensions to 224x224 by resizing and cropping image from center
def pre_process_edgetpu(img, dims):
    output_height, output_width, _ = dims
    img = resize_with_aspectratio(img, output_height, output_width, inter_pol=cv2.INTER_LINEAR)
    img = center_crop(img, output_height, output_width)
    img = np.asarray(img, dtype='float32')
    # converts jpg pixel value from [0 - 255] to float array [-1.0 - 1.0]
    img -= [127.0, 127.0, 127.0]
    img /= [128.0, 128.0, 128.0]
    return img

# resizes the image with a proportional scale
def resize_with_aspectratio(img, out_height, out_width, scale=87.5, inter_pol=cv2.INTER_LINEAR):
    height, width, _ = img.shape
    new_height = int(100. * out_height / scale)
    new_width = int(100. * out_width / scale)
    if height > width:
        w = new_width
        h = int(new_height * height / width)
    else:
        h = new_height
        w = int(new_width * width / height)
    img = cv2.resize(img, (w, h), interpolation=inter_pol)
    return img

# crops the image around the center based on given height and width
def center_crop(img, out_height, out_width):
    height, width, _ = img.shape
    left = int((width - out_width) / 2)
    right = int((width + out_width) / 2)
    top = int((height - out_height) / 2)
    bottom = int((height + out_height) / 2)
    img = img[top:bottom, left:right]
    return img

# InferenceSession expects a file path or serialized bytes, so the loaded
# ModelProto is serialized before being passed in
sess = ort.InferenceSession(model.SerializeToString())

def inference(img):
    # img is a file path because the input component uses type="filepath"
    img = cv2.imread(img)
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    img = pre_process_edgetpu(img, (224, 224, 3))
    img_batch = np.expand_dims(img, axis=0)
    results = sess.run(["Softmax:0"], {"images:0": img_batch})[0]
    # indices of the five highest-scoring classes, best first
    result = reversed(results[0].argsort()[-5:])
    resultdic = {}
    for r in result:
        resultdic[labels[str(r)]] = float(results[0][r])
    return resultdic

title = "EfficientNet-Lite4"
description = "EfficientNet-Lite 4 is the largest variant and most accurate of the set of EfficientNet-Lite model. It is an integer-only quantized model that produces the highest accuracy of all of the EfficientNet models. It achieves 80.4% ImageNet top-1 accuracy, while still running in real-time (e.g. 30ms/image) on a Pixel 4 CPU."
examples = [['catonnx.jpg']]

gr.Interface(inference, gr.Image(type="filepath"), "label", title=title, description=description, examples=examples).launch()
```

## How to contribute Gradio demos on HF spaces using ONNX models

- Add model to the [onnx model zoo](https://github.com/onnx/models/blob/main/.github/PULL_REQUEST_TEMPLATE.md)

- Create an account on Hugging Face [here](https://huggingface.co/join).

- To see which models still need to be added to the ONNX organization, refer to the table in the [Models list](https://github.com/onnx/models#models)

- Add Gradio Demo under your username, see this [blog post](https://huggingface.co/blog/gradio-spaces) for setting up Gradio Demo on Hugging Face.

- Request to join ONNX Organization [here](https://huggingface.co/onnx).

- Once approved, transfer the model from your username to the ONNX organization

- Add a badge for model in model table, see examples in [Models list](https://github.com/onnx/models#models)

|

gradio-app/gradio/blob/main/guides/06_integrating-other-frameworks/Gradio-and-ONNX-on-Hugging-Face.md

|

<!--Copyright 2022 The HuggingFace Team and Microsoft. All rights reserved.

Licensed under the MIT License; you may not use this file except in compliance with

the License.

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

⚠️ Note that this file is in Markdown but contain specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# Graphormer

## Overview

The Graphormer model was proposed in [Do Transformers Really Perform Bad for Graph Representation?](https://arxiv.org/abs/2106.05234) by Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng, Guolin Ke, Di He, Yanming Shen and Tie-Yan Liu. It is a Graph Transformer model, modified to allow computations on graphs instead of text sequences by generating embeddings and features of interest during preprocessing and collation, then using a modified attention.

The abstract from the paper is the following:

*The Transformer architecture has become a dominant choice in many domains, such as natural language processing and computer vision. Yet, it has not achieved competitive performance on popular leaderboards of graph-level prediction compared to mainstream GNN variants. Therefore, it remains a mystery how Transformers could perform well for graph representation learning. In this paper, we solve this mystery by presenting Graphormer, which is built upon the standard Transformer architecture, and could attain excellent results on a broad range of graph representation learning tasks, especially on the recent OGB Large-Scale Challenge. Our key insight to utilizing Transformer in the graph is the necessity of effectively encoding the structural information of a graph into the model. To this end, we propose several simple yet effective structural encoding methods to help Graphormer better model graph-structured data. Besides, we mathematically characterize the expressive power of Graphormer and exhibit that with our ways of encoding the structural information of graphs, many popular GNN variants could be covered as the special cases of Graphormer.*

This model was contributed by [clefourrier](https://huggingface.co/clefourrier). The original code can be found [here](https://github.com/microsoft/Graphormer).

## Usage tips

This model will not work well on large graphs (more than 100 nodes/edges), as it will make the memory explode.

You can reduce the batch size, increase your RAM, or decrease the `UNREACHABLE_NODE_DISTANCE` parameter in algos_graphormer.pyx, but it will be hard to go above 700 nodes/edges.

This model does not use a tokenizer, but instead a special collator during training.

## GraphormerConfig

[[autodoc]] GraphormerConfig

## GraphormerModel

[[autodoc]] GraphormerModel

- forward

## GraphormerForGraphClassification

[[autodoc]] GraphormerForGraphClassification

- forward

|

huggingface/transformers/blob/main/docs/source/en/model_doc/graphormer.md

|

Introduction [[introduction]]

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit6/thumbnail.png" alt="thumbnail"/>

In the last unit, we learned about Deep Q-Learning. In this value-based deep reinforcement learning algorithm, we **used a deep neural network to approximate the different Q-values for each possible action at a state.**

Since the beginning of the course, we have only studied value-based methods, **where we estimate a value function as an intermediate step towards finding an optimal policy.**

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit3/link-value-policy.jpg" alt="Link value policy" />

In value-based methods, the policy \(π\) **only exists because of the action value estimates, since the policy is just a function** (for instance, a greedy policy) that selects the action with the highest value given a state.
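To make this concrete, here is a minimal sketch of a greedy policy derived from action-value estimates. The states, actions, and Q-values are hypothetical numbers chosen for illustration, not values from the course:

```python
# Hypothetical Q-value estimates: q_values[state][action]
q_values = {
    "s0": {"left": 0.2, "right": 0.7},
    "s1": {"left": 0.9, "right": 0.1},
}

def greedy_policy(state):
    """Select the action with the highest estimated value in this state."""
    action_values = q_values[state]
    return max(action_values, key=action_values.get)

print(greedy_policy("s0"), greedy_policy("s1"))  # right left
```

Notice that the policy has no parameters of its own: change the Q-value estimates and the policy changes with them.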

With policy-based methods, we want to optimize the policy directly **without having an intermediate step of learning a value function.**

So today, **we'll learn about policy-based methods and study a subset of these methods called policy gradient**. Then we'll implement our first policy gradient algorithm called Monte Carlo **Reinforce** from scratch using PyTorch.

Then, we'll test its robustness using the CartPole-v1 and PixelCopter environments.

You'll then be able to iterate and improve this implementation for more advanced environments.

<figure class="image table text-center m-0 w-full">

<img src="https://huggingface.co/datasets/huggingface-deep-rl-course/course-images/resolve/main/en/unit6/envs.gif" alt="Environments"/>

</figure>

Let's get started!

|

huggingface/deep-rl-class/blob/main/units/en/unit4/introduction.mdx

|

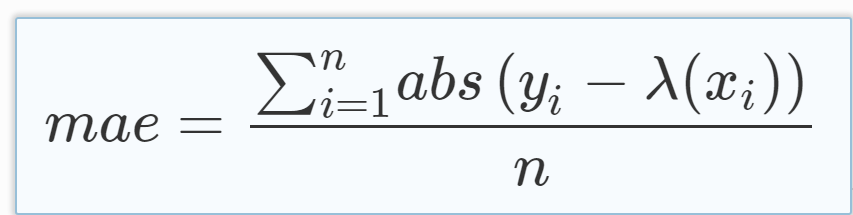

Metric Card for MAE

## Metric Description

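Mean Absolute Error (MAE) is the mean of the magnitude of the difference between the predicted and actual numeric values:

$$\text{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| y_i - \hat{y}_i \right|$$

where \\(n\\) is the number of samples, \\(y_i\\) is the actual value, and \\(\hat{y}_i\\) is the predicted value for sample \\(i\\).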
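The definition can be sketched in plain Python as follows. This is an illustrative reimplementation for one-dimensional inputs, not the code the metric itself runs; the function name is an assumption:

```python
def mean_absolute_error(predictions, references):
    """Mean of |prediction - reference| over all samples."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    total = sum(abs(p - r) for p, r in zip(predictions, references))
    return total / len(predictions)

# Absolute errors are 0.5, 0.5, 0 and 1, so the mean is 2 / 4
print(mean_absolute_error([2.5, 0.0, 2, 8], [3, -0.5, 2, 7]))  # 0.5
```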

## How to Use

At minimum, this metric requires predictions and references as inputs.

```python
>>> import datasets
>>> mae_metric = datasets.load_metric("mae")
>>> predictions = [2.5, 0.0, 2, 8]
>>> references = [3, -0.5, 2, 7]
>>> results = mae_metric.compute(predictions=predictions, references=references)
```

### Inputs

Mandatory inputs:

- `predictions`: numeric array-like of shape (`n_samples,`) or (`n_samples`, `n_outputs`), representing the estimated target values.

- `references`: numeric array-like of shape (`n_samples,`) or (`n_samples`, `n_outputs`), representing the ground truth (correct) target values.

Optional arguments:

- `sample_weight`: numeric array-like of shape (`n_samples,`) representing sample weights. The default is `None`.

- `multioutput`: `raw_values`, `uniform_average` or numeric array-like of shape (`n_outputs,`), which defines the aggregation of multiple output values. The default value is `uniform_average`.

- `raw_values` returns a full set of errors in case of multioutput input.

- `uniform_average` means that the errors of all outputs are averaged with uniform weight.

- the array-like value defines weights used to average errors.
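The three `multioutput` behaviours can be sketched in plain Python. This is an illustrative reimplementation under the assumption that weighted averaging normalizes by the sum of the weights; the function name `mae_multioutput` is hypothetical and `sample_weight` is left out for brevity:

```python
def mae_multioutput(predictions, references, multioutput="uniform_average"):
    """MAE for 2-D inputs of shape (n_samples, n_outputs)."""
    n_samples = len(predictions)
    n_outputs = len(predictions[0])
    # Per-output MAE: average the absolute errors down each column.
    per_output = [
        sum(abs(predictions[i][j] - references[i][j]) for i in range(n_samples)) / n_samples
        for j in range(n_outputs)
    ]
    if multioutput == "raw_values":
        return per_output
    if multioutput == "uniform_average":
        return sum(per_output) / n_outputs
    # Otherwise treat multioutput as a sequence of per-output weights.
    weights = list(multioutput)
    return sum(w * e for w, e in zip(weights, per_output)) / sum(weights)

predictions = [[0.5, 1], [-1, 1], [7, -6]]
references = [[0, 2], [-1, 2], [8, -5]]
print(mae_multioutput(predictions, references, "raw_values"))  # [0.5, 1.0]
print(mae_multioutput(predictions, references))                # 0.75
```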

### Output Values

This metric outputs a dictionary, containing the mean absolute error score, which is of type:

- `float`: if multioutput is `uniform_average` or an ndarray of weights, then the weighted average of all output errors is returned.

- numeric array-like of shape (`n_outputs,`): if multioutput is `raw_values`, then the score is returned for each output separately.

Each MAE `float` value is non-negative: it ranges from `0.0` upward with no upper bound, and the best value is `0.0`.

Output Example(s):

```python

{'mae': 0.5}

```

If `multioutput="raw_values"`:

```python

{'mae': array([0.5, 1. ])}

```

#### Values from Popular Papers

### Examples

Example with the `uniform_average` config:

```python

>>> from datasets import load_metric

>>> mae_metric = load_metric("mae")

>>> predictions = [2.5, 0.0, 2, 8]

>>> references = [3, -0.5, 2, 7]

>>> results = mae_metric.compute(predictions=predictions, references=references)

>>> print(results)

{'mae': 0.5}

```

Example with multi-dimensional lists, and the `raw_values` config:

```python
>>> from datasets import load_metric
>>> mae_metric = load_metric("mae", "multilist")
>>> predictions = [[0.5, 1], [-1, 1], [7, -6]]
>>> references = [[0, 2], [-1, 2], [8, -5]]
>>> results = mae_metric.compute(predictions=predictions, references=references)
>>> print(results)
{'mae': 0.75}
>>> results = mae_metric.compute(predictions=predictions, references=references, multioutput='raw_values')
>>> print(results)
{'mae': array([0.5, 1. ])}
```

## Limitations and Bias

One limitation of MAE is that the relative size of the error is not always obvious, meaning that it can be difficult to tell a big error from a smaller one -- metrics such as Mean Absolute Percentage Error (MAPE) have been proposed to calculate MAE in percentage terms.

Also, since it calculates the mean, MAE may underestimate the impact of big, but infrequent, errors -- metrics such as the Root Mean Square Error (RMSE) compensate for this by squaring the errors before averaging and then taking the square root, which gives large errors more weight.
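A small worked example makes the difference visible. The two error lists below are made-up numbers chosen so that both have the same MAE while only one contains a large, infrequent error:

```python
import math

def mae(errors):
    return sum(abs(e) for e in errors) / len(errors)

def rmse(errors):
    return math.sqrt(sum(e * e for e in errors) / len(errors))

steady = [1, 1, 1, 1]  # four small errors
spiky = [0, 0, 0, 4]   # one large, infrequent error

print(mae(steady), mae(spiky))    # 1.0 1.0
print(rmse(steady), rmse(spiky))  # 1.0 2.0
```

MAE cannot tell the two cases apart, whereas RMSE doubles for the list with the single large error.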

## Citation(s)

```bibtex

@article{scikit-learn,

title={Scikit-learn: Machine Learning in {P}ython},

author={Pedregosa, F. and Varoquaux, G. and Gramfort, A. and Michel, V.

and Thirion, B. and Grisel, O. and Blondel, M. and Prettenhofer, P.

and Weiss, R. and Dubourg, V. and Vanderplas, J. and Passos, A. and

Cournapeau, D. and Brucher, M. and Perrot, M. and Duchesnay, E.},

journal={Journal of Machine Learning Research},

volume={12},

pages={2825--2830},

year={2011}

}

```

```bibtex

@article{willmott2005advantages,

title={Advantages of the mean absolute error (MAE) over the root mean square error (RMSE) in assessing average model performance},

author={Willmott, Cort J and Matsuura, Kenji},

journal={Climate research},

volume={30},

number={1},

pages={79--82},

year={2005}

}

```

## Further References

- [Mean Absolute Error - Wikipedia](https://en.wikipedia.org/wiki/Mean_absolute_error)

|

huggingface/datasets/blob/main/metrics/mae/README.md

|

<!--Copyright 2023 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

# Unconditional image generation

Unconditional image generation models are not conditioned on text or images during training. They only generate images that resemble their training data distribution.

This guide will explore the [train_unconditional.py](https://github.com/huggingface/diffusers/blob/main/examples/unconditional_image_generation/train_unconditional.py) training script to help you become familiar with it, and how you can adapt it for your own use-case.

Before running the script, make sure you install the library from source:

```bash

git clone https://github.com/huggingface/diffusers

cd diffusers

pip install .

```

Then navigate to the example folder containing the training script and install the required dependencies:

```bash

cd examples/unconditional_image_generation

pip install -r requirements.txt

```

<Tip>

🤗 Accelerate is a library for helping you train on multiple GPUs/TPUs or with mixed-precision. It'll automatically configure your training setup based on your hardware and environment. Take a look at the 🤗 Accelerate [Quick tour](https://huggingface.co/docs/accelerate/quicktour) to learn more.

</Tip>

Initialize an 🤗 Accelerate environment:

```bash

accelerate config

```

To set up a default 🤗 Accelerate environment without choosing any configurations:

```bash

accelerate config default

```

Or, if your environment doesn't support an interactive shell (a notebook, for example), you can use:

```py
from accelerate.utils import write_basic_config

write_basic_config()
```

Lastly, if you want to train a model on your own dataset, take a look at the [Create a dataset for training](create_dataset) guide to learn how to create a dataset that works with the training script.

## Script parameters

<Tip>

The following sections highlight parts of the training script that are important for understanding how to modify it, but it doesn't cover every aspect of the script in detail. If you're interested in learning more, feel free to read through the [script](https://github.com/huggingface/diffusers/blob/main/examples/unconditional_image_generation/train_unconditional.py) and let us know if you have any questions or concerns.

</Tip>

The training script provides many parameters to help you customize your training run. All of the parameters and their descriptions are found in the [`parse_args()`](https://github.com/huggingface/diffusers/blob/096f84b05f9514fae9f185cbec0a4d38fbad9919/examples/unconditional_image_generation/train_unconditional.py#L55) function. It provides default values for each parameter, such as the training batch size and learning rate, but you can also set your own values in the training command if you'd like.

For example, to speed up training with mixed precision using the bf16 format, add the `--mixed_precision` parameter to the training command:

```bash

accelerate launch train_unconditional.py \

--mixed_precision="bf16"

```

Some basic and important parameters to specify include:

- `--dataset_name`: the name of the dataset on the Hub or a local path to the dataset to train on

- `--output_dir`: where to save the trained model

- `--push_to_hub`: whether to push the trained model to the Hub

- `--checkpointing_steps`: how often to save a checkpoint as the model trains; if training is interrupted, you can resume from that checkpoint by adding `--resume_from_checkpoint` to your training command

Bring your dataset, and let the training script handle everything else!
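
To make the checkpoint-and-resume idea more concrete, the sketch below picks the most recent checkpoint from an output directory. It assumes checkpoints are saved in folders named `checkpoint-<step>`; that naming scheme and the helper `find_latest_checkpoint` are assumptions for illustration, not something this guide confirms.

```python
import os
import tempfile


def find_latest_checkpoint(output_dir):
    """Return the name of the checkpoint folder with the highest step, or None."""
    candidates = [
        d for d in os.listdir(output_dir)
        if d.startswith("checkpoint-") and d.split("-")[1].isdigit()
    ]
    if not candidates:
        return None
    # Compare step numbers as integers so that 1500 sorts after 500.
    return max(candidates, key=lambda d: int(d.split("-")[1]))


# Demo on a throwaway directory with three fake checkpoint folders.
with tempfile.TemporaryDirectory() as tmp:
    for step in (500, 1000, 1500):
        os.mkdir(os.path.join(tmp, f"checkpoint-{step}"))
    latest = find_latest_checkpoint(tmp)
    print(latest)
```

Sorting by the integer step rather than by name matters here, since a plain string sort would rank `checkpoint-500` above `checkpoint-1500`.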

## Training script

The code for preprocessing the dataset and the training loop is found in the [`main()`](https://github.com/huggingface/diffusers/blob/096f84b05f9514fae9f185cbec0a4d38fbad9919/examples/unconditional_image_generation/train_unconditional.py#L275) function. If you need to adapt the training script, this is where you'll need to make your changes.

The `train_unconditional` script [initializes a `UNet2DModel`](https://github.com/huggingface/diffusers/blob/096f84b05f9514fae9f185cbec0a4d38fbad9919/examples/unconditional_image_generation/train_unconditional.py#L356) if you don't provide a model configuration. You can configure the UNet here if you'd like:

```py
model = UNet2DModel(
    sample_size=args.resolution,
    in_channels=3,
    out_channels=3,
    layers_per_block=2,
    block_out_channels=(128, 128, 256, 256, 512, 512),
    down_block_types=(
        "DownBlock2D",
        "DownBlock2D",
        "DownBlock2D",
        "DownBlock2D",
        "AttnDownBlock2D",
        "DownBlock2D",
    ),
    up_block_types=(
        "UpBlock2D",
        "AttnUpBlock2D",
        "UpBlock2D",
        "UpBlock2D",
        "UpBlock2D",
        "UpBlock2D",
    ),
)
```

Next, the script initializes a [scheduler](https://github.com/huggingface/diffusers/blob/096f84b05f9514fae9f185cbec0a4d38fbad9919/examples/unconditional_image_generation/train_unconditional.py#L418) and [optimizer](https://github.com/huggingface/diffusers/blob/096f84b05f9514fae9f185cbec0a4d38fbad9919/examples/unconditional_image_generation/train_unconditional.py#L429):

```py
# Initialize the scheduler
accepts_prediction_type = "prediction_type" in set(inspect.signature(DDPMScheduler.__init__).parameters.keys())
if accepts_prediction_type:
    noise_scheduler = DDPMScheduler(
        num_train_timesteps=args.ddpm_num_steps,
        beta_schedule=args.ddpm_beta_schedule,
        prediction_type=args.prediction_type,
    )
else:
    noise_scheduler = DDPMScheduler(num_train_timesteps=args.ddpm_num_steps, beta_schedule=args.ddpm_beta_schedule)

# Initialize the optimizer
optimizer = torch.optim.AdamW(
    model.parameters(),
    lr=args.learning_rate,
    betas=(args.adam_beta1, args.adam_beta2),
    weight_decay=args.adam_weight_decay,
    eps=args.adam_epsilon,
)
```

Then it [loads a dataset](https://github.com/huggingface/diffusers/blob/096f84b05f9514fae9f185cbec0a4d38fbad9919/examples/unconditional_image_generation/train_unconditional.py#L451) and you can specify how to [preprocess](https://github.com/huggingface/diffusers/blob/096f84b05f9514fae9f185cbec0a4d38fbad9919/examples/unconditional_image_generation/train_unconditional.py#L455) it:

```py
dataset = load_dataset("imagefolder", data_dir=args.train_data_dir, cache_dir=args.cache_dir, split="train")

augmentations = transforms.Compose(
    [
        transforms.Resize(args.resolution, interpolation=transforms.InterpolationMode.BILINEAR),
        transforms.CenterCrop(args.resolution) if args.center_crop else transforms.RandomCrop(args.resolution),
        transforms.RandomHorizontalFlip() if args.random_flip else transforms.Lambda(lambda x: x),
        transforms.ToTensor(),
        transforms.Normalize([0.5], [0.5]),
    ]
)
```
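
The last preprocessing step, `Normalize([0.5], [0.5])`, is simple arithmetic: each value has the mean subtracted and is divided by the standard deviation. The plain-Python sketch below reproduces that arithmetic on a few sample values to show that inputs in the range [0, 1] end up in [-1, 1]. The helper name `normalize` is made up for illustration.

```python
def normalize(value, mean=0.5, std=0.5):
    """Same arithmetic as a per-channel normalization: (value - mean) / std."""
    return (value - mean) / std


pixels = [0.0, 0.25, 0.5, 1.0]  # example values in [0, 1]
scaled = [normalize(p) for p in pixels]
print(scaled)
```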

Finally, the [training loop](https://github.com/huggingface/diffusers/blob/096f84b05f9514fae9f185cbec0a4d38fbad9919/examples/unconditional_image_generation/train_unconditional.py#L540) handles everything else such as adding noise to the images, predicting the noise residual, calculating the loss, saving checkpoints at specified steps, and saving and pushing the model to the Hub. If you want to learn more about how the training loop works, check out the [Understanding pipelines, models and schedulers](../using-diffusers/write_own_pipeline) tutorial which breaks down the basic pattern of the denoising process.
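
The first steps of that loop (add noise, predict it, compute the loss) can be sketched without any deep learning library. The snippet below uses plain Python lists in place of tensors, a linear beta schedule, and a stand-in `predict_noise` function instead of a real UNet. It assumes a noise-prediction (epsilon) objective. All names and values here are illustrative assumptions, not the script's actual code.

```python
import math
import random

num_train_timesteps = 1000
# Linear beta schedule (endpoint values chosen for illustration).
betas = [1e-4 + (0.02 - 1e-4) * t / (num_train_timesteps - 1) for t in range(num_train_timesteps)]

# Cumulative product of (1 - beta_t), often written alpha_bar_t.
alphas_cumprod = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alphas_cumprod.append(running)


def add_noise(clean, noise, t):
    """Forward diffusion: x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise."""
    a = math.sqrt(alphas_cumprod[t])
    b = math.sqrt(1.0 - alphas_cumprod[t])
    return [a * x + b * n for x, n in zip(clean, noise)]


def predict_noise(noisy, t):
    # Stand-in for the model: a real UNet would be called here.
    return [0.0 for _ in noisy]


def mse(pred, target):
    """Mean squared error between two equally sized lists."""
    return sum((p - q) ** 2 for p, q in zip(pred, target)) / len(pred)


random.seed(0)
clean_pixels = [random.uniform(-1.0, 1.0) for _ in range(8)]  # "image" scaled to [-1, 1]
noise = [random.gauss(0.0, 1.0) for _ in clean_pixels]
t = random.randrange(num_train_timesteps)  # random timestep, as in training

noisy_pixels = add_noise(clean_pixels, noise, t)
loss = mse(predict_noise(noisy_pixels, t), noise)  # compare predicted noise to the true noise
print(f"timestep={t}, loss={loss:.4f}")
```

In a real run the loss would be backpropagated through the model and followed by an optimizer step; the sketch stops at the loss because the stand-in model has no parameters to update.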

## Launch the script

Once you've made all your changes or you're okay with the default configuration, you're ready to launch the training script! 🚀

<Tip warning={true}>

A full training run takes 2 hours on 4xV100 GPUs.

</Tip>

<hfoptions id="launchtraining">

<hfoption id="single GPU">

```bash
accelerate launch train_unconditional.py \
  --dataset_name="huggan/flowers-102-categories" \
  --output_dir="ddpm-ema-flowers-64" \
  --mixed_precision="fp16" \
  --push_to_hub
```

</hfoption>

<hfoption id="multi-GPU">

If you're training with more than one GPU, add the `--multi_gpu` parameter to the training command:

```bash
accelerate launch --multi_gpu train_unconditional.py \
  --dataset_name="huggan/flowers-102-categories" \
  --output_dir="ddpm-ema-flowers-64" \
  --mixed_precision="fp16" \
  --push_to_hub
```

</hfoption>

</hfoptions>

The training script creates and saves a checkpoint file in your repository. Now you can load and use your trained model for inference:

```py
from diffusers import DiffusionPipeline
import torch

pipeline = DiffusionPipeline.from_pretrained("anton-l/ddpm-butterflies-128").to("cuda")
image = pipeline().images[0]
```

|

huggingface/diffusers/blob/main/docs/source/en/training/unconditional_training.md

|

# Using Gradio for Tabular Data Science Workflows

Related spaces: https://huggingface.co/spaces/scikit-learn/gradio-skops-integration,https://huggingface.co/spaces/scikit-learn/tabular-playground,https://huggingface.co/spaces/merve/gradio-analysis-dashboard

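## Introduction

Tabular data science is the most widely used domain of machine learning, with problems ranging from customer segmentation to churn prediction. At every stage of a tabular data science workflow, communicating your work to stakeholders or clients can be cumbersome, which keeps data scientists from focusing on what matters, such as data analysis and model building. A data scientist can end up spending hours building a dashboard that takes in a DataFrame and returns plots, predictions, or a plot of clusters in the dataset. In this guide, we'll go through how to use `gradio` to improve your data science workflows. We will also talk about how to use `gradio` and [skops](https://skops.readthedocs.io/en/stable/) to build interfaces with only one line of code!

### Prerequisites

Make sure you have the `gradio` Python package already [installed](/getting_started).

## Let's Create a Simple Interface!

We will take a look at how to create a simple interface that predicts failures based on product information.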

```python
import gradio as gr
import pandas as pd
import joblib
import datasets

inputs = [gr.Dataframe(row_count=(2, "dynamic"), col_count=(4, "dynamic"), label="Input Data", interactive=True)]
outputs = [gr.Dataframe(row_count=(2, "dynamic"), col_count=(1, "fixed"), label="Predictions", headers=["Failures"])]

model = joblib.load("model.pkl")

# we will give our dataframe as example
df = datasets.load_dataset("merve/supersoaker-failures")
df = df["train"].to_pandas()


def infer(input_dataframe):
    # Run the model on the submitted rows and return one prediction per row
    return pd.DataFrame(model.predict(input_dataframe))


gr.Interface(fn=infer, inputs=inputs, outputs=outputs, examples=[[df.head(2)]]).launch()
```

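Let's break down the code above.

- `fn`: the inference function that takes an input dataframe and returns predictions.

- `inputs`: we use the `Dataframe` component for input. We define the input as a dataframe with 2 rows and 4 columns, which initially renders as an empty dataframe of that shape. When `row_count` is set to `dynamic`, you don't have to rely on the dataset you're feeding in to pre-define the component.

- `outputs`: the dataframe component that stores the outputs. This interface can take a single sample or multiple samples for inference and returns 0 or 1 for each sample in one column, so we set `row_count` to 2 and `col_count` to 1. `headers` is a list of the dataframe's column names.

- `examples`: you can pass the input either by dragging and dropping a CSV file or by providing a pandas DataFrame through examples; the interface picks up its headers automatically.

We will now create an example of a minimal data visualization dashboard. You can find a more comprehensive version in the related Spaces.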

<gradio-app space="gradio/tabular-playground"></gradio-app>

```python
import gradio as gr
import pandas as pd
import datasets
import seaborn as sns
import matplotlib.pyplot as plt

df = datasets.load_dataset("merve/supersoaker-failures")
df = df["train"].to_pandas()
df.dropna(axis=0, inplace=True)


def plot(df):
    plt.scatter(df.measurement_13, df.measurement_15, c=df.loading, alpha=0.5)
    plt.savefig("scatter.png")
    df['failure'].value_counts().plot(kind='bar')
    plt.savefig("bar.png")
    sns.heatmap(df.select_dtypes(include="number").corr())
    plt.savefig("corr.png")
    plots = ["corr.png", "scatter.png", "bar.png"]
    return plots


inputs = [gr.Dataframe(label="Supersoaker Production Data")]
outputs = [gr.Gallery(label="Profiling Dashboard", columns=(1, 3))]

gr.Interface(plot, inputs=inputs, outputs=outputs, examples=[df.head(100)], title="Supersoaker Failures Analysis Dashboard").launch()
```

<gradio-app space="gradio/gradio-analysis-dashboard-minimal"></gradio-app>

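We will use the same dataset we used to train our model, but this time we will build a dashboard to visualize it.

- `fn`: the function that creates plots from the data.

- `inputs`: we use the same `Dataframe` component as above.

- `outputs`: we use the `Gallery` component to hold our visualizations.

- `examples`: we pass the dataset itself as the example.

## Easily load tabular data interfaces with one line of code using skops

`skops` is a library built on top of `huggingface_hub` and `sklearn`. With the recent `gradio` integration, you can build tabular data interfaces with one line of code!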

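```python
import gradio as gr

# title and description are optional
title = "Supersoaker Defective Product Prediction"
description = "This model predicts Supersoaker production line failures. In the dataframe component below, you can drag and drop any slice of the dataset or edit the values yourself."

gr.load("huggingface/scikit-learn/tabular-playground", title=title, description=description).launch()
```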

<gradio-app space="gradio/gradio-skops-integration"></gradio-app>

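When you push a `sklearn` model to the Hugging Face Hub with `skops`, a `config.json` file is included that contains example inputs and column names; the task type is either `tabular-classification` or `tabular-regression`. Based on the task type, `gradio` constructs the interface and uses the column names and example inputs to build it. You can [refer to the skops documentation on hosting models on the Hub](https://skops.readthedocs.io/en/latest/auto_examples/plot_hf_hub.html#sphx-glr-auto-examples-plot-hf-hub-py) to learn how to push models to the Hub using `skops`.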
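
As a rough sketch of how such a file could drive an interface, the snippet below parses a hand-written stand-in for a `config.json` and extracts the pieces an interface builder would need. The key names (`sklearn`, `task`, `columns`, `example_input`) and every value are assumptions made for illustration; check the `skops` documentation for the actual layout.

```python
import json

# Hypothetical config contents; key names and values are illustrative assumptions.
config_text = """
{
  "sklearn": {
    "task": "tabular-classification",
    "columns": ["loading", "measurement_13", "measurement_15"],
    "example_input": {
      "loading": [80.1, 84.9],
      "measurement_13": [15.8, 16.2],
      "measurement_15": [13.0, 14.7]
    }
  }
}
"""

config = json.loads(config_text)["sklearn"]
task = config["task"]
headers = config["columns"]

# Turn the column-oriented example into rows, one list per sample.
example_rows = [list(row) for row in zip(*(config["example_input"][c] for c in headers))]

# A classifier yields a label per row, a regressor a number per row.
output_kind = "label" if task == "tabular-classification" else "number"
print(headers, example_rows, output_kind)
```

The column names would become the headers of the input dataframe, and the example rows would pre-populate it.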

|

gradio-app/gradio/blob/main/guides/cn/05_tabular-data-science-and-plots/using-gradio-for-tabular-workflows.md

|

<!--Copyright 2022 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

⚠️ Note that this file is in Markdown but contains specific syntax for our doc-builder (similar to MDX) that may not be

rendered properly in your Markdown viewer.

-->

# YOLOS

## Overview