pretty_name: Cartoon Set

size_categories:

- 10K<n<100K

task_categories:

- image

- computer-vision

- generative-modelling

license: cc-by-4.0

Dataset Card for Cartoon Set

Dataset Description

- Homepage: https://google.github.io/cartoonset/

- Repository: https://github.com/google/cartoonset/

- Paper: XGAN: Unsupervised Image-to-Image Translation for Many-to-Many Mappings

Dataset Summary

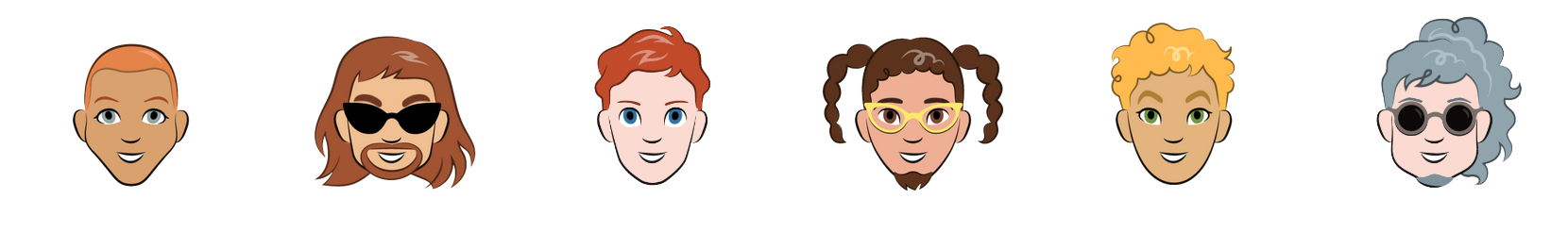

Cartoon Set is a collection of random, 2D cartoon avatar images. The cartoons vary in 10 artwork categories, 4 color categories, and 4 proportion categories, with a total of ~10^13 possible combinations. We provide sets of 10k and 100k randomly chosen cartoons and labeled attributes.

Usage

cartoonset provides the images as PNG byte strings, which gives you some flexibility in how you load the data. Two ways are shown here:

Using PIL:

import datasets

from io import BytesIO

from PIL import Image

ds = datasets.load_dataset("cgarciae/cartoonset", "10k") # or "100k"

def process_fn(sample):

    img = Image.open(BytesIO(sample["img_bytes"]))

    ...

    return {"img": img}

ds = ds.map(process_fn, remove_columns=["img_bytes"])

Using TensorFlow:

import datasets

import tensorflow as tf

hfds = datasets.load_dataset("cgarciae/cartoonset", "10k") # or "100k"

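ds = tf.data.Dataset.from_generator(

    # Iterate over the "train" split; iterating over the DatasetDict itself would only yield split names.

    lambda: hfds["train"],

    output_signature={

        "img_bytes": tf.TensorSpec(shape=(), dtype=tf.string),

    },

)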

def process_fn(sample):

    img = tf.image.decode_png(sample["img_bytes"], channels=3)

    ...

    return {"img": img}

ds = ds.map(process_fn)
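Because each img_bytes value is already PNG-encoded, it can also be written to disk without decoding. The following is a minimal sketch of that idea; the helper name save_png, the output directory, and the zero-padded file naming are illustrative assumptions, not part of the dataset's API.

```python
from pathlib import Path


def save_png(img_bytes, out_dir, index):
    """Write one PNG byte string to <out_dir>/<index>.png and return the path.

    Hypothetical helper: the name and the file naming scheme are assumptions.
    """
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    path = out_dir / f"{index:06d}.png"
    # The bytes are already a complete PNG file, so no decoding is needed.
    path.write_bytes(img_bytes)
    return path
```

With the dataset loaded as in the examples above, this could be called as save_png(sample["img_bytes"], "cartoons", i) for the i-th sample of the train split.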

Additional features: You can also access the features that generated each sample, e.g.:

ds = datasets.load_dataset("cgarciae/cartoonset", "10k+features") # or "100k+features"

Apart from img_bytes, these configurations add a total of 18 * 2 additional int features. They come in {feature}, {feature}_num_categories pairs, where {feature}_num_categories indicates the number of categories for that feature. See Data Fields for the complete list of features.

Dataset Structure

Data Instances

A sample from the training set is provided below:

{

    'img_bytes': b'0x...',

}

If +features is added to the dataset name, the following additional fields are provided:

{

    'img_bytes': b'0x...',

    'eye_angle': 0,

    'eye_angle_num_categories': 3,

    'eye_lashes': 0,

    'eye_lashes_num_categories': 2,

    'eye_lid': 0,

    'eye_lid_num_categories': 2,

    'chin_length': 2,

    'chin_length_num_categories': 3,

    ...

}
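The {feature}, {feature}_num_categories pairing makes it straightforward to turn a sample into one-hot vectors. The sketch below assumes each feature value is a zero-based index smaller than its num_categories (consistent with the sample above, but not stated by the dataset); the function name one_hot_features is hypothetical.

```python
SUFFIX = "_num_categories"


def one_hot_features(sample):
    """Return {feature: one-hot list} for every feature/num_categories pair.

    Assumes feature values are zero-based indices in [0, num_categories).
    """
    encoded = {}
    for key, num_categories in sample.items():
        if not key.endswith(SUFFIX):
            continue
        feature = key[: -len(SUFFIX)]
        if feature not in sample:
            continue  # skip a count that has no matching value field
        vector = [0] * num_categories
        vector[sample[feature]] = 1
        encoded[feature] = vector
    return encoded


sample = {
    "eye_angle": 0,
    "eye_angle_num_categories": 3,
    "chin_length": 2,
    "chin_length_num_categories": 3,
}
print(one_hot_features(sample))
# {'eye_angle': [1, 0, 0], 'chin_length': [0, 0, 1]}
```

Fields without the _num_categories suffix and no matching count, such as img_bytes, are ignored by this helper.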

Data Fields

img_bytes: A byte string containing the raw data of a 500x500 PNG image.
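To check the dimensions of a sample without a full decode, the width and height can be read from the PNG header. The sketch below relies only on the PNG file layout (an 8-byte signature followed by the IHDR chunk); the function name png_size is an illustrative assumption.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_size(img_bytes):
    """Return (width, height) read from the IHDR chunk of a PNG byte string."""
    if img_bytes[:8] != PNG_SIGNATURE:
        raise ValueError("not a PNG byte string")
    # Bytes 8-11 hold the chunk length and bytes 12-15 the chunk type.
    if img_bytes[12:16] != b"IHDR":
        raise ValueError("missing IHDR chunk")
    # Width and height follow as two big-endian unsigned 32-bit integers.
    width, height = struct.unpack(">II", img_bytes[16:24])
    return width, height
```

Given the field description above, png_size(sample["img_bytes"]) would be expected to return (500, 500) for a sample from this dataset.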

If +features is appended to the dataset name, the following additional int32 fields are provided:

- eye_angle, eye_angle_num_categories

- eye_lashes, eye_lashes_num_categories

- eye_lid, eye_lid_num_categories

- chin_length, chin_length_num_categories

- eyebrow_weight, eyebrow_weight_num_categories

- eyebrow_shape, eyebrow_shape_num_categories

- eyebrow_thickness, eyebrow_thickness_num_categories

- face_shape, face_shape_num_categories

- facial_hair, facial_hair_num_categories

- hair, hair_num_categories

- eye_color, eye_color_num_categories

- face_color, face_color_num_categories

- hair_color, hair_color_num_categories

- glasses, glasses_num_categories

- glasses_color, glasses_color_num_categories

- eyes_slant, eye_slant_num_categories

- eyebrow_width, eyebrow_width_num_categories

- eye_eyebrow_distance, eye_eyebrow_distance_num_categories
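The num_categories fields also give a way to count how many attribute combinations a set of features spans: it is the product of the per-feature category counts. The sketch below is an illustration under the assumption that every field ending in _num_categories is a plain integer count; count_combinations is a hypothetical name. Whether the product over all 18 features reproduces the ~10^13 figure quoted in the summary is not verified here.

```python
import math


def count_combinations(sample):
    """Multiply all *_num_categories values found in a +features sample."""
    counts = [
        value
        for key, value in sample.items()
        if key.endswith("_num_categories")
    ]
    return math.prod(counts)


# Only the four features shown in the Data Instances example.
partial_sample = {
    "eye_angle": 0, "eye_angle_num_categories": 3,
    "eye_lashes": 0, "eye_lashes_num_categories": 2,
    "eye_lid": 0, "eye_lid_num_categories": 2,
    "chin_length": 2, "chin_length_num_categories": 3,
}
print(count_combinations(partial_sample))  # 36
```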

Data Splits

Train

Dataset Creation

Licensing Information

This data is licensed by Google LLC under a Creative Commons Attribution 4.0 International License.

Citation Information

@article{DBLP:journals/corr/abs-1711-05139,

author = {Amelie Royer and

Konstantinos Bousmalis and

Stephan Gouws and

Fred Bertsch and

Inbar Mosseri and

Forrester Cole and

Kevin Murphy},

title = {{XGAN:} Unsupervised Image-to-Image Translation for many-to-many Mappings},

journal = {CoRR},

volume = {abs/1711.05139},

year = {2017},

url = {http://arxiv.org/abs/1711.05139},

eprinttype = {arXiv},

eprint = {1711.05139},

timestamp = {Mon, 13 Aug 2018 16:47:38 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-1711-05139.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}