---
license: mit
task_categories:
- text-generation
language:
- en
tags:
- code
pretty_name: OmniACT
---

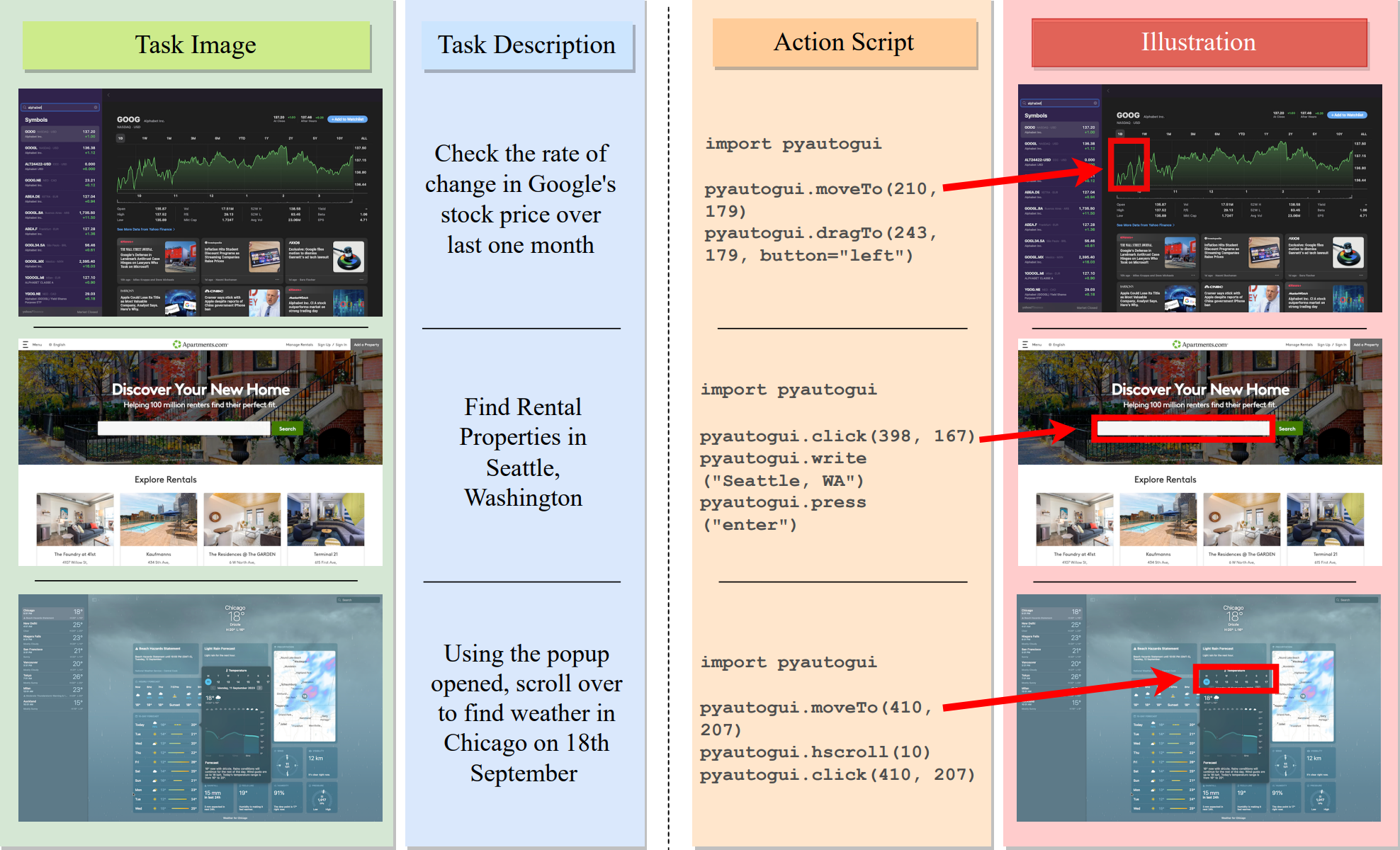

Dataset for OmniACT: A Dataset and Benchmark for Enabling Multimodal Generalist Autonomous Agents for Desktop and Web

Splits:

| split_name | count |
|---|---|
| train | 6788 |
| test | 2020 |
| val | 991 |

Example datapoint:

"2849": {

"task": "data/tasks/desktop/ibooks/task_1.30.txt",

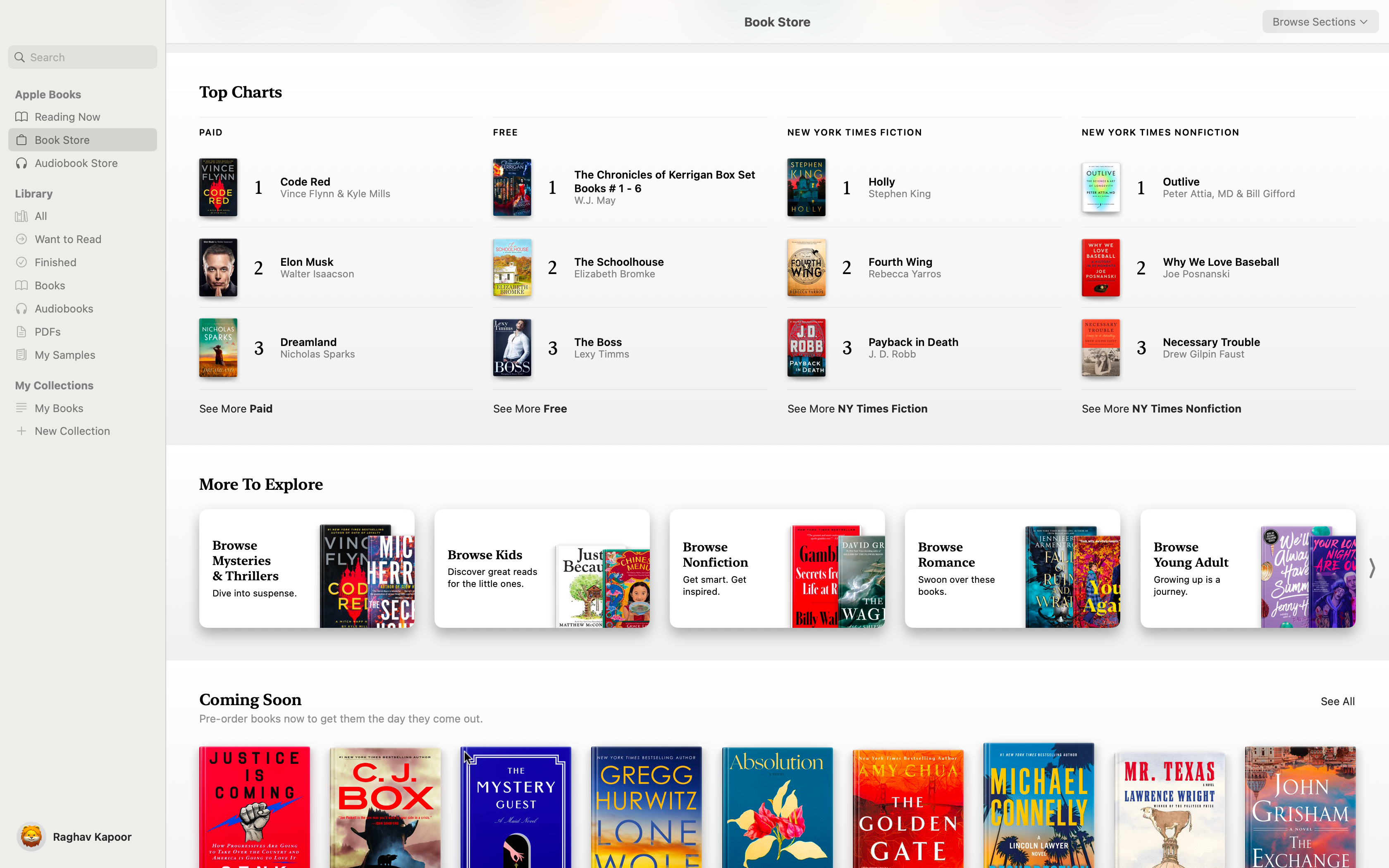

"image": "data/data/desktop/ibooks/screen_1.png",

"ocr": "ocr/desktop/ibooks/screen_1.json",

"color": "detact_color/desktop/ibooks/screen_1.json",

"icon": "detact_icon/desktop/ibooks/screen_1.json",

"box": "data/metadata/desktop/boxes/ibooks/screen_1.json"

},

where:

`task`: path to a text file containing the natural-language description ("Task") along with the corresponding PyAutoGUI code ("Output Script"):

```
Task: Navigate to see the upcoming titles
Output Script:
pyautogui.moveTo(1881.5,1116.0)
```

`image`: path to the screen image on which the action is performed
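The task layout shown above can be split into its two parts with a few lines of Python. This is a minimal sketch, not an official loader: the `TaskRecord` type and the `parse_task_text` helper are hypothetical names introduced here, and the code assumes every task file follows the "Task:" / "Output Script:" layout of the example.

```python
from typing import NamedTuple


class TaskRecord(NamedTuple):
    description: str  # natural-language instruction after "Task:"
    script: str       # PyAutoGUI code after "Output Script:"


def parse_task_text(text: str) -> TaskRecord:
    """Split a task file's text into its description and script.

    Assumes the layout shown in the example: a "Task:" line, then an
    "Output Script:" marker, then the PyAutoGUI code.
    """
    marker = "Output Script:"
    head, sep, tail = text.partition(marker)
    if not sep:
        raise ValueError(f"missing {marker!r} marker")
    description = head.strip()
    if description.startswith("Task:"):
        description = description[len("Task:"):].strip()
    return TaskRecord(description=description, script=tail.strip())


# The example task from this card, used as inline text.
example = """Task: Navigate to see the upcoming titles
Output Script:
pyautogui.moveTo(1881.5,1116.0)
"""
record = parse_task_text(example)
print(record.description)
print(record.script)
```

To read a task from disk, pass the file's contents to `parse_task_text`, resolving the `task` path relative to wherever the dataset was downloaded (that root directory is an assumption, since this card does not specify it).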

To cite OmniACT, please use:

```bibtex
@misc{kapoor2024omniact,
      title={OmniACT: A Dataset and Benchmark for Enabling Multimodal Generalist Autonomous Agents for Desktop and Web},
      author={Raghav Kapoor and Yash Parag Butala and Melisa Russak and Jing Yu Koh and Kiran Kamble and Waseem Alshikh and Ruslan Salakhutdinov},
      year={2024},
      eprint={2402.17553},
      archivePrefix={arXiv},
      primaryClass={cs.AI}
}
```