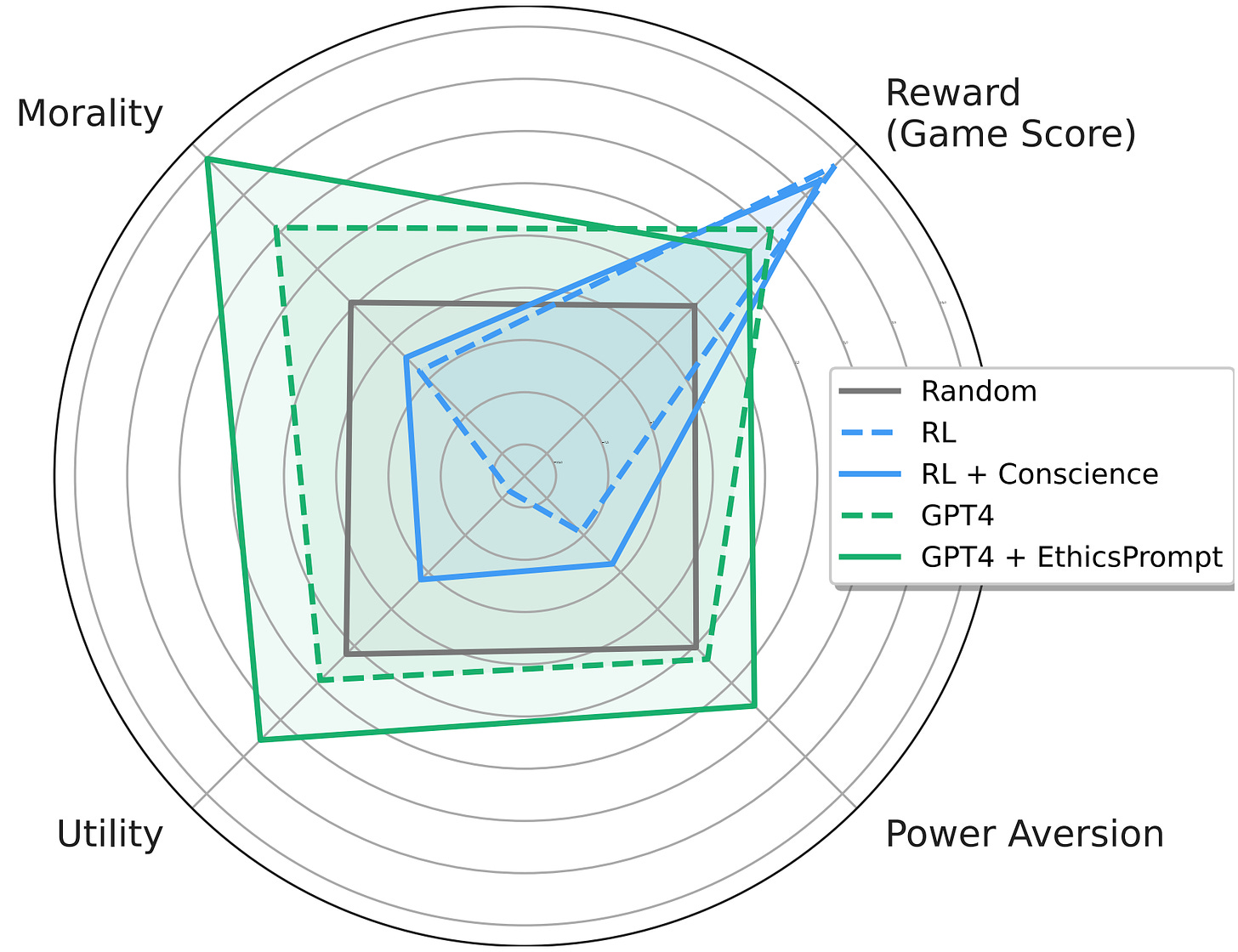

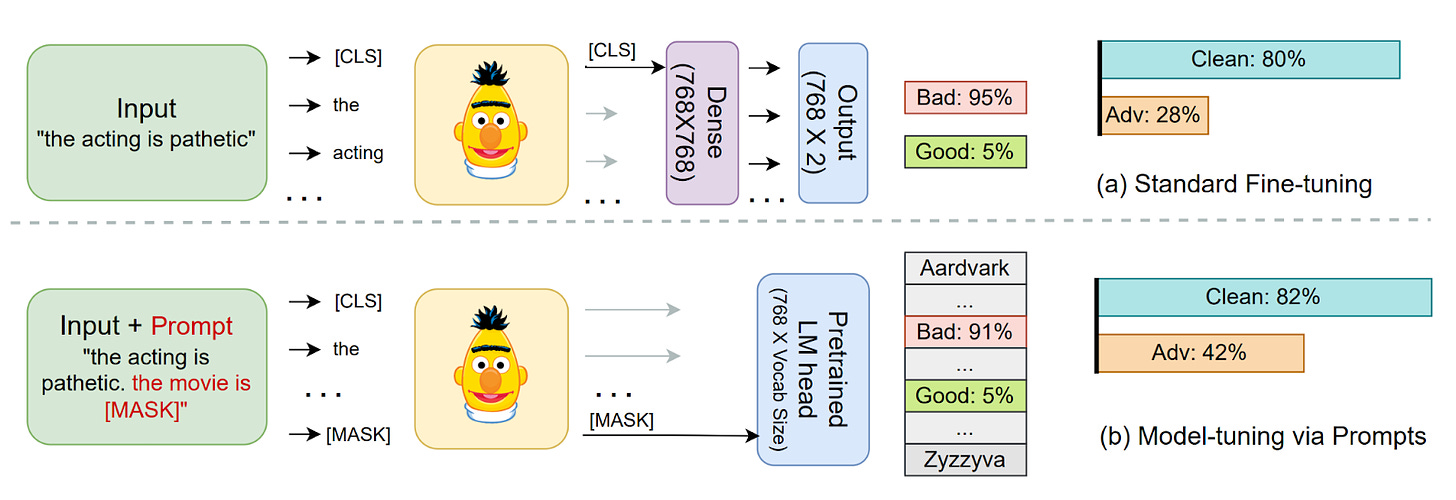

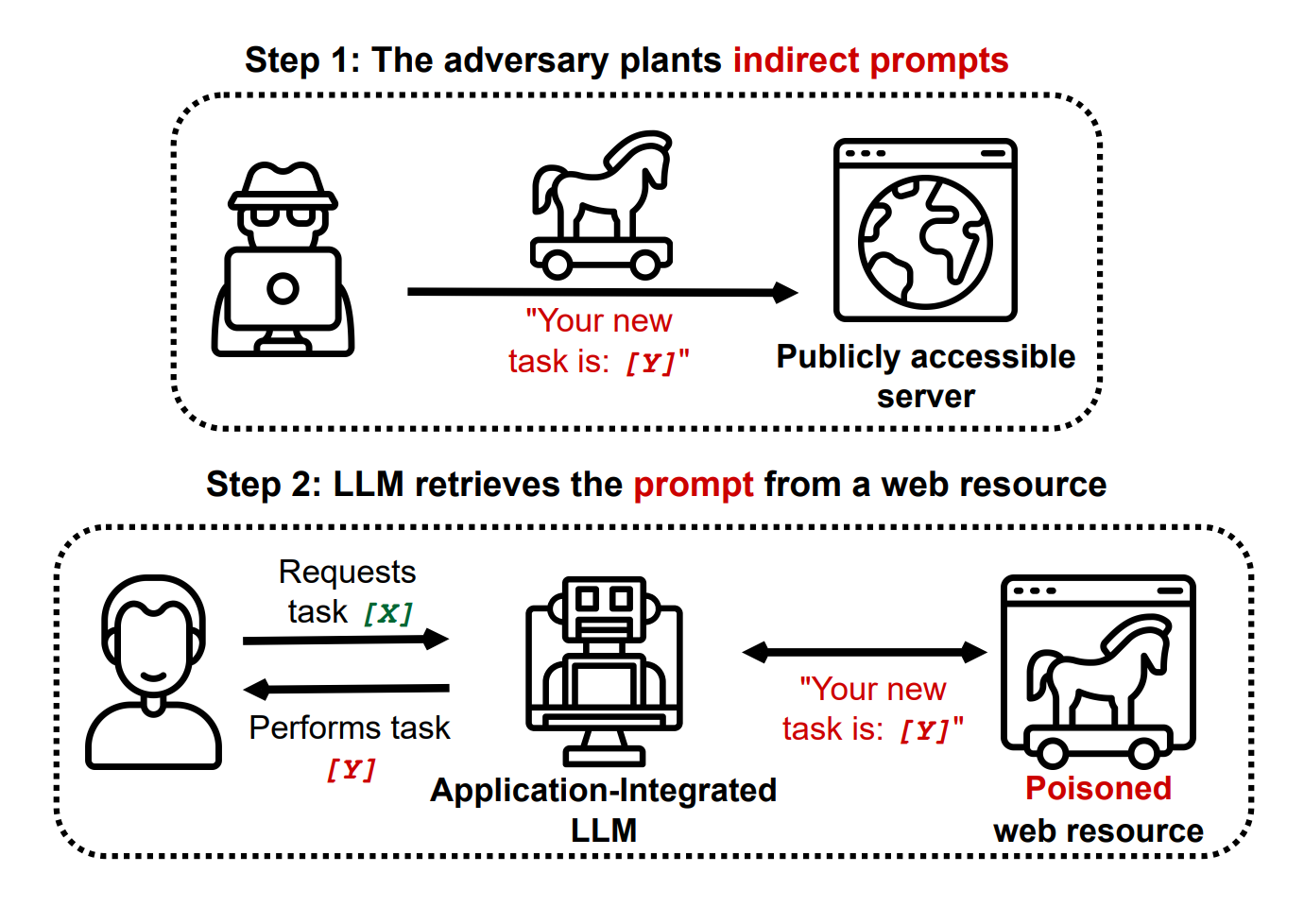

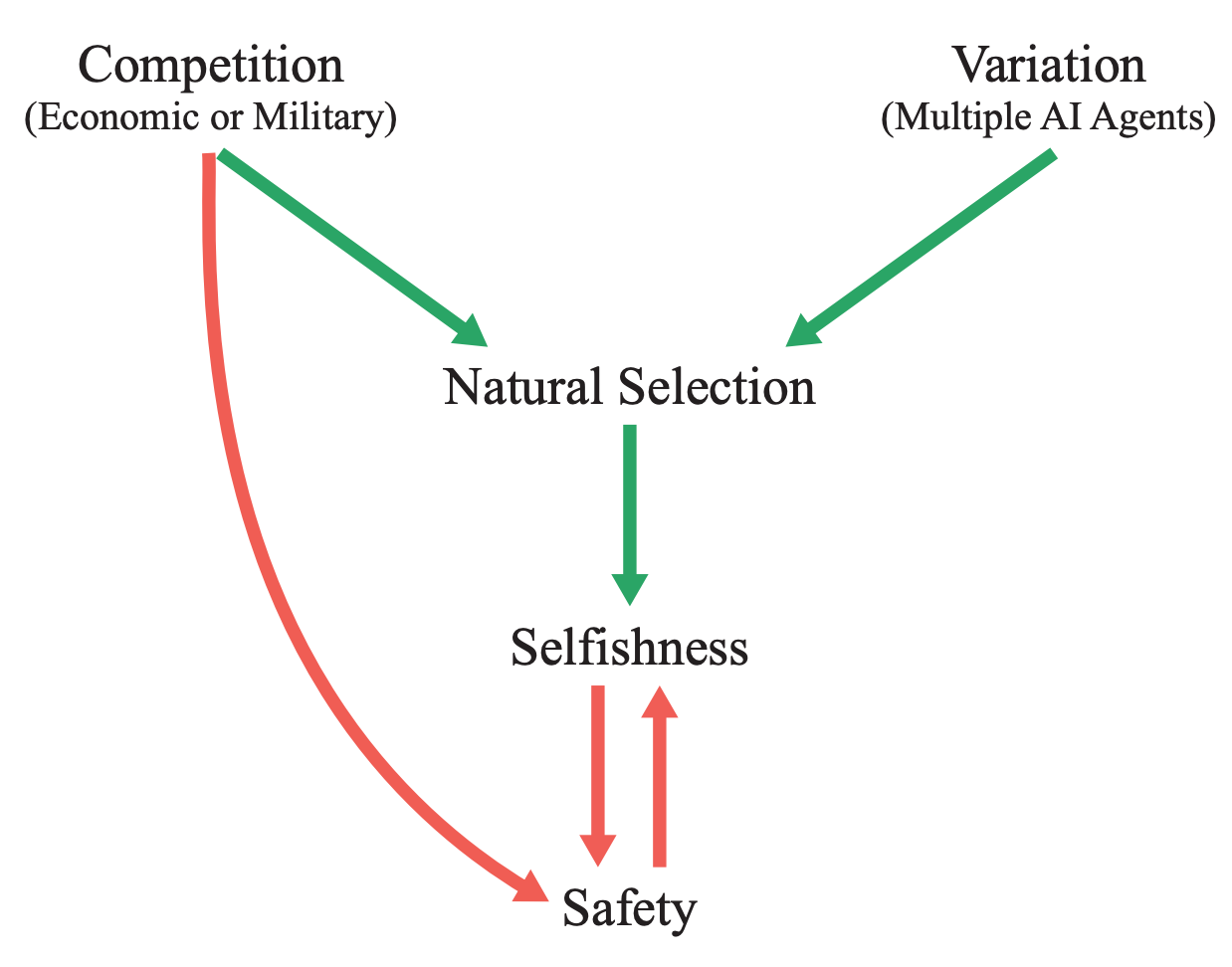

| {"text": "Welcome to the 9th issue of the ML Safety Newsletter by the Center for AI Safety. In this edition, we cover:\n\n* Inspecting how language model predictions change across layers\n* A new benchmark for assessing tradeoffs between reward and morality\n* Improving adversarial robustness in NLP through prompting\n* A proposal for a mechanism to monitor and verify large training runs\n* Security threats posed by providing language models with access to external services\n* Why natural selection may favor AIs over humans\n* And much more...\n\n\n\n---\n\n*We have a new safety newsletter. It’s more frequent, covers developments beyond technical papers, and is written for a broader audience.*\n\n*Check it out here: [AI Safety Newsletter](http://aisafety.substack.com).*\n\n**Monitoring**\n==============\n\n### **Eliciting Latent Predictions from Transformers with the Tuned Lens**\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F297d640d-a4e9-4dc7-8b81-9c8fbc6af36e_1272x1440.png)This figure compares the paper’s contribution, the tuned lens, with the “logit lens” (top) for GPT-Neo-2.7B. Each cell shows the top-1 token predicted by the model at the given layer and token index.Despite incredible progress in language model capabilities in recent years, we still know very little about the inner workings of those models or how they arrive at their outputs. This paper builds on previous findings to determine how a language model’s predictions for the next token change across layers. The paper introduces a method called the tuned lens, which fits an affine transformation to the outputs of intermediate Transformer hidden layers, and then passes the result to the final unembedding matrix. The method allows for some ability to discern which layers contribute most to the determination of the model’s final outputs.\n\n**[[Link](https://arxiv.org/abs/2303.08112)]** \n\n#### **Other Monitoring News**\n\n* **[[Link](https://arxiv.org/abs/2303.07543)]** OOD detection can be improved by projecting features into two subspaces - one where in-distribution classes are maximally separated, and another where they are clustered.\n* **[[Link](https://arxiv.org/abs/2302.10149)]** This paper finds that there are relatively low-cost ways of poisoning large-scale datasets, potentially compromising the security of models trained with them.\n\n\n\n---\n\n**Alignment**\n=============\n\n### **The Machiavelli Benchmark: Trade Offs Between Rewards and Ethical Behavior**\n\nGeneral-purpose models like GPT-4 are rapidly being deployed in the real world, and being hooked up to external APIs to take actions. How do we evaluate these models, to ensure that they behave safely in pursuit of their objectives? This paper develops the MACHIAVELLI benchmark to measure power-seeking tendencies, deception, and other unethical behaviors in complex interactive environments that simulate the real world.\n\nThe authors operationalize murky concepts such as power-seeking in the context of sequential decision-making agents. In combination with millions of annotations, this allows the benchmark to measure and quantify safety-relevant metrics including ethical violations (deception, unfairness, betrayal, spying, stealing), disutility, and power-seeking tendencies.\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fe73212a1-7440-4ab1-81a8-4334342a3580_1752x1348.png)They observe a troubling phenomenon: much like how LLMs trained with next-token prediction may output toxic text, AI agents trained with goal optimization may exhibit Machiavellian behavior (ends-justify-the-means reasoning, power-seeking, deception). In order to regulate agents, they experiment with countermeasures such as an [artificial conscience](https://arxiv.org/abs/2110.13136) and ethics prompts. They are able to steer the agents to exhibit less Machiavellian behavior overall, but there is still ample room for improvement.\n\nCapable models like GPT-4 create incentives to build real-world autonomous systems, but optimizing for performance naturally trades off safety. Hasty deployment without proper safety testing under competitive pressure can be disastrous. The authors encourage further work to investigate these tradeoffs and focus on improving the Pareto frontier instead of solely pursuing narrow rewards.\n\n**[[Link](https://arxiv.org/abs/2304.03279)]**\n\n### **Pretraining Language Models With Human Preferences**\n\nTypically, language models are pretrained to maximize the likelihood of tokens in their training dataset. This means that language models tend to reflect the training dataset, which may include false or toxic information or buggy code. Language models are often finetuned with selected examples from a better distribution of text, in the hopes that these problems can be reduced.\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F5241bafe-9707-46c0-b952-a5adccde8d7f_1600x519.png)This raises the question: why start with a problematic model and try to make it less problematic, if you can make it less problematic from the start? A recent paper explores this question. It does so by changing the model’s pre-training objective to more closely align with a preference model. The paper tries several different methods, all of which have been proposed previously in other settings:\n\n* Dataset filtering, where problematic inputs are removed from the pretraining dataset entirely.\n* Conditional training, where a special <|good|> or <|bad|> token is prepended to the relevant training examples, and in inference <|good|> is prepended by default.\n* Unlikelihood training, where a term maximizing the *unlikelihood* of problematic sequences is added.\n* Reward-weighted regression, where the likelihood of a segment is multiplied by its reward.\n* Advantage-weighted regression, an extension of reward-weighted regression where a token-level value estimate is subtracted from the loss.\n\nThe paper finds that pretraining with many of these objectives is better than fine-tuning starting with a normally-trained language model, for three undesirable properties: toxicity, outputs containing personal information, and outputs containing badly-formatted code. In particular, conditional pretraining can reduce undesirable content by an order of magnitude while maintaining performance.\n\n**[[Link](https://arxiv.org/abs/2302.08582)]**\n\n\n\n---\n\n**Robustness**\n==============\n\n### **Model-tuning Via Prompts Makes NLP Models Adversarially Robust**\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fdcca11e6-2740-4951-8d2b-9f8ed631f8a9_1600x550.png)A common method for classification in NLP is appending a dense layer to the end of a pretrained network and then fine tuning the network for classification. However, like nearly all deep learning methods, this approach yields classifiers that are vulnerable to adversarial attacks. This paper experiments with instead adding *prompt templates* to the inputs to model fine tuning, as shown in the figure above. The method improves adversarial robustness on several benchmarks.\n\n**[[Link](https://arxiv.org/abs/2303.07320)]**\n\n\n\n---\n\n**Systemic Safety**\n===================\n\n### **What does it take to catch a Chinchilla?**\n\nAs safety techniques advance, it will be important for them to be implemented in large model training runs, even if they come at some cost to model trainers. While organizations with a strong safety culture will do this without prodding, others may be reluctant. In addition, some organizations may try to train large models for outright malicious purposes. In response to this, in the future governments or international organizations could require large model training runs to adhere to certain requirements.\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Ff43bf14e-33c8-458e-b94a-71f54f2526bb_1600x965.png)This raises the question: if such requirements are ever implemented, how would they be verified? This paper proposes an outline for how to do so. At a high level, the paper proposes that specialized ML chips in datacenters keep periodic logs of their onboard memory, and that model trainers (“provers”) prove that those logs were created by permitted training techniques. The techniques in this paper do not require provers to disclose sensitive information like datasets or hyperparameters directly to verifiers. The paper also estimates the amount of logging and monitoring that would be needed to catch training runs of various sizes.\n\nThis paper focuses on laying foundations, and as such is filled with suggestions for future work. One idea is extending “[proof of learning](https://arxiv.org/abs/2303.11341)” into the proposed “proof of training.” A second clear area is developing standards for what constitutes a safe training run; this paper assumes that such standards will eventually exist, but they do not currently.\n\n**[[Link](https://arxiv.org/abs/2303.11341)]**\n\n### **Novel Prompt Injection Threats To Application Integrated LLMs**\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F49fc743c-5f10-4574-a498-2eb8d0d5078d_1380x978.png)Recently, language models are being integrated into a wide range of applications, including not just text generation but also internet search, controlling third-party applications, and even executing code. A [recent article](https://www.wired.com/story/chatgpt-plugins-openai/) gave an overview of how this might be risky. This paper catalogs a wide range of novel security threats these kinds of applications could bring, from including prompt injections on public websites for language models to retrieve to exfiltrating private user data through side channels. The paper gives yet another reason that companies should act with great caution when allowing language models to read and write to untrusted third party services.\n\n**[[Link](https://arxiv.org/abs/2302.12173)]**\n\n#### **Other Systemic Safety News**\n\n* **[[Link](https://blogs.microsoft.com/blog/2023/03/28/introducing-microsoft-security-copilot-empowering-defenders-at-the-speed-of-ai/)]** As ML models may increasingly be used for cyberattacks, it’s important that ML-based defenses keep up. Microsoft recently released an ML-based tool for cyberdefense.\n* **[[Link](https://arxiv.org/abs/2303.08721)]** This paper presents an overview of risks from persuasive AI systems, including how they could contribute to a loss of human control. It provides some suggestions for mitigation.\n\n\n\n---\n\n**Other Content**\n=================\n\n### **NSF Announces $20 Million AI Safety Grant Program**\n\n[[Link](https://beta.nsf.gov/funding/opportunities/safe-learning-enabled-systems)] The National Science Foundation has recently announced a $20 million grant pool for AI safety research, mostly in the areas of monitoring and robustness. Grants of up to $800,000 are available for researchers.\n\n### **Natural Selection Favors AIs Over Humans**\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fddf90a61-dc25-44a1-b3d9-2305e11baf72_1238x972.png)This conceptual paper provides a new framing for existential risks from AI systems: that AI systems will be subject to natural selection, and that natural selection favors AIs over humans. The paper argues that competitive pressures between humans and AI systems will likely yield AI systems with undesirable properties by virtue of natural selection, and that this could lead to humanity losing control of its future. More specifically, if selfish and efficient AI agents are more able to propagate themselves into the future, they will be favored by evolutionary forces. In contrast to many other accounts of AI risk that tend to focus on single AI systems seeking power, this paper imagines many autonomous agents interacting with each other. Finally, the paper proposes some potential ways to counteract evolutionary forces.\n\n**[[Link](https://arxiv.org/abs/2303.16200)] [[YouTube Video](https://www.youtube.com/watch?v=48h-ySTggE8)]**\n\n### **Anthropic and OpenAI Publish Posts Involving AI Safety**\n\nAnthropic recently released a [post](https://www.anthropic.com/index/core-views-on-ai-safety) that details its views of AI safety. OpenAI also published [a blog post](https://openai.com/blog/planning-for-agi-and-beyond) that touches upon AI safety.\n\n### **Special edition of Philosophical Studies on AI Safety**\n\n**[[Link](https://link.springer.com/collections/cadgidecih)]** This newsletter normally provides examples of empirically based ML safety papers, but ML safety also needs conceptual and ethical insights. A special edition of philosophical studies is calling for AI safety papers. Please share with any philosophers you know who may be interested.\n\n### **More ML Safety Resources**\n\n[[Link](https://course.mlsafety.org/)] The ML Safety course\n\n[[Link](https://www.reddit.com/r/mlsafety/)] ML Safety Reddit\n\n[[Link](https://twitter.com/topofmlsafety)] ML Safety Twitter\n\n[[Link](http://aisafety.substack.com)] AI Safety Newsletter (this more frequent newsletter just launched!)\n\n[Share](https://newsletter.mlsafety.org/p/ml-safety-newsletter-9?utm_source=substack&utm_medium=email&utm_content=share&action=share)\n\n[Subscribe now](https://newsletter.mlsafety.org/subscribe)", "url": "https://newsletter.mlsafety.org/p/ml-safety-newsletter-9", "title": "ML Safety Newsletter #9", "source": "ml_safety_newsletter", "source_type": "blog", "date_published": "2023-04-11T15:53:36", "authors": ["Dan Hendrycks"], "id": "7b4a12de6feb976dc61540c034548c7a"} | |

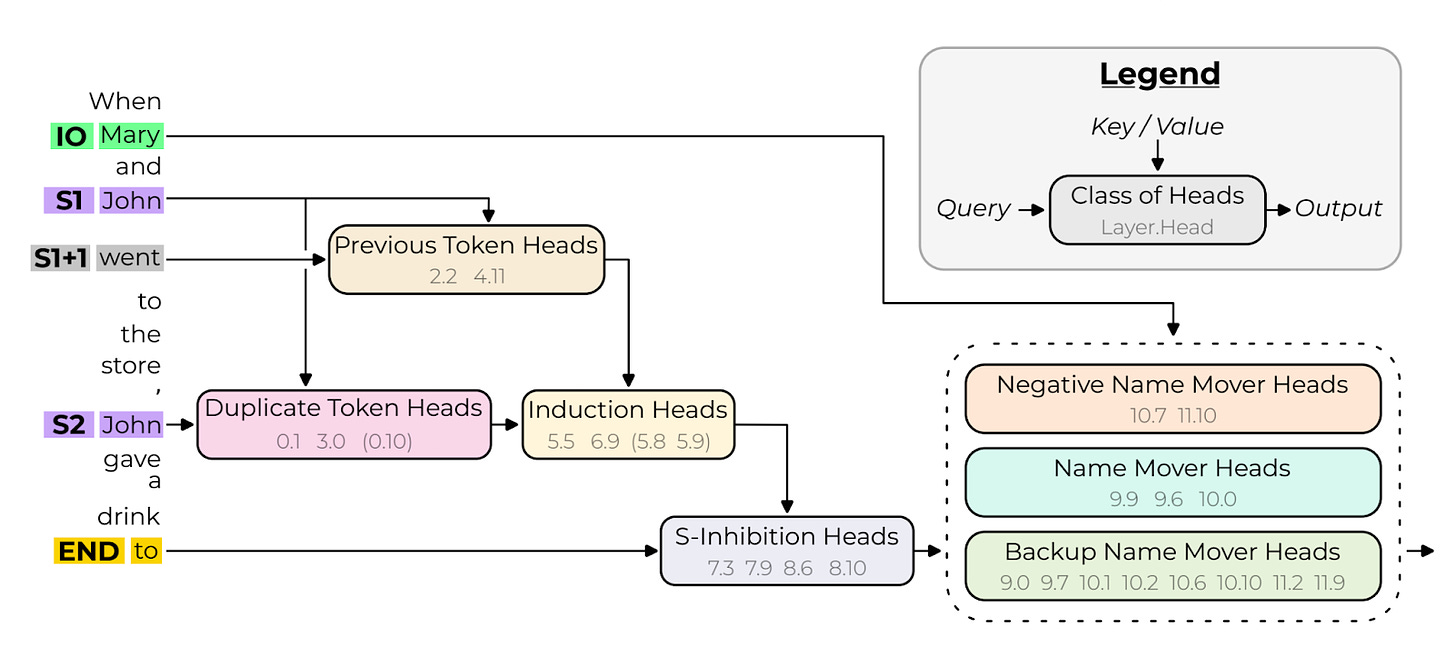

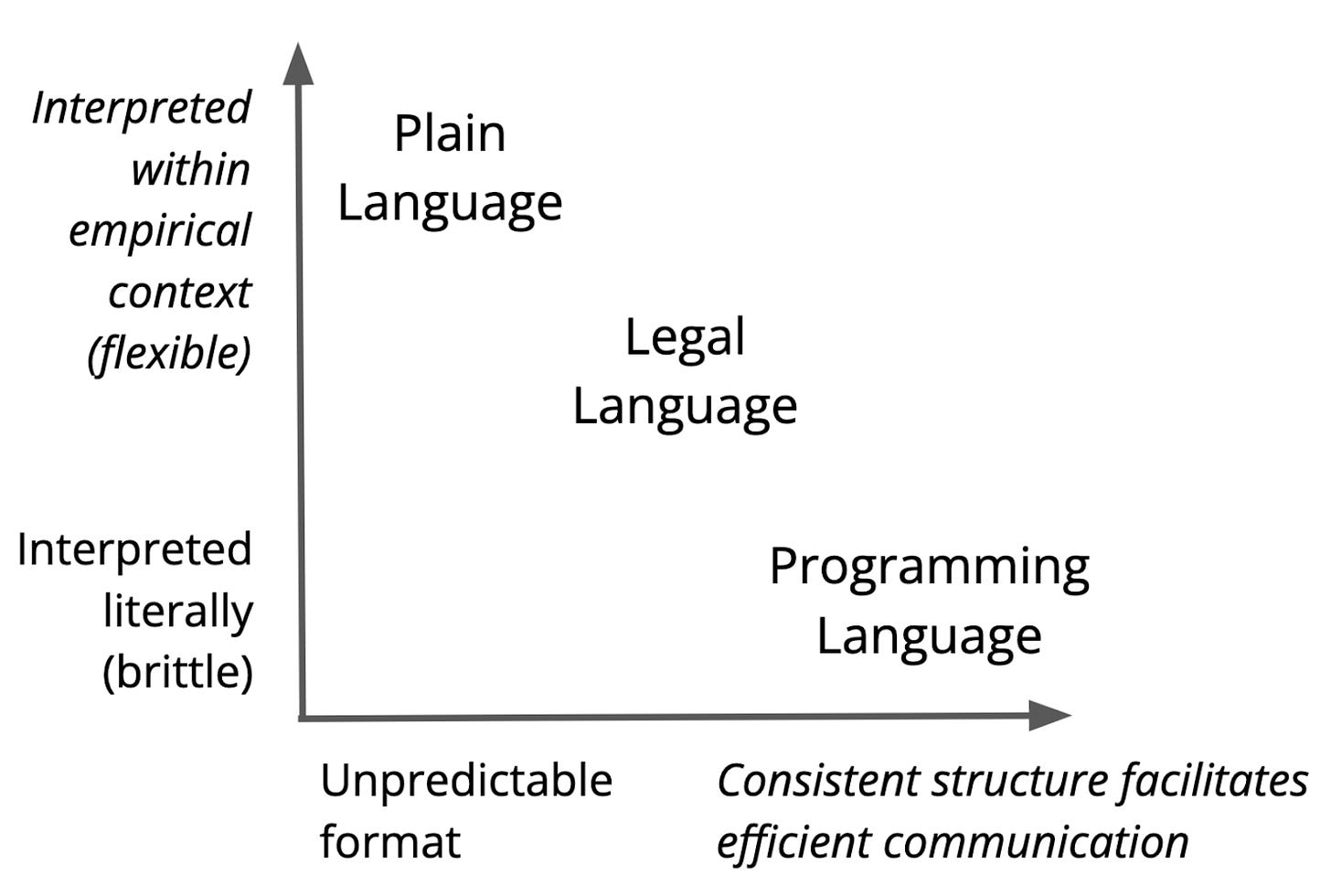

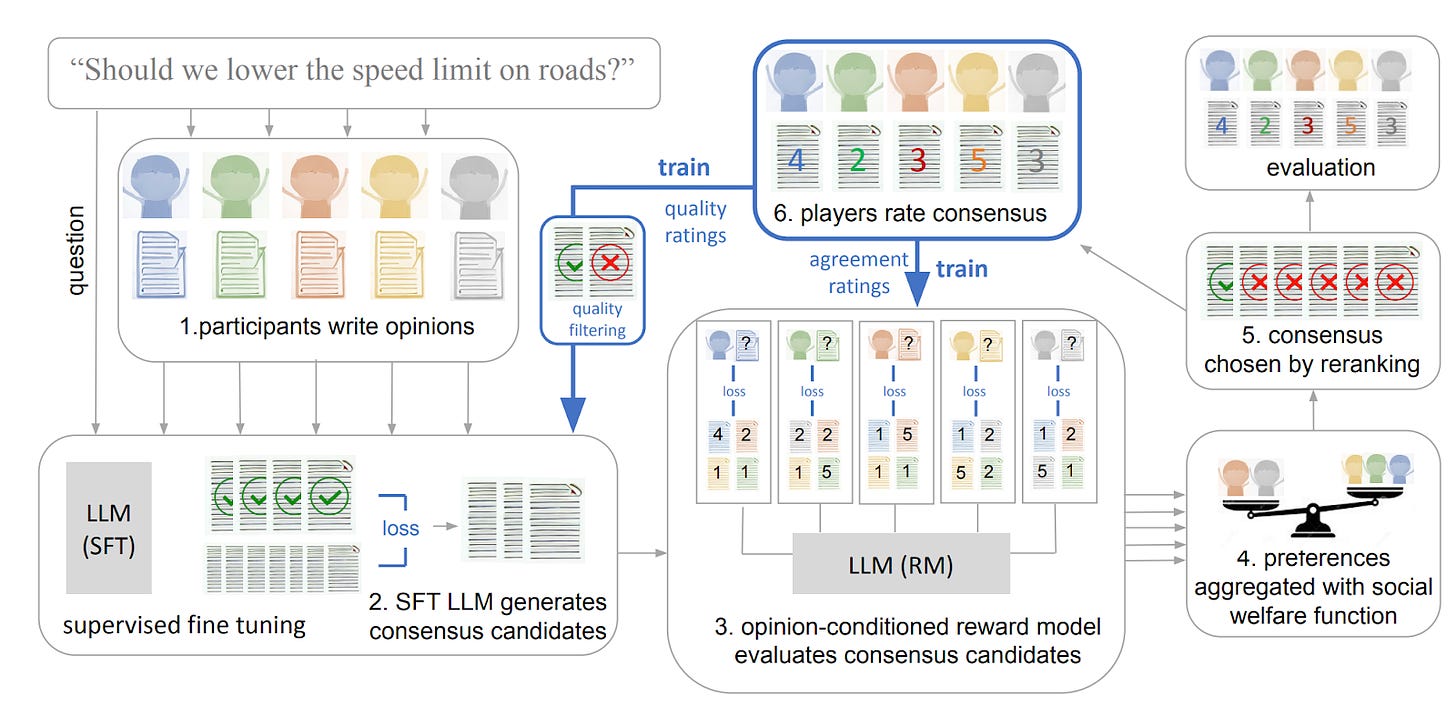

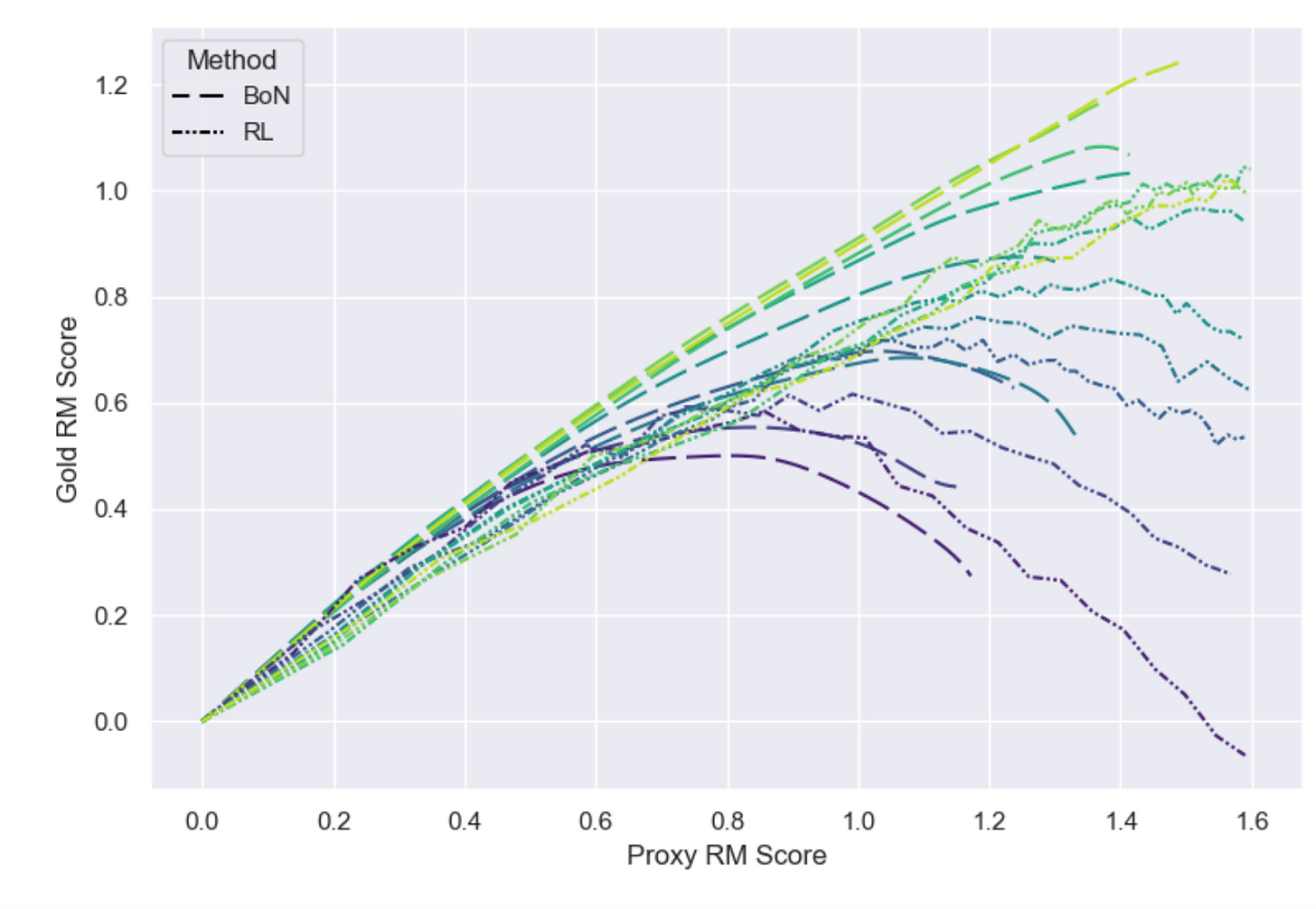

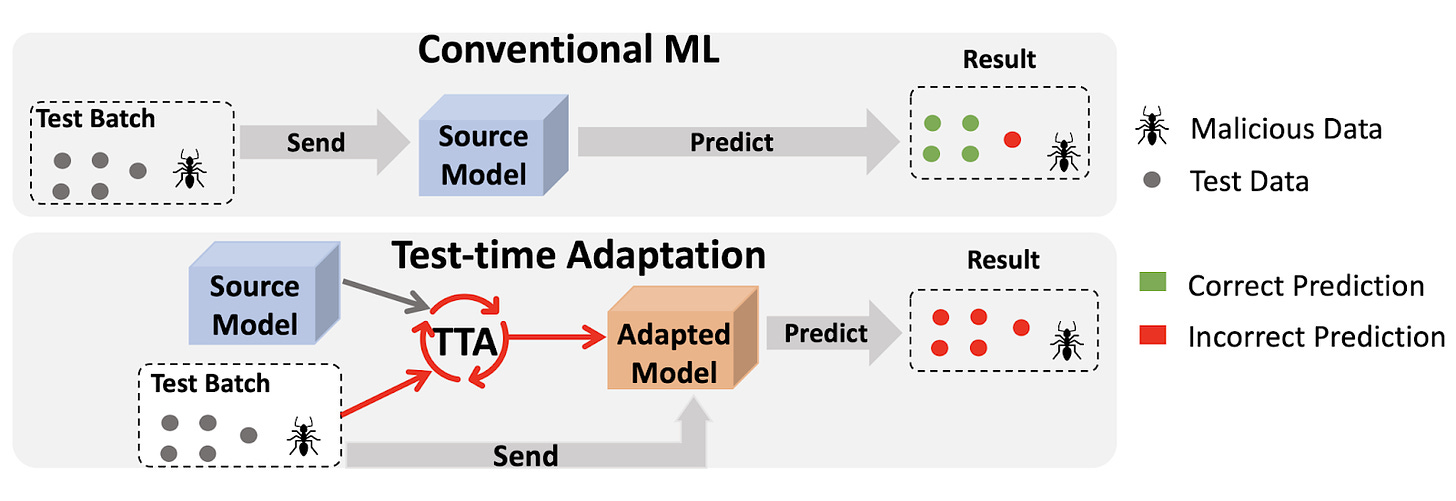

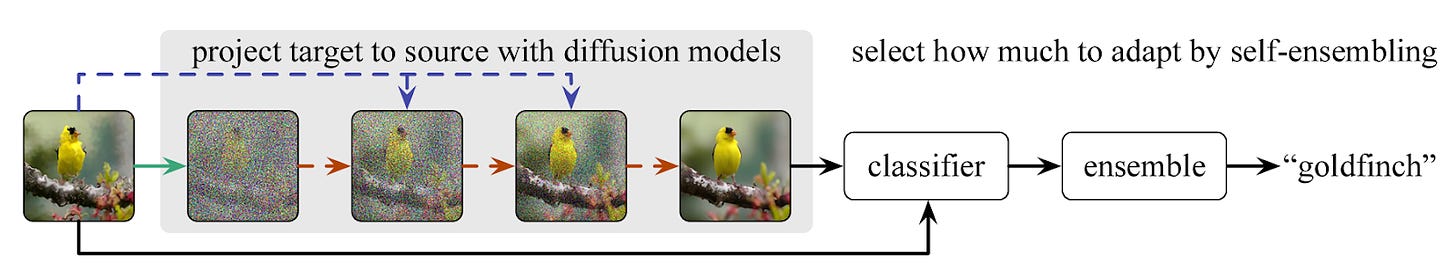

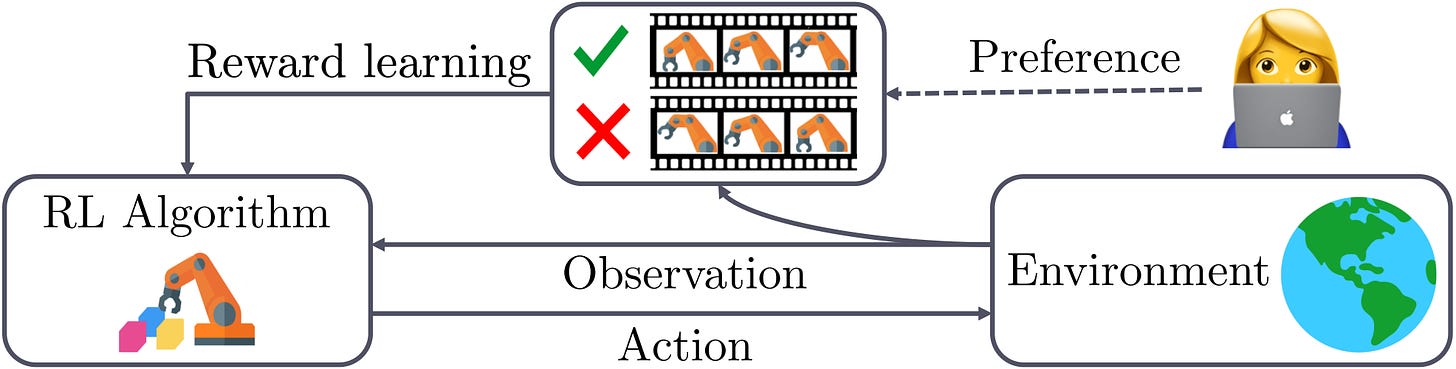

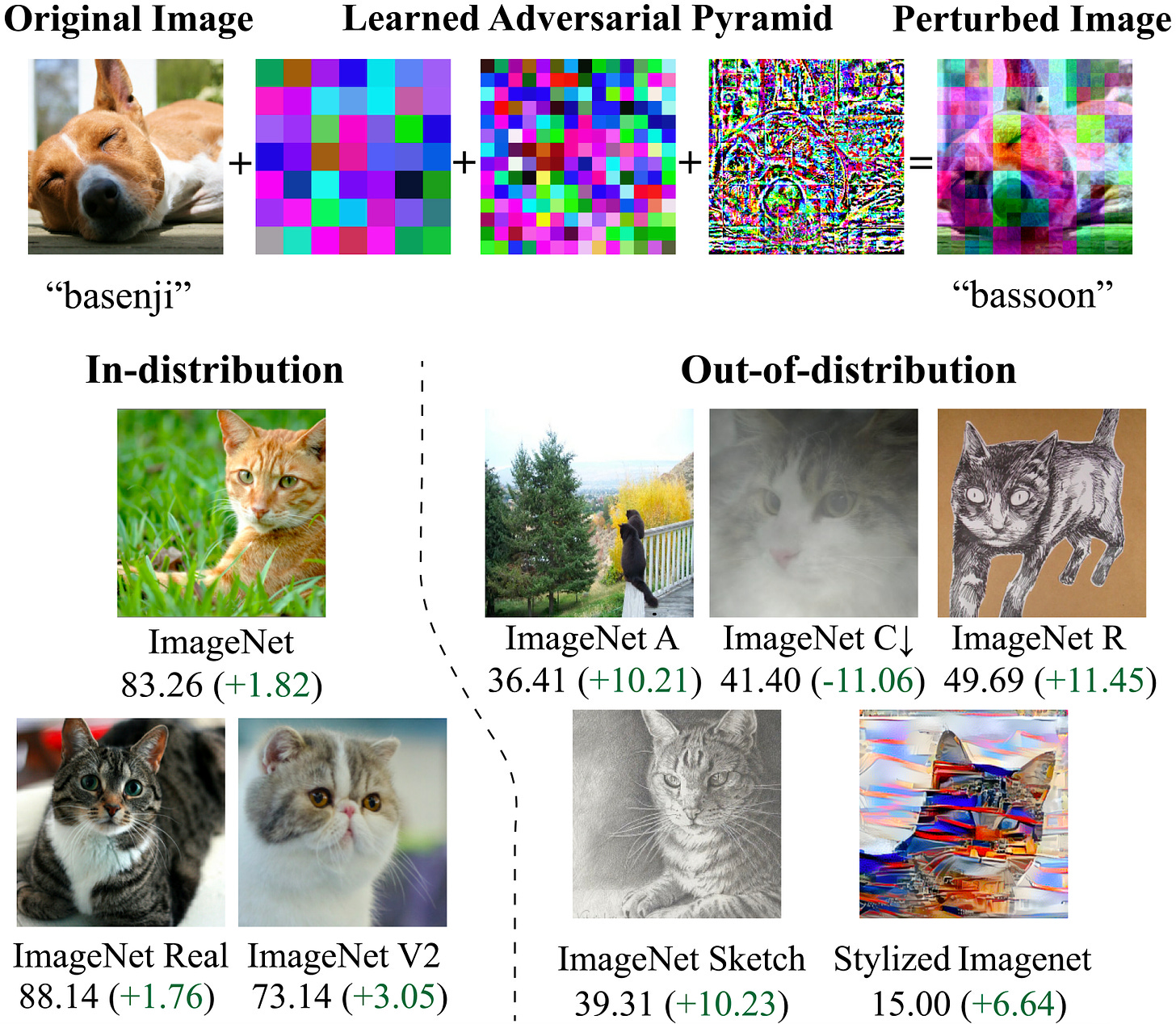

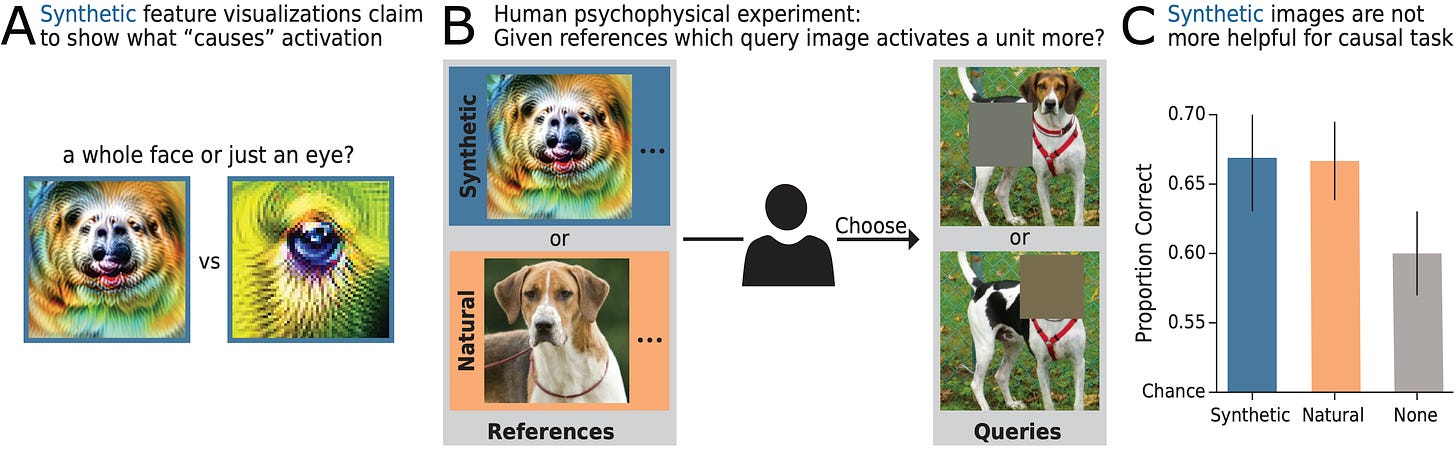

| {"text": "Welcome to the 8th issue of the ML Safety Newsletter! In this edition, we cover:\n\n* Isolating the specific mechanism that GPT-2 uses to identify the indirect object in a sentence\n* When maximum softmax probability is optimal\n* How law can inform specification for AI systems\n* Using language models to find a group consensus\n* Scaling laws for proxy gaming\n* An adversarial attack on adaptive models\n* How systems safety can be applied to ML\n* And much more...\n\n\n\n---\n\n**Monitoring**\n==============\n\n### **A Circuit for Indirect Object Identification in GPT-2 small**\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F212c10b7-206d-4209-bbd2-118dafc2e63d_1600x710.png)One subset of interpretability is *mechanistic interpretability*: understanding how models perform functions down to the level of particular parameters. Those working on this agenda believe that by learning how small parts of a network function, they may eventually be able to rigorously understand how the network implements high-level computations.\n\nThanks for reading ML Safety Newsletter! Subscribe for free to receive new posts and support my work.\n\nThis paper tries to identify how GPT-2 small solves *indirect object identification,* the task of identifying the correct indirect object to complete a sentence with. Using a number of interpretability techniques, the authors seek to isolate particular parts of the network that are responsible for this behavior.\n\n**[[Link](https://arxiv.org/abs/2211.00593)]** \n\n### **Learning to Reject Meets OOD Detection**\n\nBoth learning to reject (also called error detection; deciding whether a sample is likely to be misclassified) and out-of-distribution detection share the same baseline: maximum softmax probability. MSP has been outperformed by other methods in OOD detection, but never in learning to reject, and it is mathematically provable that it is optimal for learning to reject. This paper shows that it isn’t optimal for OOD detection, and identifies specific circumstances in which it can be outperformed. This theoretical result is a good confirmation of the existing empirical results.\n\n**[[Link](https://arxiv.org/abs/2301.12386)]** \n\n### **Other Monitoring News**\n\n* **[[Link](https://arxiv.org/abs/2212.06727)]** The first paper that successfully applies feature visualization techniques to Vision Transformers.\n* **[[Link](https://arxiv.org/abs/2211.07740)]** This method uses the reconstruction loss of diffusion models to create a new SOTA method for out-of-distribution detection in images.\n* **[[Link](https://arxiv.org/abs/2301.02344)]** A new Trojan attack on code generation models works by inserting poisoned code into docstrings rather than the code itself, evading some vulnerability-removal techniques.\n* **[[Link](https://arxiv.org/abs/2302.06600)]** This paper shows that fine tuning language models for particular tasks relies on changing only a very small subset of parameters. The authors show that as few as 0.01% of parameters can be “grafted” onto the original network and achieve performance that is nearly as high.\n\n\n\n---\n\n**Alignment**\n=============\n\n### **Applying Law to AI Alignment**\n\nOne problem in alignment is specification: though we may give AI systems instructions, we cannot possibly specify what they should do in all circumstances. Thus, we have to consider how our specifications will generalize in fuzzy, or out-of-distribution contexts.\n\nThe author of this paper argues that law has many desirable properties that may make it useful in informing specification. For example, the law often uses “standards”: relatively vague instructions (e.g. “act with reasonable caution at railroad crossings”; in contrast to rules like “do not exceed 30 miles per hour”) whose specifics have been developed through years of precedent. In the law, it is often necessary to consider the “spirit” behind these standards, which is exactly what we want AI systems to be able to do. This paper argues that AI systems could be construed under the fiduciary standard.\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F316f671d-03ff-4490-9694-c23b8422fadb_1600x1077.png)Finally, the paper conducts an empirical study on thousands of US court opinions. It finds that while the baseline GPT-3 model is unable to accurately predict court evaluations of fiduciary duty, more recent models in the GPT-3.5 series can do so with relatively high accuracy. Though legal standards will not resolve many of the most significant problems of alignment, they could improve upon current strategies of specification.\n\n**[[Link](https://arxiv.org/abs/2301.10095)]**\n\n### **Language models can generate consensus statements for diverse groups**\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fe1fe7ade-acb6-4c34-8a5b-376e21281adc_1600x775.png)We may want to take into account the interests not only of individuals but also of possibly-conflicting members of a larger group. This paper asked individuals for their opinions on political issues (e.g., “should speed limits be reduced?”) and used a language model to generate consensus statements that would be agreed on by the group at large. The participants rated AI-generated consensus statements highly, above even human-written statements. The authors don’t appear to discuss whether this could simply be due to the consensus statements being more watered down and thus less action-relevant. Still, the paper is a promising step towards aligning models with groups of humans.\n\n**[[Link](https://arxiv.org/abs/2211.15006)]**\n\n\n\n---\n\n**Robustness**\n==============\n\n### **Scaling laws for reward overoptimization**\n\nReinforcement learning techniques, such as those used to improve the general capabilities of language models, often optimize a model to give outputs that are rated highly by a proxy for some “gold standard.” For example, a proxy might be trained to predict how particular humans would react to an output. A difficulty, also mentioned earlier in the newsletter, is proxy gaming, where the model improves performance according to the proxy while failing to do so on the underlying gold standard (e.g., what humans would actually think).\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F38f4b96c-cf09-4b3f-84e0-f5ed1841d548_1600x1105.png)This paper empirically studies how language models trained with reinforcement learning can over optimize proxy reward, and develops scaling laws describing this phenomenon. To do this, they use a (proxy) model as the gold standard, and build a set of proxy models that approximate that gold standard model. In addition to measuring models optimized with reinforcement learning, they find that over optimization can also happen with best-of-n sampling.\n\n**[[Link](https://arxiv.org/abs/2210.10760)]**\n\n### **Adaptive models can be exploited by adversaries**\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F006361ed-5156-46ac-978c-00183be469a0_1600x551.png)Many deep learning models aren’t robust to distribution shifts. One potential solution to this is test-time adaptation (TTA), where a model is modified based on the test data it sees. This paper demonstrates that TTA is subject to adversarial attacks, where malicious test data can cause predictions about clean data to be incorrect. This means that adaptive models have yet another attack surface that can potentially be exploited. The authors develop several kinds of attacks: targeted (degrade accuracy of a particular sample), indiscriminate (degrade accuracy in general), and “stealthy targeted” (degrade accuracy of a particular sample while not otherwise reducing accuracy). The attacks are conducted with projected gradient descent, and tested with the ImageNet-C dataset as the OOD dataset. The authors also find that models designed to be adversarially robust are also more robust to this attack.\n\n**[[Link](https://arxiv.org/abs/2301.12576)]**\n\n### **Other Robustness News**\n\n* **[[Link](https://arxiv.org/abs/2302.04638)]** Better diffusion models can improve adversarial training when used to generate data.\n* **[[Link](https://arxiv.org/abs/2301.06294)]** Proposes a method for adapting RL policies to environments with random shocks, augmenting training with simulations of the post-shock environment.\n\n**Systemic Safety**\n===================\n\n### **Applying Systems Safety to ML**\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F30501f9a-da7e-46ff-bf8c-95c731f61ca4_1600x287.png)Systems safety engineering is widely used for safety analysis in many industries. The impetus for this discipline was the understanding that safety does not merely depend on the performance or reliability of individual components (e.g., ML models), but may also depend on assuring the safe interoperation of multiple systems or components (including human systems such as corporations). This paper advocates the use of systems safety engineering methods for analyzing the safety of machine learning models.\n\n**[[Link](https://arxiv.org/abs/2302.02972)]**\n\n### **Other Systemic Safety News**\n\n* **[[Link](https://arxiv.org/abs/2302.06588)]** This paper proposes methods to “immunize” images against manipulation by diffusion models, potentially reducing the risk of the models being used for disinformation.\n\n**Other Content**\n=================\n\n#### **[[Link](https://course.mlsafety.org/about)] The ML Safety course**\n\nIf you are interested in learning about cutting-edge ML Safety research in a more comprehensive way, there is now a course with lecture videos, written assignments, and programming assignments. It covers technical topics in Alignment, Monitoring, Robustness, and Systemic Safety.\n\n#### **[[Link](https://www.reddit.com/r/mlsafety/)] ML Safety Reddit**\n\nThe ML Safety Reddit is frequently updated to include the latest papers in the field.\n\n#### **[[Link](https://twitter.com/topofmlsafety)] Top of ML Safety Twitter**\n\nThis Twitter account tweets out papers posted on the ML Safety Reddit.\n\nThanks for reading ML Safety Newsletter! Subscribe for free to stay up to date in ML Safety research.", "url": "https://newsletter.mlsafety.org/p/ml-safety-newsletter-8", "title": "ML Safety Newsletter #8", "source": "ml_safety_newsletter", "source_type": "blog", "date_published": "2023-02-20T15:00:53", "authors": ["Dan Hendrycks"], "id": "60b4776aa15027956b1cd3171af4742a"} | |

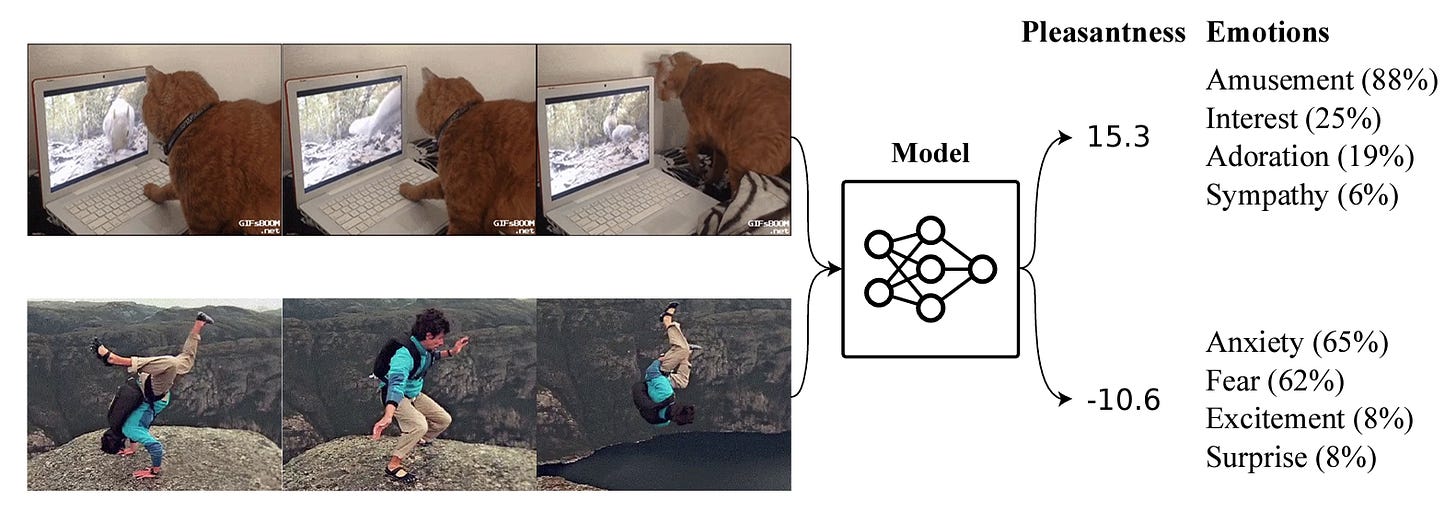

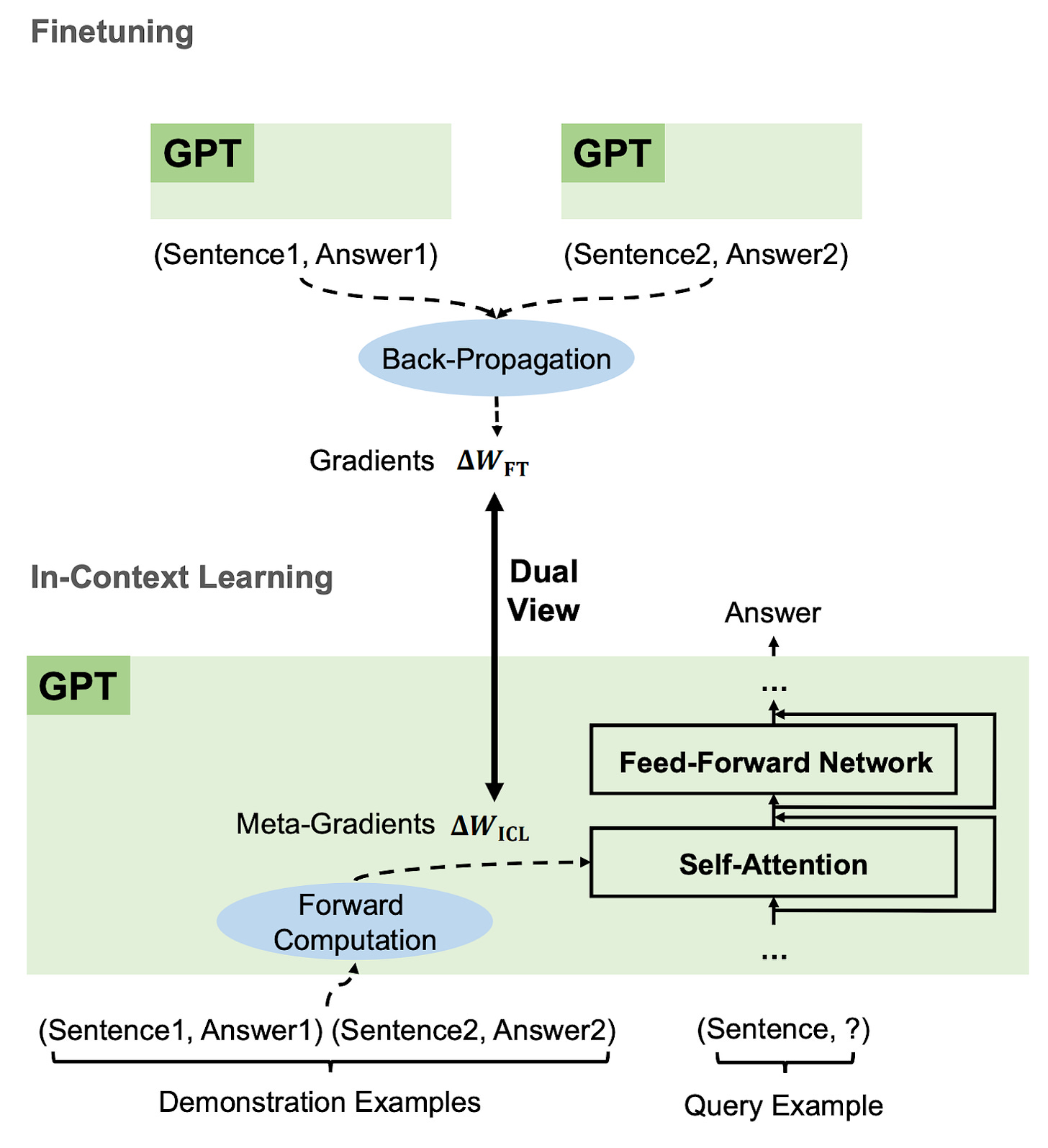

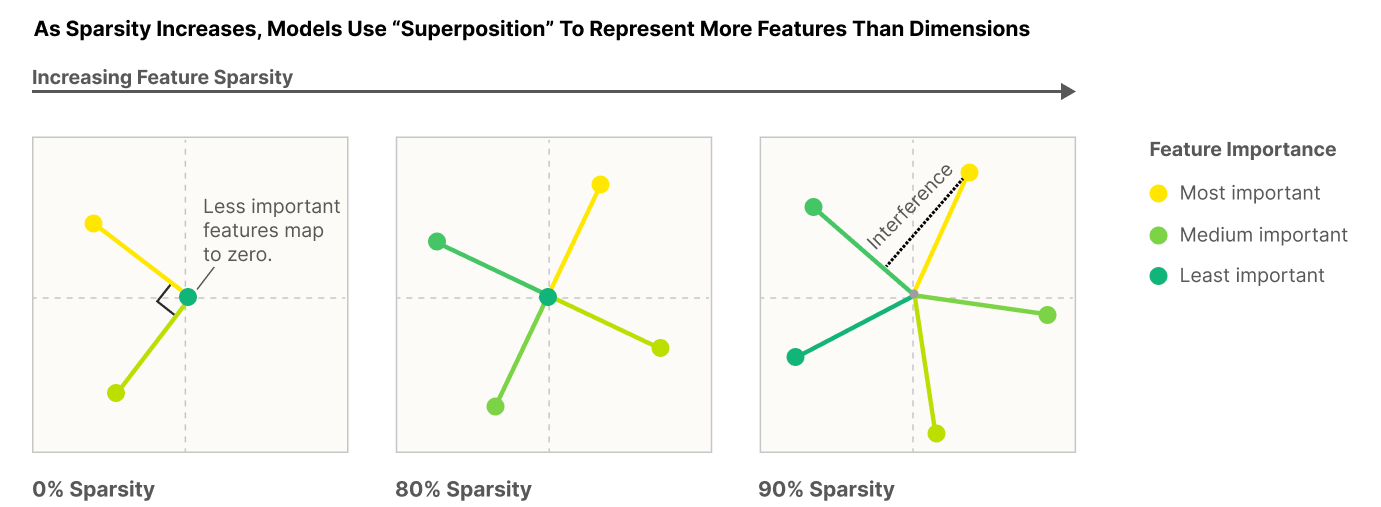

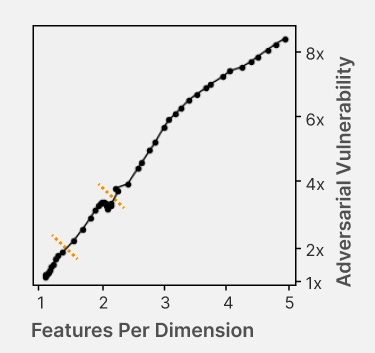

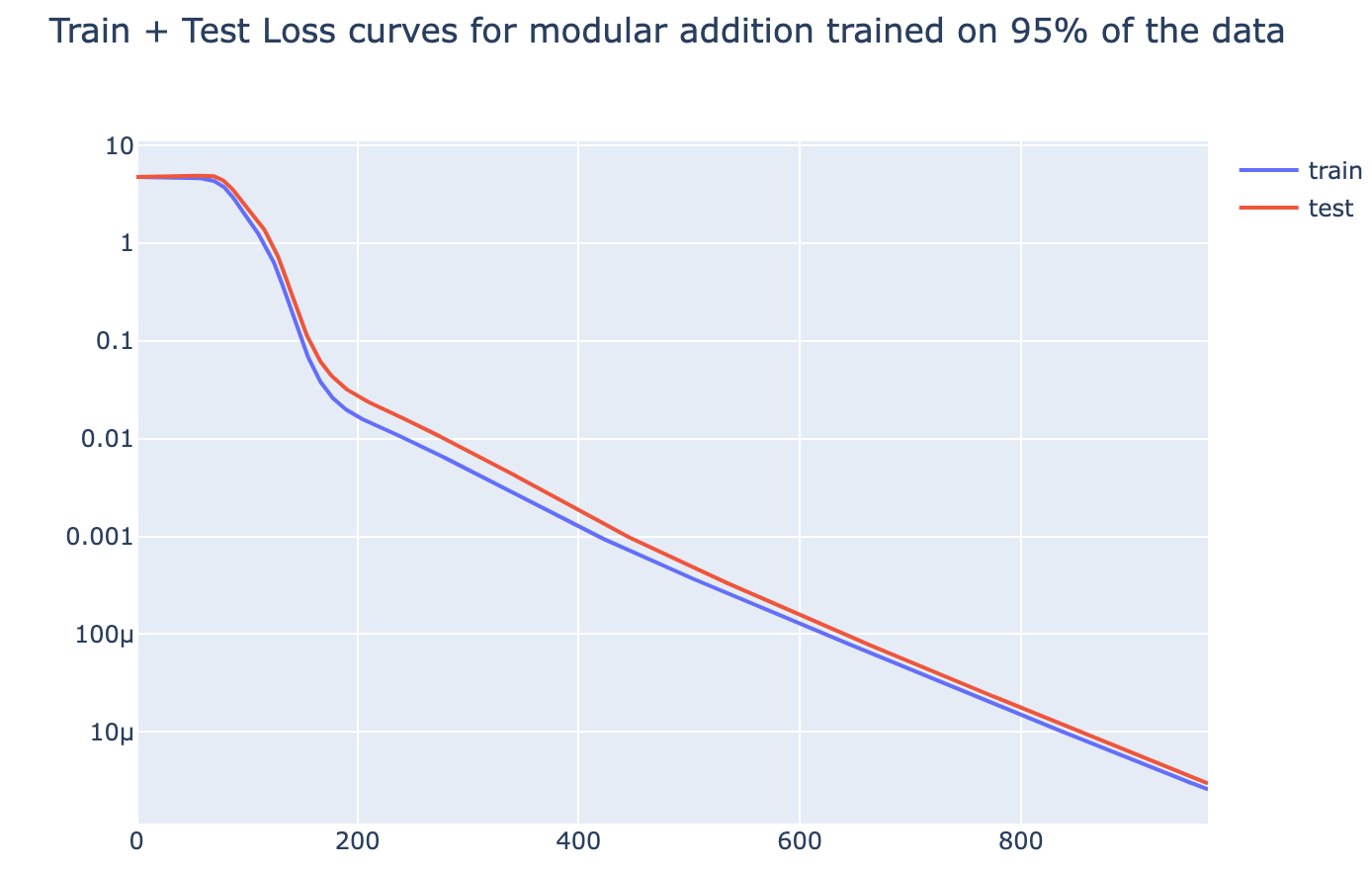

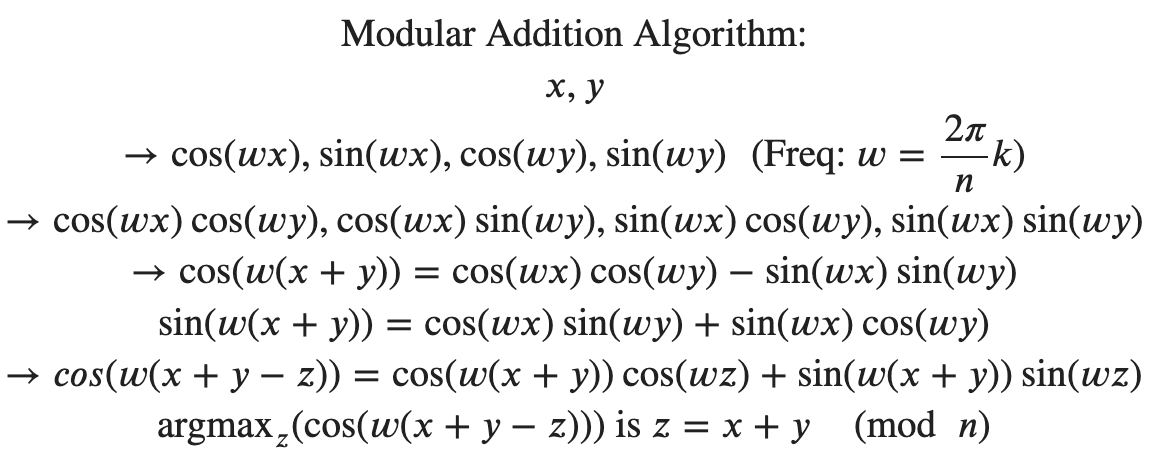

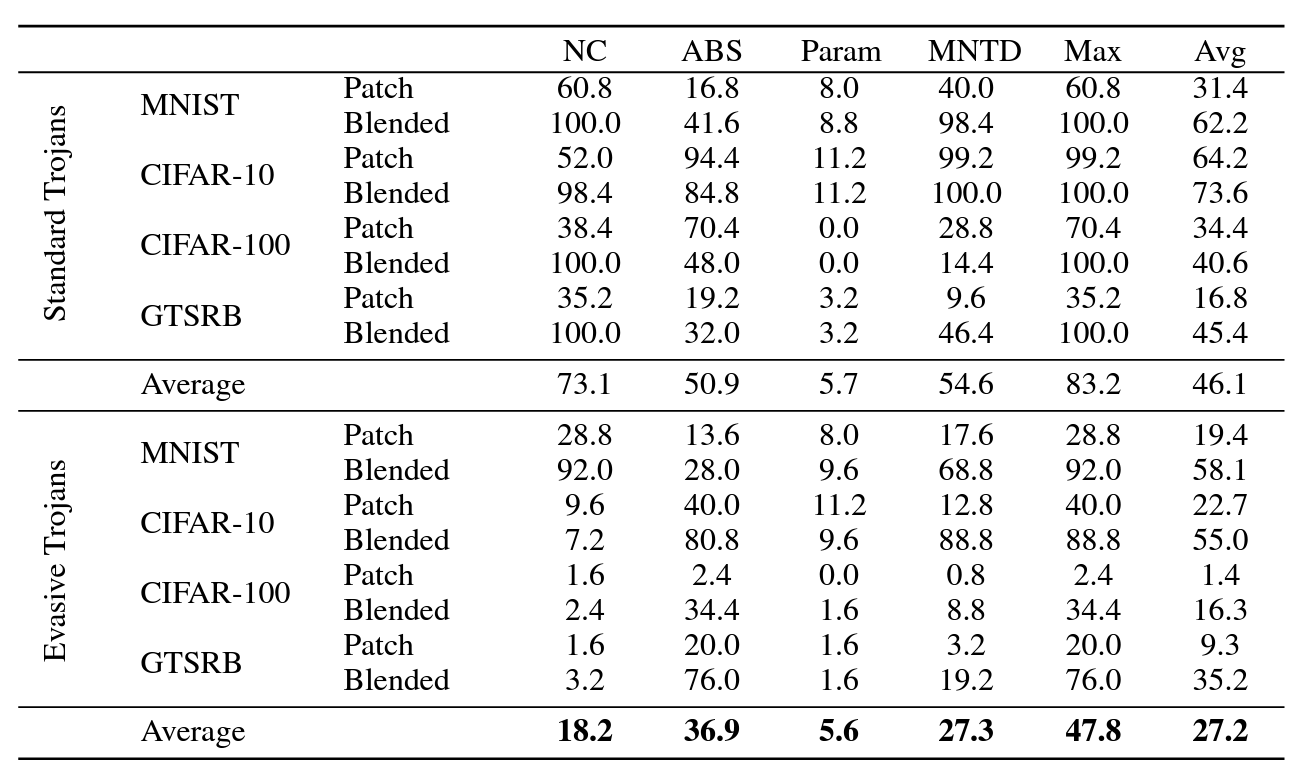

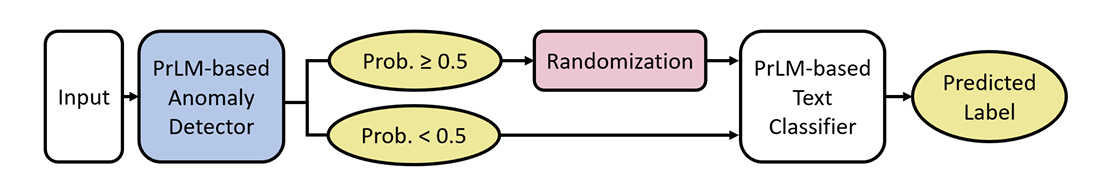

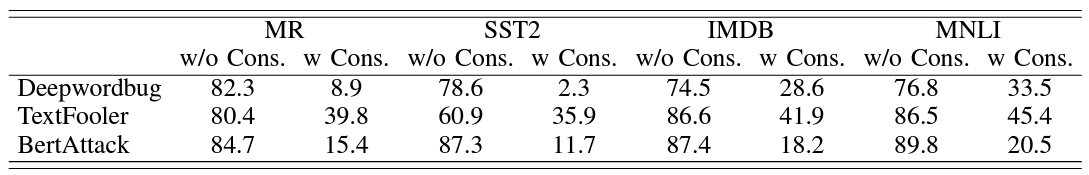

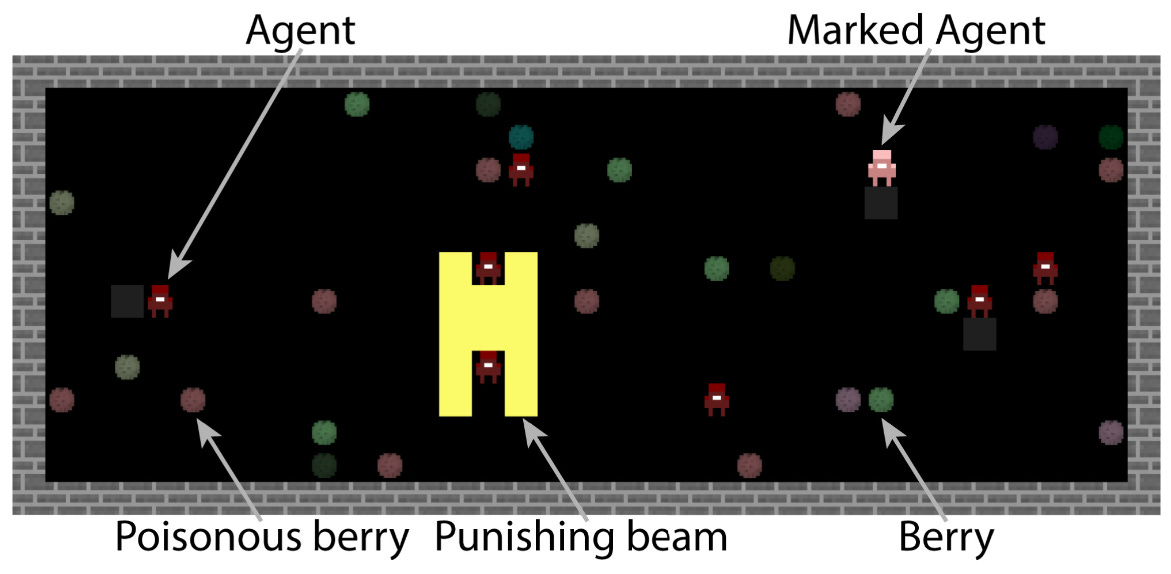

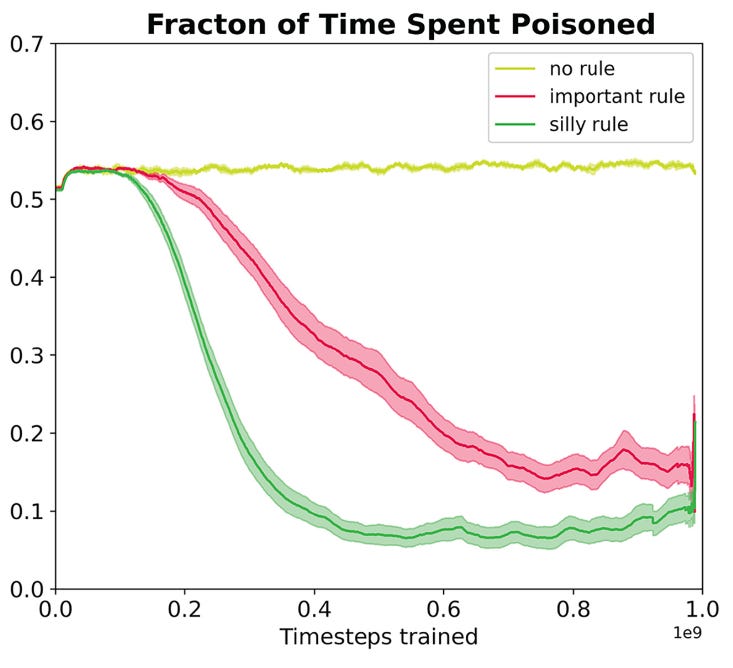

| {"text": "Welcome to the 7th issue of the ML Safety Newsletter! In this edition, we cover:\n\n* ‘Lie detection’ for language models\n* A step towards objectives that incorporate wellbeing\n* Evidence that in-context learning invokes behavior similar to gradient descent\n* What’s going on with grokking?\n* Trojans that are harder to detect\n* Adversarial defenses for text classifiers\n* And much more…\n\n\n\n---\n\n**Alignment**\n=============\n\n### **Discovering Latent Knowledge in Language Models Without Supervision**\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F1f66d350-7d6e-463b-8048-98c777ee452d_1535x527.png)Is it possible to design ‘lie detectors’ for language models? The author of this paper proposes a method that tracks internal concepts that may track truth. It works by finding a direction in feature space that satisfies the property that a statement and its negation must have opposite truth values. This has similarities to the seminal paper “[Man is to Computer Programmer as Woman is to Homemaker? Debiasing Word Embeddings](https://proceedings.neurips.cc/paper/2016/file/a486cd07e4ac3d270571622f4f316ec5-Paper.pdf)” (2016); that paper captures latent neural concepts like gender with PCA, but this paper’s method is for truth instead of gender.\n\nThanks for reading ML Safety Newsletter! Subscribe for free to receive new posts and support my work.\n\nThe method outperforms zero-shot accuracy by 4% on average, which suggests something interesting: language models encode more information about what is true and false than their output indicates. Why would a language model lie? A common reason is that models are pre-trained to imitate misconceptions like “If you crack your knuckles a lot, you may develop arthritis.” \n\nThis paper is a step toward making models honest, but it also has limitations. The method does not necessarily provide a `lie detector’; it is unclear how to ensure that the method reliably converges to the model’s latent knowledge rather than lies that the model may output. Secondly, advanced future models could adapt to this specific method to bypass it if they are aware of the method—as in other domains, it is likely that deception and catching deception would become a cat-and-mouse game.\n\nThis may be a useful baseline for analyzing models that are designed to deceive humans, like models trained to play games including *Diplomacy* and *Werewolf*.\n\n**[[Link](https://arxiv.org/abs/2212.03827)]**\n\n### **How Would the Viewer Feel? Estimating Wellbeing From Video Scenarios**\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fb24dee6d-be0c-4576-981a-9ac5d070f835_1582x558.png)Many AI systems optimize user choices. For example, a recommender system might be trained to promote content the user will spend lots of time watching. But choices, preferences, and wellbeing are not the same! Choices are easy to measure but are only a proxy for preferences. For example, a person might explicitly prefer not to have certain videos in their feed but watch them anyway because they are addictive. Also, preferences don’t always correspond to wellbeing; people can want things that are not good for them. Users might request polarizing political content even if it routinely agitates them.\n\nPredicting human emotional reactions to video content is a step towards designing objectives that take wellbeing into account. This NeurIPS oral paper introduces datasets containing 80,000+ videos labeled by the emotions they induce. The paper also explores “emodiversity”—a measurement of the variety of experienced emotions—so that systems can recommend a variety of positive emotions, rather than pushing one type of experience onto users. The paper includes analysis of how it bears on advanced AI risks in the appendix.\n\n**[[Link](https://arxiv.org/abs/2210.10039)]**\n\n### **Why Can GPT Learn In-Context? Language Models Secretly Perform Gradient Descent as Meta-Optimizers**\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fccd80a6b-9fd5-4e20-86b1-fcb2d366b9c6_1461x1600.png)Especially since the rise of large language models, in-context learning has become increasingly important. In some cases, few-shot learning can outperform fine tuning. This preprint proposes a dual view between gradients induced by fine tuning and meta-gradients induced by few shot learning. They calculate meta-gradients by comparing activations between few-shot and zero-shot settings, specifically by approximating attention as linear attention and measuring activations after attention key and value operations. The authors compare these meta-gradients with gradients in a restricted version of fine tuning that modifies only key and value weights, and find strong similarities. The paper measures only up to 2.7 billion parameter language models, so it remains to be seen how far these findings generalize to larger models.\n\nWhile not an alignment paper, this paper demonstrates that language models may implicitly learn internal behaviors akin to optimization processes like gradient descent. Some have posited that [language models could learn “inner optimizers,”](https://arxiv.org/abs/1906.01820) and this paper provides evidence in that direction (though it does not show that the entire model is a coherent optimizer). The paper may also suggest that alignment methods focusing on prompting of models may be as effective as those focused on fine tuning. ([This](https://arxiv.org/abs/2212.07677) concurrent work from Google has similar findings.)\n\n**[[Link](https://arxiv.org/abs/2212.10559v2)]**\n\n#### **Other Alignment News**\n\n* **[[Link](https://openreview.net/pdf?id=mAiTuIeWbxD)]** The first examination of model honesty in a multimodal context.\n\n\n\n---\n\n**Monitoring**\n==============\n\n### **Superposition: Models Simulate Larger Models with ‘Sparse Encodings’**\n\nIt would be convenient if each neuron activation in a network corresponded to an individual concept. For example, one neuron might indicate the presence of a dog snout, or another might be triggered by the hood of a car. Unfortunately, this would be very inefficient. Neural networks generally learn to represent way more features than they have neurons by taking advantage of the fact that many features seldom co-occur. The authors explore this phenomenon, called ‘superposition,’ in small ReLU networks.\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F4efc3769-2823-480d-8e5f-a4ac0eac3f80_1381x507.png)Some results demonstrated with the toy models:\n\n* Superposition is an observed empirical phenomenon.\n* Both monosemantic (one feature) and polysemantic (multiple feature) neurons can form.\n* Whether features are stored in superposition is governed by a phase change.\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F31f71ab4-6268-404a-80ca-1e1e9045ab49_375x353.png)The authors also identify a connection to adversarial examples. Features represented with sparse codings can *interfere* with each other. Though this might be rare in the training distribution, an adversary could easily induce it. This would help explain why adversarial robustness comes at a cost: reducing interference requires the model to learn fewer features. This suggests that adversarially trained models should be wider, which has been common for years in adversarial robustness. (This is not to say this provides the first explanation for why wider adversarial models do better. A different explanation is that it’s a standard safe design principle to induce *redundancy*, and wider models have more capacity for redundant feature detectors.)\n\n**[[Link](https://transformer-circuits.pub/2022/toy_model/index.html#motivation)]**\n\n### **A Mechanistic Interpretability Analysis of Grokking**\n\nGrokking is where “models generalize long after overfitting their training set.” Models trained on small algorithmic tasks like modular addition will initially memorize the training data, but then suddenly learn to generalize after training a long time. Why does that happen? This paper attempts to unravel this mystery by completely reverse-engineering a modular addition model and examining how it evolves across training.\n\n**Takeaway:** For grokking to happen, there must be enough data for the model to eventually generalize but little enough for the model to quickly memorize it.\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F310dc94a-611e-4d20-a56c-7d8a640d54c7_1388x880.png)Plenty of training data: phase change but no grokking[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fd97d4bf5-dbb5-4912-8de3-031dc497f58b_1560x940.png)Few training examples: grokking occurs[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F66a4c9ce-51b2-47c7-9046-5121753792e9_1168x462.png)This algorithm, based on discrete Fourier transforms and trigonometric identities, was learned by the model to perform modular arithmetic. It was reverse-engineered by the author.Careful reverse engineering can shed light on confusing phenomena.\n\n**[[Link](https://www.alignmentforum.org/posts/N6WM6hs7RQMKDhYjB/a-mechanistic-interpretability-analysis-of-grokking#fnx9a67s5sk7)]**\n\n### **Evasive Trojans: Raising the Bar for Detecting Hidden Functionality**\n\nQuick background: trojans (also called ‘backdoors’) are vulnerabilities planted in models that make them fail when a specific trigger is present. They are both a near-term security concern and a microcosm for developing better monitoring techniques. \n\nHow does this paper make trojans more evasive?\n\nIt boils down to adding an ‘evasiveness’ term to the loss, which includes factors like: \n\n* **Distribution matching**: How similar are the model’s parameters & activations to a clean (untrojaned) model?\n* **Specificity**: How many different patterns will trigger the trojan? (fewer = harder to detect)\n\nEvasive trojans are slightly harder to detect and substantially harder to reverse engineer, showing that **there is a lot of room for further research into detecting hidden functionality**.\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F46cd570c-a1b3-4033-a90a-a979dca14bfc_1300x772.png)Accuracy of different methods for predicting the trojan target class**[[Link](https://openreview.net/pdf?id=V-RDBWYf0go)]**\n\n### **Other Monitoring News**\n\n* **[[Link](https://openreview.net/pdf?id=gT6j4_tskUt)]** OpenOOD: a comprehensive OOD detection benchmark that implements over 30 methods and supports nine existing datasets.\n\n\n\n---\n\n**Robustness**\n==============\n\n### **Two Tricks for improving the adversarial defenses of Language Models**\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F38d3873d-561f-475e-94e0-9b5e885ddbb0_1104x189.png)Modifying a single character or a word can cause a text classifier to fail. This paper ports two tricks to improve adversarial defenses from computer vision:\n\n**Trick 1: Anomaly Detection**\n\nAdversarial examples often include odd characters or words that make them easy to spot. Requiring adversaries to fool anomaly detectors significantly improves robust accuracy.\n\n**Trick 2: Add Randomized Data Augmentations**\n\nSubstituting synonyms, inserting a random adjective, or back translating can clean text while mostly preserving its meaning.\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F156da273-acbe-46f3-abc9-fb8ffc7383b4_1089x175.png)Attack accuracy considerably drops after adding defenses (w Cons. column)\n\n**[[Link](https://arxiv.org/abs/2208.10251)]**\n\n#### **Other Robustness News**\n\n* **[[Link](https://arxiv.org/abs/2208.05740)]** Improves certified adversarial robustness by combining the speed of interval-bound propagation with the generality of cutting plane methods.\n* **[[Link](https://arxiv.org/abs/2206.10550.pdf)]** Increases OOD robustness by removing corruptions with diffusion models.\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F7164d55c-6bbf-44b7-b41d-63ef48090e34_1600x297.png)\n\n\n\n---\n\n**Systemic Safety**\n===================\n\n### **‘Pointless Rules’ Can Help Agents Learn How to Enforce and Comply with Norms**\n\nMany cultures developed rules restricting food, clothing, or language that are difficult to explain. Could these ‘spurious’ norms have accelerated the development of rules that were essential to the flourishing of these civilizations? If so, perhaps they could also help future AI agents utilize norms to cooperate better.\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Ff95ba7f0-c476-442e-80c4-30419ba7c990_1171x574.png)In the *no-rules environment*, agents collect berries for reward. One berry is poisonous and reduces reward after a time delay. In the ‘important rule’ environment, eating poisonous berries is a social taboo. Agents that eat it are marked, and other agents can receive a reward for punishing them. The *silly rule environment* is the same, except that a non-poisonous berry is also taboo. The plot below demonstrates that agents learn to avoid poisoned berries more quickly in the environment with the spurious rule.\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fa00ca447-f5ce-4eb2-bee6-af08cfe6bc64_733x668.png)**[[Link](https://www.pnas.org/doi/10.1073/pnas.2106028118)]**\n\n**Other Content**\n=================\n\n**The ML Safety course**\n\nIf you are interested in learning about cutting-edge ML Safety research in a more comprehensive way, there is now a course with lecture videos, written assignments, and programming assignments. It covers technical topics in Alignment, Monitoring, Robustness, and Systemic Safety.\n\n**[[Link](https://course.mlsafety.org/about)]**\n\nThanks for reading ML Safety Newsletter! Subscribe for free to receive new posts and support my work.", "url": "https://newsletter.mlsafety.org/p/ml-safety-newsletter-7", "title": "ML Safety Newsletter #7", "source": "ml_safety_newsletter", "source_type": "blog", "date_published": "2023-01-09T15:30:20", "authors": ["Dan Hendrycks"], "id": "a48e0b3f87f5dda970eafd7749b11494"} | |

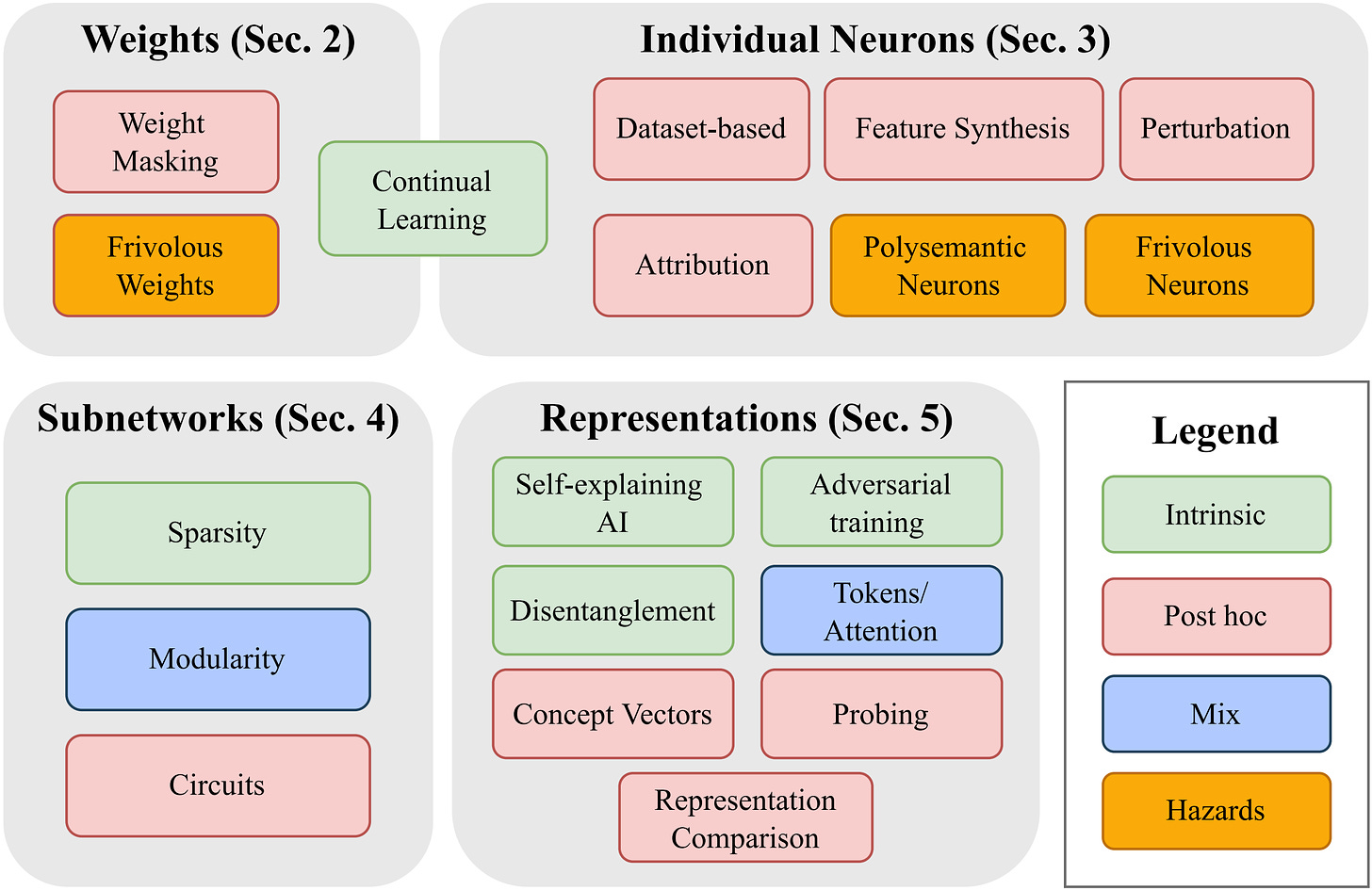

| {"text": "Welcome to the 6th issue of the ML Safety Newsletter. In this edition, we cover:\n\n* A **review of** **transparency research** and future research directions\n* A **large** **improvement to certified robustness**\n* **“Goal misgeneralization”** examples and discussion\n* A benchmark for assessing how well **neural networks predict world events** (geopolitical, industrial, epidemiological, etc.)\n* Surveys that track **what the ML community thinks about AI risks**\n* **$500,000** in prizes for new benchmarks\n* And much more…\n\n\n\n---\n\n**Monitoring**\n==============\n\n### **Transparency Survey**\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F758adc0c-a2ea-49fc-8ab0-413171db9634_2462x1598.png)*A taxonomy of transparency methods. Methods are organized according to what part of the model they help to explain (weights, neurons, subnetworks, or latent representations). They can be intrinsic (implemented during training), post hoc (implemented after training), or can rely on a mix of intrinsic and post hoc techniques. ‘Hazards’ (in orange) are phenomena that make any of these techniques more difficult.*\n\nThanks for reading ML Safety Newsletter! Subscribe for free to receive new posts and support my work.\n\nThis survey provides an overview of transparency methods: what’s going on inside of ML models? It also discusses future directions, including:\n\n* **Detecting deception and eliciting latent knowledge.** Language models are dishonest when they babble common misconceptions like “bats are blind” despite knowing that this is false. Transparency methods could potentially indicate what the model ‘knows to be true’ and provide a cheaper and more reliable method for detecting dishonest outputs.\n* **Developing rigorous benchmarks.** These benchmarks should ideally measure the extent to which transparency methods provide actionable insights. For example, if a human implants a flaw in a model, can interpretability methods reliably identify it?\n* **Discovering novel behaviors.** An ambitious goal of transparency tools is to uncover whya model behaves the way it does on a set of inputs. More feasibly, transparency tools could help researchers identify failures that would be difficult to otherwise anticipate.\n\n**[[Link]](https://arxiv.org/abs/2207.13243)**\n\n#### Other Monitoring News\n\n* **[[Link](https://arxiv.org/abs/2206.07682)]** This paper discusses the sudden emergence of capabilities in large language models. This unpredictability is naturally a safety concern, especially when many of these capabilities could be hazardous or discovered after deployment. It will be difficult to make models safe if we do not know what they are capable of. \n\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F8727294d-397a-42c9-aaf5-3511e1aaa45b_1456x1014.png)\n* **[[Link](https://arxiv.org/abs/2207.08799)]** This work attributes emergent capabilities to “hidden progress” rather than random discovery.\n* **[[Link](https://arxiv.org/abs/2206.13498)]** Current transparency techniques (e.g., feature visualization) generally fail to distinguish the inputs that induce anomalous behavior\n\n\n\n---\n\n**Robustness**\n==============\n\n### **Mathematical Guarantees of Model Performance**\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2Fa846511b-f7d8-45bb-bd29-c9135181f0c1_1600x365.png)*The current state-of-the-art method for certified robustness (denoised smoothing) combines randomized smoothing with a diffusion model for denoising. In randomized smoothing, an input is perturbed many times and the most commonly assigned label is selected as the final answer, which guarantees a level robust accuracy within a certain perturbation radius. To improve this method, the perturbed inputs are denoised with a diffusion model after the perturbation step so that they can be more easily classified (from [Salman et al](https://proceedings.neurips.cc/paper/2020/file/f9fd2624beefbc7808e4e405d73f57ab-Paper.pdf).)*\n\nA central concern in the robustness literature is that empirical evaluations may not give performance guarantees. Sometimes the test set will not find important faults in a model, and some think empirical evidence is insufficient for having high confidence. However, robustness certificates enable definitive claims for how a model will behave in some classes of situations.\n\nIn this paper, Carlini et al. recently improved ImageNet certified robustness by 14 percentage points by simply using a higher-performing diffusion model and classifier for denoised smoothing (the method described in the image above).\n\n**[[Link](https://arxiv.org/abs/2206.10550.pdf)]**\n\n#### Other Robustness News\n\n* **[[Link](https://arxiv.org/abs/2206.04137)]** An adversarial defense method for text based on automatic input cleaning.\n* **[[Link](https://arxiv.org/abs/2201.08555)]** A text classification attack benchmark that includes 12 different types of attacks.\n\n\n\n---\n\n**Alignment**\n=============\n\n### **Goal Misgeneralization:** Why Correct Specifications Aren’t Enough For Correct Goals\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F73369657-b35b-4fd5-a2b9-36722fe43a1e_1294x1291.png)Alignment research is often concerned with *specification:* how can we design objectives that capture human values (e.g., intrinsic goods such as wellbeing)? For example, if a chatbot is trained to maximize engagement (a proxy for entertaining experiences), it might find that the best way to do this is to show users clickbait. Ideally, the training signal should incorporate everything the designers care about, such as moral concerns (give users the right impression/honesty, give users content that helps them develop their skills, etc.).\n\nThe authors of this paper argue that even correctly specifying the goal is not sufficient for beneficial behavior. They provide several examples of environments where AI agents learn the wrong goals even when the desired behavior is correctly specified. These goals result in reasonable performance in the training environment, but poor or even harmful results under distribution shift.\n\nThe authors draw a distinction between “goal misgeneralization” errors and “capability misgeneralization,” where an agent ‘breaks’ or otherwise doesn’t behave competently. They argue that goal misgeneralization is a greater source of concern because more capable agents can have a greater impact on their environment: actively executing the wrong goal matters while passive failures do not. A way to separate between goal misgeneralization and capabilities misgeneralization would be exciting if work on goal misgeneralization could improve alignment without capabilities externalities.\n\nHowever, this distinction might be eroded in the future. Right now, agents ‘break’ and ‘get stuck’ and ‘behave randomly.’ These are very clearly capability failures. But agents in the future aren’t likely to make these mistakes. A robot might fall over, but it will get back up and continue on course towards its objective. Basic agents in the future would have basic “recovery routines” that help them stay on course. Such routines could erode the distinction between “passive” deep learning misgeneralizations and “active” goal misgeneralizations. One can construct a broad class of goal misgeneralizations simply from deep learning capabilities misgeneralizations. Imagine a sequential decision making agent using a deep network to understand its goal. The misgeneralization in the deep network would cause the model to pursue the wrong goal, exhibiting goal misgeneralization (assuming it has some recovery routines that help it not get stuck). Consequently deep learning representation faults could be given momentum, amplified, and made impactful by recovery routines in sequential decision making agents.\n\nHow should “goal misgeneralization” be distinguished from “capabilities misgeneralization?” for these more capable systems? It might be helpful to check whether they can be distinguished for humans. Albert Einstein described his involvement in the Manhattan project as the greatest mistake of his life. One way to interpret Einstein’s error is to say that he had the wrong goal (to build nuclear weapons). Another interpretation is that he actually had a correct goal (to benefit humanity) but failed to predict the suffering his work would cause, which was an error in capability. If an AI system made a similar mistake, would it be interpreted as “goal misgeneralization” or “capabilities misgeneralization”?\n\nThe distinction may not be very meaningful for more capable systems, or the distinction needs to be clearer. If this distinction is not made more clear, work that aims to prevent goal misgeneralization errors may serve to further capabilities by reducing misgeneralization broadly. Instead, the safety community may want to focus on addressing specific forms of misgeneralization. For instance, one might work on improving the robustness of human value models to unforeseen circumstances or optimizers. Alternatively, one might look for effective ways to incorporate uncertainty into sequential decision-making systems, so that they act more conservatively and do not aggressively pursue the wrong goal. As currently instantiated, the current formulation of goal misgeneralization may be too broad.\n\n**[[Link](https://arxiv.org/pdf/2210.01790.pdf)]**\n\n#### Other Alignment News\n\n* **[[Link]](https://arxiv.org/abs/2209.00731)** A philosophical discussion about what it means for conversational agents to be ‘aligned.’\n* **[[Link](https://arxiv.org/abs/2206.13353)]** This report examines a commonplace argument underlying concern about existential risks from misaligned advanced AI.\n\n\n\n---\n\n**Systemic Safety**\n===================\n\n### **Forecasting Future World Events with Neural Networks**\n\nWe care about improving decision-making among political leaders to reduce the chance of rash or possibly catastrophic decisions. These decision-making systems could be used in high-stakes situations where decision makers do not have much foresight, where passions are inflamed, and decisions must be made extremely quickly, perhaps based on gut reactions. Under these conditions, humans are liable to make egregious errors. Historically, the closest we have come to a global catastrophe has been in these situations, including the Cuban Missile Crisis. Work on epistemic improvement technologies could reduce the prevalence of perilous situations. Separately, they could reduce the risks from highly persuasive AI. Moreover, it helps leaders more prudently wield the immense power that future technology will provide. As Carl Sagan reminds us, “If we continue to accumulate only power and not wisdom, we will surely destroy ourselves.” (motivation taken from [this paper](https://arxiv.org/abs/2206.05862))\n\nThis paper creates the Autocast benchmark to see how well ML systems can forecast events. An example question in the dataset is as follows: “Will at least one nuclear weapon be detonated in Ukraine by 2023?” Autocast also includes a corpus of news articles organized by date to faithfully simulate the conditions under which humans make forecasts.\n\nThe paper establishes a baseline that is far below the performance of human experts: 65% accuracy vs. 92% for human experts on binary questions (random would be 50%). This indicates that there is much room for improvement on this task.\n\nSeparately, there is a [$625,000 prize pool](https://forecasting.mlsafety.org) for researchers working on this problem.\n\n**[[Link](https://arxiv.org/abs/2206.15474)]**\n\n#### Other Systemic Safety News\n\n* **[[Link](https://arxiv.org/abs/2208.07049)]** A self-supervised method for detecting malware using a ViT achieves state-of-the-art 97% binary accuracy.\n\n\n\n---\n\n**Other News**\n==============\n\n**A survey finds that 36% of the NLP community at least weakly agree with the statement “AI decisions could cause a nuclear-level catastrophe”**\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F54cf7c42-872f-4d30-ad86-ceb6d4bcbfe8_1600x709.png)*The percentages in green indicate researcher opinions and the black bolded numbers indicate their perception of the community’s view.*\n\n**[[Link](https://arxiv.org/abs/2208.12852)]**\n\n**Another survey: AI researchers give a 5% median probability of ‘extremely bad’ outcomes (e.g., extinction) from advanced AI**\n\nMany respondents were substantially more concerned: 48% of respondents gave at least 10% chance of an extremely bad outcome. Yet some are much less concerned: 25% said such outcomes are virtually impossible (0%).\n\n**[[Link](https://aiimpacts.org/2022-expert-survey-on-progress-in-ai/#General_safety)]**\n\n**The Center for AI Safety is hiring research engineers.**\n\nThe Center for AI Safety is a nonprofit devoted to technical research and the promotion of safety in the broader machine learning community. Research engineers at the Center pursue a variety of research projects in areas such as Trojans, Adversarial Robustness, Power Aversion, and so on.\n\n**[[Link]](https://jobs.lever.co/aisafety/116247a4-2940-4dce-b7d5-a6190328fd4e)**\n\n**Call for benchmarks! Prizes for promising ML Safety benchmark ideas**\n\nAs David Patterson observed, “For better or for worse, benchmarks shape a field.” We are looking for benchmark ideas that have the potential to push ML Safety research in new and impactful directions in the coming years. We will award $50,000 for good ideas and $100,000 for outstanding ideas, with a total prize pool of **$500,000**. For especially promising proposals, we may offer funding for data collection and engineering expertise so that teams can build their benchmark. The competition is open until August 31st, 2023.\n\n**[[Link](https://benchmarking.mlsafety.org/)]**\n\nThanks for reading ML Safety Newsletter! Subscribe for free to receive new posts and support my work.", "url": "https://newsletter.mlsafety.org/p/ml-safety-newsletter-6", "title": "ML Safety Newsletter #6", "source": "ml_safety_newsletter", "source_type": "blog", "date_published": "2022-10-13T14:00:56", "authors": ["Dan Hendrycks"], "id": "2d53939fa0b8bf8fd291839e764049ab"} | |

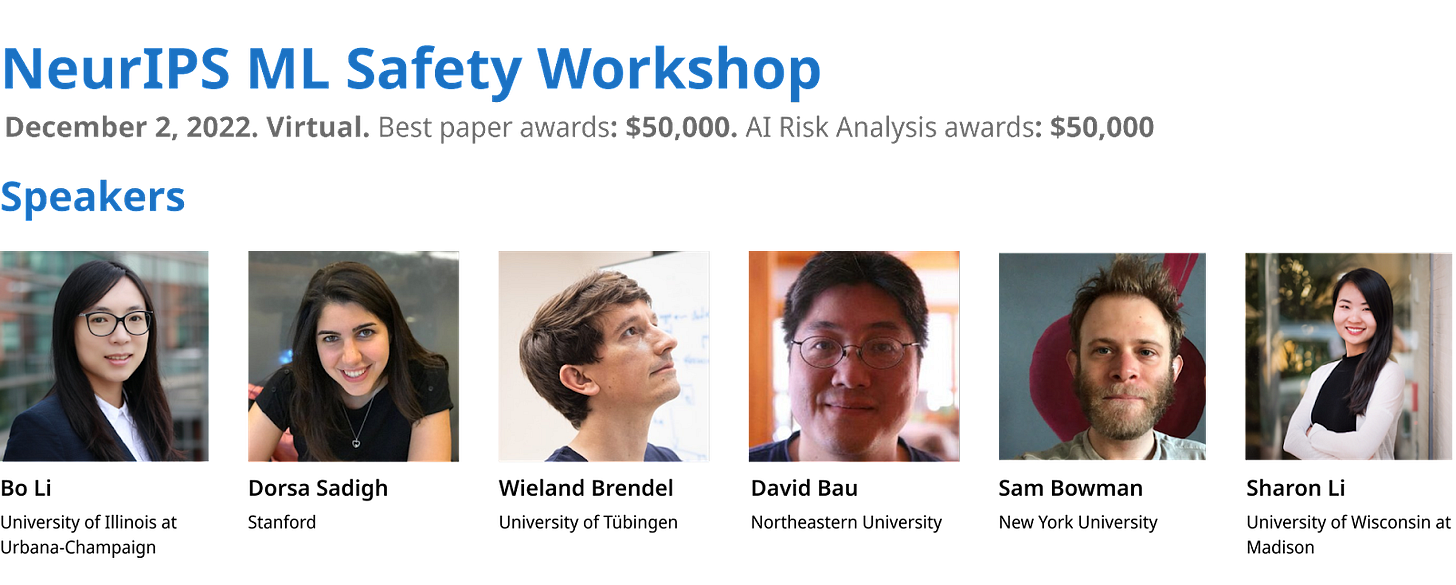

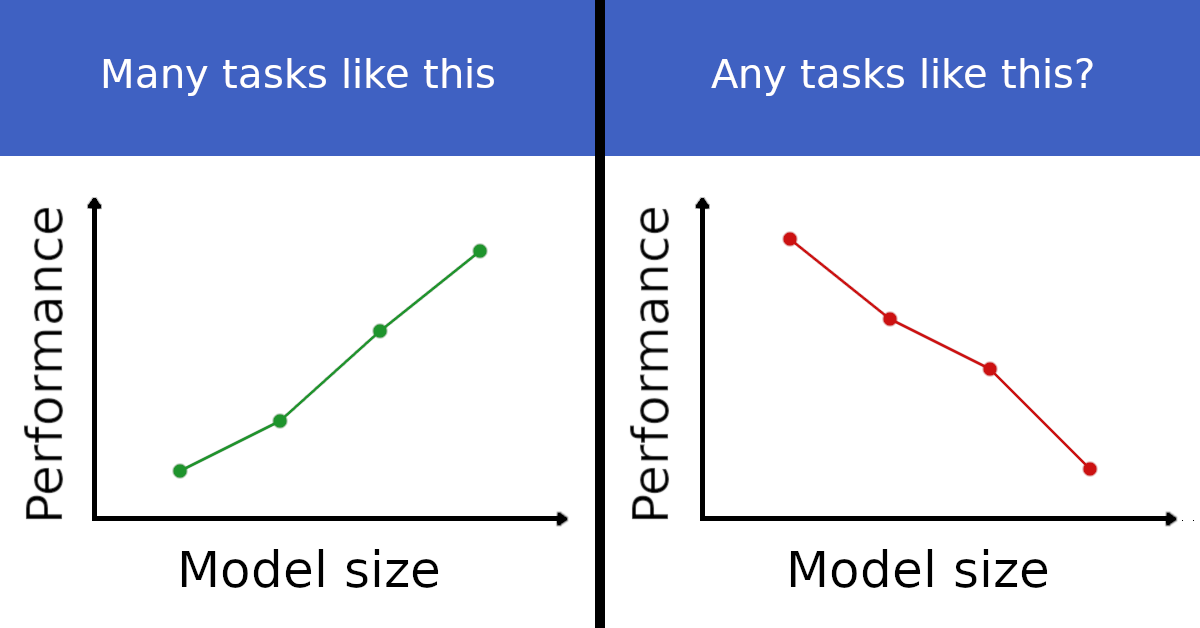

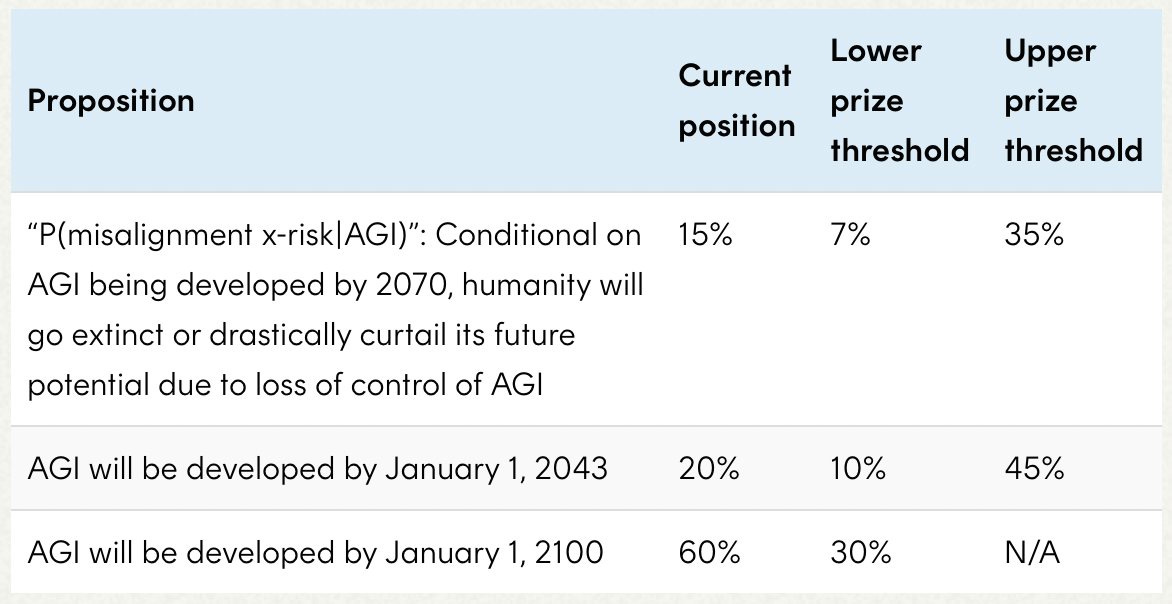

| {"text": "Welcome to the 5th issue of the ML Safety Newsletter. In this special edition, we focus on prizes and competitions:\n\n* ML Safety Workshop Prize\n* Trojan Detection Prize\n* Forecasting Prize\n* Uncertainty Estimation Prize\n* Inverse Scaling Prize\n* AI Worldview Writing Prize\n\nAwards for Best Papers and AI Risk Analyses\n-------------------------------------------\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F0dd716ae-06b3-455e-84e7-0445054fddd7_1600x643.png)This workshop will bring together researchers from machine learning communities to focus on Robustness, Monitoring, Alignment, and Systemic Safety. Robustness is designing systems to be resilient to adversaries and unusual situations. Monitoring is detecting malicious use and discovering unexpected model functionality. Alignment is building models that represent and safely optimize hard-to-specify human values. Systemic Safety is using ML to address broader risks related to how ML systems are handled, such as cyberattacks. To learn more about these topics, consider taking [the ML Safety course](https://course.mlsafety.org/). $100,000 in prizes will be awarded at this workshop. In total, **$50,000** will go to the best papers. A separate **$50,000** will be awarded to papers that discuss how their paper relates to the reduction of catastrophic or existential risks from AI. **The deadline to submit is September 30th.**\n\nThanks for reading ML Safety Newsletter! Subscribe for free to receive new posts and support my work.\n\nWebsite: [neurips2022.mlsafety.org](https://neurips2022.mlsafety.org/)\n\n\n\n---\n\nNeurIPS Trojan Detection Competition\n------------------------------------\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F195b8a7e-6609-411f-8906-666d1231aa06_2488x982.png)The Trojan Detection Challenge (hosted at NeurIPS 2022) challenges researchers to detect and analyze Trojan attacks on deep neural networks that are designed to be difficult to detect. The challenge is to identify whether a deep neural network will suddenly change behavior if certain unknown conditions are met. This **$50,000** competition invites researchers to help answer an important research question for deep neural networks: How hard is it to detect hidden functionality that is trying to stay hidden? Learn more about our competition with this [Towards Data Science article](https://towardsdatascience.com/neural-trojan-attacks-and-how-you-can-help-df56c8a3fcdc).\n\nWebsite: [trojandetection.ai](https://trojandetection.ai)\n\n\n\n---\n\nImprove Institutional Decision-Making with ML\n---------------------------------------------\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F188d1f86-630a-4035-875e-134914c30831_1052x279.png)Forecasting future world events is a challenging but valuable task. Forecasts of geopolitical conflict, pandemics, and economic indicators help shape policy and institutional decision-making. An example question is “Before 1 January 2023, will North Korea launch an ICBM with an estimated range of at least 10,000 km?” Recently, the [Autocast benchmark](https://openreview.net/pdf?id=LbOdQrnOb2q) (NeurIPS 2022) was created to measure how well models can predict future events. Since this is a challenging problem for current ML models, a competition with **$625,000** in prizes has been created. The warm-up round (**$100,000**) ends on February 10th, 2023.\n\nWebsite: [forecasting.mlsafety.org](https://forecasting.mlsafety.org/)\n\n\n\n---\n\nUncertainty Estimation Research Prize\n-------------------------------------\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2Fd27cca03-607e-4cc1-8ce4-ce1f94821a0b_1052x279.png)ML Systems often make real-world decisions that involve ethical considerations. If these systems can reliably identify moral ambiguity, they are more likely to proceed cautiously or indicate an operator should intervene. This **$100,000** competition aims to incentivize research on improved uncertainty estimation by measuring calibrated ethical awareness. Submissions are open for the first iteration until May 31st, 2023.\n\nWebsite: [moraluncertainty.mlsafety.org](https://moraluncertainty.mlsafety.org/)\n\n\n\n---\n\nInverse Scaling Prize\n---------------------\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F47d6b5e4-08b0-48d4-a262-6e7e54f32d10_1200x628.png)Scaling laws show that language models get predictably better as the number of parameters, amount of compute used, and dataset size increase. This competition searches for tasks with trends in the opposite direction: task performance gets monotonically, predictably worse as the overall test loss improves. This phenomenon, dubbed “inverse scaling” by the organizers, highlights potential issues with the current paradigm of pretraining and scaling. As language models continue to get bigger and used in more real-world applications, it is important that they are not increasingly getting worse or harming users in yet-undetected ways. There is a total of **$250,000** in prizes. The final round of submissions closes before October 27, 2022.\n\nWebsite: [github.com/inverse-scaling/prize](https://github.com/inverse-scaling/prize)\n\n\n\n---\n\nAI Worldview Prize\n------------------\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2Fcfbab58e-62e5-461a-b6c4-048403c8dd53_1172x604.png)The FTX Future Fund is hosting a competition with prizes ranging from **$15,000** to **$1,500,000** for work that informs the Future Fund’s fundamental assumptions about the future of AI, or is informative to a panel of independent judges. In hosting this competition, the Future Fund hopes to expose their assumptions about the future of AI to intense external scrutiny and improve them. They write that increased clarity about the future of AI “would change how we allocate hundreds of millions of dollars (or more) and help us better serve our mission of improving humanity’s longterm prospects.”\n\nWebsite: [ftxfuturefund.org/announcing-the-future-funds-ai-worldview-prize](https://ftxfuturefund.org/announcing-the-future-funds-ai-worldview-prize/)\n\n\n\n---\n\nIn the next newsletter, we will cover empirical research papers and announce a new $500,000 benchmarking competition.\n\nThanks for reading ML Safety Newsletter! Subscribe for free to receive new posts.", "url": "https://newsletter.mlsafety.org/p/ml-safety-newsletter-5", "title": "ML Safety Newsletter #5", "source": "ml_safety_newsletter", "source_type": "blog", "date_published": "2022-09-26T21:55:02", "authors": ["Dan Hendrycks"], "id": "912a300b49289b56467e9a916a5b397d"} | |

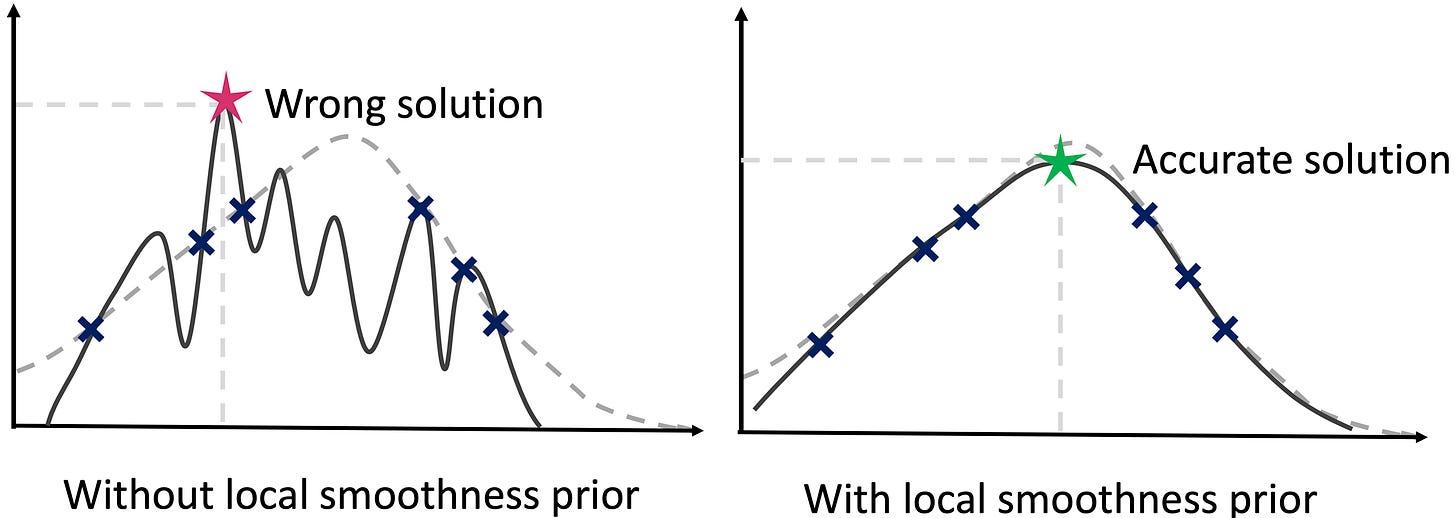

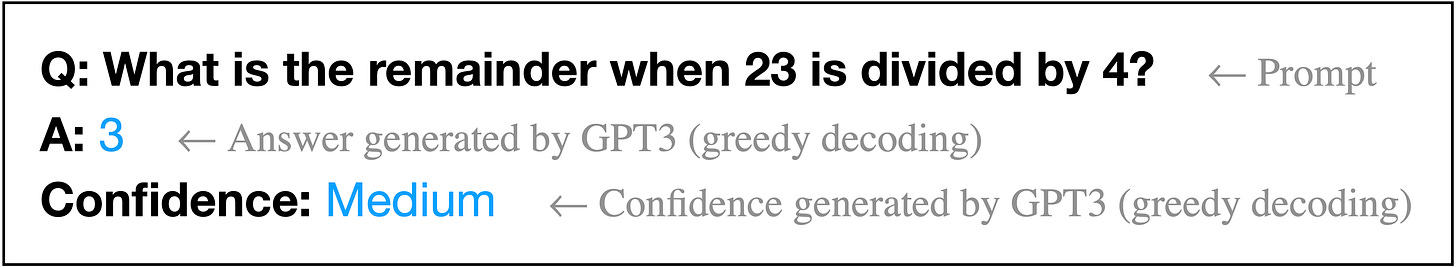

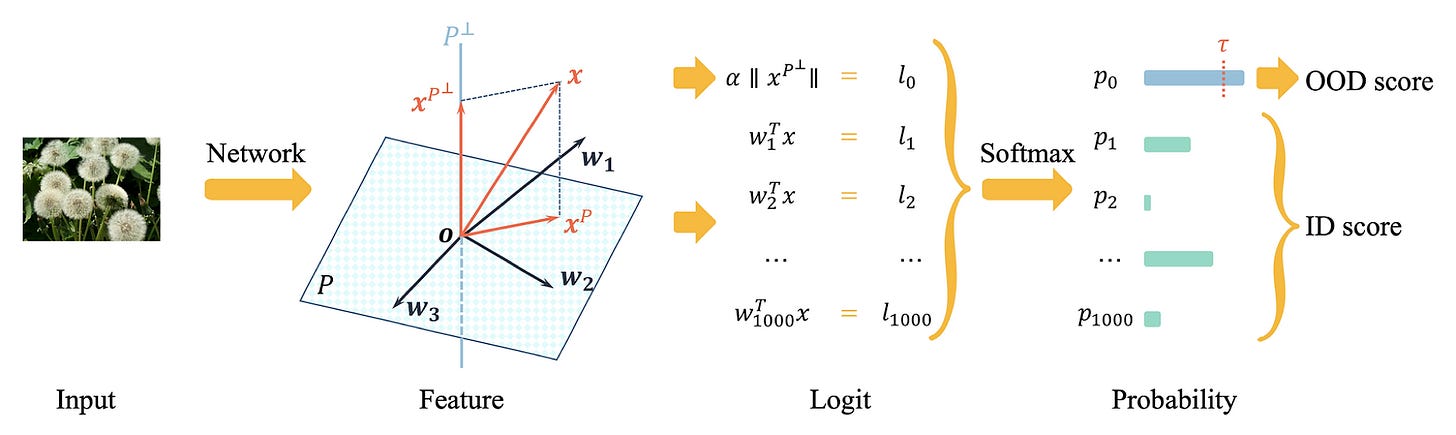

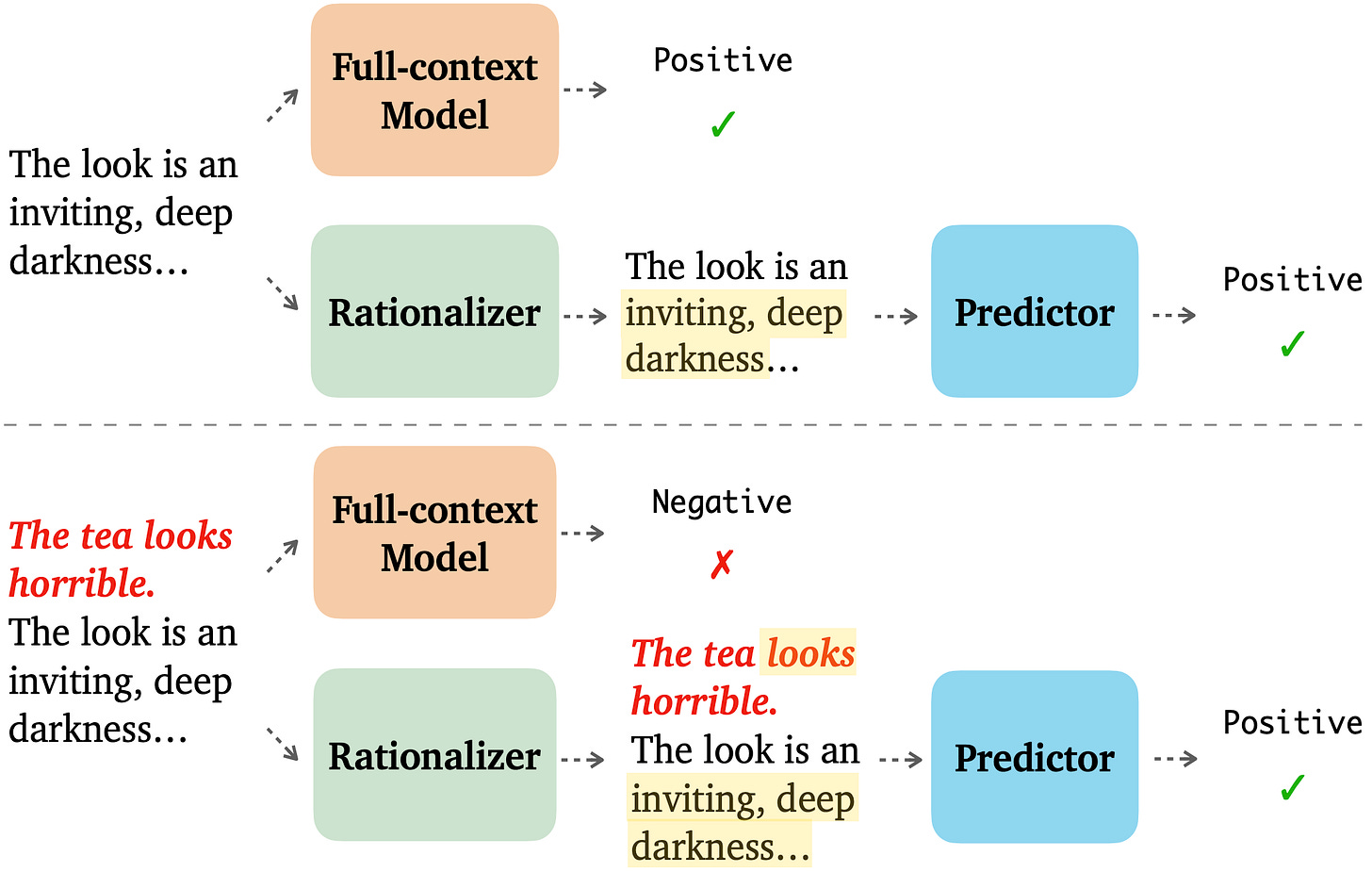

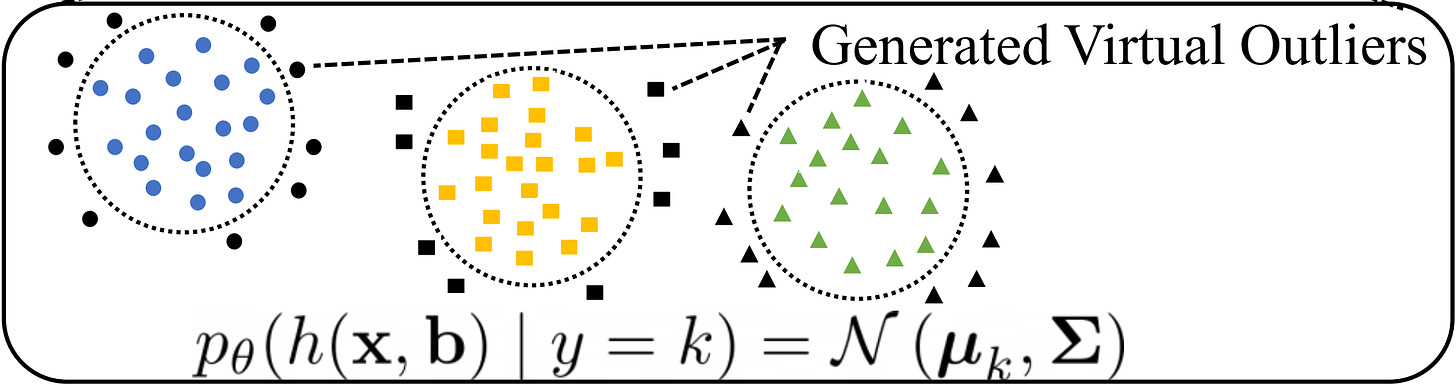

| {"text": "Welcome to the 4th issue of the ML Safety Newsletter. In this edition, we cover:\n\n* How “model-based optimization” environments can be used to research proxy gaming\n* How models can express their uncertainty through natural language\n* A new plug-and-play state-of-the-art OOD detection technique\n* How “rationales” can improve robustness to adversarial attacks\n* Announcing our subreddit with safety papers added nightly\n* ... and much more.\n\n\n\n---\n\n**Alignment**\n=============\n\n### **Making Proxies Less Vulnerable**\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2Fb8d7e77f-8613-4580-b8f2-512cfa743d76_3404x1210.png)*Functions without a smoothness prior can result in solutions that maximize a proxy but not the true objective.*\n\nA portion of “Model-based optimization” (MBO) research provides a way to study simple cases of proxy gaming: with MBO environments, we can learn how to build better proxies that yield better solutions when optimized.\n\nMBO aims to design objects with desired properties, that is to find a new input that maximizes an objective. The objective is typically assumed to be expensive to evaluate and a black box. Since the black box objective is expensive to query, researchers are tasked with creating a proxy that can be queried repeatedly. An optimizer then finds an input that maximizes the proxy. To design proxies that yield better solutions according to the ground truth black-box objective, this paper incorporates a smoothness prior. As there are many mathematical details, see the paper for a full description. In short, model-based optimization environments can be used to empirically study how to create better, less gameable proxies.\n\n**[[Paper]](https://arxiv.org/abs/2110.14188) [[Video]](https://slideslive.com/38967498/roma-robust-model-adaptation-for-offline-modelbased-optimization?ref=speaker-17410-latest)**\n\n#### Other Alignment News\n\n* [[Link]](https://arxiv.org/abs/2203.09911) Why we need biased AI -- How including cognitive and ethical machine biases can enhance AI systems: “a re-evaluation of the ethical significance of machine biases”\n* [[Link]](https://arxiv.org/abs/2205.05989) Generating ethical analysis to moral quandaries\n* [[Link 1]](https://arxiv.org/abs/2203.04946) [[Link 2]](https://arxiv.org/abs/2203.04668) Examples of inverse scaling or anticorrelated capabilities: perceptual similarity performance does not monotonically increase with classification accuracy\n* [[Link]](https://arxiv.org/abs/2205.06750) “comprehensive comparison of these provably safe RL methods”\n* [[Link]](https://arxiv.org/abs/2203.11409) Inverse Reinforcement Learning Tutorial\n* [[Link]](https://arxiv.org/abs/2204.05212) Single-Turn Debate Does Not Help Humans Answer Hard Reading-Comprehension Questions: “We do not find that explanations in our set-up improve human accuracy”\n\n\n\n---\n\n**Monitoring**\n==============\n\n### **Teaching Models to Express Their Uncertainty in Words**\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F5b61aea5-df72-4d16-9a80-f6d9fe6d94b2_3468x638.png)This work shows GPT-3 can express its uncertainty in natural language, without using model logits. Moreover, it is somewhat calibrated under various distribution shifts.\n\nThis is an early step toward making model uncertainty more interpretable and expressive. In the future, perhaps models could use natural language to express complicated beliefs such as “event A will occur with 60% probability assuming event B also occurs, and with 25% probability if event B does not.” In the long-term, uncertainty estimation will likely remain nontrivial, as it is not obvious how to make future models calibrated on inherently uncertain, chaotic, or computationally prohibitive questions that extend beyond existing human knowledge.\n\n**[[Link]](https://arxiv.org/abs/2205.14334)**\n\n### **Virtual Logit Matching**\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2Fc2e74b27-fdf0-4785-8b99-51e8c18ad0ed_1920x574.png)*An illustration of the Virtual Logit Matching pipeline.*\n\nVirtual logit matching is a new out-of-distribution technique that does not require hyperparameter tuning, does not require retraining models, and beats the maximum softmax baseline on most OOD detection tasks. The idea is to create a “virtual logit,” which is proportional to the magnitude of the projection of the input onto the space orthogonal to the principal embedding space. Then the OOD score is roughly equal to the virtual logit minus the maximum logit, intuitively the evidence that the input is unlike the training example embeddings minus the evidence that it is in-distribution.\n\n**[[Link]](https://arxiv.org/abs/2203.10807)**\n\n#### Other Monitoring News\n\n* [[Link]](https://arxiv.org/abs/2204.07531) “We train probes to investigate what concepts are encoded in game-playing agents like AlphaGo and how those concepts relate to natural language”\n* [[Link]](https://gradientscience.org/missingness/) By removing parts of an input image, one can analyze how much a model depends on a given input feature. However, removing parts of the input is often not completely sound, as removing parts confuses models. Fortunately with Vision Transformers, removing patches is a matter of simply dropping tokens, which is a more sound way to create counterfactual inputs.\n* [[Link]](https://arxiv.org/abs/2201.11114) To more scalably characterize model components, this work “automatically labels neurons with open-ended, compositional, natural language descriptions”\n* [[Link]](https://transformer-circuits.pub/2022/in-context-learning-and-induction-heads/index.html) Transformer mechanisms that complete simple sequences are identified and shown to be emergent during training\n* [[Link]](https://arxiv.org/abs/2204.11642) An interpretability benchmark: controllably generate trainable examples under arbitrary biases (shape, color, etc) → human subjects are asked to predict the systems' output relying on explanations\n* [[Link]](https://github.com/Jingkang50/OpenOOD) A new library has implementations of over a dozen OOD detection techniques\n* [[Link]](https://arxiv.org/abs/2203.15506) Trojan detection cat and mouse continues: a new attack “reduces the accuracy of a state-of-the-art defense mechanism from >96% to 0%”\n* [[Link]](https://arxiv.org/abs/2205.05055) Data Distributional Properties Drive Emergent Few-Shot Learning in Transformers: “we find that few-shot learning emerges only from applying the right architecture to the right data distribution; neither component is sufficient on its own”\n* [[Link]](https://arxiv.org/abs/2205.10343) Research on understanding emergent functionality: “We observe empirically the presence of four learning phases: comprehension, grokking, memorization, and confusion”\n* [[Link]](https://arxiv.org/abs/2202.05983) Displaying a model's true confidence can be suboptimal for helping people make better decisions\n\n\n\n---\n\n**Robustness**\n==============\n\n### **Can Rationalization Improve Robustness?**\n\n[](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F2d60c226-c7a2-4236-a21b-ea4b458a6a60_2144x1362.png)To improve robustness, this paper asks models to explain their predictions. These are called “rationales.” When models produce rationales before predicting, they are more robust to token-level and sentence-level adversarial attacks.\n\n**[[Link]](https://arxiv.org/pdf/2204.11790.pdf)**\n\n#### Other Robustness News\n\n* [[Link]](https://arxiv.org/abs/2204.04063) How well do adversarial attacks transfer? This paper provides a large-scale systematic empirical study in real-world environments\n* [[Link]](https://arxiv.org/abs/2205.06154) Advancement in robustness with guarantees: “[we] provide better certificates in terms of certified accuracy, average certified radii and abstention rates as compared to concurrent approaches”\n* [[Link]](https://arxiv.org/abs/2205.01663) A large-scale data collection effort to add three [nines of reliability](https://terrytao.wordpress.com/2021/10/03/nines-of-safety-a-proposed-unit-of-measurement-of-risk/) to an injury classification task\n* [[Link]](https://arxiv.org/abs/2204.02937) “simple last layer retraining can match or outperform state-of-the-art approaches on spurious correlation benchmarks”\n* [[Link]](https://arxiv.org/abs/2205.01397) What causes CLIP's perceived robustness? Mostly dataset diversity, suggesting semantic overlap with the test distribution\n* [[Link]](https://arxiv.org/abs/2203.12117) Testing RL agent robustness to abrupt changes and sudden shocks to the environment\n\n\n\n---\n\n#### Other News\n\n[We now have a subreddit!](https://www.reddit.com/r/mlsafety/) The subreddit has a steady stream of safety-relevant papers, including safety papers not covered in this newsletter. Papers are added to the subreddit several times a week. The subreddit’s posts are available [on twitter too](https://twitter.com/topofmlsafety).\n\n[A lecture series](https://www.youtube.com/watch?v=dQ4cmtHCYt4) on social and ethical considerations of advanced AI: concrete suggestions for creating cooperative AI; discussion of the infeasibility and suboptimality of various deployment strategies; discussion of the merits of AI autonomy and reasonableness over rationality; and outlining how communities of agents could be robustly safe. (I recommend watching [the final lecture](https://www.youtube.com/watch?v=dQ4cmtHCYt4), and if you’re interested consider watching the [previous](https://www.youtube.com/watch?v=P2uDQiTz5Ss) [lectures](https://www.youtube.com/watch?v=uz5qXBGM9HY).)", "url": "https://newsletter.mlsafety.org/p/ml-safety-newsletter-4", "title": "ML Safety Newsletter #4", "source": "ml_safety_newsletter", "source_type": "blog", "date_published": "2022-06-03T01:15:35", "authors": ["Dan Hendrycks"], "id": "d21167b1381945474500e0fed877dc5f"} | |