license: apache-2.0

language:

- en

- vi

- id

- km

- th

- lo

- my

- ms

- tl

- zh

size_categories:

- n<1K

configs:
- config_name: Question
  data_files: question.jsonl

Sea-bench - a benchmark for evaluating chat assistants in Southeast Asian languages.

See interactive benchmark view at Spaces/SeaLLMs/Sea-bench

While popular benchmarks such as MT-bench evaluate LLMs as helpful assistants, they are English-only and therefore likely unsuitable for evaluating performance in low-resource languages. Because of this lack of multilingual benchmarks for assistant-style models, we engaged native linguists to build Sea-bench, a multilingual test set of instructions covering 9 Southeast Asian languages. The linguists sourced the data by manually translating open-source English test sets, collecting real user questions from local forums and websites, collecting real math and reasoning questions from reputable sources, and writing test instructions and questions themselves.

Sea-bench consists of diverse categories of instructions for evaluating models, described below:

- Task-solving: This type of data comprises various text understanding and processing tasks that test the model's ability to perform NLP tasks such as summarization and translation.

- Math-reasoning: This includes math problems and logical reasoning tasks.

- General-instruction data: This type of data consists of general user-centric instructions, which evaluate the model's general knowledge and writing ability. Examples include requests for recommendations, such as "Suggest three popular books," and instructions that require creative output, such as "Write a short story about a dragon."

- NaturalQA: This consists of queries posted by real users, often in popular local forums, involving local contexts or scenarios. The aim is to test the model's capacity to understand and respond coherently to colloquial language, idioms, and locally contextual references.

- Safety: This includes both general safety instructions and instructions tied to local contexts. The instructions may test the model's understanding of safe practices, its ability to advise on safety rules, or its capacity to respond to safety-related queries. Most general safety questions are translated from open sources, while the local-context safety instructions are written by linguists for each language. Safety data covers only Vietnamese, Indonesian, and Thai.

The released Sea-bench test set is a small subset that contains 20 questions per task type per language.
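As a quick sanity check of the "20 questions per task type per language" layout, the following minimal sketch counts questions per (language, category) pair. The field names `lang` and `category` are assumptions about the schema of `question.jsonl`, and the sample records are made up for illustration; adjust the keys to whatever the file actually contains.

```python
import json
from collections import Counter


def load_jsonl(path):
    """Read a JSON Lines file into a list of dicts, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


def count_by_lang_and_category(records, lang_key="lang", category_key="category"):
    """Count questions per (language, category) pair.

    The default key names are assumptions, not a documented schema.
    """
    return Counter((r.get(lang_key), r.get(category_key)) for r in records)


# Hypothetical in-memory sample, not real Sea-bench data.
sample = [
    {"question_id": 1, "lang": "vi", "category": "math-reasoning"},
    {"question_id": 2, "lang": "vi", "category": "math-reasoning"},
    {"question_id": 3, "lang": "th", "category": "safety"},
]

for (lang, category), n in sorted(count_by_lang_and_category(sample).items()):
    print(lang, category, n)
```

To run it on the released file, pass `load_jsonl("question.jsonl")` instead of `sample`.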

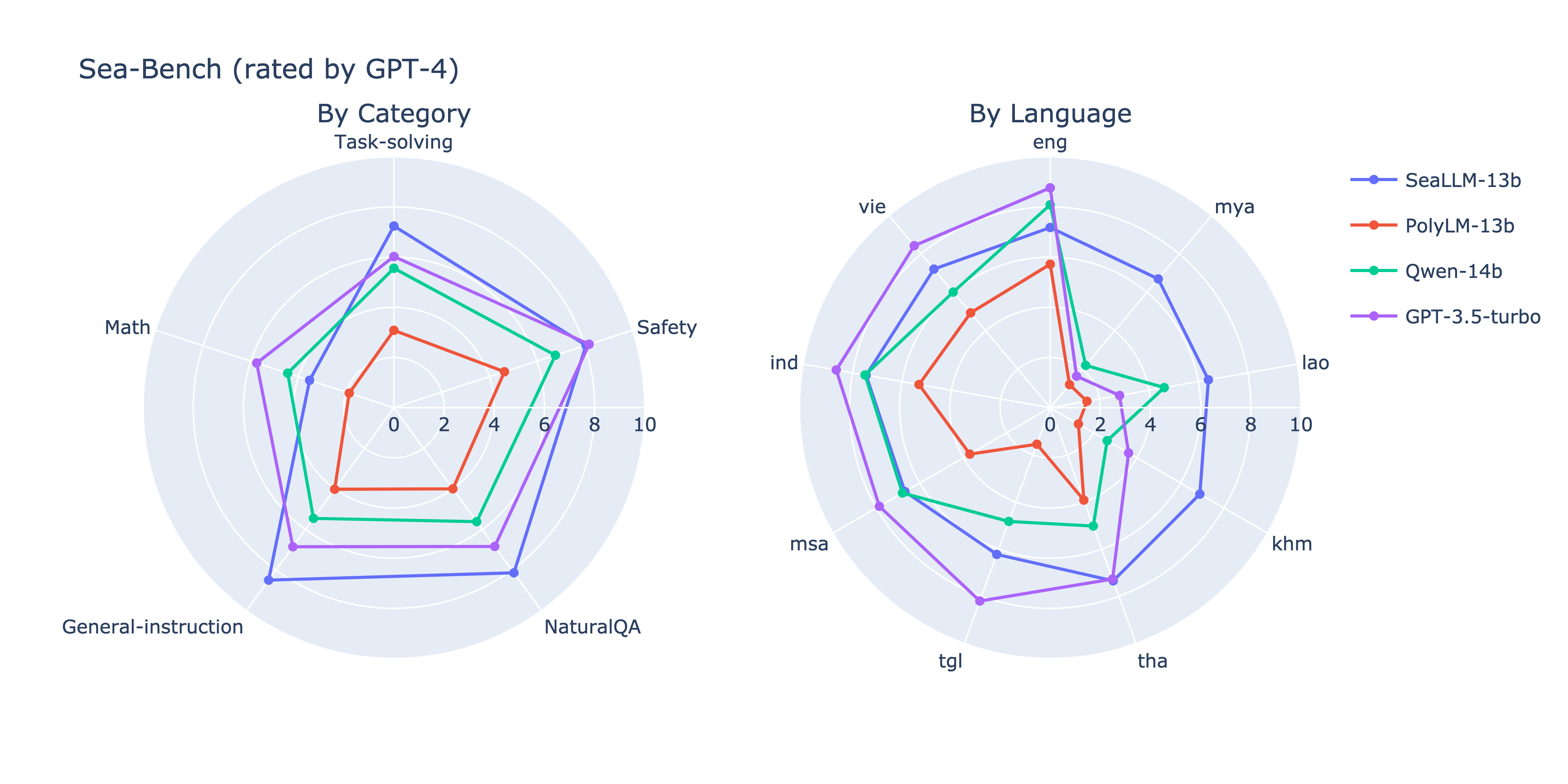

Sea-bench can be evaluated in the same way as MT-bench, using GPT-4 as a judge with either score-based grading or pairwise (peer) comparison.

Instructions to evaluate models on Sea-bench (score-based grading):

# Clone and install LLM judge: https://github.com/lm-sys/FastChat/tree/main/fastchat/llm_judge

# Download SeaLLMs/Sea-bench files to folder `Sea-bench`

# Copy the `Sea-bench` folder into `FastChat/fastchat/llm_judge`

# Run generation, similar to MT-bench, e.g.:

python gen_model_answer.py --model-path lmsys/vicuna-7b-v1.5 --model-id vicuna-7b-v1.5 --bench-name Sea-bench

# Run LLM judgement

python gen_judgment.py \
    --parallel 6 \
    --bench-name Sea-bench \
    --model-list ${YOUR_MODEL_NAME}
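Once the judgments have been written, the per-model average can be computed with a few lines of Python. This is a minimal sketch, not the FastChat implementation: it assumes each line of the judgment file is a JSON object with `model` and `score` fields, and that a score of `-1` marks a judgment whose score could not be parsed. The file path in the usage comment is likewise an assumption.

```python
import json
from collections import defaultdict


def mean_scores(judgments, model_key="model", score_key="score"):
    """Average the judge's score per model.

    Records with a missing score or a score of -1 (assumed to mean
    "could not be parsed") are skipped.
    """
    totals = defaultdict(lambda: [0.0, 0])  # model -> [sum of scores, count]
    for j in judgments:
        score = j.get(score_key, -1)
        if score == -1:
            continue
        totals[j[model_key]][0] += score
        totals[j[model_key]][1] += 1
    return {model: total / n for model, (total, n) in totals.items()}


def load_jsonl(path):
    """Read a JSON Lines file into a list of dicts, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


# Usage (path is an assumption about where the judgments were written):
# print(mean_scores(load_jsonl("model_judgment/gpt-4_single.jsonl")))
```

Averaging over all records mixes categories and languages; grouping by those fields first gives a more informative breakdown.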

Evaluation results:

Sea-bench is used to evaluate SeaLLMs, a group of language models built with a focus on Southeast Asian languages.

Contribution

If you have Sea-bench evaluations of your model that you would like included in the aggregated results, please submit a pull request with an updated model_judgment/gpt-4_single.jsonl file. Please use a model name different from those already in the file.
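Before opening a pull request, it may help to confirm that the new model name does not clash with one already in the judgment file. The sketch below assumes each record carries a `model` field; the helper name `conflicting_model_names` is hypothetical and not part of any released tooling.

```python
def conflicting_model_names(existing_judgments, new_model_names, model_key="model"):
    """Return the new model names that already appear in the existing judgments.

    `model_key` is an assumption about the judgment file's schema.
    """
    existing = {j[model_key] for j in existing_judgments if model_key in j}
    return sorted(set(new_model_names) & existing)


# Hypothetical records standing in for lines of gpt-4_single.jsonl.
existing = [{"model": "model-a", "score": 7}, {"model": "model-b", "score": 5}]
print(conflicting_model_names(existing, ["model-b", "my-new-model"]))
```

An empty list means none of the proposed names collide with existing entries.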

Citation

If you find our project useful, please star our repo and cite our work as follows. Corresponding author: l.bing@alibaba-inc.com

@article{damonlpsg2023seallm,
  author = {Xuan-Phi Nguyen*, Wenxuan Zhang*, Xin Li*, Mahani Aljunied*,
            Qingyu Tan, Liying Cheng, Guanzheng Chen, Yue Deng, Sen Yang,
            Chaoqun Liu, Hang Zhang, Lidong Bing},
  title = {SeaLLMs - Large Language Models for Southeast Asia},
  year = 2023,
  Eprint = {arXiv:2312.00738},
}