Update README.md

README.md

CHANGED

@@ -9,23 +9,23 @@ tags:

- Reasoning

---

-

Knowledge

-

## *

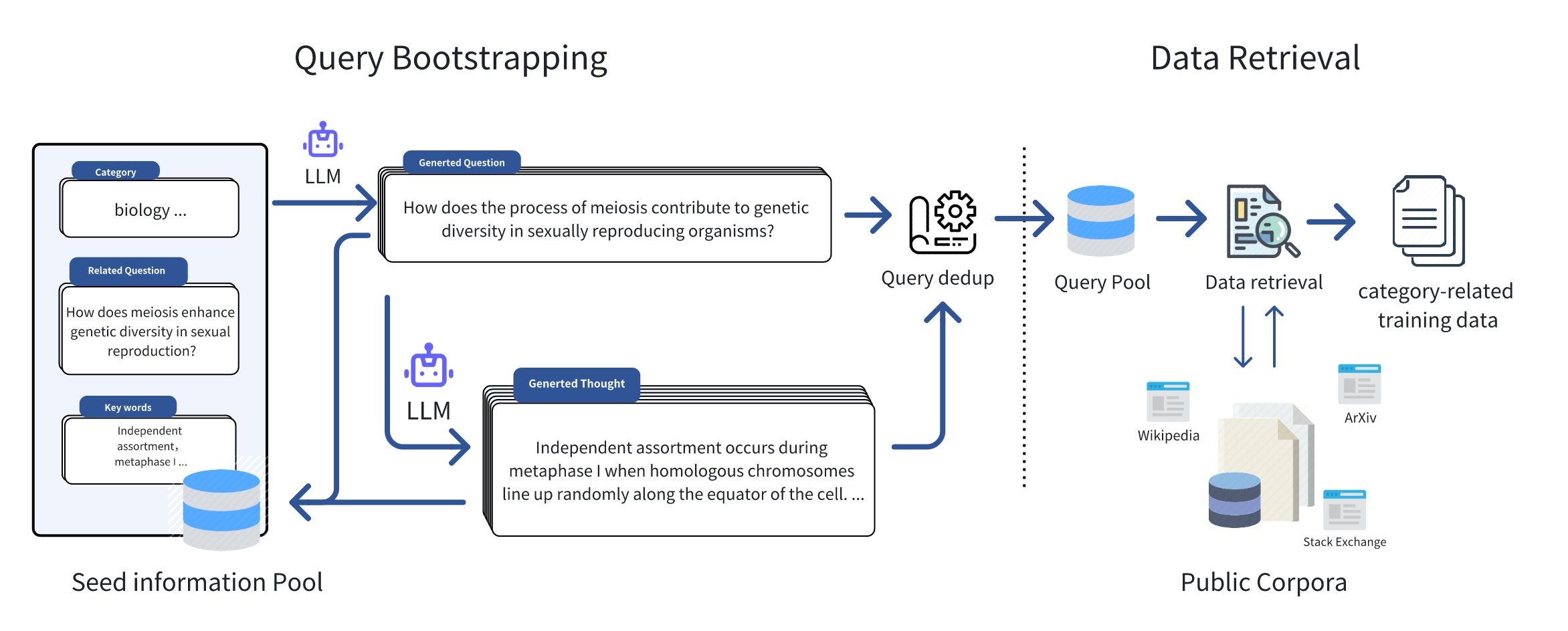

As the figure illustrates, we first collected seed information from specific domains, such as keywords, frequently asked questions, and textbooks, as input to the Query Bootstrapping stage. Because large language models generalize well, they can expand this seed information into a large set of domain-relevant queries. Inspired by Self-instruct and WizardLM, we use two expansion stages, **Question Extension** and **Thought Generation**, which extend the queries in breadth and depth respectively, so that retrieval reaches domain-related data with broader scope and deeper reasoning. Based on these queries, we then retrieved relevant documents from public corpora and, after deduplication and filtering, formed the final training dataset.
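
The flow described above (seed information, query bootstrapping, retrieval, then deduplication and filtering) can be sketched roughly as follows. This is only an illustration under stated assumptions: `extend_questions` and `generate_thoughts` are hypothetical template-based placeholders for the LLM calls, the token-overlap scorer stands in for whatever retriever is actually used, and exact-match hashing plus a minimum-length threshold stand in for the real deduplication and filtering rules, none of which are specified here.

```python
import hashlib
import re


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def extend_questions(seed):
    """Hypothetical placeholder for LLM-driven Question Extension (breadth)."""
    return [f"What is {seed}?", f"How is {seed} applied in practice?"]


def generate_thoughts(question):
    """Hypothetical placeholder for LLM-driven Thought Generation (depth)."""
    return [f"To answer '{question}', recall the key definitions and a worked example."]


def bootstrap_queries(seeds):
    """Expand each seed into the seed itself, extended questions, and thoughts."""
    queries = []
    for seed in seeds:
        queries.append(seed)
        for question in extend_questions(seed):
            queries.append(question)
            queries.extend(generate_thoughts(question))
    return queries


def retrieve(query, corpus, top_k=2):
    """Rank documents by token overlap with the query (assumed stand-in scorer)."""
    query_tokens = set(tokenize(query))
    scored = []
    for doc in corpus:
        overlap = len(query_tokens & set(tokenize(doc)))
        if overlap > 0:
            scored.append((overlap, doc))
    # Stable sort: ties keep their original corpus order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def dedup_and_filter(docs, min_tokens=5):
    """Drop very short documents and exact duplicates after normalization."""
    seen = set()
    kept = []
    for doc in docs:
        tokens = tokenize(doc)
        if len(tokens) < min_tokens:
            continue
        digest = hashlib.sha256(" ".join(tokens).encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        kept.append(doc)
    return kept


def build_dataset(seeds, corpus):
    """Run the full sketch: bootstrap queries, retrieve, then clean."""
    retrieved = []
    for query in bootstrap_queries(seeds):
        retrieved.extend(retrieve(query, corpus))
    return dedup_and_filter(retrieved)
```

In a real pipeline the two placeholder functions would call a language model and `retrieve` would query an index over a web-scale corpus; only the overall shape of the loop is meant to carry over.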

-

+

## **Retrieve-Pile** Statistics

+

Using *Retrieve-from-CC*, we built **Retrieve-Pile**, a high-quality knowledge dataset. As shown in the figure below, compared with other datasets in the academic and mathematical reasoning domains, we acquired a large-scale, high-quality knowledge dataset at lower cost and without manual intervention. Automated query bootstrapping lets us efficiently capture information related to the seed queries. **Retrieve-Pile** covers not only mathematical reasoning data but also knowledge-oriented corpora spanning fields such as biology and physics, which broadens its potential for research and applications.

<img src="https://github.com/ngc7292/query_of_cc/blob/master/images/query_of_cc_timestamp_prop.png?raw=true" width="300px" style="center"/>

+

This table presents the 10 web domains that account for the largest share of **Retrieve-Pile**, which are mainly academic websites, high-quality forums, and knowledge-focused sites. The figure above breaks down the timestamps of the data sources in **Retrieve-Pile** by year. Most of **Retrieve-Pile** comes from recent years, and the proportion decreases for earlier timestamps. This trend can be attributed to the exponential growth of internet data and to the inherent timeliness introduced by **Retrieve-Pile**.
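
As a rough illustration of how such statistics could be computed, the sketch below counts documents per web domain and per year. The record layout is an assumption made for this example: each record is a dict with a hypothetical `url` field and a hypothetical `timestamp` field holding an ISO-style date string. The actual schema of the dataset may differ.

```python
from collections import Counter
from urllib.parse import urlparse


def top_domains(records, n=10):
    """Return the n most frequent web domains as (domain, count) pairs."""
    counts = Counter(urlparse(r["url"]).netloc.lower() for r in records)
    return counts.most_common(n)


def yearly_proportions(records):
    """Return the share of records per year, keyed by four-digit year string."""
    # Assumes timestamps start with the year, e.g. "2023-05-01".
    years = Counter(r["timestamp"][:4] for r in records)
    total = sum(years.values())
    return {year: count / total for year, count in sorted(years.items())}
```

Sorting the yearly result by key makes it straightforward to see how the share changes from earlier to more recent years.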

| **Web Domain** | **Count** |
|----------------------------|----------------|