license: cc-by-2.0

dataset_info:

features:

- name: sample_id

dtype: int32

- name: task_instruction

dtype: string

- name: task_instance

struct:

- name: context

dtype: string

- name: images_path

sequence: string

- name: choice_list

sequence: string

- name: combined_1_images

sequence: string

- name: response

dtype: string

splits:

- name: ActionLocalization_test

num_bytes: 291199

num_examples: 200

- name: ActionLocalization_adv

num_bytes: 291199

num_examples: 200

- name: ActionPrediction_test

num_bytes: 255687

num_examples: 200

- name: ActionPrediction_adv

num_bytes: 255687

num_examples: 200

- name: ActionSequence_test

num_bytes: 262234

num_examples: 200

- name: ActionSequence_adv

num_bytes: 262234

num_examples: 200

- name: ALFRED_test

num_bytes: 112715

num_examples: 200

- name: ALFRED_adv

num_bytes: 112715

num_examples: 200

- name: CharacterOrder_test

num_bytes: 274821

num_examples: 200

- name: CharacterOrder_adv

num_bytes: 274821

num_examples: 200

- name: CLEVR_Change_test

num_bytes: 114792

num_examples: 200

- name: CLEVR_Change_adv

num_bytes: 114792

num_examples: 200

- name: CounterfactualInference_test

num_bytes: 129074

num_examples: 200

- name: CounterfactualInference_adv

num_bytes: 129074

num_examples: 200

- name: DocVQA_test

num_bytes: 76660

num_examples: 200

- name: DocVQA_adv

num_bytes: 76660

num_examples: 200

- name: EgocentricNavigation_test

num_bytes: 559193

num_examples: 200

- name: EgocentricNavigation_adv

num_bytes: 559193

num_examples: 200

- name: GPR1200_test

num_bytes: 579624

num_examples: 600

- name: IEdit_test

num_bytes: 50907

num_examples: 200

- name: IEdit_adv

num_bytes: 50907

num_examples: 200

- name: ImageNeedleInAHaystack_test

num_bytes: 303423

num_examples: 320

- name: MMCoQA_test

num_bytes: 344623

num_examples: 200

- name: MMCoQA_adv

num_bytes: 344623

num_examples: 200

- name: MovingAttribute_test

num_bytes: 97299

num_examples: 200

- name: MovingAttribute_adv

num_bytes: 97299

num_examples: 200

- name: MovingDirection_test

num_bytes: 115832

num_examples: 200

- name: MovingDirection_adv

num_bytes: 115832

num_examples: 200

- name: MultiModalQA_test

num_bytes: 87978

num_examples: 200

- name: MultiModalQA_adv

num_bytes: 87978

num_examples: 200

- name: nuscenes_test

num_bytes: 87282

num_examples: 200

- name: nuscenes_adv

num_bytes: 87282

num_examples: 200

- name: ObjectExistence_test

num_bytes: 94139

num_examples: 200

- name: ObjectExistence_adv

num_bytes: 94139

num_examples: 200

- name: ObjectInteraction_test

num_bytes: 264032

num_examples: 200

- name: ObjectInteraction_adv

num_bytes: 264032

num_examples: 200

- name: ObjectShuffle_test

num_bytes: 289186

num_examples: 200

- name: ObjectShuffle_adv

num_bytes: 289186

num_examples: 200

- name: OCR_VQA_test

num_bytes: 80940

num_examples: 200

- name: OCR_VQA_adv

num_bytes: 80940

num_examples: 200

- name: SceneTransition_test

num_bytes: 266203

num_examples: 200

- name: SceneTransition_adv

num_bytes: 266203

num_examples: 200

- name: SlideVQA_test

num_bytes: 89462

num_examples: 200

- name: SlideVQA_adv

num_bytes: 89462

num_examples: 200

- name: Spot_the_Diff_test

num_bytes: 47823

num_examples: 200

- name: Spot_the_Diff_adv

num_bytes: 47823

num_examples: 200

- name: StateChange_test

num_bytes: 286783

num_examples: 200

- name: StateChange_adv

num_bytes: 286783

num_examples: 200

- name: TextNeedleInAHaystack_test

num_bytes: 11140730

num_examples: 320

- name: TQA_test

num_bytes: 92861

num_examples: 200

- name: TQA_adv

num_bytes: 92861

num_examples: 200

- name: WebQA_test

num_bytes: 202682

num_examples: 200

- name: WebQA_adv

num_bytes: 202682

num_examples: 200

- name: WikiVQA_test

num_bytes: 2557847

num_examples: 200

- name: WikiVQA_adv

num_bytes: 2557847

num_examples: 200

download_size: 12035444

dataset_size: 26288285

configs:

- config_name: default

data_files:

- split: ActionLocalization_test

path: preview/ActionLocalization_test-*

- split: ActionLocalization_adv

path: preview/ActionLocalization_adv-*

- split: ActionPrediction_test

path: preview/ActionPrediction_test-*

- split: ActionPrediction_adv

path: preview/ActionPrediction_adv-*

- split: ActionSequence_test

path: preview/ActionSequence_test-*

- split: ActionSequence_adv

path: preview/ActionSequence_adv-*

- split: ALFRED_test

path: preview/ALFRED_test-*

- split: ALFRED_adv

path: preview/ALFRED_adv-*

- split: CharacterOrder_test

path: preview/CharacterOrder_test-*

- split: CharacterOrder_adv

path: preview/CharacterOrder_adv-*

- split: CLEVR_Change_test

path: preview/CLEVR_Change_test-*

- split: CLEVR_Change_adv

path: preview/CLEVR_Change_adv-*

- split: CounterfactualInference_test

path: preview/CounterfactualInference_test-*

- split: CounterfactualInference_adv

path: preview/CounterfactualInference_adv-*

- split: DocVQA_test

path: preview/DocVQA_test-*

- split: DocVQA_adv

path: preview/DocVQA_adv-*

- split: EgocentricNavigation_test

path: preview/EgocentricNavigation_test-*

- split: EgocentricNavigation_adv

path: preview/EgocentricNavigation_adv-*

- split: GPR1200_test

path: preview/GPR1200_test-*

- split: IEdit_test

path: preview/IEdit_test-*

- split: IEdit_adv

path: preview/IEdit_adv-*

- split: ImageNeedleInAHaystack_test

path: preview/ImageNeedleInAHaystack_test-*

- split: MMCoQA_test

path: preview/MMCoQA_test-*

- split: MMCoQA_adv

path: preview/MMCoQA_adv-*

- split: MovingAttribute_test

path: preview/MovingAttribute_test-*

- split: MovingAttribute_adv

path: preview/MovingAttribute_adv-*

- split: MovingDirection_test

path: preview/MovingDirection_test-*

- split: MovingDirection_adv

path: preview/MovingDirection_adv-*

- split: MultiModalQA_test

path: preview/MultiModalQA_test-*

- split: MultiModalQA_adv

path: preview/MultiModalQA_adv-*

- split: nuscenes_test

path: preview/nuscenes_test-*

- split: nuscenes_adv

path: preview/nuscenes_adv-*

- split: ObjectExistence_test

path: preview/ObjectExistence_test-*

- split: ObjectExistence_adv

path: preview/ObjectExistence_adv-*

- split: ObjectInteraction_test

path: preview/ObjectInteraction_test-*

- split: ObjectInteraction_adv

path: preview/ObjectInteraction_adv-*

- split: ObjectShuffle_test

path: preview/ObjectShuffle_test-*

- split: ObjectShuffle_adv

path: preview/ObjectShuffle_adv-*

- split: OCR_VQA_test

path: preview/OCR_VQA_test-*

- split: OCR_VQA_adv

path: preview/OCR_VQA_adv-*

- split: SceneTransition_test

path: preview/SceneTransition_test-*

- split: SceneTransition_adv

path: preview/SceneTransition_adv-*

- split: SlideVQA_test

path: preview/SlideVQA_test-*

- split: SlideVQA_adv

path: preview/SlideVQA_adv-*

- split: Spot_the_Diff_test

path: preview/Spot_the_Diff_test-*

- split: Spot_the_Diff_adv

path: preview/Spot_the_Diff_adv-*

- split: StateChange_test

path: preview/StateChange_test-*

- split: StateChange_adv

path: preview/StateChange_adv-*

- split: TextNeedleInAHaystack_test

path: preview/TextNeedleInAHaystack_test-*

- split: TQA_test

path: preview/TQA_test-*

- split: TQA_adv

path: preview/TQA_adv-*

- split: WebQA_test

path: preview/WebQA_test-*

- split: WebQA_adv

path: preview/WebQA_adv-*

- split: WikiVQA_test

path: preview/WikiVQA_test-*

- split: WikiVQA_adv

path: preview/WikiVQA_adv-*

task_categories:

- visual-question-answering

- question-answering

- text-generation

- image-to-text

- video-classification

language:

- en

tags:

- Long-context

- MLLM

- VLM

- LLM

- Benchmark

pretty_name: MileBench

size_categories:

- 1K<n<10K

MileBench

Introduction

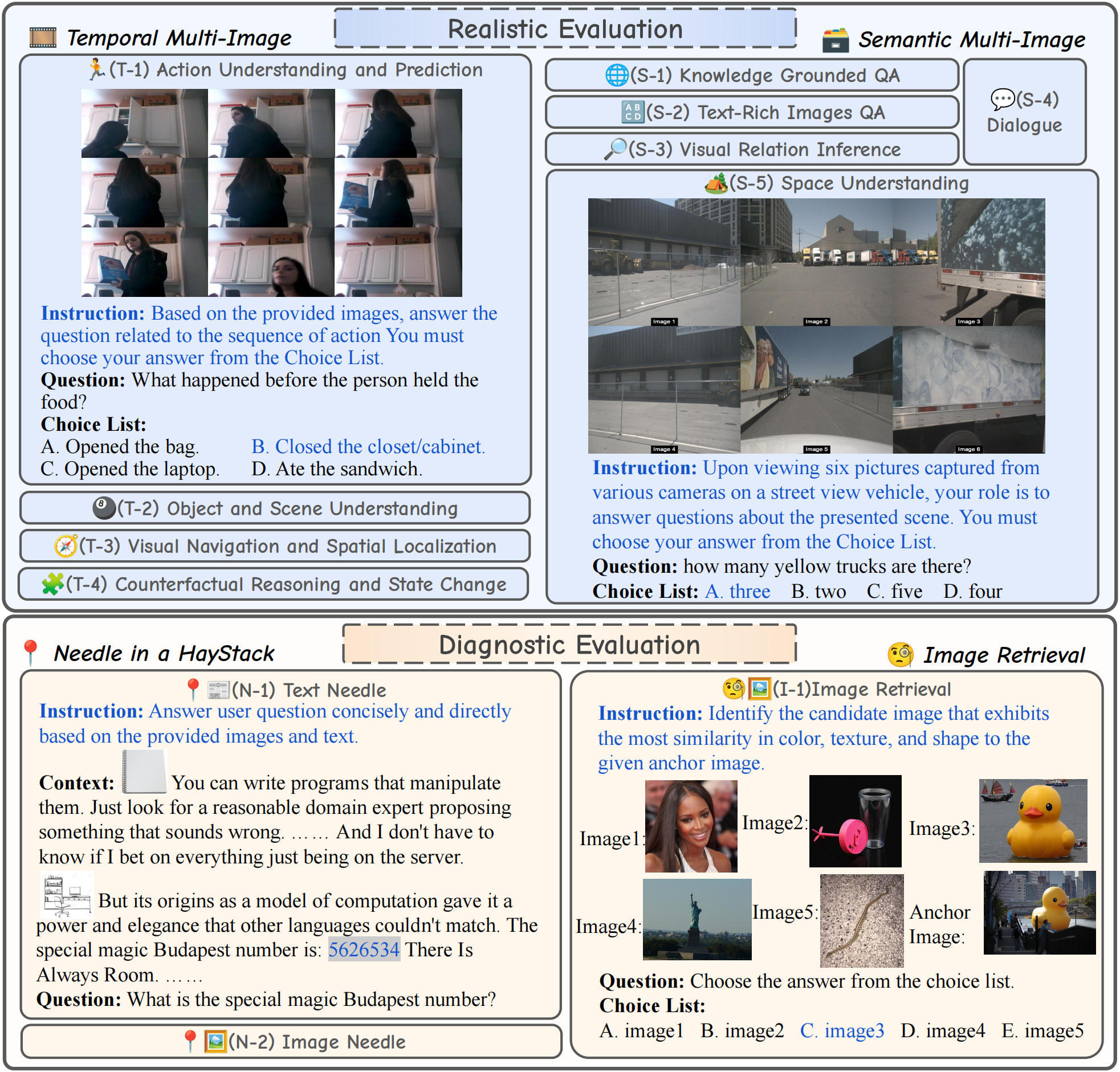

We introduce MileBench, a pioneering benchmark designed to test the MultImodal Long-contExt capabilities of MLLMs. This benchmark comprises not only multimodal long contexts but also multiple tasks requiring both comprehension and generation. We establish two distinct evaluation sets, diagnostic and realistic, to systematically assess MLLMs' long-context adaptation capacity and their ability to complete tasks in long-context scenarios.

To construct our evaluation sets, we gather 6,440 multimodal long-context samples from 21 pre-existing or self-constructed datasets. Each sample contains 15.2 images and 422.3 words on average, as depicted in the figure. We categorize the samples into their respective subsets.

How to use?

Please download MileBench.tar.gz and refer to Code for MileBench.
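The metadata of this card declares one record layout for all preview splits and names each split after its task plus a `_test` or `_adv` suffix. The following is a minimal sketch of how such records and split names could be checked in Python. The helper names `split_task_and_variant` and `schema_problems` and the example record are hypothetical and not part of the MileBench code. The sketch also assumes that `response` is a top-level field rather than a member of `task_instance`, which the metadata as shown here does not make explicit.

```python
from typing import Any, Dict, List, Tuple

# Field names and Python types mirroring the dataset_info metadata of this card.
# Assumption: `response` is a top-level field, not part of `task_instance`.
TOP_LEVEL_FIELDS: Dict[str, type] = {
    "sample_id": int,
    "task_instruction": str,
    "task_instance": dict,
    "response": str,
}
TASK_INSTANCE_FIELDS: Dict[str, type] = {
    "context": str,
    "images_path": list,
    "choice_list": list,
    "combined_1_images": list,
}


def split_task_and_variant(split_name: str) -> Tuple[str, str]:
    """Split a name such as 'CLEVR_Change_adv' into ('CLEVR_Change', 'adv')."""
    # Task names may contain underscores, so cut at the last one only.
    task, _, variant = split_name.rpartition("_")
    if not task or variant not in ("test", "adv"):
        raise ValueError(f"unexpected split name: {split_name!r}")
    return task, variant


def schema_problems(record: Dict[str, Any]) -> List[str]:
    """Return a list of fields that are missing or have an unexpected type."""
    problems: List[str] = []
    for name, expected in TOP_LEVEL_FIELDS.items():
        if not isinstance(record.get(name), expected):
            problems.append(f"{name}: expected {expected.__name__}")
    instance = record.get("task_instance")
    if isinstance(instance, dict):
        for name, expected in TASK_INSTANCE_FIELDS.items():
            if not isinstance(instance.get(name), expected):
                problems.append(f"task_instance.{name}: expected {expected.__name__}")
    return problems


# Hypothetical record, invented for illustration only (not taken from the dataset).
example = {
    "sample_id": 0,
    "task_instruction": "Answer the question using the images.",
    "task_instance": {
        "context": "What changed between the two images?",
        "images_path": ["img_0.jpg", "img_1.jpg"],
        "choice_list": ["A", "B"],
        "combined_1_images": ["combined_0.jpg"],
    },
    "response": "A",
}

print(split_task_and_variant("Spot_the_Diff_adv"))  # ('Spot_the_Diff', 'adv')
print(schema_problems(example))                     # []
```

Cutting the split name at the last underscore keeps task names such as `Spot_the_Diff` and `CLEVR_Change` intact. Splits that exist only as `_test` (for example `GPR1200_test`) pass through the same helper unchanged.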

Links

- Homepage: MileBench Homepage

- Repository: MileBench GitHub

- Paper: Arxiv

- Point of Contact: Dingjie Song

Citation

If you find this project useful in your research, please consider citing:

@misc{song2024milebench,

title={MileBench: Benchmarking MLLMs in Long Context},

author={Dingjie Song and Shunian Chen and Guiming Hardy Chen and Fei Yu and Xiang Wan and Benyou Wang},

year={2024},

eprint={2404.18532},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

@article{song2024milebench,

title={MileBench: Benchmarking MLLMs in Long Context},

author={Song, Dingjie and Chen, Shunian and Chen, Guiming Hardy and Yu, Fei and Wan, Xiang and Wang, Benyou},

journal={arXiv preprint arXiv:2404.18532},

year={2024}

}