Upload 7 files

- README.md +239 -0

- data/train-00000-of-01126.parquet +3 -0

- gitattributes.txt +37 -0

- github-code-stats-alpha.png +3 -0

- github-code.py +206 -0

- github_preprocessing.py +146 -0

- query.sql +84 -0

README.md

ADDED

@@ -0,0 +1,239 @@

| 1 |

+

---

|

| 2 |

+

annotations_creators: []

|

| 3 |

+

language_creators:

|

| 4 |

+

- crowdsourced

|

| 5 |

+

- expert-generated

|

| 6 |

+

language:

|

| 7 |

+

- code

|

| 8 |

+

license:

|

| 9 |

+

- other

|

| 10 |

+

multilinguality:

|

| 11 |

+

- multilingual

|

| 12 |

+

pretty_name: github-code

|

| 13 |

+

size_categories:

|

| 14 |

+

- unknown

|

| 15 |

+

source_datasets: []

|

| 16 |

+

task_categories:

|

| 17 |

+

- text-generation

|

| 18 |

+

task_ids:

|

| 19 |

+

- language-modeling

|

| 20 |

+

---

|

| 21 |

+

|

| 22 |

+

# GitHub Code Dataset

|

| 23 |

+

|

| 24 |

+

## Dataset Description

|

| 25 |

+

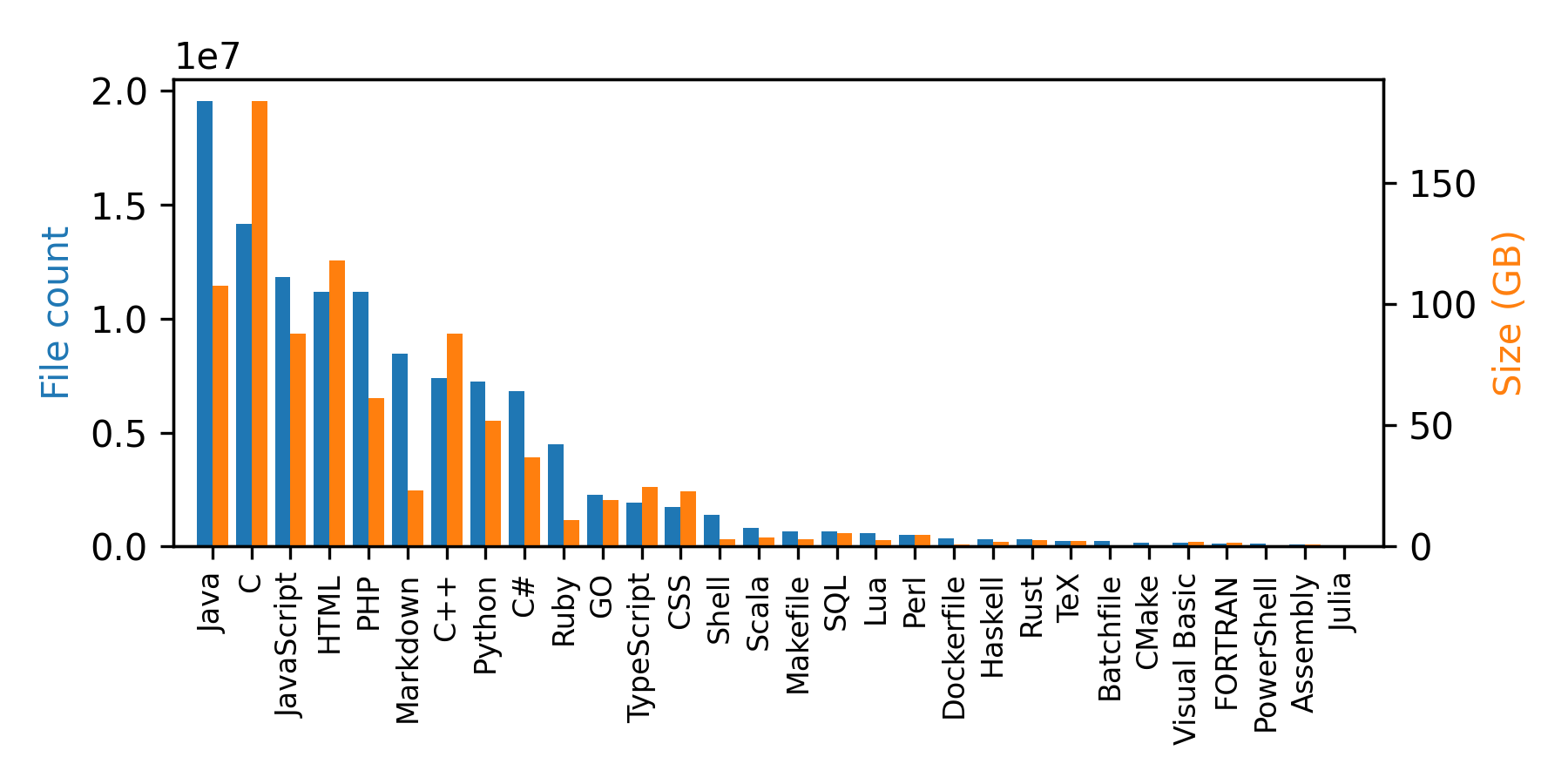

The GitHub Code dataset consists of 115M code files from GitHub in 32 programming languages with 60 extensions totaling in 1TB of data. The dataset was created from the public GitHub dataset on Google BiqQuery.

### How to use it

The GitHub Code dataset is very large, so for most use cases it is recommended to use the streaming API of `datasets`. You can load and iterate through the dataset with the following two lines of code:

```python
from datasets import load_dataset

ds = load_dataset("codeparrot/github-code", streaming=True, split="train")
print(next(iter(ds)))

#OUTPUT:
{
 'code': "import mod189 from './mod189';\nvar value=mod189+1;\nexport default value;\n",
 'repo_name': 'MirekSz/webpack-es6-ts',
 'path': 'app/mods/mod190.js',
 'language': 'JavaScript',
 'license': 'isc',
 'size': 73
}
```

You can see that besides the code, repo name, and path, the programming language, license, and file size are also part of the dataset. You can also filter the dataset for any subset of the 30 included languages (see the full list below). Just pass the languages as a list. E.g. if your dream is to build a Codex model for Dockerfiles use the following configuration:

```python
ds = load_dataset("codeparrot/github-code", streaming=True, split="train", languages=["Dockerfile"])
print(next(iter(ds))["code"])

#OUTPUT:
"""\
FROM rockyluke/ubuntu:precise

ENV DEBIAN_FRONTEND="noninteractive" \
    TZ="Europe/Amsterdam"
...
"""
```

We also have access to the license of the origin repo of a file so we can filter for licenses in the same way we filtered for languages:

```python
from collections import Counter

ds = load_dataset("codeparrot/github-code", streaming=True, split="train", licenses=["mit", "isc"])

licenses = []
# `take` is a method of the streaming dataset itself, not of its iterator.
for element in ds.take(10_000):
    licenses.append(element["license"])
print(Counter(licenses))

#OUTPUT:
Counter({'mit': 9896, 'isc': 104})
```

Naturally, you can also download the full dataset. Note that this will download ~300GB compressed text data and the uncompressed dataset will take up ~1TB of storage:
```python
ds = load_dataset("codeparrot/github-code", split="train")
```

## Data Structure

### Data Instances

```python
{
 'code': "import mod189 from './mod189';\nvar value=mod189+1;\nexport default value;\n",
 'repo_name': 'MirekSz/webpack-es6-ts',
 'path': 'app/mods/mod190.js',
 'language': 'JavaScript',
 'license': 'isc',
 'size': 73
}
```

### Data Fields

|Field|Type|Description|
|---|---|---|
|code|string|content of source file|
|repo_name|string|name of the GitHub repository|
|path|string|path of file in GitHub repository|
|language|string|programming language as inferred by extension|
|license|string|license of GitHub repository|
|size|int|size of source file in bytes|

### Data Splits

The dataset only contains a train split.

## Languages

The dataset contains 30 programming languages with over 60 extensions:

```python
{
    "Assembly": [".asm"],
    "Batchfile": [".bat", ".cmd"],
    "C": [".c", ".h"],
    "C#": [".cs"],
    "C++": [".cpp", ".hpp", ".c++", ".h++", ".cc", ".hh", ".C", ".H"],
    "CMake": [".cmake"],
    "CSS": [".css"],
    "Dockerfile": [".dockerfile", "Dockerfile"],
    "FORTRAN": ['.f90', '.f', '.f03', '.f08', '.f77', '.f95', '.for', '.fpp'],
    "GO": [".go"],
    "Haskell": [".hs"],
    "HTML":[".html"],
    "Java": [".java"],
    "JavaScript": [".js"],
    "Julia": [".jl"],
    "Lua": [".lua"],
    "Makefile": ["Makefile"],
    "Markdown": [".md", ".markdown"],
    "PHP": [".php", ".php3", ".php4", ".php5", ".phps", ".phpt"],
    "Perl": [".pl", ".pm", ".pod", ".perl"],
    "PowerShell": ['.ps1', '.psd1', '.psm1'],
    "Python": [".py"],
    "Ruby": [".rb"],
    "Rust": [".rs"],
    "SQL": [".sql"],
    "Scala": [".scala"],
    "Shell": [".sh", ".bash", ".command", ".zsh"],
    "TypeScript": [".ts", ".tsx"],
    "TeX": [".tex"],
    "Visual Basic": [".vb"]
}
```
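
The `language` field is derived from the file path by matching it against the suffixes in this mapping. A minimal sketch of such a lookup is shown here, assuming a trimmed-down mapping; `LANG_TO_EXTENSION` is an illustrative subset and `language_from_path` is a hypothetical helper name, not part of the dataset API:

```python
from typing import Optional

# Illustrative subset of the language-to-extension mapping (assumption: the
# full mapping would be handled the same way).
LANG_TO_EXTENSION = {
    "C": [".c", ".h"],
    "Dockerfile": [".dockerfile", "Dockerfile"],
    "Makefile": ["Makefile"],
    "Python": [".py"],
    "TypeScript": [".ts", ".tsx"],
}

# Invert the mapping once so that each suffix points at its language.
EXTENSION_TO_LANG = {
    ext: lang for lang, exts in LANG_TO_EXTENSION.items() for ext in exts
}


def language_from_path(path: str) -> Optional[str]:
    """Return the language whose suffix matches the end of `path`, else None."""
    for ext, lang in EXTENSION_TO_LANG.items():
        if path.endswith(ext):
            return lang
    return None


print(language_from_path("src/app/main.py"))    # Python
print(language_from_path("docker/Dockerfile"))  # Dockerfile
print(language_from_path("notes.txt"))          # None
```

Because the match is a plain suffix test, entries without a leading dot such as `Makefile` and `Dockerfile` match whole file names, and the comparison is case-sensitive (`.C` and `.c` are distinct).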

## Licenses
Each example is also annotated with the license of the associated repository. There are in total 15 licenses:
```python
[
  'mit',
  'apache-2.0',
  'gpl-3.0',
  'gpl-2.0',
  'bsd-3-clause',
  'agpl-3.0',
  'lgpl-3.0',
  'lgpl-2.1',
  'bsd-2-clause',
  'cc0-1.0',
  'epl-1.0',
  'mpl-2.0',
  'unlicense',
  'isc',
  'artistic-2.0'
]
```

## Dataset Statistics

The dataset contains 115M files and the sum of all the source code file sizes is 873 GB (note that the size of the dataset is larger due to the extra fields). A breakdown per language is given in the plot and table below:

|    | Language     |File Count| Size (GB)|
|---:|:-------------|---------:|-------:|
|  0 | Java         | 19548190 | 107.70 |
|  1 | C            | 14143113 | 183.83 |
|  2 | JavaScript   | 11839883 |  87.82 |
|  3 | HTML         | 11178557 | 118.12 |
|  4 | PHP          | 11177610 |  61.41 |
|  5 | Markdown     |  8464626 |  23.09 |
|  6 | C++          |  7380520 |  87.73 |
|  7 | Python       |  7226626 |  52.03 |
|  8 | C#           |  6811652 |  36.83 |
|  9 | Ruby         |  4473331 |  10.95 |
| 10 | GO           |  2265436 |  19.28 |
| 11 | TypeScript   |  1940406 |  24.59 |
| 12 | CSS          |  1734406 |  22.67 |
| 13 | Shell        |  1385648 |   3.01 |
| 14 | Scala        |   835755 |   3.87 |
| 15 | Makefile     |   679430 |   2.92 |
| 16 | SQL          |   656671 |   5.67 |
| 17 | Lua          |   578554 |   2.81 |
| 18 | Perl         |   497949 |   4.70 |
| 19 | Dockerfile   |   366505 |   0.71 |
| 20 | Haskell      |   340623 |   1.85 |
| 21 | Rust         |   322431 |   2.68 |
| 22 | TeX          |   251015 |   2.15 |
| 23 | Batchfile    |   236945 |   0.70 |
| 24 | CMake        |   175282 |   0.54 |
| 25 | Visual Basic |   155652 |   1.91 |
| 26 | FORTRAN      |   142038 |   1.62 |
| 27 | PowerShell   |   136846 |   0.69 |
| 28 | Assembly     |    82905 |   0.78 |
| 29 | Julia        |    58317 |   0.29 |
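
The two columns can be combined to estimate the mean file size per language. The sketch below does this for four rows copied from the table; it assumes that "GB" means 10^9 bytes, which the README does not state, and `mean_file_size_bytes` is a hypothetical helper name:

```python
# (file count, total size in GB) copied from four rows of the table.
STATS = {
    "Java": (19_548_190, 107.70),
    "C": (14_143_113, 183.83),
    "Python": (7_226_626, 52.03),
    "Julia": (58_317, 0.29),
}

BYTES_PER_GB = 10**9  # assumption: decimal gigabytes


def mean_file_size_bytes(file_count: int, size_gb: float) -> float:
    """Average size of one file in bytes."""
    return size_gb * BYTES_PER_GB / file_count


mean_sizes = {lang: mean_file_size_bytes(*row) for lang, row in STATS.items()}

# Print the languages from largest to smallest mean file size.
for lang, size in sorted(mean_sizes.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{lang:<8}{size / 1000:6.1f} kB")
```

Under that assumption, C files are the largest on average among these four languages, at roughly 13 kB each, while Java, Python, and Julia files average between about 5 kB and 7 kB.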

## Dataset Creation

The dataset was created in two steps:
1. Files with the extensions given in the list above were retrieved from the GitHub dataset on BigQuery (full query [here](https://huggingface.co/datasets/codeparrot/github-code/blob/main/query.sql)). The query was executed on _Mar 16, 2022, 6:23:39 PM UTC+1_.
2. Files with lines longer than 1000 characters and duplicates (exact duplicates ignoring whitespace) were dropped (full preprocessing script [here](https://huggingface.co/datasets/codeparrot/github-code/blob/main/github_preprocessing.py)).
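
The second step can be sketched in a few lines. This is a simplified, single-process illustration, not the preprocessing script itself: `content_hash` and `keep_files` are hypothetical names, and the sample inputs are made up for the example.

```python
import hashlib
import re

WHITESPACE = re.compile(r"\s+")
LINE_MAX = 1000  # maximum allowed line length in characters


def content_hash(text: str) -> str:
    """MD5 of the text with all whitespace removed."""
    return hashlib.md5(WHITESPACE.sub("", text).encode("utf-8")).hexdigest()


def keep_files(files):
    """Yield files that are neither whitespace-insensitive duplicates nor overlong."""
    seen = set()
    for text in files:
        digest = content_hash(text)
        if digest in seen:
            continue  # identical to an earlier file once whitespace is ignored
        seen.add(digest)
        longest = max((len(line) for line in text.splitlines()), default=0)
        if longest > LINE_MAX:
            continue  # contains a line longer than LINE_MAX characters
        yield text


files = [
    "x = 1\ny = 2\n",
    "x=1\n\ny=2\n",                   # duplicate of the first file up to whitespace
    "data = '" + "a" * 2000 + "'\n",  # one line longer than 1000 characters
    "print('hello')\n",
]
kept = list(keep_files(files))
print(len(kept))  # 2
```

Only the first and last sample files survive: the second hashes to the same digest as the first, and the third exceeds the line-length limit.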

## Considerations for Using the Data

The dataset consists of source code from a wide range of repositories. As such, it can potentially include harmful or biased code as well as sensitive information like passwords or usernames.

## Releases

You can load any older version of the dataset with the `revision` argument:

```Python
ds = load_dataset("codeparrot/github-code", revision="v1.0")
```

### v1.0
- Initial release of dataset
- The query was executed on _Feb 14, 2022, 12:03:16 PM UTC+1_

### v1.1
- Fix missing Scala/TypeScript
- Fix deduplication issue with inconsistent Python `hash`
- The query was executed on _Mar 16, 2022, 6:23:39 PM UTC+1_

data/train-00000-of-01126.parquet

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:58dfa4284bec1d8c75c1cbb578cb688d727aa2724d1ebc2199d8239871b101d6
size 391105

gitattributes.txt

ADDED

@@ -0,0 +1,37 @@

*.7z filter=lfs diff=lfs merge=lfs -text
*.arrow filter=lfs diff=lfs merge=lfs -text
*.bin filter=lfs diff=lfs merge=lfs -text
*.bin.* filter=lfs diff=lfs merge=lfs -text
*.bz2 filter=lfs diff=lfs merge=lfs -text
*.ftz filter=lfs diff=lfs merge=lfs -text
*.gz filter=lfs diff=lfs merge=lfs -text
*.h5 filter=lfs diff=lfs merge=lfs -text
*.joblib filter=lfs diff=lfs merge=lfs -text
*.lfs.* filter=lfs diff=lfs merge=lfs -text
*.model filter=lfs diff=lfs merge=lfs -text
*.msgpack filter=lfs diff=lfs merge=lfs -text
*.onnx filter=lfs diff=lfs merge=lfs -text
*.ot filter=lfs diff=lfs merge=lfs -text
*.parquet filter=lfs diff=lfs merge=lfs -text
*.pb filter=lfs diff=lfs merge=lfs -text
*.pt filter=lfs diff=lfs merge=lfs -text
*.pth filter=lfs diff=lfs merge=lfs -text
*.rar filter=lfs diff=lfs merge=lfs -text
saved_model/**/* filter=lfs diff=lfs merge=lfs -text
*.tar.* filter=lfs diff=lfs merge=lfs -text
*.tflite filter=lfs diff=lfs merge=lfs -text
*.tgz filter=lfs diff=lfs merge=lfs -text
*.xz filter=lfs diff=lfs merge=lfs -text
*.zip filter=lfs diff=lfs merge=lfs -text
*.zstandard filter=lfs diff=lfs merge=lfs -text
*tfevents* filter=lfs diff=lfs merge=lfs -text
# Audio files - uncompressed
*.pcm filter=lfs diff=lfs merge=lfs -text
*.sam filter=lfs diff=lfs merge=lfs -text
*.raw filter=lfs diff=lfs merge=lfs -text
# Audio files - compressed
*.aac filter=lfs diff=lfs merge=lfs -text
*.flac filter=lfs diff=lfs merge=lfs -text
*.mp3 filter=lfs diff=lfs merge=lfs -text
*.ogg filter=lfs diff=lfs merge=lfs -text
*.wav filter=lfs diff=lfs merge=lfs -text

github-code-stats-alpha.png

ADDED


github-code.py

ADDED

@@ -0,0 +1,206 @@

# coding=utf-8
# Copyright 2020 The HuggingFace Datasets Authors and the current dataset script contributor.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
"""GitHub Code dataset."""

import os

import pyarrow as pa
import pyarrow.parquet as pq

import datasets

_REPO_NAME = "codeparrot/github-code"

_LANG_TO_EXTENSION = {
    "Assembly": [".asm"],
    "Batchfile": [".bat", ".cmd"],
    "C": [".c", ".h"],
    "C#": [".cs"],
    "C++": [".cpp", ".hpp", ".c++", ".h++", ".cc", ".hh", ".C", ".H"],
    "CMake": [".cmake"],
    "CSS": [".css"],
    "Dockerfile": [".dockerfile", "Dockerfile"],
    "FORTRAN": ['.f90', '.f', '.f03', '.f08', '.f77', '.f95', '.for', '.fpp'],
    "GO": [".go"],
    "Haskell": [".hs"],
    "HTML":[".html"],
    "Java": [".java"],
    "JavaScript": [".js"],
    "Julia": [".jl"],
    "Lua": [".lua"],
    "Makefile": ["Makefile"],
    "Markdown": [".md", ".markdown"],
    "PHP": [".php", ".php3", ".php4", ".php5", ".phps", ".phpt"],
    "Perl": [".pl", ".pm", ".pod", ".perl"],
    "PowerShell": ['.ps1', '.psd1', '.psm1'],
    "Python": [".py"],
    "Ruby": [".rb"],
    "Rust": [".rs"],
    "SQL": [".sql"],
    "Scala": [".scala"],
    "Shell": [".sh", ".bash", ".command", ".zsh"],
    "TypeScript": [".ts", ".tsx"],
    "TeX": [".tex"],
    "Visual Basic": [".vb"]
}

_LICENSES = ['mit',
 'apache-2.0',
 'gpl-3.0',
 'gpl-2.0',
 'bsd-3-clause',
 'agpl-3.0',
 'lgpl-3.0',
 'lgpl-2.1',
 'bsd-2-clause',
 'cc0-1.0',
 'epl-1.0',
 'mpl-2.0',
 'unlicense',
 'isc',
 'artistic-2.0']

_DESCRIPTION = """\
The GitHub Code dataset consists of 115M code files from GitHub in 30 programming \
languages with over 60 extensions, totaling 1TB of text data. The dataset was created \
from the GitHub dataset on BigQuery.
"""

_HOMEPAGE = "https://cloud.google.com/blog/topics/public-datasets/github-on-bigquery-analyze-all-the-open-source-code/"


# Reverse lookup: file suffix -> language name.
_EXTENSION_TO_LANG = {}
for lang in _LANG_TO_EXTENSION:
    for extension in _LANG_TO_EXTENSION[lang]:
        _EXTENSION_TO_LANG[extension] = lang



_LANG_CONFIGS = ["all"] + list(_LANG_TO_EXTENSION.keys())
_LICENSE_CONFIGS = ["all"] + _LICENSES

class GithubCodeConfig(datasets.BuilderConfig):
    """BuilderConfig for the GitHub Code dataset."""

    def __init__(self, *args, languages=["all"], licenses=["all"], **kwargs):
        """BuilderConfig for the GitHub Code dataset.

        Args:
            languages (:obj:`List[str]`): List of languages to load.
            licenses (:obj:`List[str]`): List of licenses to load.
            **kwargs: keyword arguments forwarded to super.
        """
        super().__init__(
            *args,
            name="+".join(languages)+"-"+"+".join(licenses),
            **kwargs,
        )

        languages = set(languages)
        licenses = set(licenses)

        assert all([language in _LANG_CONFIGS for language in languages]), f"Language not in {_LANG_CONFIGS}."
        assert all([license in _LICENSE_CONFIGS for license in licenses]), f"License not in {_LICENSE_CONFIGS}."

        # "all" disables filtering and must not be combined with other values.
        if "all" in languages:
            assert len(languages)==1, "Passed 'all' together with other languages."
            self.filter_languages = False
        else:
            self.filter_languages = True

        if "all" in licenses:
            assert len(licenses)==1, "Passed 'all' together with other licenses."
            self.filter_licenses = False
        else:
            self.filter_licenses = True

        self.languages = set(languages)
        self.licenses = set(licenses)



class GithubCode(datasets.GeneratorBasedBuilder):
    """GitHub Code dataset."""

    VERSION = datasets.Version("1.0.0")

    BUILDER_CONFIG_CLASS = GithubCodeConfig
    BUILDER_CONFIGS = [GithubCodeConfig(languages=[lang], licenses=[license]) for lang in _LANG_CONFIGS
                                                                              for license in _LICENSE_CONFIGS]
    DEFAULT_CONFIG_NAME = "all-all"


    def _info(self):
        return datasets.DatasetInfo(
            description=_DESCRIPTION,
            features=datasets.Features({"code": datasets.Value("string"),
                                        "repo_name": datasets.Value("string"),
                                        "path": datasets.Value("string"),
                                        "language": datasets.Value("string"),
                                        "license": datasets.Value("string"),
                                        "size": datasets.Value("int32")}),
            supervised_keys=None,
            homepage=_HOMEPAGE,
            license="Multiple: see the 'license' field of each sample.",

        )

    def _split_generators(self, dl_manager):
        num_shards = 1126
        data_files = [
            f"data/train-{_index:05d}-of-{num_shards:05d}.parquet"
            for _index in range(num_shards)
        ]
        files = dl_manager.download(data_files)
        return [
            datasets.SplitGenerator(
                name=datasets.Split.TRAIN,
                gen_kwargs={
                    "files": files,
                },
            ),
        ]

    def _generate_examples(self, files):
        key = 0
        for file_idx, file in enumerate(files):
            with open(file, "rb") as f:
                parquet_file = pq.ParquetFile(f)
                # Read each shard in batches to keep memory usage bounded.
                for batch_idx, record_batch in enumerate(parquet_file.iter_batches(batch_size=10_000)):
                    pa_table = pa.Table.from_batches([record_batch])
                    for row_index in range(pa_table.num_rows):
                        row = pa_table.slice(row_index, 1).to_pydict()

                        lang = lang_from_name(row['path'][0])
                        license = row["license"][0]

                        if self.config.filter_languages and lang not in self.config.languages:
                            continue
                        if self.config.filter_licenses and license not in self.config.licenses:
                            continue

                        yield key, {"code": row['content'][0],
                                    "repo_name": row['repo_name'][0],
                                    "path": row['path'][0],
                                    "license": license,
                                    "language": lang,
                                    "size": int(row['size'][0])}
                        key += 1


def lang_from_name(name):
    # Returns None implicitly when no known suffix matches.
    for extension in _EXTENSION_TO_LANG:
        if name.endswith(extension):
            return _EXTENSION_TO_LANG[extension]
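
Two naming rules in this script are easy to check in isolation: the config name built in `GithubCodeConfig.__init__` and the shard paths built in `_split_generators`. The sketch below reproduces both as standalone functions; `config_name` and `shard_paths` are hypothetical helper names used only for illustration:

```python
NUM_SHARDS = 1126  # value hard-coded in `_split_generators`


def config_name(languages, licenses):
    """Join languages and licenses the same way `GithubCodeConfig.__init__` does."""
    return "+".join(languages) + "-" + "+".join(licenses)


def shard_paths(num_shards=NUM_SHARDS):
    """Relative Parquet paths following the pattern used in `_split_generators`."""
    return [f"data/train-{i:05d}-of-{num_shards:05d}.parquet" for i in range(num_shards)]


print(config_name(["all"], ["all"]))                # all-all
print(config_name(["Dockerfile"], ["mit", "isc"]))  # Dockerfile-mit+isc

paths = shard_paths()
print(paths[0])   # data/train-00000-of-01126.parquet
print(paths[-1])  # data/train-01125-of-01126.parquet
```

The first generated path matches the Parquet file added in this commit, and `all-all` matches `DEFAULT_CONFIG_NAME`.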

github_preprocessing.py

ADDED

@@ -0,0 +1,146 @@

| 1 |

+

import gzip

|

| 2 |

+

import multiprocessing

|

| 3 |

+

import os

|

| 4 |

+

import shutil

|

| 5 |

+

import time

|

| 6 |

+

from argparse import Namespace

|

| 7 |

+

from collections import Counter

|

| 8 |

+

import numpy as np

|

| 9 |

+

from datasets import load_dataset, utils

|

| 10 |

+

import re

|

| 11 |

+

from huggingface_hub import Repository

|

| 12 |

+

from multiprocessing import Pool

|

| 13 |

+

from tqdm import tqdm

|

| 14 |

+

|

| 15 |

+

# Settings

|

| 16 |

+

config = {

|

| 17 |

+

"dataset_name": "./data/github",

|

| 18 |

+

"num_workers": 96,

|

| 19 |

+

"line_max": 1000,

|

| 20 |

+

"out_path": "./data/github-code",

|

| 21 |

+

"repo_name": "github-code",

|

| 22 |

+

"org": "lvwerra",

|

| 23 |

+

"shard_size": 1000 << 20}

|

| 24 |

+

|

| 25 |

+

args = Namespace(**config)

|

| 26 |

+

|

| 27 |

+

PATTERN = re.compile(r'\s+')

|

| 28 |

+

|

| 29 |

+

|

| 30 |

+

def hash_func(text):

|

| 31 |

+

return hashlib.md5(re.sub(PATTERN, '', text).encode("utf-8")).hexdigest()

|

| 32 |

+

|

| 33 |

+

def get_hash(example):

|

| 34 |

+

"""Get hash of content field."""

|

| 35 |

+

return {"hash": hash_func(example["content"])}

|

| 36 |

+

|

| 37 |

+

|

| 38 |

+

def line_stats(example):

|

| 39 |

+

"""Calculates mean and max line length of file."""

|

| 40 |

+

line_lengths = [len(line) for line in example["content"].splitlines()]

|

| 41 |

+

return {"line_mean": np.mean(line_lengths), "line_max": max(line_lengths)}

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

def alpha_stats(example):

|

| 45 |

+

"""Calculates mean and max line length of file."""

|

| 46 |

+

alpha_frac = np.mean([c.isalnum() for c in example["content"]])

|

| 47 |

+

return {"alpha_frac": alpha_frac}

|

| 48 |

+

|

| 49 |

+

|

| 50 |

+

def check_uniques(example, uniques):

|

| 51 |

+

"""Check if current hash is still in set of unique hashes and remove if true."""

|

| 52 |

+

if example["hash"] in uniques:

|

| 53 |

+

uniques.remove(example["hash"])

|

| 54 |

+

return True

|

| 55 |

+

else:

|

| 56 |

+

return False


def is_autogenerated(example, scan_width=5):
    """Check if file is autogenerated by looking for keywords in the first few lines of the file."""
    keywords = ["auto-generated", "autogenerated", "automatically generated"]
    lines = example["content"].splitlines()
    for _, line in zip(range(scan_width), lines):
        for keyword in keywords:
            if keyword in line.lower():
                return {"autogenerated": True}
    else:
        return {"autogenerated": False}


def preprocess(example):
    """Chain all preprocessing steps into one function to not fill cache."""
    results = dict()
    results.update(get_hash(example))
    results.update(line_stats(example))
    return results


def filter(example, uniques, args):
    """Filter dataset with heuristics."""
    if not check_uniques(example, uniques):
        return False
    elif example["line_max"] > args.line_max:
        return False
    else:
        return True

def save_shard(shard_tuple):
    """Save shard"""
    filename, shard = shard_tuple
    shard.to_parquet(filename)

# Load dataset
t_start = time.time()
ds = load_dataset(args.dataset_name, split="train", chunksize=40<<20)
print(f"Time to load dataset: {time.time()-t_start:.2f}")

# Run preprocessing
t_start = time.time()
ds = ds.map(preprocess, num_proc=args.num_workers)
print(f"Time to preprocess dataset: {time.time()-t_start:.2f}")
print(ds)

# Deduplicate hashes
uniques = set(ds.unique("hash"))
frac = len(uniques) / len(ds)
print(f"Fraction of duplicates: {1-frac:.2%}")

# Deduplicate data and apply heuristics
t_start = time.time()
ds = ds.filter(filter, fn_kwargs={"uniques": uniques, "args": args})
ds = ds.remove_columns(["line_mean", "line_max", "copies", "hash"])
print(f"Time to filter dataset: {time.time()-t_start:.2f}")
print(f"Size of filtered dataset: {len(ds)}")


# Save dataset in repo
repo = Repository(
    local_dir=args.out_path,
    clone_from=args.org + "/" + args.repo_name,
    repo_type="dataset",
    private=True,
    use_auth_token=True,
    git_user="lvwerra",
    git_email="leandro.vonwerra@gmail.com",
)

os.mkdir(args.out_path + "/data")

if ds._indices is not None:
    dataset_nbytes = ds.data.nbytes * len(ds._indices) / len(ds.data)
else:
    dataset_nbytes = ds.data.nbytes

num_shards = int(dataset_nbytes / args.shard_size) + 1
print(f"Number of shards: {num_shards}")

t_start = time.time()
shards = (ds.shard(num_shards=num_shards, index=i, contiguous=True) for i in range(num_shards))
filenames = (f"{args.out_path}/data/train-{index:05d}-of-{num_shards:05d}.parquet" for index in range(num_shards))

with Pool(16) as p:
    list(tqdm(p.imap_unordered(save_shard, zip(filenames, shards), chunksize=4), total=num_shards))
print(f"Time to save dataset: {time.time()-t_start:.2f}")

# To push to hub run `git add/commit/push` inside dataset repo folder

query.sql

ADDED

|

@@ -0,0 +1,84 @@

SELECT
  f.repo_name,
  f.path,
  c.copies,
  c.size,
  c.content,
  l.license
FROM
  (SELECT f.*, ROW_NUMBER() OVER (PARTITION BY id ORDER BY path DESC) AS seqnum
   FROM `bigquery-public-data.github_repos.files` AS f) f
JOIN
  `bigquery-public-data.github_repos.contents` AS c
ON
  f.id = c.id AND seqnum=1
JOIN
  `bigquery-public-data.github_repos.licenses` AS l
ON
  f.repo_name = l.repo_name
WHERE
  NOT c.binary
  AND ((f.path LIKE '%.asm'
      OR f.path LIKE '%.bat'
      OR f.path LIKE '%.cmd'
      OR f.path LIKE '%.c'
      OR f.path LIKE '%.h'
      OR f.path LIKE '%.cs'
      OR f.path LIKE '%.cpp'
      OR f.path LIKE '%.hpp'
      OR f.path LIKE '%.c++'
      OR f.path LIKE '%.h++'
      OR f.path LIKE '%.cc'
      OR f.path LIKE '%.hh'
      OR f.path LIKE '%.C'
      OR f.path LIKE '%.H'
      OR f.path LIKE '%.cmake'
      OR f.path LIKE '%.css'
      OR f.path LIKE '%.dockerfile'
      OR f.path LIKE '%.f90'
      OR f.path LIKE '%.f'
      OR f.path LIKE '%.f03'
      OR f.path LIKE '%.f08'
      OR f.path LIKE '%.f77'
      OR f.path LIKE '%.f95'
      OR f.path LIKE '%.for'
      OR f.path LIKE '%.fpp'
      OR f.path LIKE '%.go'
      OR f.path LIKE '%.hs'
      OR f.path LIKE '%.html'
      OR f.path LIKE '%.java'
      OR f.path LIKE '%.js'
      OR f.path LIKE '%.jl'
      OR f.path LIKE '%.lua'
      OR f.path LIKE '%.md'
      OR f.path LIKE '%.markdown'
      OR f.path LIKE '%.php'
      OR f.path LIKE '%.php3'
      OR f.path LIKE '%.php4'
      OR f.path LIKE '%.php5'
      OR f.path LIKE '%.phps'
      OR f.path LIKE '%.phpt'
      OR f.path LIKE '%.pl'
      OR f.path LIKE '%.pm'
      OR f.path LIKE '%.pod'
      OR f.path LIKE '%.perl'
      OR f.path LIKE '%.ps1'
      OR f.path LIKE '%.psd1'
      OR f.path LIKE '%.psm1'
      OR f.path LIKE '%.py'
      OR f.path LIKE '%.rb'
      OR f.path LIKE '%.rs'
      OR f.path LIKE '%.sql'
      OR f.path LIKE '%.scala'
      OR f.path LIKE '%.sh'
      OR f.path LIKE '%.bash'
      OR f.path LIKE '%.command'
      OR f.path LIKE '%.zsh'
      OR f.path LIKE '%.ts'
      OR f.path LIKE '%.tsx'
      OR f.path LIKE '%.tex'
      OR f.path LIKE '%.vb'
      OR f.path LIKE '%Dockerfile'
      OR f.path LIKE '%Makefile')
    AND (c.size BETWEEN 0
      AND 1048575))