Update README.md

Browse files

README.md

CHANGED

|

@@ -11,6 +11,12 @@ licenses:

|

|

| 11 |

|

| 12 |

## General concept of the model

|

| 13 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 14 |

#### Inappropraiteness intuition

|

| 15 |

This model is trained on the dataset of inappropriate messages of the Russian language. Generally, an inappropriate utterance is an utterance that has not obscene words or any kind of toxic intent, but can still harm the reputation of the speaker. Find some sample for more intuition in the table below. Learn more about the concept of inappropriateness [in this article ](https://www.aclweb.org/anthology/2021.bsnlp-1.4/) presented at the workshop for Balto-Slavic NLP at the EACL-2021 conference. Please note that this article describes the first version of the dataset, while the model is trained on the extended version of the dataset open-sourced on our [GitHub](https://github.com/skoltech-nlp/inappropriate-sensitive-topics/blob/main/Version2/appropriateness/Appropriateness.csv) or on [kaggle](https://www.kaggle.com/nigula/russianinappropriatemessages). The properties of the dataset are the same as the one described in the article, the only difference is the size.

|

| 16 |

|

|

@@ -34,11 +40,7 @@ The model was trained, validated, and tested only on the samples with 100% confi

|

|

| 34 |

| macro avg | 0.86 | 0.85 | 0.85 | 10565 |

|

| 35 |

| weighted avg | 0.89 | 0.89 | 0.89 | 10565 |

|

| 36 |

|

| 37 |

-

#### Proposed usage

|

| 38 |

-

|

| 39 |

-

The 'inappropriateness' substance we tried to collect in the dataset and detect with the model is not a substitution of toxicity, it is rather a derivative of toxicity. So the model based on our dataset could serve as an additional layer of inappropriateness filtering after toxicity and obscenity filtration.

|

| 40 |

|

| 41 |

-

|

| 42 |

|

| 43 |

## Licensing Information

|

| 44 |

|

|

|

|

| 11 |

|

| 12 |

## General concept of the model

|

| 13 |

|

| 14 |

+

#### Proposed usage

|

| 15 |

+

|

| 16 |

+

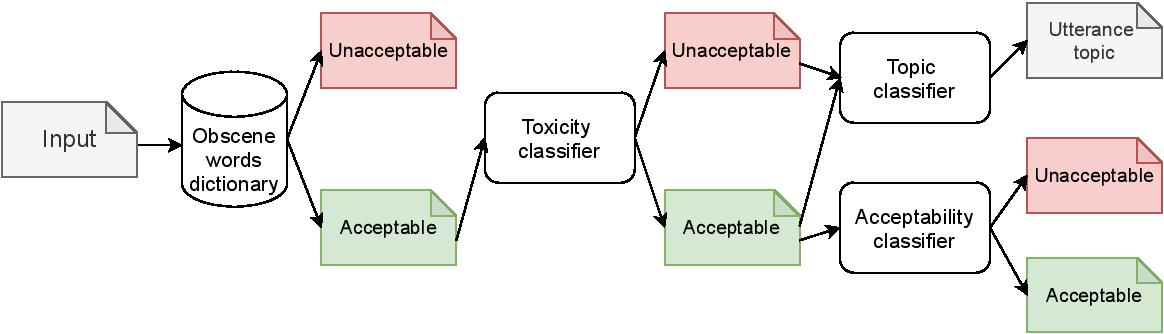

The **'inappropriateness'** substance we tried to collect in the dataset and detect with the model **is NOT a substitution of toxicity**, it is rather a derivative of toxicity. So the model based on our dataset could serve as **an additional layer of inappropriateness filtering after toxicity and obscenity filtration**. You can detect the exact sensitive topic by using [another model](https://huggingface.co/Skoltech/russian-sensitive-topics). The proposed pipeline is shown in the scheme below.

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

|

| 20 |

#### Inappropraiteness intuition

|

| 21 |

This model is trained on the dataset of inappropriate messages of the Russian language. Generally, an inappropriate utterance is an utterance that has not obscene words or any kind of toxic intent, but can still harm the reputation of the speaker. Find some sample for more intuition in the table below. Learn more about the concept of inappropriateness [in this article ](https://www.aclweb.org/anthology/2021.bsnlp-1.4/) presented at the workshop for Balto-Slavic NLP at the EACL-2021 conference. Please note that this article describes the first version of the dataset, while the model is trained on the extended version of the dataset open-sourced on our [GitHub](https://github.com/skoltech-nlp/inappropriate-sensitive-topics/blob/main/Version2/appropriateness/Appropriateness.csv) or on [kaggle](https://www.kaggle.com/nigula/russianinappropriatemessages). The properties of the dataset are the same as the one described in the article, the only difference is the size.

|

| 22 |

|

|

|

|

| 40 |

| macro avg | 0.86 | 0.85 | 0.85 | 10565 |

|

| 41 |

| weighted avg | 0.89 | 0.89 | 0.89 | 10565 |

|

| 42 |

|

|

|

|

|

|

|

|

|

|

| 43 |

|

|

|

|

| 44 |

|

| 45 |

## Licensing Information

|

| 46 |

|