Model save

- README.md +109 -0

- adapter_model.bin +1 -1

- cf.png +0 -0

- cf.txt +7 -7

- class_report.txt +7 -7

README.md (added)

---
license: mit
base_model: roberta-large
tags:
- generated_from_trainer
metrics:
- accuracy
- recall
- f1
model-index:
- name: lora-roberta-large-no-anger-f4-0927
  results: []
---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You
should probably proofread and complete it, then remove this comment. -->

# lora-roberta-large-no-anger-f4-0927

This model is a fine-tuned version of [roberta-large](https://huggingface.co/roberta-large) on an unknown dataset.
It achieves the following results on the evaluation set:
- Loss: 0.7423
- Accuracy: 0.7389
- Prec: 0.7379
- Recall: 0.7389
- F1: 0.7381
- B Acc: 0.6013
- Micro F1: 0.7389
- Prec Joy: 0.7415
- Recall Joy: 0.7893
- F1 Joy: 0.7646
- Prec Anger: 0.6020
- Recall Anger: 0.5728
- F1 Anger: 0.5871
- Prec Disgust: 0.4013
- Recall Disgust: 0.3896
- F1 Disgust: 0.3953
- Prec Fear: 0.5341
- Recall Fear: 0.5296
- F1 Fear: 0.5318
- Prec Neutral: 0.8416
- Recall Neutral: 0.8279
- F1 Neutral: 0.8347
- Prec Sadness: 0.6410
- Recall Sadness: 0.6338
- F1 Sadness: 0.6374
- Prec Surprise: 0.5093
- Recall Surprise: 0.4663
- F1 Surprise: 0.4869
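The results list mixes per-class scores with aggregates, and the card does not define its abbreviations. Judging from the numbers alone, "B Acc" matches balanced accuracy, i.e. the unweighted mean of the seven per-class recalls. The short Python sketch below recomputes it, along with macro-averaged precision and F1, from the per-class values listed above; the names `PER_CLASS` and `macro_average` are illustrative only.

```python
# Per-class (precision, recall, f1) as listed in the evaluation results above.
PER_CLASS = {
    "joy": (0.7415, 0.7893, 0.7646),
    "anger": (0.6020, 0.5728, 0.5871),
    "disgust": (0.4013, 0.3896, 0.3953),
    "fear": (0.5341, 0.5296, 0.5318),
    "neutral": (0.8416, 0.8279, 0.8347),
    "sadness": (0.6410, 0.6338, 0.6374),
    "surprise": (0.5093, 0.4663, 0.4869),
}

PRECISION, RECALL, F1 = 0, 1, 2


def macro_average(column: int) -> float:
    """Unweighted mean of one score column across all classes."""
    values = [scores[column] for scores in PER_CLASS.values()]
    return sum(values) / len(values)


# Balanced accuracy is the macro-averaged (unweighted) recall.
balanced_accuracy = macro_average(RECALL)
macro_precision = macro_average(PRECISION)
macro_f1 = macro_average(F1)

print(f"balanced accuracy: {balanced_accuracy:.4f}")
print(f"macro precision:   {macro_precision:.4f}")
print(f"macro F1:          {macro_f1:.4f}")
```

The three values round to the `macro avg` row of `class_report.txt` (0.61, 0.60, 0.61). "Micro F1" equals Accuracy for a separate reason: with exactly one true and one predicted label per example, micro-averaged precision, recall and F1 all reduce to the fraction of correct predictions.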

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:
- learning_rate: 0.001
- train_batch_size: 32
- eval_batch_size: 32
- seed: 42
- gradient_accumulation_steps: 4
- total_train_batch_size: 128
- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08
- lr_scheduler_type: linear
- lr_scheduler_warmup_ratio: 0.05
- num_epochs: 25.0
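Gradient accumulation explains `total_train_batch_size`: gradients from 4 consecutive batches of 32 are accumulated before each optimizer step, so each update sees 32 × 4 = 128 examples (assuming a single device). The `linear` scheduler with a 0.05 warmup ratio raises the learning rate from 0 to 0.001 over the first 5% of optimizer steps and then decays it linearly to 0. The sketch below reproduces that shape in plain Python; `TOTAL_STEPS` is a hypothetical placeholder because the card does not give the step count, and rounding the warmup length up is an assumption about how the ratio becomes a step count.

```python
import math

# Values from the hyperparameter list above.
LEARNING_RATE = 1e-3
TRAIN_BATCH_SIZE = 32
GRADIENT_ACCUMULATION_STEPS = 4
WARMUP_RATIO = 0.05

# Hypothetical: the card does not state the total number of optimizer steps.
TOTAL_STEPS = 1000


def effective_batch_size(per_step_batch: int, accumulation_steps: int, num_devices: int = 1) -> int:
    """Examples that contribute to one optimizer update (single device by default)."""
    return per_step_batch * accumulation_steps * num_devices


def linear_schedule_lr(step: int, total_steps: int, warmup_steps: int, peak_lr: float) -> float:
    """Linear warmup from 0 to peak_lr, then linear decay back to 0 at total_steps."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    return peak_lr * max(0, total_steps - step) / max(1, total_steps - warmup_steps)


# Assumption: the warmup ratio is converted to whole steps by rounding up.
warmup_steps = math.ceil(TOTAL_STEPS * WARMUP_RATIO)

print("effective batch size:", effective_batch_size(TRAIN_BATCH_SIZE, GRADIENT_ACCUMULATION_STEPS))
for step in (0, warmup_steps // 2, warmup_steps, TOTAL_STEPS // 2, TOTAL_STEPS):
    lr = linear_schedule_lr(step, TOTAL_STEPS, warmup_steps, LEARNING_RATE)
    print(f"step {step:>5}: lr = {lr:.6f}")
```

The peak of the schedule is the listed `learning_rate`; only the step at which the peak occurs and the length of the decay depend on the unknown total step count.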

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Prec | Recall | F1 | B Acc | Micro F1 | Prec Joy | Recall Joy | F1 Joy | Prec Anger | Recall Anger | F1 Anger | Prec Disgust | Recall Disgust | F1 Disgust | Prec Fear | Recall Fear | F1 Fear | Prec Neutral | Recall Neutral | F1 Neutral | Prec Sadness | Recall Sadness | F1 Sadness | Prec Surprise | Recall Surprise | F1 Surprise |
|:-------------:|:-----:|:-----:|:---------------:|:--------:|:------:|:------:|:------:|:------:|:--------:|:--------:|:----------:|:------:|:----------:|:------------:|:--------:|:------------:|:--------------:|:----------:|:---------:|:-----------:|:-------:|:------------:|:--------------:|:----------:|:------------:|:--------------:|:----------:|:-------------:|:---------------:|:-----------:|
| 0.8167 | 1.25 | 2049 | 0.7756 | 0.7130 | 0.7003 | 0.7130 | 0.7030 | 0.5272 | 0.7130 | 0.7252 | 0.7430 | 0.7340 | 0.6026 | 0.3749 | 0.4622 | 0.4187 | 0.3646 | 0.3898 | 0.5369 | 0.4170 | 0.4694 | 0.7763 | 0.8629 | 0.8173 | 0.6123 | 0.5784 | 0.5949 | 0.4797 | 0.3495 | 0.4044 |
| 0.7639 | 2.5 | 4098 | 0.7302 | 0.7293 | 0.7206 | 0.7293 | 0.7224 | 0.5662 | 0.7293 | 0.7361 | 0.7617 | 0.7487 | 0.6187 | 0.5198 | 0.5649 | 0.3881 | 0.4229 | 0.4048 | 0.5851 | 0.4247 | 0.4922 | 0.7961 | 0.8570 | 0.8254 | 0.6380 | 0.6185 | 0.6281 | 0.532 | 0.3585 | 0.4283 |
| 0.7395 | 3.75 | 6147 | 0.7348 | 0.7287 | 0.7328 | 0.7287 | 0.7271 | 0.5793 | 0.7287 | 0.6989 | 0.8136 | 0.7519 | 0.6786 | 0.4384 | 0.5327 | 0.4180 | 0.3875 | 0.4022 | 0.4632 | 0.5830 | 0.5162 | 0.8480 | 0.8134 | 0.8303 | 0.6648 | 0.5950 | 0.6280 | 0.5210 | 0.4241 | 0.4676 |
| 0.789 | 5.0 | 8196 | 0.7419 | 0.7275 | 0.7206 | 0.7275 | 0.7180 | 0.5511 | 0.7275 | 0.6888 | 0.8113 | 0.7450 | 0.6014 | 0.5183 | 0.5568 | 0.4038 | 0.4021 | 0.4029 | 0.5747 | 0.4305 | 0.4923 | 0.8063 | 0.8420 | 0.8238 | 0.6861 | 0.5838 | 0.6308 | 0.6224 | 0.2695 | 0.3762 |
| 0.7439 | 6.25 | 10245 | 0.7608 | 0.7207 | 0.7317 | 0.7207 | 0.7224 | 0.5858 | 0.7207 | 0.6882 | 0.8143 | 0.7459 | 0.6198 | 0.5004 | 0.5537 | 0.3944 | 0.3542 | 0.3732 | 0.4556 | 0.5843 | 0.5120 | 0.8599 | 0.7888 | 0.8228 | 0.7047 | 0.5590 | 0.6235 | 0.4535 | 0.4996 | 0.4754 |
| 0.712 | 7.5 | 12294 | 0.7240 | 0.7298 | 0.7270 | 0.7298 | 0.7263 | 0.5809 | 0.7298 | 0.7057 | 0.8043 | 0.7518 | 0.6313 | 0.4795 | 0.5450 | 0.4141 | 0.4271 | 0.4205 | 0.5707 | 0.4517 | 0.5043 | 0.8329 | 0.8214 | 0.8271 | 0.6126 | 0.6459 | 0.6288 | 0.5209 | 0.4367 | 0.4751 |
| 0.7032 | 8.75 | 14343 | 0.7095 | 0.7344 | 0.7328 | 0.7344 | 0.7317 | 0.5833 | 0.7344 | 0.7557 | 0.7479 | 0.7518 | 0.6391 | 0.5302 | 0.5796 | 0.4311 | 0.3521 | 0.3876 | 0.4724 | 0.6062 | 0.5310 | 0.8188 | 0.8498 | 0.8340 | 0.6472 | 0.6140 | 0.6301 | 0.5605 | 0.3827 | 0.4549 |
| 0.6972 | 10.0 | 16392 | 0.7108 | 0.7343 | 0.7325 | 0.7343 | 0.7317 | 0.5923 | 0.7343 | 0.7158 | 0.8038 | 0.7572 | 0.5785 | 0.5474 | 0.5625 | 0.3615 | 0.4729 | 0.4097 | 0.5714 | 0.4865 | 0.5255 | 0.8322 | 0.8288 | 0.8305 | 0.6797 | 0.5973 | 0.6358 | 0.5403 | 0.4097 | 0.4660 |
| 0.6859 | 11.25 | 18441 | 0.7211 | 0.7376 | 0.7321 | 0.7376 | 0.7322 | 0.5792 | 0.7376 | 0.7067 | 0.8093 | 0.7545 | 0.6216 | 0.5325 | 0.5736 | 0.4119 | 0.4188 | 0.4153 | 0.5720 | 0.4755 | 0.5193 | 0.8264 | 0.8407 | 0.8335 | 0.6677 | 0.6099 | 0.6375 | 0.5876 | 0.3675 | 0.4522 |
| 0.6542 | 12.5 | 20490 | 0.7143 | 0.7347 | 0.7294 | 0.7347 | 0.7307 | 0.5817 | 0.7347 | 0.7358 | 0.7824 | 0.7584 | 0.6263 | 0.5407 | 0.5804 | 0.3931 | 0.3792 | 0.3860 | 0.5700 | 0.4665 | 0.5131 | 0.8203 | 0.8364 | 0.8283 | 0.6158 | 0.6658 | 0.6398 | 0.5400 | 0.4007 | 0.4600 |
| 0.6463 | 13.75 | 22539 | 0.7022 | 0.7369 | 0.7366 | 0.7369 | 0.7354 | 0.5947 | 0.7369 | 0.7371 | 0.7864 | 0.7610 | 0.5452 | 0.6393 | 0.5885 | 0.5170 | 0.3167 | 0.3928 | 0.5519 | 0.4858 | 0.5168 | 0.8455 | 0.8218 | 0.8335 | 0.6062 | 0.6649 | 0.6342 | 0.5320 | 0.4483 | 0.4866 |
| 0.6333 | 15.0 | 24588 | 0.7106 | 0.7405 | 0.7387 | 0.7405 | 0.7387 | 0.5982 | 0.7405 | 0.7558 | 0.7617 | 0.7587 | 0.6294 | 0.5631 | 0.5944 | 0.4637 | 0.3854 | 0.4209 | 0.4892 | 0.5817 | 0.5315 | 0.8292 | 0.8481 | 0.8385 | 0.6600 | 0.6140 | 0.6362 | 0.5320 | 0.4331 | 0.4775 |
| 0.6184 | 16.25 | 26637 | 0.7199 | 0.7338 | 0.7389 | 0.7338 | 0.7348 | 0.6077 | 0.7338 | 0.7207 | 0.8008 | 0.7586 | 0.6140 | 0.5571 | 0.5842 | 0.3692 | 0.4292 | 0.3969 | 0.5024 | 0.5972 | 0.5457 | 0.8534 | 0.8079 | 0.8301 | 0.6714 | 0.6 | 0.6337 | 0.5109 | 0.4618 | 0.4851 |
| 0.5916 | 17.5 | 28686 | 0.7220 | 0.7368 | 0.7376 | 0.7368 | 0.7363 | 0.6003 | 0.7368 | 0.7426 | 0.7859 | 0.7636 | 0.5858 | 0.5713 | 0.5784 | 0.3743 | 0.4125 | 0.3925 | 0.5766 | 0.4653 | 0.5150 | 0.8479 | 0.8258 | 0.8367 | 0.5879 | 0.6676 | 0.6252 | 0.5146 | 0.4735 | 0.4932 |
| 0.5823 | 18.75 | 30735 | 0.7228 | 0.7376 | 0.7374 | 0.7376 | 0.7364 | 0.5960 | 0.7376 | 0.7210 | 0.8058 | 0.7610 | 0.6206 | 0.5534 | 0.5851 | 0.4056 | 0.3625 | 0.3828 | 0.5199 | 0.5631 | 0.5406 | 0.8460 | 0.8200 | 0.8328 | 0.6599 | 0.6126 | 0.6354 | 0.5254 | 0.4546 | 0.4875 |
| 0.5728 | 20.0 | 32784 | 0.7313 | 0.7344 | 0.7365 | 0.7344 | 0.7349 | 0.6090 | 0.7344 | 0.7295 | 0.7934 | 0.7601 | 0.5795 | 0.5907 | 0.5851 | 0.3927 | 0.4271 | 0.4092 | 0.5434 | 0.5161 | 0.5294 | 0.8462 | 0.8115 | 0.8285 | 0.6541 | 0.6311 | 0.6424 | 0.4928 | 0.4933 | 0.4930 |
| 0.5562 | 21.25 | 34833 | 0.7414 | 0.7376 | 0.7372 | 0.7376 | 0.7366 | 0.5995 | 0.7376 | 0.7372 | 0.7934 | 0.7643 | 0.6308 | 0.5258 | 0.5735 | 0.3946 | 0.425 | 0.4092 | 0.5324 | 0.5341 | 0.5332 | 0.8433 | 0.8267 | 0.8349 | 0.6139 | 0.6374 | 0.6254 | 0.5249 | 0.4537 | 0.4867 |
| 0.5348 | 22.5 | 36882 | 0.7398 | 0.7370 | 0.7374 | 0.7370 | 0.7365 | 0.6017 | 0.7370 | 0.7268 | 0.8039 | 0.7634 | 0.5844 | 0.5892 | 0.5868 | 0.4013 | 0.3937 | 0.3975 | 0.5331 | 0.5238 | 0.5284 | 0.8488 | 0.8163 | 0.8322 | 0.6473 | 0.6275 | 0.6372 | 0.5194 | 0.4573 | 0.4864 |
| 0.5202 | 23.75 | 38931 | 0.7423 | 0.7389 | 0.7379 | 0.7389 | 0.7381 | 0.6013 | 0.7389 | 0.7415 | 0.7893 | 0.7646 | 0.6020 | 0.5728 | 0.5871 | 0.4013 | 0.3896 | 0.3953 | 0.5341 | 0.5296 | 0.5318 | 0.8416 | 0.8279 | 0.8347 | 0.6410 | 0.6338 | 0.6374 | 0.5093 | 0.4663 | 0.4869 |
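The evaluation results at the top of the card are identical to the last row of this table (step 38931, epoch 23.75), which is not where any of the headline metrics peaks. The sketch below scans a few columns copied from the table (epoch, step, validation loss, accuracy, B Acc) and reports where each is best; it says nothing about how the saved adapter was selected, which the card does not state.

```python
# (epoch, step, validation_loss, accuracy, b_acc), copied from the training results table.
EVAL_LOG = [
    (1.25, 2049, 0.7756, 0.7130, 0.5272),
    (2.5, 4098, 0.7302, 0.7293, 0.5662),
    (3.75, 6147, 0.7348, 0.7287, 0.5793),
    (5.0, 8196, 0.7419, 0.7275, 0.5511),
    (6.25, 10245, 0.7608, 0.7207, 0.5858),
    (7.5, 12294, 0.7240, 0.7298, 0.5809),
    (8.75, 14343, 0.7095, 0.7344, 0.5833),
    (10.0, 16392, 0.7108, 0.7343, 0.5923),
    (11.25, 18441, 0.7211, 0.7376, 0.5792),
    (12.5, 20490, 0.7143, 0.7347, 0.5817),
    (13.75, 22539, 0.7022, 0.7369, 0.5947),
    (15.0, 24588, 0.7106, 0.7405, 0.5982),
    (16.25, 26637, 0.7199, 0.7338, 0.6077),
    (17.5, 28686, 0.7220, 0.7368, 0.6003),
    (18.75, 30735, 0.7228, 0.7376, 0.5960),
    (20.0, 32784, 0.7313, 0.7344, 0.6090),
    (21.25, 34833, 0.7414, 0.7376, 0.5995),
    (22.5, 36882, 0.7398, 0.7370, 0.6017),
    (23.75, 38931, 0.7423, 0.7389, 0.6013),
]

# Lower is better for loss; higher is better for the two accuracy metrics.
best_by_loss = min(EVAL_LOG, key=lambda row: row[2])
best_by_accuracy = max(EVAL_LOG, key=lambda row: row[3])
best_by_b_acc = max(EVAL_LOG, key=lambda row: row[4])
last = EVAL_LOG[-1]

for name, row in (
    ("lowest validation loss", best_by_loss),
    ("highest accuracy", best_by_accuracy),
    ("highest B Acc", best_by_b_acc),
    ("last evaluation", last),
):
    epoch, step, loss, acc, b_acc = row
    print(f"{name:>22}: epoch {epoch:>5}, step {step:>5}, loss {loss}, accuracy {acc}, B Acc {b_acc}")
```

Evaluations are logged every 2,049 steps. From epoch 13.75 onward the training loss keeps falling (0.6463 to 0.5202) while the validation loss drifts up (0.7022 to 0.7423), a pattern often associated with overfitting.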

### Framework versions

- Transformers 4.33.1
- Pytorch 2.0.1
- Datasets 2.12.0
- Tokenizers 0.13.3

adapter_model.bin (changed)

Before:
version https://git-lfs.github.com/spec/v1
oid sha256:
size 7409629

After:
version https://git-lfs.github.com/spec/v1
oid sha256:1f8ff222407d2a14b396aaa083c085406fe77c5e091b30eac3904fa90c765662
size 7409629
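`adapter_model.bin` is tracked with Git LFS, so the diff shows a three-line pointer file rather than the weights: `oid` is the SHA-256 digest of the file contents and `size` is its length in bytes. Only the `oid` changed here while the size stayed at 7409629 bytes, consistent with updated adapter weights of the same shape. A minimal sketch of how a pointer is derived from file bytes and compared with the one in this commit; the helper names are hypothetical.

```python
import hashlib

LFS_SPEC = "https://git-lfs.github.com/spec/v1"

# The new pointer for adapter_model.bin, as shown in the diff above.
NEW_POINTER = """\
version https://git-lfs.github.com/spec/v1
oid sha256:1f8ff222407d2a14b396aaa083c085406fe77c5e091b30eac3904fa90c765662
size 7409629
"""


def make_lfs_pointer(data: bytes) -> str:
    """Return the pointer text Git LFS stores for these file contents."""
    digest = hashlib.sha256(data).hexdigest()
    return f"version {LFS_SPEC}\noid sha256:{digest}\nsize {len(data)}\n"


def parse_lfs_pointer(text: str) -> dict:
    """Split each 'key value' line of a pointer file into a dictionary entry."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    return fields


def matches_pointer(data: bytes, pointer_text: str) -> bool:
    """True if the bytes have the version, hash and size recorded in the pointer."""
    return parse_lfs_pointer(pointer_text) == parse_lfs_pointer(make_lfs_pointer(data))


print(parse_lfs_pointer(NEW_POINTER))
```

For a local copy, `matches_pointer(pathlib.Path("adapter_model.bin").read_bytes(), NEW_POINTER)` would report whether it is the version from this commit.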

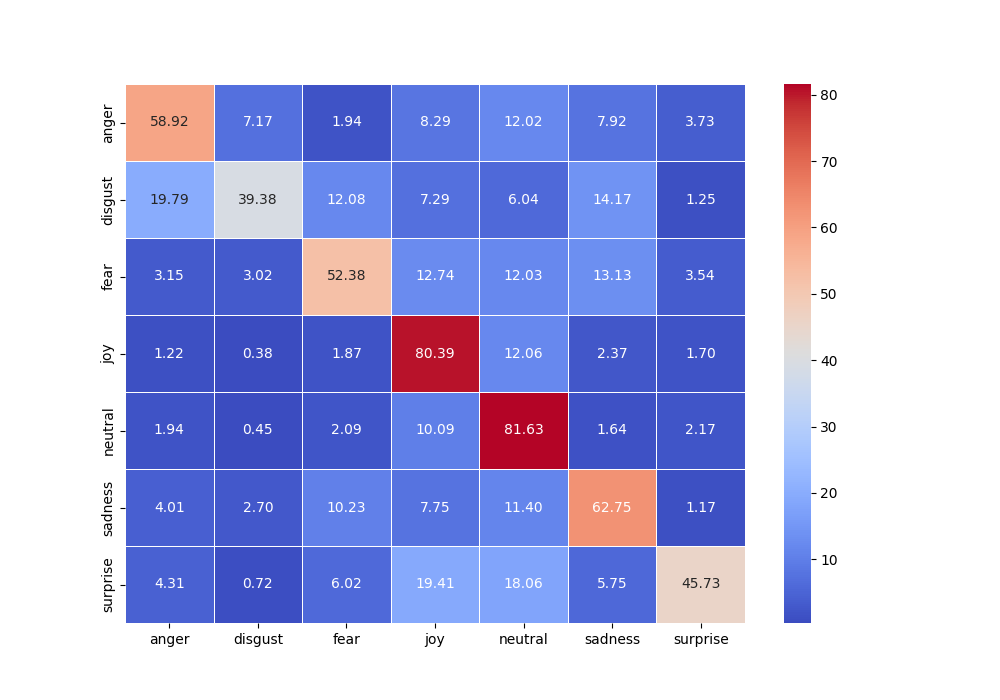

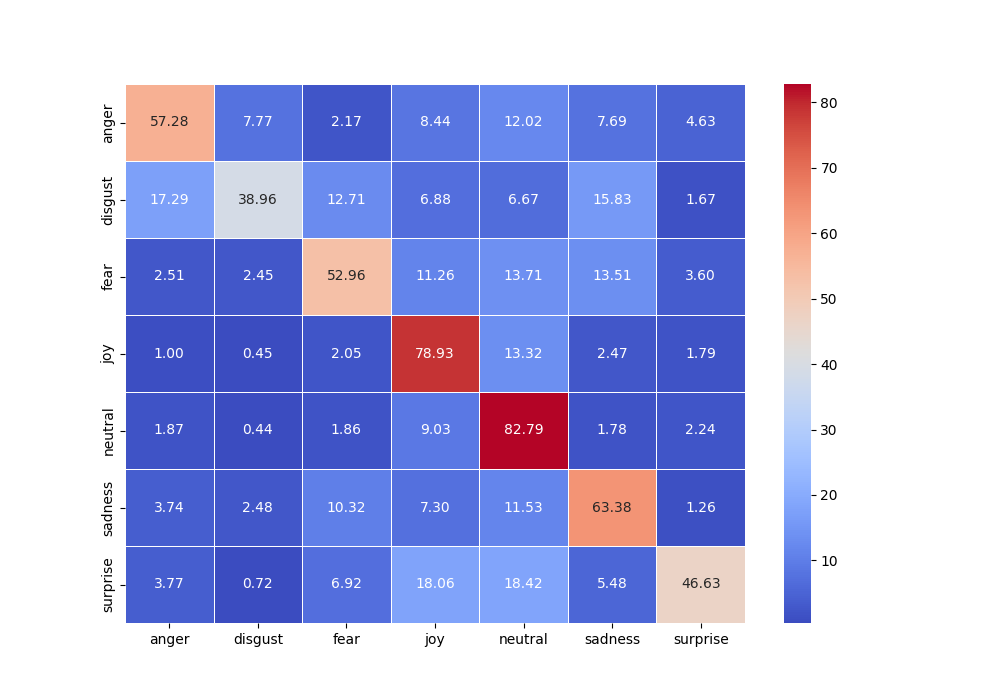

cf.png

CHANGED

|

|

cf.txt (changed)

Before:
5.
1.

1.
1.

After:
5.728155339805824919e-01 7.766990291262135249e-02 2.165795369678864823e-02 8.439133681852128976e-02 1.202389843166542238e-01 7.692307692307692735e-02 4.630321135175503866e-02
1.729166666666666630e-01 3.895833333333333370e-01 1.270833333333333259e-01 6.875000000000000555e-02 6.666666666666666574e-02 1.583333333333333259e-01 1.666666666666666644e-02
2.509652509652509494e-02 2.445302445302445157e-02 5.296010296010296159e-01 1.126126126126126142e-01 1.370656370656370693e-01 1.351351351351351426e-01 3.603603603603603572e-02
1.001168029367595531e-02 4.505256132154179978e-03 2.052394460203570831e-02 7.892541298181211529e-01 1.331553479058902034e-01 2.469547805773402230e-02 1.785416319038878735e-02
1.871082421180653155e-02 4.397043689774534150e-03 1.861727009074749806e-02 9.027972682196651333e-02 8.278604172513799320e-01 1.777528300121620358e-02 2.235943493310880298e-02
3.738738738738738715e-02 2.477477477477477499e-02 1.031531531531531543e-01 7.297297297297297702e-02 1.153153153153153171e-01 6.337837837837837496e-01 1.261261261261261216e-02
3.773584905660377214e-02 7.187780772686433450e-03 6.918238993710691676e-02 1.805929919137466422e-01 1.841868823000898381e-01 5.480682839173404985e-02 4.663072776280323684e-01
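Every row of `cf.txt` sums to 1, and its diagonal matches the per-class recalls in the model card when rows are read in the `class_report.txt` label order. That points to a confusion matrix normalized over true labels (rows are true classes, columns are predictions). Under that reading, which is an inference rather than something the commit states, multiplying each row by its class support recovers integer counts, from which per-class precision, accuracy and balanced accuracy follow. A sketch using only the standard library:

```python
# cf.txt as committed: one line per row, space-separated floats.
CF_TXT = """\
5.728155339805824919e-01 7.766990291262135249e-02 2.165795369678864823e-02 8.439133681852128976e-02 1.202389843166542238e-01 7.692307692307692735e-02 4.630321135175503866e-02
1.729166666666666630e-01 3.895833333333333370e-01 1.270833333333333259e-01 6.875000000000000555e-02 6.666666666666666574e-02 1.583333333333333259e-01 1.666666666666666644e-02
2.509652509652509494e-02 2.445302445302445157e-02 5.296010296010296159e-01 1.126126126126126142e-01 1.370656370656370693e-01 1.351351351351351426e-01 3.603603603603603572e-02
1.001168029367595531e-02 4.505256132154179978e-03 2.052394460203570831e-02 7.892541298181211529e-01 1.331553479058902034e-01 2.469547805773402230e-02 1.785416319038878735e-02
1.871082421180653155e-02 4.397043689774534150e-03 1.861727009074749806e-02 9.027972682196651333e-02 8.278604172513799320e-01 1.777528300121620358e-02 2.235943493310880298e-02
3.738738738738738715e-02 2.477477477477477499e-02 1.031531531531531543e-01 7.297297297297297702e-02 1.153153153153153171e-01 6.337837837837837496e-01 1.261261261261261216e-02
3.773584905660377214e-02 7.187780772686433450e-03 6.918238993710691676e-02 1.805929919137466422e-01 1.841868823000898381e-01 5.480682839173404985e-02 4.663072776280323684e-01
"""

# Assumed row/column order, taken from class_report.txt.
LABELS = ["anger", "disgust", "fear", "joy", "neutral", "sadness", "surprise"]
SUPPORT = [1339, 480, 1554, 5993, 10689, 2220, 1113]  # from class_report.txt

matrix = [[float(x) for x in line.split()] for line in CF_TXT.strip().splitlines()]
size = len(LABELS)

# A matrix normalized over true labels has rows that each sum to 1.
assert all(abs(sum(row) - 1.0) < 1e-9 for row in matrix)

# Undo the normalization: scale each row by its class support and round to counts.
counts = [[round(value * n) for value in row] for row, n in zip(matrix, SUPPORT)]

recalls = [matrix[i][i] for i in range(size)]
precisions = [counts[i][i] / sum(counts[r][i] for r in range(size)) for i in range(size)]
accuracy = sum(counts[i][i] for i in range(size)) / sum(SUPPORT)
balanced_accuracy = sum(recalls) / size

for label, p, r in zip(LABELS, precisions, recalls):
    print(f"{label:>8}: precision {p:.4f}  recall {r:.4f}")
print(f"accuracy {accuracy:.4f}, balanced accuracy {balanced_accuracy:.4f}")
```

With the rows scaled this way, the recomputed precisions, the accuracy (17,282 correct out of 23,388) and the balanced accuracy agree with the values reported in the model card.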

class_report.txt (changed)

Before:

| | precision | recall | f1-score | support |
|:--|--:|--:|--:|--:|
| 0anger | 0. | | | |
| 1disgust | 0.40 | 0.39 | 0.40 | 480 |
| 2fear | 0.53 | 0. | | |
| 3joy | 0. | | | |
| 4neutral | 0. | | | |
| 5sadness | 0. | | | |
| 6surprise | 0. | | | |
| accuracy | | | 0.74 | 23388 |
| macro avg | 0.61 | 0.60 | 0. | |
| weighted avg | 0.74 | 0.74 | 0.74 | 23388 |

After:

| | precision | recall | f1-score | support |
|:--|--:|--:|--:|--:|
| 0anger | 0.60 | 0.57 | 0.59 | 1339 |
| 1disgust | 0.40 | 0.39 | 0.40 | 480 |
| 2fear | 0.53 | 0.53 | 0.53 | 1554 |
| 3joy | 0.74 | 0.79 | 0.76 | 5993 |
| 4neutral | 0.84 | 0.83 | 0.83 | 10689 |
| 5sadness | 0.64 | 0.63 | 0.64 | 2220 |
| 6surprise | 0.51 | 0.47 | 0.49 | 1113 |
| accuracy | | | 0.74 | 23388 |
| macro avg | 0.61 | 0.60 | 0.61 | 23388 |
| weighted avg | 0.74 | 0.74 | 0.74 | 23388 |
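Each `weighted avg` entry is the per-class value averaged with `support` as the weight. Repeating that calculation with the four-decimal per-class scores from the model card reproduces the card's top-level Prec (0.7379), Recall (0.7389) and F1 (0.7381) to within rounding, which suggests, though the card does not say so, that those three entries are support-weighted averages. A minimal sketch with illustrative names:

```python
# Per-class support, from class_report.txt.
SUPPORT = {
    "anger": 1339, "disgust": 480, "fear": 1554, "joy": 5993,
    "neutral": 10689, "sadness": 2220, "surprise": 1113,
}

# Per-class (precision, recall, f1), from the model card's evaluation results.
SCORES = {
    "anger": (0.6020, 0.5728, 0.5871),
    "disgust": (0.4013, 0.3896, 0.3953),
    "fear": (0.5341, 0.5296, 0.5318),
    "joy": (0.7415, 0.7893, 0.7646),
    "neutral": (0.8416, 0.8279, 0.8347),
    "sadness": (0.6410, 0.6338, 0.6374),
    "surprise": (0.5093, 0.4663, 0.4869),
}


def weighted_average(column: int) -> float:
    """Mean of one score column, weighting each class by its support."""
    total = sum(SUPPORT.values())
    return sum(SCORES[label][column] * n for label, n in SUPPORT.items()) / total


for name, column in (("precision", 0), ("recall", 1), ("f1", 2)):
    print(f"weighted {name}: {weighted_average(column):.4f}")
```

Support-weighted recall always equals accuracy: weighting each class's correct/support ratio by its support and dividing by the total leaves just total correct over total examples. That is why the Recall and Accuracy columns are identical in every row of the training results table.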