### A Certain Theory on Lora Transfer

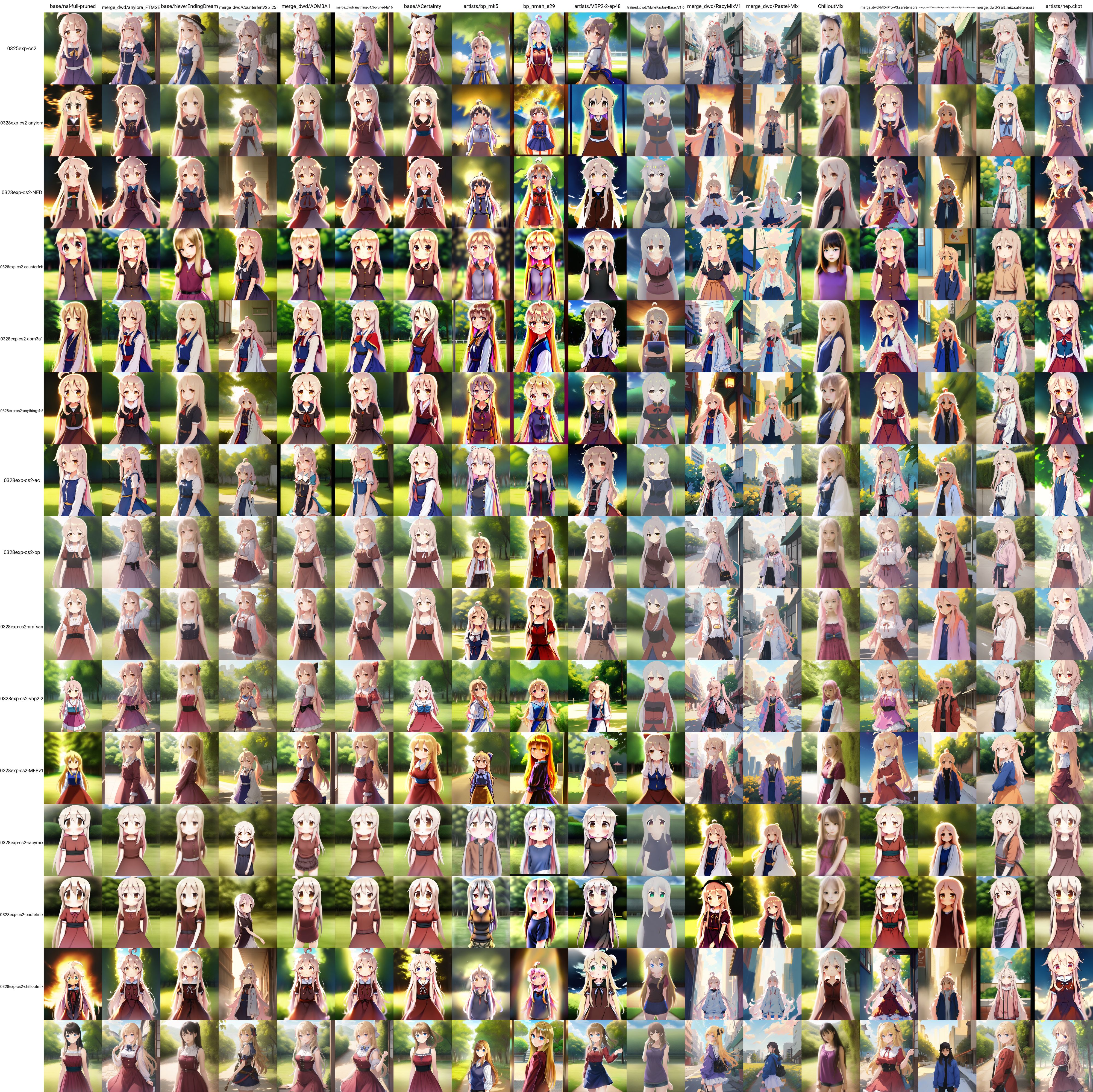

Inspired by the introduction of [AnyLora](https://civitai.com/models/23900/anylora) and an experiment done by @Machi#8166, I decided to further investigate the influence of the base model. Note that you may get different results with a different dataset and hyperparameters, though I believe the general trends should hold. However, some of my results contradict what Machi observed. In particular, Counterfeit does not seem to be a good base model to train on in my experiments. Now, let's dive in.

**I only include JPGs below. You can download the PNGs from https://huggingface.co/alea31415/LyCORIS-experiments/tree/main/generated_samples_0329 to check the details yourself.**

#### Setup

I used the default setup described earlier, except that I set clip skip to 2 to be consistent with what the creator of AnyLora suggests.

I trained on the following 14 models:

1. NAI

2. [AnyLora](https://civitai.com/models/23900/anylora)

3. [NeverEndingDream](https://civitai.com/models/10028) (NED)

4. [Counterfeit-V2.5](https://civitai.com/models/10443/counterfeit-v25-25d-tweak)

5. [Aom3a1](https://civitai.com/models/9942/abyssorangemix3-aom3)

6. [Anything4.5](https://huggingface.co/andite/anything-v4.0/blob/main/anything-v4.5-pruned-fp16.ckpt)

7. [ACertainty](https://huggingface.co/JosephusCheung/ACertainty) (AC)

8. [BP](https://huggingface.co/Crosstyan/BPModel/blob/main/bp_mk5.safetensors)

9. [NMFSAN](https://huggingface.co/Crosstyan/BPModel/blob/main/NMFSAN/README.md)

10. [VBP2.2](https://t.me/StableDiffusion_CN/905650)

11. [MyneFactoryBaseV1.0](https://civitai.com/models/9106/myne-factory-base) (MFB)

12. [RacyMix](https://civitai.com/models/15727/racymix)

13. [PastelMix](https://civitai.com/models/5414/pastel-mix-stylized-anime-model-fantasyai)

14. [ChilloutMix](https://civitai.com/models/6424/chilloutmix)

In addition to the above, we also test on the following models:

15. [Mix-Pro-V3](https://civitai.com/models/7241/mix-pro-v3)

16. [Fantasy Background](https://civitai.com/?query=Fantasy%20Background)

17. [Salt Mix](https://huggingface.co/yatoyun/Salt_mix)

18. nep (the model is not online anymore)

As we will see later, the above models can be roughly separated into the following groups:

- Source (Ancestor) Models: NAI, AC

- Anything/Orange family: AOM, Anything, AnyLora, Counterfeit, NED

- Photorealistic: NeverEndingDream, ChilloutMix

- Pastel: RacyMix, PastelMix

- AC family: ACertainty, BP, NMFSAN (BP is trained from AC and NMFSAN is trained from BP)

- Outliers: the remaining ones, and notably MFB

#### Character Training

Most people who train characters want to be able to switch styles by switching models. In this case you indeed want to train on NAI, or otherwise on the mysterious ACertainty.

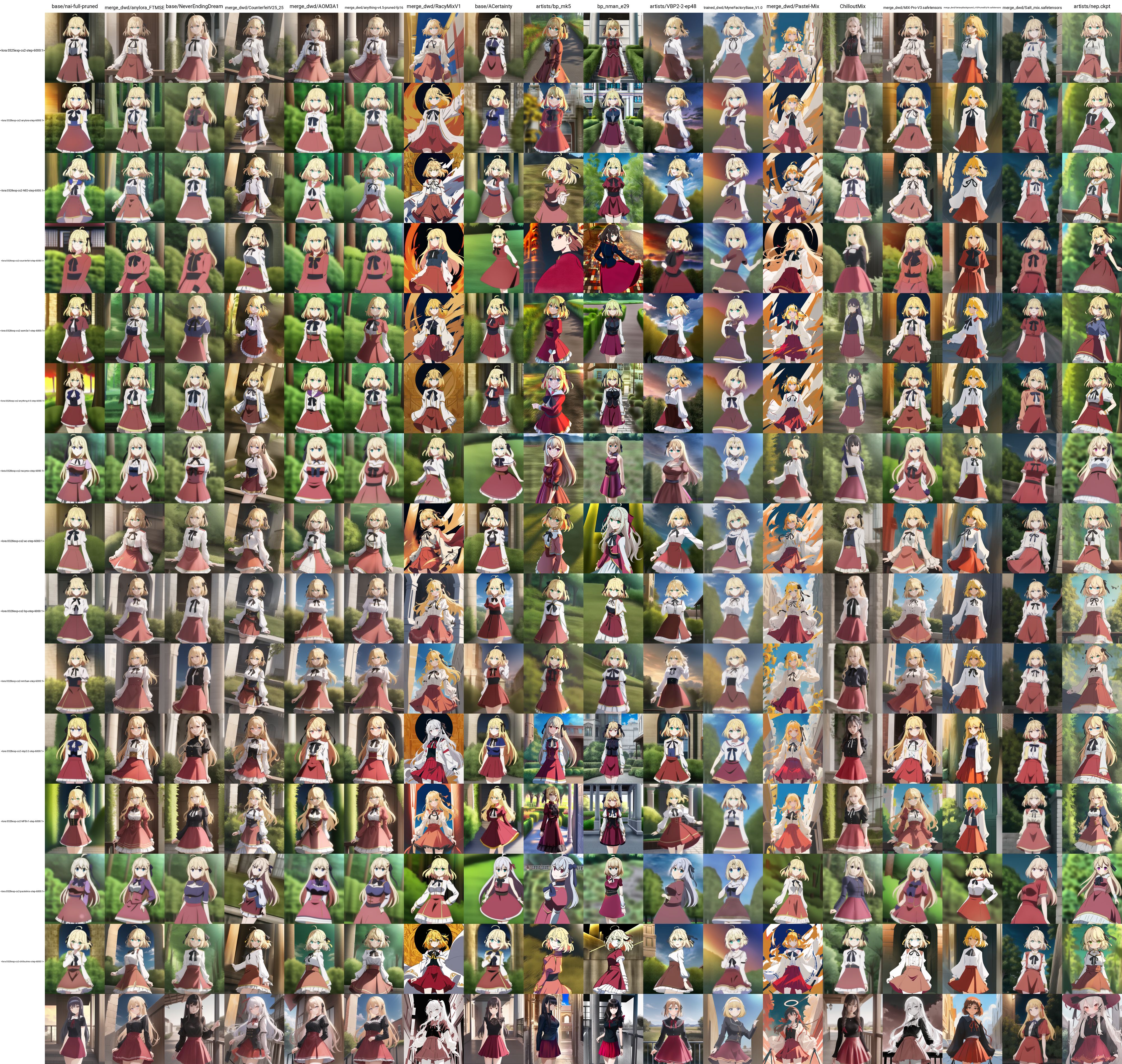

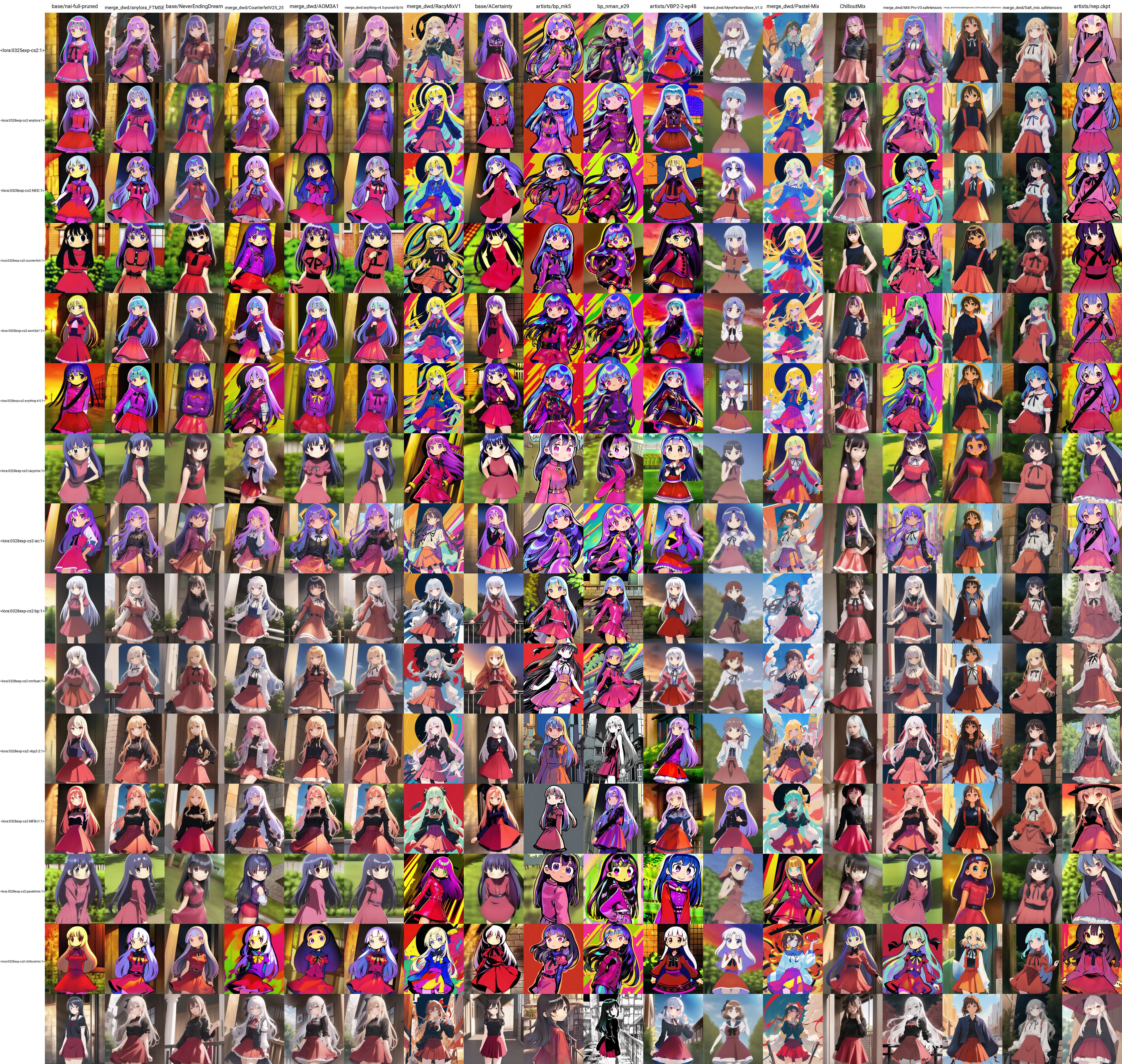

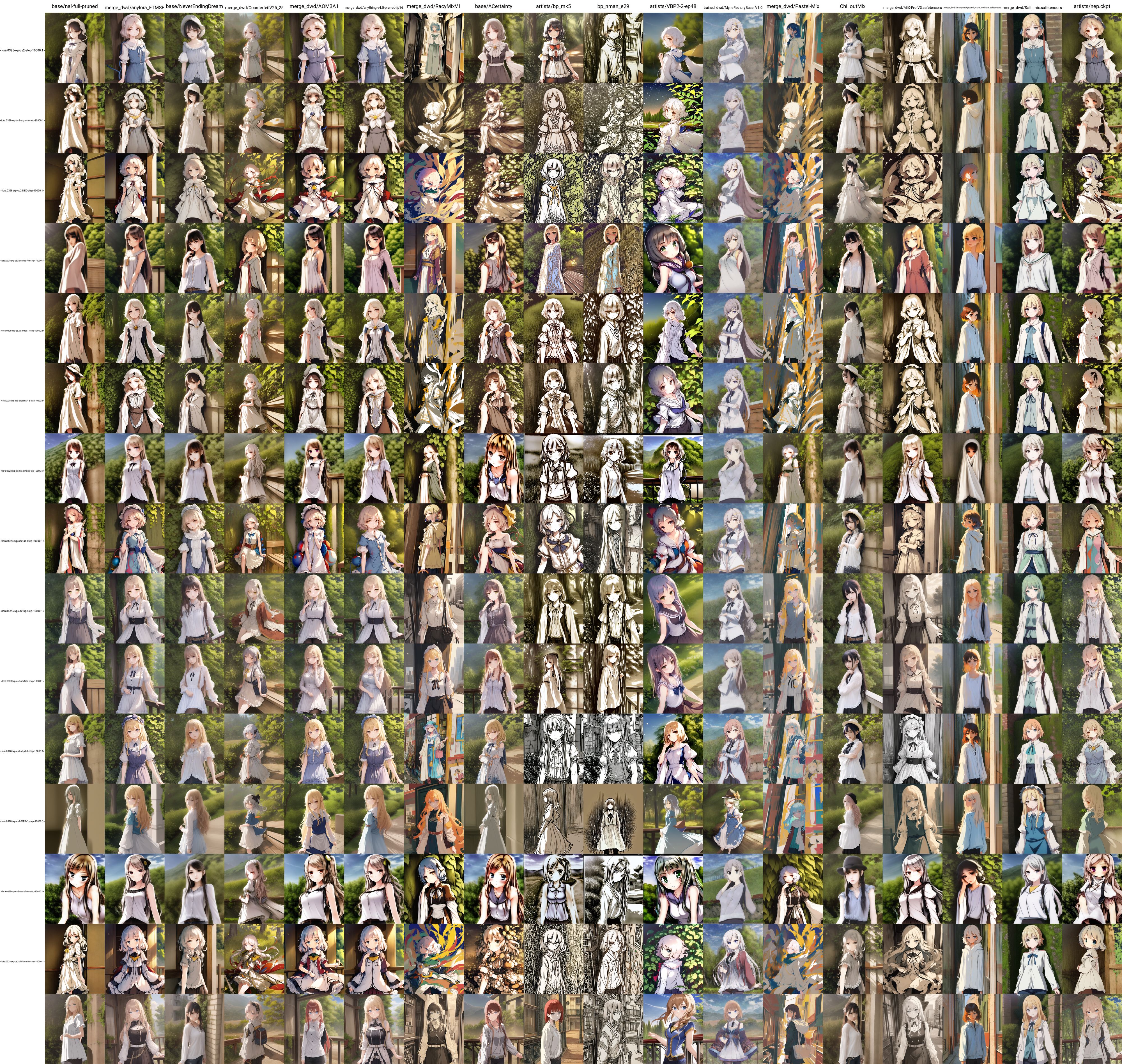

**Anisphia**

**Tilty**

Character training is mostly robust to model transfer. Nonetheless, if you train on a model that is far away from the others, such as MFB or PastelMix, the results can be much worse.

Of course you can have two characters in the same image as well.

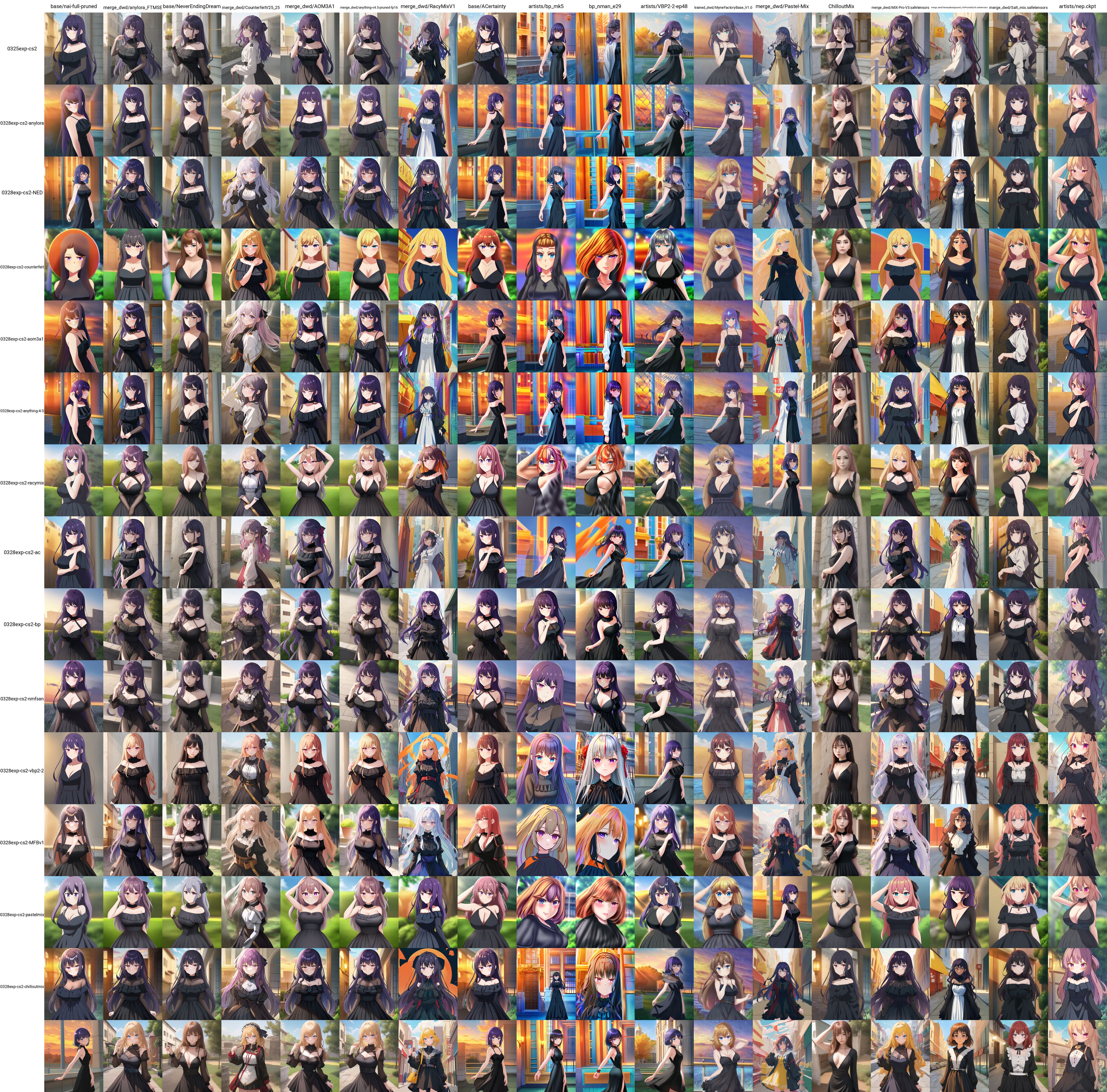

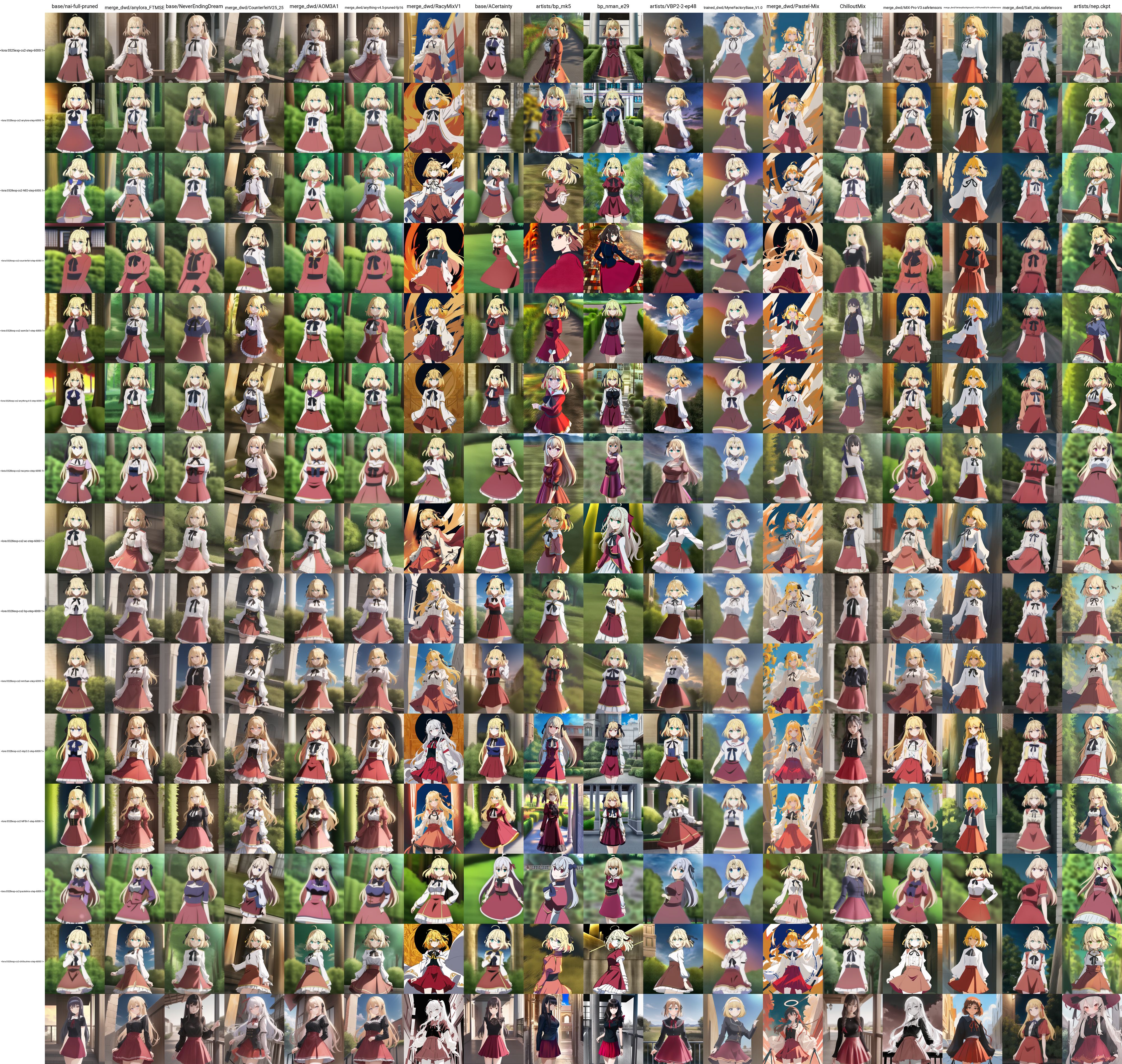

**Anisphia X Euphyllia**

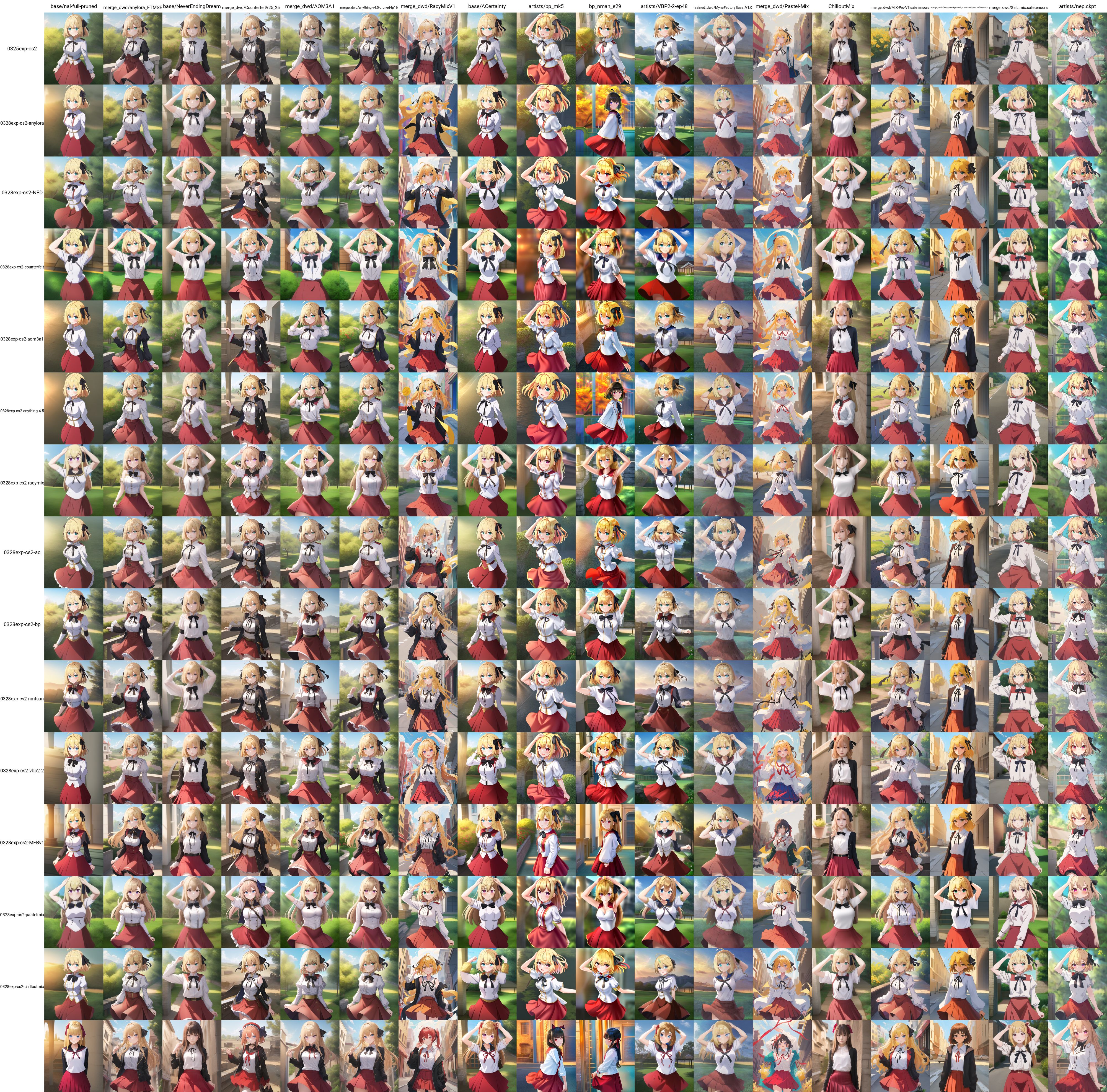

#### Style Training

For style training, people may want to retain the trained style when switching models while still benefiting from other strengths of the new base model (such as more detailed backgrounds). Unfortunately, there does not seem to be a single go-to model for style training. The general trend is that the style is better preserved when the model you train on and the model you generate with are close, and in particular when they belong to the same group described above. This is already visible with the anime styles in the previous images. Let us check some more specific examples.

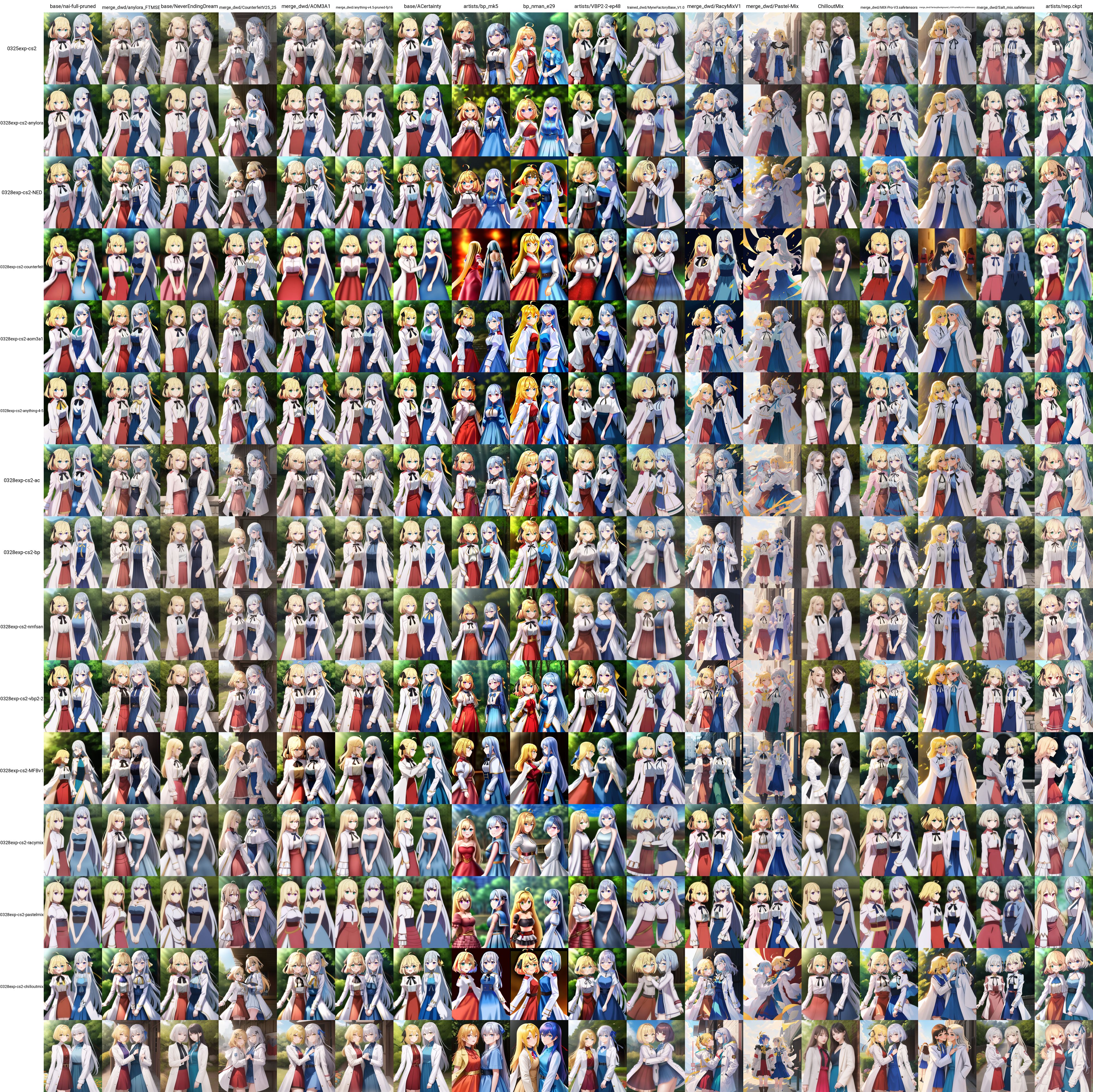

**ke-ta**

**onono imoko**

A lot of people like to use models from the Orange and Anything series. In this case, as claimed, AnyLora can indeed be a good base model for style training. However, training on Anything, AOM, or even NED can work about as well.

It is also worth noting that while LoHas trained on PastelMix and RacyMix generally give bad results on other models, they preserve the style when transferred between these two. Finally, training on ChilloutMix can kill the photorealistic style of NED and vice versa.

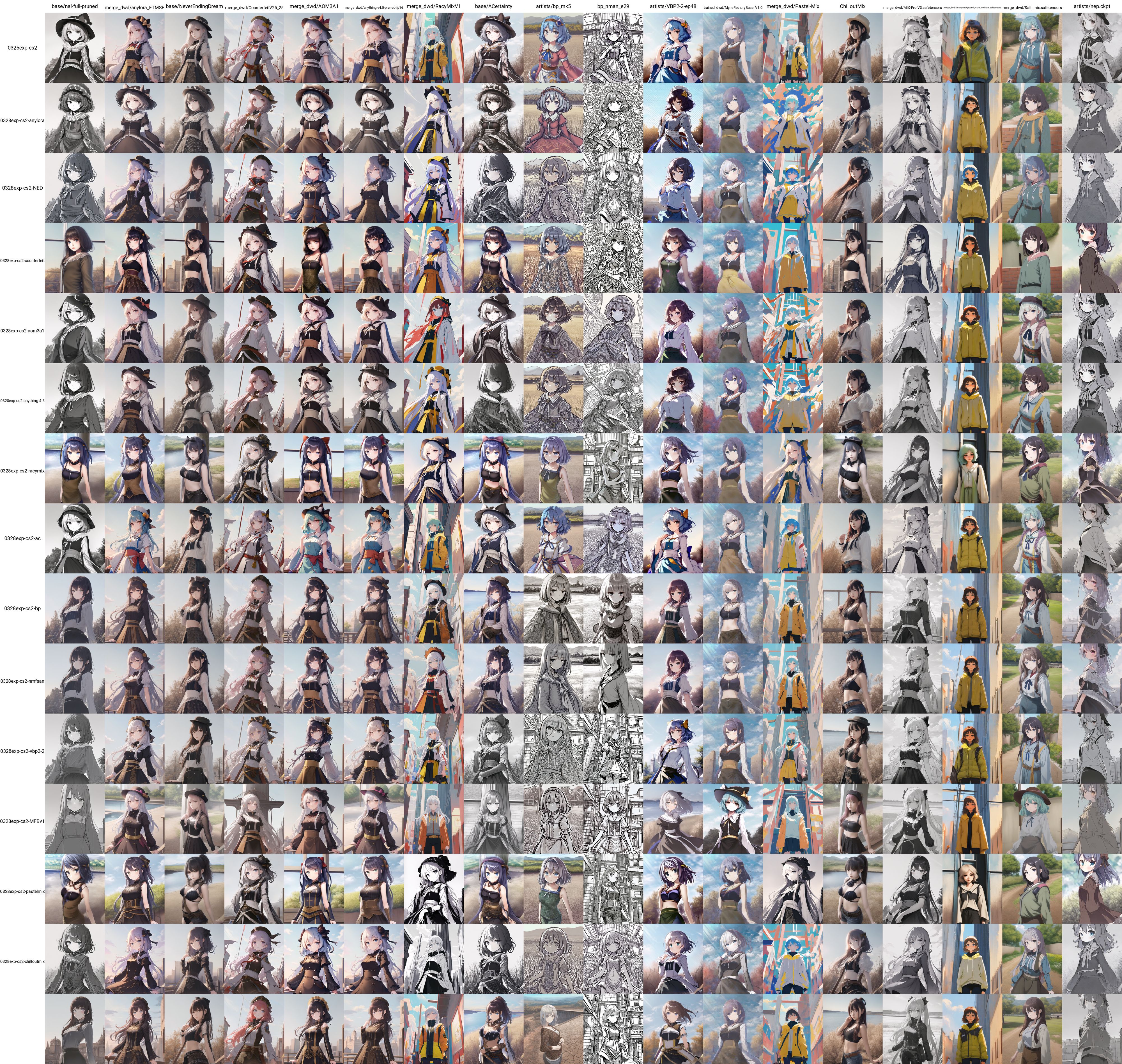

#### Why?

While a thorough explanation of how LoHas transfer between models would require much more investigation, I think one way to explain this is to consider a "style vector" pointing from the source model to every single derived model. When training on a merge of various models, the LoHa compensates for these style vectors, so the trained style is retained when switching to closely related models. On the other hand, the source model is unaware of these style vectors. After training on it and transferring to another model, that model's style vector is added back and we indeed recover the style of the new base model.
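
To make this a bit more concrete, here is a rough linear caricature of the idea. It is an illustrative assumption, not something the experiments measure: treat every model as the source model $A$ plus its own style vector, $M = A + s_M$, and assume that a LoHa trained on $M$ learns roughly the offset $W^\ast - M$ towards some weights $W^\ast$ that reproduce the training data. Applying that LoHa to another model $X = A + s_X$ then gives:

```math
\begin{aligned}
M &= A + s_M, \qquad X = A + s_X,\\
\Delta W_M &\approx W^\ast - M,\\
X + \Delta W_M &\approx W^\ast + (s_X - s_M).
\end{aligned}
```

If you train on the source model ($s_M = 0$), the result carries the full style vector $s_X$ of the new base model; if $M$ and $X$ are close ($s_X \approx s_M$), the two style vectors cancel and the trained style is kept.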

**A Case Study on AC family**

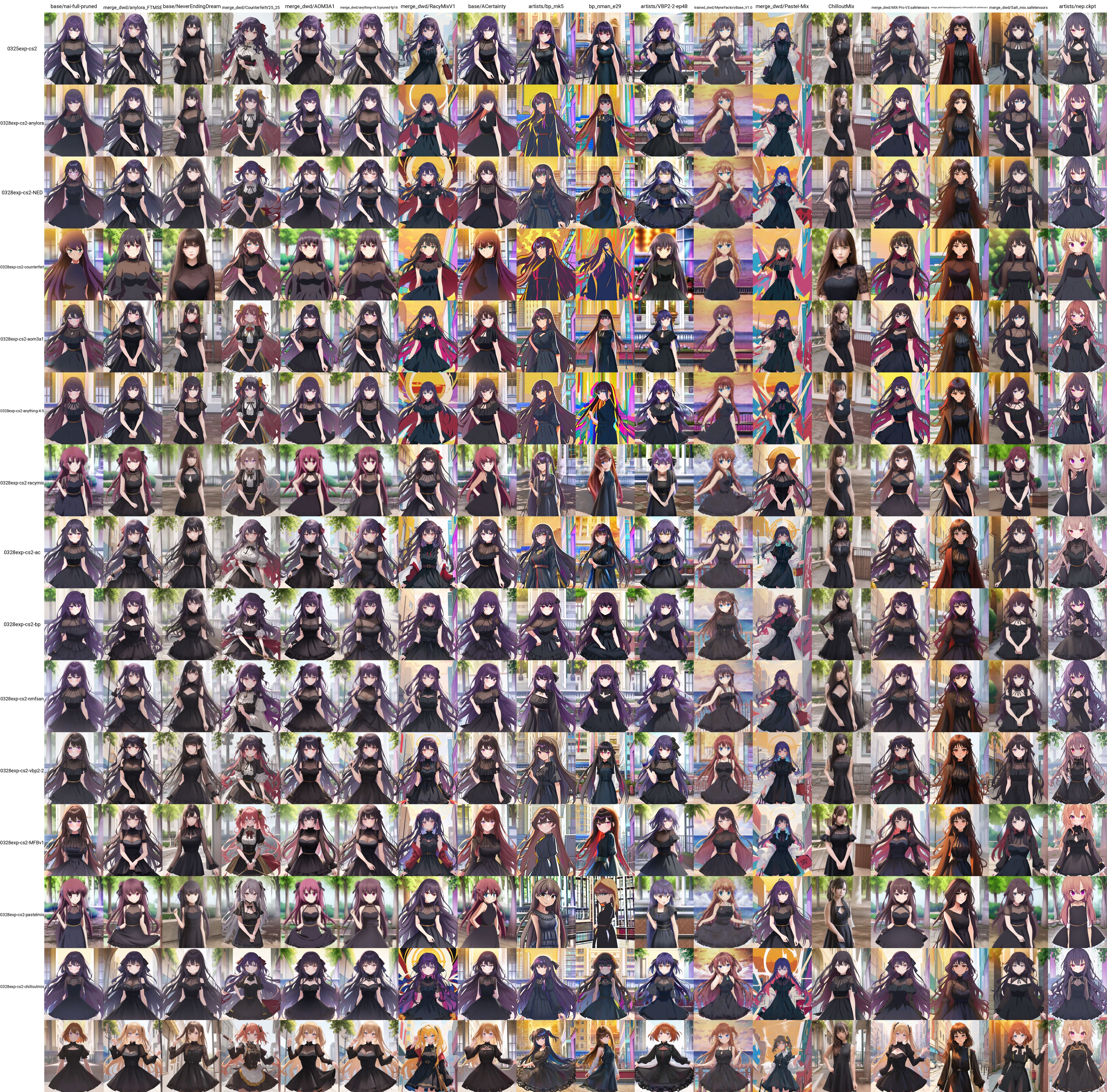

It is known that BP is trained from AC and NMFSAN is trained from BP, so this family provides a good test case for how lineage affects the final result. According to the above theory, training on an upstream model should help more in getting the style of a downstream model, and this indeed seems to be the case.

We get more `fkey` style on NMFSAN with the LoHa trained on AC than with the LoHa trained on NMFSAN itself.

The same holds for `shion`.

On the other hand, styles trained into LoHas are less effective when transferring from a downstream model to an upstream one, because what the LoHa needs to learn on the downstream model may be much less than what the upstream model would need. We can also observe in the earlier examples that LoHas trained on AnyLora can give bad results on NAI.
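
The AC → BP → NMFSAN chain can be played through with a few made-up numbers. This is a toy sketch under simplifying assumptions, not a model of real training: weights add linearly, each checkpoint is its parent plus a style offset, and an adapter learns exactly the difference between its training base and some target weights. The three-dimensional "weights", the offsets, the target and the helpers `train_adapter`/`apply_adapter` are all hypothetical; axis 1 stands for a trained style that NMFSAN, hypothetically, already partly has.

```python
# Toy "style vector" model of adapter transfer. Assumptions (not measured):
#   * every checkpoint = its parent's weights + a style offset,
#   * an adapter trained on a base learns exactly (target - base),
#   * applying an adapter just adds its delta to the new base.
# All numbers are made up for illustration.

def add(*vectors):
    """Element-wise sum of equally long tuples."""
    return tuple(sum(xs) for xs in zip(*vectors))

def sub(a, b):
    """Element-wise difference a - b."""
    return tuple(x - y for x, y in zip(a, b))

def train_adapter(base, target):
    """Hypothetical idealised training: the delta that moves base onto target."""
    return sub(target, base)

def apply_adapter(base, delta):
    """Load the adapter at weight 1.0 on top of a (possibly different) base."""
    return add(base, delta)

# Lineage: BP is trained from AC, NMFSAN is trained from BP.
AC = (0.0, 0.0, 0.0)
s_BP = (1.0, 0.0, 0.0)      # offset BP adds on top of AC
s_NMF = (0.0, 1.0, 0.0)     # offset NMFSAN adds on top of BP
BP = add(AC, s_BP)
NMFSAN = add(BP, s_NMF)

# Weights that would reproduce the training data; axis 1 is the trained style,
# which (hypothetically) NMFSAN already partly has.
target = (0.0, 1.5, 5.0)

lo_ac = train_adapter(AC, target)       # (0.0, 1.5, 5.0)
lo_nmf = train_adapter(NMFSAN, target)  # (-1.0, 0.5, 5.0): learns less style

# Upstream -> downstream: NMFSAN's own offsets come on top of the target.
print(apply_adapter(NMFSAN, lo_ac))     # (1.0, 2.5, 5.0)
# Same model: the target is reproduced exactly.
print(apply_adapter(NMFSAN, lo_nmf))    # (0.0, 1.5, 5.0)
# Downstream -> upstream: only 0.5 of the trained style survives on AC.
print(apply_adapter(AC, lo_nmf))        # (-1.0, 0.5, 5.0)
```

In this caricature the AC-trained adapter gives more of the axis-1 style on NMFSAN than the NMFSAN-trained one, and the NMFSAN-trained adapter under-delivers on AC because it only had to learn the remaining 0.5.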

**Implication for Making Cosplay Images**

To summarize, if you want to get the style of some model X, instead of training directly on X it would be better to train on an ancestor of X that does not contain this style.

Therefore, if you want cosplay images of characters, you can do either of the following:

- Train on NED and add a decent number of photos to the regularization set

- Train on AnyLora and transfer to NED

Lykon did show some successful results by training only on anime images on NED, but I doubt this is really optimal. In fact, he additionally uses a doll LoRa to reinforce the photorealistic concept. It may be simpler to just do what I suggest above.

**Some Myths**

Clearly, what really matters is how the model is made, not what its outputs look like. Being versatile in style does not make a model a good base model for every kind of training. In fact, VBP2.2 has around 300 styles trained in, but LoHas trained on top of it do not transfer well to other models.

Similarly, two models producing similar styles does not mean that LoHas transfer well between them. Both MFB and Salt Mix have a strong anime-screenshot style, but a LoHa trained on MFB does not transfer well to Salt Mix.

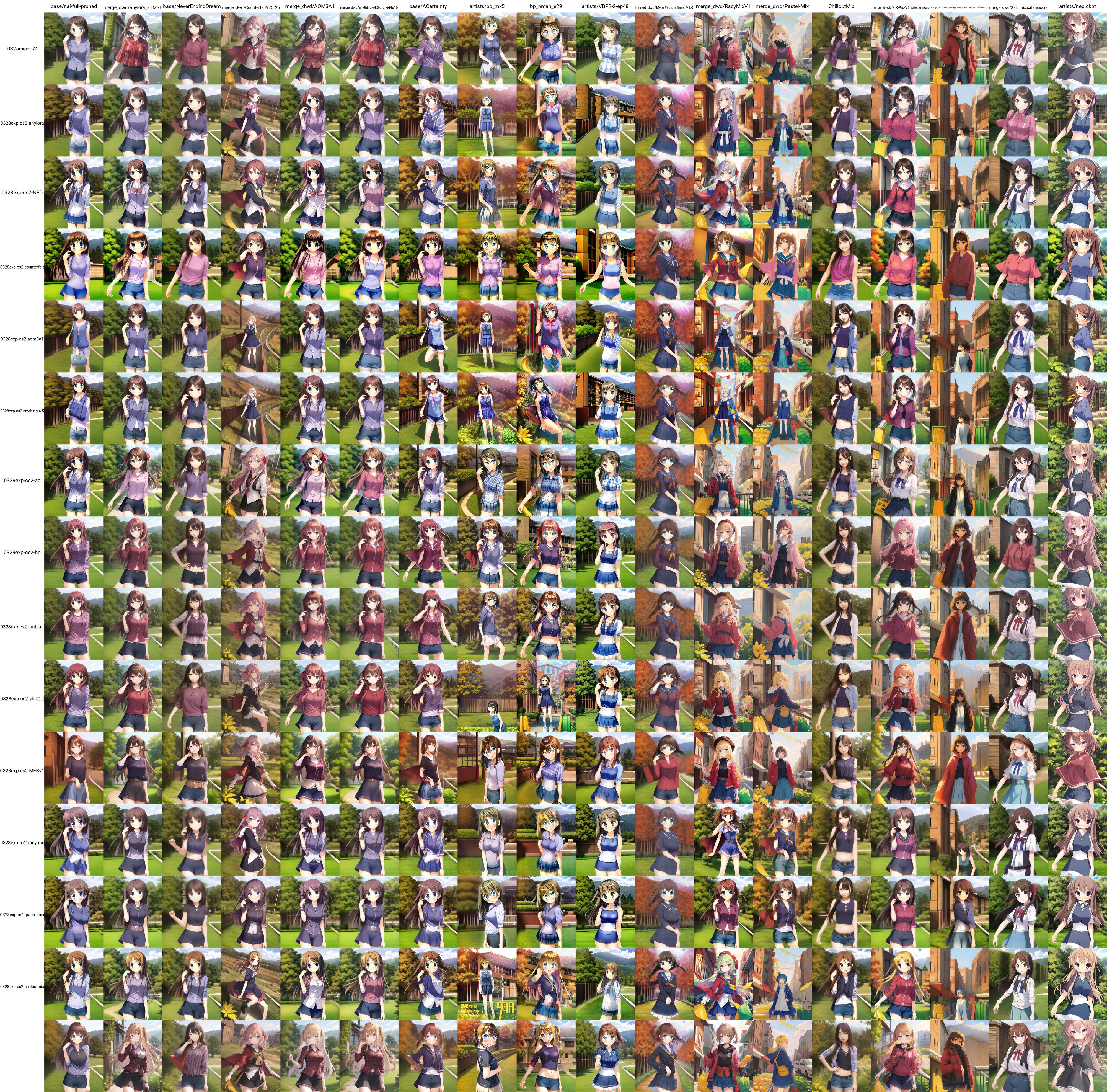

#### Training Speed

It has also been suggested that training is faster on AnyLora. I looked into this in several ways but did not see a clear difference.

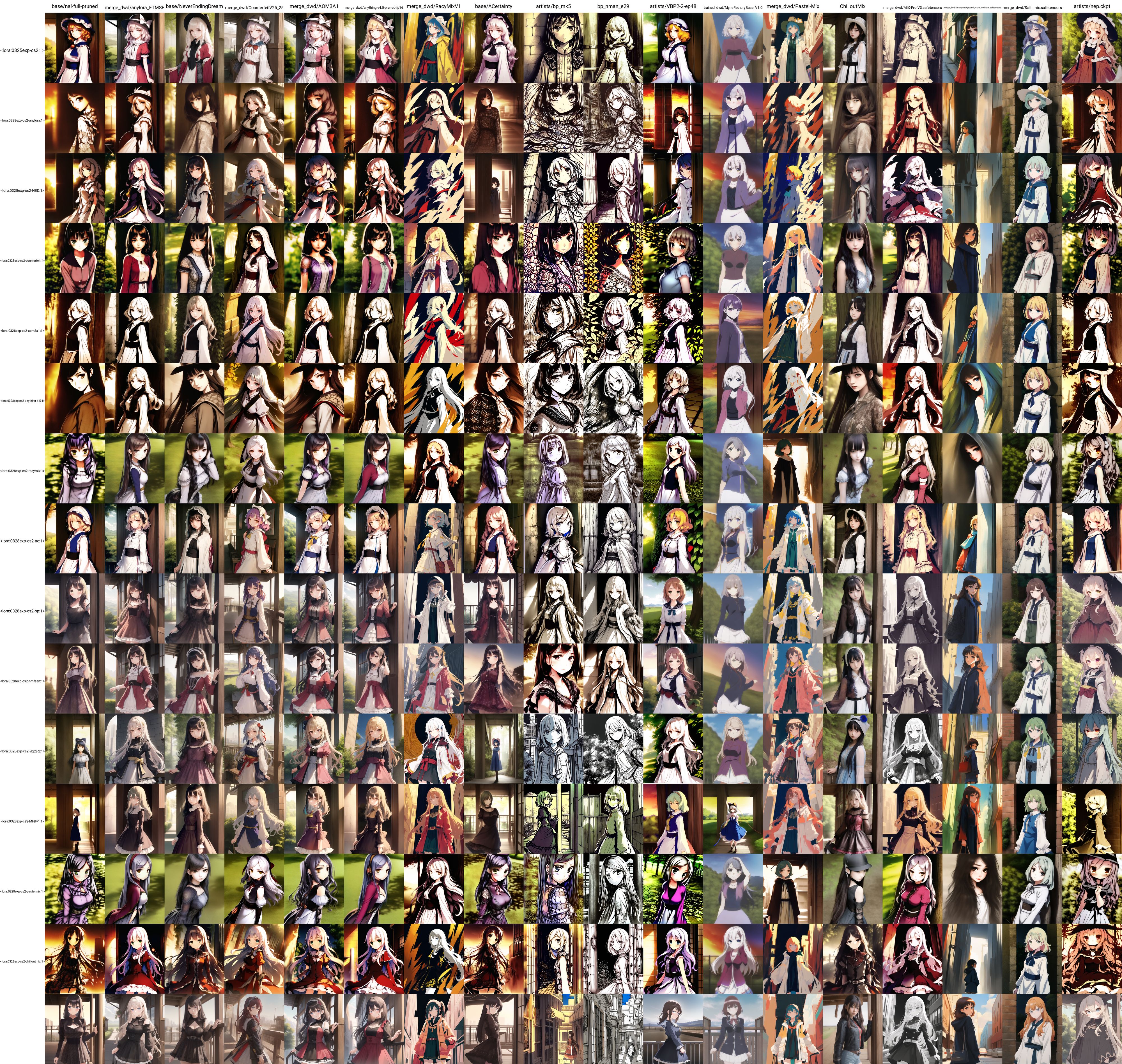

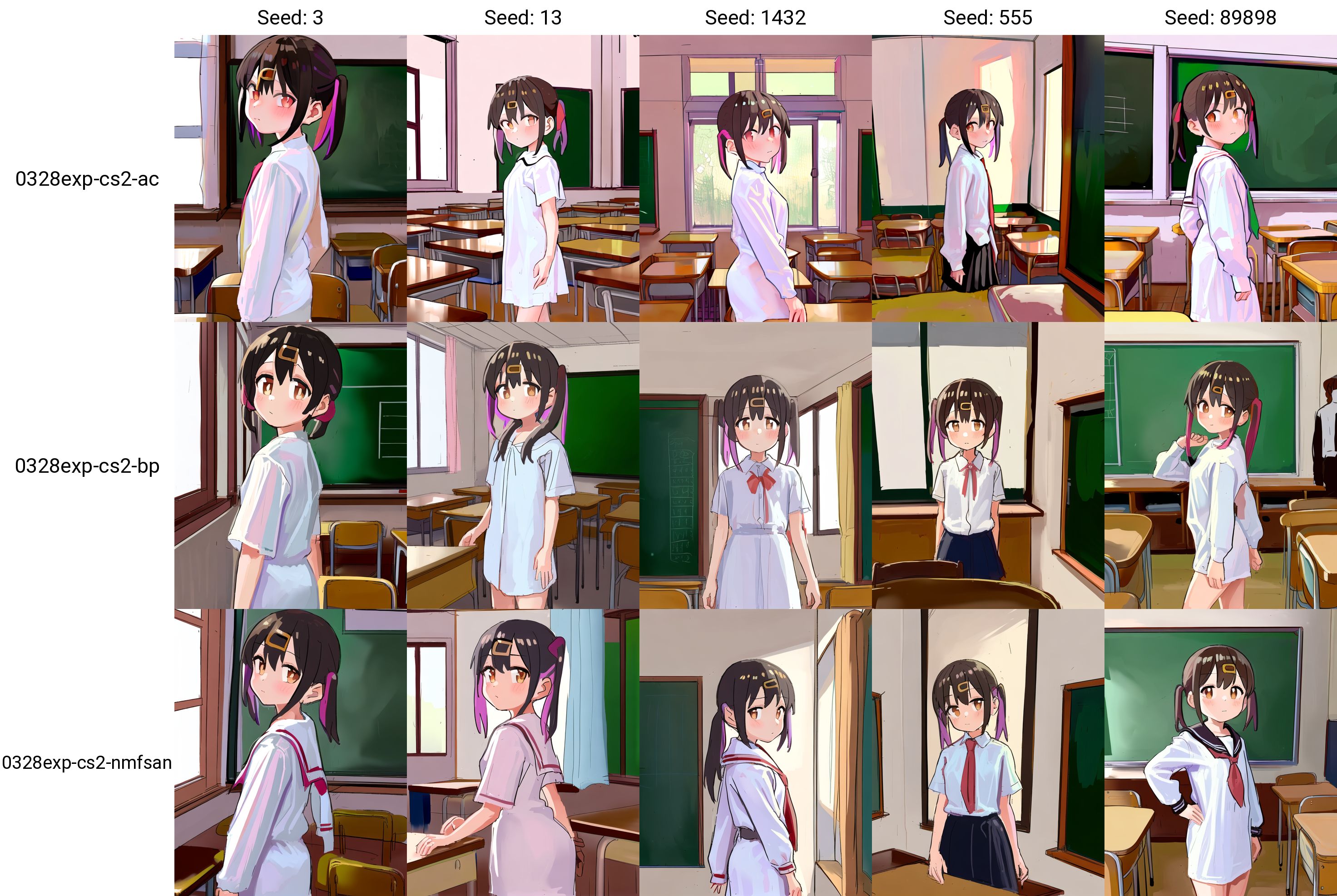

First, I use the 6000-step checkpoints for characters.

I do not see a big difference between models of the same group.

In the Tilty example, training on NAI, BP, and NMFSAN even seems to be faster, but this is only one seed.

Next, I check the 10000-step checkpoints for styles.

Again, there is no big difference here.

Finally, I use the final checkpoint and set the weight to 0.65, as suggested by Lykon.
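
For reference, the weight chosen at generation time scales the adapter's update before it is added to the base weights. Below is a minimal numpy sketch of that for a single linear layer, assuming the LoHa parameterisation used by LyCORIS (the Hadamard product of two low-rank products, scaled by alpha divided by the rank) and assuming the UI multiplies the whole update by the weight. The function names, shapes and random factors are hypothetical stand-ins, not read from an actual checkpoint.

```python
import numpy as np

def loha_delta(w1a, w1b, w2a, w2b, alpha):
    """LoHa update for one layer: (w1a @ w1b) * (w2a @ w2b) * alpha / rank."""
    rank = w1b.shape[0]
    return (w1a @ w1b) * (w2a @ w2b) * (alpha / rank)

def apply_loha(base_weight, w1a, w1b, w2a, w2b, alpha, multiplier=1.0):
    """Merge the update into the base weight, scaled by the user-facing weight."""
    return base_weight + multiplier * loha_delta(w1a, w1b, w2a, w2b, alpha)

# Hypothetical shapes and random factors standing in for a trained LoHa layer.
rng = np.random.default_rng(0)
out_dim, in_dim, rank, alpha = 8, 6, 4, 4.0
W = rng.standard_normal((out_dim, in_dim))
w1a, w2a = rng.standard_normal((2, out_dim, rank))
w1b, w2b = rng.standard_normal((2, rank, in_dim))

full = apply_loha(W, w1a, w1b, w2a, w2b, alpha, multiplier=1.0)
weak = apply_loha(W, w1a, w1b, w2a, w2b, alpha, multiplier=0.65)

# The update is linear in the multiplier: weight 0.65 keeps 65% of the change.
print(np.allclose(weak - W, 0.65 * (full - W)))  # True
```

Because the multiplier only rescales the update, lowering it to 0.65 weakens the learned style and content uniformly rather than selectively.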

The only thing I observed is that VBP2.2 seems to fit the styles tested here a bit faster, but this is probably because it is already trained on them (although with a different triggering mechanism, as VBP2.2 is trained with embeddings).

#### Going further

**What are some good base models for "using" style LoRas?**

In the above experiments, some good candidates that express the trained styles well seem to be:

- VBP2.2

- Mix-Pro-V3

- Counterfeit

**What else?**

Using a different base model can affect things in many other ways, such as the quality or the flexibility of the resulting LoRa. These are, however, very difficult to evaluate. I invite you to try the LoHas I trained here to see how they differ in these aspects.

**A style transfer example**

### Dataset

Here is the composition of the dataset
