Merge branch 'main' of https://huggingface.co/alea31415/LyCORIS-experiments into main

- .gitattributes +1 -0

- README.md +73 -5

- generated_samples_0329/00032-20230330225216.png +3 -0

.gitattributes

CHANGED


@@ -264,3 +264,4 @@ trained_networks/0329_merge_based_models/0328exp-cs2-4m-av.safetensors filter=lf


trained_networks/0329_merge_based_models/0328exp-cs2-7m-ad-step-10000.safetensors filter=lfs diff=lfs merge=lfs -text
merge_models/merge_exp/AleaMix.ckpt filter=lfs diff=lfs merge=lfs -text
merge_models/merge_exp/tmp3.ckpt filter=lfs diff=lfs merge=lfs -text
+generated_samples_0329/00032-20230330225216.png filter=lfs diff=lfs merge=lfs -text

README.md

CHANGED


@@ -13,9 +13,25 @@ aniscreen, fanart

|

|

| 13 |

```

|

| 14 |

|

| 15 |

For `0324_all_aniscreen_tags`, I accidentally tag all the character images with `aniscreen`.

|

| 16 |

-

For

|

| 17 |

|

| 18 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 19 |

### Setting

|

| 20 |

|

| 21 |

Default settings are

|

|

@@ -26,9 +42,9 @@ Default settings are

|

|

| 26 |

- clip skip 1

|

| 27 |

|

| 28 |

Names of the files suggest how the setting is changed with respect to this default setup.

|

| 29 |

-

The configuration json files can

|

| 30 |

-

|

| 31 |

-

|

| 32 |

|

| 33 |

### Some observations

|

| 34 |

|

|

@@ -305,10 +321,62 @@ Lykon did show some successful results by only training with anime images on NED

|

|

| 305 |

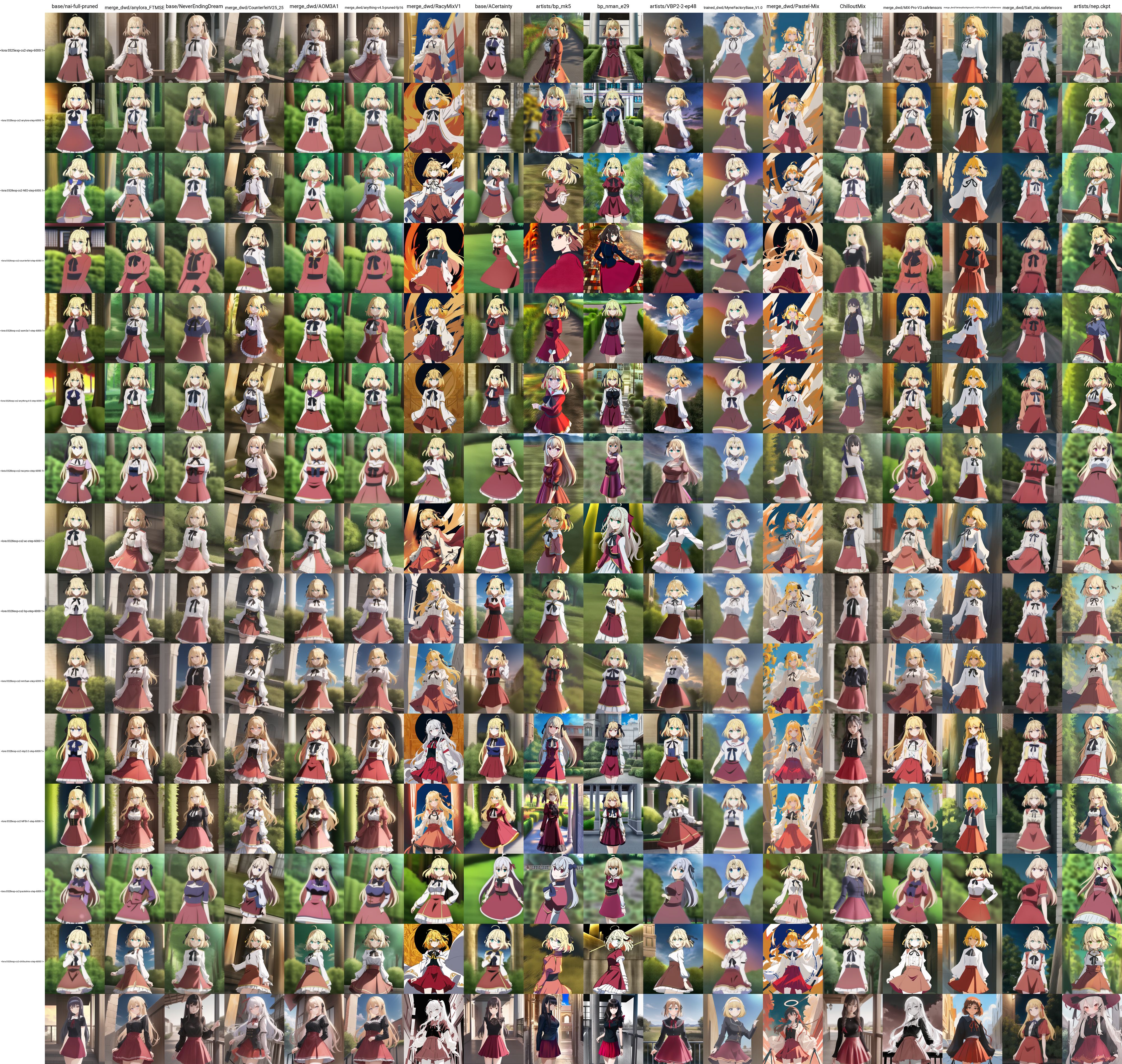

Clearly, the thing that really matters is how the model is made, and not how the model looks like. A model that is versatile in style does not make it a good base model for whatever kind of training. In fact, VBP2-2 has around 300 styles trained in but LoHa trained on top of it does not transfer well to other models.

|

| 306 |

Similarly, two models that produce similar style do not mean they transfer well to each other. Both MFB and Salt-Mix have strong anime screenshot style but a LoHa trained on MFB does not transfer well to Salt-Mix.

|

| 307 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 308 |

|

| 309 |

#### Training Speed

|

| 310 |

|

| 311 |

-

It is also suggested that you train faster on AnyLora. I try to look into this in several ways but I don't see a clear difference.

|

|

|

|

| 312 |

|

| 313 |

First, I use the 6000step checkpoints for characters

|

| 314 |

|

|

|

|

| 13 |

```

For `0324_all_aniscreen_tags`, I accidentally tagged all the character images with `aniscreen`.
For the others, the tagging is correct (anime screenshots are tagged as `aniscreen` and fanart as `fanart`).
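
The slip above is about which tag each caption receives. A minimal sketch of deriving the tag from where an image came from, assuming (hypothetically) comma-separated tag captions stored as `.txt` files next to the images, with screenshots and fanart kept in subfolders named `screenshots` and `fanart`; this layout and the helper names are illustrative, not taken from this repository.

```python
from pathlib import Path

# Hypothetical mapping from source subfolder to the tag that marks it.
SOURCE_TAGS = {"screenshots": "aniscreen", "fanart": "fanart"}


def add_source_tag(caption: str, tag: str) -> str:
    """Return the caption with `tag` as its first tag, without duplicating it."""
    tags = [t.strip() for t in caption.split(",") if t.strip()]
    tags = [t for t in tags if t != tag]
    return ", ".join([tag] + tags)


def tag_dataset(root: Path) -> int:
    """Prefix each caption under root/<source>/ with its source tag.

    Returns the number of caption files that were rewritten.
    """
    updated = 0
    for folder, tag in SOURCE_TAGS.items():
        directory = root / folder
        if not directory.is_dir():
            continue  # skip sources that are absent from this dataset
        for caption_file in sorted(directory.rglob("*.txt")):
            old = caption_file.read_text(encoding="utf-8")
            new = add_source_tag(old, tag)
            if new != old:
                caption_file.write_text(new, encoding="utf-8")
                updated += 1
    return updated
```

With this setup, `tag_dataset(Path("dataset"))` would put `aniscreen` only on captions under `screenshots/`, so fanart never picks up the screenshot tag by accident.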

For reference, this is what each character looks like:

**Anisphia**

**Euphyllia**

**Tilty**

**OyamaMahiro (white hair one) and OyamaMihari (black hair one)**

As for the styles, please check the artists' pixiv pages yourself (note that there are R-18 images).

### Setting


Default settings are

@@ -26,9 +42,9 @@ Default settings are

- clip skip 1

Names of the files indicate how each setting differs from this default setup.
Alternatively, the configuration JSON files can be found in the `configs` subdirectory of each folder.
For example, [this](https://huggingface.co/alea31415/LyCORIS-experiments/blob/main/trained_networks/0325_captioning_clip_skip_resolution/configs/config-1679706673.427538-0325exp-cs1.json) is the default config.
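
Because the file names only summarize how each run departs from the default, comparing a run's JSON config with the default one shows the exact differences. A minimal sketch, assuming the configs are ordinary (possibly nested) JSON objects; the sample keys in the demo are hypothetical and not copied from the actual config files.

```python
import json
from typing import Any

_MISSING = "<missing>"


def flatten(cfg: dict, prefix: str = "") -> dict[str, Any]:
    """Flatten nested dicts into dotted keys, e.g. {"a": {"b": 1}} -> {"a.b": 1}."""
    flat: dict[str, Any] = {}
    for key, value in cfg.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, name + "."))
        else:
            flat[name] = value
    return flat


def diff_configs(default: dict, other: dict) -> dict[str, tuple[Any, Any]]:
    """Map every key whose value differs to (default value, other value)."""
    a, b = flatten(default), flatten(other)
    return {
        key: (a.get(key, _MISSING), b.get(key, _MISSING))
        for key in sorted(a.keys() | b.keys())
        if a.get(key, _MISSING) != b.get(key, _MISSING)
    }


def diff_config_files(default_path: str, other_path: str) -> dict[str, tuple[Any, Any]]:
    """Load two JSON config files and compare them."""
    with open(default_path, encoding="utf-8") as f:
        default = json.load(f)
    with open(other_path, encoding="utf-8") as f:
        other = json.load(f)
    return diff_configs(default, other)


if __name__ == "__main__":
    # Hypothetical keys: a run that only changes clip skip from the default of 1.
    default = {"clip_skip": 1, "network": {"dim": 8}}
    run = {"clip_skip": 2, "network": {"dim": 8}}
    print(diff_configs(default, run))  # {'clip_skip': (1, 2)}
```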


### Some observations

|

| 50 |

|

|

|

|

| 321 |

Clearly, what really matters is how a model is made, not what its outputs look like. A model that is versatile in style is not necessarily a good base model for every kind of training. In fact, VBP2-2 has around 300 styles trained in, yet a LoHa trained on top of it does not transfer well to other models.
Similarly, two models that produce similar styles do not necessarily transfer well to each other. Both MFB and Salt-Mix have a strong anime-screenshot style, but a LoHa trained on MFB does not transfer well to Salt-Mix.
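
Here, "transfer" means that the weight update a LoHa learned against one base model is added to a different base model. A minimal pure-Python sketch of that, assuming the LoHa form used by LyCORIS as I understand it, ΔW = (W1a·W1b) ⊙ (W2a·W2b) · alpha/rank; the function names and the toy matrices are hypothetical.

```python
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def hadamard(a: Matrix, b: Matrix) -> Matrix:
    """Element-wise product of two matrices of the same shape."""
    return [[x * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def loha_delta(w1a: Matrix, w1b: Matrix, w2a: Matrix, w2b: Matrix, alpha: float) -> Matrix:
    """Assumed LoHa update: (w1a @ w1b) * (w2a @ w2b) element-wise, scaled by alpha / rank."""
    rank = len(w1a[0])  # inner dimension of the low-rank factors
    scale = alpha / rank
    prod = hadamard(matmul(w1a, w1b), matmul(w2a, w2b))
    return [[scale * v for v in row] for row in prod]


def apply_delta(base: Matrix, delta: Matrix, multiplier: float = 1.0) -> Matrix:
    """Add the (scaled) update to any base weight of the same shape."""
    return [[w + multiplier * d for w, d in zip(rb, rd)] for rb, rd in zip(base, delta)]


if __name__ == "__main__":
    delta = loha_delta([[1.0], [2.0]], [[1.0, 0.5]], [[1.0], [1.0]], [[2.0, 2.0]], alpha=1.0)
    # The same delta is added to two different (toy) base weights.
    print(apply_delta([[0.0, 0.0], [0.0, 0.0]], delta))
    print(apply_delta([[1.0, -1.0], [0.5, 0.5]], delta))
```

The update is computed once and added unchanged to whichever base it is applied to, so nothing in it adapts to the new base; this is one way to read why looking similar is not enough for transfer, though it is an interpretation rather than something measured here.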


**A Case Study on Customized Merge Model**


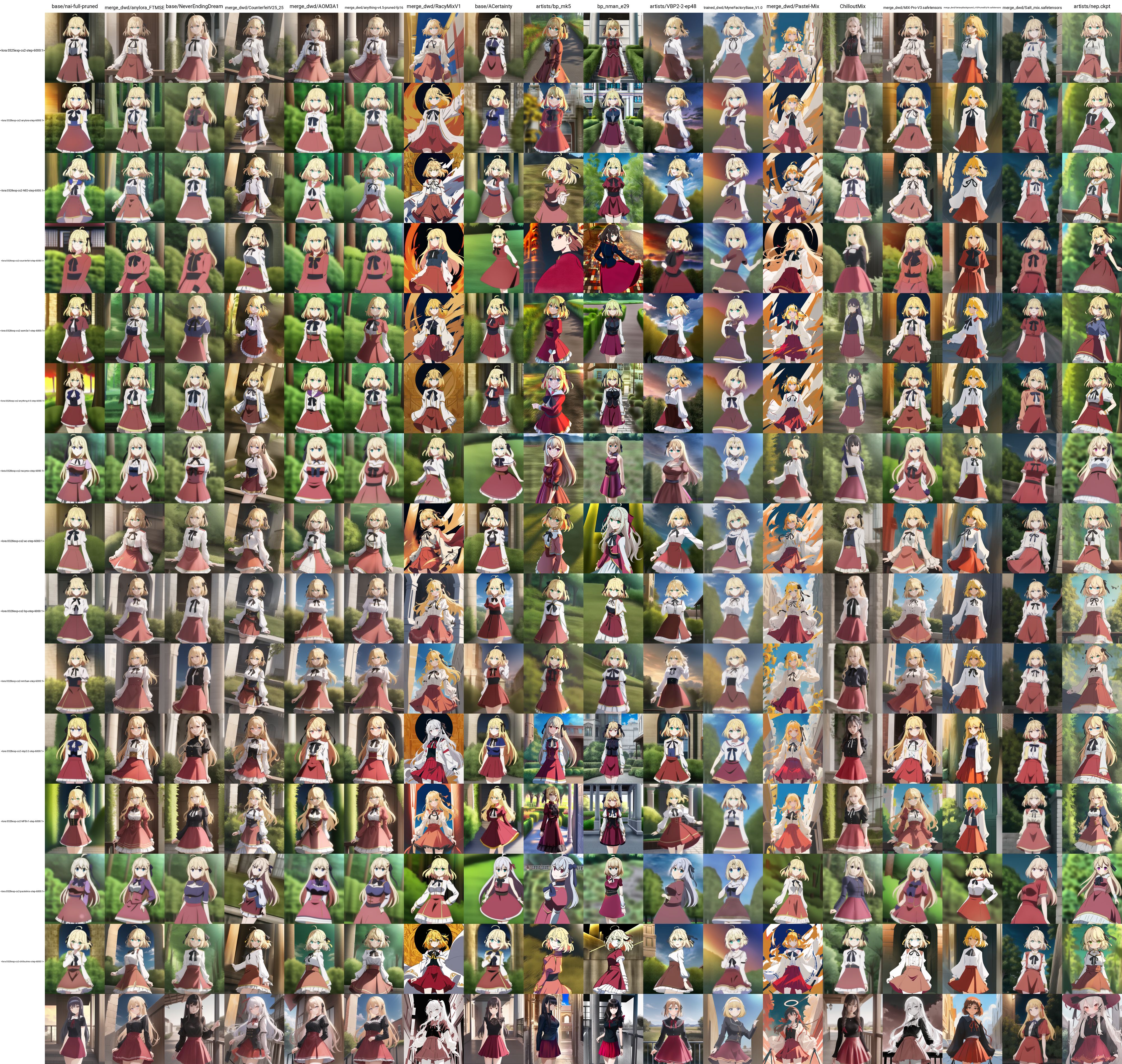

To understand whether you can train a style to be used on a group of models by simply merging these models, I pick a few models and merge them myself to see if this is really effective. I especially choose models that are far from each other, and consider both average and add difference merges. Here are the two recipes that I use.

|

| 327 |

+

|

| 328 |

+

```
# Recipe for average merge
tmp1 = nai-full-pruned + bp_nman_e29, 0.5, fp16, ckpt
tmp2 = __O1__ + nep, 0.333, fp16, ckpt
tmp3 = __O2__ + Pastel-Mix, 0.25, fp16, ckpt
tmp4 = __O3__ + fantasyBackground_v10PrunedFp16, 0.2, fp16, ckpt
tmp5 = __O4__ + MyneFactoryBase_V1.0, 0.166, fp16, ckpt
AleaMix = __O5__ + anylora_FTMSE, 0.142, fp16, ckpt
```

```
# Recipe for add difference merge
tmp1 = nai-full-pruned + bp_nman_e29, 0.5, fp16, ckpt
tmp2-ad = __O1__ + nep + nai-full-pruned, 0.5, fp16, safetensors
tmp3-ad = __O2__ + Pastel-Mix + nai-full-pruned, 0.5, fp16, safetensors
tmp4-ad = __O3__ + fantasyBackground_v10PrunedFp16 + nai-full-pruned, 0.5, fp16, safetensors
tmp5-ad = __O4__ + MyneFactoryBase_V1.0 + nai-full-pruned, 0.5, fp16, safetensors
AleaMix-ad = __O5__ + anylora_FTMSE + nai-full-pruned, 0.5, fp16, safetensors
```
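
The multipliers in the average recipe (0.5, 0.333, 0.25, 0.2, 0.166, 0.142) are roughly 1/2, 1/3, ..., 1/7, which is what makes the chain an approximately equal average of all seven models. A small sketch that tracks each component's effective weight, assuming that `A + B, m` denotes the weighted sum (1 - m)·A + m·B as in the AUTOMATIC1111 checkpoint merger; the helper names are hypothetical.

```python
from collections import defaultdict

Mix = dict[str, float]  # component model name -> effective weight in the merge


def model(name: str) -> Mix:
    return {name: 1.0}


def weighted_sum(a: Mix, b: Mix, m: float) -> Mix:
    """Assumed semantics of `A + B, m`: (1 - m) * A + m * B, tracked per component."""
    out: defaultdict[str, float] = defaultdict(float)
    for name, w in a.items():
        out[name] += (1 - m) * w
    for name, w in b.items():
        out[name] += m * w
    return dict(out)


def average_recipe() -> Mix:
    tmp1 = weighted_sum(model("nai-full-pruned"), model("bp_nman_e29"), 0.5)
    tmp2 = weighted_sum(tmp1, model("nep"), 0.333)
    tmp3 = weighted_sum(tmp2, model("Pastel-Mix"), 0.25)
    tmp4 = weighted_sum(tmp3, model("fantasyBackground_v10PrunedFp16"), 0.2)
    tmp5 = weighted_sum(tmp4, model("MyneFactoryBase_V1.0"), 0.166)
    return weighted_sum(tmp5, model("anylora_FTMSE"), 0.142)


if __name__ == "__main__":
    for name, w in average_recipe().items():
        print(f"{name:35s} {w:.4f}")  # every weight lands close to 1/7
```

Because each step keeps the weights summing to 1, using 1/k at step k spreads the total evenly; the rounded multipliers only shift each weight by a fraction of a percent.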

I then trained on top of tmp3, AleaMix, tmp3-ad, and AleaMix-ad. It turns out that these models are too different from each other for this to work very well, and getting the style to transfer to PastelMix and FantasyBackground is quite difficult. I do, however, observe the following.

- We generally get bad results when applying the LoHas to NAI. This is in line with previous experiments.
- We get better transfer to NMFSAN than with most of the previous LoHas that were not trained on the BP family.
- An add difference merge of too many models (7) with a high weight (0.5) blows the model up: you can still train on it and get reasonable results, but the LoHa does not transfer to the individual components.
- An add difference merge of fewer models (4) can work, and it sometimes seems more effective than a simple average (note how the model trained on tmp3-ad manages to cancel out the style of nep and PastelMix in the examples below).
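
One way to see why the seven-model add difference merge degrades is to track effective weights again. Assuming `A + B + C, m` denotes the add difference A + m·(B - C) as in the AUTOMATIC1111 checkpoint merger, each step adds half of a new model and subtracts half of NAI, so NAI's weight keeps falling while the weights still sum to 1. A minimal sketch with hypothetical helper names:

```python
from collections import defaultdict

Mix = dict[str, float]  # component model name -> effective weight in the merge


def model(name: str) -> Mix:
    return {name: 1.0}


def weighted_sum(a: Mix, b: Mix, m: float) -> Mix:
    """Assumed semantics of `A + B, m`: (1 - m) * A + m * B."""
    out: defaultdict[str, float] = defaultdict(float)
    for name, w in a.items():
        out[name] += (1 - m) * w
    for name, w in b.items():
        out[name] += m * w
    return dict(out)


def add_difference(a: Mix, b: Mix, c: Mix, m: float) -> Mix:
    """Assumed semantics of `A + B + C, m`: A + m * (B - C)."""
    out: defaultdict[str, float] = defaultdict(float)
    for name, w in a.items():
        out[name] += w
    for name, w in b.items():
        out[name] += m * w
    for name, w in c.items():
        out[name] -= m * w
    return dict(out)


NAI = model("nai-full-pruned")


def add_difference_recipe() -> dict[str, Mix]:
    """Return every intermediate of the add difference recipe, keyed by output name."""
    steps = {"tmp1": weighted_sum(NAI, model("bp_nman_e29"), 0.5)}
    previous = steps["tmp1"]
    for out_name, component in [
        ("tmp2-ad", "nep"),
        ("tmp3-ad", "Pastel-Mix"),
        ("tmp4-ad", "fantasyBackground_v10PrunedFp16"),
        ("tmp5-ad", "MyneFactoryBase_V1.0"),
        ("AleaMix-ad", "anylora_FTMSE"),
    ]:
        previous = add_difference(previous, model(component), NAI, 0.5)
        steps[out_name] = previous
    return steps


if __name__ == "__main__":
    for name, mix in add_difference_recipe().items():
        print(name, {k: round(v, 3) for k, v in mix.items()})
```

Under this linear picture, tmp3-ad ends up with NAI at -0.5 and its three other components at 0.5 each, while AleaMix-ad ends up with NAI at -2.0 and six components at 0.5 each. The large negative NAI weight is one plausible reason AleaMix-ad blows up; it is an interpretation, not something these experiments measured.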


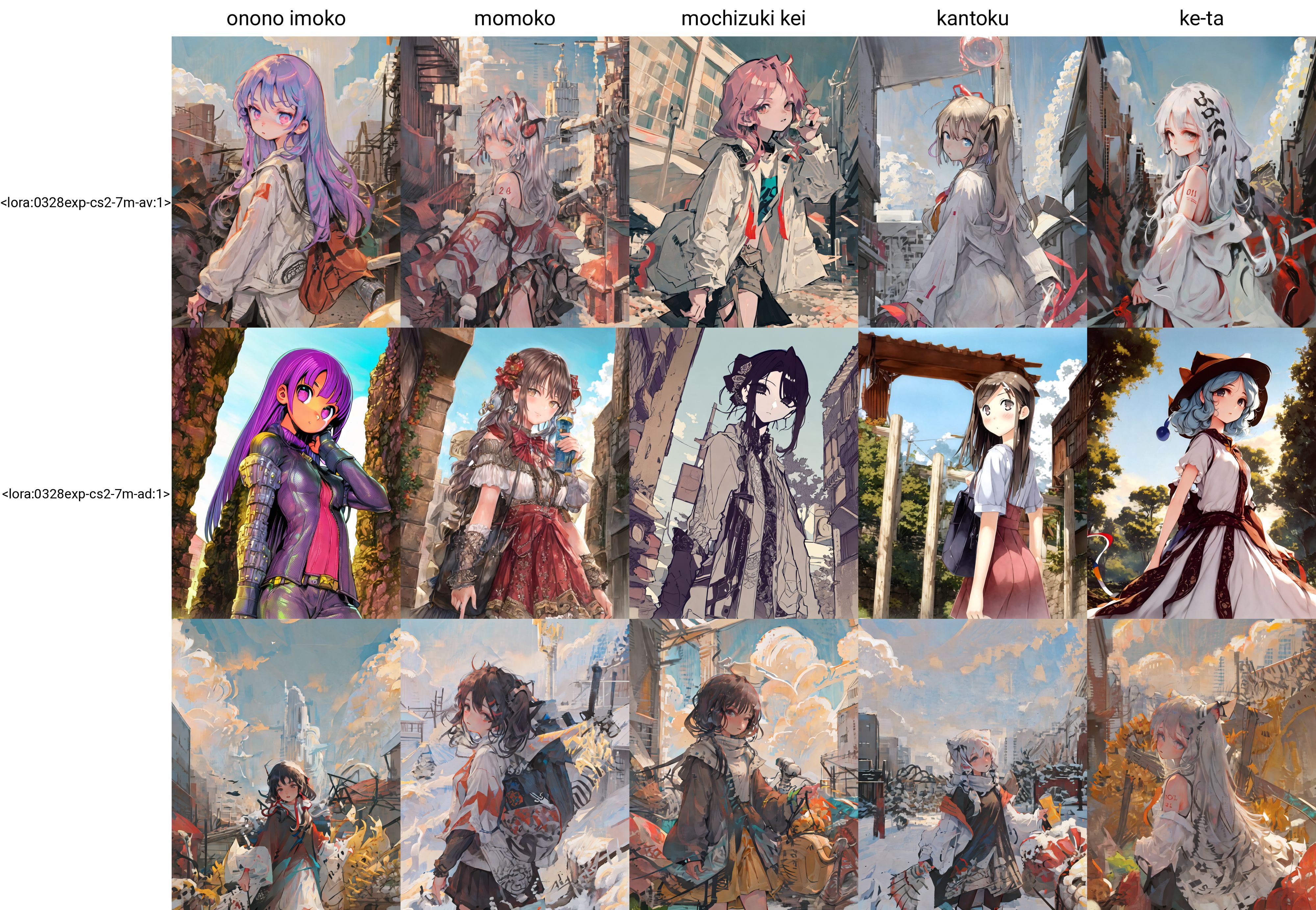

*An interesting observation*

While the model AleaMix-ad is barely usable, the LoHa trained on it produces very strong styles and excellent details.

Results on AleaMix (the weighted sum version)

Results on AleaMix-ad (the add difference version)

However, you may also need to worry about bad hands with such a model.


#### Training Speed

It has also been suggested that training is faster on AnyLora. I looked into this in several ways but did not see a clear difference.
Note that we should mostly focus on the diagonal (each LoHa applied to the model it was trained on).

First, I use the 6000-step checkpoints for characters


generated_samples_0329/00032-20230330225216.png

ADDED
