Augustus Odena

committed on

Commit

•

73445a4

1

Parent(s):

08f1c77

make README nice

Browse files

- README.md +66 -3

- architecture.png +0 -0

README.md

CHANGED

|

@@ -1,5 +1,68 @@

|

|

| 1 |

---

|

| 2 |

license: cc

|

| 3 |

-

|

| 4 |

-

-

|

| 5 |

-

|

| 1 |

---

|

| 2 |

license: cc

|

| 3 |

+

---

|

| 4 |

+

# Fuyu-8B Model Card

|

| 5 |

+

|

| 6 |

+

## Model

|

| 7 |

+

|

| 8 |

+

[Fuyu-8B](https://www.adept.ai/blog/fuyu-8b) is a multi-modal text and image transformer trained by [Adept AI](https://www.adept.ai/).

|

| 9 |

+

|

| 10 |

+

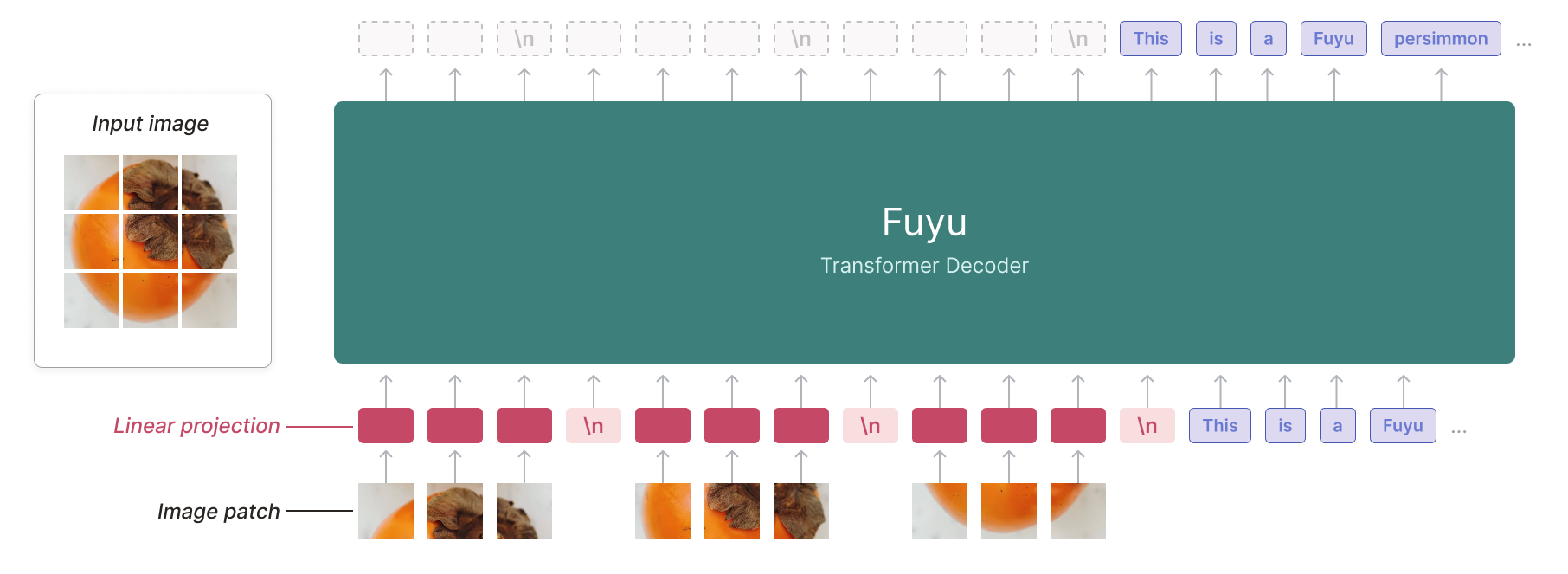

Architecturally, Fuyu is a vanilla decoder-only transformer - there is no image encoder.

|

| 11 |

+

Image patches are instead linearly projected into the first layer of the transformer, bypassing the embedding lookup.

|

| 12 |

+

We simply treat the transformer decoder like an image transformer (albeit with no pooling and causal attention).

|

| 13 |

+

See the diagram below for more details.
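As a rough illustration of the projection step described above, the sketch below flattens one image patch and maps it to the model dimension with a single matrix-vector product, with no embedding lookup involved. The patch size, channel count, model width, and random weights are all hypothetical values chosen for the example; they are not Fuyu-8B's actual configuration, and the helper names are invented here.

```python
import random


def flatten_patch(image, top, left, patch):
    """Flatten a patch x patch block of an H x W x C image (nested lists) into one vector."""
    return [
        channel
        for row in image[top:top + patch]
        for pixel in row[left:left + patch]
        for channel in pixel
    ]


def linear_project(vector, weights, bias):
    """Plain matrix-vector product: one weights row per output dimension."""
    return [sum(w * x for w, x in zip(row, vector)) + b for row, b in zip(weights, bias)]


# Hypothetical sizes, chosen only for illustration.
PATCH, CHANNELS, D_MODEL = 2, 3, 4
rng = random.Random(0)

# A 4 x 4 RGB "image" of random values.
image = [[[rng.random() for _ in range(CHANNELS)] for _ in range(4)] for _ in range(4)]

# Randomly initialised projection; a trained model would learn these weights.
weights = [
    [rng.uniform(-0.1, 0.1) for _ in range(PATCH * PATCH * CHANNELS)]
    for _ in range(D_MODEL)
]
bias = [0.0] * D_MODEL

patch_vector = flatten_patch(image, top=0, left=0, patch=PATCH)
patch_embedding = linear_project(patch_vector, weights, bias)
```

Here `patch_embedding` plays the role that a token embedding plays for text: it is a vector of width `D_MODEL` that could be fed to the first transformer layer.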

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

This simplification allows us to support arbitrary image resolutions.

|

| 18 |

+

To accomplish this, we treat the sequence of image tokens like the sequence of text tokens.

|

| 19 |

+

We remove image-specific position embeddings and feed in as many image tokens as necessary in raster-scan order.

|

| 20 |

+

To tell the model when a line has broken, we simply use a special image-newline character.

|

| 21 |

+

The model can use its existing position embeddings to reason about different image sizes, and we can use images of arbitrary size at training time, removing the need for separate high and low-resolution training stages.
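The raster-scan ordering with a line-break marker can be sketched as follows. This is a minimal illustration, not the released preprocessing code: the marker string `<image-newline>`, the function name, and the requirement that the image divide evenly into patches are all assumptions made for the example.

```python
IMAGE_NEWLINE = "<image-newline>"  # hypothetical marker name


def raster_scan_sequence(height, width, patch):
    """List patch origins in raster-scan order, adding a newline marker after each row of patches."""
    if height % patch or width % patch:
        raise ValueError("this sketch assumes the image divides evenly into patches")
    sequence = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            sequence.append((top, left))
        # Mark the end of this row of patches so the row width is recoverable.
        sequence.append(IMAGE_NEWLINE)
    return sequence


# Two images with the same number of patches but different shapes.
wide = raster_scan_sequence(height=4, width=6, patch=2)
tall = raster_scan_sequence(height=6, width=4, patch=2)
```

Both images contain six patches, but the marker falls after every third patch in `wide` and after every second patch in `tall`, so the shape can be read from the sequence itself without image-specific position embeddings.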

|

| 22 |

+

|

| 23 |

+

### Model Description

|

| 24 |

+

|

| 25 |

+

- **Developed by:** Adept AI

|

| 26 |

+

- **Model type:** Decoder-only multi-modal transformer model

|

| 27 |

+

- **License:** [CC-BY-NC](https://creativecommons.org/licenses/by-nc/4.0/deed.en)

|

| 28 |

+

- **Model Description:** This is a multi-modal model that can consume images and text and produce text.

|

| 29 |

+

- **Resources for more information:** Check out our [blog post](https://www.adept.ai/blog/fuyu-8b).

|

| 30 |

+

|

| 31 |

+

## Evaluation

|

| 32 |

+

The chart above evaluates user preference for SDXL (with and without refinement) over SDXL 0.9 and Stable Diffusion 1.5 and 2.1.

|

| 33 |

+

The SDXL base model performs significantly better than the previous variants, and the model combined with the refinement module achieves the best overall performance.

|

| 34 |

+

Though not the focus of this model, we did evaluate it on standard image understanding benchmarks:

|

| 35 |

+

|

| 36 |

+

| Eval Task | Fuyu-8B | Fuyu-Medium | LLaVA 1.5 (13.5B) | QWEN-VL (10B) | PALI-X (55B) | PALM-e-12B | PALM-e-562B |

|

| 37 |

+

| ------------------- | ------- | ----------------- | ----------------- | ------------- | ------------ | ---------- | ----------- |

|

| 38 |

+

| VQAv2 | 74.2 | 77.4 | 80 | 79.5 | 86.1 | 76.2 | 80.0 |

|

| 39 |

+

| OKVQA | 60.6 | 63.1 | n/a | 58.6 | 66.1 | 55.5 | 66.1 |

|

| 40 |

+

| COCO Captions | 141 | 138 | n/a | n/a | 149 | 135 | 138 |

|

| 41 |

+

| AI2D | 64.5 | 73.7 | n/a | 62.3 | 81.2 | n/a | n/a |

|

| 42 |

+

|

| 43 |

+

## Uses

|

| 44 |

+

|

| 45 |

+

### Direct Use

|

| 46 |

+

|

| 47 |

+

The model is intended for research purposes only.

|

| 48 |

+

**Because this is a raw model release, we have not added further finetuning, postprocessing or sampling strategies to control for undesirable outputs. You should expect to have to fine-tune the model for your use-case.**

|

| 49 |

+

|

| 50 |

+

Possible research areas and tasks include:

|

| 51 |

+

|

| 52 |

+

- Applications in computer control or digital agents.

|

| 53 |

+

- Research on multi-modal models generally.

|

| 54 |

+

|

| 55 |

+

Excluded uses are described below.

|

| 56 |

+

|

| 57 |

+

### Out-of-Scope Use

|

| 58 |

+

|

| 59 |

+

The model was not trained to produce factual or true representations of people or events, so using it to generate such content is out of scope for its abilities.

|

| 60 |

+

|

| 61 |

+

## Limitations and Bias

|

| 62 |

+

|

| 63 |

+

### Limitations

|

| 64 |

+

|

| 65 |

+

- Faces and people in general may not be generated properly.

|

| 66 |

+

|

| 67 |

+

### Bias

|

| 68 |

+

While the capabilities of these models are impressive, they can also reinforce or exacerbate social biases.

|

architecture.png

ADDED

|